Abstract

America’s community colleges play a major role in increasing access to higher education and, as open access institutions, they are key points of entry to postsecondary education for historically underrepresented populations. However, their students often fall short of completing degrees. Policymakers, scholars, and philanthropists are dedicating unprecedented attention and resources to identifying strategies to improve retention, academic performance, and degree completion among community college students. This chapter reviews experimental evidence on their effectiveness, finding that they often meet with limited success because they typically target just one or two aspects of students’ lives, are of short duration, and fail to improve the institutional context. They also rarely address a serious structural constraint: limited resources. We discuss new directions for future interventions, research and evaluation.

1 Introduction

Community colleges play a critical role in higher education. Intended to provide accessible, flexible, and affordable opportunities for postsecondary education and workforce participation, they have contributed to substantial increases in college participation. This is especially true for groups who are historically underrepresented in postsecondary education—including racial and ethnic minority, low-income, part-time, first-generation, and adult students. Today, almost 40% of all undergraduates—more than 6.6 million Americans—attend community colleges (Kena et al. 2015). However, increased college enrollment does not consistently translate into program or degree completion. Completion rates among community college students—as measured by earning a credential or transferring to a four-year institution—are less than 50% after 6 years of enrollment and below 30% for low-income, Black, Latino, and Native American students (Shapiro et al. 2014). Fewer than two in five community college students who enter with the intent to earn some type of a degree do so within six years of initial enrollment (Shapiro et al. 2014) and only three in five enroll in any college one year later (National Student Clearinghouse Research Center 2015).

While even some college education appears to benefit students, degree completion is essential, especially if students must accrue debt along the way in order to cover college prices (Goldrick-Rab 2016). Low completion rates coupled with substantial lag times between enrollment and completion levy real economic and social costs (Goldrick-Rab 2016; Bailey et al. 2004). Since they broaden access, community colleges appear to substantially raise the educational attainment of those otherwise unlikely to attend college at all, while doing very little harm to students who might otherwise attend four-year colleges (Leigh and Gill 2003; Brand et al. 2012). Scholars, policymakers, and philanthropic foundations are devoting unprecedented attention and resources to identifying strategies to boost retention and degree completion among community college students (Bailey et al. 2015; Grossman et al. 2015; Sturgis 2014). These interventions address a wide variety of conditions and contexts—at the individual, school, and system levels—believed to pose barriers to student success. Many efforts have been evaluated to assess their effectiveness. In doing so, researchers have increasingly relied upon randomized control trials (RCTs) to generate rigorous estimates of causal effects, providing insights into “what works” to boost attainment among community college students.

This chapter reviews evidence from experimental evaluations of a range of interventions—from financial aid to student advisement to developmental education—with two main goals. First, we examine what the evidence from experimental studies reveals about the most promising interventions. It is evident that while sustained and multi-pronged strategies appear most effective at boosting completion, they are also uncommon. Second, we illustrate the role research and evaluations incorporating experimental design should play in future sociological research, especially when assessing the impact of education and social program interventions targeted at disadvantaged youth, adults, and families. Effectively replicating or scaling programs requires that future studies more carefully document the context in which an intervention succeeded or failed, and the resources and costs involved.

2 The Contexts of Community College Education

Unlike other higher education institutions, community colleges were explicitly designed as open entry-points into higher education, emphasizing expanded opportunities for all rather than maximizing outcomes for a few. In the aftermath of World War II, the Truman Commission (1947) called for action to democratize higher education, postsecondary enrollments surged, and higher education leaders sought a means of satisfying popular pressure for access while protecting curricular rigor at existing institutions (Brint and Karabel 1989; Trow 2007). In response, the nation’s existing “junior colleges” were rechristened as “community colleges” and their ranks dramatically expanded. Community colleges would serve their purpose as “agents of democracy” by being both accessible and comprehensive, within the bounds of their resource constraints.

Community colleges aim to minimize three barriers to college entry: price, academic requirements, and distance. They are intended to be cheaper than four-year public colleges; open-enrollment, requiring only that prospective students complete high school to gain admission; and geographically dispersed so they are within reasonable commuting distance for all Americans. As public higher education institutions, community colleges are primarily funded by state and local revenues. Historically, public funding has covered most costs, keeping tuition non-existent or very low for students. Low-cost educational opportunities reinforced community colleges’ missions as open access portals for a broad range of students, including those who could not afford higher education at other public or private institutions. Community colleges are comprehensive in their offerings, reflecting the range of needs and interests of the communities they serve. They have academic courses for students intending to transfer to four-year colleges, vocational training programs for students looking to upgrade skills or change jobs, and general education courses for community members interested in lifelong learning.

Aspects of the community college context—those related to accessibility and comprehensiveness in particular—may work at cross-purposes with the goal of maximizing completion rates. For example, open enrollment and a relatively low cost of attendance help attract a more heterogeneous mix of students, compared to those who attend four-year colleges and universities. Community college students are disproportionately Black and Latino and are far more likely to be first-generation college-goers or from lower-income households. Given open admissions, community college students have on average lower levels of academic preparation and fewer resources than students attending four-year public and non-profit colleges and universities (Table 24.1). Indeed, despite the constant characterization that they are “diverse” spaces, in fact community colleges are highly segregated (Goldrick-Rab and Kinsley 2013).

Stratification by student composition, and by extension aspirations and outcomes, translates into vastly different educational experiences and, by extension, differences in opportunities and outcomes. Moreover, the effects of segregating students across institutional types may be exacerbated by peer-effects. If having more uniformly poorer, less-prepared peers who are more likely to drop out of college impacts the social and intellectual atmosphere and normalizes non-completion, then community college students may be at a particular disadvantage (Century Foundation 2013).

Because community colleges enroll many students without the skills needed to assimilate college-level material, remedial education has been central to them since their inception (Cohen et al. 2014). Remedial policies effectively bar low-performing students from most classes bestowing credit (Hughes and Scott-Clayton 2011; Perin 2006), and the majority of students never complete the sequences of remedial courses to which they are assigned (Bailey et al. 2010). Assignment to remediation substantially increases the cost of a degree in terms of time and money (Melguizo et al. 2008). Critics allege that remedial regimes permit the colleges to maintain the appearance of access, while effectively serving as a holding area for students from the “educational underclass” (Deil-Amen and DeLuca 2010).

Part of accessibility is geographic dispersion. Community colleges are, with few exceptions, commuter campuses. Sociological and education research suggests that students who reside on campus are more likely to remain enrolled and to eventually graduate, as such students spend more time on campus and are far more likely to become socially and academically integrated into the life of the institution (Astin 1984; Pascarella and Terenzini 2005; Schudde 2011; Tinto 1987). In addition, part-time and part-year community college enrollment is the norm: fewer than half of community college students enroll full-time in both fall and spring semesters (Table 24.1). Indeed, many community college students are on campus only a few hours per week, giving the colleges few opportunities to directly engage them and build institutional loyalty or involvement. The low-intensity student enrollment patterns reflect community college students’ “non-traditional” status. Most are older than 23 and, for many, college is negotiated along with full-time work and childcare responsibilities (Table 24.1; Stuart et al. 2014). Students are frequently not exclusively or even primarily oriented towards college-going, and practically speaking completing a degree is often not their top priority. As a result, it is common for community college students to “stop out” for a semester or two to attend to other responsibilities or to transfer when another college is more convenient (Bahr 2009; Crosta 2014).

The effects of more limited opportunities for interaction with faculty are compounded by increasing student–staff ratios. Typically, the ratio of students to student support and other college staff is double that found at four-year institutions (Baum and Kurose 2013). Staffing shortages are particularly dire for student advisement and counseling; at community colleges, student-to-counselor ratios are frequently higher than 800:1 or 1000:1 (Park et al. 2013), resulting in inadequate, inconsistent, and often counterproductive academic and career counseling (Deil-Amen and Rosenbaum 2002, 2003; Grubb 2001, 2006; Rosenbaum et al. 2006). Personalized counseling and advisement are crucial to community college student success, yet counseling offices instead triage services according to student needs and devote a limited amount of time to each student during heavy-use periods such as registration.

These challenges are complicated by the role that community colleges play as comprehensive institutions that try to expand college enrollment through a wide range of courses, degree, and certificate programs. However, over time, at many community colleges, “comprehensiveness” has translated into an array of often disconnected courses, programs, and support services that students must navigate with relatively little guidance (Bailey et al. 2015). The immense array of choices can overwhelm students, especially first-generation college students and others with limited experience with postsecondary education (Rosenbaum et al. 2006; Scott-Clayton 2015). Since each program has its own set of required courses, the diversity of programs also causes problems for coordinating and scheduling courses. This poses challenges for part-time and working students to arrange classes in ways that fit their schedule. For students attending community college as an entry point to a bachelor’s degree, the “cafeteria self-service” course-taking model found at many community colleges also can pose challenges for identifying a clear and efficient pathway to a four-year degree. Oftentimes, incoming students do not know to which four-year institutions they will apply, or even the general requirements for transfer to a bachelor’s degree-granting institution. The absence of strong articulation policies that link community colleges with four-year institutions—even public ones—means that the four-year colleges often differ in the courses required for transfer and in the courses they will recognize by transferring credit. For community college students who do manage to transfer, substantial loss of credits is a common occurrence (Monaghan and Attewell 2015; Simone 2014).

Recently, researchers and outside experts have suggested that community colleges narrow their program structures, with faculty clearly mapping out academic programs to create coherent pathways that are aligned with requirements for further education and career advancement (Bailey et al. 2015). This involves presenting students with a small number of program options, developing clear course sequences leading to degree completion, arranging the courses so that they are convenient (e.g., scheduled back-to-back), and providing personalized, mandatory counseling services. They contend that community colleges treat their clientele as if they were “traditional college students,” equipped with the motivation, knowledge, and skills necessary to negotiate college. Change the community colleges’ programs, practices and resources, they argue, and one can improve student outcomes (Bailey et al. 2015; Rosenbaum et al. 2006; Scott-Clayton 2015).

But it is increasingly difficult to maintain both accessibility and comprehensiveness while also increasing completion rates as state governments have reduced support on a per-student basis (Goldrick-Rab 2016). While, on a per-student basis, community colleges receive about as much money from states as do public comprehensives (Baum and Kurose 2013; College Board 2015), they are far more dependent on state funding as a primary source of revenue. On average, community colleges receive about 71% of their revenue from state appropriations, compared to public four-year colleges’ 38% (Kena et al. 2015). This makes community colleges particularly vulnerable to state cuts—particularly during recessionary periods, which tend to couple funding reductions with enrollment surges (Betts and McFarland 1995). Community colleges pass some costs on to students. Between 2000 and 2010 the percentage of revenue covered by state appropriations fell from 57% to 47%. At the same time, that met through tuition and fees rose from 19% to 27% (Kirshstein and Hubert 2012). As community colleges’ capacity to increase tuition is constrained by their mandate to remain affordable, their principal response to dwindling resources is to spend less per student. Per-student instructional spending at community colleges fell by 12% between 2001 and 2011; on average, community colleges now spend 78% as much per student on instruction as public bachelor’s colleges and 56% as much as public research universities, despite enrolling students with arguably greater academic challenges (Desrochers and Kirshstein 2012).
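The revenue shares cited above can be read either as percentage-point changes or as relative changes, and the two tell somewhat different stories. The short Python sketch below works through that arithmetic using only the figures reported from Kirshstein and Hubert (2012); the function names are illustrative and not taken from any source.

```python
def point_change(start_pct, end_pct):
    """Change in percentage points between two shares."""
    return end_pct - start_pct


def relative_change(start_pct, end_pct):
    """Change relative to the starting share, as a fraction."""
    return (end_pct - start_pct) / start_pct


# Shares of community college revenue, 2000 versus 2010, as cited in the text.
state_start, state_end = 57, 47      # state appropriations
tuition_start, tuition_end = 19, 27  # tuition and fees

if __name__ == "__main__":
    print(point_change(state_start, state_end))          # percentage points
    print(f"{relative_change(state_start, state_end):.1%}")
    print(point_change(tuition_start, tuition_end))      # percentage points
    print(f"{relative_change(tuition_start, tuition_end):.1%}")
```

Read this way, the 10-point drop in the state share is a relative decline of roughly 18%, while the 8-point rise in the tuition share is a relative increase of roughly 42%.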

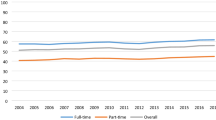

Lower spending may impact educational quality and output (Jenkins and Belfield 2014). The student–faculty ratio at community colleges was 22:1 in 2009, while at public four-year colleges this ratio was 15:1 (Baum and Kurose 2013). The higher ratio constrains the amount of time faculty can devote to individual students and may affect instructional quality. This is particularly problematic in a commuter setting, where classroom time and faculty are the primary opportunity for “socio-academic integrative moments” (Deil-Amen 2011). The impact of higher student–faculty ratios is further exacerbated by community colleges’ other cost-saving strategy: heavy reliance on part-time and contingent faculty. At community colleges, two-thirds of faculty work part time, and only 18% are tenured or tenure-track (Kezar and Maxey 2013). Exposure to part-time and adjunct faculty is negatively associated with degree completion (Eagan and Jaeger 2009). Contingent faculty may not have the institutional knowledge and skills to help students negotiate the institution, and their short appointments contribute to faculty–student relationships that do not last beyond a semester.

Research diligently minimizing selection bias has consistently found negative impacts of initial community college enrollment, relative to four-year college enrollment, on bachelor’s degree attainment (Brand et al. 2012; Reynolds 2012; but see Rouse 1995). But the 60% one-year retention rate at community colleges is not appreciably different from that at non-selective public or non-profit four-year colleges (62% and 61% respectively) (Kena et al. 2015). Monaghan and Attewell (2015), comparing community college students with those at non-selective four-year colleges, find that retention differences do not appear until the fifth semester, after adjusting for student characteristics.

In summary, the community college sector arose to accommodate demands to democratize access to higher education and offer a comprehensive battery of general education, vocational, and academic options. The “imperious immediacy of interest” (Merton 1936) in achieving these goals obscured the consideration of whether their resulting organizational features might stymie degree completion. Early critics alleged that community colleges “cooled out” the aspirations of academically disinclined and/or lower-SES youth by tracking them into vocational programs or permitting them to drop out altogether (Brint and Karabel 1989; Clark 1960). But it was not until the late 1990s that their low completion rates came to be collectively defined, in Blumer’s (1971) sense, as a social problem in need of a solution. In response, policymakers, educational leaders and philanthropists have targeted their efforts at new opportunities to restructure how community colleges deliver education and support services, with an eye towards identifying reforms that improve both the effectiveness and efficiency with which they support not only access to higher education but also completion, for all students.

3 Points of Intervention

Efforts to improve outcomes in community colleges are focused on either the student, the institution, or the system (Goldrick-Rab 2010). Student-focused interventions reduce financial barriers, provide student support, or improve students’ academic skills (e.g., dual enrollment programs, financial aid, advising, or coaching). Financial aid is by far the most popular strategy. Community college tuition is relatively low; yet, many students still struggle with paying for college as well as other living costs incurred while in school. It is hoped that by alleviating material shortages and reducing stress, financial aid may enable students to focus on academic work and to avoid potentially injurious alternative strategies such as taking out loans, working long hours, or enrolling part-time. Other interventions seek to improve students’ “informational capital”—the knowledge required to select a major, choose the correct classes that will enable them to complete the major, or apply for financial aid (Rosenbaum et al. 2006). Such efforts include informational seminars, orientation courses, and counseling services. Similarly, institutions also intervene to address student academic shortfalls through mandatory remedial courses and through voluntarily-accessed tutoring and writing centers. Finally, given that many community college students enroll part-time, interventions have been developed that encourage full-time attendance or summer course-taking.

School-focused interventions attempt to change how community colleges serve students (e.g., guided pathways, course redesign, or structuring of support services). One frequent leverage point has been the college counseling center: assigning each student to a counselor, making appointments mandatory, lowering student–counselor ratios, and having counselors specialize by degree program. Other interventions include forging social connections among students through linked courses or “learning communities,” and building students’ connections to the institution by providing in-class tutors or mentors. Skills assessment and remedial coursework have also been areas for reform. Many community college students enter who are not “college ready,” so remediation is widespread; it is estimated that between 60% and 70% take at least one remedial course at some point (Crisp and Delgado 2014; Radford and Horn 2012).

System-level interventions alter community colleges’ incentive structures, the financial structures that govern them, or the landscape in which they operate. Statewide policies that provide free or reduced tuition, such as “promise programs” (e.g., Tennessee and Oregon) reorient the nature of community colleges in the higher education system hierarchy (Miller-Adams 2015). Performance-based funding has been used by states to encourage community colleges to orient programs and resources toward specified goals and metrics, oftentimes closely aligned with student outcomes (Dougherty et al. 2016; Hillman et al. 2014). State articulation policies hold the promise of smoothing transfer from community colleges into four-year institutions.

4 Evaluating Community College Reforms

Until very recently, social scientists sought to understand the community college through naturalistic observation rather than measuring intervention impacts. However, such approaches are limited in their capacity to provide rigorous estimates of causal effects (Morgan and Winship 2014). When participation in an intervention is voluntary, those who choose to participate tend to differ in measurable and unmeasurable ways from non-participants. As a result, it is difficult to disentangle the intervention’s independent impacts from selection bias introduced through these baseline differences. Random assignment to treatment ensures that differences between treated and untreated individuals arise only from chance and are unlikely to be considerable given large enough samples (Rubin 1974). For this reason, randomized experiments permit unbiased, internally valid, and truly causal estimates of treatment effects.
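The contrast between voluntary participation and random assignment can be illustrated with a small simulation. The Python sketch below is not drawn from any study reviewed here: the unobserved `motivation` trait, the retention model, and every parameter value are hypothetical assumptions, chosen only to show how self-selection can inflate a naive treated-versus-untreated comparison while random assignment recovers the assumed effect.

```python
import random


def simulate(n=50000, true_effect=0.05, seed=0):
    """Return (naive_estimate, randomized_estimate) of a retention effect.

    Everything here is hypothetical: 'motivation' is an unobserved trait
    that raises both voluntary take-up and baseline retention.
    """
    rng = random.Random(seed)
    motivation = [rng.random() for _ in range(n)]  # uniform on [0, 1)

    def retained(m, treated):
        # Assumed retention probability: baseline + motivation + treatment.
        p = 0.30 + 0.40 * m + (true_effect if treated else 0.0)
        return rng.random() < p

    def difference_in_means(assignment):
        treated = [retained(m, True)
                   for m, t in zip(motivation, assignment) if t]
        control = [retained(m, False)
                   for m, t in zip(motivation, assignment) if not t]
        return sum(treated) / len(treated) - sum(control) / len(control)

    # Voluntary participation: more motivated students opt in more often.
    voluntary = [rng.random() < m for m in motivation]
    # Random assignment: a coin flip unrelated to motivation.
    randomized = [rng.random() < 0.5 for _ in motivation]

    return difference_in_means(voluntary), difference_in_means(randomized)


if __name__ == "__main__":
    naive, rct = simulate()
    print(f"naive (self-selected) estimate: {naive:.3f}")
    print(f"randomized estimate:            {rct:.3f}")
```

Under these assumptions the self-selected comparison is expected to land near 0.18, far above the assumed effect of 0.05, because participants are more motivated on average; the randomized comparison should fall close to 0.05, differing only by sampling noise.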

But as with all methods, randomized control trials have limitations. Some questions cannot be answered by experimental evaluations, for reasons of feasibility and ethics (Heckman 2005; Lareau 2008). Additionally, unforeseen issues in program implementation and participant behavior after randomization can have substantial impacts on treatment effects (Lareau 2008; Heckman and Smith 1995). Finally, experiments tell us little about why causes produce their effects, though additional non-causal evidence on mechanisms can be gathered using mixed-methods approaches (Grissmer et al. 2009; Harris and Goldrick-Rab 2012). They also tell us little about how the context in which an intervention occurred may have impacted its outcomes.

Despite limitations, over the past 15 years, experimental evaluations have increasingly been used to understand the impacts of community college reforms. To a great extent, their application has been a response to the attention paid to causal research by the U.S. Department of Education’s Institute of Education Sciences and the corresponding shift in federal research dollars. At the same time, new institution-level data on student outcomes became readily available, drawing public attention to the considerable gaps in college completion rates between community college students and their peers at other higher education institutions (Bailey et al. 2015). As a result, the “College Completion Agenda” began to coalesce in the early years of the new decade, and philanthropic foundations added millions in private money to the public money already earmarked for experimental evaluation research.

In this chapter, we catalogue randomized control trials in community college settings. Eligible studies were identified by (1) searching Google Scholar, the Web of Science, EconLit, Social Sciences Full Text, Education Full Text, and the American Economic Association’s RCT Registry with combinations of keywords (experiment, randomized control trial, community college, and two-year college); (2) scouring websites of evaluation organizations such as MDRC and Mathematica; (3) searching programs of research conferences such as SREE, APPAM, and AEA; and (4) making inquiries among scholars knowledgeable in the field. Studies that met the following criteria were included:

- Subjects were assigned to intervention or control condition using random assignment;
- Subjects were entering or presently enrolled at community colleges, either exclusively or as a major sub-population; and
- Interventions were aimed at improving academic outcomes such as retention, credit accumulation, academic performance, and degree completion.

Given these criteria, we excluded observational studies, including those employing rigorous quasi-experimental designs, except to provide context for experimental interventions. Interventions where subjects could not be randomized, such as those altering institutional or policy frameworks, were excluded. Also excluded were interventions intending to impact whether or where individuals choose to enroll in college. Given the fiscal constraints under which community colleges operate, knowing the cost of an intervention is crucial for evaluating its realistic potential to be adopted at scale (Belfield et al. 2014; Belfield and Jenkins 2014; Schneider and McDonald 2007a, b). Therefore, wherever possible, a discussion of costs is included alongside the assessment of impacts. However, to a large extent this information is notably missing from extant research (Belfield 2015).

In total, we identified 30 studies of community college interventions that met the selection criteria. In addition, we included seven in-progress studies to give a sense of the future of this research. Next, the studies are discussed according to their level of intervention.

5 Student-Level Interventions

Student-level interventions work principally to augment the resources or change the behavior of individual community college students, while leaving the prevailing institutional environment unchanged. As such, they seek to improve the capacity of individuals to navigate an environment which is taken as given. Individual-level interventions are often, but need not necessarily be, premised on an assumption that individual deficits are at the root of outcomes deemed unacceptable. We identified 14 such interventions.

5.1 Financial Aid

The primary policy effort to raise community college completion rates is financial aid, and nationwide governments spend about $57 billion on grant aid and another $96 billion on loans (College Board 2015). But establishing the causal impact of financial aid on college persistence and completion is not straightforward. Since the same trait—financial need—which renders a student eligible for financial aid also tends to disrupt college progress, naïve estimations of aid effects tend to be biased. Most research leverages “natural experiments” such as aid cutoffs, program terminations, and tuition reductions in order to identify causal effects (Alon 2011; Bettinger 2004, 2015; Castleman and Long 2013; Denning 2014; Dynarski 2003; Kane 2003; Singell 2004; Van der Klaauw 2002). Such studies have tended to find that an increase in aid of $1000 increases persistence by 2–4 percentage points, and degree completion by between 1.5 and 5 percentage points (for reviews see Bettinger (2012), Deming and Dynarski (2010), Dynarski and Scott-Clayton (2013), and Goldrick-Rab et al. (2009)).

To date there have been seven randomized experiments examining financial aid in community college contexts. Two are evaluations of privately funded, need-based scholarships affecting both four-year and two-year students: Angrist and associates’ (2015) evaluation of the Buffett Scholarship, and Goldrick-Rab and associates’ investigation of the Wisconsin Scholars Grant (WSG) (N = 2641 and N = 12,722 respectively). Both scholarships targeted low- to moderate-income students, had high school GPA eligibility requirements, and were restricted to residents of a particular state (Nebraska and Wisconsin, respectively) who attended in-state public colleges. The Buffett Scholarship is designed to fully cover tuition and fees; two-year recipients were awarded as much as $5300 per year for up to 5 years. In contrast, the WSG is designed to reduce rather than eliminate tuition expenses; the yearly award was $1800 for two-year students, for up to 5 years. Another crucial difference is the timing of scholarship. The Buffett Scholarship is awarded prior to enrollment, and thus can impact individuals’ choice of college, whereas the WSG is awarded towards the end of the recipient’s first semester.

Both studies found measurable positive impacts for the full population of recipients, but null or negative results for initial two-year enrollers. Anderson and Goldrick-Rab (2016) estimate that the WSG increased one-year retention by 3.7 percentage points at University of Wisconsin branch campuses and decreased it by 1.5 percentage points at Wisconsin Technical Colleges, but neither result was statistically significant and there were no impacts on other indicators of academic progress. The authors point out that the WSG covered just 28% of the students’ unmet financial need at two-year colleges, while it covered 39% at the four-year colleges and universities (and had sizable impacts on degree completion—see Goldrick-Rab et al. 2016). However, offering two-year students the grant did decrease their work hours, and particularly the odds of working the third shift (Broton et al. 2016). Angrist et al. estimate a statistically non-significant 1.9 percentage point lower one-year retention rate for scholarship recipients who initially enrolled at community colleges. Importantly, Buffett Scholarship recipients were 7 percentage points less likely to attend community colleges in the first place than control students, suggesting that the additional aid increased four-year attendance among those who would otherwise have opted for a community college to save money (Footnote 1).

In 2004–2005, as part of its larger “Opening Doors” demonstration (Footnote 2), MDRC evaluated a “performance-based scholarship” (PBS; Footnote 3) for low-income, mostly female parents at two community colleges in the New Orleans area (N = 1019). The scholarship provided $1000 per semester for up to two semesters, awarded incrementally: $250 upon enrollment (at least 6 credits), $250 at midterm contingent on remaining enrolled at least half-time and earning a “C” average, and the rest at the end of the semester contingent on GPA. At the end of the program year, treated students had earned 2.4 more credits, and were 12 percentage points more likely to be retained into their second year. One year later, the credit advantage had grown to 3.5 credits (Footnote 4) (Barrow et al. 2014; Barrow and Rouse 2013; Brock and Richburg-Hayes 2006; Richburg-Hayes et al. 2009). But Hurricane Katrina brought an end to the experiment. While the program’s evaluation points toward potentially promising effects, it also suggested that the intervention’s costs extended beyond the financial outlay for student scholarships. Program implementation required additional time on the part of counselors who monitored students’ enrollment and grades and were available to offer advice and referrals to additional services. The program also required additional personnel time to administer the aid program. That said, the evaluation falls short of identifying the extent of additional time spent by counselors and administrators, and did not estimate the costs associated with implementing the reform.
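The incremental award schedule of the Louisiana scholarship can be sketched as a small function. This is an illustrative reading of the description above rather than MDRC's actual award rules: it assumes that "half-time" means at least 6 credits, that a "C" average corresponds to a 2.0 GPA, that the end-of-semester GPA threshold (`final_gpa_min`) is also 2.0, and that the midterm and final payments are evaluated independently of each other. None of these details is confirmed by the text, and the function and parameter names are hypothetical.

```python
HALF_TIME_CREDITS = 6   # assumption: half-time enrollment threshold
C_AVERAGE = 2.0         # assumption: "C" average on a 4.0 scale


def semester_payout(enrolled_credits, midterm_credits, midterm_gpa,
                    final_gpa, final_gpa_min=C_AVERAGE):
    """Total scholarship dollars paid in one semester (maximum $1000).

    final_gpa_min is a hypothetical threshold; the source says only that
    the final payment was 'contingent on GPA'.
    """
    if enrolled_credits < HALF_TIME_CREDITS:
        return 0  # never met the enrollment condition, so no payments

    total = 250  # first installment, paid upon enrollment

    # Second installment: still enrolled at least half-time with a "C" average.
    if midterm_credits >= HALF_TIME_CREDITS and midterm_gpa >= C_AVERAGE:
        total += 250

    # Remaining $500 at the end of the semester, contingent on GPA.
    if final_gpa >= final_gpa_min:
        total += 500

    return total


if __name__ == "__main__":
    print(semester_payout(6, 6, 2.5, 2.5))   # meets every benchmark
    print(semester_payout(6, 3, 2.5, 1.0))   # drops below half-time, low GPA
    print(semester_payout(3, 3, 3.0, 3.0))   # never enrolled half-time
```

Structuring the award this way makes explicit that a student who meets every benchmark in both semesters would receive the full $2000, while a student who enrolls but meets no later benchmark would receive only the initial $250 per semester.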

Encouraged by these results, in 2008 MDRC launched a larger PBS demonstration at community colleges in Ohio, New York City, Arizona, and Florida [Footnote 5] (N = 2285; N = 1502; N = 1028; N = 1075). The scholarships all targeted low-income populations and made continued receipt of aid contingent upon stipulated enrollment intensity and performance benchmarks (usually part-time enrollment and earning at least a “C”). The scholarships varied in terms of generosity and additional behavioral requirements for parts of the aid. In these RCTs, the experimental group experienced short-term gains of smaller size than in the Louisiana experiment. In only two were there impacts on retention: The Arizona scholarship improved one-year retention by between 2 and 5 percentage points, and at one of the New York sites the treatment group was retained at a 9 percentage point higher rate. The scholarships consistently improved credit accumulation over the first year by between 0.9 and 1.7 credits, and modestly improved academic performance, but these effects shrank to insignificance after the end of the scholarship. Completion effects were for the most part not yet available, but in Ohio the treatment group was 3.3 percentage points more likely to have earned an associate degree or certificate at the end of 2 years.

Collectively the five PBS experiments suggest that additional need-based aid can modestly boost retention and credit accumulation, but seems to be more effective when paired with support services such as tutoring and advisement. In all experiments that incorporated such services (Louisiana, Arizona, and Florida) recipients substantially outpaced the control group in meeting program-specified goals. However, in nearly all cases effects were observable only as long as scholarships were still operative (for results of the Arizona RCT, see Patel and Valenzuela 2013; for Florida, Sommo et al. 2014; for New York, Richburg-Hayes et al. 2011 and Patel and Rudd 2012; for Ohio, Cha and Patel 2010 and Mayer et al. 2015; for a summary of the demonstration, see Patel et al. 2013). What remains unclear, however, is the cost at which these gains were achieved. All of the programs required both financial investment in scholarship payments to students and personnel time, particularly at the community colleges, to implement them. As a result, neither the cost-effectiveness of scholarship programs nor what community colleges would need in order to implement them is known.

There are at least two ongoing experiments involving either financial aid itself or its method of disbursement. In 2014, the Wisconsin HOPE Lab launched an RCT investigating the impact of need-based scholarships on low-income students who indicate interest in STEM fields. And MDRC is testing a program entitled “Aid Like a Paycheck” that disburses financial aid in small amounts regularly throughout the semester, based on the notion that doing so will temper the “feast or famine” dynamic occurring when aid is distributed in one lump sum. A pilot program was conducted at three community colleges in 2010 (Ware et al. 2013), and a large-scale randomized control trial is presently underway.

5.2 Free Computers

Colleges—and even community colleges—tend to assume that their students have access to the Internet. However, in 2010 only 66% of community college students with household incomes below $20,000 per year had home computers with Internet access (Fairlie and Grunberg 2014). In 2006, a randomized control trial at a community college in northern California tested the impact of providing students with free computers. Researchers recruited 286 students for the experiment, and half were given refurbished computers. Treated students were slightly more likely to take courses which would transfer to a state four-year college: Transfer-eligible courses made up 66% of all courses taken by treated students and 61% of courses taken by untreated students. And in the first 2 years, treated students were slightly more likely to take courses for a letter grade. But no impacts were found on passing courses, earning degrees or certificates, or transferring to a four-year college (Fairlie and Grunberg 2014; Fairlie and London 2012).

5.3 Financial Aid Information

The financial aid system is complex and requires students to make weighty decisions, and many community college students negotiate it alone. Not surprisingly, this can lead to costly errors. For instance, students who receive Pell grants may not know that they need to reapply for them annually. Nationally, 10% of Pell-eligible students fail to re-apply for financial aid in their second year of college, and the resulting loss of aid is strongly predictive of dropping out (Bird and Castleman 2014).

There are two experiments that identify the impacts of providing students with financial aid information. Castleman and Page (2015) conducted a randomized control trial among low-income first-year college students in the Boston area in which the treatment group was sent text-message reminders to re-file the FAFSA. Among community college students, receipt of text reminders improved retention into the fall and spring semesters of sophomore year by 12 and 14 percentage points, respectively. Impacts were larger among students with lower high school GPAs. Barr et al. (2016) carried out an experiment with new student loan applicants at the Community College of Baltimore in which treated students were sent, over the course of a month, a series of texts with student loan facts. The texts told students that they could borrow less (and sometimes more) than the amount offered by their institution, that monthly repayments depend on the amount borrowed and the repayment plan, and that there are lifetime limits on borrowing. Students receiving the texts borrowed 9% less in Stafford loans and 12% less in unsubsidized Stafford loans, and larger declines in borrowing were observed among new enrollees, Black students, low-income students, and students with lower GPAs.

Turner (2015) is presently conducting an experiment with community college students in three states which randomizes the default option presented to loan applicants. For some, the default option will be to take out a loan, and students will have to take action to opt out, while for the others the opposite will be true. Additionally, the experiment will randomly assign some to be presented with a particular loan amount as a default while others will have to choose a loan amount, and some applicants will be prompted to complete a worksheet helping them take stock of their resources and expenses before making a decision while others will not.

5.4 College Skills Classes

College skills classes are one of many interventions that community colleges provide premised on the notion that many students do not have the requisite cultural capital to successfully negotiate higher education. They aim to build skills in study habits, time management, organization, self-presentation, goal-setting, and negotiating the educational bureaucracy. In this manner, they are analogous to remedial courses, but are lower-stakes as they are usually pass/fail and grant at most one credit.

College skills courses offered to or required of first-semester freshmen are common, but there is little rigorous research on their effectiveness and most studies are descriptive in nature (Derby and Smith 2004; O’Gara et al. 2009; Zeidenberg et al. 2007). MDRC evaluated the impacts of two college skills course programs for students on academic probation at a community college in the Los Angeles area. In both programs, the treatment consisted of a two-semester college skills course taught by a college counselor, a “Success Center” that provided tutoring services, and a modest voucher to cover the cost of textbooks. In the first program, the skills course was presented to those randomized into treatment as optional; they were merely encouraged to enroll, and participation in tutoring was not enforced. As a result, only half of the treatment group took the first-semester course, very few took the second-semester course, tutoring services were rarely utilized, and treatment effects were nonexistent. In the second iteration, students were told (falsely) that they were required to take the first-semester skills course and were strongly encouraged to take the second-semester course, and attendance at tutoring sessions was enforced by instructors. Take-up was much better in this iteration, and the treatment group earned 2.7 additional credits on average over the two semesters of the program and was 7 percentage points more likely to pass all of their classes. At the end of the program year, the experimental group was 10 percentage points less likely to be on academic probation, though this impact did not persist after one additional semester (Scrivener et al. 2009; Weiss et al. 2011). While the programs’ evaluations suggest that skills course programs might be a promising strategy, the interventions involve additional resources on the part of community colleges (e.g., services and vouchers). 
However, existing evaluations do not describe the resources required for implementation, nor the programs’ costs.

5.5 Social-Psychological Interventions

Social psychologists have recently explored the impacts of teaching individuals that intelligence is not fixed but rather can be augmented through training and effort. Interventions designed to instill a “growth mindset” informed by this incremental theory of intelligence have been found to effectively boost the academic performance of four-year college students and other groups (Blackwell et al. 2007). Building on this work, Paunesku, Yeager, and colleagues developed a 30-minute intervention (a webinar and reinforcement activity) that teaches viewers that intellectual skills are learned rather than fixed, and tested it in a community college context. In one field experiment involving mostly Latino students at a Los Angeles-area community college, treated students earned overall GPAs which were 0.18 grade points higher in the following semester. In a second experiment the intervention was tested among students in remedial math courses. In this case, the treated group dropped out of their math class at less than half the rate of the control group (9% vs 20%) (Yeager and Dweck 2012; Yeager et al. 2013).

Other researchers have investigated ways to impact students’ motivation and therefore performance in academic contexts. Harackiewicz and colleagues have investigated the impacts of both “utility” interventions and “values” interventions. In the former, students are provided information about the labor market value of science and math skills; in the latter, students complete a brief in-class writing assignment in which they select and explore values (such as spiritual or religious values, career, or belonging to a group) that are important to them. Such interventions have been found to improve outcomes among both high school and university students (Harackiewicz et al. 2014; Harackiewicz et al. 2015). The researchers are currently working with the Wisconsin HOPE Lab to assess the impacts of similar interventions at six two-year colleges in Wisconsin.

5.6 Incentivizing Academic Momentum

The academic momentum perspective suggests that the speed at which a student makes progress towards a degree—through accumulating credits or clearing remedial requirements—has an independent causal impact on their likelihood of completion (Adelman 1999; Attewell et al. 2012; Attewell and Monaghan 2016). This may be because rapid completion minimizes cumulative exposure to the risk of an event that could derail schooling, or because students who spend more time involved in schoolwork will be more academically integrated into the institution. Attewell conducted a pair of randomized control trials at community colleges in the City University of New York to test two applications of this theory. In the first, students who were attending college part-time (fewer than 12 credits) in the fall semester were incentivized to “bump up” to 12 or more credits in the spring. In the second, students who had elected not to sign up for summer courses after their first year in college were incentivized to do so. In both cases, the incentive was a generous $1000. In the experiment involving increased credit load, the treatment group was more likely to be retained into the second year, and at the end of the second year had accumulated six additional credits on average. In the summer coursework experiment, treated students were 8 percentage points more likely to still be enrolled two semesters after treatment, and had accumulated an additional three credits by the end of their second year of college (Attewell and Douglas 2016).

6 School-Level Interventions

In contrast to the interventions outlined above, school-level interventions augment or alter the institutional environment that individuals must navigate in order to attain their goals. They may by extension augment students’ stock of knowledge or capacities, and they need not deny that individual deficits contribute to generating unacceptable outcomes. But they do presuppose that the institutional environment is changeable, and that the status quo may contain unnecessary barriers to goal-attainment. We identified 15 such interventions.

6.1 “Enhanced” Student Services

As noted earlier, counseling centers have been singled out for critique by scholars of late. Because of the complexity of community colleges as institutions and students’ lack of assistance from knowledgeable family members, effective counseling emerges as utterly crucial to providing the information and guidance necessary for student success (Allen et al. 2013). Effective counseling could also help students feel more connected to the institution by establishing a relationship with at least one trustworthy staff member. But such counseling is simply not present at most community colleges, where counseling services are student-initiated and counselors are responsible for a large number of students and are given little training or time to serve them.

One RCT conducted by MDRC at two Ohio community colleges investigated the effect of “enhanced” counseling services. In this evaluation, treatment group students were assigned to a specific counselor, with whom they were expected to meet regularly, and this counselor was assigned a reduced caseload (160:1 rather than the usual 1000:1). Treated students were also assigned a designated contact person in the financial aid office and were given a $150 stipend per semester conditional on meeting with counselors. During the two semesters the program was active, impacts were substantial. Treated students’ fall-to-spring retention was 7 percentage points higher than the control group, and treated students accumulated a half credit extra over the course of the year. In surveys, the program group also was more likely to describe their college experience as “good” or “excellent,” report that they had a campus staff member on whom they relied for support, and receive financial aid in the spring semester. After the program year these gains did not persist, but many students continued to seek out the counselor formerly assigned to them (Scrivener and Au 2007; Scrivener and Pi 2007; Scrivener and Weiss 2009).

While the program’s impacts were substantial, replicating it elsewhere is hampered somewhat by the absence of information on the resources the community colleges dedicated to its implementation. The program likely required the colleges to dedicate additional personnel hours to carry out the intervention, particularly from counselors, with whom students met more frequently. However, the study does not describe in detail how the colleges supplied these hours, whether by reallocating or expanding existing counselor time or by hiring additional personnel.

6.2 Mentoring

MDRC carried out an evaluation of a “light-touch” mentoring program for students taking developmental and early college-level math courses at a community college in McAllen, Texas. In the program, students’ math sections were randomly assigned to treatment and control categories. Treated sections were assigned a non-faculty college employee who acted as a mentor for the students in the course and informed them about additional support services, such as the tutoring center. The program succeeded in increasing students’ utilization of on-campus services such as the tutoring center, and treated students were more likely to report feeling that they had someone on campus to whom they could turn for help. However, there were no statistically significant differences in pass rates, GPA, or final exam score. Among part-time students, however, the treatment group was more likely to pass their math course and earned slightly higher scores on the final (Visher et al. 2010).

6.3 Testing and Remediation

As previously discussed, remediation is the near-universal institutional compromise strategy community colleges have adopted to resolve the dilemma of being open-door institutions of advanced education. Analogous to the situation with financial aid, the effect of taking a remedial course must be separated empirically from the effects of the academic weaknesses that landed students in the remedial course (Levin and Calcagno 2008). But the matter is even more complicated because though taking a remedial course could improve one’s skills and odds of completion, being assigned to remediation has considerable (likely negative) consequences in its own right. The net impact of a school’s testing and remediation policy is the balance of these two opposing effects—something that is typically overlooked in the research literature. In part because of this methodological confusion, scholars have failed to reach consensus on remediation’s impacts (Bailey 2009; Melguizo et al. 2011). Observational studies that compare those who take remedial courses and those who do not tend to find only small differences in completion, and their authors have interpreted this as demonstrating that remedial courses are effective (Adelman 1998, 1999; Attewell et al. 2006; Bahr 2008; Fike and Fike 2008). But studies employing more sophisticated quasi-experimental designs have found impacts to be neutral-to-negative (Boatman and Long 2010; Calcagno and Long 2008; Martorell and McFarlin 2011; Scott-Clayton and Rodriguez 2012; for a counter-example, see Bettinger and Long 2009). A recent meta-analysis of this work finds that being placed into remediation has a small, but statistically significant, negative impact on credit accumulation, ever passing the course for which remediation was needed, and degree attainment (Valentine et al. 2016).

There are four randomized control trials that deal with remediation at community colleges in one form or another. Three RCTs investigated the effects of taking remedial courses versus entering directly into college-level work. An early RCT conducted in the late 1960s randomly placed students identified as needing remediation in English either directly into a college-level class or into a remedial course (Sharon 1972). The students assigned to remedial courses were retained at rates similar to control group students, and passed college-level English at similar rates. However, they tended to earn higher grades in this course, suggesting some positive impact of remediation on academic skills. Forty years later, Moss and Yeaton (2013) conducted an experiment in which students immediately below the remedial cutoff on a math placement test were randomly placed into either remedial courses or college-level courses. The authors do not present results for retention or accumulation of college-level credits, but find a positive impact of taking remedial courses on grades in college-level math. Their RCT sample was very small (N = 63), but the authors also conducted supplemental analyses using regression-discontinuity designs and found similar effects. Finally, Logue and others at the City University of New York randomly assigned students identified as requiring remediation into either remedial algebra, remedial algebra with additional tutoring, or college-level statistics with tutoring (Logue et al. 2016). Early results show no difference between the two groups taking developmental algebra, but the group assigned to take statistics passed their assigned course at far higher rates, accumulated more credits in both the program and post-program semesters, and were retained at similar rates. 
The researchers attribute the gain in credits among the “mainstreamed” group to three factors: They passed their assigned course at higher rates, this course counted for college credit, and it served as a prerequisite for other courses, enabling students to pursue their majors more freely. The costs—to students and institutions—of these remedial course interventions are not well understood. The evaluations did not incorporate direct measures of costs in their analysis.

Another experiment investigated the impacts of alternative methods of remedial placement (Evans and Henry 2015). This project contains two separate experimental groups, both of which take an alternative test called the ALEKS, which provides self-paced personalized learning modules for those who fail the test and allows them to retake it. One of the treatment groups, “ALEKS-2,” could only retake the test once, and only after completing all assigned modules. The other, “ALEKS-5,” could retake the test up to four times but were not required to complete learning modules. Control students took the standard placement test (the COMPASS, in this case). Only first-semester results are at this point available, but both treatment groups were less likely to be placed into remediation. In addition, the ALEKS-2 group was more likely than the control group to take college-level math in their first semester, and the ALEKS-5 group was more likely to pass it.

Two more remediation interventions will be evaluated in the near future. In the first study, being conducted by MDRC, the Community College Research Center (CCRC) and CUNY, students placed into remediation will be randomly assigned to complete a one-semester intensive developmental immersion program (entitled CUNYStart) prior to official matriculation. The second, MDRC’s “Developmental Education Acceleration Project,” evaluates two innovative formats for administering developmental education. The first treatment group will be assigned to developmental courses that are personalized, module-based, and which permit them to enter and exit at their own pace. The second treatment group will take an accelerated program which squeezes two remedial courses into one single semester.

6.4 Summer Bridge Courses

“Summer bridge” programs (courses or programs that take place during the summer prior to freshman year) are widespread in higher education. These programs vary substantially in their content and are nearly always voluntary. At community colleges, bridge courses are oriented nearly exclusively to teaching basic skills to incoming students who scored low enough on placement exams to require remediation, offering such students an opportunity to complete at least some required remedial coursework prior to the first semester. Oftentimes students are not charged to take these courses. Observational research indicates that these programs effectively boost persistence and even six-year attainment (Douglas and Attewell 2014).

MDRC, in conjunction with the National Center for Postsecondary Research (NCPR), carried out an experimental evaluation of summer bridge programs at eight colleges in Texas, including six community colleges (Barnett et al. 2012; Wathington et al. 2011). Early impacts were encouraging: Treated students were more likely to take and to pass college-level math and English courses in their first year than control-group students, suggesting that the bridge program successfully enabled some students to quickly clear remedial requirements. But there was no impact on one-year retention, and the advantages in college-level course completion and credit accumulation narrowed to statistical insignificance by the fourth semester. Researchers at CUNY carried out another experimental evaluation of summer bridge programs. In this intervention, students who missed the enrollment deadline for bridge courses were recruited into an experimental evaluation, and those selected for treatment were offered $1000 to enroll in sections of these courses reserved for the experiment. Researchers estimated a non-significant negative 5 percentage point effect of taking bridge courses on one-year retention, and a non-significant negative effect on credit accumulation (Attewell and Douglas 2016).

6.5 Learning Communities

Learning communities are geared towards providing community college students the opportunity to build social connections to other students and to faculty that they typically do not form because of their loose connection to the college. They proceed on the notion that “social and academic integration” into the social world of the college is a key mechanism for retaining students. Social bonds engender a feeling of belonging and an obligation to make good on implicit promises to return and complete degrees. They additionally provide students with information networks and sources of emotional support.

Learning communities seek to cultivate student success through three interconnected mechanisms (Tinto 1997). First, a group of students take multiple courses together, providing opportunities for students to form social bonds and to support each other across courses (Karp 2011). Second, the courses are linked in terms of content, allowing for deeper engagement with material. Third, faculty who teach the linked courses collaborate and share information about student progress and engagement. Additionally, many learning communities feature reduced class sizes, block-scheduling, and auxiliary services such as advising and tutoring. Frequently, one of the linked courses is a first-year college skills seminar. Observational research on learning communities almost uniformly finds positive impacts on outcomes such as student engagement, interaction with faculty, relationships with peers, perceptions of institutions, academic performance, and retention (Minkler 2002; Raftery 2005; Tinto et al. 1994).

There have been seven experimental evaluations of learning communities, all by MDRC. In 2003 MDRC evaluated an existing learning community for entering students at Kingsborough Community College in New York City. The treatment group was split into learning communities of roughly 25 students who took three courses together in their first semester: introductory English (mostly remedial), a course in their major, and a college skills course. There were substantial support services: Treated students were assigned an academic advisor (who was granted a smaller caseload), had reduced class sizes, were provided enhanced and often in-class tutoring, and were granted a $150 book voucher for the semester. These supports, and the learning community itself, only lasted a single semester. The program had encouraging early impacts on retention and completion of remedial courses, as well as on non-cognitive outcomes such as self-reported academic engagement and reported feelings of belonging at the school. Positive impacts faded out after four semesters (Bloom and Sommo 2005; Scrivener et al. 2008; Weiss et al. 2014, 2015).

Subsequently, in conjunction with the NCPR, MDRC carried out experimental evaluations of learning communities at six separate community colleges beginning in fall 2007. These evaluations involved, collectively, more than 6500 students, and the programs evaluated varied from the earlier study in two important respects. First, they for the most part lacked any supplementary services, thus presenting purer tests of learning community impacts. Second, whereas the earlier project evaluated an established learning community at scale, the later evaluations involved either newly-created learning community programs or existing programs which were rapidly scaled up, incorporating faculty with little experience with learning communities and no history of collaborating on linked courses. As was the case previously, all were one-semester interventions. The resources required to implement these learning communities, and the corresponding costs, are essentially unknown.

“No frills” learning communities at Merced College in California, Hillsborough Community College in Florida, and the Community College of Baltimore had negligible results. At Merced, the treated group was about a third of a course ahead of the control group in the completion of remedial sequences, and at Hillsborough the treatment group was 5 percentage points more likely to be retained into the second semester. No further impacts were detected on academic performance or credit accumulation, and no effects lasted beyond the first post-program semester (Weiss et al. 2010; Weissman et al. 2012). Learning communities at Houston Community College and Queensborough Community College in New York were slightly more elaborate. The Houston program linked remedial math to a student success course, and tutoring and counseling were inconsistently provided. Treated students completed their first remedial math course at a rate 14 percentage points higher during the program semester, and this advantage persisted for two semesters after the program (Weissman et al. 2011). The Queensborough learning community was supported with a full-time coordinator and a college advisor assigned solely to treatment group students. The treatment group was substantially more likely to pass the first developmental math course in their sequence during the program semester and the second math course in the first post-program semester, and there were modest effects on credit accumulation (Weissman et al. 2011). Finally, researchers returned to Kingsborough Community College to evaluate learning communities aimed at students pursuing particular occupational majors. The program was beset by implementation and recruitment problems, and the school was forced to alter the program repeatedly throughout the evaluation. Not surprisingly, no effects were found on outcomes of interest (Visher and Torres 2011).

7 A Comprehensive Support Intervention: CUNY ASAP

In 2007, with support from the City’s Center for Economic Opportunity, the City University of New York launched what is likely the single most ambitious program to boost degree completion in a community college setting. The Accelerated Study in Associate Programs (ASAP) initiative does not rely on a single intervention such as financial aid or smaller class sizes. Instead, it builds on prior research, such as the 2003 learning community evaluation at Kingsborough, which suggested that multifaceted programs that address multiple student needs simultaneously tend to have more robust impacts.

ASAP draws on many of the strategies involved in the interventions we have already described and adds a few more. First, there is financial support: Tuition and fees not met through other grants are waived, and students are provided with subway passes and can rent textbooks free of charge. Building on the academic momentum perspective, participating students are required to enroll full-time (at least 12 credits), though they have the alternative of enrolling at slightly less than full-time and using winter and summer intersessions to meet credit requirements. There are mandatory support services: Students are assigned an advisor (who has a reduced caseload) and required to meet with them at least twice per month, and they are also required to meet once per semester with a career services and employment counselor (dedicated to ASAP). Students are required to attend tutoring if they are in remedial courses, on academic probation, or are re-taking a course they have previously failed. In each semester ASAP students are required to take a non-credit seminar focused on building and developing college skills. Learning communities are also involved in students’ first year, though precisely how these are conducted varies across CUNY campuses. Students are strongly encouraged to take required remedial courses as early as possible, to attend tutoring for courses in which they are struggling, and to make use of winter and summer intersessions to accumulate credits more rapidly. ASAP courses also tend to be somewhat smaller than average courses at CUNY community colleges.

But perhaps most important, in contrast to most interventions reviewed thus far, ASAP is not limited to a single semester or year. Instead, conditional on meeting certain requirements—such as remaining enrolled full-time—students can participate in and access the benefits of ASAP for three full years. Most interventions reviewed above were at least modestly successful during program semesters, but effects faded out rapidly thereafter. One reaction to this is to conclude that the interventions “don’t work” because they did not produce “lasting gains.” ASAP planners drew the opposite conclusion: In order to be successful, an intervention strategy needs to be not only comprehensive but sustained.

In its first few years, ASAP was open only to “college-ready” students—that is, students with no remedial requirements. Internal evaluations, utilizing propensity-score matching methods, suggested that participation in ASAP was associated with a 28.4 percentage point gain in three-year degree completion and a half-semester’s difference in credits accumulated after 3 years (Linderman and Kolenovic 2012). Encouraged by these findings, CUNY contracted with MDRC to carry out a randomized assignment evaluation. This evaluation began in the spring semester of 2010, and involved just under 900 students at three CUNY community colleges. Instead of limiting eligibility to college-ready students, participation was limited to low-income entering students who demonstrated some, though not deep, remedial need (1 or 2 required courses).

The evaluation found that ASAP generated large early impacts. By the end of the first year, the treatment group was 25 percentage points more likely to have completed all required remedial courses, and had earned 3 more college-level credits on average (Scrivener et al. 2012). These impacts grew over time rather than attenuating. After 3 years, treated students had accumulated 7.7 more credits on average than the control group. And whereas only 21.8% of the control group had completed a degree, 40.1% of the treatment group had done so, an 83% gain. Treated students were also 9.4 percentage points more likely to have transferred to a four-year college within 3 years (Scrivener et al. 2015).

The ASAP evaluation stands out as one of the few that systematically evaluated program costs, providing some guidance to community colleges seeking to replicate the program. The accompanying cost study shows that ASAP’s gains did not come cheaply. The direct costs were estimated to be over $14,000 per student over 3 years. ASAP students also took more classes than control students, and incorporating these costs could raise the per-student total to between $16,000 and $18,500. However, given the large increase in completion, researchers estimated that, per degree, ASAP spent $13,000 less than was spent on the control group (Scrivener et al. 2015). Although the evaluation reports both costs and effects, the absence of similar studies makes it impossible to weigh this evidence against other interventions. A question therefore lingers: is ASAP cost-effective relative to other possible interventions?
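The per-degree comparison above rests on simple arithmetic: a program that costs more per student can still cost less per degree if it raises the completion rate enough. The following Python sketch illustrates the calculation under stated assumptions. The completion rates are the ones quoted above; the per-student dollar totals are hypothetical placeholders, not figures from the cost study, and the function names are ours. With the rounded rates, the relative gain works out to just under 84%, in line with the roughly 83% gain quoted above.

```python
def relative_gain(control_rate_pct: float, treatment_rate_pct: float) -> float:
    """Relative gain of treatment over control, expressed as a percentage."""
    return (treatment_rate_pct - control_rate_pct) / control_rate_pct * 100.0


def cost_per_degree(cost_per_student: float, completion_rate_pct: float) -> float:
    """Average spending per degree earned: cost per student / share completing."""
    return cost_per_student / (completion_rate_pct / 100.0)


# Three-year degree completion rates quoted in the text (percent).
CONTROL_RATE = 21.8
ASAP_RATE = 40.1

# HYPOTHETICAL total spending per student, chosen only for illustration.
# These are NOT figures from the evaluation or its cost study.
HYPOTHETICAL_CONTROL_COST = 20_000.0
HYPOTHETICAL_ASAP_COST = 36_000.0

gain = relative_gain(CONTROL_RATE, ASAP_RATE)
control_cpd = cost_per_degree(HYPOTHETICAL_CONTROL_COST, CONTROL_RATE)
asap_cpd = cost_per_degree(HYPOTHETICAL_ASAP_COST, ASAP_RATE)

if __name__ == "__main__":
    print(f"Relative gain in completion: {gain:.1f}%")
    print(f"Cost per degree, control (hypothetical): ${control_cpd:,.0f}")
    print(f"Cost per degree, ASAP (hypothetical): ${asap_cpd:,.0f}")
```

In this illustration the program's cost per degree falls below the control group's even though it spends considerably more per student, which is the logic behind the evaluation's per-degree finding.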

Efforts to evaluate comprehensive intervention models like ASAP are continuing. MDRC is currently conducting a replication of ASAP at three community colleges in Ohio; the evaluation cohort enrolled in fall 2015 and will be tracked for 3 years. And at Tarrant County College in Fort Worth, Texas, researchers are carrying out an experimental evaluation of a program called Stay the Course. Operated in partnership with a local non-profit, Stay the Course is designed to address non-academic obstacles faced by low-income community college students through provision of comprehensive case management and emergency financial assistance (Evans et al. 2014).

8 Discussion and Conclusion

As the vast majority of new jobs require postsecondary training (Carnevale et al. 2013), low rates of degree completion increasingly disadvantage lower-SES and minority youth. Community colleges are positioned to play a central role in expanding educational attainment and narrowing educational disparities. To do so, however, they must pivot institutionally from guaranteeing access to facilitating degree completion, without compromising on the former. Making community colleges deliver on promises of educational opportunity will require not timid reforms or tinkering, but bold innovation and substantial resources.

Community colleges arose in the era of postwar educational optimism with an explicit set of goals—expanding access to college, providing a broad and comprehensive set of programs, and serving local communities—which they have emphatically achieved. Today, politicians, scholars, and foundations are demanding that community colleges do better in terms of degree completion. The simplest method for community colleges to increase degree completion is to restrict access to those who are “college-ready.” Or community colleges could reduce institutional complexity by eliminating scores of occupational programs that serve millions and are valued by employers. However, few policymakers wish to see community colleges abandoning either their democratic mission or the provision of vocational certifications at low cost. Instead, in an era of withering public support, community colleges are being ordered to do more with less (Jenkins and Belfield 2014).

The new focus on completion has brought unprecedented scholarly attention, supported by unprecedented research funding, to community colleges, leading to a number of promising experimentally evaluated interventions. Need-based financial aid, particularly when accompanied by supports, has increased retention and credit accumulation. Learning communities do not seem to generate large gains on their own, but have short-run impacts on retention and movement through remedial sequences when coupled with counseling and other supports. “Enhanced” counseling appears to benefit students as long as it remains available. And there is evidence that limiting exposure to remediation can speed progress toward degrees.

Other interventions should be evaluated experimentally. For example, scholars have proposed developing “guided pathways,” clear sequences of courses leading directly to credentials and/or transfer to a four-year college. Others suggest that providing housing or food support (campus food pantries or a collegiate equivalent of free and reduced lunch) will give low-income students greater security and improve educational outcomes (Broton et al. 2014; Goldrick-Rab et al. 2015). Another promising intervention is emergency financial assistance for students facing unexpected crises that endanger their persistence (Dachelet and Goldrick-Rab 2015; Geckler et al. 2008). Single-stop centers, which provide information about and access to a range of benefits and services in a single location, are being established on campuses across the country, and could be evaluated using randomized encouragement (Goldrick-Rab et al. 2014).

The available evidence suggests two tentative conclusions. First, simple interventions do not appear to work as well as multifaceted programs. Complex interventions like ASAP can lead researchers to wonder which components are most impactful. But this question assumes that components have independent, additive effects, when they may instead interact with and reinforce each other. Second, the fact that many programs’ impacts are positive while in operation but fade afterwards suggests that effective interventions must be prolonged. Underlying problems such as resource scarcity, academic weaknesses, or limited college knowledge do not vanish when a program closes up shop, but reassert themselves vigorously. Policymakers should not expect short-term programs to have anything other than short-term impacts.

As community colleges operate with limited and unpredictable resources, policymakers and educational leaders considering reforms must attend to the resources required for implementation (Belfield et al. 2014; Belfield and Jenkins 2014). However, as we noted, existing evaluations largely ignore such matters (Belfield 2015). Community college leaders need to know where to invest scarce dollars and how programmatic decisions influence resource requirements. State-level policymakers are also at a disadvantage. There are few benchmarks for determining at what level community colleges should be funded (Chancellor’s Office of the California Community Colleges 2003), and none are explicitly tied to performance (Kahlenberg 2015). Future evaluations should examine interventions’ relative cost-effectiveness and clearly delineate the resources required for implementation. Otherwise, community colleges risk squandering scarce resources or selecting interventions that they lack the capacity to implement. Research conducted by MDRC, the Wisconsin HOPE Lab, and the Center for Benefit-Cost Studies in Education has begun to incorporate estimates of cost, but more is needed.

The evaluation literature also devotes inadequate attention to the context in which interventions occur. As we discussed, structural features of community colleges work at cross-purposes with efforts to raise completion rates, and recent fiscal developments have further eroded capacity for improvement. Additionally, community college students confront a broader opportunity structure which presents immense obstacles to improving their situation through educational upgrading. If evaluators do not take these structural realities into consideration, unrealistic expectations will be set and improper conclusions reached. Too often, when an intervention has small, short-lived impacts, this is taken as evidence that the strategy in the abstract “doesn’t work.” A more realistic conclusion is likely that the intervention is, by itself, inadequate to overcome the collective weight of countervailing structural forces bearing upon individuals and institutions at the bottom of the educational and social hierarchy.

Failure to take resources and power into account enables the tacit assumption that the only actors that matter in determining community college students’ success are the colleges and the students themselves. This conceals the real and pressing need for broader structural reforms to ensure that community colleges are able to provide real educational opportunity to all Americans, regardless of background.

Notes

1. Applicants to the Buffett Scholarship needed to specify a “target” college in their initial application, but students were not bound to attend these colleges.

2. Opening Doors was a multi-site experimental demonstration examining the impact of different sorts of interventions designed to improve college retention and completion among lower-income students. These various interventions included learning communities, college skills courses, intensive counseling, and performance-based scholarships.

3. This name is something of a misnomer. Most scholarships and grants, need-based or otherwise, have performance and enrollment requirements for continued receipt. Indeed, the specific performance requirements of the PBSs were substantially more lenient than those of the WSG or Buffett Scholarship. What distinguishes the PBSs is the incremental disbursal of grants and the tying of these disbursals to the performance of specific behaviors, such as attending tutoring sessions.

4. We are summarizing results for the first two study cohorts (out of four) only, because four semesters of data are available for these cohorts. Program-semester effects for cohorts 3 and 4 are similar, though of smaller magnitude.

5. A performance-based scholarship RCT was carried out at the University of New Mexico, and another targeted low-income high school seniors in California (Cash for College), but these results fall outside the purview of this review.

References

Adelman, C. (1998). The kiss of death? An alternative view of college remediation. National Crosstalk, 6(3), 11.

Adelman, C. (1999). Answers in the toolbox: Academic intensity, attendance patterns, and bachelor’s degree attainment. Washington, DC: U.S. Department of Education.

Allen, J. M., Smith, C. L., & Muehleck, J. K. (2013). What kinds of advising are important to community college pre- and post-transfer students? Community College Review, 41(4), 330–345.

Alon, S. (2011). Who benefits most from financial aid? The heterogeneous effect of need-based grants on students’ college persistence. Social Science Quarterly, 92(3), 807–829.

Anderson, D. M., & Goldrick-Rab, S. (2016). Impact of a private need-based grant on beginning two-year college students (Working Paper). Madison: Wisconsin HOPE Lab.

Angrist, J. D., Autor, D., Hudson, S., & Pallais, A. (2015, January). Leveling up: Early results from a randomized evaluation of post-secondary aid. Presented at the annual conference of the American Economic Association. Boston.

Astin, A. W. (1984). Student involvement: A developmental theory for higher education. Journal of College Student Personnel, 25(4), 297–308.

Attewell, P., & Douglas, D. (2016). When randomized control trials and observational studies diverge: Evidence from studies of academic momentum (Working Paper). CUNY Academic Momentum Project.

Attewell, P., & Monaghan, D. B. (2016). How many credits should an undergraduate take? Research in Higher Education, 57(6), 682–713.

Attewell, P., Lavin, D., Domina, T., & Levey, T. (2006). New evidence on college remediation. Journal of Higher Education, 77(5), 886–924.

Attewell, P., Heil, S., & Reisel, L. (2012). What is academic momentum? And does it matter? Educational Evaluation and Policy Analysis, 34(1), 27–44.