Abstract

The task of sequential pattern generation can be viewed as the inverse of sequential pattern mining. This paper presents two novel approaches to sequential pattern generation with noise: one based on stochastic automata and context-free grammars, and one based on a Hidden Markov model. The distinctive feature of these methods is their ability to produce output from noisy and fuzzy input data. We also present detailed algorithms for both approaches.

The work was financially supported by Russian Foundation for Basic Research (projects 15-01-03067-a, 16-01-00597-a, 15-08-01886-a).




1 Introduction and Preliminary Work

We live in what can be characterized as the Big Data era. Intelligent systems, network device traffic, the Internet of Things, Machine-to-Machine interaction, and social networks create unprecedentedly large data arrays. The data are heterogeneous and, in a sense, unlimited in volume. Under these circumstances, traditional statistical verification-driven Data Mining is giving way to discovery-driven Data Mining [1]. One of the basic elements of modern discovery-driven Data Mining is the concept of a pattern. Patterns represent regularities in the acquired data and can be expressed in a compact form that is understandable to people. It is worth noting that raw data may be incomplete, vague, uncertain, or affected by other factors that make them non-crisp. The most prominent tools for describing these phenomena are probability theory, fuzzy set theory, rough set theory, and their numerous extensions.

Pattern discovery is carried out by methods that make no a priori assumptions about the sample structure, probability laws, or the fuzziness or roughness of value sets. An important principle of modern Data Mining methods is the non-triviality of the patterns to be discovered: the patterns found should reflect non-obvious, unexpected regularities in the data that make up the so-called hidden knowledge. One of the promising approaches to Data Mining is Sequential Pattern Mining (SPM), which has attracted much research attention in recent years [2, 3]. It has broad applications, including behaviour analysis [4], analysis of web-access patterns [5], discovery of transaction patterns in databases [6], patterns of computer attacks [7], and intrusion detection [8].

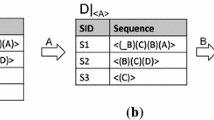

The problem of sequential pattern mining is formally defined as follows. Let there be a set of sources and a set of items \(I=\left\{ i_1,i_2,...,i_n\right\} \). At some time instant we register an event \(e \subseteq I \), i.e., a collection of items. An ordered list of events defines a sequence \(s = \left\langle s_1,s_2,...,s_k \right\rangle \). In general, a sequence s is stored in a database D together with an indication of its event source. A pattern is a user-defined subsequence \(s_p \subseteq S\) that is selected according to the indicator variable \(X_i\left( S,D\right) \), whose value equals 1 if \( s_p \leqslant D_i\), i.e., \(s_p\) is contained in \(D_i\), the ith source sequence in database D, and 0 otherwise. The mining task is to find all patterns \(s_p\) that satisfy some user-defined constraints, for example, patterns with specific values or patterns related to a user-defined threshold.
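To make the indicator concrete, here is a minimal Python sketch assuming the usual SPM containment relation: \(s_p \leqslant D_i\) holds when each event of \(s_p\) is a subset of a distinct event of \(D_i\), in the same order. The functions `is_contained`, `indicator`, and `support`, and the toy database, are hypothetical illustrations rather than part of the paper.

```python
from typing import FrozenSet, List, Sequence

Event = FrozenSet[str]  # an event e ⊆ I, i.e. a set of items


def is_contained(pattern: Sequence[Event], sequence: Sequence[Event]) -> bool:
    """True if each pattern event is a subset of a distinct, later sequence event.

    Greedy left-to-right matching (earliest possible match) is sufficient.
    """
    j = 0  # index of the next pattern event still to be matched
    for event in sequence:
        if j < len(pattern) and pattern[j] <= event:
            j += 1
    return j == len(pattern)


def indicator(pattern: Sequence[Event], database: List[Sequence[Event]], i: int) -> int:
    """X_i: 1 if the pattern is contained in the i-th source sequence D_i, else 0."""
    return 1 if is_contained(pattern, database[i]) else 0


def support(pattern: Sequence[Event], database: List[Sequence[Event]]) -> int:
    """Number of source sequences in D that contain the pattern."""
    return sum(indicator(pattern, database, i) for i in range(len(database)))


def ev(*items: str) -> Event:
    return frozenset(items)


D = [
    [ev("a"), ev("b", "c"), ev("d")],
    [ev("a", "b"), ev("d")],
    [ev("c"), ev("a")],
]
s_p = [ev("a"), ev("d")]
print(support(s_p, D))  # 2
```

A threshold constraint such as "support at least 2" can then be checked by comparing `support(s_p, D)` with the user-defined value.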

Further extensions of the SPM problem can be divided into three categories: extensions that add uncertainty [9, 10], extensions that introduce fuzzification [11, 12], and pattern discovery in a noisy environment [13]. Uncertainty in the SPM problem concerns the possibility of receiving inaccurate data from sources, i.e., attribute-level uncertainty [14]. Event-level uncertainty also exists and is described in [15].

Fuzzification of input values can be implemented in different ways. The simplest procedure is based on a measure of the difference between variables \(x_i\) and \(y_i\): for an interval \([a,b]\) with \(a<b\), one can define the two-parameter membership function \( f_i(x_i,y_i)=\left\{ \begin{array}{ll} 0, & \left| x_i-y_i \right| \le a, \\ \frac{\left| x_i-y_i \right| -a}{b-a}, & a<\left| x_i-y_i \right| <b, \\ 1, & \left| x_i-y_i \right| \ge b. \end{array} \right. \)
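A direct transcription of this membership function, as a sketch; the name `membership` is our own, and the code assumes \(a<b\).

```python
def membership(x: float, y: float, a: float, b: float) -> float:
    """Two-parameter membership function f_i(x_i, y_i) on the interval [a, b].

    Returns 0 when |x - y| <= a, 1 when |x - y| >= b, and rises linearly
    between the two thresholds.
    """
    if not a < b:
        raise ValueError("require a < b")
    d = abs(x - y)
    if d <= a:
        return 0.0
    if d >= b:
        return 1.0
    return (d - a) / (b - a)


print(membership(1.0, 1.5, 1.0, 3.0))  # 0.0
print(membership(0.0, 2.0, 1.0, 3.0))  # 0.5
print(membership(0.0, 5.0, 1.0, 3.0))  # 1.0
```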

We describe the general structure of the noise as follows. Let \(E={{\left( {{E}_{i}} \right) }_{i\ge 1}}\) denote a stochastic process of binary random variables \({{E}_{i}}\in \left\{ 0,1 \right\} \). We also regard \({{E}_{i}}\) as elements of \(GF\left( 2 \right) \) with the standard addition and multiplication, and interpret these variables as indicating the presence or absence of noise in the generated sequence. As the basis of the noise model we take the stochastic basis \(\left\{ \varOmega ,F,{{\left( {{F}_{i}} \right) }_{i\ge 0}},P \right\} \), where \(\varOmega \) is the sample space (the states of the modelled subsequence), F is a sigma algebra of subsets of \(\varOmega \) (the random events in the modelled subsequence), \(\left( F_i \right) \) is a non-decreasing sequence of sub-sigma-algebras of F, and \(F_i\) represents the information about the state available up to and including time i. The initial sigma algebra \({{F}_{0}}=\sigma \left\{ \varOmega ,\varnothing \right\} \) is trivial, and \(F=\sigma \left( \bigcup \limits _{i=0}^{\infty }{{{F}_{i}}} \right) \).
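In the GF(2) view, noise acts on a binary sequence by addition modulo 2, i.e., XOR: a bit flips exactly where \(E_i=1\). The sketch below assumes, purely for illustration, that the \(E_i\) are i.i.d. Bernoulli(p); the process E in the text need not be of this kind, and the helper names are hypothetical.

```python
import random
from typing import List


def bernoulli_noise(n: int, p: float, rng: random.Random) -> List[int]:
    """E_1..E_n as i.i.d. Bernoulli(p) bits (an illustrative assumption)."""
    return [1 if rng.random() < p else 0 for _ in range(n)]


def add_gf2(x: List[int], e: List[int]) -> List[int]:
    """Componentwise addition in GF(2), i.e. XOR."""
    if len(x) != len(e):
        raise ValueError("sequences must have equal length")
    return [xi ^ ei for xi, ei in zip(x, e)]


rng = random.Random(42)
clean = [0, 1, 1, 0, 1, 0, 0, 1]
noise = bernoulli_noise(len(clean), 0.2, rng)
noisy = add_gf2(clean, noise)
# Adding the same noise again removes it, since e + e = 0 in GF(2).
assert add_gf2(noisy, noise) == clean
```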

Two sections of the paper present the main results. Section 2 proposes a novel algorithm for sequential pattern generation using a stochastic automata model. In Sect. 3 we develop an algorithm for sequential pattern generation based on a Hidden Markov model.

2 Fuzzy Sequential Pattern Generation Based on Stochastic Automata Model

In this section we propose a novel algorithm for fuzzy sequential pattern generation; note that the fuzzification stage itself is not discussed here. As discussed in the previous section, the pattern consists of a series of events that model two fuzzy states: a “bad” state and a “good” state. Our use of quasi-periodic processes is based on the assumption that identical events occupy contiguous positions; that is, the pattern consists of alternating runs of “bad” events and runs of “good” events. Thus, one can distinguish alternating runs of events whose error probabilities differ significantly. Let \(\lambda \) denote the number of erroneous “bad” events in a run, and \(\mu \) the number of error-free “good” events in a run. The lengths of runs of “bad” and “good” events are random variables with probability distributions \({{f}_{\lambda }}\left( \tau \right) \) and \({{f}_{\mu }}\left( \tau \right) \), respectively, \(\tau =0,1,...,n,...\). We then consider the sequence \({{\left\langle {{\lambda }_{i}},{{\mu }_{i}}\right\rangle }_{i\ge 0}}\). The random variables \({{\lambda }_{i}}\) and \({{\mu }_{i}}\) are independent. The random variables \({{\lambda }_{0}},{{\lambda }_{1}},...\) are independent and identically distributed with distribution \({{f}_{\lambda }}\left( \tau \right) \), and the random variables \({{\mu }_{0}},{{\mu }_{1}},...\) are independent and identically distributed with distribution \({{f}_{\mu }}\left( \tau \right) \). The sequence \({{\left( {{\lambda }_{i}},{{\mu }_{i}} \right) }_{i\ge 0}}\) partitions the positions of the pattern into non-overlapping intervals in one of two ways:

if the pattern starts in the “good” state:

\(\left[ 0,{{\mu }_{0}}-1 \right] ,\,\left[ {{\mu }_{0}},{{\mu }_{0}}+{{\lambda }_{0}}-1 \right] ,...,\left[ \sum \limits _{i=0}^{n}{{{\mu }_{i}}}+\sum \limits _{i=0}^{n-1}{{{\lambda }_{i}}},\sum \limits _{i=0}^{n}{{{\mu }_{i}}}+\sum \limits _{i=0}^{n}{{{\lambda }_{i}}}-1 \right] ,...\),

and, if the pattern starts in the “bad” state:

\(\left[ 0,{{\lambda }_{0}}-1 \right] ,\,\left[ {{\lambda }_{0}},{{\lambda }_{0}}+{{\mu }_{0}}-1 \right] ,...,\left[ \sum \limits _{i=0}^{n}{{{\lambda }_{i}}}+\sum \limits _{i=0}^{n-1}{{{\mu }_{i}}},\sum \limits _{i=0}^{n}{{{\lambda }_{i}}}+\sum \limits _{i=0}^{n}{{{\mu }_{i}}}-1 \right] ,...\).
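Both partitions can be computed directly from sampled or observed run lengths. This sketch (hypothetical function `alternating_intervals`) returns labelled closed intervals; it assumes equally many \(\lambda_i\) and \(\mu_i\) values and skips zero-length runs, which the distributions allow because \(\tau\) may be 0.

```python
from typing import List, Sequence, Tuple


def alternating_intervals(
    mu: Sequence[int], lam: Sequence[int], start_good: bool = True
) -> List[Tuple[str, int, int]]:
    """Split positions 0, 1, ... into labelled closed intervals [lo, hi].

    Runs alternate between "good" (lengths mu_i) and "bad" (lengths lam_i);
    `start_good` selects which of the two partitions is used.
    """
    if len(mu) != len(lam):
        raise ValueError("need one (lambda_i, mu_i) pair per quasi-period")
    first = ("good", mu) if start_good else ("bad", lam)
    second = ("bad", lam) if start_good else ("good", mu)
    out: List[Tuple[str, int, int]] = []
    pos = 0
    for i in range(len(mu)):
        for label, lengths in (first, second):
            length = lengths[i]
            if length > 0:  # a zero-length run yields no interval
                out.append((label, pos, pos + length - 1))
            pos += length
    return out


print(alternating_intervals(mu=[3, 2], lam=[2, 1]))
# [('good', 0, 2), ('bad', 3, 4), ('good', 5, 6), ('bad', 7, 7)]
```

The second interval, [3, 4], equals \([\mu_0, \mu_0+\lambda_0-1]\), as in the first partition.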

Next, consider the noise as a binary sequence E that these stochastic intervals divide into segments \({{E}^{0}},{{E}^{1}},...\), so that E is a concatenation of quasi-periods. Based on the well-known moving average models, let \({{M}_{1}}\) denote the model that generates sequences of erroneous “bad” events and \({{M}_{2}}\) the model that generates sequences of error-free “good” events. We use the following generation rule: if a segment of the generated pattern belongs to an interval \(\left[ \sum \limits _{i=0}^{n}{{{\mu }_{i}}}+\sum \limits _{i=0}^{n-1}{{{\lambda }_{i}}},\sum \limits _{i=0}^{n}{{{\mu }_{i}}}+\sum \limits _{i=0}^{n}{{{\lambda }_{i}}}-1 \right] \), it is generated by model \({{M}_{1}}\); if it belongs to an interval \(\left[ \sum \limits _{i=0}^{n}{{{\lambda }_{i}}}+\sum \limits _{i=0}^{n-1}{{{\mu }_{i}}},\sum \limits _{i=0}^{n}{{{\lambda }_{i}}}+\sum \limits _{i=0}^{n}{{{\mu }_{i}}}-1 \right] \), it is generated by model \({{M}_{2}}\).
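The generation rule amounts to dispatching each interval to its model. In this sketch, \(M_1\) and \(M_2\) are placeholder callables (a Gaussian-scatter model and a constant model, chosen only for illustration); the text specifies them as moving average models, and all names here are hypothetical.

```python
import random
from typing import Callable, List, Sequence, Tuple

# A segment model maps a segment length to that many generated values.
SegmentModel = Callable[[int], List[float]]


def generate_pattern(
    intervals: Sequence[Tuple[str, int, int]],
    m1: SegmentModel,
    m2: SegmentModel,
) -> List[float]:
    """Fill each closed interval [lo, hi] with values from its model.

    "bad" intervals are generated by M_1, "good" intervals by M_2.
    Intervals must be sorted, contiguous and start at position 0.
    """
    out: List[float] = []
    for label, lo, hi in intervals:
        if lo != len(out):
            raise ValueError("intervals must be contiguous and start at 0")
        length = hi - lo + 1
        segment = (m1 if label == "bad" else m2)(length)
        if len(segment) != length:
            raise ValueError("model returned a segment of the wrong length")
        out.extend(segment)
    return out


rng = random.Random(0)


def m1(n: int) -> List[float]:
    """Hypothetical "bad" model: values scattered around 1.0."""
    return [1.0 + rng.gauss(0.0, 0.5) for _ in range(n)]


def m2(n: int) -> List[float]:
    """Hypothetical "good" model: a noise-free constant level."""
    return [0.0] * n


x = generate_pattern([("good", 0, 2), ("bad", 3, 4), ("good", 5, 6)], m1, m2)
```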

A more general generation model can be obtained using attribute grammars [16] as follows. A context-free grammar is a quadruple \(G=\left\langle S,N,T,P \right\rangle \), where S is the start symbol, N is the alphabet of non-terminal symbols, T is the alphabet of terminal symbols, and P is the set of inference rules. We assume that the grammar contains no non-terminal symbols that do not occur in any derivation. An attribute grammar consists of a context-free grammar G, called the base of the attribute grammar; mappings Z and I, which assign to each symbol \(X\in N\cup T\) disjoint sets \(Z\left( X\right) \) and \(I\left( X\right) \) of synthesized and inherited attributes, respectively; and, for each rule \(p\in P\), a set \(M\left( p \right) \) of semantic rules (rules for computing attributes).

2.1 Formal-Grammar Noise Model

Next we propose a modified attribute grammar whose base is a stochastic context-free grammar \(G=\left\langle S,N,T,Q \right\rangle \). The element Q is a finite set of stochastic inference rules. A stochastic inference rule has the form \(Y \xrightarrow {q} X\), where \(Y \in N\), \(X\in {{\left( N\cup T \right) }^{*}}\), and q is the probability of applying the rule. We require that if \(Y\xrightarrow {{{q}_{1}}}{{X}_{1}},Y\xrightarrow {{{q}_{2}}}{{X}_{2}},...,Y\xrightarrow {{{q}_{n}}}{{X}_{n}}\) are all the rules in Q with left-hand side Y, then \(\sum \limits _{i=1}^{n}{{q}_{i}}=1\) and \({{q}_{i}}>0\) for all i. The mappings \(Z\left( X \right) \) and \(I\left( X \right) \) are defined for each \(X\in T\), and a set of semantic rules is defined for each \(X \in T\).
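The normalisation condition on Q can be checked mechanically, and a rule for a given nonterminal can be drawn with `random.choices` using the rule probabilities as weights. The dictionary representation of Q and the function names are assumptions made for this sketch.

```python
import math
import random
from typing import Dict, List, Tuple

# Q as {Y: [(q, X), ...]} for rules Y -q-> X; X is a tuple of symbols, () is ε.
Rules = Dict[str, List[Tuple[float, Tuple[str, ...]]]]


def check_stochastic(rules: Rules, tol: float = 1e-9) -> None:
    """Raise ValueError unless every q_i > 0 and the q_i of each Y sum to 1."""
    for lhs, alternatives in rules.items():
        if any(q <= 0.0 for q, _ in alternatives):
            raise ValueError(f"non-positive probability in the rules for {lhs}")
        total = math.fsum(q for q, _ in alternatives)
        if abs(total - 1.0) > tol:
            raise ValueError(f"probabilities for {lhs} sum to {total}, not 1")


def apply_rule(rules: Rules, lhs: str, rng: random.Random) -> Tuple[str, ...]:
    """Choose a right-hand side for `lhs` with the rule probabilities as weights."""
    weights = [q for q, _ in rules[lhs]]
    bodies = [body for _, body in rules[lhs]]
    return rng.choices(bodies, weights=weights, k=1)[0]


rules: Rules = {"Y": [(0.3, ("a", "Y")), (0.7, ())]}  # Y -> aY (0.3) | ε (0.7)
check_stochastic(rules)
print(apply_rule(rules, "Y", random.Random(7)))
```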

Next we interpret the terminal alphabet. The attributes in the alphabet A are \(a\left( X \right) ,b(X),\,M\left( X \right) \,\). Attribute \(a\left( X \right) \) is inherited, while attributes \(b\left( X \right) \) and \(M\left( X \right) \) are synthesized. The variable \(b\left( X \right) \) is a random variable with probability distribution \({{f}_{X}}\left( \tau \right) \). Attribute \(M\left( X \right) \) is a moving average model associated with the pattern state (“bad” or “good”). Let X be included in a subsequence \(\lambda \in {{\left( N\cup T \right) }^{*}}\); if Y is the symbol preceding X in \(\lambda \), then \(a(X)=a\left( Y \right) +b\left( Y \right) \). If no terminal symbol precedes X in \(\lambda \), then \(a\left( X \right) =0\). Hence, every terminal symbol X creates the stochastic interval \(\left[ a\left( X \right) ,a\left( X \right) +b\left( X \right) -1 \right] \) and, through the model \(M\left( X \right) \), defines the probability distribution of the noisy segment of the generated pattern that corresponds to this interval. In what follows we consider only automaton attribute grammars with inference rules of the form \(Y\xrightarrow {q}ZX\), where \(Z\in T\) and \(X\in N\), or \(Y\xrightarrow {q}Z\), or \(Y\xrightarrow {q}\varepsilon \), where \(\varepsilon \) is the empty subsequence. The reasoning above allows us to propose Algorithm 1 for generating noisy segments of the pattern.
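The attribute evaluation reduces to a running sum. In this sketch the lengths \(b(X)\) are supplied as already-drawn numbers instead of being sampled from \(f_X(\tau)\), and `attribute_intervals` is a hypothetical name.

```python
from typing import List, Sequence, Tuple


def attribute_intervals(
    terminals: Sequence[str], lengths: Sequence[int]
) -> List[Tuple[str, int, int, int]]:
    """Return (X, a(X), b(X), a(X) + b(X) - 1) for each derived terminal X.

    a(X) = 0 for the first terminal and a(X) = a(Y) + b(Y), where Y is the
    preceding terminal; lengths[k] plays the role of b(X) for the k-th terminal.
    """
    if len(terminals) != len(lengths):
        raise ValueError("one length b(X) is needed per terminal")
    result = []
    a = 0  # inherited attribute of the first terminal
    for symbol, b in zip(terminals, lengths):
        result.append((symbol, a, b, a + b - 1))
        a = a + b  # passed on to the next terminal
    return result


print(attribute_intervals("abab", [3, 1, 2, 4]))
# [('a', 0, 3, 2), ('b', 3, 1, 3), ('a', 4, 2, 5), ('b', 6, 4, 9)]
```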

Next, we comment on the method above. Consider a model described by a modified attribute grammar with \(N=\left\{ S,A,B \right\} \) and \(T=\left\{ a,b \right\} \), where symbol a is a fuzzy “good” state and symbol b is a fuzzy “bad” state, and with the inference rules \(Q=\left\{ S\xrightarrow {q}aB,S\xrightarrow {1-q}bA,A\xrightarrow {1}aB,B\xrightarrow {1}bA \right\} \). A sequence starts with a “good” state or a “bad” state with probability q or \(1-q\), respectively. The range of good states corresponds to the gap between groups of states. Within a group, intervals of “bad” states alternate with intervals of “good” states, and the groups themselves alternate with intervals of “good” states. The number of “bad” intervals within a group is a geometrically distributed random variable.
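A small simulation of the rule set Q, as a sketch: because the rules for A and B as listed never terminate, it produces only the first n terminals. The function name is hypothetical.

```python
import random
from typing import List


def derive_prefix(q: float, n: int, rng: random.Random) -> List[str]:
    """First n terminals derived from S -> aB (q) | bA (1 - q), A -> aB, B -> bA.

    "a" is the fuzzy "good" state and "b" the fuzzy "bad" state.
    """
    rules = {
        "S": [(q, ("a", "B")), (1.0 - q, ("b", "A"))],
        "A": [(1.0, ("a", "B"))],
        "B": [(1.0, ("b", "A"))],
    }
    out: List[str] = []
    nonterminal = "S"
    while len(out) < n:
        weights = [p for p, _ in rules[nonterminal]]
        bodies = [body for _, body in rules[nonterminal]]
        # Every rule here has the automaton form Y -> Z X with Z terminal.
        terminal, nonterminal = rng.choices(bodies, weights=weights, k=1)[0]
        out.append(terminal)
    return out


print(derive_prefix(1.0, 5, random.Random(0)))  # ['a', 'b', 'a', 'b', 'a']
```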

Next, we propose a more general Algorithm 2, which accounts for an intermediate state of the subsequence between “bad” and “good” intervals. In what follows we use the notation “a”, “b”, “c” for “bad”, “intermediate” and “good” intervals of the generated sequence pattern, respectively, and \({{f}_{X}}\left( \tau \right) \) is the probability distribution of the interval length. \(M\left( \mathbf {X} \right) \) is a moving average model of the following type: \({{x}_{i}}={{d}_{0}}+{{d}_{1}}{{x}_{i-1}}+{{d}_{2}}{{x}_{i-2}}\), where the coefficients \(d_0,d_1,d_2\) are replaced by \({{a}_{0}},{{a}_{1}},{{a}_{2}}\), \({{b}_{0}},{{b}_{1}},{{b}_{2}}\) and \({{c}_{0}},{{c}_{1}},{{c}_{2}}\) for “bad”, “intermediate” and “good” intervals, respectively.
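A sketch of the three-state generator under stated assumptions: interval types and lengths are given as a list of runs (for example, draws from \(f_X(\tau)\)), and the recursion carries its last two values across run boundaries, which the text does not specify. The recursion is implemented exactly as written; since it feeds back past outputs, it is autoregressive in form. Names and coefficient values are hypothetical.

```python
from typing import Dict, List, Sequence, Tuple

# (d0, d1, d2) per interval type, in the notation of the text:
# "a" = bad, "b" = intermediate, "c" = good.
Coeffs = Dict[str, Tuple[float, float, float]]


def generate_three_state(
    runs: Sequence[Tuple[str, int]],
    coeffs: Coeffs,
    x_init: Tuple[float, float] = (0.0, 0.0),
) -> List[float]:
    """Apply x_i = d0 + d1*x_{i-1} + d2*x_{i-2}, switching coefficients per run."""
    prev2, prev1 = x_init  # x_{i-2}, x_{i-1}
    out: List[float] = []
    for kind, length in runs:
        d0, d1, d2 = coeffs[kind]
        for _ in range(length):
            x = d0 + d1 * prev1 + d2 * prev2
            out.append(x)
            prev2, prev1 = prev1, x
    return out


coeffs: Coeffs = {"a": (1.0, 0.5, 0.0), "b": (0.0, 1.0, 0.0), "c": (0.0, 0.0, 0.0)}
print(generate_three_state([("c", 2), ("a", 2), ("b", 1)], coeffs))
# [0.0, 0.0, 1.0, 1.5, 1.5]
```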

The algorithm above requires that data chosen from the arrays be deleted after selection, to avoid repetitions in the generated sequence.

3 Sequential Pattern Generation with Fuzziness Based on Hidden Markov Model

Hidden Markov models, i.e., doubly embedded stochastic processes with an underlying unobservable stochastic process, are now used as a tool for SPM [17, 18]. Again, we consider additive noise \(E=\left\{ {{E}_{i}} \right\} \) as a random discrete sequence, and we add the random state \(D=\left\{ {{D}_{i}} \right\} \), \({{D}_{i}}\in {{W}_{D}}\), of the generated pattern interval. The probability distribution of the noise E is \(P\left( E \right) =\sum \limits _{D}{P\left( {}^{E}/{}_{D} \right) P(D)}\).

The conditional distribution \(P\left( {}^{E}/{}_{D} \right) \) appears because the occurrence of interference is completely determined by the state of interval D. The independence condition yields the probability distribution \(P\left( {}^{E}/{}_{D} \right) =\prod \limits _{i}{P\left( {}^{{{E}_{i}}}/{}_{D} \right) }\). From the causality condition it follows that \(P\left( {}^{{{E}_{i}}}/{}_{D} \right) =P\left( {}^{{{E}_{i}}}/{}_{{{D}^{\left( i \right) }}} \right) \), where \({{D}^{\left( i \right) }}=\left( ...,{{D}_{i-1}},{{D}_{i}} \right) \). We further assume that \(P\left( {}^{{{E}_{i}}}/{}_{{{D}^{\left( i \right) }}} \right) =P\left( {}^{{{E}_{i}}}/{}_{{{D}_{i}}} \right) \), which means that \(E_i\) depends on \(D_i\) only. Thus, the probability distribution law is

\(P\left( E \right) =\sum \limits _{D}{P\left( D \right) \prod \limits _{i}{P\left( {}^{{{E}_{i}}}/{}_{{{D}_{i}}} \right) }}\). (1)

If the sequence D is a Markov sequence, \(P\left( D \right) =P\left( {{D}_{0}} \right) \prod \limits _{i\ge 1}{P\left( {}^{{{D}_{i}}}/{}_{{{D}_{i-1}}} \right) }\), then (1) takes the following form:

\(P\left( E \right) =\sum \limits _{{{D}_{0}}}{P\left( {{D}_{0}} \right) }\sum \limits _{{{D}_{1}}}{P\left( {}^{{{E}_{1}}}/{}_{{{D}_{1}}} \right) P\left( {}^{{{D}_{1}}}/{}_{{{D}_{0}}} \right) }\cdots \sum \limits _{{{D}_{n}}}{P\left( {}^{{{E}_{n}}}/{}_{{{D}_{n}}} \right) }P\left( {}^{{{D}_{n}}}/{}_{{{D}_{n-1}}} \right) \), (2)

where \(n=\left| E \right| \). We assume that the sequences E and D are finite. Equation (2) yields a recurrent procedure for calculating \(P\left( E \right) \) through a sequence of functions \(\phi \) as follows:

In (3), \({{E}^{k}}=\left\{ {{E}_{i}} \right\} _{i=k}^{n}\) denotes the part of E from position k to position n; in particular, \(E=E^1\).
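The exact form of Eq. (3) does not appear in this text, so the following is only a sketch of one natural reading: nesting the sums in (2) from the inside out gives a backward recursion in which \(\phi_k(D_{k-1})\) is the probability of \(E^k\) given \(D_{k-1}\), with \(\phi_{n+1}\equiv 1\) and \(P(E)=\sum_{D_0}P(D_0)\phi_1(D_0)\). The code compares it with a brute-force evaluation of (2) over all state paths; parameter values and function names are hypothetical.

```python
import itertools
from typing import List, Sequence

Matrix = List[List[float]]
# trans[u][v] = P(D_i = v / D_{i-1} = u); emit[v][e] = P(E_i = e / D_i = v).


def prob_backward(E: Sequence[int], init: List[float], trans: Matrix, emit: Matrix) -> float:
    """P(E) via phi_k(u) = sum_v P(E_k / v) P(v / u) phi_{k+1}(v), phi_{n+1} = 1."""
    states = range(len(init))
    phi = [1.0] * len(init)  # phi_{n+1}
    for e in reversed(E):  # k = n, n - 1, ..., 1
        phi = [sum(emit[v][e] * trans[u][v] * phi[v] for v in states) for u in states]
    return sum(init[u] * phi[u] for u in states)  # outermost sum over D_0


def prob_brute_force(E: Sequence[int], init: List[float], trans: Matrix, emit: Matrix) -> float:
    """Sum of P(D) * prod_i P(E_i / D_i) over every path D_0, ..., D_n."""
    total = 0.0
    for path in itertools.product(range(len(init)), repeat=len(E) + 1):
        p = init[path[0]]
        for i, e in enumerate(E, start=1):
            p *= trans[path[i - 1]][path[i]] * emit[path[i]][e]
        total += p
    return total


init = [0.6, 0.4]                 # hypothetical P(D_0)
trans = [[0.9, 0.1], [0.3, 0.7]]  # hypothetical transition probabilities
emit = [[1.0, 0.0], [0.2, 0.8]]   # hypothetical noise probabilities
E = [0, 1, 1, 0, 1]
print(prob_backward(E, init, trans, emit), prob_brute_force(E, init, trans, emit))
```

The recursion costs \(O(n\,|W_D|^2)\) operations, whereas the direct sum over paths grows as \(|W_D|^{n+1}\).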

Consider a model with \({E_i \in \left\{ 0,1 \right\} , D_i \in \left\{ 0,1 \right\} }\), in which “bad” and “good” states interleave, where \(P\left( {}^{{{E}_{i}}=1}/{}_{0} \right) =0,\,P\left( {}^{{{E}_{i}}=1}/{}_{1} \right) =1-\varepsilon \), \(P\left( {}^{0}/{}_{0} \right) ={{p}_{0}},\,P\left( {}^{1}/{}_{1} \right) =1-{{p}_{1}}\). The model is completely defined by the three parameters \(\varepsilon , p_0,p_1\). The conditional probability distributions are defined as:
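The stated probabilities determine both conditional distributions of this model. The sketch builds them as matrices under our reading that \(P(0/0)=p_0\) and \(P(1/1)=1-p_1\) are state-transition probabilities of D; the function name is hypothetical.

```python
from typing import List, Tuple

Matrix = List[List[float]]


def three_parameter_model(eps: float, p0: float, p1: float) -> Tuple[Matrix, Matrix]:
    """Return (trans, emit) with trans[u][v] = P(D_i = v / D_{i-1} = u)
    and emit[v][e] = P(E_i = e / D_i = v).
    """
    for name, value in (("eps", eps), ("p0", p0), ("p1", p1)):
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"{name} must lie in [0, 1]")
    trans = [
        [p0, 1.0 - p0],  # from state 0: stay with probability p0
        [p1, 1.0 - p1],  # from state 1: stay with probability 1 - p1
    ]
    emit = [
        [1.0, 0.0],        # state 0 never produces noise: P(E_i = 1 / 0) = 0
        [eps, 1.0 - eps],  # state 1: P(E_i = 1 / 1) = 1 - eps
    ]
    return trans, emit


trans, emit = three_parameter_model(eps=0.1, p0=0.95, p1=0.3)
```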

Equation (5) is not well suited for calculating P(E); therefore we use Eq. (3) as follows:

Let us consider the estimation of the hidden sequence D from the observed sequence E. Since \(P\left( {}^{{{E}_{i}}=1}/{}_{0} \right) =0\), an observation \(E_i=1\) can only arise in state 1; hence the estimate satisfies \(D_{i}^{*}=1\) if \({{E}_{i}}=1\), whereas \(D_{i}^{*}\) may equal 0 or 1 if \({{E}_{i}}=0\). For this reason the task can be divided into two subtasks:

where

With the parameters \(\varepsilon ,p_0,p_1\), we can apply the following equation

and
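For decoding D from E, the standard Viterbi algorithm is one option; it is swapped in here for illustration and is not the authors' two-subtask procedure. Because \(P(E_i=1/0)=0\) has log-probability \(-\infty\), every position with \(E_i=1\) is necessarily decoded as \(D_i^*=1\), in agreement with the observation above. The initial distribution over \(D_1\), the parameter values, and the transition reading of \(p_0,p_1\) are assumptions.

```python
import math
from typing import List, Sequence

Matrix = List[List[float]]


def _log(p: float) -> float:
    """Natural log that maps probability 0 to -inf."""
    return math.log(p) if p > 0.0 else float("-inf")


def viterbi(E: Sequence[int], init: List[float], trans: Matrix, emit: Matrix) -> List[int]:
    """Most probable hidden sequence D_1..D_n for the observed noise E_1..E_n.

    init[v] = P(D_1 = v), trans[u][v] = P(D_i = v / D_{i-1} = u),
    emit[v][e] = P(E_i = e / D_i = v).
    """
    if not E:
        return []
    states = range(len(init))
    score = [_log(init[v]) + _log(emit[v][E[0]]) for v in states]
    back: List[List[int]] = []  # back[k][v]: best predecessor of state v
    for e in E[1:]:
        pointers = [max(states, key=lambda u: score[u] + _log(trans[u][v])) for v in states]
        score = [
            score[pointers[v]] + _log(trans[pointers[v]][v]) + _log(emit[v][e])
            for v in states
        ]
        back.append(pointers)
    state = max(states, key=lambda v: score[v])
    path = [state]
    for pointers in reversed(back):  # follow the back-pointers to the start
        state = pointers[state]
        path.append(state)
    return path[::-1]


eps, p0, p1 = 0.1, 0.9, 0.2  # hypothetical parameter values
trans = [[p0, 1 - p0], [p1, 1 - p1]]
emit = [[1.0, 0.0], [eps, 1 - eps]]
init = [0.5, 0.5]
E = [0, 0, 1, 1, 0, 1, 0, 0]
D_star = viterbi(E, init, trans, emit)
```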

Based on the considerations above, we propose Algorithm 3 for generating a sequence pattern using a Hidden Markov model. We again use two states of the generated interval: g is a “good” state and b is a “bad” state. The probabilities \(P\left( {}^{{{E}_{i}}=1}/{}_{g} \right) \) and \(P\left( {}^{{{E}_{i}}=1}/{}_{b} \right) \) are given. We are also given the stochastic matrix \(Q=\left( \begin{matrix} p &{} 1-q \\ 1-p &{} q \\ \end{matrix} \right) \), where p is the probability of remaining in the “good” state and q is the probability of remaining in the “bad” state. The initial probabilities \(p_0\) and \(1-p_0\) are also defined.
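A minimal generator in the spirit of Algorithm 3, as a sketch only: it assumes that \(p_0\) is the probability of starting in g and reads Q column-wise, so the first column holds the transitions out of g and the second those out of b. The function and parameter names are hypothetical.

```python
import random
from typing import List, Tuple


def generate_hmm(
    n: int,
    p: float,
    q: float,
    p_start_good: float,
    p_noise_good: float,
    p_noise_bad: float,
    rng: random.Random,
) -> Tuple[List[str], List[int]]:
    """Return (states, noise bits) of length n.

    p / q: probabilities of staying in "g" / "b" (the diagonal of Q);
    p_noise_good / p_noise_bad: P(E_i = 1 / g) and P(E_i = 1 / b).
    """
    state = "g" if rng.random() < p_start_good else "b"
    states: List[str] = []
    bits: List[int] = []
    for _ in range(n):
        states.append(state)
        p_noise = p_noise_good if state == "g" else p_noise_bad
        bits.append(1 if rng.random() < p_noise else 0)
        stay = p if state == "g" else q
        if rng.random() >= stay:  # leave the current state
            state = "b" if state == "g" else "g"
    return states, bits


states, bits = generate_hmm(200, p=0.9, q=0.7, p_start_good=0.5,
                            p_noise_good=0.0, p_noise_bad=0.8, rng=random.Random(1))
```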

A random binary sequence produced by this generator is quasi-periodic, with alternating “bad” and “good” intervals. The length of a “bad” interval is a random variable with distribution \(P\left( \lambda =i \right) ={{q}^{i}}\left( 1-q \right) \), and the length of a “good” interval is a random variable with distribution \(P\left( \mu =i \right) ={{p}^{i}}\left( 1-p \right) \).
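The stated run-length law is geometric, so its mean follows from \(\sum_{i\ge 0} i\,q^i(1-q) = q/(1-q)\), and likewise \(p/(1-p)\) for “good” intervals. The sketch checks this numerically for a hypothetical value q = 0.75; the function names are our own.

```python
def run_length_pmf(i: int, stay: float) -> float:
    """P(length = i) = stay**i * (1 - stay), i = 0, 1, 2, ..."""
    return stay ** i * (1.0 - stay)


def mean_run_length(stay: float) -> float:
    """Closed form of sum_i i * stay**i * (1 - stay)."""
    return stay / (1.0 - stay)


q = 0.75  # hypothetical probability of remaining in the "bad" state
truncated_mean = sum(i * run_length_pmf(i, q) for i in range(2000))
print(round(truncated_mean, 6), mean_run_length(q))  # 3.0 3.0
```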

4 Conclusions

In this paper, two novel approaches to sequential pattern generation have been proposed. In the first approach, the authors proposed a novel algorithm for fuzzy sequential pattern generation based on a stochastic automata model. The distinctive feature of this algorithm is its ability to model noisy segments with an original formal-grammar noise model. The second approach to sequential pattern generation is based on a Hidden Markov model. In this case, the authors consider additive noise as a random discrete sequence and a fuzzification procedure in which a stochastic matrix generates quasi-periodic intervals. Three detailed algorithms implementing these approaches have been developed.

References

Sumathi, S., Sivanandam, S.N.: Introduction to Data Mining and its Applications, vol. 29, pp. 1–828. Springer, Heidelberg (2006). doi:10.1007/978-3-540-34351-6

Li, T.R., Xu, Y., Ruan, D., Pan, W.: Sequential pattern mining. In: Intelligent Data Mining, vol. 5. Studies in Computational Intelligence, pp. 103–122 (2005). doi:10.1007/11004011_5

Shen, W., Wang, J., Han, J.: Sequential pattern mining. In: Frequent Pattern Mining, pp. 261–282 (2014). doi:10.1007/978-3-319-07821-2_11

Wang, Y.L., Wen, L.M., Chen, T.S., Chen, R.C.: Using sequential pattern mining to analyze the behavior on the WELS. In: Qu, X., Yang, Y. (eds.) Information and Business Intelligence. CCIS, vol. 267, pp. 95–101 (2012). doi:10.1007/978-3-642-29084-8_15

Zhou, B., Hui, S.C., Fong, A.C.M.: Efficient sequential access pattern mining for web recommendations. Int. J. Knowl. Based Intell. Eng. Syst. 10(2), 155–168 (2006). doi:10.3233/KES-2006-10205

Gouda, K., Hassaan, M.: Mining sequential data patterns in dense databases. Int. J. Database Manag. Syst. (IJDMS) 3(1), 179–194 (2011). doi:10.5121/ijdms.2011.3112

Sun, H., Sun, J., Chen, H.: Mining frequent attack sequence in web logs. In: Huang, X., Xiang, Y., Li, K.C. (eds.) Green, Pervasive, and Cloud Computing. LNCS, vol. 9663, pp. 243–260 (2016). doi:10.1007/978-3-319-39077-2_16

Song, S.J., Huang, Z., Hu, H.P., Jin, S.Y.: A sequential pattern mining algorithm for misuse intrusion detection. In: Jin, H., Pan, Y., Xiao, N., Sun, J. (eds.) Grid and Cooperative Computing - GCC 2004 Workshops, GCC 2004. LNCS, vol. 3252, pp. 458–465 (2004). doi:10.1007/978-3-540-30207-0_57

Muzammal, M., Raman, R.: Mining sequential patterns from probabilistic databases. Knowl. Inf. Syst. 44(2), 325–358 (2015). doi:10.1007/s10115-014-0766-7

Zhao, Z., Yan, D., Ng, W.: Mining probabilistically frequent sequential patterns in uncertain databases. In: Proceedings of the 15th International Conference on Extending Database Technology, EDBT 2012, pp. 74–85 (2012). doi:10.1145/2247596.2247606

Chen, R.S., Tzeng, G.H., Chen, C.C., Hu, Y.C.: Discovery of fuzzy sequential patterns for fuzzy partitions in quantitative attributes. In: Proceedings of ACS/IEEE International Conference on Computer Systems and Applications, Beirut, pp. 144–150 (2001). doi:10.1109/AICCSA.2001.933967

Chen, Y.L., Huang, T.C.: Discovering fuzzy time-interval sequential patterns in sequence databases. IEEE Trans. Syst. Man Cybern. B Cybern. 35(5), 959–972 (2005). doi:10.1109/TSMCB.2005.847741

Wang, W., Yang, J., Yu, S.: Mining patterns in long sequential data with noise. ACM SIGKDD Explor. Newsl. Spec. Issue Scalable Data Min. Algorithms 2(2), 28–33 (2000). doi:10.1145/380995.381008

Muzammal, M., Raman, R.: Uncertainty in sequential pattern mining. In: MacKinnon, L.M. (ed.) Data Security and Security Data, BNCOD 2010. LNCS, vol. 6121, pp. 147–150 (2010). doi:10.1007/978-3-642-25704-9_18

Zhao, Z., Yan, D., Ng, W.: Mining probabilistically frequent sequential patterns in large uncertain databases. IEEE Trans. Knowl. Data Eng. 26(5), 1171–1184 (2014). doi:10.1109/TKDE.2013.124

Shen, A.: Context-free grammars. In: Algorithms and Programming, Part of the series Springer Undergraduate Texts in Mathematics and Technology, pp. 221–242 (2010). doi:10.1007/978-1-4419-1748-5_15

Jeung, H., Shen, H.T., Zhou, X.: Mining trajectory patterns using hidden Markov Models. In: Song, I.Y., Eder, J., Nguyen, T.M. (eds.) Data Warehousing and Knowledge Discovery, DaWaK 2007. LNCS, vol. 4654, pp. 460–480 (2007). doi:10.1007/978-3-540-74553-2_44

Jaroszewicz, S.: Using interesting sequences to interactively build Hidden Markov Models. Data Min. Knowl. Disc. 21, 186–220 (2010). doi:10.1007/s10618-010-0171-0

Acknowledgements

The authors gratefully acknowledge financial support from the Russian Foundation for Basic Research.


Copyright information

© 2018 Springer International Publishing AG

About this paper

Cite this paper

Butakova, M.A., Chernov, A.V., Guda, A.N. (2018). Algorithms of Sequential Pattern Generation with Noise using Stochastic and Fuzzy Models. In: Abraham, A., Kovalev, S., Tarassov, V., Snasel, V., Vasileva, M., Sukhanov, A. (eds) Proceedings of the Second International Scientific Conference “Intelligent Information Technologies for Industry” (IITI’17). IITI 2017. Advances in Intelligent Systems and Computing, vol 679. Springer, Cham. https://doi.org/10.1007/978-3-319-68321-8_21


DOI: https://doi.org/10.1007/978-3-319-68321-8_21


Publisher Name: Springer, Cham

Print ISBN: 978-3-319-68320-1

Online ISBN: 978-3-319-68321-8
