Abstract

Bayesian networks typically require thousands of probability parameters for their specification, many of which are bound to be inaccurate. Knowledge of the direction of change in an output probability of a network occasioned by changes in one or more of its parameters, i.e. the qualitative effect of parameter changes, has been shown to be useful both for parameter tuning and in pre-processing for inference in credal networks. In this paper we identify classes of parameter for which the qualitative effect on a given output of interest can be identified based upon graphical considerations.

1 Introduction

A Bayesian network defines a unique joint probability distribution over a set of discrete random variables [8]. It combines an acyclic directed graph, representing the independencies among the variables, with a quantification of local discrete distributions. The individual probabilities of these local distributions are called the parameters of the network. A Bayesian network can be used to infer any probability from the represented distribution.

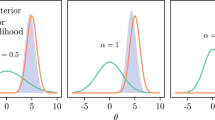

The effect of possible parameter inaccuracies on the output probabilities of a network can be studied with a sensitivity analysis. An output can be described as a fraction of two functions that are linear in any network parameter; the coefficients of the functions are determined by the non-varied parameters [6]. Depending on the coefficients, such so-called sensitivity functions are either monotone increasing or monotone decreasing functions in each parameter. Interestingly, as we showed in previous research, for some outputs and parameters, the sensitivity function is even always increasing (or decreasing) regardless of the specific values of the other network parameters [1]. That is, regardless of the specific quantification of a network, the coefficients of the sensitivity function for a certain parameter can be such that the gradient is always positive (or always negative). In such a case, the qualitative effect of a parameter change on an output probability can be predicted from properties of the network structure, without considering the values of the other network parameters.

Knowledge of the qualitative effect of parameter changes can be exploited for different purposes. Examples of applications can be found in pre-processing for inference in credal networks [1] and in multi-parameter tuning of Bayesian networks [2].

In this paper we present a complete categorisation of a network’s parameters with respect to their qualitative effect on some output, where we assume that the network is pruned beforehand to a sub-network that is computationally relevant to the output. The paper extends the work in [1], in which only a partial categorisation of the network parameters was given. Compared to our previous results, the present results also enable a meaningful categorisation for a wider range of parameters.

2 Preliminaries

2.1 Bayesian Networks and Notation

A Bayesian network \(\mathscr {B}=(G, \Pr )\) represents a joint probability distribution \(\Pr \) over a set of random variables \(\mathbf{W}\) as a factorisation of conditional distributions [5]. The independences underlying this factorisation are read from the directed acyclic graph G by means of the well-known d-separation criterion. In this paper we use upper case W to denote a single random variable, writing lowercase \(w \in W\) to indicate a value of W. For binary-valued W, we use w and \(\overline{w}\) to denote its two possible value assignments. Boldfaced capitals are used to indicate sets of variables or sets of value assignments; the distinction will be clear from the context. Boldfaced lower-case letters are used to indicate a joint value assignment to a set of variables.

Two value assignments are said to be compatible, denoted by \(\sim \), if they agree on the values of the shared variables; otherwise they are said to be incompatible, denoted by \(\not \sim \). We use \(\mathbf{W}_{pa(V)} = \mathbf{W} \cap pa(V)\) to indicate the subset of \(\mathbf{W}\) that is among the parents of V, and \(\mathbf{W}_{\overline{pa}(V)} = \mathbf{W}{\setminus }\mathbf{W}_{pa(V)}\) to indicate its complement in \(\mathbf{W}\); descendants of V are captured by de(V). To conclude, \(\langle \mathbf{T},\mathbf{U}\vert \mathbf{V}\rangle _d\), \(\mathbf{T},\mathbf{U},\mathbf{V}\subseteq \mathbf{W}\), denotes that all variables in \(\mathbf{T}\) are d-separated from all variables in \(\mathbf{U}\) given the variables in \(\mathbf{V}\), where we assume that \(\langle \mathbf{T},\emptyset \vert \mathbf{V}\rangle _d=\) True.
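The compatibility relation can be illustrated with a minimal Python sketch. The representation of value assignments as dictionaries, the function name `compatible`, and the example assignments are assumptions made for illustration only; they do not appear in the paper.

```python
def compatible(a: dict, b: dict) -> bool:
    """Return True if assignments a and b agree on every shared variable."""
    shared = a.keys() & b.keys()
    return all(a[var] == b[var] for var in shared)


# Hypothetical assignments (variable name -> value).
h = {"G": True, "H": True, "K": True}
pi_1 = {"G": True, "R": False}    # agrees with h on the shared variable G
pi_2 = {"G": False, "R": False}   # disagrees with h on G
no_overlap = {"S": True}          # shares no variable with h

print(compatible(pi_1, h))
print(compatible(pi_2, h))
print(compatible(no_overlap, h))
```

Under this encoding, two assignments without shared variables are trivially compatible, since there is nothing for them to disagree on.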

A Bayesian network specifies for each variable \(W\in \mathbf{W}\) exactly one local distribution \(\Pr (W\vert \varvec{\pi })\) over the values of W per value assignment \(\varvec{\pi }\) to its parents pa(W) in G, such that

\[ \Pr (\mathbf{w}) = \prod _{W\in \mathbf{W}} \Pr (w\vert \varvec{\pi })\Big \vert _{w\varvec{\pi }\sim \mathbf{w}} \]

where the notation \(\vert _{prop}\) is used to indicate the properties the arguments in the preceding formula adhere to. The individual probabilities in the local distributions are termed the network’s parameters. A Bayesian network allows for computing any probability over its variables \(\mathbf{W}\). A typical query is \(\Pr (\mathbf{h}\vert \mathbf{f})\), involving two disjoint subsets of \(\mathbf{W}\), often referred to as hypothesis variables (\(\mathbf{H}\)) and evidence variables (\(\mathbf{F}\)); \(\mathbf{W}\) can also include variables not involved in the output query of interest.

Fig. 2. The example network from Fig. 1 after query-dependent pre-processing given the output probability \(\Pr (ghk\vert def)\).

An example Bayesian network is shown in Fig. 1. For output \(\Pr (ghk\vert def)\) we identify hypothesis variables \(\mathbf{H}= \{G,H,K\}\) (double circles), evidence variables \(\mathbf{F}= \{D,E,F\}\) (shaded), and remaining variables \(\{R,S\}\). In addition to the graph, conditional probability tables (CPTs) need to be specified for each node.

2.2 Query Dependent Pre-processing

Prior to computing the result of a query, the Bayesian network can be pre-processed by removing parts of its specification that are easily identified as being irrelevant to the computations. Sets of nodes that can be removed based upon graphical considerations only are nodes d-separated from \(\mathbf{H}\) given \(\mathbf{F}\), irrelevant evidence nodes (effects blocked by other evidence), and barren nodes, that is, nodes in \(\mathbf{W}{\setminus }(\mathbf{H}\cup \mathbf{F})\) which are leaves or have only barren descendants; the remaining nodes coincide with the so-called parameter sensitivity set [3, 7]. In addition, evidence absorption can be applied, where the outgoing arcs of variables with evidence are removed and the CPTs of the former children are reduced by removing the parameters that are incompatible with the observed value(s) of their former parent(s) [4]. After evidence absorption, all variables with evidence correspond to leaves in the graph and the CPT parameters of their former children will all be compatible with the evidence.
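As an illustration of one of these pruning steps, the following Python sketch removes barren nodes by repeatedly deleting leaves that are neither hypothesis nor evidence nodes. The graph encoding (a dictionary mapping each node to its parents), the function name `prune_barren`, and the example graph are hypothetical; the example graph is not the network of Fig. 1.

```python
def prune_barren(parents: dict, keep: set) -> dict:
    """Iteratively drop leaf nodes that are not in `keep` (hypothesis or evidence)."""
    graph = {node: set(ps) for node, ps in parents.items()}
    while True:
        # A node has a child if it occurs in some other node's parent set.
        has_child = set().union(*graph.values())
        barren = {n for n in graph if n not in has_child and n not in keep}
        if not barren:
            return graph
        for n in barren:
            del graph[n]  # a leaf is nobody's parent, so no arcs need fixing


# Hypothetical graph: A -> B -> C -> D and A -> X, with only B being queried.
example_parents = {"A": [], "B": ["A"], "C": ["B"], "D": ["C"], "X": ["A"]}
pruned = prune_barren(example_parents, keep={"B"})
print(sorted(pruned))
```

In this example D and X are removed first, after which C has become a leaf and is removed as well; A is retained because it still has the child B.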

From here on we consider Bayesian networks that are reduced to what we call their query-dependent backbone \({\mathscr {B}}_q\), using the above-mentioned pre-processing options (see Note 1). \({\mathscr {B}}_q\), tailored to the original query \(\Pr (\mathbf{h}\vert \mathbf{f})\), is assumed to be a Bayesian network over variables \(\mathbf{V} \subseteq \mathbf{W}\) from which the now equivalent query \(\Pr (\mathbf{h}{\vert } \mathbf{e})\) is computed for evidence variables \(\mathbf{E}\subseteq \mathbf{F}\); the remaining variables \(\mathbf{V}{\setminus } (\mathbf{H}\cup \mathbf{E})\) will be denoted by \(\mathbf{R}\).

The backbone given output \(\Pr (ghk\vert def)\) in our example network from Fig. 1 is depicted in Fig. 2. After evidence absorption, the arc from F to K and the last two rows of K’s CPT are removed. The node S is removed since it is barren, and D is removed since it is d-separated from the variables in \(\mathbf{H}\) given \(\mathbf{F}\). In the backbone network we have the hypothesis variables \({\mathbf{H}}=\{G,H,K\}\), the evidence variables \({\mathbf{E}}=\{E,F\}\) and the remaining variable \(\mathbf{R}=\{R\}\).
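The CPT reduction performed by evidence absorption can be sketched as follows. The CPT layout (a dictionary from parent-value tuples to local distributions), the function name `absorb_evidence`, the assumed parent set of K, and all probabilities are hypothetical and chosen only to mirror the removal of CPT rows described above; they are not taken from Fig. 1.

```python
def absorb_evidence(parent_names: tuple, cpt: dict, evidence: dict):
    """Drop CPT rows that conflict with the evidence and remove observed parents."""
    observed = [i for i, name in enumerate(parent_names) if name in evidence]
    kept = [i for i, name in enumerate(parent_names) if name not in evidence]
    reduced = {}
    for row, dist in cpt.items():
        # Keep a row only if every observed parent takes its observed value.
        if all(row[i] == evidence[parent_names[i]] for i in observed):
            reduced[tuple(row[i] for i in kept)] = dist
    return tuple(parent_names[i] for i in kept), reduced


# Hypothetical CPT for a binary node K with assumed parents (H, F).
cpt_k = {
    (True, True): {"k": 0.9, "not_k": 0.1},
    (False, True): {"k": 0.6, "not_k": 0.4},
    (True, False): {"k": 0.3, "not_k": 0.7},   # incompatible with F = True
    (False, False): {"k": 0.2, "not_k": 0.8},  # incompatible with F = True
}
new_parents, new_cpt = absorb_evidence(("H", "F"), cpt_k, {"F": True})
print(new_parents, new_cpt)
```

After absorption the observed parent no longer appears among the parents of K, which corresponds to removing the arc between them.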

2.3 Relating Queries to Parameters

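It is well-known that an output of a Bayesian network relates to a network parameter x as a fraction of two functions linear in that parameter:

\[ \Pr (\mathbf{h}\vert \mathbf{e})(x) = \frac{\Pr (\mathbf{h}\mathbf{e})(x)}{\Pr (\mathbf{e})(x)} = \frac{\tau _{1}\cdot x+\tau _{2}}{\kappa _{1}\cdot x+\kappa _{2}} \]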

where the constants \(\tau _{1},\tau _{2},\kappa _{1}\) and \(\kappa _{2}\) are composed of network parameters independent of x [3]. The above function can be generalised to multiple parameters [6] and is typically exploited in the context of sensitivity analysis, to determine how a change in one or more parameters affects \(\Pr (\mathbf{h}\vert \mathbf{e})\). We note that upon varying a parameter x of a distribution, the other parameters of the same distribution have to be co-varied to let the distribution sum to 1. If the distribution is associated with a binary variable, the co-varying parameter equals \(1-x\). If a variable is multi-valued, however, different co-variation schemes are possible [9]. Sensitivity functions are monotonic functions in each parameter, and are either increasing or decreasing functions in such a parameter. Here we consider increasing (decreasing) in a non-strict sense, that is, increasing (decreasing) includes non-decreasing (non-increasing).
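The monotonicity of a one-way sensitivity function follows directly from its functional form. As a short worked step, write \(f(x)\) for \(\Pr (\mathbf{h}\vert \mathbf{e})(x)\) and assume it is expressed with the constants \(\tau _{1},\tau _{2},\kappa _{1},\kappa _{2}\) as a quotient of two linear functions; the symbol f is introduced here only for brevity:

\[ f(x) = \frac{\tau _{1}\cdot x+\tau _{2}}{\kappa _{1}\cdot x+\kappa _{2}} \quad \Longrightarrow \quad f'(x) = \frac{\tau _{1}\cdot \kappa _{2}-\tau _{2}\cdot \kappa _{1}}{(\kappa _{1}\cdot x+\kappa _{2})^{2}} \]

The denominator of the derivative is positive wherever \(\Pr (\mathbf{e})(x)>0\), and its numerator does not depend on x. The sign of the derivative is therefore the same for every value of x, and it is determined entirely by the constants.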

3 Categorisation of Parameters in a Backbone Network \({\mathscr {B}}_q\)

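We will discuss the parameters of the variables in \(\mathbf{R}\), \(\mathbf{H}\) and \(\mathbf{E}\) of a backbone network and categorise these parameters according to their qualitative effect on \(\Pr (\mathbf{h}\vert \mathbf{e})\) as summarised in Table 1. In the proofs of our propositions we repeatedly use the definition of conditional probability and the factorisation defined by \({\mathscr {B}}_q\):

\[ \Pr (\mathbf{h}\vert \mathbf{e}) = \frac{\Pr (\mathbf{h}\mathbf{e})}{\Pr (\mathbf{e})} \]

in which for the numerator \(\Pr (\mathbf{h}\mathbf{e})\) we find

\[ \Pr (\mathbf{h}\mathbf{e}) = \sum _{\mathbf{r}\in \mathbf{R}}\ \prod _{V\in \mathbf{V}} \Pr (v\vert \varvec{\pi })\Big \vert _{v\varvec{\pi }\sim \mathbf{r}\mathbf{h}\mathbf{e}} \qquad (1) \]

and for the denominator \(\Pr (\mathbf{e})\) we find that

\[ \Pr (\mathbf{e}) = \sum _{\mathbf{r}\in \mathbf{R},\, \mathbf{h}^{*}\in \mathbf{H}}\ \prod _{V\in \mathbf{V}} \Pr (v\vert \varvec{\pi })\Big \vert _{v\varvec{\pi }\sim \mathbf{r}\mathbf{h}^{*}\mathbf{e}} \qquad (2) \]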

Parameters which are not present in Eqs. (1) and (2) cannot affect the output directly and are categorised as ‘\(*\)’. The effect of all other parameters is investigated by studying properties of their sensitivity functions \(\Pr (\mathbf{h}\vert \mathbf{e})(x)\). Parameters that are guaranteed to give a monotone increasing sensitivity function are classified as ‘\(+\)’; parameters that are guaranteed to give monotone decreasing sensitivity functions as ‘−’. Parameters for which the sign of the derivative of the sensitivity function depends on the actual quantification of the network will be categorised as ‘?’. Note that sensitivity functions for parameters of category ‘\(*\)’ are not necessarily constant: variation of such a parameter may result in co-variation of a parameter from the same local distribution which is present in Eqs. (1) or (2). As such, parameters of category ‘\(*\)’ may be indirectly affecting output \(\Pr (\mathbf{h}\vert \mathbf{e})\), yet for the computation of \(\Pr (\mathbf{h}\vert \mathbf{e})\) it suffices to know the values of parameters in the categories ‘\(+\)’, ‘−’ and ‘?’. In the relation between parameter changes and output changes these latter parameters are pivotal.

For the backbone network of our example in Fig. 2, the categories of its parameters are indicated in the CPTs.
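The categorisation established by Propositions 1 to 6 can be condensed into a single decision procedure. The Python sketch below is one possible encoding and not the authors' implementation: the function name `categorise` and its arguments are illustrative assumptions, and the d-separation conditions of Propositions 3 and 6 are assumed to have been tested elsewhere and passed in as the boolean `d_sep`. The argument `v_ok` stands for \(v\sim \mathbf{h}\) when \(V\in \mathbf{H}\) and for \(v\sim \mathbf{e}\) when \(V\in \mathbf{E}\); `pi_ok` stands for \(\varvec{\pi }\sim \mathbf{h}\).

```python
def categorise(group: str, v_ok: bool, pi_ok: bool, *, binary: bool = True,
               evidence_descendant: bool = False, d_sep: bool = False) -> str:
    """Return '+', '-', '*' or '?' for a parameter Pr(v | pi) of a backbone network."""
    if group == "R":
        return "?"                                    # Sect. 4.1
    if group == "H":
        if not evidence_descendant:
            # Propositions 1 and 2: no descendants in E.
            return "+" if (v_ok and pi_ok) else "*"
        if binary and pi_ok and d_sep:
            # Proposition 3: binary V, pi ~ h, d-separation condition holds.
            return "+" if v_ok else "-"
        return "?"
    if group == "E":
        if not v_ok:
            return "*"                                # Proposition 4
        if not pi_ok:
            return "-"                                # Proposition 5
        return "+" if d_sep else "?"                  # Proposition 6
    raise ValueError(f"unknown variable group: {group!r}")


print(categorise("H", v_ok=True, pi_ok=True))
print(categorise("E", v_ok=True, pi_ok=False))
```

Passing the d-separation outcome as a flag keeps the sketch independent of any particular graph representation; the ASCII character `-` is used in place of the typeset minus sign.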

4 Categorisation of the Parameters of Variables in \(\mathbf{R}\) and \(\mathbf{H}\)

4.1 Parameters of Variables in \(\mathbf{R}\)

For a variable \(R \in \mathbf{R}\) the qualitative effect of a change in one of its parameters x cannot be predicted without additional information: the sensitivity function \(\Pr (\mathbf{h}\vert \mathbf{e})(x)\) can be either monotone increasing or decreasing. Therefore, these parameters are categorised as ‘?’.

Proof of the above claim, and of all further propositions concerning parameters in the category ‘?’, is omitted due to space restrictions. All these proofs are based on demonstrating that additional knowledge—for example the specific network quantification—is required to determine whether the one-way sensitivity function is increasing or decreasing.

4.2 Parameters of Variables in \(\mathbf{H}\) Without Descendants in \(\mathbf{E}\)

The propositions in this section concern parameters \(x=\Pr (v\vert \varvec{\pi })\) of nodes \(V\in \mathbf{H}\) without descendants in \(\mathbf{E}\). The parameters of such a node which are fully compatible with \(\mathbf{h}\) have a monotone increasing sensitivity function \(\Pr (\mathbf{h}\vert \mathbf{e})(x)\) and therefore are classified as ‘\(+\)’. The parameters not fully compatible with \(\mathbf{h}\) are not used in the computation of \(\Pr (\mathbf{h}\vert \mathbf{e})\) and therefore are classified ‘\(*\)’.

Proposition 1

Consider a query-dependent backbone Bayesian network \({\mathscr {B}}_q\) with probability of interest \(\Pr (\mathbf{h}\vert \mathbf{e})\). Let \(x=\Pr (v\vert \varvec{\pi })\) be a parameter of a variable \(V\in \mathbf{H}\) such that \(de(V) \cap \mathbf{E}= \emptyset \). If both \(v\sim \mathbf{h}\) and \(\varvec{\pi }\sim \mathbf{h}\), then \(\Pr (\mathbf{h}\vert \mathbf{e})(x)\) is a monotone increasing function.

Proof

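Let \(\mathbf{r}_{\pi }\) denote the configuration of \(\mathbf{R}_{pa(V)}\) compatible with \(\varvec{\pi }\). In addition, let \(\mathbf{h}=v\mathbf{h}_{\pi }\mathbf{h}_{\overline{\pi }}\), where \(\mathbf{h}_{\pi }\) and \(\mathbf{h}_{\overline{\pi }}\) are assignments, compatible with \(\mathbf{h}\), to \(\mathbf{H}_{pa(V)}\) and \(\mathbf{H}_{\overline{pa}(V)}\), respectively. First consider the general form of \(\Pr (\mathbf{h}\mathbf{e})\) given by Eq. (1). We observe that under the given conditions we can write:

\[ \Pr (\mathbf{h}\mathbf{e}) = \Pr (v\vert \varvec{\pi })\cdot \Pr (\mathbf{h}_{\overline{\pi }}\vert v\mathbf{h}_{\pi }\mathbf{e}\mathbf{r}_{\pi })\cdot \frac{\Pr (v\mathbf{h}_{\pi }\mathbf{e}\mathbf{r}_{\pi })}{\Pr (v\vert \varvec{\pi })} + \sum _{\mathbf{r}^{*}_{\pi }\not \sim \mathbf{r}_{\pi }} \Pr (\mathbf{h}\mathbf{e}\mathbf{r}^{*}_{\pi }) \]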

where \(\Pr (v\mathbf{{h}}_{\pi }\mathbf{e}\mathbf{{r}}_{\pi })/\Pr (v\vert \varvec{\pi })\) represents a sum of products of parameters no longer including \(\Pr (v\vert \varvec{\pi })\). This expression thus is of the form \(x\!\cdot \!\tau _{1}\!\cdot \!\tau _{2}+\tau _{3},\) for non-negative constants \(\tau _{1},\tau _{2}, \tau _{3}\).

For \(\Pr (\mathbf{e})\), as given in Eq. (2), we observe that since V has no descendants in \(\mathbf{E}\), this node in fact is barren with respect to \(\Pr (\mathbf{e})\). As a result, none of V’s parameters are relevant to the computation and \(\Pr (\mathbf{e})(x)\) therefore equals a constant \(\kappa _{1}>0\).

The sensitivity function for parameter x thus is of the form \(\Pr (\mathbf{h}\vert \mathbf{e})(x) = (x\!\cdot \!\tau _{1}\!\cdot \!\tau _{2}+\tau _{3})/{\kappa _{1}}\) with \(\tau _{1},\tau _{2},\tau _{3}\ge 0\) and \(\kappa _{1}>0\). The first derivative of this function equals \((\tau _{1}\!\cdot \!\tau _{2})/\kappa _{1}\), which is always non-negative. \(\Box \)

Proposition 2

Let \({\mathscr {B}}_q\) and \(\Pr (\mathbf{h}\vert \mathbf{e})\) be as before. Let \(\Pr (v\vert \varvec{\pi })\) be a parameter of a variable \(V\in \mathbf{H}\) such that \(de(V) \cap \mathbf{E}= \emptyset \). If \(v\not \sim \mathbf{h}\) or \(\varvec{\pi }\not \sim \mathbf{h}\), then \(\Pr (v\vert \varvec{\pi })\) is not used in the computation of \(\Pr (\mathbf{h}\vert \mathbf{e})\).

Proof

We again consider \(\Pr (\mathbf{h}\mathbf{e})\) as given by Eq. (1) and observe that a parameter \(\Pr (v\vert \varvec{\pi })\) with \(v\not \sim \mathbf{h}\) or \(\varvec{\pi }\not \sim \mathbf{h}\) is not included in this expression. Moreover, as argued in the proof of Proposition 1, no parameter of V is used in computing \(\Pr (\mathbf{e})\) from Eq. (2). \(\Pr (v\vert \varvec{\pi })\) is therefore not used in the computation of \(\Pr (\mathbf{h}\vert \mathbf{e})\). \(\Box \)

4.3 Parameters of Variables in \(\mathbf{H}\) with Descendants in \(\mathbf{E}\)

For a parameter of a non-binary variable \(V\in \mathbf{H}\) with at least one descendant in \(\mathbf{E}\), we cannot predict without additional knowledge whether the sensitivity function \(\Pr (\mathbf{h}\vert \mathbf{e})(x)\) is monotone increasing or monotone decreasing. These parameters therefore are classified as ‘?’. The same observation applies to a parameter of a binary variable \(V \in \mathbf{H}\) with descendants in \(\mathbf{E}\) for which \(\varvec{\pi }\not \sim \mathbf{h}\) or for which \(\mathbf{\mathbf{H}}_{\overline{pa}(V)}\) and \(\mathbf{R}_{pa(V)}\) are not d-separated given \(\mathbf{\mathbf{H}}_{pa(V)}\), V itself and the evidence.

If \(V\in \mathbf{H}\) is binary, \(\varvec{\pi }\sim \mathbf{h}\) and \(\mathbf{H}_{\overline{pa}(V)}\) and \(\mathbf{R}_{pa(V)}\) are d-separated given \(\mathbf{H}_{pa(V)}\), V itself and the evidence, then we do have sufficient knowledge to determine the qualitative effect of varying parameter \(x=\Pr (v\vert \varvec{\pi })\) of V. If \(v\sim \mathbf{h}\) then \(\Pr (\mathbf{h}\vert \mathbf{e})(x)\) is monotone increasing, and the parameter is classified as ‘\(+\)’. If \(v\not \sim \mathbf{h}\) then \(\Pr (\mathbf{h}\vert \mathbf{e})(x)\) is monotone decreasing, and the parameter is classified as ‘−’. These observations are captured by Proposition 3. This proposition extends Proposition 2 in [1] by replacing the condition that \(\mathbf{R}_{pa(V)}=\emptyset \) by the less strict d-separation condition mentioned above.
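The d-separation condition is purely graphical and can be tested without any probabilities. A minimal Python sketch of one standard test, based on the moralised ancestral graph, is given below. This is a generic textbook procedure rather than anything specified in the paper; the function name `d_separated`, the graph encoding (node mapped to its parents), and the example graphs are assumptions for illustration.

```python
from collections import deque
from itertools import combinations


def d_separated(parents: dict, T: set, U: set, Z: set) -> bool:
    """Test whether all nodes in T are d-separated from all nodes in U given Z."""
    if not T or not U:
        return True  # convention from Sect. 2.1: separation from the empty set holds
    # 1. Restrict the graph to T, U, Z and all of their ancestors.
    relevant, stack = set(), list(T | U | Z)
    while stack:
        node = stack.pop()
        if node not in relevant:
            relevant.add(node)
            stack.extend(parents.get(node, ()))
    # 2. Moralise: connect each node to its parents and the parents to each other.
    adj = {n: set() for n in relevant}
    for child in relevant:
        ps = list(parents.get(child, ()))
        for p in ps:
            adj[child].add(p)
            adj[p].add(child)
        for p, q in combinations(ps, 2):
            adj[p].add(q)
            adj[q].add(p)
    # 3. Delete Z and look for an undirected path from T to U.
    frontier = deque(T - Z)
    seen = set(frontier)
    while frontier:
        node = frontier.popleft()
        if node in U:
            return False
        for nxt in adj[node] - Z - seen:
            seen.add(nxt)
            frontier.append(nxt)
    return True


chain = {"A": [], "B": ["A"], "C": ["B"]}     # hypothetical chain A -> B -> C
collider = {"A": [], "B": [], "C": ["A", "B"]}  # hypothetical collider A -> C <- B
print(d_separated(chain, {"A"}, {"C"}, {"B"}))
print(d_separated(collider, {"A"}, {"B"}, {"C"}))
```

For the condition of Proposition 3, such a test would be called with \(\mathbf{H}_{\overline{pa}(V)}\) and \(\mathbf{R}_{pa(V)}\) as the two sets to separate, and with \(\mathbf{E}\), \(\mathbf{H}_{pa(V)}\) and V as the conditioning set.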

Proposition 3

Let \({\mathscr {B}}_q\) and \(\Pr (\mathbf{h}\vert \mathbf{e})\) be as before. Let \(\Pr (v\vert \varvec{\pi })\) with \(\varvec{\pi }\sim \mathbf{h}\) be a parameter of a binary variable \(V\in \mathbf{H}\) and let \(\langle \mathbf{\mathbf{H}}_{\overline{pa}(V)}, \mathbf{R}_{pa(V)}\vert \mathbf{E}\cup \mathbf{\mathbf{H}}_{pa(V)}\cup V\rangle _d\). If \(v\sim \mathbf{h}\) then \(\Pr (\mathbf{h}\vert \mathbf{e})(x)\) is a monotone increasing function; if \(v\not \sim \mathbf{h}\) then \(\Pr (\mathbf{h}\vert \mathbf{e})(x)\) is a monotone decreasing function.

Proof

First consider the case where \(v\sim \mathbf{h}\). Under the given conditions we have from the proof of Proposition 1 that \(\Pr (\mathbf{h}\mathbf{e})\) takes on the form \(\Pr (\mathbf{h}\mathbf{e})(x) = x\!\cdot \!\tau _{1}\!\cdot \!\tau _{2}+\tau _{3}\), for constants \(\tau _{1},\tau _{2},\tau _{3} \ge 0\).

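For \(\Pr (\mathbf{e})\) and binary V we note that Eq. (2) can be written as

\[ \Pr (\mathbf{e}) = \Pr (v\mathbf{h}_{\pi }\mathbf{e}\mathbf{r}_{\pi }) + \Pr (\overline{v}\mathbf{h}_{\pi }\mathbf{e}\mathbf{r}_{\pi }) + \sum _{\mathbf{r}^{*}_{\pi }\not \sim \mathbf{r}_{\pi }} \Pr (v\mathbf{h}_{\pi }\mathbf{e}\mathbf{r}^{*}_{\pi }) + \sum _{\mathbf{r}^{*}_{\pi }\not \sim \mathbf{r}_{\pi }} \Pr (\overline{v}\mathbf{h}_{\pi }\mathbf{e}\mathbf{r}^{*}_{\pi }) + \sum _{\mathbf{h}^{*}_{\pi }\not \sim \mathbf{h}_{\pi }} \Pr (\mathbf{h}^{*}_{\pi }\mathbf{e}) \]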

which takes on the following form: \(\Pr (\mathbf{e})(x) = x\!\cdot \!\tau _{2}+(1-x)\!\cdot \!\kappa _{2}+\kappa _{1} + \kappa _{3} + \kappa _{4}\), with constants \(\kappa _{i}\ge 0\), \(i=1,\ldots ,4\).

The sign of the derivative of the sensitivity function is determined by the numerator \(\tau _{1}\!\cdot \!\tau _{2}\!\cdot \!(\kappa _{1}+\kappa _{2}+\kappa _{3}+\kappa _{4}) - \tau _{3}\!\cdot \!(\tau _{2}-\kappa _{2})\) of \(\Pr (\mathbf{h}\vert \mathbf{e})'(x)\). We observe that given \(\langle \mathbf{\mathbf{H}}_{\overline{pa}(V)}, \mathbf{R}_{pa(V)}\vert \mathbf{E}\cup \mathbf{\mathbf{H}}_{pa(V)}\cup V\rangle _d\) we find that \(\tau _{1}\!\cdot \!\kappa _{1} = \tau _{3}\) which guarantees the derivative to be non-negative. This implies that, for \(v\sim \mathbf{h}\), \(\Pr (\mathbf{h}\vert \mathbf{e})(x)\) is a monotone increasing function.
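To make the last step explicit, substituting \(\tau _{3}=\tau _{1}\!\cdot \!\kappa _{1}\) into the numerator stated above leaves a sum of products of non-negative constants:

\[ \tau _{1}\cdot \tau _{2}\cdot (\kappa _{1}+\kappa _{2}+\kappa _{3}+\kappa _{4}) - \tau _{1}\cdot \kappa _{1}\cdot (\tau _{2}-\kappa _{2}) = \tau _{1}\cdot \tau _{2}\cdot (\kappa _{2}+\kappa _{3}+\kappa _{4}) + \tau _{1}\cdot \kappa _{1}\cdot \kappa _{2} \ \ge \ 0 \]

The term \(\tau _{1}\!\cdot \!\tau _{2}\!\cdot \!\kappa _{1}\) cancels, so no negative contribution remains.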

Now consider the case where \(v \not \sim \mathbf{h}\). Since V is binary, this implies that \(\overline{v}\sim \mathbf{h}\). The proof for this case follows by replacing, in the above formulas, every occurrence of v by \(\overline{v}\) and, hence, every x with \(1-x\). As a result we find that in this case \(\Pr (\mathbf{h}\vert \mathbf{e})(x)\) is a monotone decreasing function. \(\Box \)

5 Categorisation of the Parameters of the Variables in \(\mathbf{E}\)

5.1 Parameters \(\Pr (v\vert \pi )\) of a Variable \(V\in \mathbf{E}\) with \(v\not \sim \mathbf{e}\)

Recall that after evidence absorption all parameters with \(\varvec{\pi }\not \sim \mathbf{e}\) are removed from the network. For a parameter \(\Pr (v\vert \varvec{\pi })\) of a variable \(V\in \mathbf{E}\), however, we may still find that \(v\not \sim \mathbf{e}\); these parameters are in category ‘\(*\)’.

Proposition 4

Let \({\mathscr {B}}_q\) and \(\Pr (\mathbf{h}\vert \mathbf{e})\) be as before. Let \(\Pr (v\vert \varvec{\pi })\) be a parameter of a variable \(V\in \mathbf{E}\). If \(v\not \sim \mathbf{e}\), then \(\Pr (v\vert \varvec{\pi })\) is not used in the computation of \(\Pr (\mathbf{h}\vert \mathbf{e})\).

Proof

This proposition is equivalent to Proposition 3 in [1], but stated for \({\mathscr {B}}_q\) rather than for \(\mathscr {B}\). \(\Box \)

5.2 Parameters \(\Pr (v\vert \pi )\) of a Variable \(V\in \mathbf{E}\) with \(v\sim \mathbf{e}\) and \(\pi \not \sim \mathbf{h}\)

We now consider the parameters \(\Pr (v\vert \pi )\) of \(V\in \mathbf{E}\), with \(v\sim \mathbf{e}\) and \(\varvec{\pi }\not \sim \mathbf{h}\). The one-way sensitivity functions of such parameters are monotone decreasing. These parameters therefore are categorised as ‘−’.

Proposition 5

Let \({\mathscr {B}}_q\) and \(\Pr (\mathbf{h}\vert \mathbf{e})\) be as before. Let \(x=\Pr (v\vert \varvec{\pi })\) be a parameter of \(V\in \mathbf{E}\) such that \(v\sim \mathbf{e}\). If \(\varvec{\pi }\not \sim \mathbf{h}\), then \(\Pr (\mathbf{h}\vert \mathbf{e})(x)\) is a monotone decreasing function.

Proof

This proposition is equivalent to Proposition 1 in [1], but stated for \({\mathscr {B}}_q\) rather than for \(\mathscr {B}\). \(\Box \)

5.3 Parameters \(\Pr (v\vert \pi )\) of a Variable \(V\in \mathbf{E}\) with \(v\sim \mathbf{e}\) and \(\pi \sim \mathbf{h}\)

We now consider parameters \(\Pr (v\vert \varvec{\pi })\) of \(V\in \mathbf{E}\) with \(v\sim \mathbf{e}\) and \(\varvec{\pi }\sim \mathbf{h}\). The one-way sensitivity functions of such parameters are monotone increasing under the condition that \(\mathbf{H}_{\overline{pa}(V)}\) is d-separated from \(\mathbf{R}_{pa(V)}\) given \(\mathbf{H}_{pa(V)}\) and the evidence. Under this condition, these parameters therefore can be categorised as ‘\(+\)’. These observations are captured by Proposition 6, which extends Proposition 1 in [1] by replacing the condition that \(\mathbf{R}_{pa(V)}=\emptyset \) by the less strict d-separation condition mentioned above.

Proposition 6

Let \({\mathscr {B}}_q\) and \(\Pr (\mathbf{h}\vert \mathbf{e})\) be as before. Let \(x=\Pr (v\vert \varvec{\pi })\) with \(v\sim \mathbf{e}\) and \(\varvec{\pi }\sim \mathbf{h}\) be a parameter of \(V\in \mathbf{E}\) and let \(\langle \mathbf{\mathbf{H}}_{\overline{pa}(V)}, \mathbf{R}_{pa(V)}\vert \mathbf{\mathbf{H}}_{pa(V)} \cup \mathbf{E}\rangle _d\). Then \(\Pr (\mathbf{h}\vert \mathbf{e})(x)\) is a monotone increasing function.

Proof

For \(\Pr (\mathbf{h}\mathbf{e})\) we observe that Eq. (1) can be written as the expression in the proof of Proposition 1, but with v included in \(\mathbf{e}\) rather than in \(\mathbf{h}\). We therefore have that \(\Pr (\mathbf{h}\mathbf{e})(x)= x\!\cdot \!\tau _{1}\!\cdot \!\tau _{2}+\tau _{3}\) for constants \(\tau _{1},\tau _{2},\tau _{3} \ge 0\).

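For \(\Pr (\mathbf{e})\), given by Eq. (2), we observe that we can write

\[ \Pr (\mathbf{e}) = \Pr (v\vert \varvec{\pi })\cdot \frac{\Pr (\mathbf{h}_{\pi }\mathbf{e}\mathbf{r}_{\pi })}{\Pr (v\vert \varvec{\pi })} + \sum _{\mathbf{r}^{*}_{\pi }\not \sim \mathbf{r}_{\pi }} \Pr (\mathbf{h}_{\pi }\mathbf{e}\mathbf{r}^{*}_{\pi }) + \sum _{\mathbf{h}^{*}_{\pi }\not \sim \mathbf{h}_{\pi }} \Pr (\mathbf{h}^{*}_{\pi }\mathbf{e}) \]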

which is of the form \(x\!\cdot \!\tau _{2}+\kappa _{1}+\kappa _{2}\), for constants \(\kappa _{1},\kappa _{2}\ge 0\).

We now find that the numerator of the first derivative of the sensitivity function equals \(\tau _{1}\!\cdot \!\tau _{2}\!\cdot \!(\kappa _{1}+ \kappa _{2}) - \tau _{2}\!\cdot \!\tau _{3}.\) We observe that given \(\langle \mathbf{\mathbf{H}}_{\overline{pa}(V)}, \mathbf{R}_{pa(V)}\vert \mathbf{\mathbf{H}}_{pa(V)} \cup \mathbf{E}\rangle _d\) we find that \(\tau _{1}\!\cdot \!\kappa _{1} = \tau _{3}\) which guarantees the derivative to be non-negative. This implies that \(\Pr (\mathbf{h}\vert \mathbf{e})(x)\) is a monotone increasing function. \(\Box \)
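Written out, the substitution of \(\tau _{3}=\tau _{1}\!\cdot \!\kappa _{1}\) in the numerator of the derivative gives

\[ \tau _{1}\cdot \tau _{2}\cdot (\kappa _{1}+\kappa _{2}) - \tau _{2}\cdot \tau _{1}\cdot \kappa _{1} = \tau _{1}\cdot \tau _{2}\cdot \kappa _{2} \ \ge \ 0 \]

which is a product of non-negative constants.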

In case the above-mentioned d-separation property does not hold, we cannot predict without additional knowledge whether the sensitivity function \(\Pr (\mathbf{h}\vert \mathbf{e})(x)\) of a parameter x of a variable \(V\in \mathbf{E}\) with \(v\sim \mathbf{e}\) and \(\varvec{\pi }\sim \mathbf{h}\) is monotone increasing or monotone decreasing. If the property does not hold, therefore, these parameters are in category ‘?’.
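The categories for evidence-variable parameters can be checked numerically on the smallest possible case. The sketch below assumes a hypothetical two-node network with a binary hypothesis variable H and a binary evidence variable E as its only child; the function name `posterior` and all probabilities are made up for illustration. Since there are no other hypothesis variables and no remaining variables, the d-separation condition of Proposition 6 holds trivially.

```python
def posterior(p_h: float, p_e_given_h: float, p_e_given_not_h: float) -> float:
    """Pr(h | e) for a two-node network H -> E with binary variables."""
    joint_he = p_h * p_e_given_h
    p_e = joint_he + (1.0 - p_h) * p_e_given_not_h
    return joint_he / p_e


grid = [i / 10 for i in range(1, 10)]

# Vary x = Pr(e | h): v ~ e and pi ~ h, so Proposition 6 predicts '+'.
increasing = [posterior(0.3, x, 0.4) for x in grid]

# Vary x = Pr(e | not h): v ~ e but pi is incompatible with h,
# so Proposition 5 predicts '-'.
decreasing = [posterior(0.3, 0.6, x) for x in grid]

print(all(a <= b for a, b in zip(increasing, increasing[1:])))
print(all(a >= b for a, b in zip(decreasing, decreasing[1:])))
```

The parameters for the unobserved value of E do not appear as arguments of `posterior` at all, in line with category ‘\(*\)’ of Proposition 4; varying one of them would still change the output indirectly, through co-variation with the parameter for the observed value in the same local distribution.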

6 Discussion

In this paper we presented fundamental results concerning the qualitative effect of parameter changes on the output probabilities of a Bayesian network. Based on the graph structure and the query at hand, we categorised all network parameters into one of four categories: parameters not included in the computation of the output, parameters with guaranteed monotone increasing sensitivity functions, parameters with guaranteed monotone decreasing sensitivity functions, and parameters of which the qualitative effect cannot be predicted without additional information. Previously we demonstrated that knowledge of the qualitative effects can be exploited in inference in credal networks [1] and in multiple-parameter tuning of Bayesian networks [2]. In our previous research only a partial categorisation of the parameters was given. Our present paper allocates a wider range of parameters into one of the meaningful categories ‘\(+\)’, ‘−’ and ‘\(*\)’. For future research we would like to further study properties of the additional information required to predict the qualitative effect of the parameters in category ‘?’.

Notes

1. Note that the parameters of the local distributions that are in \(\mathscr {B}\) but not in \({\mathscr {B}}_q\) do not affect the output of interest in any way.

References

1. Bolt, J.H., De Bock, J., Renooij, S.: Exploiting Bayesian network sensitivity functions for inference in credal networks. In: Proceedings of the 22nd European Conference on Artificial Intelligence, vol. 285, pp. 646–654 (2016)

2. Bolt, J.H., van der Gaag, L.C.: Balanced sensitivity functions for tuning multi-dimensional Bayesian network classifiers. Int. J. Approx. Reason. 80c, 361–376 (2017)

3. Coupé, V.M.H., van der Gaag, L.C.: Properties of sensitivity analysis of Bayesian belief networks. Ann. Math. Artif. Intell. 36(4), 323–356 (2002)

4. van der Gaag, L.C.: On evidence absorption for belief networks. Int. J. Approx. Reason. 15(3), 265–286 (1996)

5. Jensen, F.V., Nielsen, T.D.: Bayesian Networks and Decision Graphs, 2nd edn. Springer, New York (2007)

6. Kjærulff, U., van der Gaag, L.C.: Making sensitivity analysis computationally efficient. In: Boutilier, C., Goldszmidt, M. (eds.) Proceedings of the Sixteenth Conference on Uncertainty in Artificial Intelligence, pp. 317–325. Morgan Kaufmann Publishers, San Francisco (2000)

7. Meekes, M., Renooij, S., van der Gaag, L.C.: Relevance of evidence in Bayesian networks. In: Destercke, S., Denoeux, T. (eds.) ECSQARU 2015. LNCS, vol. 9161, pp. 366–375. Springer, Cham (2015). doi:10.1007/978-3-319-20807-7_33

8. Pearl, J.: Probabilistic Reasoning in Intelligent Systems: Networks of Plausible Inference. Morgan Kaufmann Publishers, Palo Alto (1988)

9. Renooij, S.: Co-variation for sensitivity analysis in Bayesian networks: properties, consequences and alternatives. Int. J. Approx. Reason. 55, 1022–1042 (2014)

Acknowledgements

This research was supported by the Netherlands Organisation for Scientific Research (NWO).

© 2017 Springer International Publishing AG

About this paper

Cite this paper

Bolt, J.H., Renooij, S. (2017). Structure-Based Categorisation of Bayesian Network Parameters. In: Antonucci, A., Cholvy, L., Papini, O. (eds) Symbolic and Quantitative Approaches to Reasoning with Uncertainty. ECSQARU 2017. Lecture Notes in Computer Science(), vol 10369. Springer, Cham. https://doi.org/10.1007/978-3-319-61581-3_8

DOI: https://doi.org/10.1007/978-3-319-61581-3_8

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-61580-6

Online ISBN: 978-3-319-61581-3

eBook Packages: Computer Science, Computer Science (R0)