Abstract

Heart failure is known to influence heart rhythm in patients. Complexity analysis techniques, including techniques associated with entropy, have great potential for providing a better understanding of cardiac rhythms, and for helping research in this area. We review the analysis principles of conventional time-domain analysis, frequency-domain analysis and of newer complexity analysis. We then illustrate the techniques using real clinical data, allowing a comparison of the techniques, and also of the differences between normal heart rate variability and that associated with heart failure.


1 Introduction

1.1 Introduction to Non-Linear Heart Rate Variability Methods for Cardiovascular Analysis

The application of non-linear dynamic methods to quantify the complexity of the cardiovascular system has opened up new ways to perform cardiac rhythm analysis, thereby enhancing our knowledge and stimulating significant and innovative research into cardiovascular dynamics. Heart rate variability (HRV) has conventionally been analyzed with time- and frequency-domain methods, which allow researchers to obtain information on the sinus node response to sympathetic and parasympathetic activities. However, heart rate (HR) regulation is one of the most complex systems in humans because of the variety of influencing factors, e.g. parasympathetic and sympathetic ganglia, humoral effects, respiration and mental load. Classical linear signal analysis methods (time- and frequency-domain) are therefore often inadequate for extracting the relevant properties of a non-linear cardiovascular dynamic system. HRV can exhibit very complex behaviour, which is far from a simple periodicity. Thus the application of non-linear HRV methods can provide essential information regarding the physiological and pathological states of cardiovascular time series [1,2,3,4].

Typical non-linear HRV analysis methods can be classified into four types:

(1) Poincare plot

The Poincare plot is a quantitative visual technique, whereby the shape of the plot is categorized into functional classes and provides detailed beat-to-beat information on the behaviour of the heart. Weiss et al. observed the respective Poincare plots of interbeat intervals from a variety of arrhythmia patients [5]. Brennan et al. proposed a physiological oscillator model whose output could mimic the shape of the R–R interval Poincare plot [6]. By defining quantitative indices for Poincare plots, various conditions can be distinguished from those of healthy control subjects; reported applications include the assessment of parasympathetic nervous activity [7], postoperative ischaemia [8], ventricular tachyarrhythmia [5], and heart failure [9].

(2) Fractal method

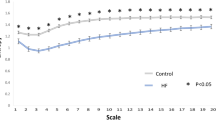

Fractal measures aim to assess the self-affinity of heartbeat fluctuations over multiple time scales [4]. Kobayashi & Musha first reported the frequency dependence of the power spectrum of RR interval fluctuations [10]. The broadband spectrum, characterized by slow HR fluctuations, indicates a fractal-like process with long-term dependence [11]. Peng et al. developed this approach into detrended fluctuation analysis (DFA) [12], which quantifies the presence or absence of fractal correlation properties in non-stationary time series data. With DFA, healthy subjects showed a scaling exponent of approximately 1, indicating fractal-like behaviour, whereas cardiovascular patients showed reduced scaling exponents, suggesting a loss of fractal-like HR dynamics [13, 14]. The fractal method has also been extended to multifractal applications [15].

(3) Symbolic dynamics measures

Symbolic dynamics, a concept that traces back to Hadamard in 1898 [16], allows a simple description of a system’s dynamics with a limited number of symbols. Applied to HRV, the symbolic dynamics measure (SDyn) investigates short-term fluctuations caused by vagal and baroreflex activities. SDyn has been applied to identify patients at risk of sudden cardiac death [17, 18]. Later, Porta et al. extended the SDyn method to short-term 300-beat RR interval series [19] and to 24 h Holter recordings [20].

Another popular symbolic dynamics measure, LZ complexity, was proposed by Lempel and Ziv [21]. LZ complexity can evaluate the irregularity of RR interval time series. In most cases, the LZ complexity algorithm is executed by transforming the original signal into a binary sequence. However, this binary coarse-graining process carries a risk of losing detailed information, so more quantification levels (or symbols) have been employed in the coarse-graining process. Abásolo et al. used three quantification levels for the LZ calculation [22] and Sarlabous et al. developed a multistate LZ complexity algorithm [23]. In contrast to multistate coarse-graining, Zhang et al. recently proposed an encoding LZ algorithm that aims to discern explicitly between the irregularity and the chaotic characteristics of RR interval time series [24].

(4) Entropy methods

Entropy can assess the regularity/irregularity or randomness of RR interval time series. Since Pincus proposed the approximate entropy (ApEn) method in 1991 [25], ApEn has been widely applied to the analysis of physiological time series in clinical research. The popularity of ApEn stems from its ability to provide quantitative information about the complexity of both short- and long-term data recordings that are often corrupted with noise, and from its relatively simple calculation [26,27,28,29]. As an improved version of ApEn, Richman and Moorman proposed the sample entropy (SampEn) method in 2000 [29], which reduces the inherent estimation bias of the ApEn method. SampEn quantifies the conditional probability that two sequences of m consecutive data points that are similar to each other (within a given tolerance r) will remain similar when one further consecutive point is included. Self-matches are not included in calculating the probability. In clinical applications, reductions in SampEn of neonatal HR were reported prior to the clinical diagnosis of sepsis and sepsis-like illness [30], and reductions in HR complexity were reported before the onset of atrial fibrillation [31]. Recent improvements to entropy methods include fuzzy-function based entropy methods [32, 33], multiscale entropy (MSE) methods [26] and multivariate multiscale entropy methods [34,35,36].

1.2 Common Heart Rate Variability Analysis in Congestive Heart Failure Patients

Congestive heart failure (CHF) is a typical degeneration of heart function, characterized by a reduced ability of the heart to pump blood efficiently. CHF is also a difficult condition to manage in clinical practice, and the mortality from CHF remains high. Previous studies have shown that HRV indices relate to outcome for CHF patients [37,38,39,40].

For healthy subjects, it has been shown that increased sympathetic and decreased parasympathetic activity results in a decrease in the mean RR interval, as well as decreases in the standard deviation of beat-to-beat intervals (SDNN), the low frequency content (LF), and the non-linear vector angle index (VAI) and vector length index (VLI) [7]. Moreover, parasympathetic activity has been shown to be the major contributor to the high frequency (HF) content [41]. HRV analysis has also given an insight into the abnormalities of CHF, and can be used to identify higher-risk CHF patients. Depressed HRV has been used as a risk predictor in CHF [42,43,44]. CHF patients usually have higher sympathetic and lower parasympathetic activity [42, 44].

Typical linear HRV analyses of CHF patients include the following. Nolan et al. performed a prospective study of 433 CHF patients and found that SDNN was the most powerful predictor of the risk of death in CHF [38]. Binkley et al. studied 15 healthy subjects and 10 CHF patients, and reported that parasympathetic withdrawal, in addition to the augmentation of sympathetic drive, is an integral component of the autonomic imbalance characteristic of CHF patients and can be detected noninvasively by HRV spectral analysis [42]. Rovere et al. studied 202 CHF patients and reported that the LF component was a powerful predictor of sudden death in CHF patients [45]. Hadase et al. also confirmed, in a study of 54 CHF patients, that the very low frequency (VLF) content was a powerful predictor [46]. All these studies indicate that decreased HRV is associated with increased mortality in CHF patients.

Typical non-linear HRV analyses of CHF patients include the following. Woo et al. studied 21 patients with heart failure and demonstrated that Poincare plot patterns are associated with marked sympathetic activation in heart failure patients and may provide additional prognostic information and an insight into autonomic alterations and sudden cardiac death [44]. Guzzetti et al. tracked 20 normal subjects and 30 CHF patients for 2 years and found significantly lower normalized LF power and lower 1/f slope in CHF patients compared with controls. Moreover, the patients who died during the follow-up period presented further reduced LF power and a steeper 1/f slope than the survivors [47]. Makikallio et al. studied 499 CHF patients and showed that a short-term fractal scaling exponent was the strongest predictor of mortality in CHF [48]. Poon and Merrill studied 8 healthy subjects and 11 CHF patients, and found that the short-term variations of the beat-to-beat interval exhibited strongly and consistently chaotic behaviour in all healthy subjects but were frequently interrupted by periods of seemingly non-chaotic fluctuations in patients with CHF [43]. Peng et al. used DFA and confirmed a reduction in HR complexity in CHF patients [12]. Liu et al. studied 60 CHF patients and 60 healthy control subjects, and reported a decrease in ApEn values in the CHF group [27]. Costa et al. used the MSE method to classify CHF patients and healthy subjects, and reported that the best discrimination between CHF and healthy HR signals was obtained at scale 5 of the MSE calculation [49].

1.3 Main Aims of This Review

Using examples, we seek to illustrate the differences between normal cardiac physiology and that associated with CHF, as determined from time-domain, frequency-domain and typical short-term (5 min) complexity analysis, and also to show the differences between the three types of analysis. These examples can be seen as a proof of concept that, in line with the current literature on cardiovascular complexity and heart failure, the methods do have potential.

2 Review of Typical Methods and Indices for Heart Rate Variability Analysis

2.1 Time-Domain Indices

The commonly used time-domain indices are: the standard deviation of the normal-to-normal (NN) intervals (SDNN), the square root of the mean squared successive differences in RR intervals (RMSSD), and the proportion of differences between successive RR intervals greater than 50 ms (PNN50) [50, 51].
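To make the three time-domain definitions concrete, the following is a minimal sketch in plain Python. The function name `time_domain_indices` is hypothetical, RR intervals are assumed to be given in milliseconds, and the sample form (N − 1) of the standard deviation is an assumption, since the text does not state which form is used.

```python
import math


def time_domain_indices(rr_ms):
    """Return (SDNN, RMSSD, PNN50) for a list of RR intervals in ms.

    Hypothetical helper for illustration; PNN50 is returned as a percentage.
    """
    n = len(rr_ms)
    if n < 2:
        raise ValueError("need at least two RR intervals")

    # SDNN: standard deviation of the intervals (sample form, an assumption)
    mean_rr = sum(rr_ms) / n
    sdnn = math.sqrt(sum((x - mean_rr) ** 2 for x in rr_ms) / (n - 1))

    # Successive differences between adjacent RR intervals
    diffs = [rr_ms[i + 1] - rr_ms[i] for i in range(n - 1)]

    # RMSSD: square root of the mean squared successive differences
    rmssd = math.sqrt(sum(d * d for d in diffs) / len(diffs))

    # PNN50: share of successive differences whose modulus exceeds 50 ms
    pnn50 = 100.0 * sum(1 for d in diffs if abs(d) > 50) / len(diffs)

    return sdnn, rmssd, pnn50
```

Because RMSSD and PNN50 depend only on successive differences, they respond to beat-to-beat changes, whereas SDNN reflects the overall spread of the whole record.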

2.2 Frequency-Domain Indices

Frequency-domain analysis is usually performed by a modern spectral estimation method, such as Burg’s method, to produce the HRV spectrum [52]. The HRV spectrum is then integrated to derive a low-frequency power (in frequency range 0.04–0.15 Hz) and a high-frequency power (0.15–0.40 Hz). Indices are obtained by the calculation of the normalized low-frequency power (LFn), normalized high-frequency power (HFn), and the ratio of low-frequency power to high-frequency power (LF/HF) [51].
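The band integration step can be sketched as follows, assuming a power spectral density has already been estimated (for example by Burg's method or an FFT) and is available as two lists, `freqs` in Hz and `psd`. The function names are hypothetical, and the normalization LFn = LF / (LF + HF) is one common convention assumed here; the text does not specify which one is used.

```python
def band_power(freqs, psd, lo, hi):
    """Trapezoidal integral of psd over the band lo <= f <= hi.

    freqs must be in ascending order (Hz); only segments lying fully
    inside the band are summed.
    """
    total = 0.0
    for k in range(len(freqs) - 1):
        f0, f1 = freqs[k], freqs[k + 1]
        if f0 >= lo and f1 <= hi:
            total += 0.5 * (psd[k] + psd[k + 1]) * (f1 - f0)
    return total


def frequency_domain_indices(freqs, psd):
    """Return (LFn, HFn, LF/HF) from a precomputed HRV spectrum."""
    lf = band_power(freqs, psd, 0.04, 0.15)  # low-frequency band
    hf = band_power(freqs, psd, 0.15, 0.40)  # high-frequency band
    if hf == 0.0:
        raise ValueError("HF power is zero; LF/HF is undefined")
    # Assumed normalization: each band relative to LF + HF
    lfn = lf / (lf + hf)
    hfn = hf / (lf + hf)
    return lfn, hfn, lf / hf
```

With a flat spectrum the indices reduce to ratios of the band widths, which gives a quick sanity check for any spectral estimator placed in front of this step.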

2.3 Non-Linear Methods

2.3.1 Poincare Plot

The Poincare plot analysis is a graphical non-linear method to assess the dynamics of HRV. This method provides summary information as well as detailed beat-to-beat information on the behaviour of the heart. It is a graphical representation of temporal correlations within RR intervals. The Poincare plot is also known as a return map or scatter plot, where each RR interval from the RR time series is plotted against the next RR interval. Two pairs of parameters are commonly used as indices derived from the Poincare plot. One pair consists of SD1 and SD2; the other consists of the vector length index (VLI) and the vector angle index (VAI). SD2 is defined as the standard deviation of the projection of the Poincare plot on the line of identity (y = x), and SD1 is the standard deviation of the projection of the Poincare plot on the line perpendicular to the line of identity. SD1 has been correlated with high frequency power, while SD2 has been correlated with both low and high frequency power [51]. VAI measures the mean departure of all Poincare points from the line of identity (y = x) and VLI measures the mean distance of all Poincare points from the centre Poincare point. They are defined as:

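\[ \mathrm{VAI} = \frac{1}{N}\sum_{i=1}^{N}\left|\theta_i - 45\right|, \qquad \mathrm{VLI} = \sqrt{\frac{1}{N}\sum_{i=1}^{N}\left(l_i - L\right)^2} \]

where \( \theta_i \) is the vector angle in degrees, \( l_i \) is the vector length for each Poincare point, \( L \) is the mean vector length of all Poincare points, and \( N \) is the total number of Poincare points.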
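The four Poincare indices can be sketched in plain Python as below. The function name `poincare_indices` is hypothetical. SD1 and SD2 follow the projection definitions given in the text. For VAI and VLI, the code assumes that each point (RR_i, RR_{i+1}) is treated as a vector from the origin, that VAI is the mean absolute deviation of the vector angle from 45 degrees, and that VLI is the root mean square deviation of the vector lengths from their mean; these forms are assumptions and should be checked against the cited source.

```python
import math


def _sample_std(values):
    """Sample standard deviation (N - 1 form, an assumption)."""
    m = sum(values) / len(values)
    return math.sqrt(sum((v - m) ** 2 for v in values) / (len(values) - 1))


def poincare_indices(rr):
    """Return (SD1, SD2, VAI, VLI) for a list of RR intervals."""
    if len(rr) < 3:
        raise ValueError("need at least three RR intervals")
    x, y = rr[:-1], rr[1:]  # each point is (RR_i, RR_{i+1})
    root2 = math.sqrt(2.0)

    # Projection perpendicular to the line of identity -> SD1
    sd1 = _sample_std([(b - a) / root2 for a, b in zip(x, y)])
    # Projection along the line of identity -> SD2
    sd2 = _sample_std([(b + a) / root2 for a, b in zip(x, y)])

    # Vector angle (degrees) and length of each point, measured from the origin
    theta = [math.degrees(math.atan2(b, a)) for a, b in zip(x, y)]
    length = [math.hypot(a, b) for a, b in zip(x, y)]
    n = len(x)

    vai = sum(abs(t - 45.0) for t in theta) / n
    mean_len = sum(length) / n
    vli = math.sqrt(sum((l - mean_len) ** 2 for l in length) / n)
    return sd1, sd2, vai, vli
```

A strictly alternating series (short, long, short, long) spreads the points across the line of identity, so SD1 is large while SD2 and VLI are close to zero.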

2.3.2 Histogram Plot

A histogram plot is another graphical method for the HRV analysis, which reflects directly the distribution of the RR sequence. There is no specific quantitative index in the traditional histogram plot. To resolve this, Liu et al. proposed a new histogram analysis for HRV, referred to as RR sequence normalized histogram [53]. The RR sequence normalized histogram divides all sequence elements into seven sections based on the element values. Then, three quantitative indices are defined from the normalized histogram, named as center-edge ratio (CER), cumulative energy (CE) and range information entropy (RIEn) respectively [53]. CER characterizes the element fluctuation apart from the sequence mean value. CE indicates the equilibrium of the percentage \( p_i \) in all seven sections and RIEn reflects the element distributions. If the element distribution in the RR sequence exhibits more uniformity in each section, RIEn is larger, the RR sequence is more complex, and the uncertainty of the sequence is higher. The general construction procedure for the RR sequence normalized histogram and the calculations for the three quantitative indices are summarized in the Appendix.

2.3.3 Entropy Measures

ApEn represents a simple index for the overall complexity and predictability of a time series [25]. ApEn quantifies the likelihood that runs of patterns that are close remain similar for subsequent incremental comparisons [54]. High values of ApEn indicate high irregularity and complexity in time-series data. However, ApEn has an inherent bias because it counts self-matches, which makes it dependent on the record length and causes it to lack relative consistency. SampEn, an improvement on ApEn, quantifies the conditional probability that two sequences of m consecutive data points that are similar to each other (within a given tolerance r) will remain similar when one further consecutive point is included. Self-matches are not included in SampEn when calculating the probability [29].
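The SampEn definition can be illustrated with a short, unoptimized sketch. The function name `sample_entropy` is hypothetical. The code assumes that the tolerance is expressed as r times the standard deviation of the series, that a match means a maximum coordinate difference less than or equal to the tolerance, and that the same N − m template vectors are used for both dimensions; these are common choices, not details confirmed by the text.

```python
import math


def sample_entropy(x, m=2, r=0.2):
    """SampEn = -ln(A / B), where B and A count template matches of
    length m and m + 1 respectively, excluding self-matches."""
    n = len(x)
    if n <= m + 1:
        raise ValueError("series too short for the chosen m")
    mean = sum(x) / n
    sd = math.sqrt(sum((v - mean) ** 2 for v in x) / n)
    tol = r * sd  # assumed: tolerance scaled by the series SD

    def count_matches(dim):
        count = 0
        # i < j only, so a vector is never compared with itself
        for i in range(n - m):
            for j in range(i + 1, n - m):
                dist = max(abs(x[i + k] - x[j + k]) for k in range(dim))
                if dist <= tol:
                    count += 1
        return count

    b = count_matches(m)
    a = count_matches(m + 1)
    if a == 0 or b == 0:
        return float("inf")  # SampEn is undefined when no matches exist
    return -math.log(a / b)
```

For a perfectly periodic series every match at length m is still a match at length m + 1, so A equals B and SampEn is zero; an irregular series loses matches when the extra point is added and therefore gives a larger value.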

Whether ApEn or SampEn is used, the decision rule for vector similarity is based on the Heaviside function. This rule is very rigid, because two vectors are considered similar only when they are within the tolerance threshold r, whereas vectors just outside this tolerance are ignored [32, 33, 55]. This rigid boundary may induce abrupt changes in entropy values when the tolerance threshold r changes slightly, and the entropy may even be undefined if no vector match can be found for very small r. To enhance the statistical stability, a fuzzy measure entropy (FuzzyMEn) method was proposed in [33, 56], which substitutes a fuzzy membership function for the Heaviside function so that the entropy value varies gradually when r changes monotonically. Figure 11.1 illustrates the Heaviside and fuzzy functions that are mathematically given as

\[ \text{Heaviside function:}\quad \Theta\left(d_{i,j}, r\right) = \begin{cases} 1, & d_{i,j} \le r \\ 0, & d_{i,j} > r \end{cases} \]

\[ \text{Fuzzy function:}\quad \mu\left(d_{i,j}, n, r\right) = \exp\left(-\frac{\left(d_{i,j}\right)^{n}}{r}\right) \]

where \( d_{i,j} \) represents the distance between two vectors \( X_i \) and \( Y_j \), r is the tolerance threshold and n is the vector similarity weight. The rigid membership degree determination in the Heaviside function can lead to weak consistency of SampEn, which means that the entropy value may change suddenly when the parameter r changes slightly. This phenomenon has been reported in recent studies [32, 33, 57]. The fuzzy function gives this determination criterion a smooth boundary, whereas the traditional 0–1 judgment criterion of the Heaviside function is rigid at the boundary set by the parameter r. In addition, FuzzyMEn uses the information from both local and global vector sequences by removing both the local baseline and the global mean value, which gives FuzzyMEn better consistency than SampEn.
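The contrast between the rigid and the smooth similarity rule can be seen in a few lines of Python. Both function names are hypothetical, and the exponential form exp(−dⁿ / r) used for the fuzzy membership function is an assumption based on the fuzzy entropy literature cited in this section; the default n = 2 is likewise only illustrative.

```python
import math


def heaviside_similarity(d, r):
    """Rigid 0-1 rule: vectors match only if their distance is within r."""
    return 1.0 if d <= r else 0.0


def fuzzy_similarity(d, r, n=2):
    """Smooth rule: similarity decays continuously with distance.

    Assumed form exp(-(d ** n) / r); n weights how fast it decays.
    """
    return math.exp(-(d ** n) / r)
```

For r = 0.2, two distances just either side of the threshold (0.19 and 0.21) give Heaviside similarities of 1 and 0, while their fuzzy similarities differ only slightly. This is why a small change in r can shift SampEn abruptly but changes a fuzzy entropy only gradually.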

The detailed calculation processes of SampEn and FuzzyMEn are given in the Appendix.

2.3.4 Lempel-Ziv (LZ) Complexity

Lempel-Ziv (LZ) complexity is a measure of signal complexity and has been applied to a variety of biomedical signals, including identification of ventricular tachycardia or atrial fibrillation [58]. In most cases, the LZ complexity algorithm is executed by transforming an original signal into a binary sequence by comparing it with a preset median [59] or mean value [58] as the threshold. So the HRV signal should be coarse-grained and be transformed into a symbol sequence before the LZ calculation. For generating the binary sequence, the signal x is converted into a 0–1 sequence R by comparing with the threshold \( T_h \), producing the binary symbolic sequence \( R = \{s_1, s_2, \ldots, s_n\} \) as follows:

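\[ s_i = \begin{cases} 0, & x(i) < T_h \\ 1, & x(i) \ge T_h \end{cases} \qquad i = 1, 2, \ldots, n \]

where n is the length of x(n) and the mean value of the sequence is used as the threshold \( T_h \) in this chapter.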

LZ complexity reflects the rate at which new patterns arise within the sequence, and the index C(n) usually denotes this rate in a normalized LZ complexity. The detailed calculation of the index C(n) is also given in the Appendix to keep the main text concise.
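The coarse-graining and pattern counting steps can be sketched as follows. All function names are hypothetical. The parsing follows the usual exhaustive-history reading of the Lempel-Ziv (1976) scheme, and the normalization C(n) = c(n) · log2(n) / n is the form commonly used for binary sequences; both are assumptions, since the chapter's own procedure is in an Appendix not reproduced here.

```python
import math


def binarize(x):
    """Coarse-grain a signal into a 0-1 string using its mean as threshold."""
    th = sum(x) / len(x)
    return "".join("1" if v >= th else "0" for v in x)


def lz_pattern_count(s):
    """Count the new patterns found when parsing s from left to right."""
    i, count, n = 0, 0, len(s)
    while i < n:
        length = 1
        # Grow the candidate while it can be copied from earlier text
        # (the earlier occurrence may overlap the candidate itself)
        while i + length <= n and s[i:i + length] in s[:i + length - 1]:
            length += 1
        count += 1   # the candidate, with its last symbol, is a new pattern
        i += length
    return count


def lz_complexity(x):
    """Normalized LZ complexity of a numeric sequence (assumed binary form)."""
    s = binarize(x)
    n = len(s)
    return lz_pattern_count(s) * math.log2(n) / n
```

As a check, the sequence 0001101001000101 parses into the six patterns 0, 001, 10, 100, 1000 and 101, whereas a constant sequence of the same length yields only two, reflecting its lack of new patterns.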

3 Demonstration of Heart Rate Variability Analysis for Normal and Congestive Heart Failure RR Time Series

In this section, 5-min RR time series from a normal sinus rhythm (NSR) subject and a CHF patient, shown in Fig. 11.2, are used to illustrate the various analysis techniques. Note that this is a “proof of concept” in relation to the current literature on cardiovascular complexity and heart failure, without statistical inference. The chapter focuses on demonstrating the differences between the techniques, using the same examples in each analysis to allow easy comparison. The graphical results for the various HRV methods are shown to facilitate the comparison between these example RR time series.

It can be seen in Fig. 11.2 that the CHF patient has a shorter mean RR, and hence, a faster heart rate. However, the more important difference can be seen in the variability.

3.1 Time-Domain Indices

Figure 11.3 shows the calculations for the time-domain HRV indices. In each sub-figure, the upper panel shows the difference from the mean of the 5-min RR interval time series with ± SD lines (red dashed lines) and the lower panel shows the modulus of the sequential differences. Sequential differences greater than 50 ms are marked as ‘red squares’. The values of the time-domain indices SDNN, RMSSD and PNN50 are shown.

3.2 Frequency-Domain Indices

Figure 11.4 shows the analysis for the frequency-domain HRV indices. In each sub-figure, the left panel shows the classical spectrum estimation from the Fast Fourier Transform (FFT) method, and the right panel shows the modern spectrum estimation using the Burg method. Low frequency power (LF, 0.04–0.15 Hz) and high frequency power (HF, 0.15–0.40 Hz) areas are marked. The index values of LF/HF are also shown.

3.3 Non-Linear Methods

3.3.1 Poincare Plot

Figure 11.5 shows the analysis for the Poincare plot. In each sub-figure, the values of the four indices, i.e., SD1, SD2, VAI and VLI, are shown.

3.3.2 Histogram Plot

Figure 11.6 shows a traditional histogram plot. It is clear that the RR intervals have a more uniform distribution for the NSR subject, in comparison with a more concentrated distribution for the CHF patient.

Figure 11.7 demonstrates the RR sequence normalized histogram plot. The percentage \( p_i \) in the NSR subject is fairly evenly distributed across all seven sections, while the percentage \( p_i \) in the CHF patient has an uneven distribution. In each sub-figure, the values of the three indices CER, CE and RIEn are shown.

3.3.3 Entropy Measures

As an intermediate step, SampEn needs to determine the similarity degree between any two vectors \( {X}_i^m \) and \( {Y}_j^m \) at both embedding dimensions m and m + 1, by calculating the distance between them. As shown in the Appendix, the distance between \( {X}_i^m \) and \( {Y}_j^m \) is defined as \( {d}_{i,j}^m=\underset{k=0}{\overset{m-1}{ \max }}\left|x\left(i+k\right)-x\left(j+k\right)\right| \). In SampEn, if the distance is within the threshold parameter r = 0.2, the similarity degree between the two vectors is 1; if the distance is beyond the threshold parameter r, the similarity degree is 0. The determination is therefore strictly binary. Figure 11.8 shows the similarity degree matrices in this intermediate calculation procedure of SampEn. Only 1 ≤ i ≤ 100 and 1 ≤ j ≤ 100 are shown to illustrate the details. Black-coloured areas indicate a similarity degree of 1, and the remaining areas a similarity degree of 0. In each sub-figure, the upper panel shows the results for the embedding dimension m = 2, and the lower panel shows the results for the embedding dimension m + 1 = 3. As shown in Fig. 11.8, when the embedding dimension changes from m to m + 1, the number of similar vectors (i.e., matching vectors) decreases.

Unlike the discrete 0 or 1 determination of the vector similarity degree in SampEn, FuzzyMEn permits continuous real values between 0 and 1 for the vector similarity degree, by converting the absolute distance \( {d}_{i,j}^m=\underset{k=0}{\overset{m-1}{ \max }}\left|x\left(i+k\right)-x\left(j+k\right)\right| \) using a fuzzy exponential function (see Appendix). Since FuzzyMEn measures not only the global vector similarity degree but also the local vector similarity degree, Figs. 11.9 and 11.10 show the global and local similarity degree matrices, respectively, in this intermediate calculation procedure of FuzzyMEn. Again, only 1 ≤ i ≤ 100 and 1 ≤ j ≤ 100 are shown to illustrate the details. Dark-coloured areas indicate a higher similarity degree and lighter areas a lower one. In each sub-figure, the upper panel shows the results for the embedding dimension m = 2, and the lower panel shows the results for the embedding dimension m + 1 = 3. As shown in Figs. 11.9 and 11.10, when the embedding dimension changes from m to m + 1, the similarity degrees in both figures decrease.

Figure 11.11 demonstrates the results for the SampEn index. SampEn was calculated from the mean percentages of matching vectors at dimensions m = 2 and m + 1 = 3. The percentages of matching vectors are illustrated for different threshold values r, and the corresponding SampEn values are shown. The NSR subject exhibits higher SampEn values than the CHF patient, indicating more complex components or more irregular changes in its RR time series.

Figure 11.12 demonstrates the results for the FuzzyMEn index. Unlike SampEn, which makes a binary determination of the vector similarity degree (1 for matching vectors, 0 for non-matching vectors), FuzzyMEn can output any value between 0 and 1 for the similarity degree. In each sub-figure, the upper panel shows the mean level of the global similarity degree, while the lower panel shows the mean level of the local similarity degree, at dimension m = 2 and dimension m + 1 = 3 respectively. The results are shown for different threshold values r, together with the corresponding FuzzyMEn values. The NSR subject exhibits lower FuzzyMEn values than the CHF patient, although the mean levels of the vector similarity degree in the NSR subject are higher than those in the CHF patient, for both global and local aspects.

3.3.4 LZ Complexity

Figure 11.13 shows the results for the LZ complexity index. With the increase of the RR sequence length, the number of the new patterns increases. The number of new patterns in the CHF patient increases more significantly than that in the NSR subject. The final LZ complexity values for the 5-min RR time series are depicted in this Figure.

4 Discussion

It is well accepted in the literature reviewed that congestive heart failure patients have a reduced heart rate variability in comparison with normal subjects, and also a different pattern in this variability. We have reviewed both the traditional linear analysis techniques (time- and frequency-domain) and the newer non-linear complexity techniques.

We have shown that this difference in heart rate variability in congestive heart failure has been identified by most of the techniques investigated. The least successful in the examples analysed was the time-domain RMSSD index, but we have shown that other time-domain HRV indices were able to clearly separate heart failure from normal. For the newer non-linear complexity techniques, rather than only showing the values of the indices, we presented a detailed analysis of the calculation process and revealed the inherent changes in the time series or patterns, providing further clear observations for these techniques. As expected, all the newer non-linear complexity techniques were able to distinguish the differences between the illustrated examples.

We accept that this analysis is illustrative, and requires follow up analysis, but by illustrating the differences between normal and congestive heart failure rhythms we hope that this will encourage the use of the complete range of analysis techniques in research into both normal and abnormal cardiac rhythms.

References

Acharya, U.R., Joseph, K.P., Kannathal, N., Lim, C.M., Suri, J.S.: Heart rate variability: a review. Med. Biol. Eng. Comput. 44, 1031–1051 (2006)

Huikuri, H.V., Perkiömaki, J.S., Maestri, R., Pinna, G.D.: Clinical impact of evaluation of cardiovascular control by novel methods of heart rate dynamics. Philos. Trans. A Math. Phys. Eng. Sci. 367, 1223–1238 (2009)

Makikallio, T.H., Tapanainen, J.M., Tulppo, M.P., Huikuri, H.V.: Clinical applicability of heart rate variability analysis by methods based on nonlinear dynamics. Card. Electrophysiol. Rev. 6, 250–255 (2002)

Voss, A., Schulz, S., Schroeder, R., Baumert, M., Caminal, P.: Methods derived from nonlinear dynamics for analysing heart rate variability. Philos. Trans. A Math. Phys. Eng. Sci. 367, 277–296 (2009)

Weiss, J.N., Garfinkel, A., Spano, M.L., Ditto, W.L.: Chaos and chaos control in biology. J. Clin. Invest. 93, 1355–1360 (1994)

Brennan, M., Palaniswami, M., Kamen, P.: Poincare plot interpretation using a physiological model of HRV based on a network of oscillators. Am. J. Physiol. Heart Circ. Physiol. 283, H1873–H1886 (2002)

Kamen, P.W., Krum, H., Tonkin, A.M.: Poincare plot of heart rate variability allows quantitative display of parasympathetic nervous activity in humans. Clin. Sci. 91, 201–208 (1996)

Laitio, T.T., Mäkikallio, T.H., Huikuri, H.V., Kentala, E.S., Uotila, P., Jalonen, J.R., Helenius, H., Hartiala, J., Yli-Mäyry, S., Scheinin, H.: Relation of heart rate dynamics to the occurrence of myocardial ischemia after coronary artery bypass grafting. Am. J. Cardiol. 89, 1176–1181 (2002)

Kamen, P., Tonkin, A.: Application of the Poincare plot to heart rate variability: a new measure of functional status in heart failure. Aust. NZ J. Med. 25, 18–26 (1995)

Kobayashi, M., Musha, T.: 1/f fluctuation of heartbeat period. IEEE Trans. Biomed. Eng. 29, 456–457 (1982)

Lombardi, F.: Chaos theory, heart rate variability, and arrhythmic mortality. Circulation. 101, 8–10 (2000)

Peng, C.K., Havlin, S., Stanley, H.E., Goldberger, A.L.: Quantification of scaling exponents and crossover phenomena in nonstationary heartbeat time series. Chaos. 5, 82–87 (1995)

Huikuri, H.V., Mäkikallio, T.H., Peng, C.K., Goldberger, A.L., Hintze, U., Møller, M.: Fractal correlation properties of R-R interval dynamics and mortality in patients with depressed left ventricular function after an acute myocardial infarction. Circulation. 101, 47–53 (2000)

Mäkikallio, T.H., Høiber, S., Køber, L., Torp-Pedersen, C., Peng, C.K., Goldberger, A.L., Huikuri, H.V.: Fractal analysis of heart rate dynamics as a predictor of mortality in patients with depressed left ventricular function after acute myocardial infarction. Am. J. Cardiol. 83, 836–839 (1999)

Ivanov, P.C., Amaral, L.A.N., Goldberger, A.L., Havlin, S., Rosenblum, M.G., Struzik, Z.R., Stanley, H.E.: Multifractality in human heartbeat dynamics. Nature. 399, 461–465 (1999)

Hadamard, J.: Les surfaces a courbures opposees et leurs lignes geodesiques. J. Math. Pures. Appl. 4, 27–73 (1898)

Kurths, J., Voss, A., Saparin, P., Witt, A., Kleiner, H.J., Wessel, N.: Quantitative analysis of heart rate variability. Chaos. 5, 88–94 (1995)

Voss, A., Kurths, J., Kleiner, H.J., Witt, A., Wessel, N., Saparin, P., Osterziel, K.J., Schurath, R., Dietz, R.: The application of methods of non-linear dynamics for the improved and predictive recognition of patients threatened by sudden cardiac death. Cardiovasc. Res. 31, 419–433 (1996)

Porta, A., Guzzetti, S., Montano, N., Furlan, R., Pagani, M., Malliani, A., Cerutti, S.: Entropy, entropy rate, and pattern classification as tools to typify complexity in short heart period variability series. IEEE Trans. Biomed. Eng. 48, 1282–1291 (2001)

Porta, A., Faes, L., Masé, M., D’Addio, G., Pinna, G.D., Maestri, R., Montano, N., Furlan, R., Guzzetti, S., Nollo, G., Malliani, A.: An integrated approach based on uniform quantization for the evaluation of complexity of short-term heart period variability: application to 24 h Holter recordings in healthy and heart failure humans. Chaos. 17, 015117 (2007)

Lempel, A., Ziv, J.: On the complexity of finite sequences. IEEE Trans. Inf. Theory. 22, 75–81 (1976)

Abásolo, D., Hornero, R., Gómez, C., García, M., López, M.: Analysis of EEG background activity in Alzheimer’s disease patients with Lempel-Ziv complexity and central tendency measure. Med. Eng. Phys. 28, 315–322 (2006)

Sarlabous, L., Torres, A., Fiz, J.A., Morera, J., Jané, R.: Index for estimation of muscle force from mechanomyography based on the Lempel-Ziv algorithm. J. Electromyogr. Kinesiol. 23, 548–557 (2013)

Zhang, Y.T., Wei, S.S., Liu, H., Zhao, L.N., Liu, C.Y.: A novel encoding Lempel-Ziv complexity algorithm for quantifying the irregularity of physiological time series. Comput. Methods Prog. Biomed. 133, 7–15 (2016)

Pincus, S.M.: Approximate entropy as a measure of system complexity. Proc. Natl. Acad. Sci. U. S. A. 88, 2297–2301 (1991)

Costa, M., Goldberger, A.L., Peng, C.K.: Multiscale entropy analysis of biological signals. Phys. Rev. E. 71, 021906 (2005)

Liu, C.Y., Liu, C.C., Shao, P., Li, L.P., Sun, X., Wang, X.P., Liu, F.: Comparison of different threshold values r for approximate entropy: application to investigate the heart rate variability between heart failure and healthy control groups. Physiol. Meas. 32, 167–180 (2011b)

Pincus, S.M., Goldberger, A.L.: Physiological time-series analysis: what does regularity quantify? Am. J. Physiol. Heart Circ. Physiol. 266, H1643–H1656 (1994)

Richman, J.S., Moorman, J.R.: Physiological time-series analysis using approximate entropy and sample entropy. Am. J. Physiol. Heart Circ. Physiol. 278, H2039–H2049 (2000)

Lake, D.E., Richman, J.S., Griffin, M.P., Moorman, J.R.: Sample entropy analysis of neonatal heart rate variability. Am. J. Phys. Regul. Integr. Comp. Phys. 283, R789–R797 (2002)

Tuzcu, V., Nas, S., Börklü, T., Ugur, A.: Decrease in the heart rate complexity prior to the onset of atrial fibrillation. Europace. 8, 398–402 (2006)

Chen, W.T., Zhuang, J., Yu, W.X., Wang, Z.Z.: Measuring complexity using FuzzyEn, ApEn, and SampEn. Med. Eng. Phys. 31, 61–68 (2009)

Liu, C.Y., Li, K., Zhao, L.N., Liu, F., Zheng, D.C., Liu, C.C., Liu, S.T.: Analysis of heart rate variability using fuzzy measure entropy. Comput. Biol. Med. 43, 100–108 (2013)

Ahmed, M.U., Mandic, D.P.: Multivariate multiscale entropy: a tool for complexity analysis of multichannel data. Phys. Rev. E. 84, 061918 (2011)

Ahmed, M.U., Mandic, D.P.: Multivariate multiscale entropy analysis. IEEE Signal. Proc. Lett. 19, 91–94 (2012)

Azami, H., Escudero, J.: Refined composite multivariate generalized multiscale fuzzy entropy: a tool for complexity analysis of multichannel signals. Phys. A. 465, 261–276 (2017)

Arbolishvili, G.N., Mareev, V.I., Orlova, I.A., Belenkov, I.N.: Heart rate variability in chronic heart failure and its role in prognosis of the disease. Kardiologiia. 46, 4–11 (2005)

Nolan, J., Batin, P.D., Andrews, R., Lindsay, S.J., Brooksby, P., Mullen, M., Baig, W., Flapan, A.D., Cowley, A., Prescott, R.J.: Prospective study of heart rate variability and mortality in chronic heart failure: results of the United Kingdom heart failure evaluation and assessment of risk trial (UK-Heart). Circulation. 98, 1510–1516 (1998)

Rector, T.S., Cohn, J.N.: Prognosis in congestive heart failure. Annu. Rev. Med. 45, 341–350 (1994)

Smilde, T.D.J., van Veldhuisen, D.J., van den Berg, M.P.: Prognostic value of heart rate variability and ventricular arrhythmias during 13-year follow-up in patients with mild to moderate heart failure. Clin. Res. Cardiol. 98, 233–239 (2009)

Malliani, A., Pagani, M., Lombardi, F., Cerutti, S.: Cardiovascular neural regulation explored in the frequency domain. Circulation. 84, 482–492 (1991)

Binkley, P.F., Nunziata, E., Haas, G.J., Nelson, S.D., Cody, R.J.: Parasympathetic withdrawal is an integral component of autonomic imbalance in congestive heart failure: demonstration in human subjects and verification in a paced canine model of ventricular failure. J. Am. Coll. Cardiol. 18, 464–472 (1991)

Poon, C.S., Merrill, C.K.: Decrease of cardiac chaos in congestive heart failure. Nature. 389, 492–495 (1997)

Woo, M.A., Stevenson, W.G., Moser, D.K., Middlekauff, H.R.: Complex heart rate variability and serum norepinephrine levels in patients with advanced heart failure. J. Am. Coll. Cardiol. 23, 565–569 (1994)

La Rovere, M.T., Pinna, G.D., Maestri, R., Mortara, A., Capomolla, S., Febo, O., Ferrari, R., Franchini, M., Gnemmi, M., Opasich, C., Riccardi, P.G., Traversi, E., Cobelli, F.: Short-term heart rate variability strongly predicts sudden cardiac death in chronic heart failure patients. Circulation. 107, 565–570 (2003)

Hadase, M., Azuma, A., Zen, K., Asada, S., Kawasaki, T., Kamitani, T., Kawasaki, S., Sugihara, H., Matsubara, H.: Very low frequency power of heart rate variability is a powerful predictor of clinical prognosis in patients with congestive heart failure. Circ. J. 68, 343–347 (2004)

Guzzetti, S., Mezzetti, S., Magatelli, R., Porta, A., De Angelis, G., Rovelli, G., Malliani, A.: Linear and non-linear 24 h heart rate variability in chronic heart failure. Auton. Neurosci. 86, 114–119 (2000)

Mäkikallio, T.H., Huikuri, H.V., Hintze, U., Videbaek, J., Mitrani, R.D., Castellanos, A., Myerburg, R.J., Møller, M., DIAMOND Study Group: Fractal analysis and time- and frequency-domain measures of heart rate variability as predictors of mortality in patients with heart failure. Am. J. Cardiol. 87, 178–182 (2001)

Costa, M., Goldberger, A.L., Peng, C.K.: Multiscale entropy analysis of complex physiologic time series. Phys. Rev. Lett. 89, 068102 (2002)

Singh, J.P., Larson, M.G., Tsuji, H., Evans, J.C., O’Donnell, C.J., Levy, D.: Reduced heart rate variability and new-onset hypertension–insights into pathogenesis of hypertension: The Framingham Heart Study. Hypertension. 32, 293–297 (1998)

Task Force of the European Society of Cardiology and the North American Society of Pacing and Electrophysiology: Heart rate variability: standards of measurement, physiological interpretation and clinical use. Circulation. 93, 1043–1065 (1996)

Goldberger, J.J., Ahmed, M.W., Parker, M.A., Kadish, A.H.: Dissociation of heart rate variability from parasympathetic tone. Am. J. Physiol. 266, H2152–H2157 (1994)

Liu, C.Y., Li, P., Zhao, L.N., Yang, Y., Liu, C.C.: Evaluation method for heart failure using RR sequence normalized histogram. In: Murray, A. (ed.) Computing in Cardiology, pp. 305–308. IEEE, Hangzhou (2011a)

Ho, K.K., Moody, G.B., Peng, C.K., Mietus, J.E., Larson, M.G., Levy, D., Goldberger, A.L.: Predicting survival in heart failure case and control subjects by use of fully automated methods for deriving nonlinear and conventional indices of heart rate dynamics. Circulation. 96, 842–848 (1997)

Liu, C.Y., Zhang, C.Q., Zhang, L., Zhao, L.N., Liu, C.C., Wang, H.J.: Measuring synchronization in coupled simulation and coupled cardiovascular time series: a comparison of different cross entropy measures. Biomed. Signal. Process. Control. 21, 49–57 (2015)

Liu, C.Y., Zhao, L.N.: Using Fuzzy Measure Entropy to improve the stability of traditional entropy measures. In: Murray, A. (ed.) Computing in Cardiology, pp. 681–684. IEEE, Hangzhou (2011)

Zhao, L.N., Wei, S.S., Zhang, C.Q., Zhang, Y.T., Jiang, X.E., Liu, F., Liu, C.Y.: Determination of sample entropy and fuzzy measure entropy parameters for distinguishing congestive heart failure from normal sinus rhythm subjects. Entropy. 17, 6270–6288 (2015)

Zhang, X.S., Zhu, Y.S., Thakor, N.V., Wang, Z.Z.: Detecting ventricular tachycardia and fibrillation by complexity measure. IEEE Trans. Biomed. Eng. 46, 548–555 (1999)

Aboy, M., Hornero, R., Abasolo, D., Alvarez, D.: Interpretation of the Lempel-Ziv complexity measure in the context of biomedical signal analysis. IEEE Trans. Biomed. Eng. 53, 2282–2288 (2006)

Yentes, J.M., Hunt, N., Schmid, K.K., Kaipust, J.P., McGrath, D., Stergiou, N.: The appropriate use of approximate entropy and sample entropy with short data sets. Ann. Biomed. Eng. 41, 349–365 (2013)

Ruan, X.H., Liu, C.C., Liu, C.Y., Wang, X.P., Li, P.: Automatic detection of atrial fibrillation using RR interval signal. In: 4th International Conference on Biomedical Engineering and Informatics, pp. 644–647. IEEE (2011)

Acknowledgement

We acknowledge and thank Dr. Charalampos Tsimenidis of Newcastle University for his helpful review of the manuscript.

A. Appendix

A.1 RR Sequence Normalized Histogram

The general construction procedure for the RR sequence normalized histogram is summarized as follows [53]:

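Given an RR sequence \( \left\{{RR}_1,{RR}_2,\cdots, {RR}_N\right\} \), where N denotes the sequence length, the maximum (\( {RR}_{\max } \)) and minimum (\( {RR}_{\min } \)) values are first determined to calculate the range of the sequence:

\[ {RR}_{\mathrm{range}}={RR}_{\max }-{RR}_{\min } \]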

The threshold \( a=0.1\times {RR}_{\mathrm{range}} \) is set, and the left-step parameter \( {H}_l \) and right-step parameter \( {H}_r \) can then be calculated as

where \( {RR}_{\mathrm{mean}} \) denotes the mean value of the RR sequence. Based on these parameters, each element \( {RR}_i \) is assigned to one of seven sections. Table 11.1 details the element division rules.

The element percentage \( {p}_i \) in each of the sections is calculated as follows:

In a rectangular coordinate system, the \( {p}_i \) values corresponding to the seven sections (i.e. L1, L2, L3, C, R3, R2 and R1) are drawn to form the normalized histogram. Three quantitative indices can be obtained from the normalized histogram. They are named the center-edge ratio (CER), cumulative energy (CE) and range information entropy (RIEn), and are defined as [53]:

A.2 Sample Entropy (SampEn)

The algorithm for SampEn is summarized as follows [29]: For the HRV series x(i), 1 ≤ i ≤ N, form N − m + 1 vectors \( {X}_i^m=\left\{x(i),x\left(i+1\right),\cdots, x\left(i+m-1\right)\right\} \), 1 ≤ i ≤ N − m + 1. The distance between two vectors \( {X}_i^m \) and \( {X}_j^m \) is defined as: \( {d}_{i,j}^m=\underset{k=0}{\overset{m-1}{ \max }}\left|x\left(i+k\right)-x\left(j+k\right)\right| \). Denote by \( {B}_i^m(r) \) the average number of j (j ≠ i, so that self-matches are excluded) that meet \( {d}_{i,j}^m\le r \) for all 1 ≤ j ≤ N − m, and similarly define \( {A}_i^m(r) \) using \( {d}_{i,j}^{m+1} \). SampEn is then defined by:

\[ \mathrm{SampEn}\left(m,r,N\right)=-\ln \left(\frac{\sum_{i=1}^{N-m}{A}_i^m(r)}{\sum_{i=1}^{N-m}{B}_i^m(r)}\right) \]

where the embedding dimension is usually set to m = 2 and the threshold to r = 0.2 × sd (sd is the standard deviation of the HRV series under analysis) [57, 60].
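The SampEn steps above can be sketched in plain Python as follows. This is a minimal illustration rather than the authors' implementation: the function name `sample_entropy`, the use of the population standard deviation for sd, and the choice to return infinity when no template matches are found are all assumptions made for this example.

```python
import math
import random
import statistics


def sample_entropy(x, m=2, r_factor=0.2):
    """Sketch of SampEn(m, r, N) with r = r_factor * sd, excluding self-matches."""
    n = len(x)
    if n <= m + 1:
        raise ValueError("series too short for the chosen m")
    # Assumption: population standard deviation; the text only says "sd".
    r = r_factor * statistics.pstdev(x)

    def count_matches(dim):
        # Count template pairs (i < j) whose maximum absolute difference is <= r.
        # The same N - m templates are used for dim = m and dim = m + 1.
        matches = 0
        for i in range(n - m):
            for j in range(i + 1, n - m):
                if max(abs(x[i + k] - x[j + k]) for k in range(dim)) <= r:
                    matches += 1
        return matches

    b = count_matches(m)      # matches of length m
    a = count_matches(m + 1)  # matches of length m + 1
    if a == 0 or b == 0:
        return float("inf")   # assumption: report an undefined ratio as infinity
    return -math.log(a / b)


if __name__ == "__main__":
    rng = random.Random(0)
    noise = [rng.gauss(0.0, 1.0) for _ in range(300)]
    periodic = [1.0, 2.0] * 50
    print(sample_entropy(periodic), sample_entropy(noise))
```

Counting each pair once (i < j) leaves the ratio A/B unchanged, because both counts are halved in the same way. A strictly alternating series gives identical counts for m and m + 1, so its SampEn is zero, whereas an irregular series gives a clearly positive value.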

A.3 Fuzzy Measure Entropy (FuzzyMEn)

The calculation process of FuzzyMEn is summarized as follows [33, 56]:

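For the RR or PTT segment x(i) (1 ≤ i ≤ N), first form the local vector sequences \( {XL}_i^m \) and the global vector sequences \( {XG}_i^m \), respectively:

\[ {XL}_i^m=\left\{x(i),x\left(i+1\right),\cdots, x\left(i+m-1\right)\right\}-\overline{x}(i),\kern2em {XG}_i^m=\left\{x(i),x\left(i+1\right),\cdots, x\left(i+m-1\right)\right\}-\overline{x} \]

The vector \( {XL}_i^m \) represents m consecutive x(i) values with the local baseline \( \overline{x}(i) \) removed, where the local baseline is defined as:

\[ \overline{x}(i)=\frac{1}{m}\sum_{k=0}^{m-1}x\left(i+k\right) \]

The vector \( {XG}_i^m \) also represents m consecutive x(i) values, but with the global mean value \( \overline{x} \) of the segment x(i) removed, where the global mean is defined as:

\[ \overline{x}=\frac{1}{N}\sum_{i=1}^Nx(i) \]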

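Subsequently, the distance between the local vector sequences \( {XL}_i^m \) and \( {XL}_j^m \), and the distance between the global vector sequences \( {XG}_i^m \) and \( {XG}_j^m \), are computed respectively as:

\[ {dL}_{i,j}^m=\underset{k=0}{\overset{m-1}{ \max }}\left|\left(x\left(i+k\right)-\overline{x}(i)\right)-\left(x\left(j+k\right)-\overline{x}(j)\right)\right|,\kern2em {dG}_{i,j}^m=\underset{k=0}{\overset{m-1}{ \max }}\left|\left(x\left(i+k\right)-\overline{x}\right)-\left(x\left(j+k\right)-\overline{x}\right)\right| \]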

Given the parameters \( {n}_L \), \( {r}_L \), \( {n}_G \) and \( {r}_G \), we calculate the similarity degree \( {DL}_{i,j}^m\left({n}_L,{r}_L\right) \) between the local vectors \( {XL}_i^m \) and \( {XL}_j^m \) by the fuzzy function \( \mu L\left({dL}_{i,j}^m,{n}_L,{r}_L\right) \), as well as the similarity degree \( {DG}_{i,j}^m\left({n}_G,{r}_G\right) \) between the global vectors \( {XG}_i^m \) and \( {XG}_j^m \) by the fuzzy function \( \mu G\left({dG}_{i,j}^m,{n}_G,{r}_G\right) \):

We define the functions \( \phi {L}^m\left({n}_L,{r}_L\right) \) and \( \phi {G}^m\left({n}_G,{r}_G\right) \) as:

Similarly, we define the function \( \phi {L}^{m+1}\left({n}_L,{r}_L\right) \) for the m + 1 dimensional vectors \( {XL}_i^{m+1} \) and \( {XL}_j^{m+1} \), and the function \( \phi {G}^{m+1}\left({n}_G,{r}_G\right) \) for the m + 1 dimensional vectors \( {XG}_i^{m+1} \) and \( {XG}_j^{m+1} \):

Then the fuzzy local measure entropy (FuzzyLMEn) and fuzzy global measure entropy (FuzzyGMEn) are computed as:

Finally, the FuzzyMEn of RR segment x(i) is calculated as follows:

In this study, the local similarity weight was set to \( {n}_L=3 \) and the global vector similarity weight was set to \( {n}_G=2 \). The local tolerance threshold \( {r}_L \) was set equal to the global threshold \( {r}_G \), i.e., \( {r}_L={r}_G=r \). Hence, the formula (11.21) becomes:

For both SampEn and FuzzyMEn, the entropy results therefore depend on only three parameters: the embedding dimension m, the tolerance threshold r and the RR segment length N.
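The FuzzyMEn procedure can be illustrated with the Python sketch below. Several details are assumptions made for this example and are not confirmed by the text above: the fuzzy function is taken to be the exponential exp(−(d/r)^n), the ϕ functions are taken as the mean similarity over all vector pairs with i ≠ j, each partial entropy is taken as ln ϕ^m − ln ϕ^(m+1), and FuzzyMEn is taken as the sum of the local and global parts. The names `fuzzy_measure_entropy` and `_mean_similarity` are hypothetical, and r is set to 0.2 × sd as for SampEn.

```python
import math
import random


def _mean_similarity(vectors, r, power):
    """Mean fuzzy similarity over all pairs i != j (assumed exponential form)."""
    count = len(vectors)
    total = 0.0
    for i in range(count):
        for j in range(i + 1, count):
            # Maximum absolute difference between the two vectors.
            d = max(abs(u - v) for u, v in zip(vectors[i], vectors[j]))
            total += math.exp(-((d / r) ** power))
    # Each unordered pair stands for two ordered pairs (i, j) and (j, i).
    return 2.0 * total / (count * (count - 1))


def fuzzy_measure_entropy(x, m=2, r_factor=0.2, n_local=3, n_global=2):
    """Sketch of FuzzyMEn = FuzzyLMEn + FuzzyGMEn with r_L = r_G = r."""
    n = len(x)
    if n <= m + 1:
        raise ValueError("series too short for the chosen m")
    mean = sum(x) / n
    sd = math.sqrt(sum((v - mean) ** 2 for v in x) / n)
    if sd == 0.0:
        raise ValueError("constant series: tolerance r would be zero")
    r = r_factor * sd

    def local_vectors(dim):
        # Each vector has its own local baseline (segment mean) removed.
        vectors = []
        for i in range(n - m):
            segment = x[i:i + dim]
            baseline = sum(segment) / dim
            vectors.append([v - baseline for v in segment])
        return vectors

    def global_vectors(dim):
        # Each vector has the global mean of the whole segment removed.
        return [[v - mean for v in x[i:i + dim]] for i in range(n - m)]

    local_part = (math.log(_mean_similarity(local_vectors(m), r, n_local))
                  - math.log(_mean_similarity(local_vectors(m + 1), r, n_local)))
    global_part = (math.log(_mean_similarity(global_vectors(m), r, n_global))
                   - math.log(_mean_similarity(global_vectors(m + 1), r, n_global)))
    return local_part + global_part


if __name__ == "__main__":
    rng = random.Random(1)
    noise = [rng.gauss(0.0, 1.0) for _ in range(200)]
    sine = [math.sin(2.0 * math.pi * i / 25.0) for i in range(200)]
    print(fuzzy_measure_entropy(sine), fuzzy_measure_entropy(noise))
```

Under these assumptions only m, r and N remain free once the two similarity weights are fixed at 3 and 2, which matches the parameter count stated above. A smooth periodic series should score lower than white noise.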

A.4 Lempel-Ziv (LZ) Complexity

The calculation process of LZ complexity is summarized as follows [21, 59]:

1. For a binary symbolic sequence \( R=\left\{{s}_1,{s}_2,\cdots, {s}_n\right\} \), let S and Q denote two strings and let SQ be their concatenation. The string SQπ is derived from SQ by deleting its last character (π denotes the operation of deleting the last character of a string), and v(SQπ) denotes the vocabulary of all different substrings of SQπ. Initially, c(n) = 1, \( S={s}_1 \), \( Q={s}_2 \), and so \( SQ\pi ={s}_1 \);

2. In general, let \( S={s}_1{s}_2\cdots {s}_r \) and \( Q={s}_{r+1} \), so that \( SQ\pi ={s}_1{s}_2\cdots {s}_r \). If Q belongs to v(SQπ), then Q (that is, \( {s}_{r+1} \)) is a substring of SQπ and not a new pattern, so S is left unchanged, Q is extended to \( {s}_{r+1}{s}_{r+2} \), and the test of whether Q belongs to v(SQπ) is repeated. This process continues until Q does not belong to v(SQπ);

3. Now \( Q={s}_{r+1}{s}_{r+2}\cdots {s}_{r+i} \) is not a substring of \( SQ\pi ={s}_1{s}_2\cdots {s}_r{s}_{r+1}\cdots {s}_{r+i-1} \), so c(n) is increased by one;

4. Thereafter, S is renewed to be \( S={s}_1{s}_2\cdots {s}_{r+i} \), and \( Q={s}_{r+i+1} \);

5. These steps are repeated until Q reaches the last character. At this point c(n) is the number of different substrings contained in R. For practical application, c(n) should be normalized. It has been proved that the upper bound of c(n) is

\[ c(n)<\frac{n}{\left(1-{\varepsilon}_n\right){\log}_2(n)} \]

where \( {\varepsilon}_n \) is a small quantity and \( {\varepsilon}_n\to 0 \) (n → ∞). In fact,

\[ \underset{n\to \infty }{\lim }c(n)=b(n)=\frac{n}{\log_2(n)} \]

6. Finally, LZ complexity is defined as the normalized output of c(n):

\[ C(n)=\frac{c(n)}{b(n)} \]

where C(n) is the normalized LZ complexity, which reflects the rate at which new patterns arise within the sequence.
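The parsing steps above can be sketched in Python as follows. This is an illustrative sketch, not the authors' code: the function names `lz_word_count` and `lz_complexity` are hypothetical, the input is assumed to be already binarized, a trailing unfinished word is counted as one extra pattern (a common convention that the steps above do not spell out), and the normalization is assumed to be the usual binary form c(n)·log2(n)/n.

```python
import math


def lz_word_count(symbols):
    """Count c(n) by scanning a binary sequence left to right.

    `symbols` may be a string such as "0101" or a list of 0/1 integers.
    """
    s = "".join(str(v) for v in symbols)
    n = len(s)
    if n == 0:
        return 0
    c = 1        # the first symbol always forms the first pattern
    start = 1    # S = s[:start]
    length = 1   # Q = s[start:start + length]
    while start + length <= n:
        q = s[start:start + length]
        sq_pi = s[:start + length - 1]  # SQ with its last character deleted
        if q in sq_pi:
            length += 1      # Q is not new: extend Q by one symbol
        else:
            c += 1           # Q is a new pattern
            start += length  # S is renewed to include Q
            length = 1
    if length > 1:
        # Assumption: an unfinished trailing word counts as one more pattern.
        c += 1
    return c


def lz_complexity(symbols):
    """Normalized complexity C(n) = c(n) / b(n), with b(n) = n / log2(n)."""
    n = len(symbols)
    if n < 2:
        raise ValueError("need at least two symbols to normalize")
    return lz_word_count(symbols) * math.log2(n) / n


if __name__ == "__main__":
    example = "0001101001000101"
    print(lz_word_count(example), lz_complexity(example))
```

For the sequence 0001101001000101 the scan yields the six words 0, 001, 10, 100, 1000 and 101, so c(n) = 6 and, with n = 16, C(n) = 6 × 4 / 16 = 1.5.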

Copyright information

© 2017 Springer International Publishing AG

About this chapter

Cite this chapter

Liu, C., Murray, A. (2017). Applications of Complexity Analysis in Clinical Heart Failure. In: Barbieri, R., Scilingo, E., Valenza, G. (eds) Complexity and Nonlinearity in Cardiovascular Signals. Springer, Cham. https://doi.org/10.1007/978-3-319-58709-7_11

DOI: https://doi.org/10.1007/978-3-319-58709-7_11

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-58708-0

Online ISBN: 978-3-319-58709-7

eBook Packages: Biomedical and Life Sciences (R0)