Abstract

ePHoRt is a project that aims to develop a web-based system for the remote monitoring of rehabilitation exercises in patients after hip replacement surgery. The tool is intended to facilitate and enhance motor recovery, since patients will be able to perform the therapeutic movements at home and at any time. As in any rehabilitation program, the time required to recover is significantly reduced when the individual can practice the exercises regularly and frequently. However, the condition of such patients makes travel to and from medical centers difficult, and many of them cannot afford a private physiotherapist. Low-cost technologies will therefore be used to develop the platform, with the aim of democratizing access to it. Given this constraint, a relevant option for recording the patient's movements is the Kinect motion capture device. The paper describes an experiment that evaluates the validity and accuracy of this visual capture by comparing it with an accelerometer. The results show a significant correlation between the two systems and demonstrate that the Kinect is an appropriate tool for the therapeutic purpose of the project.




1 Introduction

The growth of the Internet is bringing new advances to many fields, including health. A current trend in medicine is home therapy systems. This concept consists of enabling patients to carry out part of their rehabilitation at home and to report the progress of their recovery through the Web. In turn, health professionals can remotely monitor the patient's performance and adapt the treatment accordingly. In Ecuador, telemedicine systems are still underdeveloped. However, this technology could bring several advantages for the individual and for society in terms of healthcare (a better recovery process, since rehabilitation exercises can be performed more frequently), economy (fewer medical appointments and less time spent by patients at the hospital), mobility (fewer trips to and from the hospital) and ethics (democratization of healthcare and increased patient empowerment).

With these considerations in mind, the ePHoRt project proposes to develop a Web-based platform for home motor rehabilitation. The tool is intended for patients who have undergone hip arthroplasty. This orthopaedic procedure is an excellent case study, because it involves people who need a postoperative functional rehabilitation program to recover strength, function and joint stability. In addition, because of the patients' condition, transporting them to the hospital is difficult. The project intends to tackle three main issues. First, the motion capture technology must be low-cost, so that it can be used worldwide. Second, the system should automatically detect whether a movement is executed correctly, in order to provide the patient with real-time feedback. Third, new computational approaches have to be researched to promote patients' motivation to complete the rehabilitation tasks regularly (e.g., using affective computing to detect difficulties and serious games to stimulate effort in the patients).

This paper focuses on the first issue of the project, namely the identification and validation of a reliable low-cost motion capture technology. The manuscript is divided into five parts. The first part reviews the state of the art in technologies for home rehabilitation and telemedicine. The second describes the architecture that will support the platform. The third presents the methodology used to evaluate the accuracy of the motion capture device. The fourth presents the results. Finally, some conclusions and perspectives are drawn regarding this initial step of the project.

1.1 State of the Art

In any kind of rehabilitation, repeated exercise of an impaired limb maximizes the chances of recovery [1]. In practice, medical and economic constraints limit the number of therapeutic sessions a patient can attend at a hospital or medical center. This explains the growing trend towards telemedicine systems that support home therapy. However, this new approach to healthcare faces several obstacles. First, the technology must be both reliable and affordable in order to significantly reduce costs. Second, the system has to provide rigorous, real-time monitoring of the patient's movements to ensure that the rehabilitation protocol is properly executed. For instance, it has to detect the patients' tendency to compensate for their impaired limb with other functional parts of their body, which slows rehabilitation progress [2]. Third, providing a therapeutic framework at home does not automatically imply a speedy recovery, because patients often lack the motivation to exercise for sustained periods of time.

Home-based rehabilitation has gained prominence in recent years through the development of exercise platforms [3], virtual reality-based systems [4], gaming consoles [5] and the widely popular Kinect camera-based systems [6]. For the purpose of this project, three critical aspects of home therapy need to be surveyed. First, the existing technologies used in telemedicine for patient rehabilitation. Second, the systems and methods developed to analyze and recognize movement in real time. Third, the main approaches implemented to motivate patients to complete the exercises regularly. Since this paper focuses on the motion capture part of the project, the state of the art below covers only the first point.

Therapeutic Patient Education.

Telemedicine implies an empowerment of patients, who must take responsibility for completing the exercises conscientiously and regularly. This transition from a passive to an active role of the patient in the management of the therapy is called Therapeutic Patient Education (TPE). For the assessment of physical limitations, the e-ESPOIRS-Handi project is a web-based tool developed to facilitate the evaluation of people with motor disabilities [7, 8]. The system is designed to be used on the client side by both healthcare specialists and patients, through two distinct interfaces. The platform implements a user-friendly application that the user can easily customize. This tool intends to ensure a tailored evaluation according to the specific impairments and needs of each individual. The overall objective of this project is to improve the management of the multidisciplinary healthcare cooperative network and to promote the active involvement of the patient by allowing self-evaluation.

Cognitive and Motor Rehabilitation.

For therapy, most projects focus on cognitive rehabilitation, because it is comparatively easy to implement. For instance, the WebLisling project was designed as a complementary aid in the rehabilitation of aphasic patients [9]. This platform consists of several exercises that train the different components of linguistic skills, such as reading, speaking and writing. Most of the tasks are conducted in a three-dimensional environment, where the user can interact with domestic objects, with the aim of making the treatment as ecologically valid as possible. Because it is a web-based application, it also allows remote monitoring by speech therapists. Tangible interfaces are also used to promote a multimodal rehabilitation of cognitive functions [10]. However, motor rehabilitation is still marginally developed in this field, because of the technical complexity of implementing a tool reliable enough for medical purposes. The major advances concern systems for the rehabilitation of people who have suffered a stroke [11]. Even these, however, rely essentially on wearable devices to track and monitor functional limb movements [12].

Quantitative and Qualitative Monitoring.

The development of wearable devices has enhanced health monitoring. Information useful for medical purposes can come from quantitative and/or qualitative monitoring. One part of quantitative monitoring consists of recording physiological data, such as heart rate, breathing rate and blood pressure, through wearable sensors. This information is useful for the clinical evaluation of patients during rehabilitation [13]. Another part focuses on collecting and interpreting data through an objective measurement of the individuals' functional activity [14]. Finally, integrative approaches, such as the StrokeBack project, rely on a body area sensing network for monitoring [15]. The network consists of several sensors that capture physiological and kinematic data, together with data analysis techniques that extract the clinically relevant information. The final step consists of transferring the collected data to a remote medical center or to authorized caregivers [16].

Qualitative monitoring can be added to complement the quantitative data. It is carried out with questionnaires that patients complete at home on the basis of their daily activities [17]. Paper-based [18] or computer-based [7] forms can be used for this purpose. These data help to summarize the patient's activities, which are reviewed periodically by the respective clinicians. Therapists use a wide range of popular clinical questionnaires to assess patients' motor recovery, such as the Harris Hip Score for functionality and pain [19] and the Borg Scale of perceived exertion [20].

Motion Capture Systems.

Motion capture is used in a wide range of areas, from the entertainment industry (e.g., three-dimensional animation) [21] to scientific studies such as biological motion analysis [22]. However, it can be an expensive and complex technology that is not always usable at home. For instance, one of the best-known motion capture systems for professional use is the Vicon motion system. This kind of technology relies on light-reflecting markers placed on the individual's body and infrared camera sensors that enable a precise analysis of movements. The main limitations of this tool are its high cost and the fact that it only works under very controlled conditions (usually in laboratories). A far more affordable device is the popular Kinect camera from Microsoft, which has several advantages over the other technologies. It is cheap (around 100 USD), quite easy to install and use at home, and it automatically reconstructs the three-dimensional coordinates of the main body joints with reasonable spatial and temporal resolution [23]. A study comparing the Vicon and Kinect systems for measuring movement in people with Parkinson's disease shows that the Kinect has the potential to capture the gross spatial characteristics of clinically relevant movements [24]. Alternatively, a computer vision approach based on CCD webcams can be implemented, but the apparatus usually requires two optical sensors to calculate the 3D coordinates by triangulation, as well as additional image processing algorithms [25]. An attempt to reconstruct body postures and 3D movements from monocular video sequences was proposed in [26], but its accuracy and applicability for real-time tele-rehabilitation have not yet been demonstrated. Finally, an alternative to vision-based monitoring is a wearable system. However, complicated wearable devices make the exercises tiresome and, consequently, tend to decrease the effectiveness of rehabilitation [27].

1.2 Platform Architecture

The ePHoRt project is a Web application based on a three-layer client-server architecture (Fig. 1). The client layer (browser) will be developed in JavaScript using one of two possible frameworks: jQuery or AngularJS. The application layer (domain server) will be implemented either with the Python Django framework or with a Java framework. The data server layer (database) will use MySQL.

The client and server layers will be connected through the Internet. A different user interface will be implemented for each of the three kinds of user: patient, physiotherapist and physician. After logging in, patients will have access to the exercises programmed for them. The patient's interface will be composed of two dynamic frames: (i) one displaying a video example of the exercise to be completed and (ii) another displaying a 3D avatar that mimics, in real time, the patient's movements captured by the Kinect. The patient will receive real-time feedback on the correctness of the movements, provided by the assessment module, as well as game scores. In addition, questionnaires will be available on this interface in order to record qualitative information. The main functions of the physiotherapist's interface will be: (i) accessing the patient's performance for each exercise, (ii) consulting the answers to the self-report questionnaires, (iii) watching a three-dimensional reproduction of the patient's movement through an animated avatar and (iv) updating the rehabilitation program (with more challenging parameters for the same exercise or with new kinds of exercise) according to the patient's progress and/or medical advice. Finally, an interface for physicians will enable them to supervise the whole recovery process and to communicate with the physiotherapist in order to authorize or forbid certain movements according to the specific condition of each patient. Communication between the three stakeholders will be supported by message exchange.
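The per-role interfaces described above amount to a role-based permission scheme. The following is a minimal sketch of how the application layer might encode it, written in Python (one of the two candidate server languages). The enum members and the `can()` helper are hypothetical names that paraphrase the functions listed in the text; they are not part of a published ePHoRt API.

```python
from enum import Enum, auto


class Role(Enum):
    """The three kinds of user named in the platform description."""
    PATIENT = auto()
    PHYSIOTHERAPIST = auto()
    PHYSICIAN = auto()


class Action(Enum):
    """Hypothetical action names paraphrasing the interface functions."""
    VIEW_OWN_EXERCISES = auto()
    ANSWER_QUESTIONNAIRE = auto()
    VIEW_PATIENT_PERFORMANCE = auto()
    VIEW_QUESTIONNAIRE_ANSWERS = auto()
    REPLAY_AVATAR = auto()
    UPDATE_PROGRAM = auto()
    SUPERVISE_RECOVERY = auto()
    AUTHORIZE_MOVEMENT = auto()
    SEND_MESSAGE = auto()


# Assumed permission table: which role may perform which action.
PERMISSIONS: dict[Role, frozenset[Action]] = {
    Role.PATIENT: frozenset({
        Action.VIEW_OWN_EXERCISES,
        Action.ANSWER_QUESTIONNAIRE,
        Action.SEND_MESSAGE,
    }),
    Role.PHYSIOTHERAPIST: frozenset({
        Action.VIEW_PATIENT_PERFORMANCE,
        Action.VIEW_QUESTIONNAIRE_ANSWERS,
        Action.REPLAY_AVATAR,
        Action.UPDATE_PROGRAM,
        Action.SEND_MESSAGE,
    }),
    Role.PHYSICIAN: frozenset({
        Action.SUPERVISE_RECOVERY,
        Action.AUTHORIZE_MOVEMENT,
        Action.SEND_MESSAGE,
    }),
}


def can(role: Role, action: Action) -> bool:
    """Return True if the given role is allowed to perform the action."""
    return action in PERMISSIONS.get(role, frozenset())


print(can(Role.PATIENT, Action.UPDATE_PROGRAM))  # False
```

Keeping the mapping as a single data table, rather than scattering checks through request handlers, makes it straightforward to review against the interface specification as it evolves.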

The application layer will contain the logic of the platform. This intermediate layer will be connected to the client, through the Internet, and to the database server. It will receive and process the requests from the three types of user. At this level, the Unity 3D game engine will be used to develop the game-based exercises and animate the avatar, and the affective and movement assessment modules will also be integrated.

The database layer will be connected to the application layer. Heterogeneous data will be stored in the database, such as: (i) quantitative data on the 3D coordinates of the movements, (ii) qualitative data from the responses to the questionnaires, (iii) videos of the exercises and (iv) comments made by the physiotherapists and physicians.
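The four kinds of heterogeneous data listed above could each map onto a separate table. The sketch below uses Python's built-in `sqlite3` module as a stand-in for MySQL so that it runs without a database server; the table and column names are hypothetical, and a MySQL deployment would use its own column types and driver.

```python
import sqlite3

# Hypothetical schema for the four kinds of data listed in the text.
SCHEMA = """
CREATE TABLE movement_sample (          -- (i) 3D joint coordinates
    session_id INTEGER NOT NULL,
    t_ms       INTEGER NOT NULL,        -- timestamp in milliseconds
    joint      TEXT    NOT NULL,        -- e.g. 'ShoulderRight'
    x REAL, y REAL, z REAL
);
CREATE TABLE questionnaire_answer (     -- (ii) qualitative data
    patient_id    INTEGER NOT NULL,
    questionnaire TEXT    NOT NULL,     -- e.g. 'Borg'
    item          TEXT    NOT NULL,
    answer        TEXT    NOT NULL
);
CREATE TABLE exercise_video (           -- (iii) example videos
    exercise_id INTEGER PRIMARY KEY,
    uri         TEXT NOT NULL
);
CREATE TABLE clinical_comment (         -- (iv) comments by clinicians
    patient_id  INTEGER NOT NULL,
    author_role TEXT NOT NULL
        CHECK (author_role IN ('physiotherapist', 'physician')),
    body        TEXT NOT NULL
);
"""


def create_db() -> sqlite3.Connection:
    """Create an in-memory database with the hypothetical schema."""
    conn = sqlite3.connect(":memory:")
    conn.executescript(SCHEMA)
    return conn


conn = create_db()
conn.execute(
    "INSERT INTO movement_sample VALUES (?, ?, ?, ?, ?, ?)",
    (1, 0, "ShoulderRight", 0.21, 0.35, 2.48),
)
print(conn.execute("SELECT joint, z FROM movement_sample").fetchall())
# [('ShoulderRight', 2.48)]
```

Storing one row per joint per frame keeps the movement table simple to query by time range; videos are referenced by URI rather than stored as blobs, which is a common but not mandatory choice.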

2 Evaluation of the Vision-Based Motion Capture

2.1 Material and Method

Experimental Setup.

Four subjects participated in the experiment. They were asked to execute arm movements in two different planes (frontal and sagittal) and at two different speeds (regular and fast). Each movement was repeated three times. Thus, the experimental design for each subject was: 2 Planes × 2 Velocities × 3 Repetitions. The frontal plane movements consisted of a shoulder abduction without bending the elbow (Fig. 2, on the left). The abduction had to reach approximately 90°, leaving the arm in a horizontal position. This exercise was executed at fast (400–600 ms) and regular (650–850 ms) speed. The sagittal plane movements consisted of a shoulder flexion, also to about 90°, performed at fast and regular velocity (Fig. 2, on the right). During the movements, the subjects stood approximately 2.5 m in front of a Kinect V2 camera (Fig. 3, on the left). The Kinect was placed at the height of the subjects' xiphoid process. In addition, participants wore a 3-axis accelerometer developed by Kionix. This inertial motion sensor was located on the upper right arm (20–25 cm from the shoulder joint), with its y-axis aligned parallel to the arm.
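The within-subject design above can be enumerated directly, together with the two duration windows that define the speed conditions. This is a minimal sketch; the function names are hypothetical, and treating the window bounds as inclusive is an assumption.

```python
from itertools import product
from typing import Optional

# Factors of the within-subject design described in the text.
PLANES = ("frontal", "sagittal")   # shoulder abduction / shoulder flexion
SPEEDS = ("regular", "fast")
REPETITIONS = 3

# Target duration windows from the text, in ms (inclusive bounds assumed).
SPEED_WINDOWS_MS = {"fast": (400, 600), "regular": (650, 850)}


def trial_plan(subject: int) -> list[tuple[int, str, str, int]]:
    """Every (subject, plane, speed, repetition) combination for one subject."""
    return [
        (subject, plane, speed, rep)
        for plane, speed, rep in product(PLANES, SPEEDS, range(1, REPETITIONS + 1))
    ]


def classify_speed(duration_ms: float) -> Optional[str]:
    """Return the speed condition whose window contains the duration, else None."""
    for speed, (low, high) in SPEED_WINDOWS_MS.items():
        if low <= duration_ms <= high:
            return speed
    return None


plan = [trial for subject in range(1, 5) for trial in trial_plan(subject)]
print(len(plan))            # 48 (4 subjects x 12 trials)
print(classify_speed(520))  # fast
print(classify_speed(620))  # None: falls between the two windows
```

The 50 ms gap between the windows means a movement lasting, say, 620 ms matches neither condition and would have to be repeated.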

Fig. 2. The two arm movements as seen from the Kinect's perspective: shoulder abduction (on the left) and flexion (on the right). The red markers are the skeleton joints used, as retrieved from the Kinect SDK. The yellow marker is a translation of the mid-spine joint and facilitates the calculation of the angles. The positive inertial axes in the x and y directions are shown with the blue arrows.

Data Acquisition.

An Android application (SDK version 24) written in the Processing programming language (version 3.2.2) was developed to record the accelerometer data. The data were extracted with the ketai sensor library and then sent over Wi-Fi to a PC by means of OSC (Open Sound Control) messages. These messages included the raw sensor readings and the timestamp of each reading. The same PC recorded the Kinect skeleton data using the KinectPV2 library for Processing. A GUI was implemented to let the experimenter adjust (i) the minimum and maximum movement time and (ii) the sampling rate of the recording (Fig. 3, on the right). Pushing a start button emitted a sound that signaled the beginning of a trial to the participant. Meanwhile, the accelerometer and Kinect recordings were synchronized at a frame rate of approximately 33 Hz. After the stop button was pressed, the data were automatically examined and compared with the thresholds set for the condition, in order to provide instantaneous feedback on the correctness of the movement. If the movement was classified as correct, it was saved to a CSV file for later analysis.
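The acquisition step combines two timestamped streams, a duration check and a CSV export. One plausible way to reproduce this offline, assuming each stream is a list of `(t_ms, value)` pairs sorted by time, is nearest-timestamp matching. The function names, the 15 ms tolerance, approximating movement duration by the span of the recording, and the CSV columns are all hypothetical; the original Processing sketch may have synchronized the streams differently.

```python
import bisect
import csv
import io


def pair_by_timestamp(kinect, accel, max_gap_ms=15.0):
    """Pair each Kinect sample with the nearest-in-time accelerometer sample.

    Both inputs are lists of (t_ms, value) sorted by t_ms. Pairs whose time
    difference exceeds max_gap_ms are dropped.
    """
    accel_times = [t for t, _ in accel]
    pairs = []
    for t, k_val in kinect:
        i = bisect.bisect_left(accel_times, t)
        # Candidates: the accelerometer samples just before and just after t.
        candidates = [j for j in (i - 1, i) if 0 <= j < len(accel)]
        if not candidates:
            continue
        j = min(candidates, key=lambda idx: abs(accel_times[idx] - t))
        if abs(accel_times[j] - t) <= max_gap_ms:
            pairs.append((t, k_val, accel[j][1]))
    return pairs


def movement_is_valid(pairs, min_ms, max_ms):
    """Accept a trial only if its recorded span lies within [min_ms, max_ms]."""
    if len(pairs) < 2:
        return False
    duration = pairs[-1][0] - pairs[0][0]
    return min_ms <= duration <= max_ms


def to_csv(pairs):
    """Serialize paired samples to CSV text (header plus one row per pair)."""
    buf = io.StringIO()
    writer = csv.writer(buf)
    writer.writerow(["t_ms", "kinect_angle_deg", "accel_angle_deg"])
    writer.writerows(pairs)
    return buf.getvalue()


kinect = [(0, 10.0), (30, 20.0), (60, 30.0)]
accel = [(2, 11.0), (29, 19.0), (95, 40.0)]
pairs = pair_by_timestamp(kinect, accel)
print(pairs)  # [(0, 10.0, 11.0), (30, 20.0, 19.0)]
```

In this example the 60 ms Kinect frame is dropped because its nearest accelerometer sample is 31 ms away, beyond the assumed tolerance.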

Data Processing.

The accelerometer data represent the acceleration along each of the three spatial axes, from which the position of the arm can be deduced. However, the raw data also contain the effect of the centripetal acceleration, which was calculated and subtracted in order to obtain the true angular position of the limb. To measure the position of the arm with the Kinect, the neck joint of the skeleton was translated onto the right shoulder. The same translation vector was applied to the mid-spine joint, in order to calculate the angle of the arm relative to the body using Heron's formula, which gives the area A of a triangle with side lengths a, b and c:

$$A = \sqrt{s(s-a)(s-b)(s-c)}, \qquad s = \frac{a+b+c}{2}$$
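The text names Heron's formula and a centripetal correction but does not spell out how the angles follow from them, so the following is a minimal sketch under stated assumptions. For the Kinect, it assumes the triangle is formed by the right shoulder, an arm joint (the elbow is used here as a hypothetical choice) and the translated mid-spine point; since $ab\sin\theta = 2A$ and, by the law of cosines, $ab\cos\theta = (a^2+b^2-c^2)/2$, `atan2` of the two recovers the shoulder angle over the full 0–180° range. For the accelerometer, it assumes readings in m/s² that include gravity, a y-axis pointing along the arm toward the shoulder, and an x-axis in the plane of motion; only the centripetal term $\omega^2 r$ is removed, with $r = 0.225$ m taken from the 20–25 cm sensor placement, and tangential acceleration is ignored. All function names and sign conventions are illustrative.

```python
import math

Point = tuple[float, float, float]
G = 9.81  # gravitational acceleration, m/s^2


def kinect_arm_angle_deg(shoulder: Point, elbow: Point, mid_spine: Point) -> float:
    """Shoulder angle between the arm and the body line, via Heron's formula.

    `mid_spine` is assumed to be already translated by the neck-to-shoulder vector.
    """
    a = math.dist(shoulder, elbow)       # upper-arm side
    b = math.dist(shoulder, mid_spine)   # body reference side
    c = math.dist(elbow, mid_spine)      # side opposite the shoulder angle
    s = (a + b + c) / 2
    # Heron's formula; clamp at 0 because rounding can make the product slightly negative.
    area = math.sqrt(max(0.0, s * (s - a) * (s - b) * (s - c)))
    # a*b*sin(theta) = 2*area and a*b*cos(theta) = (a^2 + b^2 - c^2) / 2.
    return math.degrees(math.atan2(2 * area, (a * a + b * b - c * c) / 2))


def accel_arm_angle_deg(ax: float, ay: float, omega: float, r: float = 0.225) -> float:
    """Arm angle from the vertical after removing the centripetal term.

    Under the assumed axes, a sensor at angle theta reads about
    (g*sin(theta), g*cos(theta) + omega^2 * r) while rotating at omega rad/s.
    """
    return math.degrees(math.atan2(ax, ay - omega ** 2 * r))


def accel_arm_angles_deg(samples, dt: float, r: float = 0.225) -> list[float]:
    """Two passes: estimate omega from uncorrected tilt, then apply the correction."""
    raw = [math.atan2(ax, ay) for ax, ay in samples]
    angles = []
    for k, (ax, ay) in enumerate(samples):
        lo, hi = max(k - 1, 0), min(k + 1, len(raw) - 1)
        # Central difference where possible, one-sided at the ends.
        omega = (raw[hi] - raw[lo]) / ((hi - lo) * dt) if hi > lo else 0.0
        angles.append(accel_arm_angle_deg(ax, ay, omega, r))
    return angles


print(round(kinect_arm_angle_deg((0, 0, 0), (0.3, 0, 0), (0, -0.4, 0)), 6))  # 90.0
```

Using `atan2` instead of `asin(2A / ab)` avoids the ambiguity of the inverse sine above 90°, which matters if a patient overshoots the horizontal.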

Once the raw data had been processed, the angles obtained with the vision-based sensor could be compared with those obtained with the inertial sensor by calculating Pearson's correlation coefficient between the two data sources.
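For reference, Pearson's coefficient on two paired angle series can be computed as below. This is a plain-Python sketch of the standard formula, not the authors' analysis code; the sample angles in the usage lines are illustrative values, not data from the experiment. Python 3.10 and later also provide `statistics.correlation`, which computes the same quantity.

```python
import math
from typing import Sequence


def pearson_r(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson's correlation coefficient between two paired samples."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("x and y must have the same length (at least 2)")
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    # Covariance and variances without the 1/(n-1) factor, which cancels out.
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("r is undefined when one series is constant")
    return sxy / math.sqrt(sxx * syy)


accel = [0.0, 20.0, 45.0, 70.0, 88.0]   # illustrative angles (degrees)
kinect = [1.0, 22.0, 44.0, 72.0, 86.0]
print(round(pearson_r(accel, kinect), 3))
```

Because r measures linear association, a constant offset or scale factor between the two sensors leaves it unchanged; it quantifies how well one series tracks the other rather than their absolute difference.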

2.2 Results

Table 1 shows the correlation between the accelerometer and Kinect measurements for the four subjects and the different experimental conditions. For all conditions and subjects, r is higher than 0.9 (Mean = 0.96; SD = 0.03). This result indicates a very strong correlation between the measurements of the two motion capture devices. The coefficient is as high for abduction (Mean = 0.96; SD = 0.02) as for flexion (Mean = 0.96; SD = 0.03). However, it tends to be higher for regular movements (Mean = 0.98; SD = 0.01) than for fast movements (Mean = 0.95; SD = 0.04).

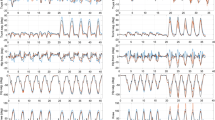

Figure 4 (left side) shows a typical example of the arm's angular movement over time, as monitored during the experiment. The accelerometer and Kinect recordings overlap closely. A plot of the angles captured by the inertial sensor against those captured by the vision sensor shows that the points lie almost perfectly along a regression line (Fig. 4, on the right).

Fig. 4. Left: an example of the arm's angle (in degrees) captured by the accelerometer (solid line) and the Kinect (dotted line) over time (in s), for shoulder flexion at regular speed. Right: correlation between the angles recorded by the inertial sensor (x-axis) and by the vision sensor (y-axis), with the linear regression shown as a dotted line.

3 Conclusions and Perspectives

The paper presents an innovative project based on a telemedicine platform to enhance motor rehabilitation after hip replacement surgery. A necessary condition for justifying the implementation of such a system is the use of low-cost technologies. The Kinect was identified as a potential tool for motion capture, but its validity and accuracy for the therapeutic purpose of the project had to be demonstrated. To do so, movements performed in the frontal and sagittal planes were simultaneously recorded by an accelerometer and a Kinect, the former being used as a reference to gauge the precision of the latter. The results show a very high correlation between the inertial and vision-based motion sensors, regardless of the plane in which the movement is executed. Movement velocity seems to have a slight effect on Pearson's coefficient: the faster the movement, the lower r. This can be explained by the relatively low frame rate of the Kinect (around 30 Hz). Nevertheless, the correlation remains high even for the fastest movements tested. These results are compatible with a physical rehabilitation program, in which patients are not expected to perform exercises at very high velocity. Overall, this study confirms that the Kinect is well suited to monitoring the patients' movements, and it will next be integrated into the ePHoRt platform.
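The frame-rate explanation can be made concrete with simple arithmetic. Assuming a nominal 30 Hz Kinect frame rate, movements of about 90°, and the duration windows given in the experimental setup, the number of frames N captured per movement of duration T and the mean angular change between consecutive frames are:

$$
N = T f, \qquad \bar{\delta} = \frac{90^\circ}{N}
$$

$$
\text{fast: } N = (0.40\text{--}0.60\ \text{s}) \times 30\ \text{Hz} = 12\text{--}18, \qquad \bar{\delta} \approx 5^\circ\text{--}7.5^\circ
$$

$$
\text{regular: } N = (0.65\text{--}0.85\ \text{s}) \times 30\ \text{Hz} = 19.5\text{--}25.5, \qquad \bar{\delta} \approx 3.5^\circ\text{--}4.6^\circ
$$

A fast movement is therefore sampled roughly 1.5 to 2 times more coarsely, so any residual timing mismatch between the two sensors plausibly translates into larger angular discrepancies per frame.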

References

1. Feys, H., De Weerdt, W., Verbeke, G., et al.: Early and repetitive stimulation of the arm can substantially improve the long-term outcome after stroke: a 5-year follow-up study of a randomized trial. Stroke 35(4), 924–929 (2004)

2. Cramp, M.C., Greenwood, R.J., Gill, M., et al.: Effectiveness of a community-based low intensity exercise programme for ambulatory stroke survivors. Disabil. Rehabil. 32(3), 239–247 (2010)

3. Mavroidis, C., Nikitczuk, J., Weinberg, B., et al.: Smart portable rehabilitation devices. J. Neuroeng. Rehabil. 2, 18 (2005). doi:10.1186/1743-0003-2-18

4. Holden, M.K., Dyar, T.A., Dayan-Cimadoro, L.: Telerehabilitation using a virtual environment improves upper extremity function in patients with stroke. IEEE Trans. Neural Syst. Rehabil. Eng. 15(1), 36–42 (2007)

5. Rand, D., Kizony, R., Weiss, P.T.L.: The Sony PlayStation II EyeToy: low-cost virtual reality for use in rehabilitation. J. Neurol. Phys. Ther. 32(4), 155–163 (2008)

6. Oikonomidis, I., Kyriazis, N., Argyros, A.A.: Efficient model-based 3D tracking of hand articulations using Kinect. In: Proceedings of the 22nd British Machine Vision Conference. University of Dundee (2011)

7. Rybarczyk, Y., Rybarczyk, P., Oliveira, N., Vernay, D.: e-ESPOIR: a user-friendly web-based tool for disability evaluation. In: Proceedings of the 11th Conference of the Association for the Advancement of Assistive Technology in Europe, Maastricht (2011)

8. Mendes, P., Rybarczyk, Y., Rybarczyk, P., Vernay, D.: A web-based platform for the therapeutic education of patients with physical disabilities. In: Proceedings of the 6th International Conference of Education, Research and Innovation, Seville (2013)

9. Rodrigues, F., Rybarczyk, Y., Gonçalves, M.J.: On the use of IT for treating aphasic patients: a 3D web-based solution. In: Proceedings of the 13th International Conference on Applications of Computer Engineering, Lisbon (2014)

10. Rybarczyk, Y., Fonseca, J.: Tangible interface for a rehabilitation of comprehension in aphasic patients. In: Proceedings of the 11th Conference of the Association for the Advancement of Assistive Technology in Europe, Maastricht (2011)

11. Birns, J., Bhalla, A., Rudd, A.: Telestroke: a concept in practice. Age Ageing 39(6), 666–667 (2010)

12. Nguyen, K.D., Chen, I.M., Luo, Z., et al.: A wearable sensing system for tracking and monitoring of functional arm movement. IEEE/ASME Trans. Mechatron. 16(2), 213–220 (2011)

13. Patel, S., Park, H., Bonato, P., et al.: A review of wearable sensors and systems with application in rehabilitation. J. Neuroeng. Rehabil. 9(1), 21–37 (2012)

14. Rand, D., Eng, J.J., Tang, P.F., et al.: How active are people with stroke? Use of accelerometers to assess physical activity. Stroke 40(1), 163–168 (2009)

15. Biswas, D., Cranny, A., Maharatna, K.: Body area sensing networks for remote health monitoring. In: Vogiatzaki, E., Krukowski, A. (eds.) Modern Stroke Rehabilitation through e-Health-Based Entertainment, pp. 85–136. Springer, Heidelberg (2016)

16. Jovanov, E., Milenkovic, A., Otto, C., De Groen, P.C.: A wireless body area network of intelligent motion sensors for computer assisted physical rehabilitation. J. Neuroeng. Rehabil. 2, 6–15 (2005)

17. Strath, S.J., Kaminsky, L.A., Ainsworth, B.E., et al.: Guide to the assessment of physical activity: clinical and research applications - a scientific statement from the American Heart Association. Circulation 128(20), 2259–2279 (2013)

18. Vernay, D., Edan, G., Moreau, T., Visy, J.M., Gury, C.: OSE: a single tool for evaluation and follow-up patients with relapsing-remitting multiple sclerosis. Multiple Sclerosis 12, suppl. 1 (2006)

19. Nilsdotter, A., Bremander, A.: Measures of hip function and symptoms. Arthritis Care Res. 63, 200–207 (2011)

20. Borg, G.A.: Psychophysical bases of perceived exertion. Med. Sci. Sports Exerc. 14(5), 377–381 (1982)

21. Gameiro, J., Cardoso, T., Rybarczyk, Y.: Kinect-Sign: teaching sign language to listeners through a game. In: Rybarczyk, Y., et al. (eds.) Innovative and Creative Developments in Multimodal Interaction Systems, pp. 141–159. Springer, Heidelberg (2014)

22. Rybarczyk, Y., Santos, J.: Motion integration in direction perception of biological motion. In: Proceedings of the 4th Asian Conference on Vision, Matsue (2006)

23. Dutta, T.: Evaluation of the Kinect sensor for 3-D kinematic measurement in the workplace. Appl. Ergonomics 43, 645–649 (2012)

24. Galna, B., Barry, G., Jackson, D., Mhiripiri, D., Olivier, P., Rochester, L.: Accuracy of the Microsoft Kinect sensor for measuring movement in people with Parkinson's disease. Gait Posture 39(4), 1062–1068 (2014)

25. Rybarczyk, Y.: 3D markerless motion capture: a low cost approach. In: Proceedings of the 4th World Conference on Information Systems and Technologies, Recife (2016)

26. Remondino, F., Roditakis, A.: 3D reconstruction of human skeleton from single images or monocular video sequences. In: Proceedings of Joint Pattern Recognition Symposium, Magdeburg (2003)

27. Krukowski, A., Vogiatzaki, E., Rodríguez, J.M.: Patient health record (PHR) system. In: Maharatna, K., et al. (eds.) Next Generation Remote Healthcare: A Practical System Design Perspective. Springer, New York (2013). Chap. 6


Copyright information

© 2017 Springer International Publishing AG


Cite this paper

Rybarczyk, Y., Deters, J.K., Gonzalvo, A.A., Gonzalez, M., Villarreal, S., Esparza, D. (2017). ePHoRt Project: A Web-Based Platform for Home Motor Rehabilitation. In: Rocha, Á., Correia, A., Adeli, H., Reis, L., Costanzo, S. (eds) Recent Advances in Information Systems and Technologies. WorldCIST 2017. Advances in Intelligent Systems and Computing, vol 570. Springer, Cham. https://doi.org/10.1007/978-3-319-56538-5_62


DOI: https://doi.org/10.1007/978-3-319-56538-5_62


Publisher Name: Springer, Cham

Print ISBN: 978-3-319-56537-8

Online ISBN: 978-3-319-56538-5
