Abstract

In this paper, an optimal dynamic combination model of automatic forecasts is proposed for corporate budgeting purposes. During the budgeting process, financial managers usually have to create forecasts for various economic quantities such as sales, the cost of goods sold, net earnings, etc. The task becomes considerably more complex when the various production lines and business segments of a firm are taken into account. In such an environment, it is very important for a forecasting model to be able to produce fast, reliable, accurate, and cost-effective forecasts on a timely basis. The purpose of this paper is to provide a forecasting methodology for budgeting purposes that incorporates all the desirable properties that financial managers expect of a forecasting model supporting a modern budgeting system. Our methodology uses various automatic forecasting models for univariate time series, whose forecasts are combined to produce a single optimal forecast for each financial quantity under consideration.

Introduction

Forecasting is probably the most important function of business, since it is the starting point for planning and budgeting. Traditionally, budgeting has been regarded as one of the most important financial and accounting functions (Ekholm and Wallin 2000; Tanlu 2007). In recent years, rapidly changing market conditions have made financial planning tools increasingly important for both managers and practitioners. However, traditional budgets have been criticized as a management control tool (Hope and Fraser 2003). In the relevant literature, a number of new tools have been proposed as replacements for traditional budgets, such as rolling forecasts and beyond budgeting (Bergstrand 2009; Bogsnes 2009). The purpose of rolling forecasts is to use the most recent data in order to make organizations more flexible and adaptable, so that they can cope with changing environments (Lorain 2010). An increasing number of companies are adopting rolling forecasts as part of the Beyond Budgeting model (Bogsnes 2009), mainly in order to become more adaptive and hence better support company planning and control processes (Hope and Fraser 2003).

Management typically operates under conditions of uncertainty or risk, and one of the fundamental objectives of forecasting is to reduce the risk and uncertainty of financial decisions. A variety of forecasting techniques is available, from which the analyst can choose the most appropriate one. However, in real business life the number of time series to be forecast is enormous and the forecasts have to be updated frequently, which makes building a forecasting model for each series by hand practically impossible. Therefore, automatic forecasts of large numbers of univariate time series are often needed in business (Leonard 2002).

When multiple forecasts are available for a target variable, forecast combination methods provide a simple and effective way to improve on the forecasting performance of the individual models. Further, they provide a simple way to deal with misspecified and unstable forecasters, small sample sizes, and structural breaks in the data (Huang and Lee 2010; Mandel and Sani 2016). Usually, forecast combinations outperform even the best individual forecaster. For example, combinations of forecasts have been applied, mostly with success, to forecasting interest rates (Guidolin and Timmermann 2009), the equity premium (Rapach et al. 2010), realized volatility (Patton and Sheppard 2009), and stock market returns (Nikolopoulos and Papakyriazis 2004). However, most existing combination models are designed for settings that ignore the complexity of real-world data. Several studies propose combination models capable of adapting to various environments and system instabilities (Aiolfi and Timmermann 2006; Nikolopoulos and Papakyriazis 2002; Smith and Wallis 2009; Tian and Anderson 2014).

In this paper, we apply a combination scheme of automatic forecasts to the non-trivial problem of forecasting monthly sales and cost of goods sold (COGS) for a manufacturing company. The scheme is based on a state-space representation in which the combination weights are estimated online by means of the Kalman filter.

Combining Automatic Forecasts

An automatic forecasting system can be used to fit various models automatically (e.g., exponential smoothing models, ARIMA, and dynamic regression models). Automatic forecasts may be used in cases where there is no experienced forecaster, the number of forecasts to be generated is large, the frequency of forecast updates is high, or the true model is not known or is difficult to identify (Leonard 2002). Combining a number of automatic forecasting models may produce superior forecasts, especially out of sample. The forecast package (Hyndman 2016) for the R system for statistical computing implements various automatic forecasting models. In the current work, two general automatic forecasting models are utilized, namely an exponential smoothing state-space model (ETS) and an autoregressive integrated moving average (ARIMA) model (Hyndman and Khandakar 2008; Hyndman et al. 2002). In particular, the ETS framework covers 15 exponential smoothing methods, such as simple exponential smoothing (N, N), Holt's linear method (A, N), etc. (Hyndman 2016; Hyndman and Khandakar 2008; Hyndman et al. 2002).
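As a concrete illustration, the Python sketch below (our own, not code from the forecast package) lists the 15 (trend, seasonal) combinations of the ETS taxonomy, with trend N, A, Ad, M, Md and seasonality N, A, M as in Hyndman and Khandakar (2008), and implements the simplest method, simple exponential smoothing (N, N). The fixed smoothing parameter `alpha` and the initialization of the level at the first observation are simplifying assumptions; ETS estimates both from the data.

```python
from itertools import product

# Trend: none, additive, additive damped, multiplicative, multiplicative damped
TRENDS = ["N", "A", "Ad", "M", "Md"]
# Seasonality: none, additive, multiplicative
SEASONALS = ["N", "A", "M"]

# The 15 exponential smoothing methods as (trend, seasonal) pairs
METHODS = list(product(TRENDS, SEASONALS))


def ses_forecast(y, alpha, h=1):
    """Simple exponential smoothing, method (N, N).

    The level starts at the first observation (an assumption; ETS estimates
    the initial level) and is updated as l_t = alpha * y_t + (1 - alpha) * l_{t-1}.
    The h-step-ahead forecast is flat at the final level.
    """
    if not 0 < alpha <= 1:
        raise ValueError("alpha must be in (0, 1]")
    level = y[0]
    for obs in y[1:]:
        level = alpha * obs + (1 - alpha) * level
    return [level] * h


print(len(METHODS))                                # 15
print(ses_forecast([10, 12, 14], alpha=0.5, h=2))  # [12.5, 12.5]
```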

The automatic ARIMA procedure identifies a seasonal ARIMA model of the form \( \text{ARIMA}(p, d, q)(P, D, Q)_{m} \). The three components (p, d, q) are the nonseasonal AR order, degree of differencing, and MA order; (P, D, Q) are the corresponding orders of the seasonal part of the model, and m is the number of periods per season (m = 12 for monthly data). To select (p, d, q, P, D, Q), the automatic function in R uses a variation of the Hyndman and Khandakar algorithm (Hyndman and Khandakar 2008), which combines unit root tests, minimization of the AICc (the small-sample corrected Akaike information criterion), and maximum likelihood estimation (MLE) to obtain an ARIMA model.
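The sketch below illustrates only the AICc criterion, not the full Hyndman and Khandakar search: it fits pure autoregressive AR(p) models with an intercept by ordinary least squares (a conditional Gaussian likelihood, used here as a simplification of exact MLE) to a simulated series and keeps the order with the smallest AICc. The function names and the simulated AR(1) data are hypothetical.

```python
import numpy as np


def aicc(loglik, k, n):
    """Small-sample corrected AIC: AIC + 2k(k + 1) / (n - k - 1), with AIC = -2 log L + 2k."""
    if n - k - 1 <= 0:
        raise ValueError("AICc requires n > k + 1")
    return -2.0 * loglik + 2.0 * k + 2.0 * k * (k + 1) / (n - k - 1)


def fit_ar_ols(y, p, max_p):
    """Fit AR(p) with intercept by OLS on the common sample y[max_p:].

    Using the same effective sample for every p keeps the AICc values
    comparable. Returns (Gaussian log-likelihood, number of parameters, n),
    where the parameters are the intercept, p AR coefficients and the variance.
    """
    y = np.asarray(y, dtype=float)
    target = y[max_p:]
    n = target.size
    columns = [np.ones(n)] + [y[max_p - lag:-lag] for lag in range(1, p + 1)]
    X = np.column_stack(columns)
    beta, *_ = np.linalg.lstsq(X, target, rcond=None)
    resid = target - X @ beta
    sigma2 = resid @ resid / n  # ML estimate of the noise variance
    loglik = -0.5 * n * (np.log(2.0 * np.pi * sigma2) + 1.0)
    return loglik, p + 2, n


def select_ar_order(y, max_p=4):
    """Return the AR order with the smallest AICc, together with all scores."""
    scores = {p: aicc(*fit_ar_ols(y, p, max_p)) for p in range(max_p + 1)}
    return min(scores, key=scores.get), scores


# Hypothetical example: simulate an AR(1) series and select its order
rng = np.random.default_rng(0)
noise = rng.normal(size=200)
y = np.zeros(200)
for t in range(1, 200):
    y[t] = 0.7 * y[t - 1] + noise[t]

best_p, scores = select_ar_order(y)
print(best_p, {p: round(s, 1) for p, s in scores.items()})
```

The correction term \( 2k(k+1)/(n-k-1) \) penalizes extra parameters more heavily when the sample is short, which matters for budgeting series such as the 36 monthly observations used later in the paper.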

For the combination of the forecasts, we propose a state-space representation in which a dynamic linear model combines the automatic forecasts in real time. A state-space model consists of an unobservable state process \( \theta_{0}, \theta_{1}, \theta_{2}, \ldots, \theta_{t}, \ldots \), which forms a Markov chain, and an observable process \( Y_{1}, Y_{2}, \ldots, Y_{t}, \ldots \), whose values are conditionally independent given the state. An important class of state-space models is the dynamic linear model, which is specified by three equations. Equation (1) is a normal prior distribution, with mean \( m_{0} \) and variance \( C_{0} \), for the p-dimensional state vector at time t = 0; Eq. (2) is called the observation equation and Eq. (3) the state (or system) equation:

\[ \theta_{0} \sim N_{p}(m_{0}, C_{0}) \tag{1} \]

\[ Y_{t} = F_{t}\,\theta_{t} + \upsilon_{t}, \qquad \upsilon_{t} \sim N_{m}(0, V_{t}) \tag{2} \]

\[ \theta_{t} = G_{t}\,\theta_{t-1} + w_{t}, \qquad w_{t} \sim N_{p}(0, W_{t}) \tag{3} \]

where \( G_{t} \) and \( F_{t} \) are known matrices (of order \( p \times p \) and \( m \times p \), respectively), and \( (\upsilon_{t})_{t \ge 1} \) and \( (w_{t})_{t \ge 1} \) are two independent sequences of independent Gaussian random vectors with mean zero and known variance matrices \( (V_{t})_{t \ge 1} \) and \( (W_{t})_{t \ge 1} \), respectively. Furthermore, \( \theta_{0} \) is assumed to be independent of \( (\upsilon_{t}) \) and \( (w_{t}) \) (Petris et al. 2009).

In this form, one can model nonlinear relationships between x and y, structural changes in the process under study, and the omission of some variables. For the optimality properties of the Kalman filter algorithm, the interested reader is referred to Kalman (1960), Gelb (1974), Hamilton (1994), and Nikolopoulos and Papakyriazis (2002).
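For reference, the Kalman filter recursions for the model in Eqs. (1)–(3), as given in Petris et al. (2009), are summarized below. Starting from the prior mean \( m_{0} \) and variance \( C_{0} \) of \( \theta_{0} \), and assuming \( \theta_{t-1} \mid y_{1:t-1} \sim N(m_{t-1}, C_{t-1}) \), each step computes the one-step-ahead predictive distributions of the state and of the observation, and then the filtered state:

\[
\begin{aligned}
&\theta_{t} \mid y_{1:t-1} \sim N(a_{t}, R_{t}), && a_{t} = G_{t} m_{t-1}, \quad R_{t} = G_{t} C_{t-1} G_{t}^{\prime} + W_{t}, \\
&Y_{t} \mid y_{1:t-1} \sim N(f_{t}, Q_{t}), && f_{t} = F_{t} a_{t}, \quad Q_{t} = F_{t} R_{t} F_{t}^{\prime} + V_{t}, \\
&\theta_{t} \mid y_{1:t} \sim N(m_{t}, C_{t}), && m_{t} = a_{t} + R_{t} F_{t}^{\prime} Q_{t}^{-1} e_{t}, \quad C_{t} = R_{t} - R_{t} F_{t}^{\prime} Q_{t}^{-1} F_{t} R_{t},
\end{aligned}
\]

where \( e_{t} = Y_{t} - f_{t} \) is the one-step-ahead forecast error. In a forecast combination setting, \( f_{t} \) plays the role of the combined forecast of \( Y_{t} \), available before \( Y_{t} \) is observed.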

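In our work, the dynamic linear regression model that is used to combine the automatic forecasts is described by

\[ Y_{t} = x_{t}^{\prime}\,\theta_{t} + \upsilon_{t}, \qquad \upsilon_{t} \sim N(0, V_{t}) \]

\[ \theta_{t} = G_{t}\,\theta_{t-1} + w_{t}, \qquad w_{t} \sim N_{p}(0, W_{t}) \]

where \( x_{t}^{\prime} = [x_{1,t}, \ldots, x_{p,t}] \) are the values of the p explanatory variables at time \( t \), here the automatic forecasts being combined, and \( \theta_{t} \) is the vector of combination weights. Setting \( G_{t} \) equal to the identity matrix and \( W_{t} \) diagonal corresponds to modeling the regression coefficients as independent random walks (Petris et al. 2009).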
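The Python sketch below (numpy only; not the author's implementation) runs the Kalman filter for this dynamic regression with \( G_{t} = I \) and diagonal W, producing a combined forecast for each period before the actual value is used and then updating the weights. The function name `dlm_combine`, the observation variance V, the diagonal of W, the equal-weight prior mean, the vague prior variance, and the absence of an intercept are illustrative assumptions; in practice V and W would be estimated, for example by maximum likelihood.

```python
import numpy as np


def dlm_combine(y, X, V, W_diag, m0=None, C0=None):
    """Kalman filter for y_t = x_t' theta_t + v_t, theta_t = theta_{t-1} + w_t.

    y: (T,) actual values; X: (T, p) forecasts of y_t from p models.
    V: observation variance; W_diag: diagonal of the state covariance W.
    V, W_diag, m0 and C0 are treated as known (illustrative assumption).
    Returns the one-step-ahead combined forecasts f_t, computed before y_t
    is used, and the filtered weights m_t after each update.
    """
    y = np.asarray(y, dtype=float)
    X = np.asarray(X, dtype=float)
    T, p = X.shape
    m = np.full(p, 1.0 / p) if m0 is None else np.asarray(m0, dtype=float)  # prior: equal weights
    C = 1e3 * np.eye(p) if C0 is None else np.asarray(C0, dtype=float)    # vague prior variance
    W = np.diag(np.asarray(W_diag, dtype=float))
    forecasts = np.empty(T)
    weights = np.empty((T, p))
    for t in range(T):
        x = X[t]
        # Prediction with G_t = I: a_t = m_{t-1}, R_t = C_{t-1} + W
        a = m
        R = C + W
        # Combined forecast f_t = x_t' a_t and its variance Q_t = x_t' R_t x_t + V
        f = x @ a
        Q = x @ R @ x + V
        forecasts[t] = f
        # Update once y_t is observed: m_t = a_t + R_t x_t (y_t - f_t) / Q_t
        gain = R @ x / Q
        m = a + gain * (y[t] - f)
        C = R - np.outer(gain, x @ R)
        weights[t] = m
    return forecasts, weights


# Hypothetical data: a random-walk target and two noisy forecasters
rng = np.random.default_rng(1)
T = 48
target = 100.0 + np.cumsum(rng.normal(0.0, 2.0, T))
X = np.column_stack([target + rng.normal(0.0, 3.0, T),
                     target + rng.normal(0.0, 6.0, T)])
f, w = dlm_combine(target, X, V=4.0, W_diag=[1e-4, 1e-4])
print(np.round(w[-1], 3))  # final filtered combination weights
```

Small diagonal entries in W let the weights drift slowly over time; setting them to zero would reduce the filter to recursive least squares with fixed weights.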

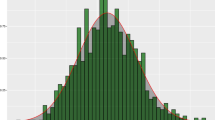

Application

In this section, we implement the automatic models presented in Sect. 2 and then combine their forecasts with a simple average model, an unrestricted linear regression model, and our proposed dynamic linear model. In our experiments, we utilize data from a Greek manufacturing company in the chemical sector. In particular, we use monthly data from January 2008 to December 2010, that is, 36 observations, for in-sample model estimation. The monthly data of 2011 are used for out-of-sample forecasting, forecast combination, and forecast evaluation. The estimated automatic models for sales are ARIMA(0, 0, 0)(1, 1, 0)[12] with drift and ETS(M, N, M), while the respective models for COGS are ARIMA(1, 1, 0) and ETS(M, A, N). The triplet (E, T, S) refers to the three components: error, trend, and seasonality. Thus, the model ETS(M, A, N) has multiplicative error, additive trend, and no seasonality, while the model ETS(M, N, M) has multiplicative error, no trend, and multiplicative seasonality. More information on the model descriptions and on measures of forecast accuracy can be found in Hyndman (2016) and Hyndman and Koehler (2006), respectively (Figs. 1 and 2).
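For comparison, the two benchmark combinations mentioned above can be sketched in Python as follows. The equal-weight average needs no estimation; for the unrestricted regression we assume an intercept plus free weights that are not forced to sum to one, estimated by least squares on a training window and then applied to a later window. The data are simulated stand-ins for two automatic forecasts (not the company data), and all names are hypothetical.

```python
import numpy as np


def rmse(actual, forecast):
    """Root mean squared error."""
    actual = np.asarray(actual, dtype=float)
    forecast = np.asarray(forecast, dtype=float)
    return float(np.sqrt(np.mean((actual - forecast) ** 2)))


def simple_average(X):
    """Equal-weight combination: mean across forecasters (columns) per period."""
    return np.asarray(X, dtype=float).mean(axis=1)


def ols_weights(y, X):
    """Unrestricted combination: intercept plus weights not constrained to sum to 1."""
    X = np.asarray(X, dtype=float)
    Z = np.column_stack([np.ones(len(X)), X])
    beta, *_ = np.linalg.lstsq(Z, np.asarray(y, dtype=float), rcond=None)
    return beta


def ols_combine(beta, X):
    """Apply the estimated intercept and weights to new forecasts."""
    return beta[0] + np.asarray(X, dtype=float) @ beta[1:]


# Hypothetical monthly series and two simulated forecasts of it
rng = np.random.default_rng(2)
actual = 50.0 + 10.0 * np.sin(2 * np.pi * np.arange(36) / 12)
X = np.column_stack([actual + rng.normal(0.0, 2.0, 36),
                     0.9 * actual + rng.normal(0.0, 2.0, 36)])
train, test = slice(0, 24), slice(24, 36)
beta = ols_weights(actual[train], X[train])
candidates = {
    "forecaster 1": X[test, 0],
    "forecaster 2": X[test, 1],
    "simple average": simple_average(X[test]),
    "unrestricted regression": ols_combine(beta, X[test]),
}
for name, fc in candidates.items():
    print(f"{name}: RMSE = {rmse(actual[test], fc):.2f}")
```

Unlike the dynamic linear model, both benchmarks use weights that stay fixed over the evaluation window.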

Conclusions

A dynamic linear model was applied for the combination of monthly sales and COGS forecasts. The combination of forecasts greatly reduced the model selection risk, and the out-of-sample performance of the proposed combination model was better than that of any other single or combined forecasting model applied in this work. Notably, in terms of RMSE, the proposed combination improves on the other forecasting models by 1 to 55 percentage points (see Tables 1 and 2). We expect the benefits of dynamic combination to be larger when more automatic forecasts are combined; this experiment is left for future work.
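The paper does not state exactly how the RMSE improvement is computed. One common choice, assumed here, is the relative reduction in RMSE with respect to a competing model, expressed in percent; the function name and the numbers in the example are hypothetical.

```python
def rmse_improvement_pct(rmse_other, rmse_proposed):
    """Relative RMSE reduction (in percent) of the proposed model versus another model."""
    if rmse_other <= 0:
        raise ValueError("rmse_other must be positive")
    return 100.0 * (rmse_other - rmse_proposed) / rmse_other


# Hypothetical RMSE values: a competing model at 10.0, the proposed combination at 8.0
print(rmse_improvement_pct(10.0, 8.0))  # 20.0
```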

References

Aiolfi, M., and A. Timmermann. 2006. Persistence in forecasting performance and conditional combination strategies. Journal of Econometrics 135 (1–2): 31–53.

Bergstrand, J. 2009. Accounting for management control. Studentlitteratur.

Bogsnes, B. 2009. Implementing beyond budgeting: Unlocking the performance potential. Wiley.

Ekholm, B.G., and J. Wallin. 2000. Is the annual budget really dead? European Accounting Review 9 (4): 519–539.

Gelb, A. 1974. Applied optimal estimation. MIT Press.

Guidolin, M., and A. Timmermann. 2009. Forecasts of US short-term interest rates: A flexible forecast combination approach. Journal of Econometrics 150 (2): 297–311.

Hamilton, J.D. 1994. Time series analysis, vol. 2. Princeton: Princeton University Press.

Hope, J., and R. Fraser. 2003. Beyond budgeting: How managers can break free from the annual performance trap. Harvard Business School Press.

Huang, H., and T.H. Lee. 2010. To combine forecasts or to combine information? Econometric Reviews 29 (5–6): 534–570.

Hyndman, R.J. 2016. Forecasting functions for time series and linear models, R package version 7.1. http://github.com/robjhyndman/forecast.

Hyndman, R.J., and Y. Khandakar. 2008. Automatic time series forecasting: The forecast package for R. Journal of Statistical Software 27 (3): 1–22.

Hyndman, R.J., and A.B. Koehler. 2006. Another look at measures of forecast accuracy. International Journal of Forecasting 22 (4): 679–688.

Hyndman, R.J., A.B. Koehler, R.D. Snyder, and S. Grose. 2002. A state space framework for automatic forecasting using exponential smoothing methods. International Journal of Forecasting 18 (3): 439–454.

Kalman, R.E. 1960. A new approach to linear filtering and prediction problems. Journal of Basic Engineering 82 (1): 35–45.

Leonard, M. 2002. Large-scale automatic forecasting: millions of forecasts. In International Symposium of Forecasting, 156.

Lorain, M.A. 2010. Should rolling forecasts replace budgets in uncertain environments? vol. 20, 177–208. Elsevier.

Mandel, A., and A. Sani. 2016. Learning time-varying forecast combinations. Documents de travail du Centre d’Economie de la Sorbonne 2016, 36. https://halshs.archives-ouvertes.fr/halshs-01317974/.

Nikolopoulos, S.D., and P. Papakyriazis. 2002. On dynamic model combination. In 5th Hellenic-European Conference on Computer Mathematics and its Applications, 627–638.

Nikolopoulos, S.D., and P. Papakyriazis. 2004. Improved market forecast with optimal dynamic combiners. In 6th Hellenic-European Conference on Computer Mathematics and its Applications, 774–781.

Patton, A.J., and K. Sheppard. 2009. Optimal combinations of realised volatility estimators. International Journal of Forecasting 25 (2): 218–238.

Petris, G., S. Petrone, and P. Campagnoli. 2009. Dynamic linear models with R. New York: Springer.

Rapach, D., J. Strauss, and G. Zhou. 2010. Out-of-sample equity premium prediction: Combination forecasts and links to the real economy. Review of Financial Studies 23 (2): 821–862.

Smith, J., and K.F. Wallis. 2009. A simple explanation of the forecast combination puzzle. Oxford Bulletin of Economics and Statistics 71 (3): 331–355.

Tanlu, L. 2007. Does the adoption of rolling forecasts improve planning? An empirical investigation of the consequences of rolling forecasts. Social Science Research Network. http://papers.ssrn.com/sol3/Delivery.cfm?abstractid=1004125.

Tian, J., and H.M. Anderson. 2014. Forecast combinations under structural break uncertainty. International Journal of Forecasting 30 (1): 161–175.

Copyright information

© 2017 Springer International Publishing AG

About this paper

Cite this paper

Nikolopoulos, S.D. (2017). Dynamic Combination of Automatic Forecasts for Corporate Budgeting. In: Kavoura, A., Sakas, D., Tomaras, P. (eds) Strategic Innovative Marketing. Springer Proceedings in Business and Economics. Springer, Cham. https://doi.org/10.1007/978-3-319-56288-9_15

DOI: https://doi.org/10.1007/978-3-319-56288-9_15

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-56287-2

Online ISBN: 978-3-319-56288-9

eBook Packages: Business and Management, Business and Management (R0)