Abstract

In a semi-aggregative representation of a game, the payoff of a player depends on the player’s own strategy and on a personalized aggregate of all players’ strategies. Suppose that each player has a conjecture about the reaction of the personalized aggregate to a change in the player’s own strategy. The players play an equilibrium given their conjectures, and evolution selects conjectures that lead to a higher payoff in such an equilibrium. Considering one player role, I show that for any conjectures of the other players, only conjectures that are consistent can be evolutionarily stable, where consistency means that the conjecture is, to a first approximation, correct at equilibrium. I illustrate this result in public good games and contests.

Paper prepared for a volume honoring the memory of Richard Cornes. In his time at the University of Nottingham, Richard was a helpful colleague, ready to give advice in his usual witty and entertaining manner.


1 Introduction

Aggregative games are a special class of games in which the payoff of a player depends on the player’s own strategy and on an aggregate of players’ strategies that is common across players. An early example of work on aggregative games is Corchón (1994), but in a more recent series of works, Richard Cornes and Roger Hartley elucidated the usefulness of studying the mathematical structure of these games for establishing equilibrium existence and for finding equilibria in situations going beyond textbook symmetric examples. They applied this methodology to such classic examples of economic analysis as public good games (Cornes and Hartley 2007) and contests (Cornes and Hartley 2003, 2005),Footnote 1 and studied the general structure of aggregative games further (Cornes and Hartley 2012).

Before turning his attention to aggregative games, Richard also worked on applications of the concept of conjectural variations. This concept was extensively analyzed in the context of industrial organization games (see e.g. Laitner 1980; Bresnahan 1981; Perry 1982); its application to a common property exploitation model was considered in Cornes and Sandler (1983) and to public good games in Cornes and Sandler (1984a).

In this paper, I also focus on a representation of games that is similar to the aggregative one and on conjectural variations. The representation is such that a player’s payoff depends on the player’s strategy and on a certain aggregate of all players’ strategies, personalized for the player. Thus the aggregates do not have to be the same for all players, as they are in a usual aggregative game. Nevertheless, the aggregates fulfill a similar role of reducing the dimensionality of what a player needs to consider about the other players. In such a representation (which I call semi-aggregative), what is relevant for the player is how the aggregate measure of players’ strategies possibly changes. This is precisely what the conjectures of the players in such a game are about.

Given their conjectures about possible changes in the respective aggregates, the players in the game behave rationally, that is, they maximize their payoff. Their decisions characterize an equilibrium for the given conjectures. But where do the conjectures come from? I suppose that they represent players’ innate beliefs, but those beliefs are subject to evolution. Different conjectures will lead to different equilibrium choices and thus different payoffs. From the point of view of evolution, those conjectures that lead to a higher payoff are more likely to propagate.Footnote 2

I focus on the setting where a game is not necessarily symmetric, thus players can have different roles (for example, one player can have a larger marginal benefit from a public good than another player, or a lower cost of contributing to it). Since roles are different, evolution is considered as happening within each role separately. Instead of considering an explicit dynamic process, I look for evolutionarily stable conjectures, which are conjectures that no other conjectures can invade by achieving a higher payoff for this player’s role, given the conjectures of the other players and the equilibrium that the players play.

I find that the evolutionary stability of conjectures is linked to their consistency. An equilibrium in the model is at the intersection of the reaction functions of the players, which also define the reaction of the aggregates. If a player’s strategy changes, for whatever reason, the reaction functions determine how the other players (optimally) change their strategies, and thus how the aggregates change. Conjectures are considered consistent if the belief of a player locally coincides, to a first approximation, with the actual change in the player’s personalized aggregate. The main result of the paper is that, in well-behaved games, only consistent conjectures of a player can be evolutionarily stable for this player.

The result extends the link between consistent and evolutionarily stable conjectures. Previous works noted this connection in simple duopoly models (Dixon and Somma 2003; Müller and Normann 2005), in two-player games (Possajennikov 2009) and in symmetric aggregative games (Possajennikov 2015). What I add in this paper is that the link between consistent and evolutionarily stable conjectures holds in more general n-player asymmetric situations. Thus it is not only that evolution selects consistent conjectures when other players’ conjectures are consistent; for any conjectures of the other players, it is best, from the evolutionary point of view, to have a consistent conjecture.

This result is illustrated on two examples of games that were often the subject of Richard Cornes’s work and that are aggregative or naturally semi-aggregative, namely public good games and contests. In these settings, I show that for many parameter values consistent and evolutionarily stable conjectures coincide, thus consistency is not only a necessary but also a sufficient condition for evolutionary stability.

2 Games and Conjectures

2.1 Semi-aggregative Representation of Games

A simultaneous-move game on the real line is \(G = (N,\{X_{i}\}_{i=1}^{n},\{u_{i}\}_{i=1}^{n})\), where \(N =\{ 1,\ldots,n\}\) is the set of players, \(X_{i} \subset \mathbb{R}\) is the strategy set of player i, and \(u_{i}: X_{1} \times \ldots \times X_{n} \rightarrow \mathbb{R}\) is the payoff function of player i. It is assumed that the game is well-behaved: strategy sets are convex and the payoff functions are differentiable as many times as required.

For any game, the payoff of player i can be written as \(u_{i}(x_{i},A_{i})\), where \(A_{i} = f_{i}(x_{1},\ldots,x_{n})\) for some function \(f_{i}: X_{1} \times \ldots \times X_{n} \rightarrow \mathbb{R}\).Footnote 3 I call the representation of the payoffs in the form \(u_{i}(x_{i},A_{i})\) semi-aggregative, since \(A_{i}\) can be seen as a personalized aggregate of player i, which summarizes the dependence of the payoff of player i on the strategies of other players. Note that the aggregate \(A_{i}\) can include the strategy \(x_{i}\) of player i. A game is aggregative if there exists a semi-aggregative representation with \(A = A_{i}\) for all i, i.e. with the same function \(f_{i}\) for all players and a common aggregate \(A\).

While in general games the payoff representation discussed above may appear strange, there are classes of games for which a (semi-)aggregative representation is natural. For example, in a differentiated product oligopoly, the price \(p_{i}(q_{1},\ldots,q_{n})\) for the product of firm i is determined by the inverse demand from the quantities chosen by all firms. This price can then naturally be taken as the personalized aggregate of firm i. The payoff for firm i is the profit \(\pi_{i}(q_{i},p_{i}) = p_{i}q_{i} - C_{i}(q_{i})\), where \(C_{i}(q_{i})\) is the cost function of firm i.Footnote 4
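A minimal Python sketch of this representation may help fix ideas. The linear inverse demand \(p_{i} = a - q_{i} - s\sum_{j\neq i}q_{j}\), the linear cost \(C_{i}(q_{i}) = c_{i}q_{i}\), and the names `price_aggregate` and `profit` are illustrative assumptions, not part of the model in the text.

```python
def price_aggregate(i, q, a=10.0, s=0.5):
    """Personalized aggregate of firm i: its own price p_i(q_1, ..., q_n).

    Assumed (hypothetical) linear inverse demand:
    p_i = a - q_i - s * sum_{j != i} q_j.
    """
    others = sum(q) - q[i]
    return a - q[i] - s * others


def profit(q_i, p_i, c_i=1.0):
    """Semi-aggregative payoff pi_i(q_i, p_i) = p_i * q_i - C_i(q_i).

    The linear cost C_i(q_i) = c_i * q_i is an assumption for illustration.
    """
    return p_i * q_i - c_i * q_i


q = [2.0, 3.0, 1.0]            # quantities of three firms
p0 = price_aggregate(0, q)     # depends on all quantities
pi0 = profit(q[0], p0)         # depends only on the pair (q_0, p_0)
```

The payoff function takes only two arguments, the firm's own quantity and its price, which is the dimensionality reduction the representation is meant to deliver.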

For another example, consider a (pure) public good game. Each player i contributes a part \(x_{i}\) of the endowment \(m_{i}\) to the public good, leaving \(m_{i} - x_{i}\) for private good consumption. The aggregate production of the public good is \(A =\sum_{i=1}^{n}x_{i}\). Player i’s payoff is given by the utility function \(u_{i}(m_{i} - x_{i},A)\), which is already a semi-aggregative representation. In fact, with pure public goods the game is properly aggregative, since the aggregate amount of public good \(A\) is the same for all players; there is no need for a personalized aggregate for each player.

The advantage of the semi-aggregative representation is the reduction in the dimensionality of the problem. In a sense, a player sees his or her opponents as one aggregate opponent and is only concerned about the aggregate effect of such an opponent on payoff. In the next section I discuss how this can be used to formulate in a simple manner players’ expectations about the behavior of other players.

2.2 Conjectures and Conjectural Variation Equilibria

Suppose that player i has a conjecture \(r_{i}\) about the reaction of other players to a change in the player’s own strategy. With the semi-aggregative representation of the game, the conjecture is about the change in the personalized aggregate, \(r_{i} = \left (\frac{dA_{i}} {dx_{i}} \right )^{e}\), where the superscript e is meant to convey that it is an expected, rather than actual, change. It is assumed that the conjectures are constant, \(r_{i} \in R_{i}\), where \(R_{i}\) is a convex subset of \(\mathbb{R}\), i.e. conjectures do not depend on the current strategies of players. This assumption again reduces the dimensionality of the problem while still allowing consideration of consistent conjectures.

A change in player i’s own strategy \(x_{i}\) can also directly affect the aggregate \(A_{i} = f_{i}(x_{1},\ldots,x_{n})\). But the conjecture is about the total effect of a change in \(x_{i}\) on \(A_{i}\): it incorporates the direct effect \(\frac{\partial A_{i}} {\partial x_{i}}\) but also the effect from the expected changes in the other players’ strategies. This formulation is slightly more general than the one with the aggregate being a function of the other players’ strategies only, as was used in e.g. Perry (1982) for oligopoly and in Cornes and Sandler (1984a) for a public good model. It can still represent the usual Nash behavior: \(r_{i} = \frac{\partial A_{i}} {\partial x_{i}}\) means that the strategies of the other players are kept fixed; player i does not expect the other players to react.
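As a short worked illustration, take the pure public good aggregate \(A =\sum_{j}x_{j}\) introduced earlier. The symbol \((dx_{j}/dx_{i})^{e}\) for the reaction of player j expected by player i is notation assumed here for the illustration only.

```latex
\[
A = \sum_{j=1}^{n} x_{j}
\quad\Longrightarrow\quad
\frac{\partial A}{\partial x_{i}} = 1,
\qquad
r_{i} = \left(\frac{dA}{dx_{i}}\right)^{e}
      = 1 + \sum_{j \neq i}\left(\frac{dx_{j}}{dx_{i}}\right)^{e}.
\]
```

Under this reading, Nash behavior (no expected reactions) corresponds to \(r_{i} = 1\), a conjecture \(0 < r_{i} < 1\) means that player i expects a partial offset of an increase in \(x_{i}\), and \(r_{i} = 0\) means that a full offset is expected.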

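Having conjecture \(r_{i}\), player i maximizes payoff \(u_{i}(x_{i},A_{i})\). The first-order condition for maximization is

\[
F_{i}(x_{i},A_{i};r_{i}) = \frac{\partial u_{i}} {\partial x_{i}}(x_{i},A_{i}) + \frac{\partial u_{i}} {\partial A_{i}}(x_{i},A_{i})\,r_{i} = 0. \tag{1}
\]

Suppose now that all players have certain conjectures, summarized by the vector \(\mathbf{r} = (r_{1},\ldots,r_{n})\). Suppose further that for each player i, the solution of the player’s maximization problem is characterized by Eq. (1). A conjectural variation equilibrium (CVE) for the given vector \(\mathbf{r}\) of conjectures consists of the vector of players’ strategies \(\mathbf{x}^{\ast}(\mathbf{r}) = (x_{1}^{\ast}(\mathbf{r}),\ldots,x_{n}^{\ast}(\mathbf{r}))\) and the vector of personalized aggregates \(\mathbf{A}^{\ast}(\mathbf{r}) = (A_{1}^{\ast}(\mathbf{r}),\ldots,A_{n}^{\ast}(\mathbf{r}))\) that satisfy the system of equations

\[
\begin{aligned}
F_{i}(x_{i},A_{i};r_{i}) &= 0, & i &= 1,\ldots,n,\\
A_{i} - f_{i}(x_{1},\ldots,x_{n}) &= 0, & i &= 1,\ldots,n.
\end{aligned} \tag{2}
\]

It is assumed that a solution of this system of equations exists for the values of conjectures in the sets \(R_{i}\). There may be multiple solutions of the system; in the analysis below I consider any particular solution that is locally unique and well-behaved.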

Although conjectures are about changes in a player’s strategy and reactions to them, the conjectural variation equilibrium is a static concept. However, it can be interpreted as a convenient short-cut summarizing the result of a more explicit dynamic analysis,Footnote 5 and this is the interpretation I have in mind by focusing on CVE in this paper.

2.3 Consistent Conjectures

Recall that a conjecture of player i is a belief about the change in the personalized aggregate \(A_{i}\) in response to a change in player i’s strategy \(x_{i}\). To define consistent conjectures, let \(x_{i}\) vary unconstrained and concentrate on optimal responses of the other players. Consider the system of equations

\[
\begin{aligned}
F_{j}(x_{j},A_{j};r_{j}) &= 0, & j &\neq i,\\
A_{j} - f_{j}(x_{1},\ldots,x_{n}) &= 0, & j &= 1,\ldots,n,
\end{aligned} \tag{3}
\]

which is like system (2) except that the first-order condition for player i is not there. Thus, the strategy \(x_{i}\) of player i is not constrained to be optimal; it can take any value. The strategies of the other players are still characterized by the first-order conditions; thus the system describes optimal responses of the other players to arbitrary values of \(x_{i}\). Denote a solution of system (3) as \((x_{1}^{\ast\ast}(x_{i}),\ldots,x_{i-1}^{\ast\ast}(x_{i}),x_{i+1}^{\ast\ast}(x_{i}),\ldots,x_{n}^{\ast\ast}(x_{i});A_{1}^{\ast\ast}(x_{i}),\ldots,A_{n}^{\ast\ast}(x_{i}))\).

Consider a vector of conjectures \(\mathbf{r}\) and a certain CVE \((\mathbf{x}^{\ast},\mathbf{A}^{\ast}) = (x_{1}^{\ast},\ldots,x_{n}^{\ast};A_{1}^{\ast},\ldots,A_{n}^{\ast})\) for these conjectures. Note that for \(x_{i} = x_{i}^{\ast}\) there exists a solution of system (3) with \(x_{j}^{\ast\ast}(x_{i}^{\ast}) = x_{j}^{\ast}\) for all j ≠ i and \(A_{j}^{\ast\ast}(x_{i}^{\ast}) = A_{j}^{\ast}\) for all j = 1, …, n. Consider such a solution and consider \(A_{i}^{\ast\ast}(x_{i})\). Conjecture \(r_{i}\) of player i is consistent if \(r_{i} = \frac{dA_{i}^{{\ast}{\ast}}} {dx_{i}} (\mathbf{x^{{\ast}}},\mathbf{A^{{\ast}}})\), i.e. the conjecture about the reaction of the personalized aggregate is, to a first approximation, correct at equilibrium.Footnote 6

Whether a particular conjecture \(r_{i}\) is consistent depends on the vector of conjectures \(\mathbf{r}_{-i}\) of the other players. Given a vector \(\mathbf{r}\), it is possible that some players hold consistent conjectures and others do not. One can define conjectures to be mutually consistent if for all i, \(r_{i}\) is consistent against \(\mathbf{r}_{-i}\). However, for the analysis of the conjectures of player i it will not be important what conjectures the other players hold, and thus I do not focus only on mutually consistent conjectures.

3 Evolutionary Stability of Conjectures

Imagine that for each of the n player roles there is a large (infinite) population of players, and players from each population are from time to time called to play the game G against opponents randomly drawn from the other populations. Consider the population for the role of player i. Each player in the population has some conjecture. Suppose that in all other player populations conjectures have stabilized on some values \(\mathbf{r}_{-i}\). Thus, if called to play, a player with a certain conjecture \(r_{i}\) from the population of players i will play the game against the other players with conjectures \(\mathbf{r}_{-i}\). Suppose that when the game is played, a CVE is played. The question is: for the given conjectures \(\mathbf{r}_{-i}\) of the other players, which conjecture of player i is evolutionarily stable?

Different conjectures in the population for the role of player i will lead to different CVEs and thus to different payoffs. Conjecture \(r_{i}^{ES}\) is said to be evolutionarily stable (Maynard Smith and Price 1973; Selten 1980) if

\[
u_{i}\left(x_{i}^{\ast}(r_{i}^{ES},\mathbf{r}_{-i}),A_{i}^{\ast}(r_{i}^{ES},\mathbf{r}_{-i})\right) > u_{i}\left(x_{i}^{\ast}(r_{i},\mathbf{r}_{-i}),A_{i}^{\ast}(r_{i},\mathbf{r}_{-i})\right)\quad \text{for all } r_{i}\neq r_{i}^{ES}.
\]

The above inequality means that in the population for the role of player i, a player with conjecture \(r_{i}^{ES}\) will get a higher payoff when called to play than a player with any other value \(r_{i}\) of the conjecture. The evolutionary intuition is that players with any other conjecture \(r_{i}\) in the population for the role of player i would have lower fitness than the players with conjecture \(r_{i}^{ES}\). Therefore evolution will favor players with conjecture \(r_{i}^{ES}\) to survive and thrive.Footnote 7

With the alternative interpretation that players first choose their conjectures and then play a CVE of the game G, an evolutionarily stable conjecture of player i is a strict best response of player i to the given conjectures of the other players. If a vector of conjectures \(\mathbf{r}^{ES} = (r_{1}^{ES},\ldots,r_{n}^{ES})\) is such that for each player i the conjecture \(r_{i}^{ES}\) is evolutionarily stable given \(\mathbf{r}_{-i}^{ES}\), then \((r_{1}^{ES},\ldots,r_{n}^{ES})\) is a strict Nash equilibrium in the game where players choose conjectures and their payoffs are determined via conjectural variation equilibria.

Whatever the interpretation, an evolutionarily stable conjecture solves

\[
\max_{r_{i}\in R_{i}}\ u_{i}\left(x_{i}^{\ast}(r_{i},\mathbf{r}_{-i}),A_{i}^{\ast}(r_{i},\mathbf{r}_{-i})\right).
\]

The first-order condition for maximization isFootnote 8

\[
\frac{\partial u_{i}} {\partial x_{i}} \frac{\partial x_{i}^{\ast}} {\partial r_{i}} + \frac{\partial u_{i}} {\partial A_{i}} \frac{dA_{i}^{\ast}} {dr_{i}} = 0.
\]

Therefore (provided that \(\frac{\partial u_{i}} {\partial A_{i}}\neq 0\) and \(\frac{\partial x_{i}^{{\ast}}} {\partial r_{i}} \neq 0\)), \(-\frac{\partial u_{i}/\partial x_{i}} {\partial u_{i}/\partial A_{i}} = \frac{dA_{i}^{{\ast}}/dr_{ i}} {\partial x_{i}^{{\ast}}/\partial r_{i}}\). Since from Eq. (1) \(r_{i} = -\frac{\partial u_{i}/\partial x_{i}} {\partial u_{i}/\partial A_{i}}\), an interior evolutionarily stable conjecture satisfies

\[
r_{i}^{ES} = \frac{dA_{i}^{\ast}/dr_{i}} {\partial x_{i}^{\ast}/\partial r_{i}}. \tag{4}
\]

Speaking somewhat loosely in mathematical terms, if \(dr_{i} = \partial r_{i}\) is treated as a small change in the independent variable \(r_{i}\), then it can be canceled from (4). Note also that \(dx_{i} = dx_{i}^{\ast} = \partial x_{i}^{\ast}\) if only \(r_{i}\) changes. Therefore \(r_{i}^{ES} = \frac{dA_{i}^{{\ast}}} {dx_{i}}\). Recall that a conjecture is consistent if \(r_{i} = \frac{dA_{i}^{{\ast}{\ast}}} {dx_{i}}\). Since at a CVE \(A_{i}^{\ast\ast}(x_{i}^{\ast}) = A_{i}^{\ast}\), the first-order condition for evolutionary stability and the consistency condition are essentially the same.Footnote 9

For a more formal demonstration of the reasoning, consider system (2). To simplify notation, focus on i = 1. Differentiating each line of (2) with respect to \(r_{1}\),

Define

and

If \(\vert M\vert \neq 0\), by Cramer’s rule, \(\frac{\partial x_{1}^{{\ast}}} {\partial r_{1}} = \frac{1} {\vert M\vert }\left (-\frac{\partial F_{1}} {\partial r_{1}} \right )\vert M_{-11}\vert \) and \(\frac{\partial A_{1}^{{\ast}}} {\partial r_{1}} = \frac{1} {\vert M\vert }(-1)^{n}\frac{\partial F_{1}} {\partial r_{1}} \vert M_{-1A}\vert \). Therefore, if \(\vert M_{-11}\vert \neq 0\) (from Eq. (1), \(\frac{\partial F_{1}} {\partial r_{1}} = \frac{\partial u_{1}} {\partial A_{1}}\), thus \(\frac{\partial F_{1}} {\partial r_{1}} \neq 0\) if \(\frac{\partial u_{1}} {\partial A_{1}}\neq 0\)), Eq. (4) becomes

\[
r_{1}^{ES} = \frac{(-1)^{n-1}\vert M_{-1A}\vert } {\vert M_{-11}\vert }. \tag{5}
\]

To determine \(\frac{dA_{1}^{{\ast}{\ast}}} {dx_{1}}\), consider system (3). Differentiating each of the equations with respect to \(x_{1}\),

Define

and

Then \(\vert L_{-11}\vert = (-1)^{n}\vert M_{-11}\vert \) and \(\vert L_{-1A}\vert = -\vert M_{-1A}\vert \). Using Cramer’s rule again, \(\frac{dA_{1}^{{\ast}{\ast}}} {dx_{1}} = \frac{1} {\vert L_{-11}\vert }\vert L_{-1A}\vert = \frac{-\vert M_{-1A}\vert } {(-1)^{n}\vert M_{-11}\vert } = \frac{(-1)^{n-1}\vert M_{ -1A}\vert } {\vert M_{-11}\vert }\), which is the same as the right-hand side of Eq. (5). Thus, the following proposition is proved:

Proposition 1

Consider a semi-aggregative representation of the game G and a conjecture profile \(\mathbf{r} = (r_{1},\ldots,r_{n})\). Suppose that there exists a CVE \((\mathbf{x}^{\ast}(\mathbf{r}),\mathbf{A}^{\ast}(\mathbf{r}))\) for this \(\mathbf{r}\). If \(\frac{\partial u_{i}} {\partial A_{i}}\neq 0\), \(\vert M\vert \neq 0\) and \(\vert M_{-11}\vert \neq 0\) at \(\mathbf{r}\) and \((\mathbf{x}^{\ast}(\mathbf{r}),\mathbf{A}^{\ast}(\mathbf{r}))\), then any evolutionarily stable conjecture \(r_{i}\) for player i is a consistent conjecture for player i.

Since the analysis was based only on the first-order condition for evolutionary stability, it is not necessarily the case that a consistent conjecture is evolutionarily stable. Concavity or quasi-concavity conditions on the indirect payoff function \(u_{i}(x_{i}^{\ast}(\mathbf{r}),A_{i}^{\ast}(\mathbf{r}))\) can guarantee this. Instead of stating these conditions in general, evolutionary stability of consistent conjectures is demonstrated for particular games in Sect. 4.

The practical usefulness of the result is that it is usually easier to find consistent conjectures than to derive the indirect function to search for the evolutionarily stable ones. Since the result shows that in well-behaved games only consistent conjectures can be evolutionarily stable in the interior of the conjecture space, the search for evolutionarily stable conjectures can be reduced to the consistent ones.

The conceptual usefulness of the result is to provide foundations for consistent conjectures. Consistency of conjectures is not always accepted as a plausible criterion for preferring some conjectures over others.Footnote 10 The result in this paper shows, though, that if players are endowed with conjectures that are subject to evolutionary pressure (or, equivalently, if players could choose conjectures before playing the game), then only consistent conjectures can survive such a process.

Note that the proof of the result concentrated on player i, while taking arbitrary conjectures held by the other players. The conjectures of the other players may or may not be consistent; if one wants all players to have evolutionarily stable conjectures, then only profiles with mutually consistent conjectures can be such. The result shows that it is best for player i to have consistent conjectures whatever the conjectures of the other players are (but which value of the conjecture is consistent, and thus possibly evolutionarily stable, for player i depends on the current conjectures of the other players).Footnote 11

The current result generalizes the previous ones in Possajennikov (2009, 2015) to asymmetric games with more than two players. In principle, the games do not even need to have an obvious aggregative structure: what was used is that the players make conjectures about the appropriate quantity \(A_{i}\) that is relevant for their payoff. In general, the function \(f_{i}\) determining this quantity may be complicated and thus it is not likely that the players would consider conjectures about it; however, in some games, illustrated in the next section, the aggregate quantity \(A_{i}\) arises naturally in the formulation of the problem.

4 Examples

4.1 Semi-public Good Games

Cornes and Sandler (1984a,b) explored the public good model, including the impact of various conjectures and the possibility of impure public goods, where a player’s contribution to a public good also provides a private benefit. I will use instead the formulation of semi-public goods from Costrell (1991) that models the same idea—that a player benefits more from his or her own contribution to a public good than the other players do—in a more transparent manner. The formulation also encompasses a pure public good model.

Suppose that each player i has a money endowment \(m_{i}\) that can be spent either on a private good or on a semi-public good. Assuming for simplicity that prices of all goods are equal and normalizing the price to 1, \(m_{i} = y_{i} + x_{i}\), where \(y_{i}\) is the amount spent on the private good and \(x_{i}\) the amount spent on the public good. Player i has the utility function \(u_{i}(y_{i},G_{i})\), where \(G_{i}\) is the quantity of the public good available to player i. The semi-public nature of the public good is modeled by \(G_{i} = x_{i} + b_{i}\sum_{j\neq i}x_{j}\), where \(0 < b_{i} \leq 1\). Player i benefits most from his or her own contribution to the public good, but other players’ contributions also spill over to player i’s benefit. Quantity \(G_{i}\) naturally plays the role of the personalized aggregate for player i.Footnote 12

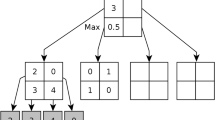

To illustrate the result in the previous section, consider the three-player case (n = 3) and Cobb-Douglas utility functions for all players

\[
u_{i}(y_{i},G_{i}) = y_{i}^{\alpha_{i}}G_{i}^{1-\alpha_{i}}
\]

with \(0 <\alpha_{i} < 1\). Suppose that each player i has conjecture \(r_{i} \geq 0\). Player i’s first-order condition for utility maximization is \(-\alpha _{i}(m_{i} - x_{i})^{\alpha _{i}-1}G_{i}^{1-\alpha _{i}} + (1 -\alpha _{i})(m_{i} - x_{i})^{\alpha _{i}}G_{i}^{-\alpha _{i}}r_{i} = 0\), or, in the interior where \(x_{i}\neq m_{i}\) and \(G_{i}\neq 0\), \(-\alpha_{i}G_{i} + (1 -\alpha_{i})(m_{i} - x_{i})r_{i} = 0\). Therefore

\[
F_{i}(x_{i},G_{i};r_{i}) = -\alpha_{i}G_{i} + (1 -\alpha_{i})(m_{i} - x_{i})r_{i} = 0
\]

characterizes the solution of player i’s utility maximization problem.Footnote 13

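To find consistent conjectures for player 1, consider the system

\[
\begin{aligned}
-\alpha_{2}G_{2} + (1 -\alpha_{2})(m_{2} - x_{2})r_{2} &= 0,\\
-\alpha_{3}G_{3} + (1 -\alpha_{3})(m_{3} - x_{3})r_{3} &= 0,\\
G_{1} - x_{1} - b_{1}(x_{2} + x_{3}) &= 0,\\
G_{2} - x_{2} - b_{2}(x_{1} + x_{3}) &= 0,\\
G_{3} - x_{3} - b_{3}(x_{1} + x_{2}) &= 0.
\end{aligned}
\]

Substituting the last three equations into the first two,

\[
\begin{aligned}
((1 -\alpha_{2})r_{2} +\alpha_{2})x_{2} +\alpha_{2}b_{2}x_{3} &= (1 -\alpha_{2})m_{2}r_{2} -\alpha_{2}b_{2}x_{1},\\
\alpha_{3}b_{3}x_{2} + ((1 -\alpha_{3})r_{3} +\alpha_{3})x_{3} &= (1 -\alpha_{3})m_{3}r_{3} -\alpha_{3}b_{3}x_{1}.
\end{aligned}
\]

If \(b_{j} < 1\), j = 2, 3, then the solution of these two equations is guaranteed to exist. It is

\[
\begin{aligned}
x_{2}^{\ast\ast}(x_{1}) &= \frac{((1 -\alpha_{3})r_{3} +\alpha_{3})\left((1 -\alpha_{2})m_{2}r_{2} -\alpha_{2}b_{2}x_{1}\right) -\alpha_{2}b_{2}\left((1 -\alpha_{3})m_{3}r_{3} -\alpha_{3}b_{3}x_{1}\right)} {((1 -\alpha_{2})r_{2} +\alpha_{2})((1 -\alpha_{3})r_{3} +\alpha_{3}) -\alpha_{2}b_{2}\alpha_{3}b_{3}},\\
x_{3}^{\ast\ast}(x_{1}) &= \frac{((1 -\alpha_{2})r_{2} +\alpha_{2})\left((1 -\alpha_{3})m_{3}r_{3} -\alpha_{3}b_{3}x_{1}\right) -\alpha_{3}b_{3}\left((1 -\alpha_{2})m_{2}r_{2} -\alpha_{2}b_{2}x_{1}\right)} {((1 -\alpha_{2})r_{2} +\alpha_{2})((1 -\alpha_{3})r_{3} +\alpha_{3}) -\alpha_{2}b_{2}\alpha_{3}b_{3}}.
\end{aligned}
\]

Since \(G_{1}^{\ast\ast}(x_{1}) = x_{1} + b_{1}x_{2}^{\ast\ast}(x_{1}) + b_{1}x_{3}^{\ast\ast}(x_{1})\), the consistent conjecture is

\[
r_{1}^{C} = 1 - b_{1}\frac{\alpha_{2}b_{2}((1 -\alpha_{3})r_{3} +\alpha_{3}(1 - b_{3})) +\alpha_{3}b_{3}((1 -\alpha_{2})r_{2} +\alpha_{2}(1 - b_{2}))} {((1 -\alpha_{2})r_{2} +\alpha_{2})((1 -\alpha_{3})r_{3} +\alpha_{3}) -\alpha_{2}b_{2}\alpha_{3}b_{3}}.
\]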

This consistent conjecture is the unique candidate to be evolutionarily stable. Note that the consistent conjecture is less than unity, meaning that player 1 (correctly) expects the other players to partially offset an increase in his or her contribution to the public good. This exacerbates the inefficiency of the private provision of the good.
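The consistency calculation can be checked numerically. The sketch below, with function names chosen here for illustration, solves the two interior first-order conditions of players 2 and 3 for a given \(x_{1}\) and differentiates \(G_{1}^{\ast\ast}(x_{1})\) by a central difference. The parameter values m = 1, b = 0.5, α = 0.5 and \(r_{2} = r_{3} = 1\) are the ones used in the numerical illustration later in this section, where the consistent conjecture of player 1 is reported as 0.8.

```python
def others_response(x1, r2, r3, alpha, b, m):
    """Optimal (x2, x3) for a given x1 from the interior first-order conditions
    -alpha_j*G_j + (1 - alpha_j)*(m_j - x_j)*r_j = 0, j = 2, 3,
    with G_j = x_j + b_j * (sum of the other players' contributions)."""
    a2, a3 = alpha[1], alpha[2]
    b2, b3 = b[1], b[2]
    k2 = (1 - a2) * r2 + a2
    k3 = (1 - a3) * r3 + a3
    rhs2 = (1 - a2) * m[1] * r2 - a2 * b2 * x1
    rhs3 = (1 - a3) * m[2] * r3 - a3 * b3 * x1
    det = k2 * k3 - a2 * b2 * a3 * b3
    # Cramer's rule for the 2x2 linear system in (x2, x3)
    x2 = (rhs2 * k3 - a2 * b2 * rhs3) / det
    x3 = (k2 * rhs3 - a3 * b3 * rhs2) / det
    return x2, x3


def aggregate_g1(x1, r2, r3, alpha, b, m):
    """Personalized aggregate G_1 when players 2 and 3 respond optimally to x1."""
    x2, x3 = others_response(x1, r2, r3, alpha, b, m)
    return x1 + b[0] * (x2 + x3)


def consistent_conjecture_1(r2, r3, alpha, b, m, x1=0.25, h=1e-6):
    """Central-difference estimate of dG_1**/dx_1 (G_1** is linear in x1 here)."""
    up = aggregate_g1(x1 + h, r2, r3, alpha, b, m)
    down = aggregate_g1(x1 - h, r2, r3, alpha, b, m)
    return (up - down) / (2 * h)


alpha = (0.5, 0.5, 0.5)
b = (0.5, 0.5, 0.5)
m = (1.0, 1.0, 1.0)
r1_c = consistent_conjecture_1(1.0, 1.0, alpha, b, m)
```

Because the responses of players 2 and 3 are linear in \(x_{1}\) in this example, the point at which the derivative is evaluated does not matter.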

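A CVE of the game is characterized by the equations

\[
\begin{aligned}
-\alpha_{i}G_{i} + (1 -\alpha_{i})(m_{i} - x_{i})r_{i} &= 0, & i &= 1,2,3,\\
G_{i} - x_{i} - b_{i}\sum_{j\neq i}x_{j} &= 0, & i &= 1,2,3.
\end{aligned}
\]

Substituting the last three equations into the first three, the system becomes

\[
((1 -\alpha_{i})r_{i} +\alpha_{i})x_{i} +\alpha_{i}b_{i}\sum_{j\neq i}x_{j} = (1 -\alpha_{i})m_{i}r_{i},\qquad i = 1,2,3.
\]

Let

\[
M = \begin{pmatrix}
(1 -\alpha_{1})r_{1} +\alpha_{1} & \alpha_{1}b_{1} & \alpha_{1}b_{1}\\
\alpha_{2}b_{2} & (1 -\alpha_{2})r_{2} +\alpha_{2} & \alpha_{2}b_{2}\\
\alpha_{3}b_{3} & \alpha_{3}b_{3} & (1 -\alpha_{3})r_{3} +\alpha_{3}
\end{pmatrix},
\]

and let \(M_{i}\) denote the matrix \(M\) with its i-th column replaced by the vector \(((1 -\alpha_{1})m_{1}r_{1},(1 -\alpha_{2})m_{2}r_{2},(1 -\alpha_{3})m_{3}r_{3})^{T}\).

Then \(x_{1}^{{\ast}} = \frac{\vert M_{1}\vert } {\vert M\vert }\), \(x_{2}^{{\ast}} = \frac{\vert M_{2}\vert } {\vert M\vert }\), \(x_{3}^{{\ast}} = \frac{\vert M_{3}\vert } {\vert M\vert }\) and \(G_{1}^{{\ast}} = \frac{\vert M_{1}\vert } {\vert M\vert } + b_{1}\frac{\vert M_{2}\vert +\vert M_{3}\vert } {\vert M\vert }\).

Evolutionarily stable conjectures of player 1 are found from the problem

\[
\max_{r_{1}}\ (m_{1} - x_{1}^{\ast}(\mathbf{r}))^{\alpha_{1}}(G_{1}^{\ast}(\mathbf{r}))^{1-\alpha_{1}}.
\]

The first-order condition for maximization is

\[
-\alpha_{1}(m_{1} - x_{1}^{\ast})^{\alpha_{1}-1}(G_{1}^{\ast})^{1-\alpha_{1}}\frac{dx_{1}^{\ast}} {dr_{1}} + (1 -\alpha_{1})(m_{1} - x_{1}^{\ast})^{\alpha_{1}}(G_{1}^{\ast})^{-\alpha_{1}}\frac{dG_{1}^{\ast}} {dr_{1}} = 0.
\]

Since in a CVE \(-\alpha_{i}G_{i} + (1 -\alpha_{i})(m_{i} - x_{i})r_{i} = 0\), the condition simplifies to

\[
-r_{1}\frac{dx_{1}^{\ast}} {dr_{1}} + \frac{dG_{1}^{\ast}} {dr_{1}} = 0. \tag{6}
\]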

Consider \(\frac{dx_{1}^{{\ast}}} {dr_{1}} = \frac{1} {\vert M\vert ^{2}}\left (\frac{\partial \vert M_{1}\vert } {\partial r_{1}} \vert M\vert -\vert M_{1}\vert \frac{\partial \vert M\vert } {\partial r_{1}} \right )\). Since \(\frac{\partial \vert M_{1}\vert } {\partial r_{1}} = m_{1}(1 -\alpha _{1})((1 -\alpha _{2})r_{2} +\alpha _{2})((1 -\alpha _{3})r_{3} +\alpha _{3}) - m_{1}(1 -\alpha _{1})\alpha _{2}b_{2}\alpha _{3}b_{3}\) and \(\frac{\partial \vert M\vert } {\partial r_{1}} = (1 -\alpha _{1})((1 -\alpha _{2})r_{2} +\alpha _{2})((1 -\alpha _{3})r_{3} +\alpha _{3}) - (1 -\alpha _{1})\alpha _{2}b_{2}\alpha _{3}b_{3}\),

\[
\frac{dx_{1}^{\ast}} {dr_{1}} = \frac{((1 -\alpha_{2})r_{2} +\alpha_{2})((1 -\alpha_{3})r_{3} +\alpha_{3}) -\alpha_{2}b_{2}\alpha_{3}b_{3}} {\vert M\vert ^{2}}\,\vert K_{1}\vert ,
\]

where \(\vert K_{1}\vert =\alpha_{1}(1 -\alpha_{1})\big(m_{1}\big((1 -\alpha_{2})r_{2}(1 -\alpha_{3})r_{3} + (1 -\alpha_{2})r_{2}\alpha_{3}(1 - b_{1}b_{3}) +\alpha_{2}(1 -\alpha_{3})r_{3}(1 - b_{1}b_{2}) +\alpha_{2}\alpha_{3}(1 - b_{2}b_{3} - b_{1}b_{3} - b_{1}b_{2} + 2b_{1}b_{2}b_{3})\big) + b_{1}\big(((1 -\alpha_{2})r_{2} +\alpha_{2}(1 - b_{2}))m_{3}(1 -\alpha_{3})r_{3} + m_{2}(1 -\alpha_{2})r_{2}((1 -\alpha_{3})r_{3} +\alpha_{3}(1 - b_{3}))\big)\big) > 0\).

Consider now \(\frac{dG_{1}^{{\ast}}} {dr_{1}} = \frac{dx_{1}^{{\ast}}} {dr_{1}} + b_{1}\left (\frac{dx_{2}^{{\ast}}} {dr_{1}} + \frac{dx_{3}^{{\ast}}} {dr_{1}} \right )\). Since \(\frac{dx_{2}^{{\ast}}} {dr_{1}} = \frac{1} {\vert M\vert ^{2}}\left (\frac{\partial \vert M_{2}\vert } {\partial r_{1}} \vert M\vert -\vert M_{2}\vert \frac{\partial \vert M\vert } {\partial r_{1}} \right )\) and \(\frac{\partial \vert M_{2}\vert } {\partial r_{1}} = (1-\alpha _{1})m_{2}(1-\alpha _{2})r_{2}((1-\alpha _{3})r_{3}+\alpha _{3})+m_{1}(1-\alpha _{1})\alpha _{2}b_{2}\alpha _{3}b_{3}-(1-\alpha _{1})\alpha _{2}b_{2}m_{3}(1-\alpha _{3})r_{3}-m_{1}(1-\alpha _{1})\alpha _{2}b_{2}((1-\alpha _{3})r_{3}+\alpha _{3})\),

Analogously,

Therefore, \(-r_{1}\frac{dx_{1}^{{\ast}}} {dr_{1}} + \frac{dG_{1}^{{\ast}}} {dr_{1}} = 0\) is equivalent to

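and thus a candidate evolutionarily stable conjecture is

\[
r_{1}^{ES} = 1 - b_{1}\frac{\alpha_{2}b_{2}((1 -\alpha_{3})r_{3} +\alpha_{3}(1 - b_{3})) +\alpha_{3}b_{3}((1 -\alpha_{2})r_{2} +\alpha_{2}(1 - b_{2}))} {((1 -\alpha_{2})r_{2} +\alpha_{2})((1 -\alpha_{3})r_{3} +\alpha_{3}) -\alpha_{2}b_{2}\alpha_{3}b_{3}},
\]

the same as the consistent conjecture.

Now note that the left-hand side of the first-order condition (6) is positive for \(r_{1} < r_{1}^{ES}\) and negative for \(r_{1} > r_{1}^{ES}\). Thus \(r_{1}^{ES}\) is indeed evolutionarily stable.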
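The stability claim can be illustrated with a brute-force search. The sketch below is an illustration under stated assumptions rather than part of the formal argument: it solves the linear CVE system \(((1-\alpha_{i})r_{i}+\alpha_{i})x_{i} + \alpha_{i}b_{i}\sum_{j\neq i}x_{j} = (1-\alpha_{i})m_{i}r_{i}\) by Cramer's rule and evaluates player 1's Cobb-Douglas payoff \((m_{1}-x_{1})^{\alpha_{1}}G_{1}^{1-\alpha_{1}}\) on a grid of conjectures. The parameter values (m = 1, b = 0.5, α = 0.5, \(r_{2} = r_{3} = 1\)), the grid, and all function names are choices made here for the example.

```python
def det3(a):
    """Determinant of a 3x3 matrix given as nested lists."""
    return (a[0][0] * (a[1][1] * a[2][2] - a[1][2] * a[2][1])
            - a[0][1] * (a[1][0] * a[2][2] - a[1][2] * a[2][0])
            + a[0][2] * (a[1][0] * a[2][1] - a[1][1] * a[2][0]))


def public_good_cve(r, alpha, b, m):
    """Interior CVE (x, G) of the three-player semi-public good game."""
    n = 3
    # Row i: ((1-alpha_i) r_i + alpha_i) on the diagonal, alpha_i b_i elsewhere
    M = [[(1 - alpha[i]) * r[i] + alpha[i] if i == j else alpha[i] * b[i]
          for j in range(n)] for i in range(n)]
    rhs = [(1 - alpha[i]) * m[i] * r[i] for i in range(n)]
    d = det3(M)
    x = []
    for k in range(n):
        # M_k: matrix M with column k replaced by the right-hand side
        Mk = [[rhs[i] if j == k else M[i][j] for j in range(n)]
              for i in range(n)]
        x.append(det3(Mk) / d)
    G = [x[i] + b[i] * (sum(x) - x[i]) for i in range(n)]
    return x, G


def payoff_1(r1, r_others=(1.0, 1.0), alpha=(0.5, 0.5, 0.5),
             b=(0.5, 0.5, 0.5), m=(1.0, 1.0, 1.0)):
    """Player 1's payoff in the CVE induced by conjecture r1."""
    x, G = public_good_cve((r1,) + tuple(r_others), alpha, b, m)
    return (m[0] - x[0]) ** alpha[0] * G[0] ** (1 - alpha[0])


# Grid restricted to conjectures for which x_1 >= 0 in this example
grid = [0.4 + 0.001 * k for k in range(1101)]
best_r1 = max(grid, key=payoff_1)
```

For these parameter values the grid maximizer sits at the consistent conjecture of player 1, which the numerical illustration below reports as 0.8.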

Proposition 2

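If the parameters of the semi-public good game of this section are such that for given \(r_{j}\), \(r_{k}\) and consistent

\[
r_{i}^{C} = 1 - b_{i}\frac{\alpha_{j}b_{j}((1 -\alpha_{k})r_{k} +\alpha_{k}(1 - b_{k})) +\alpha_{k}b_{k}((1 -\alpha_{j})r_{j} +\alpha_{j}(1 - b_{j}))} {((1 -\alpha_{j})r_{j} +\alpha_{j})((1 -\alpha_{k})r_{k} +\alpha_{k}) -\alpha_{j}b_{j}\alpha_{k}b_{k}}
\]

the CVE \((\mathbf{x}^{\ast}(\mathbf{r}),\mathbf{A}^{\ast}(\mathbf{r}))\) is interior, then conjecture \(r_{i}^{C}\) is evolutionarily stable for player i.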

To illustrate the proposition, consider first the symmetric case \(m_{1} = m_{2} = m_{3} = m\), \(\alpha_{1} =\alpha_{2} =\alpha_{3} =\alpha\), \(b_{1} = b_{2} = b_{3} = b\). It is then natural to expect conjectures to be symmetric too, \(r_{1} = r_{2} = r_{3} = r\). The (mutual) consistency condition then reduces to \(r = 1 -\frac{2\alpha b^{2}(\alpha +(1-\alpha )r-\alpha b)} {(\alpha +(1-\alpha )r)^{2}-\alpha ^{2}b^{2}} = 1 - \frac{2\alpha b^{2}} {\alpha +(1-\alpha )r+\alpha b}\). This holds if \((1 -\alpha )r^{2} + (2\alpha +\alpha b - 1)r +\alpha (2b^{2} - b - 1) = 0\). For r = 0, the left-hand side is \(\alpha (2b^{2} - b - 1) < 0\) for 0 < b < 1; for r = 1, the left-hand side is \(2\alpha b^{2} > 0\). For positive values of conjectures there is thus one consistent \(r^{C} \in (0,1)\), confirming the result in Costrell (1991) that consistent conjectures correspond to negative reactions, i.e. if a player increases his or her contributions, the other players decrease theirs.Footnote 14
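The symmetric consistency condition is a quadratic in r, so the mutually consistent conjecture can be computed directly. The following sketch (the function name and the parameter values α = b = 0.5 are chosen here for illustration) takes the larger root, which is the positive one when 0 < b < 1, and checks it against the fixed-point form of the condition.

```python
import math


def symmetric_consistent_conjecture(alpha, b):
    """Positive root of (1-alpha) r^2 + (2 alpha + alpha b - 1) r
    + alpha (2 b^2 - b - 1) = 0, assuming 0 < alpha < 1 and 0 < b < 1."""
    qa = 1 - alpha
    qb = 2 * alpha + alpha * b - 1
    qc = alpha * (2 * b * b - b - 1)   # negative for 0 < b < 1
    disc = qb * qb - 4 * qa * qc       # positive because qa > 0 > qc
    return (-qb + math.sqrt(disc)) / (2 * qa)


alpha, b = 0.5, 0.5
r_c = symmetric_consistent_conjecture(alpha, b)

# Residual of the fixed-point form r = 1 - 2 alpha b^2 / (alpha + (1-alpha) r + alpha b)
residual = r_c - (1 - 2 * alpha * b ** 2 / (alpha + (1 - alpha) * r_c + alpha * b))
```

For α = b = 0.5 the root is approximately 0.78, inside the interval (0, 1) as the sign argument above requires.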

The proposition can be used to find consistent and evolutionarily stable conjectures also for cases that are asymmetric either in parameters or conjectures. For example, consider symmetric values of parameters m = 1, b = 0.5 and α = 0.5. If players 2 and 3 have the (Nash) conjectures \(r_{2} = r_{3} = 1\), then the consistent conjecture for player 1 is \(r_{1}^{C} = 0.8\) (and it is evolutionarily stable because the CVE for these conjectures is interior). Table 1 shows the numerical calculations to find (mutually) consistent conjectures for some particular values of the parameters, and it also shows that the CVEs for these conjectures are interior. Therefore the consistent conjectures in Table 1 are also evolutionarily stable. Note that as the parameter α increases, less weight is put in the utility function on the public good; mutually consistent conjectures then decrease and so do contributions to the public good. The last line in the table shows that asymmetries between players should not be too large for an interior solution to exist; if the parameter \(\alpha_{1}\) were increased further, \(x_{1}^{\ast}\) would become 0 and the propositions would cease to apply.

4.2 Contests

Consider rent-seeking contests introduced in Tullock (1980) and investigated using the techniques for aggregative games in Cornes and Hartley (2003, 2005). Each player i contributes a costly effort \(x_{i} \geq 0\) and can win a prize of value V with probability \(\frac{x_{i}} {x_{1}+\ldots +x_{n}}\). Each player i’s payoff function is thus given by

\[
u_{i}(x_{i},A) = \frac{x_{i}} {A}V - c_{i}x_{i},
\]

where \(A = x_{1} +\ldots +x_{n}\) is the aggregate.Footnote 15 This aggregate is the same for all players; the game is truly aggregative. The game can still be asymmetric though, represented by possibly different marginal costs \(c_{i}\) of the players.

Consider player i with conjecture \(r_{i} \geq 0\). The first-order condition for player i’s payoff maximization problem is \(F_{i} = \frac{A-x_{i}r_{i}} {A^{2}} V - c_{i} = 0\), or \(\frac{1} {A^{2}} (V (A - x_{i}r_{i}) - c_{i}A^{2}) = 0\). Writing \(A = x_{i} + A_{-i}\), the first-order condition becomes \(\frac{1} {A^{2}} \left(-c_{i}x_{i}^{2} + ((1 - r_{i})V - 2c_{i}A_{-i})x_{i} + (V - c_{i}A_{-i})A_{-i}\right) = 0\). The left-hand side is negative as \(x_{i} \rightarrow \infty\) and positive at \(x_{i} = 0\) if \(V - c_{i}A_{-i} > 0\). In this case, the equation

\[
V (A - x_{i}r_{i}) - c_{i}A^{2} = 0 \tag{7}
\]

characterizes the choice of player i.

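Consider again for illustration the case of three players (n = 3). The system describing a CVE is

\[
\begin{aligned}
V (A - x_{i}r_{i}) - c_{i}A^{2} &= 0, & i &= 1,2,3,\\
A - x_{1} - x_{2} - x_{3} &= 0
\end{aligned}
\]

(since there is only one aggregate, there is only one additional accounting equation). To solve the system, from the first three equations \(x_{i} = \frac{A} {r_{i}V }(V - c_{i}A)\). Therefore \(A - \frac{A} {r_{1}V }(V - c_{1}A) - \frac{A} {r_{2}V }(V - c_{2}A) - \frac{A} {r_{3}V }(V - c_{3}A) = 0\), or \(r_{1}r_{2}r_{3}V^{3} - r_{2}r_{3}V^{2}(V - c_{1}A) - r_{1}r_{3}V^{2}(V - c_{2}A) - r_{1}r_{2}V^{2}(V - c_{3}A) = 0\). Thus

\[
A^{\ast} = \frac{r_{2}r_{3} + r_{1}r_{3} + r_{1}r_{2} - r_{1}r_{2}r_{3}} {r_{2}r_{3}c_{1} + r_{1}r_{3}c_{2} + r_{1}r_{2}c_{3}}\,V. \tag{8}
\]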
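A small numerical check of this solution step, as a sketch: solving the last relation above, which is linear in A, gives \(A^{\ast} = \frac{r_{2}r_{3}+r_{1}r_{3}+r_{1}r_{2}-r_{1}r_{2}r_{3}}{r_{2}r_{3}c_{1}+r_{1}r_{3}c_{2}+r_{1}r_{2}c_{3}}V\), and the efforts follow from \(x_{i} = \frac{A}{r_{i}V}(V - c_{i}A)\). The function name, the requirement that all conjectures be strictly positive, and the example values are assumptions made here.

```python
def contest_cve(r, c, V=1.0):
    """CVE (x, A) of the three-player contest, assuming all r_i > 0.

    A solves r1 r2 r3 V - sum_i (prod_{j != i} r_j) (V - c_i A) = 0,
    and x_i = A (V - c_i A) / (r_i V) comes from each first-order condition.
    """
    r1, r2, r3 = r
    c1, c2, c3 = c
    num = r2 * r3 + r1 * r3 + r1 * r2 - r1 * r2 * r3
    den = r2 * r3 * c1 + r1 * r3 * c2 + r1 * r2 * c3
    A = num * V / den
    x = [A * (V - ci * A) / (ri * V) for ri, ci in zip(r, c)]
    return x, A


r = (0.5, 1.0, 1.0)
c = (1.0, 1.0, 1.0)
x, A = contest_cve(r, c)

# Residuals of the first-order conditions V (A - x_i r_i) - c_i A^2 = 0 (V = 1)
foc = [1.0 * (A - xi * ri) - ci * A ** 2 for xi, ri, ci in zip(x, r, c)]
```

In this example the efforts sum to the aggregate and all three first-order conditions hold, so the closed form indeed describes a CVE.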

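To find consistent conjectures of player 1, consider the system

\[
\begin{aligned}
V (A - x_{2}r_{2}) - c_{2}A^{2} &= 0,\\
V (A - x_{3}r_{3}) - c_{3}A^{2} &= 0,\\
A - x_{1} - x_{2} - x_{3} &= 0.
\end{aligned}
\]

Solving for \(x_{2}\) and \(x_{3}\) from the first two equations and substituting into the third one gives \(A - x_{1} - \frac{A} {r_{2}V }(V - c_{2}A) - \frac{A} {r_{3}V }(V - c_{3}A) = 0\), or \((r_{3}c_{2} + r_{2}c_{3})A^{2} + (r_{2}r_{3} - r_{2} - r_{3})V A - r_{2}r_{3}V x_{1} = 0\). Using the implicit function theorem,

\[
\frac{dA^{\ast\ast}} {dx_{1}} = \frac{r_{2}r_{3}V } {2(r_{3}c_{2} + r_{2}c_{3})A^{\ast\ast} + (r_{2}r_{3} - r_{2} - r_{3})V }.
\]

Since \(A^{\ast\ast}(x_{1}^{\ast}) = A^{\ast}\), \(\frac{dA^{{\ast}{\ast}}} {dx_{1}} (x_{1}^{{\ast}})\) can be found using \(A^{\ast}\). Rearranging, the consistent conjecture satisfies

\[
r_{1}\left(2(r_{3}c_{2} + r_{2}c_{3})A^{\ast} + (r_{2}r_{3} - r_{2} - r_{3})V \right) = r_{2}r_{3}V,
\]

with \(A^{\ast}\) given by Eq. (8). Simplifying this expression leads to \((c_{2}r_{1}r_{3} - c_{1}r_{2}r_{3} + c_{3}r_{1}r_{2})(r_{2}r_{3} + r_{1}r_{3} + r_{1}r_{2} - r_{1}r_{2}r_{3}) = 0\). If \(r_{2}r_{3} + r_{1}r_{3} + r_{1}r_{2} - r_{1}r_{2}r_{3} = 0\), then \(A^{\ast} = 0\) and thus \(x_{1}^{\ast} = x_{2}^{\ast} = x_{3}^{\ast} = 0\), which is not an interior equilibrium. Thus consider \(c_{2}r_{1}r_{3} - c_{1}r_{2}r_{3} + c_{3}r_{1}r_{2} = 0\). The consistent conjecture of player 1 possibly leading to an interior CVE is thus

\[
r_{1}^{C} = \frac{c_{1}r_{2}r_{3}} {c_{2}r_{3} + c_{3}r_{2}}.
\]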
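The consistency condition can be verified numerically, as in the sketch below. Solving \(c_{2}r_{1}r_{3} - c_{1}r_{2}r_{3} + c_{3}r_{1}r_{2} = 0\) for \(r_{1}\) gives \(r_{1} = \frac{c_{1}r_{2}r_{3}}{c_{2}r_{3}+c_{3}r_{2}}\); the code compares this value with a finite-difference slope of \(A^{\ast\ast}(x_{1})\), obtained from the quadratic \((r_{3}c_{2}+r_{2}c_{3})A^{2} + (r_{2}r_{3}-r_{2}-r_{3})VA - r_{2}r_{3}Vx_{1} = 0\). The function names, the choice of the larger root as the relevant branch, and the example values are assumptions made here.

```python
import math


def consistent_r1(r2, r3, c):
    """Root in r1 of c2 r1 r3 - c1 r2 r3 + c3 r1 r2 = 0."""
    c1, c2, c3 = c
    return c1 * r2 * r3 / (c2 * r3 + c3 * r2)


def aggregate_given_x1(x1, r2, r3, c, V=1.0):
    """Larger root A**(x1) of the quadratic implied by the optimal responses
    of players 2 and 3 (assumed to be the relevant branch in this example)."""
    qa = r3 * c[1] + r2 * c[2]
    qb = (r2 * r3 - r2 - r3) * V
    qc = -r2 * r3 * V * x1
    return (-qb + math.sqrt(qb * qb - 4 * qa * qc)) / (2 * qa)


r2, r3, V = 1.0, 1.0, 1.0
c = (1.0, 1.0, 1.0)
r1_c = consistent_r1(r2, r3, c)

# CVE values for the profile (r1_c, r2, r3): aggregate and player 1's effort
num = r2 * r3 + r1_c * r3 + r1_c * r2 - r1_c * r2 * r3
den = r2 * r3 * c[0] + r1_c * r3 * c[1] + r1_c * r2 * c[2]
A_star = num * V / den
x1_star = A_star * (V - c[0] * A_star) / (r1_c * V)

# Actual local reaction of the aggregate to x1, evaluated at the CVE
h = 1e-6
slope = (aggregate_given_x1(x1_star + h, r2, r3, c, V)
         - aggregate_given_x1(x1_star - h, r2, r3, c, V)) / (2 * h)
```

With symmetric costs and Nash-like conjectures \(r_{2} = r_{3} = 1\) of the opponents, the conjecture of player 1 and the actual local reaction of the aggregate coincide.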

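For evolutionary stability analysis of conjectures of player 1, consider

\[
\max_{r_{1}}\ u_{1}(x_{1}^{\ast}(\mathbf{r}),A^{\ast}(\mathbf{r})) = \frac{x_{1}^{\ast}(\mathbf{r})} {A^{\ast}(\mathbf{r})}V - c_{1}x_{1}^{\ast}(\mathbf{r}).
\]

The first-order condition for maximization is \(\frac{1} {(A^{{\ast}})^{2}} V \left (\frac{dx_{1}^{{\ast}}} {dr_{1}} A^{{\ast}}- x_{1}^{{\ast}}\frac{dA^{{\ast}}} {dr_{1}} \right ) - c_{1}\frac{dx_{1}^{{\ast}}} {dr_{1}} = 0\). Using Eq. (7), the condition can be rewritten as

\[
\frac{V x_{1}^{\ast}} {(A^{\ast})^{2}}\left(r_{1}\frac{dx_{1}^{\ast}} {dr_{1}} - \frac{dA^{\ast}} {dr_{1}}\right) = 0. \tag{9}
\]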

Equation (7) also implies that \(x_{1}^{{\ast}} = \frac{1} {r_{1}} A^{{\ast}}- \frac{c_{1}} {r_{1}V }(A^{{\ast}})^{2}\). Therefore \(\frac{dx_{1}^{{\ast}}} {dr_{1}} = \frac{1} {r_{1}^{2}} \left (\frac{dA^{{\ast}}} {dr_{1}} r_{1} - A^{{\ast}}\right ) -\frac{c_{1}} {V } \frac{1} {r_{1}^{2}} \left (2A^{{\ast}}\frac{dA^{{\ast}}} {dr_{1}} r_{1} - (A^{{\ast}})^{2}\right )\). Equation (9) can then be written as

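Using \(A^{{\ast}}\) from Eq. (8), finding \(\frac{dA^{{\ast}}} {dr_{1}} = \frac{-r_{2}r_{3}(c_{2}r_{3}+c_{3}r_{2}-c_{1}r_{3}-c_{1}r_{2}+c_{1}r_{2}r_{3})} {(r_{2}r_{3}c_{1}+r_{1}r_{3}c_{2}+r_{1}r_{2}c_{3})^{2}} V\), and substituting, the first-order condition (9) becomes

\[-\frac{x_{1}^{{\ast}}V } {A^{{\ast}}} \cdot \frac{(c_{2}r_{3} + c_{3}r_{2} - c_{1}r_{3} - c_{1}r_{2} + c_{1}r_{2}r_{3})(c_{3}r_{1}r_{2} - c_{1}r_{2}r_{3} + c_{2}r_{1}r_{3})} {(r_{2}r_{3}c_{1} + r_{1}r_{3}c_{2} + r_{1}r_{2}c_{3})^{2}} = 0. \tag{10}\]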

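Therefore, provided that the CVE is interior and that \(c_{2}r_{3} + c_{3}r_{2} - c_{1}r_{3} - c_{1}r_{2} + c_{1}r_{2}r_{3}\neq 0\), the first-order condition is satisfied only if \(c_{3}r_{1}r_{2} - c_{1}r_{2}r_{3} + c_{2}r_{1}r_{3} = 0\), i.e.

\[r_{1}^{ES} = \frac{c_{1}r_{2}r_{3}} {c_{2}r_{3} + c_{3}r_{2}} = r_{1}^{C}.\]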

The unique candidate for the evolutionarily stable conjecture of player 1 is the consistent conjecture of the player.

Suppose that \(c_{2}r_{3} + c_{3}r_{2} - c_{1}r_{3} - c_{1}r_{2} + c_{1}r_{2}r_{3} = (c_{2} - c_{1})r_{3} + (c_{3} - c_{1})r_{2} + c_{1}r_{2}r_{3} > 0\), which is the case unless all \(r_{i} = 0\) or unless player 1 has a marginal cost much higher than those of the other players. Then the left-hand side of (10) is positive if \(r_{1} < r_{1}^{ES}\) and negative if \(r_{1} > r_{1}^{ES}\), so the equilibrium payoff of player 1 increases in \(r_{1}\) up to \(r_{1}^{ES}\) and decreases beyond it. The consistent conjecture is then evolutionarily stable.

Proposition 3

If the parameters of the contest game of this section are such that for given \(r_{j}, r_{k}\) and consistent

\[r_{i}^{C} = \frac{c_{i}r_{j}r_{k}} {c_{j}r_{k} + c_{k}r_{j}}\]

the CVE \((x^{{\ast}}(r), A^{{\ast}}(r))\) is interior and \((c_{j} - c_{i})r_{k} + (c_{k} - c_{i})r_{j} + c_{i}r_{j}r_{k} > 0\), then conjecture \(r_{i}^{C}\) is evolutionarily stable for player \(i\).
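
The statement of the proposition can be illustrated by evaluating the equilibrium payoff of player 1 over a grid of conjectures. This is only a sketch under assumptions: the function name `indirect_payoff`, the parameter values and the grid are hypothetical, and a grid comparison illustrates the maximization property without proving it.

```python
from fractions import Fraction as F


def indirect_payoff(r1, c, r2, r3, V):
    """Payoff of player 1 in the CVE induced by conjecture r1.

    Conjectures r2 and r3 are held fixed; an interior CVE and r1 != 0
    are assumed.
    """
    c1, c2, c3 = c
    num = r2 * r3 + r1 * r3 + r1 * r2 - r1 * r2 * r3
    den = r2 * r3 * c1 + r1 * r3 * c2 + r1 * r2 * c3
    A = num * V / den                      # equilibrium aggregate
    x1 = A * (V - c1 * A) / (r1 * V)       # equilibrium effort of player 1
    return x1 / A * V - c1 * x1


# Hypothetical parameters, chosen so that the CVE is interior on the grid.
c = (F(6, 5), F(1), F(1))
c1, c2, c3 = c
r2, r3 = F(1), F(1, 2)
V = F(8)

# Sign condition of the proposition.
assert (c2 - c1) * r3 + (c3 - c1) * r2 + c1 * r2 * r3 > 0

r1_c = c1 * r2 * r3 / (c2 * r3 + c3 * r2)  # consistent conjecture
best = indirect_payoff(r1_c, c, r2, r3, V)

# Conjectures 0.05, 0.10, ..., 2.00; the consistent one lies on the grid.
grid = [F(k, 20) for k in range(1, 41)]
assert all(indirect_payoff(r1, c, r2, r3, V) < best for r1 in grid if r1 != r1_c)
print("r1_C =", r1_c, "| payoff at r1_C:", best)
```

Every other conjecture on the grid yields a strictly lower equilibrium payoff for player 1, which is what evolutionary stability of the consistent conjecture requires.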

Consider again a few numerical examples to illustrate the proposition. Suppose that the players are symmetric, \(c_{1} = c_{2} = c_{3} = c\), and that they hold symmetric conjectures \(r_{1} = r_{2} = r_{3} = r\). Then the condition for the (mutually) consistent conjectures becomes \(r = \frac{r} {2}\). The consistent conjecture is then \(r^{C} = 0\), i.e. each player expects that an increase in his or her effort is fully offset by a decrease in the effort of the other players, leaving \(A\) unchanged. Although \(A^{{\ast}} = V\) and \(x_{i}^{{\ast}} = \frac{V } {3}\) is an interior equilibrium with such a conjecture, the proposition does not apply because the condition \((c_{j} - c_{i})r_{k} + (c_{k} - c_{i})r_{j} + c_{i}r_{j}r_{k} > 0\) is not satisfied. Indeed, if \(r_{j} = 0\) for one of the players, then Eq. (7) becomes \(A(V - A) = 0\), leading to \(A = V\) in equilibrium and zero payoff to all players. Any conjecture \(r_{i}\neq 0\) of player \(i\) implies a corner solution \(x_{i}^{{\ast}} = 0\) in a CVE, again with zero payoff. Therefore \(r^{C} = 0\) for all players is not evolutionarily stable but can be seen as neutrally stable for player \(i\): alternative conjectures cannot lead to a higher payoff although they can be equally successful.Footnote 16

Although the proposition does not apply to the symmetric case, it can still be used for asymmetric costs or conjectures. Table 2 shows numerical calculations for finding consistent conjectures of player 1, for given conjectures of players 2 and 3 (conjectures \(r_{2}\) and \(r_{3}\) are not consistent; mutually consistent conjectures are always zero for the parameters in the table). Those conjectures of player 1 are also evolutionarily stable because the conditions in Proposition 3 are satisfied. Consistent conjectures of player 1 increase with the given conjectures of the other players and with the cost of player 1 but typically stay below unity, implying that the player correctly expects the aggregate to increase by less than the increase in his or her own effort. However, it is also possible that player 1 correctly anticipates that the aggregate increases by more than the increase in the player’s own effort if the cost and the other players’ conjectures are high enough (the last line of the table). Equilibrium efforts are inversely related to cost parameters and to conjectures; but it is possible (the penultimate line in the table) that a player with a higher cost makes a higher effort in equilibrium than the other players, due to this player holding lower conjectures (that also happen to be consistent).
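
Calculations of this kind can be sketched in a few lines. The code below does not reproduce Table 2: the parameter values are hypothetical, as are the function name `table_row` and the dictionary keys, and the formulas are the closed forms for \(r_{1}^{C}\), \(A^{{\ast}}\) and \(x_{i}^{{\ast}}\) of this section.

```python
from fractions import Fraction as F


def table_row(c, r2, r3, V):
    """Consistent conjecture of player 1 and the induced CVE for given r2, r3."""
    c1, c2, c3 = c
    r1 = c1 * r2 * r3 / (c2 * r3 + c3 * r2)           # consistent conjecture
    r = (r1, r2, r3)
    num = r2 * r3 + r1 * r3 + r1 * r2 - r1 * r2 * r3
    den = r2 * r3 * c1 + r1 * r3 * c2 + r1 * r2 * c3
    A = num * V / den                                   # equilibrium aggregate
    x = [A * (V - ci * A) / (ri * V) for ci, ri in zip(c, r)]
    return {
        "r1_C": r1,
        "A": A,
        "x": x,
        # Conditions of Proposition 3: interior CVE and the sign condition.
        "interior": all(xi > 0 for xi in x),
        "condition": (c2 - c1) * r3 + (c3 - c1) * r2 + c1 * r2 * r3 > 0,
    }


# Hypothetical cases (costs, r2, r3); V is normalized to 1.
V = F(1)
cases = [
    ((F(1), F(1), F(1)), F(1), F(1)),
    ((F(2), F(1), F(1)), F(3, 2), F(3, 2)),
]
rows = [table_row(c, r2, r3, V) for c, r2, r3 in cases]
for (c, r2, r3), row in zip(cases, rows):
    print([str(ci) for ci in c], str(r2), str(r3), "->",
          "r1_C =", row["r1_C"], "A* =", row["A"],
          "x* =", [str(xi) for xi in row["x"]],
          "Prop. 3 applies:", row["interior"] and row["condition"])
```

In the first case the consistent conjecture of player 1 is below unity; in the second, with a higher cost and higher conjectures of the other players, it exceeds unity while the CVE remains interior.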

5 Conclusion

Richard Cornes has done much work on public good games, on contests, and on games with aggregative structure in general. Some of his work also considered conjectural variations, mostly in public good games. In this paper I also consider conjectures and I use representations of games that share some properties with aggregative games. In such representations, there is a personalized aggregate for each player; I call these representations semi-aggregative.

The idea of a semi-aggregative representation is that a player forms appropriate conjectures about how the aggregate changes and how it affects the player’s payoff. In a sense, the game is reduced to just two players: the player him- or herself and the aggregate opponent. Thus the dimensionality of players’ conjectures is reduced and such conjectures can be analyzed.

I show that if conjectures are subject to evolution, then only consistent conjectures can be evolutionarily stable. The result provides foundations for the (much discussed) notion of consistent conjectures as the result of evolution. The result can also be used to find evolutionarily stable conjectures more easily, by first finding consistent conjectures. While this observation is not new for some classes of games, the result in this paper extends it to any well-behaved game.

The result is illustrated on the examples of (impure) public good games and contests. Although finding the exact value of consistent (and evolutionarily stable) conjectures in specific asymmetric games is still a difficult task (thus only 3-player examples are considered for illustration), the point of the examples is to demonstrate that it can be done, and that often consistent conjectures are indeed evolutionarily stable. The choice of public good games and contests as the examples shows that those games, to which Richard Cornes dedicated much of his work, are still a source of useful insights.

Notes

- 1.

- 2.

Another interpretation is that players first choose conjectures and then play the game. The search is then for an equilibrium in the game of choosing conjectures. I nevertheless prefer the evolutionary interpretation, which makes it clearer that forming beliefs and choosing strategies occur at different times. This evolutionary interpretation is an example of the “indirect evolution approach” (Güth and Yaari 1992).

- 3.

For example, consider the identity \(u_{i}(x_{1},\ldots,x_{n}) = x_{i} + u_{i}(x_{1},\ldots,x_{n}) - x_{i}\). Let \(A_{i} = f_{i}(x_{1},\ldots,x_{n}) = u_{i}(x_{1},\ldots,x_{n}) - x_{i}\) and write \(u_{i}(x_{i},A_{i}) = x_{i} + A_{i}\).

- 4.

In a homogeneous good market with one price, the aggregate (the price) is the same for all firms, and the game is properly aggregative.

- 5.

- 6.

- 7.

Note that the definition focuses on player \(i\), treating the other players’ conjectures as fixed; Selten (1980) showed that such an approach is appropriate in asymmetric games.

- 8.

To save space, arguments of derivatives are omitted. It is understood that they are evaluated at \(r = (r_{i}^{ES}, r_{-i})\) and the CVE \((x^{{\ast}}(r), A^{{\ast}}(r))\).

- 9.

The relationship between consistent conjectures and the conjectures that maximize the indirect payoff function \(u_{i}(x_{i}^{{\ast}}(r_{i},r_{j}), A_{i}^{{\ast}}(r_{i},r_{j}))\) was noted by Itaya and Dasgupta (1995) for a two-player public good game.

- 10.

- 11.

The observation that the consistent conjecture is the best response conjecture of one player to any given conjecture of the other player was made in Dixon and Somma (2003) for a linear-quadratic Cournot duopoly game.

- 12.

Note that if \(b_{i} = 1\) for all \(i\), then the public good becomes a pure public good and the same aggregate \(G =\sum _{i=1}^{n}x_{i}\) can be used for all players.

- 13.

The second-order condition \(\alpha _{i}(1 -\alpha _{i})(m_{i} - x_{i})^{\alpha _{i}-2}G_{i}^{-\alpha _{i}-1}(-G_{i}^{2} - 2(m_{i} - x_{i})G_{i}r_{i} - (m_{i} - x_{i})^{2}r_{i}^{2}) < 0\) is satisfied for r i ≥ 0 and all interior x i , G i .

- 14.

Note that if b = 1, then r = 0 is the solution of the consistency condition (Sugden 1985). However, for r = 0 the solution of the players’ maximization problem is not interior and the first-order conditions do not characterize it. The propositions do not apply in this case.

- 15.

To avoid indeterminacies, let \(u_{i} = \frac{1} {n}\) if A = 0.

- 16.

For \(n = 2\), there are non-zero symmetric conjectures that are consistent and evolutionarily stable. The consistency condition for \(n = 2\) is \(r_{i} = \frac{c_{i}} {c_{j}}r_{j}\). If \(c_{i} = c_{j}\), then any \(r\) is consistent. It is shown in Possajennikov (2009) that any \(0 < r < 2\) is then evolutionarily stable.

References

Bresnahan, T. F. (1981). Duopoly models with consistent conjectures. American Economic Review, 71, 934–945.

Cabral, L. M. B. (1995). Conjectural variations as a reduced form. Economics Letters, 49, 397–402.

Corchón, L. C. (1994). Comparative statics for aggregative games. The strong concavity case. Mathematical Social Sciences, 28, 151–165.

Cornes, R. (2016). Aggregative environmental games. Environmental and Resource Economics, 63, 339–365.

Cornes, R., & Hartley, R. (2003). Risk aversion, heterogeneity and contests. Public Choice, 117, 1–25.

Cornes, R., & Hartley, R. (2005). Asymmetric contests with general technologies. Economic Theory, 26, 923–946.

Cornes, R., & Hartley, R. (2007). Aggregative public good games. Journal of Public Economic Theory, 9, 201–219.

Cornes, R., & Hartley, R. (2011). Well-behaved aggregative games. Presented at the 2011 Workshop on Aggregative Games at Strathclyde University, Glasgow.

Cornes, R., & Hartley, R. (2012). Fully aggregative games. Economics Letters, 116, 631–633.

Cornes, R., & Sandler, T. (1983). On commons and tragedies. American Economic Review, 73, 787–792.

Cornes, R., & Sandler, T. (1984a). The theory of public goods: Non-Nash behavior. Journal of Public Economics, 23, 367–379.

Cornes, R., & Sandler, T. (1984b). Easy riders, joint production and public goods. Economic Journal, 94, 580–598.

Cornes, R., & Sandler, T. (1996). The theory of externalities, public goods and club goods (2nd ed.). Cambridge: Cambridge University Press.

Costrell, R. M. (1991). Immiserizing growth with semi-public goods under consistent conjectures. Journal of Public Economics, 45, 383–389.

Dixon, H. D., & Somma, E. (2003). The evolution of consistent conjectures. Journal of Economic Behavior & Organization, 51, 523–536.

Dockner, E. J. (1992). Dynamic theory of conjectural variations. Journal of Industrial Economics, 40, 377–395.

Güth, W., & Yaari, M. (1992). An evolutionary approach to explain reciprocal behavior in a simple strategic game. In U. Witt (Ed.), Explaining process and change – Approaches to evolutionary economics (pp. 23–34). Ann Arbor: University of Michigan Press.

Itaya, J.-I., & Dasgupta, D. (1995). Dynamics, consistent conjectures, and heterogeneous agents in the private provision of public goods. Public Finance/Finances Publiques, 50, 371–389.

Itaya, J.-I., & Okamura, M. (2003). Conjectural variations and voluntary public good provision in a repeated game setting. Journal of Public Economic Theory, 5, 51–66.

Laitner, J. (1980). Rational duopoly equilibria. Quarterly Journal of Economics, 95, 641–662.

Makowski, L. (1987). Are ‘rational conjectures’ rational? Journal of Industrial Economics, 36, 35–47.

Maynard Smith, J., & Price, G. R. (1973). The logic of animal conflict. Nature, 246(Nov 2), 15–18.

Müller, W., & Normann, H.-T. (2005). Conjectural variations and evolutionary stability: A rationale for consistency. Journal of Institutional and Theoretical Economics, 161, 491–502.

Perry, M. K. (1982). Oligopoly and consistent conjectural variations. Bell Journal of Economics, 13, 197–205.

Possajennikov, A. (2009). The evolutionary stability of constant consistent conjectures. Journal of Economic Behavior & Organization, 72, 21–29.

Possajennikov, A. (2015). Conjectural variations in aggregative games: An evolutionary perspective. Mathematical Social Sciences, 77, 55–61.

Selten, R. (1980). A note on evolutionarily stable strategies in asymmetric animal conflicts. Journal of Theoretical Biology, 84, 93–101.

Sugden, R. (1985). Consistent conjectures and voluntary contributions to public goods: Why the conventional theory does not work. Journal of Public Economics, 27, 117–124.

Tullock, G. (1980). Efficient rent-seeking. In J. M. Buchanan, R. D. Tollison, & G. Tullock (Eds.), Toward a theory of the rent-seeking society (pp. 131–146). College Station: Texas A&M University Press.

Acknowledgements

I would like to thank Dirk Rübbelke and Wolfgang Buchholz for this opportunity. I would also like to thank Alex Dickson for inviting me to participate in April 2011 in a workshop on aggregative games, which incited me to think about conjectures and aggregative games, and develop the ideas leading to this paper. I also thank Maria Montero for improving the exposition in the paper.

Copyright information

© 2017 Springer International Publishing AG

About this chapter

Cite this chapter

Possajennikov, A. (2017). Evolution of Consistent Conjectures in Semi-aggregative Representation of Games, with Applications to Public Good Games and Contests. In: Buchholz, W., Rübbelke, D. (eds) The Theory of Externalities and Public Goods. Springer, Cham. https://doi.org/10.1007/978-3-319-49442-5_5

DOI: https://doi.org/10.1007/978-3-319-49442-5_5

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-49441-8

Online ISBN: 978-3-319-49442-5

eBook Packages: Economics and Finance; Economics and Finance (R0)