Abstract

This paper presents a fast and accurate eye localization algorithm for iris recognition under active infrared (IR) illumination. The algorithm is based on binary classifiers and the fast radial symmetry transform. First, eye candidates are detected in the infrared image by the fast radial symmetry transform. Then three-stage binary classifiers eliminate most of the unreliable eye candidates. Finally, a mean eye template is used to identify the real eyes among the remaining candidates. Extensive tests were carried out to verify the performance of the proposed algorithm, and the experimental results show that it is robust and efficient.

1 Introduction

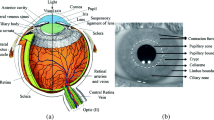

Iris recognition is currently regarded as one of the most reliable and accurate biometric technologies [1]. It has a wide range of applications in personal identification, including public security systems, the military, counter-terrorism and bank payment systems. Its widespread use could create a safer and more convenient living environment for society. To exploit the capability of iris recognition, it is crucial to develop eye localization technology with low complexity and high accuracy.

Existing eye localization methods can be classified into three categories according to the information or patterns they use [2]. The first category measures eye characteristics [3–6]. It exploits inherent features of the eyes, such as their distinct shape and strong intensity contrast [2]. This approach is relatively simple, but its accuracy is strongly affected by eyebrows, fringes, glasses frames, illumination and similar factors. The second category learns a statistical appearance model [2], extracting useful visual features from a large set of training images [7, 8]. Its performance is limited by the training sample space, and high complexity is inevitable. The third category is based on structural information [9, 10]. It exploits the spatial structure of the interior components of the eyes, or the geometrical regularity between the eyes and other facial features in the face context [2]. To cope with complicated uncontrolled conditions, a statistical eye model is usually integrated into the third type of method.

The method proposed in this paper considers both eye characteristics and structural information. The theory of the fast radial symmetry transform is introduced in Sect. 2. The proposed eye localization method is described in detail in Sect. 3. The assessment method for eye localization precision and the experimental results are presented in Sect. 4. The last section concludes this work.

2 Fast Radial Symmetry Transform

The fast radial symmetry transform (FRST) [11] is an extension of the general symmetry transform and a gradient-based interest descriptor in the spatial domain. It can be used to detect objects with high radial symmetry. Two concepts need to be introduced first: the orientation projection image \( O_{n} \) and the magnitude projection image \( M_{n} \). For a radius \( n \), the orientation projection image \( O_{n} \) accumulates, at each pixel, the number of projections it receives along gradient orientations. The magnitude projection image \( M_{n} \) accumulates the corresponding gradient magnitudes.

As shown in Fig. 1, \( P_{ + ve} \left( P \right) \) is the positively-affected pixel, located in the positive gradient direction of pixel P. Similarly, \( P_{ - ve} \left( P \right) \) is the negatively-affected pixel, located in the negative gradient direction of pixel P. Following [11], the coordinates of \( P_{ + ve} \left( P \right) \) and \( P_{ - ve} \left( P \right) \) are given by

\[ P_{ + ve} \left( P \right) = P + round\left( {\frac{g\left( P \right)}{\left\| {g\left( P \right)} \right\|}n} \right),\quad P_{ - ve} \left( P \right) = P - round\left( {\frac{g\left( P \right)}{\left\| {g\left( P \right)} \right\|}n} \right) \qquad (1) \]

where \( round\left( \cdot \right) \) rounds each vector element to the nearest integer and g(P) is the gradient vector at P.
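The affected-pixel computation can be sketched in a few lines of Python. This is a minimal illustration that assumes the standard FRST definition from [11], in which the unit gradient is scaled by the radius n and rounded. The function names and the tie-breaking rule for rounding (halves away from zero) are assumptions made for the example and are not taken from the paper.

```python
import math

def round_nearest(v):
    """Round to the nearest integer; ties go away from zero (an assumption)."""
    return int(math.floor(abs(v) + 0.5)) * (1 if v >= 0 else -1)

def affected_pixels(p, g, n):
    """Return (P_+ve, P_-ve) for pixel p = (x, y), gradient g = (gx, gy), radius n."""
    norm = math.hypot(g[0], g[1])
    if norm == 0.0:
        return None  # zero gradient: there is no direction to project along
    dx = round_nearest(g[0] / norm * n)
    dy = round_nearest(g[1] / norm * n)
    return (p[0] + dx, p[1] + dy), (p[0] - dx, p[1] - dy)

# A gradient of (3, 4) has unit direction (0.6, 0.8); with n = 10 the offset is (6, 8).
positive, negative = affected_pixels((20, 20), (3.0, 4.0), 10)
```

Pixels with a zero gradient are skipped here because their direction is undefined.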

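The orientation projection image \( O_{n} \) and the magnitude projection image \( M_{n} \) are initialized to zero [11]. For each pair of affected pixels, the values of \( O_{n} \) and \( M_{n} \) at the points \( P_{ + ve} \left( P \right) \) and \( P_{ - ve} \left( P \right) \) are updated, following [11], by

\[ O_{n} \left( {P_{ + ve} \left( P \right)} \right) = O_{n} \left( {P_{ + ve} \left( P \right)} \right) + 1,\quad O_{n} \left( {P_{ - ve} \left( P \right)} \right) = O_{n} \left( {P_{ - ve} \left( P \right)} \right) - 1 \]

\[ M_{n} \left( {P_{ + ve} \left( P \right)} \right) = M_{n} \left( {P_{ + ve} \left( P \right)} \right) + \left\| {g\left( P \right)} \right\|,\quad M_{n} \left( {P_{ - ve} \left( P \right)} \right) = M_{n} \left( {P_{ - ve} \left( P \right)} \right) - \left\| {g\left( P \right)} \right\| \]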
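The accumulation step can be illustrated as follows. The sketch assumes the update rule of the original FRST formulation [11]: the positively-affected pixel gains 1 in \( O_{n} \) and the gradient magnitude in \( M_{n} \), and the negatively-affected pixel loses the same amounts. Plain nested lists are used so that the example has no dependencies. The function name and the decision to ignore affected pixels that fall outside the image are assumptions.

```python
import math

def round_nearest(v):
    """Round to the nearest integer; ties go away from zero (an assumption)."""
    return int(math.floor(abs(v) + 0.5)) * (1 if v >= 0 else -1)

def accumulate_projections(gx, gy, n):
    """Build O_n and M_n for one radius n from gradient images gx, gy (lists of rows)."""
    h, w = len(gx), len(gx[0])
    O = [[0.0] * w for _ in range(h)]  # orientation projection image, starts at zero
    M = [[0.0] * w for _ in range(h)]  # magnitude projection image, starts at zero
    for y in range(h):
        for x in range(w):
            mag = math.hypot(gx[y][x], gy[y][x])
            if mag == 0.0:
                continue  # no gradient direction, nothing to project
            dx = round_nearest(gx[y][x] / mag * n)
            dy = round_nearest(gy[y][x] / mag * n)
            for sign in (1, -1):  # +1 for P_+ve, -1 for P_-ve
                px, py = x + sign * dx, y + sign * dy
                if 0 <= px < w and 0 <= py < h:  # assumption: skip out-of-image votes
                    O[py][px] += sign
                    M[py][px] += sign * mag
    return O, M
```

In a real pipeline the same loop would normally be vectorized with an array library; the explicit loop is kept here because it mirrors the per-pixel description in the text.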

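For a radius n, the fast radial symmetry contribution \( S_{n} \) is defined as the convolution

\[ S_{n} = F_{n} * A_{n} \]

where, following [11],

\[ F_{n} \left( P \right) = \frac{{M_{n} \left( P \right)}}{{k_{n} }}\left( {\frac{{\left| {\tilde{O}_{n} \left( P \right)} \right|}}{{k_{n} }}} \right)^{\alpha } ,\quad \tilde{O}_{n} \left( P \right) = \begin{cases} O_{n} \left( P \right), & O_{n} \left( P \right) < k_{n} \\ k_{n} , & \text{otherwise} \end{cases} \]

\( A_{n} \) is a 2-D Gaussian filter, \( \alpha \) is the radial strictness parameter and \( k_{n} \) is a scaling factor. The final symmetry transform image S is the average of all radial symmetry contributions \( S_{n} \):

\[ S = \frac{1}{{len\left( N \right)}}\sum\limits_{n \in N} {S_{n} } \]

where N is the vector of radii considered and \( len\left( \cdot \right) \) returns the length of the input vector.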
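The remaining FRST steps can be sketched in the same style. The example assumes the formulation of [11], where \( F_{n} \) combines \( M_{n} \) with \( O_{n} \) capped at \( k_{n} \), each \( S_{n} \) is \( F_{n} \) smoothed by a Gaussian, and S is the mean over the radii. The default values `alpha=2.0`, `k_n=9.9` and a Gaussian standard deviation of `0.25 * n` are illustrative assumptions, since this paper does not state its settings, and all function names are hypothetical.

```python
import math

def radial_strength(O, M, k_n, alpha):
    """F_n(P) = M_n(P)/k_n * (|O~_n(P)|/k_n)**alpha, with O~_n equal to O_n capped at k_n."""
    return [[(m / k_n) * (abs(min(o, k_n)) / k_n) ** alpha
             for o, m in zip(o_row, m_row)]
            for o_row, m_row in zip(O, M)]

def gaussian_blur(img, sigma):
    """Convolve with a separable 2-D Gaussian A_n, using zero padding at the borders."""
    r = max(1, math.ceil(3 * sigma))
    k = [math.exp(-(i * i) / (2.0 * sigma * sigma)) for i in range(-r, r + 1)]
    total = sum(k)
    k = [v / total for v in k]
    h, w = len(img), len(img[0])
    rows = [[sum(k[j + r] * img[y][x + j] for j in range(-r, r + 1) if 0 <= x + j < w)
             for x in range(w)] for y in range(h)]
    return [[sum(k[j + r] * rows[y + j][x] for j in range(-r, r + 1) if 0 <= y + j < h)
             for x in range(w)] for y in range(h)]

def symmetry_image(projections, alpha=2.0, k_n=9.9, sigma_factor=0.25):
    """Average S_n = F_n * A_n over all radii; projections maps n -> (O_n, M_n)."""
    first_O = next(iter(projections.values()))[0]
    h, w = len(first_O), len(first_O[0])
    S = [[0.0] * w for _ in range(h)]
    for n, (O, M) in projections.items():
        S_n = gaussian_blur(radial_strength(O, M, k_n, alpha), sigma_factor * n)
        for y in range(h):
            for x in range(w):
                S[y][x] += S_n[y][x]
    count = len(projections)  # len(N) in the text
    return [[v / count for v in row] for row in S]
```

For the radii used later in the paper, `projections` would hold one `(O_n, M_n)` pair for each of 7, 9, 11 and 12.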

3 Eye Localization Method

3.1 Eye Candidate Detection

Because FRST performs very well at detecting radially symmetric structures, and irises and pupils are highly symmetric, it is used to detect the eye candidates in the target image.

The first step in detecting candidates is to search for the regions of interest (ROIs) in the target image and to scale each ROI to 256 × 256 pixels. An ROI is a relatively small region that contains both eyes. Because the field of view of the iris camera in recognition products is usually very small, the whole target image can serve as an ROI, as shown in Fig. 2. If the background of the target image is highly cluttered, face detection [15] is needed to search for ROIs in the target image.

Next, the gradient vector \( \left[ {g_{x} \left( P \right),\;g_{y} \left( P \right)} \right]^{T} \) of each pixel in every ROI is computed with the 3 × 3 Sobel operator. Each ROI is then processed by the FRST as described in Sect. 2, and the output image S is shown in Fig. 3. The radii considered can be set according to the size of the ROI. Here, the vector N of radii is set to {7, 9, 11, 12}.
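The gradient step can be illustrated with a direct implementation of the 3 × 3 Sobel operator. This is a minimal sketch on a nested-list image. The function name is hypothetical, and leaving the one-pixel border at zero is an assumption, since the paper does not describe its border handling.

```python
def sobel_gradients(img):
    """Return (gx, gy) for a grayscale image given as a list of rows."""
    h, w = len(img), len(img[0])
    gx = [[0.0] * w for _ in range(h)]
    gy = [[0.0] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            # Horizontal derivative: right column minus left column, weights 1-2-1.
            gx[y][x] = ((img[y - 1][x + 1] + 2 * img[y][x + 1] + img[y + 1][x + 1])
                        - (img[y - 1][x - 1] + 2 * img[y][x - 1] + img[y + 1][x - 1]))
            # Vertical derivative: bottom row minus top row, weights 1-2-1.
            gy[y][x] = ((img[y + 1][x - 1] + 2 * img[y + 1][x] + img[y + 1][x + 1])
                        - (img[y - 1][x - 1] + 2 * img[y - 1][x] + img[y - 1][x + 1]))
    return gx, gy

# A horizontal intensity ramp has a constant x-gradient and no y-gradient.
ramp = [[float(x) for x in range(5)] for _ in range(5)]
gx, gy = sobel_gradients(ramp)
```

With image coordinates, a positive `gy` means intensity increases downwards.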

The FRST output image S clearly contains some bright regions. These regions include eyes, nostrils, nose pads of glasses and so on. To remove the background, the image S is binarized, and the result is shown in Fig. 4. Region generation is then used to obtain the coordinates of the middle pixel of each eye candidate region, as shown in Fig. 5. The coordinate vector of the eye candidates is denoted as \( \left\{ {e_{0} \left( {x_{0} ,y_{0} } \right), \ldots ,e_{M - 1} \left( {x_{M - 1} ,\;y_{M - 1} } \right)} \right\} \), where M is the number of eye candidates.
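One way to turn the binarized map into candidate coordinates is sketched below. The paper does not specify its threshold rule or its region generation procedure, so this example makes two assumptions: the threshold is passed in by the caller, and regions are 4-connected components whose centroid serves as the "middle pixel". The function name is hypothetical.

```python
from collections import deque

def candidate_centers(S, threshold):
    """Binarize S and return the rounded centroid (x, y) of every bright region."""
    h, w = len(S), len(S[0])
    mask = [[value > threshold for value in row] for row in S]
    seen = [[False] * w for _ in range(h)]
    centers = []
    for y in range(h):
        for x in range(w):
            if not mask[y][x] or seen[y][x]:
                continue
            # Breadth-first flood fill collects one 4-connected region.
            queue = deque([(x, y)])
            seen[y][x] = True
            pixels = []
            while queue:
                cx, cy = queue.popleft()
                pixels.append((cx, cy))
                for nx, ny in ((cx + 1, cy), (cx - 1, cy), (cx, cy + 1), (cx, cy - 1)):
                    if 0 <= nx < w and 0 <= ny < h and mask[ny][nx] and not seen[ny][nx]:
                        seen[ny][nx] = True
                        queue.append((nx, ny))
            mean_x = sum(p[0] for p in pixels) / len(pixels)
            mean_y = sum(p[1] for p in pixels) / len(pixels)
            centers.append((int(mean_x + 0.5), int(mean_y + 0.5)))
    return centers
```

The returned list plays the role of the candidate set \( e_{0} , \ldots ,e_{M - 1} \), with M equal to its length.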

3.2 Binary Classifiers

Although FRST, binarization and region generation detect eye candidates efficiently and reliably, identifying the eye(s) accurately among the candidates remains a great challenge, because the candidates may also be nostrils, moles, nose pads of glasses and so on.

Three-stage binary classifiers are designed to further exclude unreliable eye candidates. The binary classifiers are denoted as \( C_{k} (w_{k} ,h_{k} ),\;k \in \left\{ {0,1,2} \right\} \) and shown in Fig. 6, where \( w_{k} \) and \( h_{k} \) are the width and height of classifier \( C_{k} \), respectively. Classifier \( C_{0} \) is a single \( 2n_{\hbox{max} } \times 2n_{\hbox{max} } \) square, and classifier \( C_{1} \) is composed of three \( 2n_{\hbox{max} } \times 2n_{\hbox{max} } \) squares. Black represents “1”, white represents “0”, and \( n_{\hbox{max} } \) is the maximum element of the radius vector \( N \). Classifier \( C_{2} \) is more complex, so only its black region is described here. The black region is composed of a \( 2n_{\hbox{max} } \times 2n_{\hbox{max} } \) square, two \( n_{\hbox{max} } \times 0.5n_{\hbox{max} } \) rectangles and two \( 0.25n_{\hbox{max} } \times 0.25n_{\hbox{max} } \) squares. Here \( n_{\hbox{max} } \) is equal to 12.

These binary classifiers are applied to binarized eye candidates. The binarization is carried out independently for each ROI with a dynamic threshold, rather than for the whole target image. Figure 7 shows the result of binarizing an ROI. The eye candidates \( \left\{ {e_{0} \left( {x_{0} ,y_{0} } \right), \ldots ,e_{M - 1} \left( {x_{M - 1} ,\;y_{M - 1} } \right)} \right\} \) are then classified by each of the three-stage binary classifiers. The classification steps are as follows.

Firstly, segment the eye candidate regions \( B_{i,k} \left( {s_{i,k} ,\;\;t_{i,k} ,\;w_{i,k} ,\;h_{i,k} } \right) \), \( i \in \left[ {0,\;M - 1} \right], \) \( k \in \left\{ {0,1,2} \right\} \) from the ROI binary image, where \( \left( {s_{i,k} ,\;t_{i,k} } \right) \), \( w_{i,k} \) and \( h_{i,k} \) are respectively the left corner coordinate, width and height of the eye candidate region. The width \( w_{i,k} \) and height \( h_{i,k} \) are the same as those of classifier \( C_{k} \). The left corner coordinates of the eye candidate regions \( B_{i,k} \) are given by

Where \( 1.625n_{\hbox{max} } \) is half of the black region width in classifier \( C_{2} \), and \( round\left( \cdot \right) \) has the same meaning as in Eq. (1). If \( s_{i,k} < 0 \) or \( t_{i,k} < 0 \), the eye candidate \( e_{i} \left( {x_{i} ,y_{i} } \right) \) is rejected.

Secondly, calculate the eigenvalue difference \( \Delta v_{k,i} ,\;i \in \left[ {0,\;M - 1} \right],\;k \in \left\{ {0,1,2} \right\} \). The eigenvalue difference \( \Delta v_{k,i} \) is defined as

Where \( v_{k,i} \) is given by

Thirdly, compare the eigenvalue difference \( \Delta v_{k,i} \) with the threshold \( \lambda_{k} \). If \( \Delta v_{k,i} < \lambda_{k} \), the eye candidate \( e_{i} \left( {x_{i} ,y_{i} } \right) \) passes classifier \( C_{k} \). Suppose that \( Q \) eye candidates pass the three-stage classifiers; they are denoted as \( \left\{ {\tilde{e}_{0} \left( {x_{0} ,y_{0} } \right), \ldots ,\tilde{e}_{Q - 1} \left( {x_{Q - 1} ,\;y_{Q - 1} } \right)} \right\} \).
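The thresholding logic of the three stages can be sketched as a simple filter. The example takes the eigenvalue differences \( \Delta v_{k,i} \) as precomputed inputs, because their definition is not reproduced here, and it assumes that a candidate survives only if it passes every stage. The function name and the numbers in the usage example are made up for illustration.

```python
def cascade_filter(candidates, delta_v, thresholds):
    """Keep candidate i only if delta_v[k][i] < thresholds[k] for every stage k."""
    survivors = []
    for i, candidate in enumerate(candidates):
        # all() stops at the first failed stage, like a cascade.
        if all(delta_v[k][i] < thresholds[k] for k in range(len(thresholds))):
            survivors.append(candidate)
    return survivors

# Illustrative values only: three candidates, three stages, one shared threshold.
candidates = [(10, 10), (20, 20), (30, 30)]
delta_v = [
    [0.1, 0.5, 0.1],  # stage 0 (classifier C_0)
    [0.2, 0.1, 0.1],  # stage 1 (classifier C_1)
    [0.1, 0.1, 0.9],  # stage 2 (classifier C_2)
]
passed = cascade_filter(candidates, delta_v, thresholds=[0.3, 0.3, 0.3])
```

Here only the first candidate passes all three stages, so Q would be 1.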

3.3 Eye Localization by IMED Matching

After the three-stage classification, one or more non-eye candidates may still remain. To detect the real eyes among \( \left\{ {\tilde{e}_{0} \left( {x_{0} ,y_{0} } \right), \ldots ,\tilde{e}_{Q - 1} \left( {x_{Q - 1} ,\;y_{Q - 1} } \right)} \right\} \), template matching based on intensity appearance [12] is employed. This approach is relatively reliable and does not require any training. The template is the average of 200 eye samples of \( 4n_{\hbox{max} } \times 4n_{\hbox{max} } \) pixels cut from the CASIA-Iris-Distance database (the use of this database is authorized by CASIA). Let \( S_{n}^{e} \) denote the eye samples cut from ROIs of the database images. The mean eye template \( T_{e} \) is given by

\[ T_{e} = \frac{1}{200}\sum\limits_{n} {S_{n}^{e} } \]

where the sum runs over the 200 samples.
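Building the mean template amounts to a pixel-wise average over equally sized samples, which can be sketched as follows. The function name is hypothetical, and the tiny 2 × 2 samples stand in for the \( 4n_{\hbox{max} } \times 4n_{\hbox{max} } \) eye patches described above.

```python
def mean_template(samples):
    """Pixel-wise mean of equally sized grayscale samples (each a list of rows)."""
    count = len(samples)
    h, w = len(samples[0]), len(samples[0][0])
    return [[sum(sample[y][x] for sample in samples) / count for x in range(w)]
            for y in range(h)]

# Two toy samples; the paper averages 200 real eye patches instead.
template = mean_template([
    [[0, 2], [4, 6]],
    [[2, 4], [6, 8]],
])
```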

Finally, the real eyes are identified by the similarity between the eye candidates and the eye template \( T_{e} \). The image Euclidean distance (IMED) [13] has been shown to be an excellent measure of image similarity because it reduces mismatches caused by eye rotation or deformation. The IMED \( d_{r}^{2} \left( {G_{e} ,\;G_{T} } \right)\; \) between the eye template and each eye candidate is calculated by

Where \( G_{T} \) and \( G_{e} \) are the vectors \( \left\{ {g_{T}^{1} ,g_{T}^{2} , \cdots ,g_{T}^{WH} } \right\} \) and \( \left\{ {g_{e}^{1} ,g_{e}^{2} , \cdots ,g_{e}^{WH} } \right\} \), composed of all the pixels of the eye template \( T_{e} \) and of each eye candidate region \( D_{r} \left( {s,t,w,h} \right),\;r \in \left[ {0,\;Q - 1} \right] \), respectively. \( s,\;t,w \) and \( h \) are given by

These eye candidate regions are different from the ones used for classification.

A smaller image Euclidean distance means higher similarity, so the two real eyes can be found by searching the IMED set \( d_{r}^{2} ,\;r \in \left[ {0,\;Q - 1} \right] \) for the smallest values. Table 1 shows the framework of the proposed eye localization algorithm, and the result of eye localization is shown in Fig. 8.
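The matching step can be sketched as follows. The example assumes the standard IMED form from [13], in which the product of two pixel differences is weighted by a Gaussian of the spatial distance between the two pixel positions and the sum is scaled by \( 1/(2\pi \sigma^{2} ) \). The default `sigma=1.0`, the function names and the choice to return candidate indices are assumptions made for illustration.

```python
import math

def imed_squared(a, b, sigma=1.0):
    """Squared image Euclidean distance between two equally sized grayscale images."""
    h, w = len(a), len(a[0])
    diffs = [(x, y, a[y][x] - b[y][x]) for y in range(h) for x in range(w)]
    diffs = [d for d in diffs if d[2] != 0]  # zero differences contribute nothing
    total = 0.0
    for xi, yi, di in diffs:
        for xj, yj, dj in diffs:
            dist2 = (xi - xj) ** 2 + (yi - yj) ** 2  # squared distance between positions
            total += math.exp(-dist2 / (2.0 * sigma ** 2)) * di * dj
    return total / (2.0 * math.pi * sigma ** 2)

def closest_candidates(template, regions, count=2):
    """Indices of the `count` regions with the smallest IMED to the template."""
    distances = [imed_squared(region, template) for region in regions]
    return sorted(range(len(regions)), key=distances.__getitem__)[:count]

template = [[1.0, 2.0], [3.0, 4.0]]
regions = [
    [[9.0, 9.0], [9.0, 9.0]],  # far from the template
    [[1.0, 2.0], [3.0, 4.0]],  # identical to the template
    [[1.0, 2.0], [3.0, 5.0]],  # differs in one pixel
]
best = closest_candidates(template, regions)
```

The double loop is quadratic in the number of pixels, which is acceptable for small patches; larger images would call for a separable or matrix-based implementation.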

4 Experimental Results

4.1 Assessment Method of Eye Localization Precision

To assess the precision of the proposed method, the most commonly used measure, proposed in [14], is adopted. It is given by

\[ d_{eye} = \frac{{\hbox{max} \left( {d_{l} ,\;d_{r} } \right)}}{{\left\| {C_{l} - C_{r} } \right\|}} \]

where \( C_{l} \) and \( C_{r} \) are the true left and right eye centers, and \( d_{l} \) and \( d_{r} \) are the Euclidean distances between the detected eye centers and the true eye centers.
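A small worked example makes the measure concrete. The sketch assumes the relative error of [14], which is the larger of the two eye-center errors divided by the distance between the true eye centers. The function name and the coordinates are hypothetical.

```python
import math

def relative_eye_error(detected_left, detected_right, true_left, true_right):
    """Worst eye-center error, normalized by the true inter-ocular distance."""
    d_l = math.dist(detected_left, true_left)
    d_r = math.dist(detected_right, true_right)
    return max(d_l, d_r) / math.dist(true_left, true_right)

# True centers are 60 pixels apart; the left detection is off by 5 pixels (3-4-5 triangle).
error = relative_eye_error((103, 104), (160, 100), (100, 100), (160, 100))
```

In this example the value is 5/60. In [14], a localization is commonly counted as correct when this value is below 0.25.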

4.2 Results and Analysis

The proposed method is tested on the JAFFE and CASIA-Iris-Distance databases. The CASIA-Iris-Distance database is from the Center for Biometrics and Security Research of CASIA. It contains 2576 images taken under active infrared (IR) illumination. The subjects in the images have different postures, and some wear glasses.

The experimental results on the JAFFE database are shown in Table 2. The proposed method outperforms the multi-view eye localization method [16] but performs worse than Tang’s method [17]. Tang’s method uses AdaBoost and SVM techniques to obtain excellent performance, but it requires a large amount of computation and complex training, which makes real-time eye localization extremely difficult.

The methods proposed by Zhu [3] and Zhao [18] can be compared with the proposed method because they also exploit active infrared illumination. Table 3 compares the eye localization performance of the proposed method with that of other representative methods. According to Table 3, the proposed method is superior to the other representative methods except the one proposed by Zhao. However, Zhao’s method is sensitive to interference from glasses.

5 Conclusions

A novel method based on binary classifiers and the fast radial symmetry transform is proposed to improve the accuracy of eye localization under active infrared (IR) illumination. It efficiently overcomes interference from eyebrows, fringes, glasses frames and light reflected from glasses, because binary information is considered in addition to geometric structure and intensity information. The experimental results show that the proposed method is superior to most other state-of-the-art methods. Moreover, its complexity is greatly reduced because no training step is required. The proposed method therefore achieves a better tradeoff between eye localization performance and complexity.

References

1. Sachin, G., Chander, K.: Iris recognition: the safest biometrics. Int. J. Eng. Sci. 4, 265–273 (2011)

2. Song, F., Tan, X., Chen, S., Zhou, Z.H.: A literature survey on robust and efficient eye localization in real-life scenarios. Pattern Recogn. 46(12), 3157–3173 (2013)

3. Zhu, Z., Fujimura, K., Ji, Q.: Real-time eye detection and tracking under various light conditions. In: Proceedings of the 2002 Symposium on Eye Tracking Research and Applications, vol. 25, pp. 139–144 (2002)

4. Yuille, A.L., Cohen, D.S., Hallinan, P.W.: Feature extraction from faces using deformable templates. In: Proceedings of IEEE Conference on Computer Vision and Pattern Recognition, pp. 104–109 (1989)

5. Feng, G.C., Yuen, P.C.: Variance projection function and its application to eye detection for human face recognition. Pattern Recogn. Lett. 19(9), 899–906 (1998)

6. Kothari, R., Mitchell, J.L.: Detection of eye locations in unconstrained visual images. In: Proceedings of International Conference on Image Processing, vol. 3, pp. 519–522 (1996)

7. Everingham, M.R., Zisserman, A.: Regression and classification approaches to eye localization in face images. In: Proceedings of IEEE Conference on Automatic Face and Gesture Recognition, pp. 441–448 (2006)

8. Wang, P., Green, M., Ji, Q., Wayman, J.: Automatic eye detection and its validation. In: Proceedings of IEEE Conference on Computer Vision and Pattern Recognition, vol. 3, pp. 164–172 (2005)

9. Cootes, T.F., Taylor, C.J., Cooper, D.H., Graham, J.: Active shape models-their training and application. Comput. Vis. Image Underst. 61(1), 38–59 (1995)

10. Tan, X., Song, F., Zhou, Z.H., Chen, S.: Enhanced pictorial structures for precise eye localization under uncontrolled conditions. In: Proceedings of IEEE Conference on Computer Vision and Pattern Recognition, pp. 1621–1628, 20–25 June 2009

11. Loy, G., Zelinsky, A.: Fast radial symmetry for detecting points of interest. IEEE Trans. PAMI 25(8), 959–973 (2003). IEEE Press

12. Li, W., Wang, Y., Wang, Y.: Eye location via a novel integral projection function and radial symmetry transform. Int. J. Digital Content Technol. Appl. 5(8), 70–80 (2011)

13. Wang, L., Zhang, Y., Feng, J.: On the Euclidean distance of images. IEEE Trans. PAMI 27(8), 1334–1339 (2005). IEEE Press

14. Jesorsky, O., Kirchberg, K., Frischholz, R.: Robust face detection using the Hausdorff distance. In: Proceedings of International Conference on Audio and Video-Based Biometric Person Authentication, pp. 90–95 (2001)

15. Viola, P., Jones, M.: Robust real-time face detection. Int. J. Comput. Vis. 57(2), 137–154 (2004)

16. Fu, Y., Yan, H., Li, J., Xiang, R.: Robust facial features localization on rotation arbitrary multi-view face in complex background. J. Comput. 6(2), 337–342 (2011)

17. Tang, X., Ou, Z., Su, T., Sun, H., Zhao, P.: Robust precise eye location by AdaBoost and SVM techniques. In: Proceedings of International Conference on Advances in Neural Networks, pp. 93–98 (2005)

18. Zhao, S., Grigat, R.: Robust eye detection under active infrared illumination. In: Proceedings of International Conference on Pattern Recognition, vol. 4, pp. 481–484 (2006)

Copyright information

© 2016 Springer International Publishing AG

About this paper

Cite this paper

Qin, P., Gao, J., Li, S., Ma, C., Yi, K., Fernandes, T. (2016). Binary Classifiers and Radial Symmetry Transform for Fast and Accurate Eye Localization. In: You, Z., et al. Biometric Recognition. CCBR 2016. Lecture Notes in Computer Science(), vol 9967. Springer, Cham. https://doi.org/10.1007/978-3-319-46654-5_4

DOI: https://doi.org/10.1007/978-3-319-46654-5_4

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-46653-8

Online ISBN: 978-3-319-46654-5

eBook Packages: Computer Science, Computer Science (R0)