Abstract

Face recognition is a significant and widely applied computer vision task. As specific application scenarios are gradually explored, general face recognition methods that handle only visible light images prove inadequate. Cross-domain face recognition refers to a family of methods for face recognition problems whose inputs may come from multiple modalities, such as visible light images, sketches, near infrared images, 3D data, low-resolution images, and thermal infrared images, or may span different ages, expressions, and ethnicities. Compared with general face recognition, cross-domain face recognition has not been widely explored, and only a few publications discuss the topic systematically. Matching face photographs against images from other modalities, usually called heterogeneous face recognition, involves a larger cross-domain gap and is a harder problem within this topic. This paper surveys heterogeneous face databases, provides an up-to-date review of research efforts, and discusses common problems and related issues in cross-domain face recognition techniques.


1 Introduction

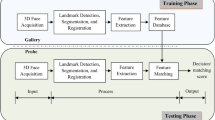

When a person appears before a camera, an automated face recognition system can accurately tell who they are, which moves our society further towards intelligent automation. The rapid growth of requirements in application scenarios such as public security, finance, and personal safety brings big challenges to face recognition. With the development of imaging techniques, image data have become easier to acquire and face images can be collected from many kinds of sources, which has led to research on cross-domain face recognition. This paper mainly discusses heterogeneous face recognition tasks, since they are the more difficult cross-domain problems: sketches are often used to match a suspect in forensic applications; near infrared face images can help eliminate the impact of ambient light; 3D images can model the real three-dimensional structure of a face and capture its surface shape; thermal infrared face recognition is not yet mature but has great potential, and can also be used in medical treatment; and low-resolution recognition is useful for identifying a person in surveillance video.

Face images from different domains follow different statistical distributions, so traditional methods that simply compute the distance between the features of two face images break down. Across domains, the intra-class distance (the same person imaged in two modalities) is often larger than the inter-class distance (different persons imaged in one modality). If features from different modalities are forcibly pulled together, the data space becomes distorted, which leads to over-fitting. Three ideas for reducing cross-modality differences recur throughout the literature: exploring invariant face representations by designing universal face features; synthesizing one modality from the corresponding other modality; and projecting images from multiple domains into a common subspace using subspace learning methods. Because imaging techniques differ, the details of the recognition methods are specific to each heterogeneous face recognition task. The rest of this paper is organized as follows: Sect. 2 introduces the existing heterogeneous face databases that contain both visible light (VIS) images and face images from other modalities, Sect. 3 lists and summarizes established heterogeneous face recognition methods, Sect. 4 compares and analyzes the experimental results, and Sect. 5 discusses challenging problems and future research directions.

2 Database

Heterogeneous face image databases provide intuitive, first-hand information for heterogeneous face recognition systems. They are used both for routine research and as standard test sets. In general, these databases are small in scale, because collecting them requires specialized professional imaging equipment or manual work.

2.1 Sketch-VIS Face Database

Sketch databases were originally built for research on criminal investigation. However, real forensic sketches are hard to collect and their number is limited. To provide more images for research, four kinds of sketch databases are commonly used: forensic sketch databases, in which sketches are drawn by artists from eyewitnesses' descriptions of the suspects as they recall them; viewed sketch databases, in which sketches are drawn by artists while viewing the corresponding digital photos; semi-forensic sketch databases, in which sketches are drawn from the sketch artists' own memory rather than from eyewitness descriptions; and composite sketch databases, in which sketches are assembled from candidate facial components using software kits. Composite sketches are more affordable and easier to acquire than forensic sketches.

- CUHK Face Sketch Database (CUFS) [viewed]

CUFS includes a total of 606 frontal-view face photos and the corresponding sketches drawn by artists. The face images are collected under normal lighting conditions and with a neutral expression.

- CUHK Face Sketch FERET Database (CUFSF) [viewed]

CUFSF includes 1,194 photo-sketch pairs in total, making it the biggest available sketch database. The photos, of 1,194 persons from the FERET database, contain lighting variations, and the sketches are drawn with shape exaggeration by artists viewing the photos.

- IIIT-Delhi Semi-forensic Sketch Database [semi-forensic]

In total, 140 photos from the IIIT-D Viewed Sketch Database are used to compose the semi-forensic sketch database.

- Forensic Sketch Database [forensic]

This database is collected by the Image Analysis and Biometrics Lab. It contains 190 pairs of forensic sketches and digital images.

- PRIP-VSGC Database [composite]

The PRIP-VSGC Database is a software-generated sketch database. It covers 123 subjects from the AR database, each with one digital image and 3 composite images created with two different kits.

2.2 NIR-VIS Face Database

Because the near infrared (NIR) illumination is more intense than the visible light, the gray values of face images captured under NIR illumination depend only on the distance between the subject and the NIR light source, which is an excellent property for face recognition.

- CASIA HFB Database

This database collects face images from 3 modalities: visual, near infrared and three-dimensional (3D) face images. It includes 57 females and 43 males, each with 4 VIS and 4 NIR face images.

- CASIA NIR-VIS 2.0 Database

This is the largest existing NIR-VIS database. It consists of NIR and VIS images from 725 subjects in total, each with 1–22 VIS and 5–50 NIR face images. Two views are defined so that unbiased performance can be reported, and the database comes with standard protocols for performance evaluation and reporting.

2.3 Thermal-VIS Face Database

Thermal infrared images are captured by thermal infrared imaging systems and arise from the specific heat pattern of each individual. Because they do not rely on ambient light, they reduce intra-class variability and help improve the recognition rate. Thermal infrared images can be captured at night and are very useful for crime investigation.

- IRIS Thermal/Visible Face Database (OTCBVS Benchmark Dataset Collection)

This database contains both thermal and visible face images and includes pose, expression and illumination variations. There are 30 identities in the database, and 4,228 pairs of thermal/visible face images are generated.

- Natural Visible and Infrared Facial Expression Database (NVIE Database)

The NVIE database collects images recorded simultaneously by a visible and a thermal infrared camera from more than 100 subjects. Variations in expression, illumination and the wearing of glasses are included.

- PUCV-Visible Thermal-Face (VTF) Database

A total of 12,160 images in both the visible and thermal spectra from 76 individuals are collected in the PUCV-VTF database. Images from the two modalities are acquired simultaneously. There are variations in wearing glasses and in facial expressions such as frown, smile, and vowel.

- Other Thermal Face Recognition Databases

The database collected in [4] has 385 participants and multiple collection sessions. Wilder et al. [5] acquired a thermal database of 101 subjects. The Equinox Corporation [6] collected 3,244 co-registered visible and long-wave infrared (LWIR) face images from 90 individuals. A database acquired simultaneously in the visible, near-infrared and thermal spectra is published in [7]; it supports research on multiple modalities and gives a more general perspective on face recognition.

2.4 3D-2D Face Database

Besides being captured in different spectral bands, faces can also be recorded as measurements of 3D facial shape. 3D face data are robust to illumination and pose variations.

- UHDB11 Database

The UHDB11 database is collected for 3D-2D face recognition and consists of samples from 23 individuals. It is captured under different illumination conditions and head poses, which, together with the 3D-2D modality gap, makes it very challenging.

- Texas 3D Face Recognition Database (Texas 3DFRD)

This database is a collection of 1,149 pairs of high-resolution facial color and range images of 105 human subjects. The images in each pair are captured simultaneously. In addition, facial points are manually located in both the 2D and 3D images, which can help face recognition.

- FRGCv2.0 Database

To the best of our knowledge, FRGCv2.0 is the biggest 3D-2D face database. It consists of 4,007 3D-2D face image pairs from 466 individuals and includes pose and expression variations.

3 Methods

3.1 Sketch-VIS Face Recognition

To address the sketch recognition problem, researchers first proposed photo/sketch synthesis. Once images from different modalities are synthesized into the same modality, traditional face recognition methods can be applied. Face synthesis methods have followed two overall trends: the assumed relation between the two modalities has moved from linear [8] through locally linear [9] to nonlinear [1], and the object of interest has moved from raw pixels [10] to their statistical distribution modeled with subspace methods [11]. Linear methods apply a single global transformation and may not work well if the hair region is included. Patch-based methods were therefore proposed to approximate the nonlinear mapping, but they ignore the spatial relationship between patches. To reduce blurring and aliasing effects, embedded hidden Markov models have been applied to the problem; they approximate the nonlinear relationship well and achieve better performance. Since synthesis is a more complicated task than recognition, some researchers study heterogeneous face recognition methods that omit the synthesis step. Such methods project the two image modalities onto an intermediate representation and perform recognition in this common subspace [12]. Another line of progress lies in the feature extraction stage, where the key is to find modality-invariant features [2]. Intuitively, near infrared face images and sketches share many similarities with ordinary visible face images even though they differ at the pixel level, and both are two-dimensional data. Some methods are therefore applicable to both sketch and near infrared face recognition, and several articles report experiments with their proposed methods on both modalities.
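As a rough illustration of the patch-based synthesis idea, the following sketch synthesizes one sketch patch from a photo patch by blending the sketch patches paired with its nearest training photo patches. It is a simplified stand-in rather than the method of [9]: inverse-distance weights replace the locally linear reconstruction weights, and the function name, the flat-list patch representation and the parameters `k` and `eps` are assumptions made for this example.

```python
def synthesize_sketch_patch(photo_patch, train_photo_patches,
                            train_sketch_patches, k=2, eps=1e-8):
    """Blend the sketch patches paired with the k nearest training photo patches.

    Patches are flat lists of pixel values. train_photo_patches[i] and
    train_sketch_patches[i] are assumed to show the same face region.
    """
    # Squared Euclidean distance from the query to every training photo patch.
    dists = [sum((a - b) ** 2 for a, b in zip(photo_patch, p))
             for p in train_photo_patches]
    # Indices of the k closest training photo patches.
    nearest = sorted(range(len(dists)), key=lambda i: dists[i])[:k]
    # Inverse-distance weights (an assumption; [9] solves for LLE weights).
    weights = [1.0 / (dists[i] + eps) for i in nearest]
    total = sum(weights)
    weights = [w / total for w in weights]
    # Apply the same weights to the paired sketch patches.
    n = len(train_sketch_patches[0])
    return [sum(w * train_sketch_patches[i][d] for w, i in zip(weights, nearest))
            for d in range(n)]
```

Because each patch is synthesized independently, this sketch shares the limitation noted above: it ignores the spatial relationship between neighboring patches.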

3.2 NIR-VIS Face Recognition

Near infrared face images are mainly used to cope with illumination variations [38]. Because the imaging technologies differ, NIR and VIS face images follow inconsistent distributions in the high-dimensional data space, so general face recognition methods are not suitable. The three common approaches to heterogeneous face recognition have all been applied to the NIR-VIS domain. Synthesis-based methods transform one face modality into the other [9, 33]: Tang et al. [33] propose an eigen-transformation method, while Liu et al. [9] reconstruct image patches using LLE. More recently, Juefei-Xu et al. [39] propose an \(\ell_{0}\)-dictionary based approach that reconstructs the corresponding image modality and achieves good performance. Common subspace methods based on LDA and TCA (transfer component analysis) are used in [31, 34]. Modality-invariant features are often based on SIFT or LBP. The development of deep learning has pushed heterogeneous face recognition algorithms further. Several unsupervised deep learning methods have been used for this problem: Ngiam et al. [30] propose a bimodal deep autoencoder based on the denoising autoencoder; a multimodal deep Boltzmann machine (DBM) approach is suggested in [32] to exploit the potential of all layers; and a restricted Boltzmann machine (RBM) method combined with removed PCA features is proposed in [35]. With these methods, the matching accuracy on heterogeneous face images has improved gradually, but it remains far below state-of-the-art VIS face recognition rates. Recently, a unified CNN framework is proposed in [29], which integrates deep representation transfer and the triplet loss to obtain consolidated feature representations for face images in the two modalities. It alleviates the over-fitting of CNNs on small-scale datasets and achieves strong performance on the CASIA NIR-VIS 2.0 Face Database, the largest publicly available NIR-VIS database.
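To make the triplet loss mentioned above concrete, here is a minimal sketch of its generic hinge form on embedding vectors. It is not necessarily the exact formulation of [29]: the squared Euclidean distance, the margin value of 0.2 and the choice of an NIR anchor with VIS positive and negative samples are assumptions made for illustration.

```python
def squared_distance(a, b):
    """Squared Euclidean distance between two embedding vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def triplet_loss(anchor, positive, negative, margin=0.2):
    """Generic triplet hinge loss.

    anchor:   embedding of, e.g., an NIR image of identity A
    positive: embedding of a VIS image of the same identity A
    negative: embedding of a VIS image of a different identity
    The loss is zero once the negative is farther from the anchor than the
    positive by at least `margin` (a hypothetical value here).
    """
    d_pos = squared_distance(anchor, positive)
    d_neg = squared_distance(anchor, negative)
    return max(0.0, d_pos - d_neg + margin)
```

Minimizing this loss over cross-modal triplets pulls same-identity embeddings from the two modalities together while pushing different identities apart, which targets the intra-class versus inter-class distance problem directly.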

3.3 Thermal-VIS Face Recognition

Unlike visible imaging, which receives reflected light, thermal imaging receives emitted radiation, which causes a large cross-modal gap [16]. The emitted radiation is affected by many factors, typically including time lapse, physical exercise and mental tasks, which makes recognition even more challenging. There are already some studies on within-domain thermal infrared face recognition, but few address cross-modal thermal-visible face recognition. The first work on thermal-to-visible face recognition is [13], which uses partial least squares-discriminant analysis (PLS-DA). A mid-wave infrared (MWIR)-to-visible face recognition system consisting of preprocessing, feature extraction (HOG, SIFT, LBP) and similarity matching is proposed in [14]. Klare and Jain [15] propose a nonlinear kernel prototype representation for both thermal and visible light images and use LDA to improve the discriminative capability of the prototype representations. The authors of [13] improve their method by incorporating multi-modal information into the PLS model building stage and by designing different preprocessing and feature extraction stages to reduce the modality gap [16]. Recently, a graphical representation method that employs Markov networks and considers the spatial compatibility between neighboring image patches is proposed in [17]; it achieves excellent performance on multiple heterogeneous face modalities, including the thermal-visible scenario.
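Local descriptors such as LBP appear in several of the feature extraction stages above. The sketch below shows the basic 3x3 LBP operator on a grayscale image stored as a list of rows; the neighbor ordering, the `>=` comparison and the function names are conventions chosen for this example, and practical systems typically use multi-scale or uniform-pattern variants computed per image block.

```python
# Offsets of the 8 neighbours, clockwise starting from the top-left pixel.
NEIGHBOUR_OFFSETS = [(-1, -1), (-1, 0), (-1, 1), (0, 1),
                     (1, 1), (1, 0), (1, -1), (0, -1)]


def lbp_code(image, r, c):
    """Basic 8-bit LBP code of the interior pixel at row r, column c."""
    center = image[r][c]
    code = 0
    for bit, (dr, dc) in enumerate(NEIGHBOUR_OFFSETS):
        # Set the bit when the neighbour is at least as bright as the center.
        if image[r + dr][c + dc] >= center:
            code |= 1 << bit
    return code


def lbp_histogram(image):
    """256-bin histogram of LBP codes over all interior pixels."""
    hist = [0] * 256
    for r in range(1, len(image) - 1):
        for c in range(1, len(image[0]) - 1):
            hist[lbp_code(image, r, c)] += 1
    return hist
```

Since the code depends only on the ordering of gray values, it is unchanged by any monotonically increasing intensity transform, which is one reason such descriptors are attractive when the two modalities differ in overall brightness and contrast.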

3.4 3D-2D Face Recognition

3D images are more robust to illumination and pose variations than 2D images. Face recognition that uses 3D data is more accurate than recognition from visible light images alone, and 3D-2D matching is more practical than 3D-3D matching. Early work focuses on 3D-aided face recognition or 3D image reconstruction, and little work explores cross-modal 3D-2D face recognition. A method based on Partial Principal Component Analysis is used in [18] to extract features in the two modalities and reduce the feature dimension. Kernel Canonical Correlation Analysis (CCA) is employed in [20] to maximize the feature correlation between patches in 2D texture images and 3D depth images. A fusion scheme based on Partial Least Squares (PLS) and CCA is suggested in [21] to further improve performance by learning the correlation mapping between 2D and 3D. Riccio et al. [19] propose to compute geometric invariants from several control points; accurately locating these fiducial points in both the 2D and 3D modalities is itself a challenging problem. Huang et al. [22] present a biological vision-based facial description method, Oriented Gradient Maps (OGMs), which simulates the response of complex neurons to gradient information in a pre-defined neighborhood and thereby describes local texture changes of 2D faces and local shape variations of 3D face models. Recently, a 3D-2D face recognition framework is proposed in [23]. It uses a bidirectional relighting method for non-linear, local illumination normalization and a global orientation-based correlation metric for pairwise similarity scoring, and it generalizes well to diverse illumination conditions.

3.5 High-Low Resolution Face Recognition

HR-LR face recognition is mainly used in video surveillance, where the face images captured in surveillance footage are usually of very low resolution. To match the detected faces against enrolled high-resolution (HR) face images, a large cross-modal gap must be bridged. Existing studies mostly try to improve the recognition rate of a low-resolution (LR) image by reconstructing an HR face, whereas direct matching between faces in the two modalities is less studied. Since reconstruction can help asymmetric face recognition, we briefly introduce super-resolution (SR) reconstruction algorithms. Two classes of methods are usually used [24]: multi-frame SR, which retrieves high-frequency details from a set of images, and single-frame SR, which infers the HR counterpart of a single image using extra information from training samples. For heterogeneous HR-LR face recognition specifically, subspace projection methods, which measure similarity between different resolutions efficiently, play an important role [25, 26]. A recent study [27] proposes a local optimization based coupled mappings algorithm that constrains LR/HR consistency, intra-class compactness and inter-class separability. The most recent work [28] employs coupled kernel-based enhanced discriminant analysis (CKEDA) to maximize the discriminability of the projected common space. Its effectiveness is demonstrated on a public face database, where, as in other HR/LR face experiments, the LR face images are obtained by downsampling.
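The downsampling step used to create LR probes in such experiments can be sketched as follows. This example uses plain block averaging on a grayscale image stored as a list of rows; the averaging filter and the function name are assumptions, since the cited works may use other kernels (for example bicubic interpolation) and may add blur or noise.

```python
def downsample(image, factor):
    """Reduce resolution by averaging non-overlapping factor x factor blocks.

    `image` is a list of equally long rows of gray values. Both dimensions
    must be divisible by `factor`.
    """
    height, width = len(image), len(image[0])
    if height % factor or width % factor:
        raise ValueError("image size must be divisible by the factor")
    low_res = []
    for r in range(0, height, factor):
        row = []
        for c in range(0, width, factor):
            block = [image[r + dr][c + dc]
                     for dr in range(factor) for dc in range(factor)]
            row.append(sum(block) / len(block))
        low_res.append(row)
    return low_res
```

LR images simulated this way are cleaner than real surveillance imagery, so accuracy measured on them may be optimistic compared with deployment conditions.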

4 Results

To evaluate heterogeneous face recognition performance, the rank-1 recognition rate and the verification rate at a given false accept rate are usually computed. Because many factors affect the final results, methods are not comparable if their experiments are run on different datasets or under different experimental protocols. Sketch and near infrared face images have been studied earlier and more extensively, so widely used datasets and standard protocols exist for them; the corresponding recognition results are listed in Tables 1 and 2. The recognition rate for sketch-VIS faces reaches nearly 100% on the CUFS database, but there is still room for improvement in real-world forensic sketch recognition. The highest rank-1 recognition rate and verification rate on the CASIA NIR-VIS 2.0 Face Database, the largest publicly available NIR-VIS database, are achieved by [29], as shown in Table 2. For the other domains mentioned above, cross-method comparison is difficult because the experimental settings are not unified. The general trend is that patch-based methods outperform global methods, non-linear methods outperform linear methods, and combining related methods usually yields higher accuracy.
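The two metrics named above can be computed from a probe-by-gallery similarity matrix roughly as follows. This is a minimal sketch under stated assumptions: higher scores mean greater similarity, the function and argument names are hypothetical, and the threshold rule (accept only scores strictly above a chosen impostor score) is one of several conventions, so standard protocols may differ in detail.

```python
def rank1_rate(scores, probe_ids, gallery_ids):
    """Fraction of probes whose best-scoring gallery entry has the same identity.

    scores[i][j] is the similarity between probe i and gallery image j.
    """
    hits = 0
    for i, row in enumerate(scores):
        best = max(range(len(row)), key=lambda j: row[j])
        if gallery_ids[best] == probe_ids[i]:
            hits += 1
    return hits / len(scores)


def verification_rate_at_far(scores, probe_ids, gallery_ids, far):
    """Share of genuine pairs accepted at a threshold whose FAR is at most `far`."""
    genuine, impostor = [], []
    for i, row in enumerate(scores):
        for j, s in enumerate(row):
            (genuine if probe_ids[i] == gallery_ids[j] else impostor).append(s)
    impostor.sort(reverse=True)
    # At most k impostor pairs may be accepted at the requested FAR.
    k = int(far * len(impostor))
    # Accept scores strictly above the (k+1)-th highest impostor score.
    threshold = impostor[k] if k < len(impostor) else float("-inf")
    accepted = sum(1 for s in genuine if s > threshold)
    return accepted / len(genuine)
```

For example, with three probes and a three-image gallery, `rank1_rate` counts how many probes retrieve their own identity first, while `verification_rate_at_far(..., far=0.001)` would correspond to the commonly reported verification rate at FAR = 0.1%.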

5 Discussion

A good face recognition algorithm should be able to handle face images from different image sources or even different modalities, and should adapt to different scenes. Because heterogeneous modalities and scenes have different characteristics, most existing algorithms handle each kind of data separately, although a few methods try to handle two or three modalities. Humans, however, can recognize an identity quickly whether it appears in a sketch, a near infrared image or a long-distance surveillance scene. Neurologists and psychologists have been studying this phenomenon in order to find the mechanism by which faces are recognized from images that have a low correlation with the phenomenological information. For the problem of limited data, lifelong learning, which can learn from one or two inputs even without labels and retain previously learned knowledge, might be a future solution. The challenges in liveness detection could also be addressed if two or more modalities are combined and well fused for recognition, which deserves further study.

References

1. Wang, X., Tang, X.: Face photo-sketch synthesis and recognition. IEEE Trans. Pattern Anal. Mach. Intell. 31(11), 1955–1967 (2009)

2. Zhang, W., Wang, X., Tang, X.: Coupled information-theoretic encoding for face photo-sketch recognition. In: IEEE Conference on Computer Vision and Pattern Recognition, pp. 513–520 (2011)

3. Li, S., Yi, D., Lei, Z., et al.: The CASIA NIR-VIS 2.0 face database. In: IEEE Conference on Computer Vision and Pattern Recognition Workshops (2013)

4. Socolinsky, D.A., Selinger, A.: Thermal face recognition in an operational scenario. In: IEEE Computer Society Conference on Computer Vision and Pattern Recognition, vol. 2, pp. II-1012–II-1019 (2004)

5. Kevin, X., Bowyer, W.: Visible-light, infrared face recognition. In: Workshop on Multimodal User Authentication, p. 48 (2003)

6. Socolinsky, D.A., Selinger, A.: A comparative analysis of face recognition performance with visible and thermal infrared imagery. Equinox Corp., Baltimore (2002)

7. Espinosa-Duró, V., Faundez-Zanuy, M., Mekyska, J.: A new face database simultaneously acquired in visible, near-infrared and thermal spectrums. Cogn. Comput. 5(1), 119–135 (2013)

8. Tang, X., Wang, X.: Face photo recognition using sketch. In: IEEE International Conference on Image Processing, pp. I-257–I-260 (2002)

9. Liu, Q., Tang, X., Jin, H., et al.: A nonlinear approach for face sketch synthesis and recognition. In: IEEE Computer Society Conference on Computer Vision and Pattern Recognition, vol. 1, pp. 1005–1010 (2005)

10. Uhl Jr., R.G., Lobo, N.D.V., Kwon, Y.H.: Recognizing a facial image from a police sketch. In: IEEE Conference on Applications of Computer Vision Workshop, pp. 129–137 (1994)

11. Li, Y., Savvides, M., Bhagavatula, V.: Illumination tolerant face recognition using a novel face from sketch synthesis approach and advanced correlation filters. In: IEEE Conference on Acoustics, Speech, and Signal Processing, pp. 357–360 (2006)

12. Lei, Z., Liao, S., Jain, A.K., et al.: Coupled discriminant analysis for heterogeneous face recognition. IEEE Trans. Inf. Forensics Secur. 7(6), 1707–1716 (2012)

13. Choi, J., Hu, S., Young, S.S., et al.: Thermal to visible face recognition. In: SPIE Defense, Security, and Sensing. International Society for Optics and Photonics, pp. 83711L–83711L-10 (2012)

14. Bourlai, T., Ross, A., Chen, C., et al.: A study on using mid-wave infrared images for face recognition. In: SPIE Defense, Security, and Sensing. International Society for Optics and Photonics, pp. 83711K–83711K-13 (2012)

15. Klare, B.F., Jain, A.K.: Heterogeneous face recognition using kernel prototype similarities. IEEE Trans. Pattern Anal. Mach. Intell. 35(6), 1410–1422 (2013)

16. Hu, S., Choi, J., Chan, A.L., et al.: Thermal-to-visible face recognition using partial least squares. JOSA A 32(3), 431–442 (2015)

17. Peng, C., Gao, X., Wang, N., et al.: Graphical representation for heterogeneous face recognition. arXiv preprint arXiv:1503.00488 (2015)

18. Rama, A., Tarres, F., Onofrio, D., et al.: Mixed 2D-3D information for pose estimation and face recognition. In: IEEE Conference on Acoustics, Speech and Signal Processing, vol. 2, pp. II-211–II-217 (2006)

19. Riccio, D., Dugelay, J.L.: Geometric invariants for 2D/3D face recognition. Pattern Recogn. Lett. 28(14), 1907–1914 (2007)

20. Yang, W., Yi, D., Lei, Z., et al.: 2D3D face matching using CCA. In: IEEE Conference on Automatic Face & Gesture Recognition, pp. 1–6 (2008)

21. Wang, X., Ly, V., Guo, G., et al.: A new approach for 2D–3D heterogeneous face recognition. In: IEEE International Symposium on Multimedia, pp. 301–304 (2013)

22. Huang, D., Ardabilian, M., Wang, Y., et al.: Oriented gradient maps based automatic asymmetric 3D-2D face recognition. In: International Conference on Biometrics, pp. 125–131 (2012)

23. Kakadiaris, I.A., Toderici, G., Evangelopoulos, G., et al.: 3D–2D face recognition with pose and illumination normalization. Comput. Vis. Image Underst. (2016)

24. Zhang, Q., Zhou, F., Yang, F., et al.: Face super-resolution via semi-kernel partial least squares and dictionaries coding. In: IEEE Conference on Digital Signal Processing, pp. 590–594 (2015)

25. Li, B., Chang, H., Shan, S., et al.: Low-resolution face recognition via coupled locality preserving mappings. IEEE Sig. Process. Lett. 17(1), 20–23 (2010)

26. Biswas, S., Bowyer, K.W., Flynn, P.J.: Multidimensional scaling for matching low-resolution face images. IEEE Trans. Pattern Anal. Mach. Intell. 34(10), 2019–2030 (2012)

27. Shi, J., Qi, C.: From local geometry to global structure: learning latent subspace for low-resolution face image recognition. IEEE Sig. Process. Lett. 22(5), 554–558 (2015)

28. Wang, X., Hu, H., Gu, J.: Pose robust low-resolution face recognition via coupled kernel-based enhanced discriminant analysis. IEEE/CAA J. Autom. Sin. 3(2), 203–212 (2016)

29. Liu, X., Song, L., Wu, X., Tan, T.: Transferring deep representation for NIR-VIS heterogeneous face recognition. In: International Conference on Biometrics (2016)

30. Ngiam, J., Khosla, A., Kim, M., et al.: Multimodal deep learning. In: International Conference on Machine Learning, pp. 689–696 (2011)

31. Pan, S.J., Tsang, I.W., Kwok, J.T., et al.: Domain adaptation via transfer component analysis. IEEE Trans. Neural Netw. 22(2), 199–210 (2011)

32. Srivastava, N., Salakhutdinov, R.R.: Multimodal learning with deep Boltzmann machines. In: Advances in Neural Information Processing Systems, pp. 2222–2230 (2012)

33. Tang, X., Wang, X.: Face sketch synthesis and recognition. In: IEEE Conference on Computer Vision, pp. 687–694 (2003)

34. Wang, R., Yang, J., Yi, D., Li, S.Z.: An analysis-by-synthesis method for heterogeneous face biometrics. In: Tistarelli, M., Nixon, M.S. (eds.) ICB 2009. LNCS, vol. 5558, pp. 319–326. Springer, Heidelberg (2009). doi:10.1007/978-3-642-01793-3_33

35. Yi, D., Lei, Z., Li, S.Z.: Shared representation learning for heterogeneous face recognition. In: IEEE Conference and Workshops on Automatic Face and Gesture Recognition, vol. 1, pp. 1–7 (2015)

36. Dhamecha, T.I., Sharma, P., Singh, R., et al.: On effectiveness of histogram of oriented gradient features for visible to near infrared face matching. In: International Conference on Pattern Recognition, pp. 1788–1793 (2014)

37. Klare, B.F., Li, Z., Jain, A.K.: Matching forensic sketches to mug shot photos. IEEE Trans. Pattern Anal. Mach. Intell. 33(3), 639–646 (2011)

38. Li, S.Z., Zhang, L., Liao, S.C., et al.: A near-infrared image based face recognition system. In: FG, pp. 455–460 (2006)

39. Juefei-Xu, F., Pal, D., Savvides, M.: NIR-VIS heterogeneous face recognition via cross-spectral joint dictionary learning and reconstruction. In: IEEE Conference on Computer Vision and Pattern Recognition Workshops, pp. 141–150 (2015)

Acknowledgments

This work is supported by the Youth Innovation Promotion Association of the Chinese Academy of Sciences (CAS) (Grant No. 2015190), the National Natural Science Foundation of China (Grant No. 61473289) and the Strategic Priority Research Program of the Chinese Academy of Sciences (Grant No. XDB02070000).


Copyright information

© 2016 Springer International Publishing AG

About this paper

Cite this paper

Liu, X., Sun, X., He, R., Tan, T. (2016). Recent Advances on Cross-Domain Face Recognition. In: You, Z., et al. Biometric Recognition. CCBR 2016. Lecture Notes in Computer Science, vol 9967. Springer, Cham. https://doi.org/10.1007/978-3-319-46654-5_17


DOI: https://doi.org/10.1007/978-3-319-46654-5_17


Publisher Name: Springer, Cham

Print ISBN: 978-3-319-46653-8

Online ISBN: 978-3-319-46654-5

eBook Packages: Computer Science, Computer Science (R0)