Abstract

In previous work, we have developed illustrative neurocomputational models to describe mechanisms associated with mental processes. In these efforts, we have considered mental processes in phenomena such as neurosis, creativity, consciousness/unconsciousness, and some characteristics of the psychoses. Memory associativity is a key feature in the theoretical description of these phenomena, and much of our work has focused on modeling this mechanism. In traditional neural network models of memory, the symmetry of synaptic connections is a necessary condition for reaching stationary states. The assumption of symmetric weights, however, seems biologically unrealistic. Efforts to model stationary network states with asymmetric weights are mathematically complex and usually apply only to restricted situations. This has motivated us to explore a new approach to the synaptic symmetry problem, based on its analogies with some features of the nonlinear Fokker-Planck formalism.

1 Introduction

Much of our previous work [1–3] concerns the search for neuronal network mechanisms whose emergent states underlie behavioral aspects traditionally studied by psychiatry, psychoanalysis and neuroscience [4–9]. A working hypothesis in neuroscience is that human memory is encoded in the neural networks of the brain, and associativity is frequently used to describe mental processes, in both normal and pathological functioning. Neuronal models of associative memory [10] have therefore formed a central component of our descriptions.

In traditional neural network models of memory, such as the Hopfield model [10], the symmetry of synaptic connections is a necessary mathematical requirement for reaching stationary states (memory) [10, 11]. This is the case both when using the Boltzmann Machine (BM) procedure and when employing more recent approaches based on the Generalized Simulated Annealing (GSA) algorithm [12]. Real biological neural networks, however, do not seem to comply with the synaptic symmetry condition. We thus face the curious situation that the main mathematical-mechanistic neural models of memory rest on an assumption that is at odds with biological reality. There have been efforts to model stationary memory attractor states with asymmetric weights, but they are mathematically complex and usually applicable only to restricted situations [13, 14]. In spite of these interesting attempts, and even though memory modeling with neural networks has been an active field of research for decades, the (a)symmetry issue remains a largely unexplored (and almost forgotten) open problem. This calls for alternative approaches to the subject. Our main aim in the present exploratory work is to point out basic similarities between the synaptic symmetry problem and some aspects of nonlinear Fokker-Planck (NLFP) dynamics. These connections may suggest a new way to address the symmetry problem in neural networks. Here we take the first steps toward a formalism based on the nonlinear Fokker-Planck equation, which we summarize in this paper along with some preliminary results. A more detailed discussion is being prepared for an extended publication.

In previous work, we have used the Boltzmann Machine [10] and Generalized Simulated Annealing [12] to simulate memory. In the BM and GSA, pattern retrieval on the net is achieved by a simulated annealing (SA) process, in which the temperature T is gradually lowered according to an annealing schedule \(\alpha \). For a BM or GSA network with N nodes, where each node i has a discrete state \(S_i \in \{-1, 1\}\), a necessary condition for the network to have stable states is that the synaptic weights between nodes i and j obey \(w_{ij} = w_{ji}\). One can then define an energy function, representing the potential energy of the interactions between neurons,

\[ E = -\frac{1}{2} \sum_{i=1}^{N} \sum_{j=1}^{N} w_{ij} S_i S_j , \tag{1} \]

and stored memories correspond to minimum-energy (stable) states, which are attractors of the memory retrieval mechanism (the SA process).
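A minimal sketch of Eq. (1), assuming Python with NumPy and a Hebbian storage rule \(w_{ij} = \frac{1}{N}\sum_\mu \xi_i^\mu \xi_j^\mu\) with \(w_{ii} = 0\) (our choice for illustration; no storage rule is specified here). It checks that the resulting weights are symmetric and that each stored pattern is a minimum of E with respect to single-unit flips. The helper names and the two example patterns are hypothetical.

```python
import numpy as np

def hebbian_weights(patterns):
    """Hypothetical storage rule: w_ij = (1/N) sum_mu xi_i^mu xi_j^mu, with w_ii = 0."""
    p = np.asarray(patterns, dtype=float)
    w = p.T @ p / p.shape[1]
    np.fill_diagonal(w, 0.0)
    return w

def energy(w, s):
    """Energy of Eq. (1): E = -1/2 sum_ij w_ij S_i S_j."""
    s = np.asarray(s, dtype=float)
    return -0.5 * s @ w @ s

def is_single_flip_minimum(w, s):
    """True if no single flip S_i -> -S_i lowers the energy."""
    e0 = energy(w, s)
    for i in range(len(s)):
        t = np.array(s, dtype=float)
        t[i] = -t[i]
        if energy(w, t) < e0:
            return False
    return True

patterns = np.array([[1, 1, 1, 1, -1, -1, -1, -1],
                     [1, -1, 1, -1, 1, -1, 1, -1]])
w = hebbian_weights(patterns)
print(np.allclose(w, w.T))                               # → True
print([is_single_flip_minimum(w, s) for s in patterns])  # → [True, True]
```

Because the Hebbian outer-product rule is symmetric by construction, it automatically satisfies the \(w_{ij} = w_{ji}\) condition discussed above.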

In the SA process, the energy surface is sampled according to the following transition probabilities. For the Boltzmann Machine (BM)

and for the Generalized Simulated Annealing or Tsallis Machine (GSA) [12]

These transition probabilities tend to take the system from a current state towards a final, more favorable minimum energy state (although energy may increase at intermediate steps).
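The following sketch of SA retrieval assumes, for the BM, the standard heat-bath flip probability \(1/[1 + \exp(\Delta E_i/T)]\), with \(\Delta E_i = 2 S_i \sum_j w_{ij} S_j\) (valid for \(w_{ii} = 0\)), and a geometric schedule \(T \leftarrow \alpha T\); the GSA rule of [12] is not reproduced. A final zero-temperature sweep, flipping only units whose flip strictly lowers E, takes the state to a local minimum. Function names, temperatures and patterns are illustrative assumptions.

```python
import numpy as np

def hebbian_weights(patterns):
    """Hypothetical symmetric Hebbian weights with zero self-coupling."""
    p = np.asarray(patterns, dtype=float)
    w = p.T @ p / p.shape[1]
    np.fill_diagonal(w, 0.0)
    return w

def anneal(w, s0, t0=1.0, alpha=0.9, t_min=0.05, sweeps_per_t=5, seed=0):
    """Heat-bath simulated annealing followed by a zero-temperature quench."""
    rng = np.random.default_rng(seed)
    s = np.array(s0, dtype=float)
    t = t0
    while t > t_min:
        for _ in range(sweeps_per_t):
            for i in rng.permutation(len(s)):
                d_e = 2.0 * s[i] * (w[i] @ s)  # energy change if S_i flips
                # clip the exponent to avoid overflow at low temperature
                p_flip = 1.0 / (1.0 + np.exp(np.clip(d_e / t, -50.0, 50.0)))
                if rng.random() < p_flip:
                    s[i] = -s[i]
        t *= alpha  # geometric annealing schedule T <- alpha * T
    # T -> 0 limit: flip only units whose flip strictly lowers the energy
    changed = True
    while changed:
        changed = False
        for i in range(len(s)):
            if s[i] * (w[i] @ s) < 0:
                s[i] = -s[i]
                changed = True
    return s

patterns = np.array([[1, 1, 1, 1, -1, -1, -1, -1],
                     [1, -1, 1, -1, 1, -1, 1, -1]])
w = hebbian_weights(patterns)
probe = patterns[0].copy()
probe[0] = -probe[0]  # corrupt one bit of the first pattern
recalled = anneal(w, probe)
print(recalled)
```

For these two orthogonal patterns, the only states that no single flip can lower in energy are the stored patterns and their sign-reversed copies, so the recalled state is one of those four.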

In Sect. 2, we briefly review neural network models in the framework of dynamical systems theory. In Sect. 3, we introduce basic aspects of the Fokker-Planck formalism, and in Sect. 4 we turn to the nonlinear Fokker-Planck equation. In Subsect. 4.2, we show that it is possible to introduce a drift or force term not arising from the gradient of a potential, which is related to asymmetric couplings, and still obtain stationary states for the probability density function in the phase space describing the system. We also mention further developments and present our conclusions in the last section.

2 Dynamical Systems and Neural Networks

For a continuous deterministic dynamical system with phase space variables \( \{ X_1, X_2, \cdots , X_N\} \), considering that there is no noise, the equations of motion can be expressed as

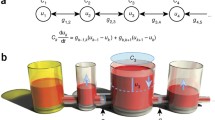

which in self-explanatory vector notation is expressed as \( \frac{d\mathbf X}{dt}\,=\,\mathbf{G}(\mathbf{X})\), with \(\varvec{X}, \varvec{G} \in \mathfrak {R}^N \). That is, the time evolution of the system’s state \(\varvec{X} \) is described by a phase space flux given by the vectorial field \(\varvec{G}\). Neural networks have been widely studied within this framework [10]. In neural network models, the synaptic weight \(w_{ij}\) expresses the intensity of the influence of neuron j on neuron i (the coupling). So the net signal input to neuron i is given by

where \( V_{O_j}\) is the output signal of neuron j.

It is possible to generalize the McCulloch-Pitts (discrete activation) neural model to continuous state variables [10, 11], so that \(V_{O_i}\) (in equilibrium) is given by a continuous function of \(u_i\),

\[ V_{O_i} = g(u_i). \tag{6} \]

In Eq. (6), the activation function g(u) is usually nonlinear and saturates for large values of |u|, as in a sigmoid or \(\tanh (u)\). One possible continuous-time rule for updating the \(V_{O_i}\) [11, 15] is the set of differential equations

\[ \tau_i \frac{dV_{O_i}}{dt} = -V_{O_i} + g(u_i) = -V_{O_i} + g\Big( \sum_j w_{ij} V_{O_j} \Big), \tag{7} \]

where \(\tau _i\) are suitable time constants.
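A forward-Euler sketch of Eq. (7) with \(g = \tanh\), assuming \(\tau_i = 1\), a symmetric two-neuron weight matrix and a step size chosen only for illustration. With these weights the state settles on a fixed point \(V^* = g(W V^*)\), which the residual printed at the end confirms.

```python
import numpy as np

def simulate(w, v0, tau=1.0, dt=0.01, t_end=50.0, g=np.tanh):
    """Forward-Euler integration of tau * dV_i/dt = -V_i + g(sum_j w_ij V_j)."""
    v = np.array(v0, dtype=float)
    for _ in range(int(round(t_end / dt))):
        u = w @ v  # net inputs u_i, as in Eq. (5)
        v += (dt / tau) * (-v + g(u))
    return v

# Hypothetical symmetric couplings (w_12 = w_21)
w_sym = np.array([[0.0, 1.5],
                  [1.5, 0.0]])
v_final = simulate(w_sym, [0.3, 0.1])
residual = np.max(np.abs(-v_final + np.tanh(w_sym @ v_final)))
print(v_final, residual)
```

The fixed points of the Euler map coincide with those of Eq. (7), so a vanishing residual indicates that the integration has reached a stationary state of the continuous dynamics.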

In traditional neural network models of memory, such as the Hopfield model, BM and GSA, \(w_{ij} = w_{ji}\) is a necessary condition for reaching stationary states (memory). This symmetry restriction seems to be biologically unrealistic. In this contribution we comment on the similarities between the synaptic symmetry problem and some features of the nonlinear Fokker-Planck dynamics, which may shed new light on this problem and suggest possible ways to tackle it.

3 Fokker-Planck Equation

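We now consider an ensemble of identical systems, each consisting of N elements, that evolve from different initial conditions. This ensemble is described by a time-dependent probability density in phase space \({\mathcal P}(X_1, \cdots , X_N, t)\) obeying the Liouville equation

\[ \frac{\partial {\mathcal P}}{\partial t} = -\sum_{i=1}^{N} \frac{\partial}{\partial X_i} \left( {\mathcal P}\, G_i \right). \tag{8} \]

If the system presents noisy behavior, a diffusion-like term must be added to Eq. (8), which results in the Fokker-Planck equation (FPE)

\[ \frac{\partial {\mathcal P}}{\partial t} = D \sum_{i=1}^{N} \frac{\partial^2 {\mathcal P}}{\partial X_i^2} - \sum_{i=1}^{N} \frac{\partial}{\partial X_i} \left( {\mathcal P}\, G_i \right), \tag{9} \]

where D is the diffusion coefficient and the second term on the right, involving the field \(\varvec{G}\), is referred to as the drift term. We shall call \(\varvec{G}\) the drift field. If

\[ \varvec{G} = -\nabla V, \quad \text{i.e.,} \quad G_i = -\frac{\partial V}{\partial X_i}, \tag{10} \]

for some potential function \(V(\varvec{X})\), there is a Boltzmann-Gibbs-like stationary solution to Eq. (9),

\[ {\mathcal P}_{BG}(\varvec{X}) = \frac{1}{Z} \exp \left[ -\frac{V(\varvec{X})}{D} \right], \tag{11} \]

where Z is an appropriate normalization constant. That is, \({\mathcal P}_{BG}\) satisfies (9) with \( \frac{\partial {\mathcal P}_{BG}}{\partial t} = 0 \). The distribution \({\mathcal P}_{BG}\) maximizes the Boltzmann-Gibbs entropy \(S_{BG}\) under the constraints of normalization and of the mean value \(\langle V \rangle \) of the potential V.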
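Assuming the diffusion term of the FPE is written as \(D\nabla^2{\mathcal P}\), the FPE with drift field G describes the density of the Langevin dynamics \(dX = G(X)\,dt + \sqrt{2D}\,dW\). The sketch below, assuming NumPy, a one-dimensional double-well potential \(V(x) = x^4/4 - x^2/2\) and D = 0.5 (both illustrative choices), evolves many independent walkers with the Euler-Maruyama scheme and compares their sampled second moment with the one computed from the Boltzmann-Gibbs density \(\exp(-V/D)/Z\).

```python
import numpy as np

D = 0.5  # illustrative diffusion coefficient

def V(x):
    """Illustrative double-well potential (an assumption, not from the paper)."""
    return 0.25 * x**4 - 0.5 * x**2

def G(x):
    """Gradient drift field G = -dV/dx."""
    return -(x**3 - x)

rng = np.random.default_rng(1)
n_walkers, dt, n_steps = 20_000, 0.005, 4_000
x = np.zeros(n_walkers)
for _ in range(n_steps):
    # Euler-Maruyama step of dX = G(X) dt + sqrt(2 D) dW
    x += G(x) * dt + np.sqrt(2.0 * D * dt) * rng.standard_normal(n_walkers)

grid = np.linspace(-4.0, 4.0, 4001)
p_bg = np.exp(-V(grid) / D)  # unnormalized BG density; Z cancels in the ratio
second_moment_bg = np.sum(grid**2 * p_bg) / np.sum(p_bg)
second_moment_sim = np.mean(x**2)
print(second_moment_bg, second_moment_sim)
```

Agreement holds up to sampling noise and the small time-step bias of the Euler-Maruyama scheme.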

Note that a dynamical system with a phase space flux of the form (10) (gradient form) evolves so as to minimize V, i.e. downhill along the potential energy surface. For a field \(\varvec{G} \) of the form (10), the equality of the mixed second derivatives of V gives

\[ \frac{\partial G_i}{\partial X_j} = \frac{\partial G_j}{\partial X_i}, \qquad i, j = 1, \cdots , N. \tag{12} \]

In the case of a Hopfield neural network with linear activation g(u) in Eq. (7), \(G_i \propto \sum \limits _j w_{ij} X_j \) (corresponding to linear forces), so that, for equal time constants \(\tau_i\),

\[ \frac{\partial G_i}{\partial X_j} \propto w_{ij}, \qquad i \neq j, \tag{13} \]

and therefore, by Eq. (12), \(w_{ij} = w_{ji}\). We see that the general condition (12), which guarantees that the Fokker-Planck dynamics evolves towards a stationary Boltzmann-Gibbs distribution (11), is very similar to the synaptic symmetry requirement necessary for a neural network to evolve towards minima of an energy surface. This similarity is, of course, also closely related to the fact that the simulated annealing technique provides a useful algorithm for finding the minima of the network's energy landscape. In the Fokker-Planck case, however, it is possible to relax condition (12) by considering more general drift fields, and still have dynamics that lead to a stationary Boltzmann-Gibbs distribution. This suggests that the Fokker-Planck scenario with non-gradient drift fields may be relevant to the synaptic symmetry problem. In the following sections we explore some basic aspects of this scenario, within the more general context of the nonlinear Fokker-Planck equation.
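Condition (12) can be tested numerically by checking whether the Jacobian \(\partial G_i/\partial X_j\) is symmetric. The sketch below assumes linear activation in Eq. (7), so that \(\varvec{G}(\varvec{X}) = (-\varvec{X} + W\varvec{X})/\tau\); the weight matrices and helper names are hypothetical. Since the test is evaluated at a single point, it is only a necessary check: a field is of gradient form when (12) holds throughout a simply connected domain.

```python
import numpy as np

def jacobian(field, x, eps=1e-6):
    """Central finite-difference Jacobian J[i, j] = dG_i/dX_j at point x."""
    x = np.asarray(x, dtype=float)
    n = x.size
    jac = np.empty((n, n))
    for j in range(n):
        step = np.zeros(n)
        step[j] = eps
        jac[:, j] = (field(x + step) - field(x - step)) / (2.0 * eps)
    return jac

def is_gradient_like(field, x, tol=1e-6):
    """Checks the symmetry condition dG_i/dX_j = dG_j/dX_i at one point."""
    jac = jacobian(field, x)
    return np.allclose(jac, jac.T, atol=tol)

tau = 1.0
w_sym = np.array([[0.0, 0.8], [0.8, 0.0]])
w_asym = np.array([[0.0, 0.8], [-0.3, 0.0]])

def drift(w):
    # Linear-activation version of Eq. (7): G(X) = (-X + W X) / tau
    return lambda x: (-x + w @ x) / tau

x0 = np.array([0.2, -0.4])
print(is_gradient_like(drift(w_sym), x0), is_gradient_like(drift(w_asym), x0))  # → True False
```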

4 Nonlinear Fokker-Planck Equation

In [16], Ribeiro, Nobre and Curado state: “The linear differential equations in physics are, in many cases, valid for media characterized by specific conditions, like homogeneity, isotropy, and translational invariance, with particles interacting through short-range forces and with a dynamical behavior characterized by short-time memories”. It is possible to introduce a nonlinear diffusion term to the FPE to describe a physical ensemble of interacting particles, so that the nonlinearity is an effective description of the interactions [16–20]. Physical systems characterized by spatial disorder and/or long-range interactions seem to be natural candidates for this formalism, which has recently attracted considerable attention from the complex systems research community.

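We thus use the nonlinear Fokker-Planck (NLFP) equation

\[ \frac{\partial {\mathcal P}}{\partial t} = D \sum_{i=1}^{N} \frac{\partial^2}{\partial X_i^2} \left[ {\mathcal P}^{2-q} \right] - \sum_{i=1}^{N} \frac{\partial}{\partial X_i} \left( {\mathcal P}\, G_i \right), \tag{14} \]

where q is a real parameter, to study systems that may deviate from the linear description; for q = 1, Eq. (14) reduces to the linear FPE (9). Since we need to model stable properties of interesting physical systems, such as the stored memory states in a neural network, we search for possible stationary solutions to Eq. (14).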

4.1 Stationary Solution - \(\varvec{G}\) of Gradient Form

In the most frequently studied case, where the field \(\varvec{G}\) is of the gradient form (10), the stationary solution of the NLFP equation is found by solving

\[ D \sum_{i=1}^{N} \frac{\partial^2}{\partial X_i^2} \left[ {\mathcal P}^{2-q} \right] - \sum_{i=1}^{N} \frac{\partial}{\partial X_i} \left( {\mathcal P}\, G_i \right) = 0, \tag{15} \]

considering the Tsallis ansatz [20]

\[ {\mathcal P}_q(\varvec{X}) = A \left[ 1 - (1-q)\, \beta\, V(\varvec{X}) \right]^{\frac{1}{1-q}}, \tag{16} \]

where A and \(\beta \) are constants to be determined. One finds that the ansatz given by Eq. (16) is a stationary solution of the NLFP equation if

\[ (2-q)\, D\, A^{1-q}\, \beta = 1. \tag{17} \]

We call Eq. (16) the q-exponential ansatz. As already mentioned, it constitutes a stationary solution of the NLFP equation when \(\varvec{G} \) is minus the gradient of a potential V (Eq. (10)) and A and \(\beta \) satisfy Eq. (17). The distribution \({\mathcal P}_{q}\) is also called a q-maxent distribution because it optimizes the nonextensive q-entropy \(S_{q}\) under the constraints of normalization and the mean value of the potential V [17, 20]. In the limit \(q \rightarrow 1\), the q-maxent stationary distribution (16) reduces to the Boltzmann-Gibbs one (11), with \(\beta = 1/D\).
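A one-dimensional check of the q-exponential ansatz, assuming the NLFP is written as \(\partial {\mathcal P}/\partial t = D\,\partial^2 [{\mathcal P}^{2-q}]/\partial X^2 - \partial ({\mathcal P} G)/\partial X\) and \({\mathcal P}_q = A[1-(1-q)\beta V]^{1/(1-q)}\). For this form, the stationary probability current vanishes when \((2-q) D A^{1-q}\beta = 1\). The harmonic potential and the values of D, q and A are illustrative; for simplicity A = 1 and the density is left unnormalized, since the zero-current test only needs the relation between A and \(\beta\).

```python
import numpy as np

def q_exp(x, q):
    """Tsallis q-exponential [1 + (1-q) x]_+^(1/(1-q)); equals exp(x) for q = 1."""
    if np.isclose(q, 1.0):
        return np.exp(x)
    return np.maximum(1.0 + (1.0 - q) * x, 0.0) ** (1.0 / (1.0 - q))

# Illustrative values (assumptions, not taken from the paper)
D, q, A = 0.5, 0.7, 1.0
beta_star = 1.0 / ((2.0 - q) * D * A ** (1.0 - q))  # from (2 - q) D A^(1-q) beta = 1

x = np.linspace(-2.0, 2.0, 2001)
V = 0.5 * x**2  # harmonic potential
G = -x          # gradient drift G = -dV/dx

def max_current(beta):
    """Largest |D d/dx[P^(2-q)] - G P| on the grid for the q-exponential ansatz."""
    P = A * q_exp(-beta * V, q)
    current = D * np.gradient(P ** (2.0 - q), x) - G * P
    return np.max(np.abs(current))

print(max_current(beta_star))        # small: only finite-difference error
print(max_current(1.2 * beta_star))  # clearly nonzero: ansatz is not stationary
```

Changing \(\beta\) away from the value fixed by the stationarity relation produces a nonzero current, which shows that the relation, and not merely the q-exponential shape, is what makes the ansatz stationary.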

4.2 Stationary Solution - \(\varvec{G}\) Not of the Gradient Form

Now we consider the NLFP equation with a drift term not arising from the gradient of a potential, of the form

\[ \varvec{G} = \varvec{F} + \varvec{E}, \tag{18} \]

where \(\varvec{F}\) is minus the gradient of some potential \(V(\varvec{X})\), while \(\varvec{E}\) does not come from a potential function (that is, \(\partial E_i /\partial X_j \ne \partial E_j /\partial X_i\) for some i, j). We then substitute this \(\varvec{G}\) and \({\mathcal P}_q\) (Eq. (16)) into the stationary NLFP Eq. (15) and obtain

\[ D \sum_{i=1}^{N} \frac{\partial^2}{\partial X_i^2} \left[ {\mathcal P}_q^{2-q} \right] - \sum_{i=1}^{N} \frac{\partial}{\partial X_i} \left( {\mathcal P}_q F_i \right) - \sum_{i=1}^{N} \frac{\partial}{\partial X_i} \left( {\mathcal P}_q E_i \right) = 0. \tag{19} \]

The sum of the first two terms in Eq. (19) vanishes, because we know that \({\mathcal P}_q\) is a stationary solution of Eq. (15) when only the gradient field \(\varvec{F}\) is present. For \({\mathcal P}_q\) to satisfy (19), we then require \( \sum _{i=1}^N \partial ( {\mathcal P}_q E_i )/\partial X_i = 0\). If this relation is satisfied, then \({\mathcal P}_q\) is also a stationary solution of the full NLFP equation, including the non-gradient term corresponding to \(\varvec{E}\). Evaluating \(\partial {\mathcal P}_q/\partial X_i\) from Eq. (16), we therefore require, within the support of \({\mathcal P}_q\),

\[ \left[ 1 - (1-q)\, \beta\, V \right] \sum_{i=1}^{N} \frac{\partial E_i}{\partial X_i} - \beta \sum_{i=1}^{N} E_i \frac{\partial V}{\partial X_i} = 0. \tag{20} \]

This equation constitutes a consistency requirement that the potential function V, the non-gradient field \(\varvec{E}\), the inverse temperature \(\beta \), and the entropic parameter q have to satisfy in order for the nonlinear Fokker-Planck equation to admit a stationary solution of the q-maxent form. In the most general \(\beta \)-dependent situation, the condition given by Eq. (20) leads to a rather complicated relation between the non-gradient component \(\varvec{E}\) and the potential V. However, there are cases where a \(\beta \)-independent set of constraints can be obtained. We illustrate this with a two-dimensional example. Consider two-dimensional fields \(\varvec{F}\) and \(\varvec{E}\) with components of the form,

with the \(w_{ij}\) and \(h_{ij}\) constant real parameters. The field F is minus the gradient of the potential

It can be verified after some algebra that the q-maxent distribution (16) is a stationary solution of the NLFP equation, if the parameters characterizing the potential V and the (non-gradient) drift term E satisfy,

We thus obtain a family of noisy dynamical systems (described by NLFP equations), characterized by 3 independent parameters, that have q-maxent stationary solutions even though their drift fields do not necessarily arise from a potential. This can be seen from the fact that the constraints (23) are compatible with \(h_{12} \ne h_{21}\). Therefore, the drift field given by Eq. (21) need not comply with the symmetry restriction described by Eq. (12), which is akin to the standard symmetry condition in neural networks.
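The sketch below checks numerically, on a grid, that a q-exponential can be stationary for a two-dimensional linear drift with a non-gradient part. It assumes the NLFP form with diffusion term \(D\,\nabla^2[{\mathcal P}^{2-q}]\), the ansatz \({\mathcal P}_q = A[1-(1-q)\beta V]^{1/(1-q)}\) with \((2-q)DA^{1-q}\beta = 1\), a quadratic potential \(V = (w_{11}X_1^2 + 2w_{12}X_1X_2 + w_{22}X_2^2)/2\), and the particular choice \(\varvec{E} = c\,(\partial V/\partial X_2, -\partial V/\partial X_1)\). This E is divergence-free and orthogonal to \(\nabla V\), and since \(\nabla {\mathcal P}_q\) is parallel to \(\nabla V\), \(\sum_i \partial({\mathcal P}_q E_i)/\partial X_i\) vanishes for every \(\beta\). This family is our illustrative construction and is not claimed to coincide with the constraints (23); all numerical values are hypothetical. A second non-gradient field, not tangent to the level sets of V, is included for contrast.

```python
import numpy as np

# Illustrative parameters (assumptions): diffusion D, entropic index q, amplitude A
D, q, A = 0.5, 0.8, 1.0
beta = 1.0 / ((2.0 - q) * D * A ** (1.0 - q))  # stationarity relation for the ansatz

# Quadratic potential V = (w11 x^2 + 2 w12 x y + w22 y^2) / 2, with F = -grad V
w11, w12, w22 = 1.0, 0.3, 0.6
xs = np.linspace(-1.5, 1.5, 401)
dx = xs[1] - xs[0]
X, Y = np.meshgrid(xs, xs, indexing="ij")
V = 0.5 * (w11 * X**2 + 2.0 * w12 * X * Y + w22 * Y**2)
F1, F2 = -(w11 * X + w12 * Y), -(w12 * X + w22 * Y)

# q-exponential ansatz P_q = A [1 - (1-q) beta V]_+^{1/(1-q)}
P = A * np.maximum(1.0 - (1.0 - q) * beta * V, 0.0) ** (1.0 / (1.0 - q))

def div(a, b):
    """Finite-difference divergence of the 2D field (a, b)."""
    return np.gradient(a, dx, axis=0) + np.gradient(b, dx, axis=1)

def stationary_residual(h):
    """Max |stationary NLFP left-hand side| for G = F + E, with E = h @ (x, y)."""
    E1 = h[0, 0] * X + h[0, 1] * Y
    E2 = h[1, 0] * X + h[1, 1] * Y
    Q = P ** (2.0 - q)
    lap = div(np.gradient(Q, dx, axis=0), np.gradient(Q, dx, axis=1))
    res = D * lap - div(P * (F1 + E1), P * (F2 + E2))
    return np.max(np.abs(res[5:-5, 5:-5]))  # skip one-sided boundary stencils

c = 0.7
# E = c (dV/dy, -dV/dx): divergence-free and tangent to the level sets of V
h_tangent = c * np.array([[w12, w22], [-w11, -w12]])
# Non-gradient field that is not tangent to the level sets of V
h_other = c * np.array([[0.0, 1.0], [0.0, 0.0]])

print(h_tangent[0, 1], h_tangent[1, 0])  # h12 != h21
print(stationary_residual(h_tangent))    # small: finite-difference error only
print(stationary_residual(h_other))      # clearly nonzero
```

In this construction \(h_{12} = c\,w_{22}\) and \(h_{21} = -c\,w_{11}\) differ, so the drift field violates the symmetry condition (12) while the stationary residual stays at the level of the discretization error.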

Owing to space limitations, the ideas above have been presented only briefly; we are preparing an extended manuscript with a more detailed and general discussion. There we plan to explore systematically the conditions for a q-maxent stationary state in more general scenarios described by NLFP equations, where the deterministic part of the dynamics involves a phase space flux that is not of gradient form.

5 Conclusions

Inspired by the symmetry problem in neural networks, we explored properties of multi-dimensional NLFP equations endowed with drift fields not arising from a potential. We considered drift fields having both a gradient term and a non-gradient contribution. The non-gradient component of the drift field exhibits asymmetric features akin to those associated with the dynamics of neural networks with asymmetric synaptic weights. We identified cases where an NLFP equation having a non-gradient drift field still has a stationary solution of the q-maxent form (i.e. a q-exponential of the potential associated with the gradient part of the drift field). In future contributions, we plan to continue exploring the connections between the NLFP equation and the synaptic symmetry problem in the dynamics of neural networks, in order to apply the formalism of a non-potential drift term to account for attractor states in neuronal circuits with asymmetric synaptic interactions.

References

[1] Vidal de Carvalho, L.A., Quintella Mendes, D., Wedemann, R.S.: Creativity and delusions: the dopaminergic modulation of cortical maps. In: Sloot, P.M.A., Abramson, D., Bogdanov, A.V., Gorbachev, Y.E., Dongarra, J., Zomaya, A.Y. (eds.) ICCS 2003, Part I. LNCS, vol. 2657, pp. 511–520. Springer, Heidelberg (2003)

[2] Wedemann, R.S., Donangelo, R., Vidal de Carvalho, L.A.: Generalized memory associativity in a network model for the neuroses. Chaos 19(1), 015116 (2009)

[3] Wedemann, R.S., Vidal de Carvalho, L.A.: Some things psychopathologies can tell us about consciousness. In: Villa, A.E.P., Duch, W., Érdi, P., Masulli, F., Palm, G. (eds.) ICANN 2012, Part I. LNCS, vol. 7552, pp. 379–386. Springer, Heidelberg (2012)

[4] Freud, S.: Introductory Lectures on Psycho-Analysis, Standard Edition. W. W. Norton and Company, New York, London (1966). First German edition (1917)

[5] Kandel, E.: Psychiatry, Psychoanalysis, and the New Biology of Mind. American Psychiatric Publishing Inc., Washington, D.C., London (2005)

[6] Shedler, J.: The efficacy of psychodynamic psychotherapy. Am. Psychol. 65(2), 98–109 (2010)

[7] Cleeremans, A., Timmermans, B., Pasquali, A.: Consciousness and metarepresentation: a computational sketch. Neural Netw. 20, 1032–1039 (2007)

[8] Taylor, J.G., Villa, A.E.P.: The "Conscious I": a neuroheuristic approach to the mind. In: Baltimore, D., Dulbecco, R., Jacob, F., Levi-Montalcini, R. (eds.) Frontiers of Life, pp. 349–368. Academic Press (2001)

[9] Taylor, J.G.: A neural model of the loss of self in schizophrenia. Schizophrenia Bull. 37(6), 1229–1247 (2011)

[10] Hertz, J.A., Krogh, A., Palmer, R.G.: Introduction to the Theory of Neural Computation. Lecture Notes, vol. 1. Perseus Books, Cambridge (1991)

[11] Cohen, M.A., Grossberg, S.: Absolute stability of global pattern formation and parallel memory storage by competitive neural networks. IEEE Trans. Syst. Man Cybern. 13, 815–826 (1983)

[12] Tsallis, C., Stariolo, D.A.: Generalized simulated annealing. Phys. A 233, 395–406 (1996)

[13] Parisi, G.: Asymmetric neural networks and the process of learning. J. Phys. A Math. Gen. 19, L675–L680 (1986)

[14] Xu, Z.B., Hu, G.Q., Kwong, C.P.: Asymmetric Hopfield-type networks: theory and applications. Neural Netw. 9(3), 483–501 (1996)

[15] Hopfield, J.J.: Neurons with graded response have collective computational properties like those of two-state neurons. Proc. Nat. Acad. Sci. U.S.A. 81, 3088–3092 (1984)

[16] Ribeiro, M.S., Nobre, F., Curado, E.M.F.: Classes of N-dimensional nonlinear Fokker-Planck equations associated to Tsallis entropy. Entropy 13, 1928–1944 (2011)

[17] Plastino, A.R., Plastino, A.: Non-extensive statistical mechanics and generalized Fokker-Planck equation. Phys. A 222, 347–354 (1995)

[18] Tsallis, C., Bukman, D.J.: Anomalous diffusion in the presence of external forces: Exact time-dependent solutions and their thermostatistical basis. Phys. Rev. E 54(3), R2197–R2200 (1996)

[19] Frank, T.D.: Nonlinear Fokker-Planck Equations: Fundamentals and Applications. Springer, Heidelberg (2005)

[20] Tsallis, C.: Introduction to Nonextensive Statistical Mechanics. Approaching a Complex World. Springer, New York (2009)

Acknowledgments

We acknowledge financial support from the Brazilian National Research Council (CNPq), the Rio de Janeiro State Research Foundation (FAPERJ) and the Brazilian agency which funds graduate studies (CAPES).

Copyright information

© 2016 Springer International Publishing Switzerland

About this paper

Cite this paper

Wedemann, R.S., Plastino, A.R. (2016). Asymmetries in Synaptic Connections and the Nonlinear Fokker-Planck Formalism. In: Villa, A., Masulli, P., Pons Rivero, A. (eds) Artificial Neural Networks and Machine Learning – ICANN 2016. ICANN 2016. Lecture Notes in Computer Science(), vol 9886. Springer, Cham. https://doi.org/10.1007/978-3-319-44778-0_3

DOI: https://doi.org/10.1007/978-3-319-44778-0_3

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-44777-3

Online ISBN: 978-3-319-44778-0

eBook Packages: Computer Science (R0)