Abstract

The need to identify and understand the non-measurable, non-quantifiable aspects of the user experience with software has motivated many researchers in the area of Human Computer Interaction (HCI) to adopt qualitative methods. At the same time, the use of software to support qualitative analysis has been growing. The integration of these tools in research is accompanied by an increase in the number of software packages available. Depending on the research design and questions, researchers can explore various solutions available on the market. It is therefore important to ensure that these tools, apart from containing the functionality needed for research projects, are also usable. This study presents an assessment of the usability of the qualitative data analysis software webQDA® (version 2.0). To assess its usability, Content Analysis was used. The results indicate that the current version is “acceptable” in terms of usability, as users, in general, show a positive perception of the software. A more positive assessment is perceived from users with completed PhDs as compared to those who are still doing their PhDs; no relevant differences can be found between the views obtained from different professional or research areas, although a more positive assessment may be drawn from Education and Teaching. Suggestions for future studies are put forward. Although most studies on usability define quantitative metrics, the present study is offered as a contribution that aims to show that qualitative analysis has great potential to deepen various dimensions of usability and functional and emotional interrelationships that are not quantifiable.

1 Introduction

The evaluation of usability is much discussed, especially for software with graphical interfaces. The study of usability is critical because certain software applications are “one click” away from not being used at all, or from being used inappropriately. Usability is the most “rational” side of a product, allowing users to reach specific objectives in an efficient and satisfactory manner. The User Experience, in turn, is largely informed by feedback on the usability of a system and reflects the more “emotional” side of the use of a product. This experience is related to the preferences, perceptions, emotions, beliefs, and physical and psychological reactions of the user during the use of a product (ISO9241-210 2010). Thus, “the pleasure or satisfaction” that many interfaces offer users can be understood as evidence of the efficient integration of the concepts of Usability and User Experience in the development of HCI solutions (Scanlon et al. 2015). Although the two are linked, this paper focuses on the dimension of Usability.

With authoring tools, in which users have to apply their knowledge to produce something, gauging the usability of the software becomes even more sensitive. Since webQDA® (Web Qualitative Data Analysis: www.webqda.net) is an authoring tool for qualitative data analysis, and a new version of it is being developed (release due April 2016) (Souza et al. 2016), it is highly relevant to keep this dimension in mind.

webQDA is a qualitative data analysis software that is meant to provide a collaborative, distributed environment (www.webqda.net) for qualitative researchers. Although there are some software packages that deal with non-numeric and unstructured data (text, image, video, etc.) in qualitative analysis, webQDA is a software directed to researchers in academic and business contexts who need to analyse qualitative data, individually or collaboratively, synchronously or asynchronously. webQDA follows the structural and theoretical design of other programs, with the difference that it provides online collaborative work in real time and a complementary service to support research (Souza et al. 2011).

In this paper, the main objective is to answer the following question: How can the usability and functionality of the qualitative analysis software webQDA be assessed? The remaining sections are organised as follows. Sect. 2 presents concepts associated with Qualitative Data Analysis Software. Sect. 3 discusses Design through Research. Sect. 4 assesses webQDA from the point of view of Usability, presenting the methods and techniques for assessing the usability of software and the results of this study. Finally, we conclude with the study’s findings.

2 Qualitative Data Analysis Software (QDAS)

The use of software for scientific research is currently very common. The spread of computational tools can be seen in the popularization of software for quantitative and qualitative research. Nevertheless, nonspecific and mainly quantitative tools, like Word®, SPSS®, Excel®, etc., are the most widely used. This is also reflected in the large number of books that can be found on quantitative research. The integration of specific software for qualitative research, by contrast, is a relatively marginal and more recent phenomenon.

In the context of postgraduate educational research in Brazil, Teixeira et al. (2015) studied the use of computational resources in research. They concluded that among those who reported using software (59.9 %), quantitative analysis software was used most frequently (41.1 %), followed by qualitative analysis software (39.4 %), and finally bibliographic reference software (15.5 %).

The so-called Computer-Assisted Qualitative Data Analysis Software (CAQDAS) packages are systems that go back more than three decades (Richards 2002b). Today we can simply call them Qualitative Data Analysis Software (QDAS). However, even today many researchers are unaware of these specific and useful tools. Cisneros Puebla (2012) identifies at least three types of researchers in the field of qualitative analysis: (i) researchers of the pre-computer kind, who prefer coloured pencils, paper and note cards; (ii) researchers who use non-specific software, such as word processors, spreadsheets and general databases; and (iii) researchers who use specific software to analyse qualitative data, such as NVivo, ATLAS.ti, webQDA, MaxQDA, etc.

We can summarize the history of specific qualitative research software in a few chronological milestones:

(1) In 1966, MIT developed “The General Inquirer” software to help text analysis, but some authors (Tesch 1991; Cisneros Puebla 2003) note that this was not exactly qualitative analysis software.

(2) In 1984, the software Ethnograph came to light; it still exists, in its sixth version (http://www.qualis.research.com/).

(3) In 1987, Richards and Richards developed the Non-Numerical Unstructured Data Indexing, Searching and Theorizing (NUD*IST) software, which evolved into the current NVivo index system.

(4) In 1991, the prototype of the conceptual network ATLAS-ti was launched, mainly related to Grounded Theory.

(5) Around the turn of the 2000s it became possible to integrate video, audio and image into the text analysis of qualitative research software. HyperRESEARCH, however, had been presented earlier as software that also allowed text, audio and video to be coded and retrieved. Transcriber and Transana are other software systems that emerged to handle this type of data.

(6) In 2004, “NVivo summarizes some of the most outstanding hallmark previous software, such as ATLAS-ti – recovers resource coding in vivo, and ETHNOGRAPH – a visual presentation coding system” (Cisneros Puebla 2003).

(7) 2009 marks the beginning of the development of qualitative software in cloud computing contexts. Examples of this are Dedoose and webQDA, which were developed almost simultaneously in the USA and Portugal, respectively.

(8) From 2013 onwards we saw an effort from software companies to develop iOS versions, incorporating data from social networks, multimedia and other visual elements in the analysis process.

Naturally, this history is not complete. We could include other details and software such as MaxQDA, AQUAD, QDA Miner, etc. A more exhaustive list can be found, for example, in the Wikipedia entry “Computer-assisted qualitative data analysis software”.

What are the implications of Qualitative Data Analysis Software for scientific research in general and for qualitative research specifically? Just as the invention of the piano allowed composers to begin writing new songs, software for qualitative analysis has affected the way researchers deal with their data. These technological tools do not replace the analytical competence of researchers, but they can improve established processes and suggest new ways to reach the most important goal in research: finding answers to research questions. Some authors (Kaefer et al. 2015; Neri de Souza et al. 2011) recognize that QDAS makes data visible in ways not possible with manual methods or non-specific software, allowing for new insights and reflections on a research project or corpus of data.

Kaefer et al. (2015) wrote a paper with step-by-step descriptions of how they used QDAS to analyse 230 journal articles about climate change and carbon emissions. They concluded that while qualitative data analysis software does not do the analysis for the researcher, “it can make the analytical process more flexible, transparent and ultimately more trustworthy” (Kaefer et al. 2015). There are obvious advantages in the integration of QDAS in standard analytical processes, as these tools open up new possibilities, as noted by Richards (2002b): (i) computers have enabled new qualitative techniques that were previously unavailable, and (ii) computation has had some influence on qualitative techniques. We can therefore summarize some advantages of QDAS: (i) faster and more efficient data management; (ii) increased possibility to handle large volumes of data; (iii) contextualization of complexity; (iv) technical and methodological rigor and systematization; (v) consistency; (vi) analytical transparency; (vii) increased possibility of collaborative teamwork, etc. However, many critical problems present challenges to researchers in this area.

There are many challenges in the QDAS field. Some are technical or computational issues, whereas others are methodological or epistemological prerequisites, although Richards (2002a, b) recognized that methodological innovations are rarely discussed. For example, Corti and Gregory (2011) discuss the problem of exchangeability and portability of current software. They argue for data sharing, archiving and open data exchange standards among QDAS, to guarantee the sustainability of data collections and of the coding and annotations on these data. Several researchers place unrealistic expectations on QDAS utilities, while others think that these systems have insufficient analytical flexibility. Richards (2002a) notes that many novice researchers develop a so-called “coding fetishism” that transforms coding processes into an end in themselves. For this reason, some believe that QDAS can reduce critical reading and reflection. For many researchers, the high financial cost of the more popular QDAS is a problem, but in this paper we would like to focus on the challenge of the considerable time and effort required to learn them.

Choosing a QDAS is a first difficulty that, in several cases, coincides with the process of learning qualitative research. Kaefer et al. (2015) suggest comparing and testing software through sample projects and a literature review. Today, software companies offer many tutorial videos and trial periods to test their systems. Pinho et al. (2014) studied the determinant factors in the adoption and recommendation of qualitative research software. They analysed five factors: (i) Difficulty of use; (ii) Learning difficulty; (iii) Relationship between quality and price; (iv) Contribution to research; and (v) Functionality. These authors indicate the first two factors as the most cited ones in the corpus of data analysed:

- “NVivo is not exactly friendly. I took a whole course to learn to use it, and if you don’t use it often enough, you’re back to square one, as those “how-to” memories tend to fade quickly.” (Difficulty of use)

- “I use Nvivo9 and agree that it is more user-friendly than earlier versions. I do not make full use of everything you can do with it however – and I’ve never come across anyone who does.” (Learning difficulty)

In this context it is very important to study the User Experience and Usability of QDAS, because these tools need to be at the service of the researcher, and not the opposite, thereby reducing the initial learning time and increasing the effectiveness and efficiency of all processes of analysis. Usability is an important dimension in the design and development of software, and it is important to understand when to involve the user in the process.

3 Design Through Research: Usability Evaluation

Scientific research based on the Research and Development (R&D) methodology tends to favour quantitative studies. Primarily, the researcher is interested in testing or proving a theory through the actions of the individuals involved in the study. The researcher intends to generalize and usually uses numerical data. When R&D is applied to the design of software packages, it is limiting to reduce the researcher to someone who does not attempt to perceive the context in which the study takes place or to interpret the meanings of the participants through interactive and iterative processes. Thus, the R&D methodology (or Design Research, also emerging under the expression Research through Design) has been gaining ground in software projects that, according to Pierce (2014), “include devices and systems that are technically and practically capable of being deployed in the field to study participants or end users” (p. 735). Zimmerman et al. (2007) state that Design Research “mean[s] an intention to produce knowledge and not the work to more immediately inform the development of a commercial product” (p. 494).

Associated with this methodology, expressions such as Human-Computer Interaction (HCI), User-Centred Design (UCD) and Human-Centred Design (HCD) crop up. Maguire (2001), in his study “Methods to support human-centred design”, tackles the importance of software packages being usable and how that goal can be achieved. The study lists a series of methods that can be applied in planning, in understanding the context of use, in defining requirements, and in developing and evaluating project solutions.

According to Van Velsen et al. (2008), depending on the phase of the project, evaluation can serve different purposes. At the initial phase, when no software exists yet, evaluation provides information that supports decision making; at an intermediate phase, through the use of prototypes, it allows the detection of problems; at the final phase, with a complete version of the software available, it allows quality to be assessed. The process offered by these authors, named Iterative Design, is divided into four phases (Van Velsen et al. 2008):

- Without software: the aim is to make decisions by collecting data with questionnaires, interviews and focus groups and analysing them. These instruments are developed to characterise and define the user requirements;

- Low-Fidelity Prototype: the aim is to detect problems by gathering and analysing data obtained from interviews and focus groups. The aspects assessed are appreciation, perceived usefulness, and security and safety;

- High-Fidelity Prototype: the aim is to detect problems by gathering and analysing data obtained from questionnaires, interviews, think-aloud protocols and observation. Apart from the previous metrics, one aims at assessing comprehensibility, usability, adequacy, and the behaviour and performance of the user;

- Final version of the software: the same data gathering instruments are applied. Besides some of the metrics mentioned before, one aims at perceiving the Experience and Satisfaction of the User.

According to Godoy (1995), “a phenomenon can be better understood in the context in which it occurs and of which it is part, having to be analysed from an integrative perspective” (p. 21; see Note 1). It is within this framework that, at specific moments in the Iterative Design phases, the researcher goes to the field to “capture” the phenomenon under study from the point of view of the users involved, considering every relevant point of view. The researcher collects and analyses various types of data to understand the dynamics of the phenomenon (Godoy 1995).

The recent need to identify and understand non-measurable/non-quantifiable aspects of the experience of the user is leading several researchers in the area of HCI to adopt qualitative methods. HCI researchers have come to understand that the physical and social context in which specific actions take place, together with the associated behaviours, makes it possible, through a structure of categories and their interpretation, to analyse a phenomenon that is non-replicable and neither transferable nor applicable to other contexts (Costa et al. 2015).

The criteria defined by the standards are essentially oriented to technical issues. However, for software that supports qualitative analysis to be of quality, the research methodologies to be applied must also be taken into account. Because it is authoring software, researchers/users need to know the techniques, processes and tools available for data analysis in qualitative research.

4 WebQDA Usability Evaluation: Methodological Aspect

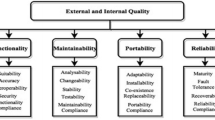

Although ISO 9126 provides six dimensions, in this study we focus on evaluating the usability of the webQDA qualitative analysis software.

For an effective understanding of usability, there are quality factors that can be assessed through the evaluation criteria. Collecting and analysing data to answer the following questions will help determine if the software is usable or not (Seffah et al. 2008):

- Is it easy to understand the theme of the software? (Understandable)

- Is it easy to learn to use it? (Ease of learning)

- What is the speed of execution? (Use efficiency)

- Does the user show evidence of comfort and positive attitudes to its use? (Subjective satisfaction)

The answers to these questions can be obtained through a quantitative, qualitative or mixed methodology. We believe that each of these has strengths and weaknesses, and that the weaknesses can be overcome when the methodologies are properly combined.

Our study is based on the application of two questionnaires: (1) general use of the software, applied to the participants of the Iberian-American Congress on Qualitative Research (CIAIQ) in 2013, 2014 and 2015; and (2) review of the use of webQDA, applied only to users of this software. The first questionnaire, administered to CIAIQ participants, obtained 362 answers; the second obtained 98 replies. From the first questionnaire we analysed only the answers to the question “When selecting software to support qualitative analysis, what criterion/criteria did you follow/would you follow for your choice?”, related to Usability (see Fig. 1).

Fig. 1 Software usability (Costa et al. 2016)

Of the participants, 86.7 % (n = 314) rated usability as a “somewhat relevant” (n = 91, 25.1 %) or “very relevant” (n = 223, 61.6 %) criterion for selecting qualitative analysis software. “Do not know/” was chosen by 29 of the participants, and 5.2 % chose “not very relevant” (n = 11) or “irrelevant” (n = 8). Focusing our analysis on webQDA version 2.0, we analysed the qualitative data that supplement what was considered in the evaluation of its usability. Using the System Usability Scale (SUS) (Brooke 1996), we obtained a mean score of 70 points (SD = 14.2), which allows us to conclude that webQDA 2.0 was “acceptable” in terms of usability, according to the SUS criteria (Costa et al. 2016).
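As a minimal sketch of the arithmetic behind the figures above, the following Python snippet recomputes the response shares from the reported counts and applies the standard SUS scoring rule (odd-numbered items contribute the response minus 1, even-numbered items contribute 5 minus the response, and the sum is multiplied by 2.5). The function names `shares` and `sus_score` and the sample SUS responses are illustrative assumptions; they are not part of the tooling used in the study.

```python
def shares(counts):
    """Return each answer's share of all responses, in percent (1 decimal)."""
    total = sum(counts.values())
    return {label: round(100 * n / total, 1) for label, n in counts.items()}


def sus_score(responses):
    """Score one SUS questionnaire: ten answers on a 1-5 scale, in item order."""
    if len(responses) != 10 or any(r not in (1, 2, 3, 4, 5) for r in responses):
        raise ValueError("SUS needs exactly ten answers between 1 and 5")
    total = 0
    for item, answer in enumerate(responses, start=1):
        # Odd items are positively worded, even items negatively worded.
        total += (answer - 1) if item % 2 == 1 else (5 - answer)
    return total * 2.5  # rescale the 0-40 sum to 0-100


# Counts reported in the text for the usability criterion (362 answers).
reported = {
    "very relevant": 223,
    "somewhat relevant": 91,
    "Do not know/": 29,
    "not very relevant": 11,
    "irrelevant": 8,
}
percentages = shares(reported)

# Hypothetical single respondent, used only to illustrate the scoring rule.
example_score = sus_score([3, 3, 3, 3, 3, 3, 3, 3, 3, 3])
```

A study-level SUS result such as the reported mean of 70 would be the average of `sus_score` over all respondents.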

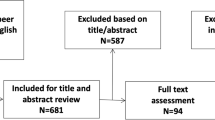

In this article we examine the open questions of the survey applied to webQDA users (98 replies). The open questions were as follows: (i) What are the three main positive aspects of webQDA? (ii) What are the three main limitations of webQDA? (iii) What features would you propose for a new version of webQDA? (iv) Suggestions and general comments to help improve webQDA (training, references, etc.). To analyse the answers to these questions we used webQDA itself, with a simple system of analysis as shown in Fig. 2.

In the analysis dimension “Features/Navigation” we sought to identify the positive and negative aspects of the various subroutines and features of webQDA, as well as factors related to the navigability of the different screens. Table 1 shows some attributes of the users who responded to the survey, in relation to the positive and negative aspects of this dimension. According to the “Qualifications” attribute, users who had completed a PhD have a more positive perception (77 %) than those who are still working on their PhDs (55 %).

Some of these positive assessments may be perceived from the following statements:

- “Ability to join or cross two projects that work the same database, or similar database” (Q5, Female; 39; Brazilian; PhD completed; Health Sciences; Investigator/Researcher).

- “Work with internal and external sources in a simplified manner; ease of compiling qualitative data; quick access to graphics” (Q7, Female; 55; Portuguese; PhD in development; Other; Teacher).

- “Easy import of data; undo actions without harming the entire analysis” (Q98, Female; 41; Brazilian; Masters under development; Teaching and Education Sciences; Teacher).

In addition to these positive assessments of webQDA features, one can find some negative comments, such as:

- “There should be a “help” in the interactive programme or even a more direct aid sector, via skype or another interactive environment …” (Q10, Female; 58; Brazilian; PhD completed; Engineering and Technology; Teacher; Investigator/Researcher).

- “Platform frailties that is not available on tablet nor on mobile” (Q16, Male; 44; Brazilian; PhD under development; Teaching and Education Sciences; businessman).
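The share of positive assessments per user attribute, such as the one reported for “Qualifications”, could be tallied from coded references along the following lines. This is only a sketch: the function name `positive_share` and the sample records are hypothetical, and they reproduce neither the study's data nor the way webQDA builds its matrices internally.

```python
from collections import Counter, defaultdict


def positive_share(coded_refs):
    """Return, per attribute value, the percentage of references coded positive.

    coded_refs is an iterable of (attribute_value, polarity) pairs, where
    polarity is either "positive" or "negative".
    """
    tallies = defaultdict(Counter)
    for group, polarity in coded_refs:
        tallies[group][polarity] += 1
    return {
        group: round(100 * counts["positive"] / sum(counts.values()))
        for group, counts in tallies.items()
    }


# Invented coded references, for illustration only.
sample_refs = [
    ("PhD completed", "positive"),
    ("PhD completed", "positive"),
    ("PhD completed", "positive"),
    ("PhD completed", "negative"),
    ("PhD in progress", "positive"),
    ("PhD in progress", "negative"),
]
result = positive_share(sample_refs)
```

The same tally can be repeated for any other attribute (for example, research area) by changing the first element of each pair.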

Table 2 shows the positive and negative assessments of the participants in relation to the webQDA interface. Here we cannot point out a difference between PhD students and those who have completed their PhDs. Some of the positive assessments of the interface can be drawn from the following statements:

- “Although somewhat intuitive it requires mastery of analytical techniques; Operationalize more” (Q29, Female; 53; Portuguese; PhD under development; Education Sciences and Teaching; Teacher; Information organisation).

- “Better visibility of data. Better organization of the data which favours analysis” (Q40, Female; 47; Brazilian; PhD completed; Health Sciences; Teacher, Investigator/Researcher).

- “Being networked, easy viewing layout” (Q54, Female; 35; Brazilian; PhD completed; Health Sciences; Teacher; language).

Negative assessments about the interface:

- “Not all commands are intuitive: only with explanation is it possible to know its usefulness” (Q5, Female; 39; Brazilian; PhD completed; Health Sciences; Investigator/Researcher).

- “I think some menus could be more intuitive” (Q6, Male; 44; Portuguese; PhD under development; Other; Teacher).

Overall, the perception of the webQDA interface is positive, although several aspects that should be improved were pointed out.

5 Final Remarks

Although no usability metric was defined in this article, the qualitative analysis of the open questions in a questionnaire applied to users of the webQDA software showed positive and negative aspects that fall into two broad dimensions of analysis: (i) Functionalities/Navigation; and (ii) Interface. The main conclusions that we can draw from this analysis are:

- The users in general have a positive perception of the webQDA software and its features, namely Navigation and Interface.

- There seems to be, on average, a more positive assessment from users with completed PhDs as compared to those whose doctoral programmes are still in progress for the dimension Functionality/Navigation. This difference does not exist in the Interface dimension.

- There are no relevant differences between the views obtained from different professional or research areas, although a more positive assessment may be drawn as to Functionality/Navigation from users from the area of Education and Teaching (see Table 2).

Although most studies on usability define quantitative metrics, our study is a contribution that shows that qualitative analysis has great potential to deepen various dimensions of usability and functional and emotional interrelationships. Future studies should aim to diversify sources of data such as clinical interviews, semi-structured interviews and focus groups in advanced training contexts of qualitative research supported by webQDA.

Notes

1. Our translation.

References

Cisneros-Puebla, C. A. (2003). Analisis cualitativo asistido por computadora. Sociologias, 9, 288–313.

Corti, L., & Gregory, A. (2011). CAQDAS comparability. What about CAQDAS data exchange? Forum: Qualitative Social Research, 12(1), 1–17.

Costa, A. P., et al. (2016). webQDA—Qualitative data analysis software usability assessment. In: Conferência Ibérica de Sistemas e Tecnologias de Informação. Gran Canária—Espanha: AISTI—Associação Ibérica de Sistemas e Tecnologias de Informação, (in press).

Costa, A.P., Faria, B.M. & Reis, L.P. (2015). Investigação através do Desenvolvimento: Quando as Palavras “Contam.” RISTI: Revista Ibérica de Sistemas e Tecnologias de Informação, (E4), pp. vii–x.

Godoy, A. S. (1995). Pesquisa Qualitativa: tipos fundamentais. Revista de Administração de Empresas, 35(3), 20–29.

ISO9241-210. (2010). Ergonomics of human-system interaction (210: Human-centred design for interactive systems).

Kaefer, F., Roper, J. & Sinha, P. (2015). A software-assisted qualitative content analysis of news articles: Example and reflections. Forum Qualitative Sozialforschung, 16(2).

Maguire, M. (2001). Methods to support human-centred design. International Journal of Human-Computer Studies, 55(4), 587–634. Available at: http://linkinghub.elsevier.com/retrieve/pii/S1071581901905038 Accessed November 1, 2013.

Neri de Souza, F., Costa, A.P. & Moreira, A. (2011). Questionamento no Processo de Análise de Dados Qualitativos com apoio do software webQDA. EduSer: revista de educação, Inovação em Educação com TIC, 3(1), 19–30.

Pierce, J. (2014). On the presentation and production of design research artifacts in HCI. In: Proceedings of the 2014 conference on Designing interactive systems—DIS’14. New York, New York, USA: ACM Press, pp. 735–744. Available at: http://dl.acm.org/citation.cfm?doid=2598510.2598525.

Pinho, I., et al. (2014). Determinantes na Adoção e Recomendação de Software de Investigação Qualitativa: Estudo Exploratório. Internet Latent Corpus Journal, 4(2), 91–102.

Puebla, C.A.C. & Davidson, J. (2012). Qualitative computing and qualitative research: Addressing the challenges of technology and globalization. Historical Social Research, 37(4), 237–248. Available at: http://www.jstor.org/stable/41756484.

Richards, L. (2002a). Qualitative computing–a methods revolution? International Journal of Social Research Methodology, 5(3), 263–276.

Richards, L. (2002b). Rigorous, rapid, reliable and qualitative? Computing in qualitative method. American Journal of Health Behavior, 26(6), 425–430.

Scanlon, E., McAndrew, P., & O’Shea, T. (2015). Designing for educational technology to enhance the experience of learners in distance education: How open educational resources, learning design and MOOCs are influencing learning. Journal of Interactive Media in Education, 1(6), 1–9.

Seffah, A., et al. (2008). Reconciling usability and interactive system architecture using patterns. Journal of Systems and Software, 81(11), 1845–1852. Available at: http://linkinghub.elsevier.com/retrieve/pii/S016412120800085X Accessed November 1, 2013.

Souza, F. N., Costa, A. P., & Moreira, A. (2011). Análise de Dados Qualitativos Suportada pelo Software webQDA. VII Conferência Internacional de TIC na Educação: Perspetivas de Inovação (pp. 49–56). VII Conferência Internacional de TIC na Educação: Braga.

Souza, F.N. de, Costa, A.P. & Moreira, A. (2016). webQDA. Available at: www.webqda.net.

Teixeira, R. A. G., Neri de Souza, F., & Vieira, R. M. (2015). Docentes Investigadores de Programas de Pós-graduação em Educação no Brasil: Estudo Sobre o Uso de Recursos Informáticos no Processo de Pesquisa. Revista da Avaliação da Educação Superior (in press), pp. 741–768.

Tesch, R. (1991). Introduction. Qualitative Sociology, 14(3), 225–243.

Van Velsen, L. et al. (2008). User-centered evaluation of adaptive and adaptable systems: A literature review. The Knowledge Engineering Review, 23(03), 261–281. Available at: http://www.journals.cambridge.org/abstract_S0269888908001379.

Zimmerman, J., Forlizzi, J. & Evenson, S. (2007). Research through design as a method for interaction design research in HCI. In Proceedings of the SIGCHI conference on Human factors in computing systems—CHI’07. New York, New York, USA: ACM Press, p. 493. Available at: http://portal.acm.org/citation.cfm?doid=1240624.1240704.

Acknowledgments

The first author thanks the Foundation for Science and Technology (FCT) for the financial support that enabled the development and presentation of this study. The authors thank the company Micro IO and its employees for the development of the new version of webQDA, and the participants of this study.

Copyright information

© 2017 Springer International Publishing Switzerland

About this chapter

Cite this chapter

Costa, A.P., de Sousa, F.N., Moreira, A., de Souza, D.N. (2017). Research through Design: Qualitative Analysis to Evaluate the Usability. In: Costa, A., Reis, L., Neri de Sousa, F., Moreira, A., Lamas, D. (eds) Computer Supported Qualitative Research. Studies in Systems, Decision and Control, vol 71. Springer, Cham. https://doi.org/10.1007/978-3-319-43271-7_1

DOI: https://doi.org/10.1007/978-3-319-43271-7_1

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-43270-0

Online ISBN: 978-3-319-43271-7

eBook Packages: Engineering, Engineering (R0)