Abstract

Recommender systems can be viewed as prediction systems that predict ratings representing users' interest in the corresponding items. Typically, the items with the highest predicted ratings are recommended to the user. However, users do not know how certain these predictions are. It is therefore important to associate with each prediction a confidence measure that tells users how certain the system is about it. Many approaches have been proposed to estimate the confidence of predictions made by recommender systems, but none of them provides a guarantee on the error rate of these predictions. Conformal Prediction is a framework that produces predictions with a guaranteed error rate. In this paper, we propose a conformal prediction algorithm whose underlying algorithm is item-based collaborative filtering, a simple algorithm that is widely used in commercial applications. We propose different nonconformity measures and empirically determine the best one. We empirically demonstrate the validity and efficiency of the proposed algorithm. Experimental results show that the predictive performance of the conformal prediction algorithm is very close to that of its underlying algorithm, with little uncertainty, while also providing measures of confidence and credibility.




1 Introduction

Collaborative filtering (CF) is a very promising approach in recommender systems and the most widely adopted technique in both academic research and commercial applications. CF algorithms fall into two classes. Neighborhood-based approaches make predictions and recommendations by computing similarities either between users (user-based collaborative filtering (UBCF) [16]) or between items (item-based collaborative filtering (IBCF) [2]), whereas model-based approaches [17] use mathematical models to make predictions. Many model-based algorithms are complex and require estimating a large number of parameters; moreover, if the assumptions of the model do not hold, the predictions may be wrong. Neighborhood approaches, on the other hand, are simple in both their underlying principles and their implementation while achieving reasonably accurate results. However, UBCF does not perform well when the active user has too few neighbors or only neighbors with very low correlation to the active user [8]. For the proposed conformal prediction algorithm we have therefore chosen IBCF as the underlying algorithm, because of its large potential in research and commercial applications [12].

Most CF algorithms are limited to making single point predictions. Metrics such as MAE and RMSE [21] have been proposed in the literature to measure prediction accuracy. However, these metrics measure the overall accuracy of a recommendation algorithm and cannot estimate the accuracy of individual predictions. Some confidence estimation algorithms have been proposed to estimate the confidence of each prediction, but none of them provides an upper bound on the error rate. In contrast, conformal predictors produce confidence measures specific to each individual prediction, with a guaranteed error rate.

Conformal Prediction (CP) [4, 5] is a framework in machine learning (ML) for making reliable predictions with a known level of significance, or error probability. CP is becoming increasingly popular because it can be built on top of any conventional point prediction algorithm, such as K-NN [6], SVM [18], decision trees [19] and neural networks [20]. CPs not only produce confidence measures that are useful in practice; their accuracy is also comparable to, and sometimes better than, that of their underlying algorithms. We therefore use CP to associate a confidence measure with each individual prediction made by our chosen underlying algorithm.

The prediction regions produced by any CP algorithm are automatically valid. Their efficiency, that is, how tight and therefore how useful the regions are, depends on the nonconformity measure (NCM) used by the CP algorithm. Many different NCMs can be defined for a given underlying algorithm, and each of them defines a different CP, so determining an efficient NCM for the underlying algorithm is an important step in CP. In this work, we define several NCMs based on the underlying algorithm and empirically demonstrate that the CP with a simple NCM, a variant of the NCM used for K-NN [6], performs well from both the accuracy and the efficiency perspectives.

The major contributions of this paper are: 1. an adaptation of conformal prediction to item-based collaborative filtering; 2. the definition of NCMs based on the similarity measure used in IBCF, and an empirical determination of the best NCM; 3. an empirical demonstration of the validity and efficiency of our conformal prediction algorithm.

The rest of the paper is organized as follows. Section 2 discusses related work. Section 3 presents the general idea of conformal prediction. Section 4 describes item-based collaborative filtering. Section 5 describes our proposed algorithm, which applies conformal prediction on top of item-based collaborative filtering, and defines different NCMs based on IBCF. Section 6 details our experimental results and shows the validity and efficiency of the proposed conformal prediction algorithm. Finally, Sect. 7 gives our conclusions.

2 Related Work

In this section we review existing methods proposed in the literature to estimate the confidence of CF algorithms. McNee et al. [7] estimate the confidence of an item as the support for the item. McLaughlin and Herlocker [8] proposed an algorithm for UBCF that generates belief distributions for each prediction. Although their algorithm achieves good precision by making sure that more popular items are recommended, they did not demonstrate its accuracy. Adomavicius et al. [1] estimate confidence based on the rating variance of each item. Shani and Gunawardana [9] define confidence as the system's trust in its recommendations. They proposed a method to estimate the confidence of recommendation algorithms, but provide no experiments owing to the broader scope of their chapter. Koren and Sill [10] formulated confidence estimation as a binary classification problem: deciding whether the predicted rating is within one rating level of the true rating. Although a confidence value is associated with each item in the recommendation list, their confidence estimation algorithm is applicable only to their own CF algorithm. Mazurowski [3] introduced three confidence estimation algorithms, based on resampling and on the standard deviation of predictions, to estimate the confidence of individual predictions.

However, none of the above algorithms provides an upper bound on the error rate, i.e., a guarantee that the probability of excluding the correct class label is smaller than a predetermined significance level. Our CP algorithms, on the other hand, produce prediction regions with a bound on the probability of error. When forced to make point predictions, the confidence of a prediction is \(1-\) the second largest p-value, and this second largest p-value becomes the upper bound on the probability of error. Moreover, with CP we can control the number of erroneous predictions by varying the significance level, which makes it suitable for different kinds of applications.

3 Conformal Prediction

In this section, we introduce the general idea behind conformal prediction [4, 5]. We have a training set of examples \(Z = \{z_1, z_2, ..., z_l\}\). Each \(z_i \in Z\) is a pair \((x_i,y_i)\), where \(x_i \in \mathbb {R}^{\textit{d}}\) is the vector of attributes of the \(i^{\text {th}}\) example and \(y_i\) is its class label. The only assumption in CP is that the \(z_i\) are independent and identically distributed (i.i.d.). Given a new object \(x_{l+1}\), our task is to predict its class label \(y_{l+1}\). We try out every possible class label \(y_j\) for \(y_{l+1}\), append \(z_{l+1} = (x_{l+1},y_j)\) to Z, and then use a p-value function to estimate how typical the sequence \(Z \cup \{z_{l+1}\}\) is with respect to the i.i.d. assumption. Our prediction for \(y_{l+1}\) is the set of labels \(y_j\) whose p-value is \(> \epsilon \), where \(\epsilon \) is the significance level. One way of obtaining a p-value function is to consider how strange each example in the sequence is with respect to all the other examples. These strangeness values are measured with an NCM. An NCM \(\mathcal {A}\) is a family of functions that assigns to each example \(z_i\) a numerical score indicating how different it is from the examples in the set \(\{z_1,...,z_{i-1},z_{i+1},...,z_n\}\) (for the extended sequence, \(n = l+1\)).

An NCM has to satisfy the following properties [4]:

1. Permuting the examples permutes their nonconformity scores in the same way, i.e., the score of an example does not depend on the order of the examples: for any permutation \(\pi \) of 1, 2, ..., n,

$$\begin{aligned} \mathcal {A}(z_1,z_2,...,z_n) = (\alpha _1,\alpha _2,...,\alpha _n) \implies \mathcal {A}(z_{\pi (1)},z_{\pi (2)},...,z_{\pi (n)}) = (\alpha _{\pi (1)},\alpha _{\pi (2)},...,\alpha _{\pi (n)}) . \end{aligned}$$ (1)

2. \(\mathcal {A}\) is chosen such that the larger the value of \(\alpha _i\), the stranger \(z_i\) is with respect to the other examples.

The p-value is computed by comparing \(\alpha _{l+1}\) with all other nonconformity scores, where all scores are computed on the extended sequence with \(z_{l+1} = (x_{l+1}, y_j)\):

$$\begin{aligned} p(y_j)= \dfrac{\#\{i = 1,2,...,l+1 : \alpha _i \ge \alpha _{l+1}\}}{l+1} . \end{aligned}$$ (2)

An important property of the p-value is that \(\forall \epsilon \in [0,1]\) and for all probability distributions P on Z,

$$\begin{aligned} P\{(z_1,z_2,...,z_{l+1}) : p(y_{l+1}) \le \epsilon \} \le \epsilon . \end{aligned}$$ (3)
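As an illustration, Eq. (2) can be written as a short Python function. This is a minimal sketch that assumes the nonconformity scores have already been produced by some NCM for one candidate label \(y_j\); the function name `p_value` and the convention that the new example's score comes last are our own choices.

```python
from typing import Sequence


def p_value(alphas: Sequence[float]) -> float:
    """p-value of Eq. (2) for one candidate label y_j.

    `alphas` lists the nonconformity scores alpha_1, ..., alpha_{l+1} of the
    extended sequence; the LAST element is the score of the new example
    z_{l+1} = (x_{l+1}, y_j). All scores must be recomputed for every y_j.
    """
    if not alphas:
        raise ValueError("alphas must contain at least the new example's score")
    new_score = alphas[-1]
    # Count the examples at least as strange as the new one (the new one included).
    at_least_as_strange = sum(1 for a in alphas if a >= new_score)
    return at_least_as_strange / len(alphas)


# Four training scores followed by the new example's score:
print(p_value([0.2, 0.9, 0.5, 0.1, 0.6]))  # 0.4  (0.9 and 0.6 are >= 0.6)
```

Because the new example is always counted, the smallest possible p-value is \(1/(l+1)\).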

This original approach to CP is called Transductive Conformal Prediction (TCP). The p-values obtained from CP for each possible classification can be used in two different modes. Point prediction: for each test example, predict the classification with the highest p-value. The confidence of this prediction is \(1 -\) the second largest p-value, and its credibility is the highest p-value (credibility tells how well the new item with the assumed label conforms to the training set of items). Region prediction: given \(\epsilon > 0\), output as the prediction the set of all classifications whose p-value is \(> \epsilon \), with confidence \(1-\epsilon \) that the true label is in this set. A method for finding a \((1-\epsilon )\) prediction set is said to be valid if it contains the true label with probability at least \(1-\epsilon \). The efficiency of a CP is the tightness of the prediction regions it produces: the narrower the prediction region (the fewer labels it contains), the more efficient the conformal predictor.
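The two modes can be sketched in Python as follows, assuming the p-values of all candidate labels have already been computed and are given as a dictionary from label to p-value. The function names are ours, and the tie-breaking rule in `point_prediction` is an assumption made only to keep the sketch deterministic.

```python
from typing import Dict, Set, Tuple


def point_prediction(p_values: Dict[int, float]) -> Tuple[int, float, float]:
    """Return (label, confidence, credibility) from per-label p-values.

    label       -> the label with the largest p-value
    confidence  -> 1 - the second largest p-value
    credibility -> the largest p-value
    Ties for the top p-value are resolved by insertion order here (sorted()
    is stable); Sect. 6 instead picks one of the tied labels at random.
    """
    ranked = sorted(p_values.items(), key=lambda kv: kv[1], reverse=True)
    label, largest = ranked[0]
    second = ranked[1][1] if len(ranked) > 1 else 0.0
    return label, 1.0 - second, largest


def region_prediction(p_values: Dict[int, float], epsilon: float) -> Set[int]:
    """All labels whose p-value exceeds the significance level epsilon."""
    return {y for y, p in p_values.items() if p > epsilon}


p = {+1: 0.75, -1: 0.25}
print(point_prediction(p))                 # (1, 0.75, 0.75)
print(sorted(region_prediction(p, 0.2)))   # [-1, 1]  (80 % confidence region)
print(sorted(region_prediction(p, 0.5)))   # [1]      (50 % confidence region)
```

Lowering the confidence level (raising \(\epsilon\)) can only remove labels from the region, which is why regions shrink toward single labels, and eventually become empty, at low confidence levels.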

4 Item Based Collaborative Filtering Algorithm

In IBCF, prediction and recommendation are based on item-to-item similarity. The key motivation behind this scheme is that a user is more likely to purchase items that are similar to items he has already purchased. This works as follows. Let I be the set of all available items. For every target user \(u_t\), the algorithm first takes the set of items he has rated (the training set \(C_t\)) and uses a similarity measure to compute how similar each of them is to the target item \(i_t \in S_t\), where \(S_t\) is the set of test items of \(u_t\); it then selects the k most similar items \(\{i_1,i_2,...,i_k\}\). Once the most similar items are found, the prediction is computed as a weighted average of the target user's ratings on these similar items. The two main tasks in IBCF are therefore item similarity computation and rating prediction.

To apply CP to IBCF, we convert all ratings to binary values, i.e., the user likes or dislikes the item. There are two reasons for this. First, ratings are unevenly distributed in the data sets: for instance, more than 80 % of all ratings in MovieLens 100K are greater than 2, and nearly 70 % of all ratings in EachMovie are greater than 3. As a result, when the target item's rating is assumed to be 1, it is very difficult to find k most similar items among those consumed by the target user that are also rated 1. Second, Hill et al. [14] have shown that a user's rating is only noisy evidence of the user's true rating. Therefore, searching for the k most similar items rated 1 when the new item's rating is assumed to be 1 does not make sense.

The simplest way to convert ratings to binary is to take the middle of the rating scale as the threshold (for instance, 3 on a rating scale of 1–6) and to treat all ratings above the threshold as liked and all other ratings as disliked. However, this approach works well only when ratings are evenly distributed, which is not the case in most data sets. Another approach is to compute the average rating of every user and to treat all of that user's ratings above the average as liked and all ratings below it as disliked. This approach handles all types of users, including pessimistic, optimistic and strict users. Pessimistic (optimistic) users usually give low (high) ratings to every item they consume; we assume that they like the items they rate above their average rating and dislike the items they rate below it. Strict users rate every item according to whether they like it or not (a high rating when they like it and a low rating when they do not); for them, too, we treat all ratings above the average (which is approximately the middle of the rating scale) as liked and all other ratings as disliked [13]. This ensures that the data set contains a reasonable number of liked and disliked items, which makes it easier for our CP algorithm to find k similar items when the target item is assumed to be liked or disliked. Item similarity computation and prediction are done as follows:

- Item Similarity Computation: In our IBCF we use a cosine-based similarity measure to compute the similarities between items. Since we do not use rating values, our algorithm uses the binary cosine similarity measure [15], which counts the number of common users of the two items i and j and is defined as follows:

$$\begin{aligned} similarity(i,j) = \dfrac{\#common\_users(i,j)}{\sqrt{\#users(i)}\,\sqrt{\#users(j)}} . \end{aligned}$$ (4)

This similarity takes values in [0, 1], and \(similarity(i,j) = 1\) exactly when the two items are rated by the same set of users. For simplicity we use this basic cosine similarity measure, because our aim is not to improve the accuracy of the algorithm but to attach confidence to the predictions it generates. More sophisticated similarity measures could be used instead to obtain more accurate results.

- Predicting the label (like or dislike): Once the similarities are computed, find the k most similar items (the k nearest neighbors) of the target item among the items consumed by the target user. Then predict the label of the target item as the most common label among its k nearest neighbors.
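The binary IBCF pipeline of this section (user-mean binarization, the binary cosine similarity of Eq. (4), and a k-nearest-neighbor majority vote) can be sketched in Python as below. This is a minimal sketch under our own assumptions: the dictionary data layout and function names are hypothetical, ratings equal to the user's mean are labelled as disliked, and a tied vote (possible for even k) returns +1; the text does not specify these last two cases.

```python
import math
from collections import Counter
from typing import Dict, Set

# Hypothetical toy layout: ratings[user][item] = raw rating.
Ratings = Dict[str, Dict[str, float]]


def binarize(ratings: Ratings) -> Dict[str, Dict[str, int]]:
    """+1 (liked) if a rating is above the user's own mean rating, else -1.
    Ratings equal to the mean are labelled -1 (an assumption)."""
    labels = {}
    for user, items in ratings.items():
        mean = sum(items.values()) / len(items)
        labels[user] = {i: 1 if r > mean else -1 for i, r in items.items()}
    return labels


def raters(ratings: Ratings) -> Dict[str, Set[str]]:
    """Map every item to the set of users who rated it."""
    users: Dict[str, Set[str]] = {}
    for user, items in ratings.items():
        for item in items:
            users.setdefault(item, set()).add(user)
    return users


def similarity(i: str, j: str, users: Dict[str, Set[str]]) -> float:
    """Binary cosine similarity of Eq. (4)."""
    ui, uj = users.get(i, set()), users.get(j, set())
    if not ui or not uj:
        return 0.0
    return len(ui & uj) / (math.sqrt(len(ui)) * math.sqrt(len(uj)))


def predict_label(target: str, user_labels: Dict[str, int],
                  users: Dict[str, Set[str]], k: int) -> int:
    """Majority label among the k items in user_labels most similar to target."""
    neighbours = sorted(user_labels,
                        key=lambda j: similarity(target, j, users),
                        reverse=True)[:k]
    votes = Counter(user_labels[j] for j in neighbours)
    # An even k can produce a tie; returning +1 on a tie is an arbitrary choice.
    return 1 if votes[1] >= votes[-1] else -1


ratings = {
    "u1": {"a": 5, "b": 4, "c": 1},
    "u2": {"a": 4, "b": 5, "d": 2},
    "u3": {"a": 2, "c": 5, "d": 4},
}
labels, users = binarize(ratings), raters(ratings)
print(labels["u3"])                                  # {'a': -1, 'c': 1, 'd': 1}
print(predict_label("b", labels["u3"], users, k=1))  # -1 (nearest item is 'a')
print(predict_label("b", labels["u3"], users, k=3))  # 1  (two of three items liked)
```

In this toy example, user u3's mean rating is 11/3, so only the ratings 5 and 4 count as liked, which shows how the per-user threshold adapts to each user's rating habits.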

5 Application of TCP to IBCF

In this section we discuss how to build TCP on top of the IBCF algorithm (a variant of the algorithm in [6]). We first describe the algorithm setup and then define different NCMs based on the IBCF algorithm. Finally, we present our TCP algorithm with IBCF as the underlying algorithm.

5.1 Algorithm Setup

To apply CP, we need a training set of examples \(\{z_1,z_2,...,z_l\}\) and a test example \(z_{l+1}\) for which we want to make a prediction. In the context of IBCF this is formulated as follows. For every target user \(u_t\), there is a set of items \(W_t\) rated by this user, and we use part of \(W_t\) as the training set \(C_t\). For every item \(i \in C_t\), the user has assigned a label that tells whether the user liked (\(+1\)) or disliked (\(-1\)) item i; therefore \(Y = \{+1, -1\}\). We also have a test set of items \(S_t = W_t - C_t\) whose actual labels are hidden. Our task is to assign to each test item a label (the one that makes the test item conform to the training set) together with a confidence measure that is valid according to Eq. (3).

5.2 Nonconformity Measures (NCMs)

We propose different NCMs based on IBCF. First we introduce the terminology used to define them. Since there are only two labels, \(Y = \{+1,-1\}\), we write \(\bar{y} = -1\) if \(y = +1\) and vice versa. Let \(similarity_i^y\) be the vector of similarities between item i and the items in \(C_t\) with label y, sorted in descending order, and let \(similarity_i^{\bar{y}}\) be the corresponding sorted vector for the items in \(C_t\) with label \(\bar{y}\). The weight \(w_i^y\) of item i with label y is the sum of the similarity values of the k most similar items with label y among the rated items \(C_t\) of the target user \(u_t\). Similarly, \(w_i^{\bar{y}}\) is the sum of the similarity values of the k most similar items with label \(\bar{y}\) among the items in \(C_t\):

$$\begin{aligned} w_i^y = \sum _{j=1}^{k} similarity(i,j)^y , \qquad w_i^{\bar{y}} = \sum _{j=1}^{k} similarity(i,j)^{\bar{y}} , \end{aligned}$$

where k is the number of most similar items, \(similarity(i,j)^y\) is the j-th largest value in \(similarity_i^y\), y is the label of item i, \(similarity(i,j)^{\bar{y}}\) is the j-th largest value in \(similarity_i^{\bar{y}}\), and \(\bar{y}\) is the label other than the label of item i.
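The weights \(w_i^y\) and \(w_i^{\bar{y}}\), each the sum of the k largest similarities between item i and the rated items carrying label y (respectively \(\bar{y}\)), can be computed as in the following minimal Python sketch. The similarity function is passed in as a callable; the function name, the toy similarity table, and the choice to sum whatever is available when fewer than k items carry a label are our assumptions.

```python
import heapq
from typing import Callable, Dict, Hashable, Tuple

Similarity = Callable[[Hashable, Hashable], float]


def label_weights(item: Hashable, y: int, train_labels: Dict[Hashable, int],
                  sim: Similarity, k: int) -> Tuple[float, float]:
    """Return (w_item^y, w_item^ybar).

    w^y    : sum of the k largest similarities between `item` and the rated
             items labelled y
    w^ybar : the same sum over the rated items labelled -y
    The item itself is skipped. If fewer than k items carry a label, the
    available ones are summed (the text does not cover this case).
    """
    same = [sim(item, j) for j, lab in train_labels.items() if j != item and lab == y]
    other = [sim(item, j) for j, lab in train_labels.items() if j != item and lab == -y]
    # heapq.nlargest returns the k largest values without sorting the whole list.
    return sum(heapq.nlargest(k, same)), sum(heapq.nlargest(k, other))


# Hypothetical similarities between a target item "t" and four rated items.
table = {"a": 0.75, "b": 0.25, "c": 0.5, "d": 0.125}
train = {"a": +1, "b": +1, "c": -1, "d": -1}


def sim(i: Hashable, j: Hashable) -> float:
    return table[j]  # toy: only similarities to "t" are needed here


print(label_weights("t", +1, train, sim, k=1))  # (0.75, 0.5)
print(label_weights("t", +1, train, sim, k=2))  # (1.0, 0.625)
```

Since each similarity from Eq. (4) is at most 1, either weight is at most k.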

In what follows we define NCMs based on IBCF:

1. NCM1 & NCM2: These are the simple NCMs for an item i. Since the maximum value of the similarity function in Eq. (4) is 1, the maximum value of \(w_i^y\) is k. The higher the value of \(w_i^y\), the more conforming item i is with respect to the other items. NCM1 is high when \(w_i^y\) is small, and a small value of \(w_i^y\) indicates that the item with label y does not conform to the other items with the same label. Consequently, by the second property of an NCM, the higher the value of NCM1, the stranger item i is with respect to the other examples with the same label. Similarly, the smaller the value of \(w_i^y\), the higher the value of NCM2, and the higher the value of NCM2, the more nonconforming item i is with respect to the other examples.

2. NCM3: A more efficient NCM, a variant of the NCM proposed in [6], takes \(w_i^{\bar{y}}\) into account along with \(w_i^y\) when computing the nonconformity score. According to NCM3, item i with label y is nonconforming when it is very similar to the items with label \(\bar{y}\) (high value of \(w_i^{\bar{y}}\)) and dissimilar to the items with label y (low value of \(w_i^y\)).

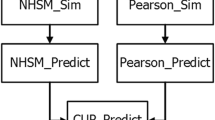

5.3 Item-Based Collaborative Filtering with TCP (IBCFTCP)

Algorithm 1 describes the application of TCP to IBCF in detail. For every item i in \(S_t\) of the target user \(u_t\), we try every label in Y, append i with the assumed label to \(C_t\) to obtain the sequence E, and compute the typicalness of E with the p-value function, which in turn uses the nonconformity scores of all items in E (calculated with any of the NCMs discussed above). For region predictions, we output the set of all labels whose p-values are \(> \epsilon \), with confidence \(1-\epsilon \); for point predictions, we output the label with the highest p-value, with confidence \(1-\) the second highest p-value and credibility equal to the highest p-value.
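The procedure for a single test item can be sketched end to end in Python. This is an illustration under stated assumptions, not Algorithm 1 itself: the similarity function is a toy lookup table, the function names are ours, and because the formulas of NCMs 1–3 are not reproduced in this text, we plug in a hypothetical ratio score \(w_i^{\bar{y}} / w_i^y\). That score behaves as NCM3 is described (it is large when item i resembles label \(\bar{y}\) and not label y) and mirrors the structure of the k-NN measure of [6]; any other NCM can be supplied through the `ncm` argument.

```python
import heapq
from typing import Callable, Dict, Hashable, Tuple

Similarity = Callable[[Hashable, Hashable], float]
LABELS = (+1, -1)


def weights(item, y, labels, sim, k) -> Tuple[float, float]:
    """(w^y, w^ybar): sums of the k largest similarities to same-/other-label items."""
    same = [sim(item, j) for j, lab in labels.items() if j != item and lab == y]
    other = [sim(item, j) for j, lab in labels.items() if j != item and lab != y]
    return sum(heapq.nlargest(k, same)), sum(heapq.nlargest(k, other))


def ratio_ncm(w_same: float, w_other: float, tiny: float = 1e-9) -> float:
    """HYPOTHETICAL NCM in the spirit of NCM3 (not the paper's exact formula):
    large when the item resembles the other label and not its own label.
    `tiny` avoids division by zero."""
    return w_other / (w_same + tiny)


def ibcf_tcp_p_values(test_item, train_labels: Dict[Hashable, int],
                      sim: Similarity, k: int, ncm=ratio_ncm) -> Dict[int, float]:
    """p-value of every candidate label for one test item of one target user."""
    p = {}
    for y in LABELS:
        # Sequence E: the training items C_t plus the test item with assumed label y.
        extended = dict(train_labels)
        extended[test_item] = y
        # Leave-one-out nonconformity score of every item in E.
        alphas = {i: ncm(*weights(i, lab, extended, sim, k))
                  for i, lab in extended.items()}
        a_new = alphas[test_item]
        p[y] = sum(1 for a in alphas.values() if a >= a_new) / len(alphas)
    return p


# Hypothetical symmetric similarities: "a", "b" liked, "c", "d" disliked, "t" unseen.
pairs = {("a", "b"): 0.8, ("c", "d"): 0.8, ("a", "t"): 0.9, ("b", "t"): 0.9}


def sim(i, j):
    return pairs.get((i, j), pairs.get((j, i), 0.1))  # unlisted pairs: 0.1


train = {"a": +1, "b": +1, "c": -1, "d": -1}
p = ibcf_tcp_p_values("t", train, sim, k=1)
print(p)                                    # {1: 1.0, -1: 0.2}
best = max(p, key=p.get)                    # point prediction: +1
confidence = 1 - sorted(p.values())[-2]     # 1 - second largest p-value
print(best, round(confidence, 2), p[best])  # 1 0.8 1.0
```

Every candidate label requires recomputing the scores of all items in E, so the cost per test item grows with the size of \(C_t\); this is the transductive overhead mentioned in Sect. 6.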

6 Experimental Results

We tested our algorithm on four data sets: MovieLens 100K, MovieLens 1M, MovieLens-latest-small and EachMovie. We randomly selected 50 users; for each user, the first 60 % of the data is used as the training set and the remaining 40 % as the test set. Details of the data sets are given in Table 1. Because TCP is time consuming and its running time grows with the number of items when it is applied to IBCF, we did not run experiments on data sets from other domains, such as books and music, that contain large numbers of items. Moreover, we do not compare our results with other state-of-the-art algorithms, since our aim is not to improve the performance of the algorithm but to associate confidence with its predictions without compromising performance.

Here we compare the performance of CP with that of the underlying algorithm (IBCF) in terms of the percentage of correct classifications (%CC) for different data sets and different k values. In our experiments we considered four k values: 5, 10, 15 and 20. For this comparison we have to make single point predictions, since the underlying algorithm makes only single point predictions. In single point prediction we output the label with the highest p-value; if both labels share this p-value, either label can be chosen at random. In our experiments we take both labels in turn, the one that equals the true label (the conforming label) and the other one (the nonconforming label), and compute the performance of the CP algorithm separately for both cases. This way we can measure the certainty of the predictions. In Table 2 we compare the %CC of IBCFTCP with that of IBCF using both conforming labels (CL) and nonconforming labels (NL). NCMs1&2 gave the same results in terms of %CC, validity and efficiency, so we do not show their results separately. We also compute the uncertainty of the predictions as the percentage of items for which more than one label (in our case, both labels) shares the highest p-value. As Table 2 shows, the higher the uncertainty, the larger the gap between the performance of CP with conforming labels and with nonconforming labels.
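The CL/NL evaluation and the uncertainty percentage can be sketched in Python as follows, assuming binary labels and per-item p-values that have already been computed. The function name and the `(p_values, true_label)` input format are our own, and the toy numbers are arbitrary.

```python
from typing import Dict, List, Tuple

Result = Tuple[Dict[int, float], int]  # (p-value per label, true label)


def percent_cc(results: List[Result], tie_mode: str) -> Tuple[float, float]:
    """Return (%CC, % uncertainty) for binary labels +1/-1.

    When both labels share the highest p-value the prediction is uncertain:
    tie_mode="CL" then predicts the true (conforming) label and
    tie_mode="NL" the other (nonconforming) label.
    """
    if tie_mode not in ("CL", "NL"):
        raise ValueError("tie_mode must be 'CL' or 'NL'")
    correct = ties = 0
    for p_values, true_label in results:
        best = max(p_values.values())
        top = [y for y, v in p_values.items() if v == best]
        if len(top) > 1:  # with two labels, a tie means both share the top p-value
            ties += 1
            pred = true_label if tie_mode == "CL" else -true_label
        else:
            pred = top[0]
        correct += int(pred == true_label)
    n = len(results)
    return 100.0 * correct / n, 100.0 * ties / n


results = [({1: 0.8, -1: 0.1}, 1), ({1: 0.5, -1: 0.5}, -1),
           ({1: 0.2, -1: 0.6}, 1), ({1: 0.3, -1: 0.3}, 1)]
print(percent_cc(results, "CL"))  # (75.0, 50.0)
print(percent_cc(results, "NL"))  # (25.0, 50.0)
```

With 50 % of the toy items tied, the CL and NL figures differ by 50 percentage points, which illustrates why a high uncertainty widens the gap between the two settings.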

From Table 2, when CLs are considered, the CP algorithm (with all NCMs) outperforms IBCF for odd k values (5 & 15) because of its slightly higher uncertainty compared to IBCF, whereas IBCF performs better for even k values (10 & 20) because of its significantly higher uncertainty. In the case of NLs, CP with NCMs1&2 performs better than IBCF for even k values and worse for odd k values (5 & 15). CP with NCM3, on the other hand, outperforms IBCF for all k values when NLs are taken into account. From Table 2 we conclude that NCM3 is the best NCM (shown in bold), as it performs well with less uncertainty than NCMs1&2. Although NCMs1&2 outperform NCM3 in terms of %CC when CLs are considered, they are not good NCMs, because this improvement comes from the uncertain predictions they produce. Likewise, although IBCF with CLs performs well for even values of k compared to CP with NCM3, this is also due to the high uncertainty of IBCF's predictions. The uncertainty of IBCF is 0 for odd k values (an odd number of neighbors cannot contain equal numbers of \(+1\)s and \(-1\)s), whereas for even k values it is even greater than that of IBCFTCP with NCMs1&2.

All CPs are automatically valid. Note, however, that in IBCFTCP the training and test sets of items differ from user to user, in contrast to CP in ML, where the training and test sets are fixed for the whole algorithm. It is therefore necessary to show that validity holds for each user. Figure 1 shows the validity of CP with NCM3 for each user, for different data sets and \(k = 5\) (we obtained similar validity and efficiency results for the other k values, which are omitted because of space limitations). Validity also holds for each user with NCMs1&2, but we do not show those results here, because NCM3 is the best NCM in terms of prediction accuracy (Table 2) and efficiency (discussed below). Figure 1 shows that the error values (validity) of most users are within the bounds of \(\epsilon \).

Figure 2 shows the average validity of IBCFTCP for different data sets and different NCMs for \(k = 5\). It is clear from Fig. 2 that the prediction regions produced by CP with all NCMs are valid and that the error rate follows a straight line, as required.
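Empirical validity can be measured as the fraction of test items whose true label falls outside the region \(\{y : p(y) > \epsilon\}\), i.e., whose true label has p-value at most \(\epsilon\). The following minimal Python sketch, with function names and toy p-values of our own (deliberately tiny and not calibrated), computes this error rate over a grid of significance levels; applying it to each user's test items separately gives a per-user validity check.

```python
from typing import Dict, List, Sequence, Tuple

Result = Tuple[Dict[int, float], int]  # (p-value per label, true label)


def error_rate(results: List[Result], epsilon: float) -> float:
    """Fraction of test items whose true label is NOT in {y : p(y) > epsilon}."""
    errors = sum(1 for p_values, true_label in results
                 if p_values[true_label] <= epsilon)
    return errors / len(results)


def validity_curve(results: List[Result],
                   epsilons: Sequence[float]) -> List[Tuple[float, float]]:
    """(epsilon, error rate) pairs; validity means that, in the long run,
    the error rate does not exceed epsilon."""
    return [(eps, error_rate(results, eps)) for eps in epsilons]


# Toy p-values for four test items of one user.
results = [({1: 0.8, -1: 0.1}, 1), ({1: 0.05, -1: 0.9}, 1),
           ({1: 0.3, -1: 0.6}, -1), ({1: 0.5, -1: 0.2}, -1)]
print(validity_curve(results, [0.1, 0.2]))  # [(0.1, 0.25), (0.2, 0.5)]
```

Plotting such pairs over \(\epsilon \in [0,1]\) for a valid predictor on realistic amounts of data should give points close to, or below, the line error rate = \(\epsilon\).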

The usefulness of a CP depends on its efficiency. Vovk et al. [11] proposed ten different ways of measuring the efficiency of CPs. In our experiments we use the Observed Excess criterion, which gives the average number of false labels (ANFL) included in the prediction set at significance level \(\epsilon \). ANFL is calculated as

$$\begin{aligned} ANFL = \dfrac{1}{|S_t|} \sum _{i=1}^{|S_t|} |\varGamma _i^\epsilon \setminus \{T_i\}| , \end{aligned}$$

where \(\varGamma _i^\epsilon \) is the prediction region for the \(i^{th}\) test item at significance level \(\epsilon \), \(T_i\) is the true label of the \(i^{th}\) test item, and \(|S_t|\) is the total number of test items.
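The Observed Excess criterion counts, for each test item, the labels in its prediction region \(\varGamma_i^\epsilon\) other than the true label \(T_i\), and averages this count over the \(|S_t|\) test items. A minimal Python sketch, with a function name and toy p-values of our own, is:

```python
from typing import Dict, List, Tuple

Result = Tuple[Dict[int, float], int]  # (p-value per label, true label)


def anfl(results: List[Result], epsilon: float) -> float:
    """Observed Excess: mean number of false labels in the prediction regions."""
    false_labels = 0
    for p_values, true_label in results:
        region = {y for y, p in p_values.items() if p > epsilon}  # Gamma_i^epsilon
        false_labels += len(region - {true_label})               # labels other than T_i
    return false_labels / len(results)


results = [({1: 0.8, -1: 0.1}, 1), ({1: 0.05, -1: 0.9}, 1),
           ({1: 0.3, -1: 0.6}, -1), ({1: 0.5, -1: 0.2}, -1)]
print(anfl(results, 0.1))  # 0.75
print(anfl(results, 0.4))  # 0.5
```

With two labels, ANFL lies between 0 and 1, and lower values mean tighter, more efficient regions.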

Figure 3 shows the efficiency of the CP algorithm in terms of ANFL for different data sets and different NCMs for \(k = 5\): CP with NCM3 produces fewer false labels than NCMs1&2. Figure 3 also shows that the number of false labels decreases as the confidence level decreases (i.e., as we go from higher to lower confidence levels).

In addition to ANFL, we use three other criteria to assess the efficiency of CP: 1. the % of test elements whose prediction regions contain a single label; 2. the % of test elements whose prediction regions contain more than one label; 3. the % of test elements with empty prediction regions. A CP is efficient when the percentages for the second and third criteria are relatively small and the percentage for the first is high, especially at higher confidence levels (50–99 %). Our algorithm does well on the third criterion: it produces 0 % empty prediction regions at confidence levels from 70–99 % and < 20 % at confidence levels from 50–70 %, while its performance in minimizing the second criterion and maximizing the first at these confidence levels is moderate. Figure 4 shows the % of test elements whose prediction regions contain a single label at different confidence levels, for different data sets and different NCMs, with \(k = 5\). NCM3 outperforms NCMs1&2 in producing prediction regions with single labels: the % of single labels produced by NCMs1&2 never exceeds 20 % at any confidence level, whereas NCM3 produces a sufficiently large % of single labels, especially at confidence levels from 30–90 %. Figure 5 shows the % of correct predictions among the single-label predictions produced by NCM3 at each confidence level; at every confidence level this percentage is \(> 60\,\%\). Figure 6 shows the % of test elements whose prediction regions contain more than one label at different confidence levels. Here, too, NCM3 performs better than NCMs1&2, producing fewer prediction regions with multiple labels at all confidence levels; for NCM3 this number is zero at confidence levels from 0–60 %. Figure 7 shows the % of test elements with empty prediction regions at different confidence levels. Again NCM3 gives better results than NCMs1&2, producing fewer empty prediction regions, and this number is zero at confidence levels from 70–99 %.
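The three region-size criteria can be computed together from the p-values of the test items, as in the following minimal Python sketch. The function name and the toy p-values are our own; the significance level \(\epsilon\) corresponds to the confidence level \(1-\epsilon\).

```python
from typing import Dict, List, Tuple


def region_size_percentages(p_list: List[Dict[int, float]],
                            epsilon: float) -> Tuple[float, float, float]:
    """(% single-label, % multi-label, % empty) regions at significance epsilon,
    i.e. at confidence level 1 - epsilon."""
    sizes = [sum(1 for p in p_values.values() if p > epsilon) for p_values in p_list]
    n = len(sizes)
    single = 100.0 * sum(s == 1 for s in sizes) / n
    multi = 100.0 * sum(s > 1 for s in sizes) / n
    empty = 100.0 * sum(s == 0 for s in sizes) / n
    return single, multi, empty


p_list = [{1: 0.8, -1: 0.1}, {1: 0.05, -1: 0.9},
          {1: 0.3, -1: 0.6}, {1: 0.5, -1: 0.2}]
print(region_size_percentages(p_list, 0.1))   # (50.0, 50.0, 0.0)  at 90 % confidence
print(region_size_percentages(p_list, 0.85))  # (25.0, 0.0, 75.0)  at 15 % confidence
```

The toy output reproduces the qualitative trend described above: multi-label regions dominate at high confidence, and empty regions appear only at low confidence.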

Table 3 shows the mean confidence and mean credibility of the single point predictions produced by IBCFTCP. We also calculated the mean difference between the highest and the lowest p-value over all test ratings; the larger this difference, the more confident the predictions. Table 3 shows that this difference is around 50 % for NCM3 but only around 15 % for NCMs1&2. The mean confidence and mean credibility produced by CP with NCM3 (shown in bold) are also better than those of NCMs1&2.
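The three summary statistics, mean confidence, mean credibility and the mean gap between the highest and the lowest p-value, can be sketched in Python as follows. The function name and toy p-values are ours, and the sketch assumes at least two candidate labels per test item.

```python
from typing import Dict, List, Tuple


def mean_conf_cred_gap(p_list: List[Dict[int, float]]) -> Tuple[float, float, float]:
    """Mean confidence, mean credibility and mean (highest - lowest) p-value
    over the single point predictions of a set of test items.
    Assumes at least two labels per item."""
    confidences, credibilities, gaps = [], [], []
    for p_values in p_list:
        ranked = sorted(p_values.values(), reverse=True)
        credibilities.append(ranked[0])      # highest p-value
        confidences.append(1.0 - ranked[1])  # 1 - second highest p-value
        gaps.append(ranked[0] - ranked[-1])  # highest minus lowest p-value
    n = len(p_list)
    return sum(confidences) / n, sum(credibilities) / n, sum(gaps) / n


print(mean_conf_cred_gap([{1: 0.75, -1: 0.25}, {1: 0.5, -1: 0.5}]))  # (0.625, 0.625, 0.25)
```

With two labels, the second highest p-value is also the lowest, so the gap equals confidence plus credibility minus 1; a large gap therefore requires both quantities to be high.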

In summary, NCM3 is the best NCM compared to NCMs1&2 in terms of prediction accuracy and efficiency. Moreover, when restricted to single point predictions, CP with NCM3 produces higher mean confidence and credibility values than NCMs1&2.

7 Conclusions

In this work, we adapted CP to IBCF and proposed different NCMs for CP based on the underlying algorithm. Unlike IBCF, which produces only bare predictions, our CP algorithm associates a confidence value with each prediction, together with a guaranteed error rate. We tested our algorithm on different data sets and showed experimentally that NCM3 is the best of the three NCMs: it achieves prediction accuracy as good as that of the underlying algorithm with little uncertainty, and it produces efficient prediction regions. When making single point predictions, the mean confidence and credibility values produced by the proposed algorithm are reasonably high. Although our algorithm does not produce a large percentage of single labels at 90–99 % confidence levels (the levels desired in medical applications), it can be used to make predictions in recommendation domains such as movies, books, news articles, restaurants, music and tourism, where confidence levels of 50–90 % are acceptable.

References

[1] Adomavicius, G., Kamireddy, S., Kwon, Y.: Towards more confident recommendations: improving recommender systems using filtering approach based on rating variance. In: Proceedings of WITS 2007 (2007)

[2] Sarwar, B.M., Karypis, G., Konstan, J.A., Riedl, J.: Item-based collaborative filtering recommendation algorithms. In: Proceedings of WWW, pp. 285–295 (2001)

[3] Mazurowski, M.A.: Estimating confidence of individual rating predictions in collaborative filtering recommender systems. Expert Syst. Appl. 40(10), 3847–3857 (2013)

[4] Shafer, G., Vovk, V.: A tutorial on conformal prediction. J. Mach. Learn. Res. 9, 371–421 (2008)

[5] Vovk, V., Gammerman, A., Shafer, G.: Algorithmic Learning in a Random World. Springer, New York (2005)

[6] Proedrou, K., Nouretdinov, I., Vovk, V., Gammerman, A.J.: Transductive confidence machines for pattern recognition. In: Elomaa, T., Mannila, H., Toivonen, H. (eds.) ECML 2002. LNCS (LNAI), vol. 2430, p. 381. Springer, Heidelberg (2002)

[7] McNee, S.M., Lam, S.K., Guetzlaff, C., Konstan, J.A., Riedl, J.: Confidence displays and training in recommender systems. In: International Conference on Human-Computer Interaction (2003)

[8] McLaughlin, M.R., Herlocker, J.L.: A collaborative filtering algorithm and evaluation metric that accurately model the user experience. In: Proceedings of SIGIR 2004, pp. 329–336 (2004)

[9] Shani, G., Gunawardana, A.: Evaluating recommendation systems. In: Ricci, F., Rokach, L., Shapira, B., Kantor, P.B. (eds.) Recommender Systems Handbook, pp. 257–297. Springer, New York (2011)

[10] Koren, Y., Sill, J.: OrdRec: an ordinal model for predicting personalized item rating distributions. In: RecSys, pp. 117–124 (2011)

[11] Vovk, V., Fedorova, V., Nouretdinov, I., Gammerman, A.: Criteria of Efficiency for Conformal Prediction. Technical report (2014)

[12] Linden, G., Smith, B., York, J.: Amazon.com recommendations: item-to-item collaborative filtering. IEEE Internet Comput. 7(1), 76–80 (2003)

[13] Lemire, D., Maclachlan, A.: Slope One Predictors for Online Rating-Based Collaborative Filtering. CoRR abs/cs/0702144 (2007)

[14] Hill, W., Stead, L., Rosenstein, M., Furnas, G.W.: Recommending and evaluating choices in a virtual community of use. In: Proceedings of ACM CHI95 Conference on Human Factors in Computing Systems, pp. 194–201 (1995)

[15] Gunawardana, A., Shani, G.: A survey of accuracy evaluation metrics of recommendation tasks. J. Mach. Learn. Res. 10, 2935–2962 (2009)

[16] Johansson, U., Bostrom, H., Lofstrom, T.: Conformal prediction using decision trees. In: IEEE 13th International Conference on Data Mining, pp. 330–339 (2013)

[17] Koren, Y., Bell, R.M., Volinsky, C.: Matrix factorization techniques for recommender systems. IEEE Comput. 42(8), 30–37 (2009)

[18] Saunders, C., Gammerman, A., Vovk, V.: Transduction with confidence and credibility. In: Proceedings of IJCAI 1999, pp. 722–726 (1999)

[19] Johansson, U., Bostrom, H., Lofstrom, T.: Conformal prediction using decision trees. In: IEEE 13th International Conference on Data Mining, pp. 330–339 (2013)

[20] Papadopoulos, H., Vovk, V., Gammerman, A.: Conformal prediction with neural networks. In: 19th IEEE ICTAI 2007, pp. 388–395 (2007)

[21] Breese, J.S., Heckerman, D., Kadie, C.: Empirical analysis of predictive algorithms for collaborative filtering. In: Proceedings of the 14th Conference on UAI 1998, pp. 43–52 (1998)


Copyright information

© 2016 Springer International Publishing Switzerland

About this paper

Cite this paper

Himabindu, T.V.R., Padmanabhan, V., Pujari, A.K., Sattar, A. (2016). Prediction with Confidence in Item Based Collaborative Filtering. In: Booth, R., Zhang, ML. (eds) PRICAI 2016: Trends in Artificial Intelligence. PRICAI 2016. Lecture Notes in Computer Science(), vol 9810. Springer, Cham. https://doi.org/10.1007/978-3-319-42911-3_11


DOI: https://doi.org/10.1007/978-3-319-42911-3_11


Publisher Name: Springer, Cham

Print ISBN: 978-3-319-42910-6

Online ISBN: 978-3-319-42911-3
