Abstract

Inspired by the way students hold group discussions in class, a new group topology is designed and incorporated into the original particle swarm optimization (PSO), yielding a modified PSO called group discussion mechanism based particle swarm optimization (GDPSO). Using the group discussion mechanism, GDPSO divides a swarm into several groups for local search, and each group contains smaller teams whose topology changes dynamically. The particles with the best fitness value in each group are then selected to learn from each other for global search. To evaluate GDPSO, four benchmark functions are used as test functions. In the simulation studies, GDPSO is compared with three other PSOs: the standard PSO (SPSO), PSO-Ring and PSO-Square. The results confirm the effectiveness of GDPSO on some of the benchmarks.

1 Introduction

Inspired by the swarm behavior of bird flocks and fish schools, particle swarm optimization (PSO) was originally proposed by Kennedy and Eberhart [1, 2]. Since its inception, a growing number of scholars have employed PSO to solve complicated optimization problems and have put forward methods that improve its performance when it becomes trapped in a local optimum. The analysis and improvement of PSO fall mainly into three categories: parameter adjustment [3, 4], new population topology design [5–9] and hybrid strategies [10, 11]. In PSO, each particle searches for a better position in accordance with its own experience and the best experience of its neighbors [12]. Accordingly, a variety of studies have been dedicated to modifying the information exchange mechanisms between neighbors (learning exemplars) using different population topologies. Kennedy proposed a Ring topology, in which each particle is connected only to its immediate neighbors [6]. Mendes presented three other topologies, i.e., four clusters, Pyramid and Square, to guarantee that every individual is fully informed [7]. Jiang proposed a novel age-based PSO with an age-group topology [8]. Lim proposed a PSO variant with increasing topology connectivity, which increases each particle’s topology connectivity over time and also performs a shuffling mechanism [9].

By mimicking the group discussion behavior of students in class, a new topology is designed and an improved PSO, named GDPSO, is proposed. Experimental results on a set of benchmarks show that the proposed GDPSO algorithm adjusts the balance between local search and global search and can improve the performance of PSO on some of them.

The remainder of this paper is organized as follows. Sect. 2 presents the standard PSO. Sect. 3 introduces the origins and procedure of the proposed GDPSO in detail. Sect. 4 describes the experimental settings and discusses the results. Finally, conclusions are drawn in the last section.

2 Standard Particle Swarm Optimization

In SPSO, a potential solution of a problem is represented by the position \( x_{i} \) of each particle. The position \( x_{i} \) is updated by a velocity \( v_{i} \), which controls both the distance and the direction of the particle’s move. The velocity and position are updated for the next iteration according to the following equations:

\[ v_{id}(t+1) = w\,v_{id}(t) + c_{1} r_{1} \left( p_{id} - x_{id}(t) \right) + c_{2} r_{2} \left( p_{gd} - x_{id}(t) \right) \quad (1) \]

\[ x_{id}(t+1) = x_{id}(t) + v_{id}(t+1) \quad (2) \]

where \( x_{id} \in [l_{d} ,\,u_{d} ] \), \( d \) indexes the dimensions of the search space, \( l_{d} \) is the lower bound and \( u_{d} \) is the upper bound of the \( d \)th dimension, and \( t \) denotes the current generation. The inertia weight \( w \), which is linearly reduced during the search, controls how much the previous velocity influences the new velocity [13]. In the usual form of this linear schedule, \( w \) is updated by

\[ w = w_{\max} - \left( w_{\max} - w_{\min} \right) \frac{t}{T} \quad (3) \]

where \( w_{\max} \) and \( w_{\min} \) are the initial and final inertia weights and \( T \) is the maximum number of generations.

\( c_{1} \) and \( c_{2} \) are positive acceleration coefficients, normally set to 2, and \( r_{1} \) and \( r_{2} \) are random values in the range [0, 1]. The best previous position of the \( i \)th particle is called the personal best particle (\( P_{best} \)). The best of all the \( P_{best} \) is called the global best particle (\( G_{best} \)) and denotes the best previous position of the swarm. \( p_{id} \) represents \( P_{best} \), while \( p_{gd} \) represents \( G_{best} \).
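The update rule described in this section can be sketched in Python as follows. This is a minimal illustration, not the authors' MATLAB code: the function names, the defaults 0.9 and 0.4 for the inertia weight bounds, and the clamping of positions to \( [l_{d}, u_{d}] \) are assumptions made here.

```python
import random


def inertia_weight(t, t_max, w_max=0.9, w_min=0.4):
    """Linearly decreasing inertia weight (0.9 and 0.4 are assumed defaults)."""
    return w_max - (w_max - w_min) * t / t_max


def spso_step(x, v, p_best, g_best, w, lower, upper, c1=2.0, c2=2.0, rng=random):
    """Apply one velocity and position update to a single particle.

    All vector arguments are lists with one entry per dimension.
    """
    new_x, new_v = [], []
    for d in range(len(x)):
        r1, r2 = rng.random(), rng.random()
        # Inertia term + cognitive term (own best) + social term (swarm best).
        vd = w * v[d] + c1 * r1 * (p_best[d] - x[d]) + c2 * r2 * (g_best[d] - x[d])
        # Clamping to the search bounds is an assumed boundary rule.
        xd = min(max(x[d] + vd, lower[d]), upper[d])
        new_v.append(vd)
        new_x.append(xd)
    return new_x, new_v
```

A particle that already sits on both its personal best and the global best with zero velocity does not move, which is a quick sanity check for the three terms.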

3 Group Discussion Mechanism Based Particle Swarm Optimization

Group discussion means that all members of a group take an active part in discussing a specific theme. It involves face-to-face communication, team spirit, a sense of responsibility and mutual benefit. Each group member should reflect on their own learning and thinking, and then seriously consider the views of others. When every member holds on to correct opinions and corrects mistaken ones, the group is more likely to arrive at a better idea than before.

In general, a group discussion in a classroom involves a teacher and a number of students. The teacher randomly divides the students into several groups, and each group contains some smaller teams that discuss a specific problem. The structure of a class is shown in Fig. 1. A team may consist of two classmates who are seated at the same desk. During the discussion, the teacher’s tasks are to evaluate everyone’s ideas and to control the discussion time. To increase the diversity of ideas, it is better to enlarge the scope of a team after the desk-mates have discussed with each other many times. Hence the teacher lets students discuss with those seated at the desks before and after their own, so that a new team has six members in total. The team transformation is shown in Fig. 2.

After the teacher’s evaluation, the student with the best idea in each group is ordinarily chosen as the group leader. Each group leader then makes a presentation to the class. Considering the selfishness of human beings, we suppose that all group leaders discuss with each other without the participation of other group members.

Incorporating the aforementioned idea into PSO, we designed a new topology and proposed group discussion mechanism based particle swarm optimization (GDPSO). The procedure of GDPSO is described as follows:

- Step 1: Generate n particles randomly and evaluate each particle with the fitness function.

- Step 2: Separate the n particles into m groups. For the sake of fairness, every group contains the same number of particles.

- Step 3: Update the velocities and positions using Eqs. (1) and (2).

- Step 4: Calculate the fitness values of the updated particles.

- Step 5: In each group, select the particle with the best fitness value as the group leader.

- Step 6: Let the m group leaders learn from each other, then evaluate them again. If a leader’s new fitness value is not better than before, it keeps its previous position.
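Steps 1 to 6 can be sketched as a short Python loop. This is one possible reading of the procedure under stated assumptions, not the authors' implementation: the `sphere` objective is a placeholder, the smaller teams inside each group are omitted (each particle learns from the best personal best in its group), leaders learn from the best leader only, the inertia weight falls linearly from 0.9 to 0.4, and positions are clamped to the bounds.

```python
import random


def sphere(x):
    """Placeholder objective to minimise; not one of the paper's benchmarks."""
    return sum(xi * xi for xi in x)


def move(x, v, p_best, exemplar, w, lo, hi, rng, c1=2.0, c2=2.0):
    """Velocity and position update with `exemplar` as the social attractor."""
    new_x, new_v = [], []
    for d in range(len(x)):
        vd = (w * v[d] + c1 * rng.random() * (p_best[d] - x[d])
              + c2 * rng.random() * (exemplar[d] - x[d]))
        new_v.append(vd)
        new_x.append(min(max(x[d] + vd, lo), hi))  # clamping is an assumption
    return new_x, new_v


def gdpso(f, dim, n=20, m=4, iters=100, lo=-5.0, hi=5.0, seed=0):
    rng = random.Random(seed)
    # Step 1: random initial swarm, evaluated once.
    xs = [[rng.uniform(lo, hi) for _ in range(dim)] for _ in range(n)]
    vs = [[0.0] * dim for _ in range(n)]
    pbest = [x[:] for x in xs]
    pfit = [f(x) for x in xs]
    # Step 2: m groups of equal size (n is assumed divisible by m).
    size = n // m
    groups = [list(range(g * size, (g + 1) * size)) for g in range(m)]
    for t in range(iters):
        w = 0.9 - 0.5 * t / iters  # assumed linear schedule
        leaders = []
        for group in groups:
            # Local exemplar: best personal best in the group (teams omitted).
            exemplar = pbest[min(group, key=lambda i: pfit[i])][:]
            for i in group:
                # Step 3: update velocity and position.
                xs[i], vs[i] = move(xs[i], vs[i], pbest[i], exemplar, w, lo, hi, rng)
                # Step 4: evaluate the updated particle.
                fit = f(xs[i])
                if fit < pfit[i]:
                    pbest[i], pfit[i] = xs[i][:], fit
            # Step 5: the group leader has the best fitness in its group.
            leaders.append(min(group, key=lambda i: pfit[i]))
        # Step 6: leaders learn from the best leader; a move is kept only
        # if it improves the leader's fitness.
        top = min(leaders, key=lambda i: pfit[i])
        for i in leaders:
            if i == top:
                continue
            cand, cand_v = move(pbest[i], vs[i], pbest[i], pbest[top], w, lo, hi, rng)
            fit = f(cand)
            if fit < pfit[i]:
                xs[i], vs[i] = cand, cand_v
                pbest[i], pfit[i] = cand[:], fit
    best = min(range(n), key=lambda i: pfit[i])
    return pbest[best], pfit[best]
```

Calling `gdpso(sphere, dim=2, seed=1)` returns the best position found and its fitness. Because personal bests are only ever replaced by better ones, the result is never worse than the best particle of the initial swarm.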

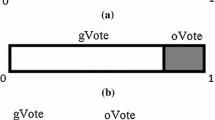

In order to ensure that every particle has the same number of neighbors, we design a topology as shown in Fig. 3. The pseudo-code of GDPSO is given in Table 1.
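The team structure inside one group can be illustrated with a small helper. The layout below is an assumption made for illustration, since it is inferred from the classroom description rather than taken from Fig. 3: particles 2k and 2k+1 share desk k, and the desks of a group are arranged in a ring so that every desk has a desk before and after it. The name `team_members` is hypothetical.

```python
def team_members(index, group_size, enlarged):
    """Return the indices (within one group) of the team containing `index`.

    Assumed layout: particles 2k and 2k+1 share desk k, and desks form a
    ring, so the desk "before" the first desk is the last one.
    """
    if group_size % 2 != 0:
        raise ValueError("group_size must be even so every desk holds two particles")
    desks = group_size // 2
    desk = index // 2
    if enlarged:
        # Desk-mates plus the desks before and after: six members
        # whenever the group has at least three desks.
        chosen = [(desk - 1) % desks, desk, (desk + 1) % desks]
    else:
        # Initial team: the two desk-mates only.
        chosen = [desk]
    members = []
    for k in chosen:
        for i in (2 * k, 2 * k + 1):
            if i not in members:
                members.append(i)
    return members
```

For a group of ten particles, `team_members(0, 10, False)` returns `[0, 1]` and `team_members(0, 10, True)` returns `[8, 9, 0, 1, 2, 3]`. With the ring arrangement, every particle ends up with the same number of teammates, which matches the stated design goal.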

4 Simulation Experiments and Analysis

To measure the performance of GDPSO, experiments were conducted to compare it with three other PSOs on the four benchmark functions listed in Table 2. These three PSOs are the standard PSO, PSO-Ring [5] and PSO-Square [6]. All four algorithms were coded in MATLAB, and the experiments were run on a machine with a 2.27 GHz Intel Core i5 processor and 2.93 GB of RAM, running Windows 7. Each experiment was repeated 20 times.
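For reference, the four benchmark functions named in this section (Easom in two dimensions, Himmelblau, Michalewicz in ten dimensions, and Rosenbrock) have widely used textbook definitions, sketched below in Python. The search domains and dimensions the authors actually used are those of Table 2 and are not assumed here. The Michalewicz steepness value of 10 is the conventional default, taken as an assumption.

```python
import math


def easom(x):
    """Easom function (2-D); global minimum -1 at (pi, pi)."""
    x1, x2 = x
    return (-math.cos(x1) * math.cos(x2)
            * math.exp(-((x1 - math.pi) ** 2 + (x2 - math.pi) ** 2)))


def himmelblau(x):
    """Himmelblau function (2-D); four global minima of 0, one at (3, 2)."""
    x1, x2 = x
    return (x1 ** 2 + x2 - 11) ** 2 + (x1 + x2 ** 2 - 7) ** 2


def michalewicz(x, steepness=10):
    """Michalewicz function for any dimension; steepness 10 is conventional."""
    return -sum(
        math.sin(xi) * math.sin((i + 1) * xi ** 2 / math.pi) ** (2 * steepness)
        for i, xi in enumerate(x)
    )


def rosenbrock(x):
    """Rosenbrock function; global minimum 0 at (1, ..., 1)."""
    return sum(
        100.0 * (x[i + 1] - x[i] ** 2) ** 2 + (1.0 - x[i]) ** 2
        for i in range(len(x) - 1)
    )
```

Each function takes a list of coordinates and returns a value to be minimised, so any of them can be passed directly to a PSO routine as the fitness function.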

4.1 Parameter Settings

The parameter settings are listed in Table 3.

4.2 Experimental Results

The results of the experiments are presented in Table 4, with the best results shown in bold. Figure 4 shows the convergence curves of the average best fitness. As the table and figure show, GDPSO does not outperform the other PSOs on every function. In terms of the minimum value, GDPSO finds the best solution on three benchmark functions, namely Easom2D, Himmelblau and Michalewicz10, all of which are multimodal. On Rosenbrock, the standard deviation of GDPSO is better than that of the other algorithms. The curves for these three benchmarks indicate that the proposed improvement is effective on some optimization problems.
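The statistics discussed here (minimum, mean and standard deviation of the best fitness over repeated runs) can be computed with a few lines of Python. This is a sketch only: the helper name `summarize_runs` is hypothetical, and the use of the sample standard deviation rather than the population one is an assumption, since the text does not say which was reported.

```python
import statistics


def summarize_runs(best_values):
    """Summarise the best fitness values collected from independent runs."""
    return {
        "min": min(best_values),
        "mean": statistics.mean(best_values),
        # Sample standard deviation (n - 1 in the denominator); an assumption.
        "std": statistics.stdev(best_values),
    }
```

With 20 runs per algorithm and function, `best_values` would hold 20 numbers, one final best fitness per run.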

5 Conclusion

In this paper, we proposed an improved PSO based on group discussion behavior in class, which offers a new way to adjust the balance between local search and global search. GDPSO divides a swarm into several groups for local search, and the particles with the best fitness value in each group are selected to learn from each other for global search. Moreover, GDPSO uses a dynamic topology instead of the static topology of SPSO. Tested on four benchmark functions, GDPSO performs better than the other PSOs on three of them. Although GDPSO does not perform best all the time, we believe that it has the potential to solve other kinds of optimization problems.

However, testing GDPSO on only four benchmark problems is not enough. Hence experiments on a larger set of benchmark functions must be carried out in the future. In addition, we will focus on applying the new topology to other swarm intelligence algorithms where possible. We are also beginning to apply the proposed GDPSO algorithm to further applications, such as portfolio optimization, to verify its effectiveness in solving real-world problems.

References

Eberhart, R.C., Kennedy, J.: Particle swarm optimization. In: IEEE International Conference on Neural Networks, Perth, Australia (1995)

Eberhart, R.C., Kennedy, J.: A new optimizer using particle swarm theory. In: Proceedings of the 6th International Symposium on Micromachine and Human Science, Nagoya, Japan, pp. 39–43 (1995)

Clerc, M., Kennedy, J.: The particle swarm: explosion, stability, and convergence in multidimensional complex space. IEEE Trans. Evol. Comput. 6, 58–73 (2002)

Clerc, M.: The swarm and the queen: towards a deterministic and adaptive particle swarm optimization. In: Proceedings of the 1999 Congress on Evolutionary Computation, pp. 1927–1930 (1999)

Suganthan, P.N.: Particle swarm optimizer with neighborhood operator. In: Proceedings of the IEEE Congress of Evolutionary Computation, pp. 1958–1961 (1999)

Kennedy, J.: Small worlds and mega-minds: effects of neighborhood topology on particle swarm performance. In: Proceedings of the 1999 Congress on Evolutionary Computation, Washington, DC, pp. 1931–1938 (1999)

Mendes, R., Kennedy, J., Neves, J.: The fully informed particle swarm: simpler, maybe better. IEEE Trans. Evol. Comput. 8, 204–210 (2004)

Jiang, B., Wang, N., Wang, L.: Particle swarm optimization with age-group topology for multimodal functions and data clustering. Commun. Nonlinear Sci. Numer. Simul. 18, 3134–3145 (2013)

Wei, H.L., Isa, N.A.M.: Particle swarm optimization with increasing topology connectivity. Eng. Appl. Artif. Intell. 27, 80–102 (2014)

Angeline, P.J.: Using selection to improve particle swarm optimization. In: Proceedings of IEEE World Congress on Computational Intelligence, Anchorage, Alaska, pp. 84–89 (1998)

Li, L.L., Wang, L., Liu, L.H.: An effective hybrid PSOSA strategy for optimization and its application to parameter estimation. Appl. Math. Comput. 179, 135–146 (2006)

Kennedy, J., Mendes, R.: Population structure and particle swarm performance. In: Proceedings of the 2002 Congress on Evolutionary Computation, pp. 1671–1676 (2002)

Shi, Y., Eberhart, R.: A modified particle swarm optimizer. In: Proceedings of IEEE World Congress on Computational Intelligence Evolutionary Computation (1998)

Acknowledgments

This work is partially supported by The National Natural Science Foundation of China (Grants Nos. 71571120, 71001072, 71271140, 71471158, 71501132, 2016A030310067) and the Natural Science Foundation of Guangdong Province (Grant no. 2016A030310074).

© 2016 Springer International Publishing Switzerland

About this paper

Cite this paper

Tan, L.J., Liu, J., Yi, W.J. (2016). Group Discussion Mechanism Based Particle Swarm Optimization. In: Huang, DS., Han, K., Hussain, A. (eds) Intelligent Computing Methodologies. ICIC 2016. Lecture Notes in Computer Science(), vol 9773. Springer, Cham. https://doi.org/10.1007/978-3-319-42297-8_9

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-42296-1

Online ISBN: 978-3-319-42297-8

eBook Packages: Computer Science, Computer Science (R0)