Abstract

We point out that the ideas underlying some test procedures recently proposed for testing post-model-selection (and for some other test problems) in the econometrics literature have been around for quite some time in the statistics literature. We also sharpen some of these results in the statistics literature. Furthermore, we show that some intuitively appealing testing procedures, that have found their way into the econometrics literature, lead to tests that do not have desirable size properties, not even asymptotically.

1 Introduction

Suppose we have a sequence of statistical experiments given by a family of probability measures \(\left \{P_{n,\alpha,\beta }:\alpha \in A,\beta \in B\right \}\), where \(\alpha\) is a “parameter of interest” and \(\beta\) is a “nuisance parameter”. Often, but not always, \(A\) and \(B\) will be subsets of Euclidean space. Suppose the researcher wants to test the null hypothesis \(H_{0}:\alpha =\alpha _{0}\) using the real-valued test-statistic \(T_{n}(\alpha _{0})\), with large values of \(T_{n}(\alpha _{0})\) being taken as indicative of a violation of \(H_{0}\) (see Note 1). Suppose further that the distribution of \(T_{n}(\alpha _{0})\) under \(H_{0}\) depends on the nuisance parameter \(\beta\). This leads to the key question: how should the critical value then be chosen? [Of course, if another, pivotal, test-statistic is available, this one could be used. However, we consider here the case where a (non-trivial) pivotal test-statistic either does not exist or where the researcher, for better or worse, insists on using \(T_{n}(\alpha _{0})\).] In this situation a standard way (see, e.g., [3, p. 170]) to deal with this problem is to choose as critical value

\[c_{n,\sup }(\delta ) =\sup _{\beta \in B}c_{n,\beta }(\delta ), \tag{1}\]

where \(0 <\delta < 1\) and where \(c_{n,\beta }(\delta )\) satisfies \(P_{n,\alpha _{0},\beta }\left (T_{n}(\alpha _{0}) > c_{n,\beta }(\delta )\right ) =\delta\) for each \(\beta \in B\), i.e., \(c_{n,\beta }(\delta )\) is a \((1-\delta )\)-quantile of the distribution of \(T_{n}(\alpha _{0})\) under \(P_{n,\alpha _{0},\beta }\). [We assume here the existence of such a \(c_{n,\beta }(\delta )\), but we do not insist that it is chosen as the smallest possible number satisfying the above condition, although this will usually be the case.] In other words, \(c_{n,\sup }(\delta )\) is the “worst-case” critical value. While the resulting test, which rejects \(H_{0}\) for

\[T_{n}(\alpha _{0}) > c_{n,\sup }(\delta ), \tag{2}\]

certainly is a level \(\delta\) test (i.e., has size \(\leq \delta\)), the conservatism caused by taking the supremum in (1) will often result in poor power properties, especially for values of \(\beta\) for which \(c_{n,\beta }(\delta )\) is much smaller than \(c_{n,\sup }(\delta )\). The test obtained from (1) and (2) above (more precisely, an asymptotic variant thereof) is what Andrews and Guggenberger [1] call a “size-corrected fixed critical value” test (see Note 2).

An alternative idea, which has some intuitive appeal and which is much less conservative, is to use \(c_{n,\hat{\beta }_{n}}(\delta )\) as a random critical value, where \(\hat{\beta }_{n}\) is an estimator for \(\beta\) (taking its values in \(B\)), and to reject \(H_{0}\) if

\[T_{n}(\alpha _{0}) > c_{n,\hat{\beta }_{n}}(\delta ) \tag{3}\]

obtains (measurability of \(c_{n,\hat{\beta }_{n}}(\delta )\) being assumed). This choice of critical value can be viewed as a parametric bootstrap procedure. Versions of \(c_{n,\hat{\beta }_{n}}(\delta )\) have been considered by Williams [14] or, more recently, by Liu [9]. However,

clearly holds for every β, indicating that the test using the random critical value \(c_{n,\hat{\beta }_{n}}(\delta )\) may not be a level δ test, but may have size larger than δ. This was already noted by Loh [8]. A precise result in this direction, which is a variation of Theorem 2.1 in [8], is as follows:

Proposition 1

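Suppose that there exists a \(\beta _{n}^{\max } =\beta _{n}^{\max }(\delta )\) such that \(c_{n,\beta _{n}^{\max }}(\delta ) = c_{n,\sup }(\delta )\). Then

\[P_{n,\alpha _{0},\beta _{n}^{\max }}\left (c_{n,\hat{\beta }_{n}}(\delta ) < T_{n}(\alpha _{0}) \leq c_{n,\sup }(\delta )\right ) > 0 \tag{4}\]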

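implies

\[\sup _{\beta \in B}P_{n,\alpha _{0},\beta }\left (T_{n}(\alpha _{0}) > c_{n,\hat{\beta }_{n}}(\delta )\right ) >\delta , \tag{5}\]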

i.e., the test using the random critical value \(c_{n,\hat{\beta }_{n}}(\delta )\) does not have level δ. More generally, if \(\hat{c}_{n}\) is any random critical value satisfying \(\hat{c}_{n} \leq c_{n,\beta _{n}^{\max }}(\delta )(= c_{n,\sup }(\delta ))\) with \(P_{n,\alpha _{0},\beta _{n}^{\max }}\) -probability 1, then ( 4 ) still implies ( 5 ) if in both expressions \(c_{n,\hat{\beta }_{n}}(\delta )\) is replaced by \(\hat{c}_{n}\) . [The result continues to hold if the random critical value \(\hat{c}_{n}\) also depends on some additional randomization mechanism.]

Proof

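Observe that \(c_{n,\hat{\beta }_{n}}(\delta ) \leq c_{n,\sup }(\delta )\) always holds. But then the l.h.s. of (5) is bounded from below by

\[P_{n,\alpha _{0},\beta _{n}^{\max }}\left (T_{n}(\alpha _{0}) > c_{n,\hat{\beta }_{n}}(\delta )\right ) = P_{n,\alpha _{0},\beta _{n}^{\max }}\left (T_{n}(\alpha _{0}) > c_{n,\sup }(\delta )\right ) + P_{n,\alpha _{0},\beta _{n}^{\max }}\left (c_{n,\hat{\beta }_{n}}(\delta ) < T_{n}(\alpha _{0}) \leq c_{n,\sup }(\delta )\right ) =\delta +P_{n,\alpha _{0},\beta _{n}^{\max }}\left (c_{n,\hat{\beta }_{n}}(\delta ) < T_{n}(\alpha _{0}) \leq c_{n,\sup }(\delta )\right ) >\delta ,\]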

the last inequality holding in view of (4). The proof for the second claim is completely analogous. ■

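To better appreciate condition (4) consider the case where \(c_{n,\beta }(\delta )\) is uniquely maximized at \(\beta _{n}^{\max }\) and \(P_{n,\alpha _{0},\beta _{n}^{\max }}(\hat{\beta }_{n}\neq \beta _{n}^{\max })\) is positive. Then

\[P_{n,\alpha _{0},\beta _{n}^{\max }}\left (c_{n,\hat{\beta }_{n}}(\delta ) < c_{n,\sup }(\delta )\right ) > 0\]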

holds and therefore we can expect condition (4) to be satisfied, unless there exists a quite strange dependence structure between \(\hat{\beta }_{n}\) and \(T_{n}(\alpha _{0})\). The same argument applies in the more general situation where there are multiple maximizers \(\beta _{n}^{\max }\) of \(c_{n,\beta }(\delta )\) as soon as \(P_{n,\alpha _{0},\beta _{n}^{\max }}(\hat{\beta }_{n}\notin \arg \max c_{n,\beta }(\delta )) > 0\) holds for one of the maximizers \(\beta _{n}^{\max }\).

In the same vein, it is also useful to note that Condition (4) can equivalently be stated as follows: The conditional cumulative distribution function \(P_{n,\alpha _{0},\beta _{n}^{\max }}(T_{n}(\alpha _{0}) \leq \cdot \mid \hat{\beta }_{n})\) of T n (α 0) given \(\hat{\beta }_{n}\) puts positive mass on the interval \((c_{n,\hat{\beta }_{n}}(\delta ),c_{n,\sup }(\delta )]\) for a set of \(\hat{\beta }_{n}\)’s that has positive probability under \(P_{n,\alpha _{0},\beta _{n}^{\max }}\). [Also note that Condition (4) implies that \(c_{n,\hat{\beta }_{n}}(\delta ) < c_{n,\sup }(\delta )\) must hold with positive \(P_{n,\alpha _{0},\beta _{n}^{\max }}\)-probability.] A sufficient condition for this then clearly is that for a set of \(\hat{\beta }_{n}\)’s of positive \(P_{n,\alpha _{0},\beta _{n}^{\max }}\)-probability we have that (i) \(c_{n,\hat{\beta }_{n}}(\delta ) < c_{n,\sup }(\delta )\), and (ii) the conditional cumulative distribution function \(P_{n,\alpha _{0},\beta _{n}^{\max }}(T_{n}(\alpha _{0}) \leq \cdot \mid \hat{\beta }_{n})\) puts positive mass on every non-empty interval. The analogous result holds for the case where \(\hat{c}_{n}\) replaces \(c_{n,\hat{\beta }_{n}}(\delta )\) (and conditioning is w.r.t. \(\hat{c}_{n}\)), see Lemma 5 in the Appendix for a formal statement.

The observation that the test (3) based on the random critical value \(c_{n,\hat{\beta }_{n}}(\delta )\) typically will not be a level \(\delta\) test has led Loh [8] and subsequently Berger and Boos [2] and Silvapulle [13] to consider the following procedure (or variants thereof), which leads to a level \(\delta\) test that is somewhat less “conservative” than the test given by (2) (see Note 3): Let \(I_{n}\) be a random set in \(B\) satisfying

\[\inf _{\beta \in B}P_{n,\alpha _{0},\beta }\left (\beta \in I_{n}\right ) \geq 1 -\eta _{n},\]

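where \(0 \leq \eta _{n} <\delta\). That is, \(I_{n}\) is a confidence set for the nuisance parameter \(\beta\) with infimal coverage probability not less than \(1 -\eta _{n}\) (provided \(\alpha =\alpha _{0}\)). Define a random critical value via

\[c_{n,\eta _{n},\mathrm{Loh}}(\delta ) =\sup _{\beta \in I_{n}}c_{n,\beta }(\delta -\eta _{n}). \tag{6}\]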

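Then we have

\[\sup _{\beta \in B}P_{n,\alpha _{0},\beta }\left (T_{n}(\alpha _{0}) > c_{n,\eta _{n},\mathrm{Loh}}(\delta )\right ) \leq \delta .\]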

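This can be seen as follows: For every \(\beta \in B\)

\[P_{n,\alpha _{0},\beta }\left (T_{n}(\alpha _{0}) > c_{n,\eta _{n},\mathrm{Loh}}(\delta )\right ) \leq P_{n,\alpha _{0},\beta }\left (T_{n}(\alpha _{0}) > c_{n,\eta _{n},\mathrm{Loh}}(\delta ),\ \beta \in I_{n}\right ) + P_{n,\alpha _{0},\beta }\left (\beta \notin I_{n}\right ) \leq P_{n,\alpha _{0},\beta }\left (T_{n}(\alpha _{0}) > c_{n,\beta }(\delta -\eta _{n})\right ) +\eta _{n} = (\delta -\eta _{n}) +\eta _{n} =\delta .\]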

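Hence, the random critical value \(c_{n,\eta _{n},\mathrm{Loh}}(\delta )\) results in a test that is guaranteed to be level \(\delta\). In fact, its size can also be lower bounded by \(\delta -\eta _{n}\) provided there exists a \(\beta _{n}^{\max }(\delta -\eta _{n})\) satisfying \(c_{n,\beta _{n}^{\max }(\delta -\eta _{n})}(\delta -\eta _{n}) =\sup _{\beta \in B}c_{n,\beta }(\delta -\eta _{n})\): This follows since

\[\sup _{\beta \in B}P_{n,\alpha _{0},\beta }\left (T_{n}(\alpha _{0}) > c_{n,\eta _{n},\mathrm{Loh}}(\delta )\right ) \geq \sup _{\beta \in B}P_{n,\alpha _{0},\beta }\left (T_{n}(\alpha _{0}) >\sup _{\beta ' \in B}c_{n,\beta '}(\delta -\eta _{n})\right ) \geq P_{n,\alpha _{0},\beta _{n}^{\max }(\delta -\eta _{n})}\left (T_{n}(\alpha _{0}) > c_{n,\beta _{n}^{\max }(\delta -\eta _{n})}(\delta -\eta _{n})\right ) =\delta -\eta _{n}. \tag{7}\]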

The critical value (6) (or asymptotic variants thereof) has also been used in econometrics, e.g., by DiTraglia [4], McCloskey [10, 11], and Romano et al. [12].

The test based on the random critical value \(c_{n,\eta _{n},\mathrm{Loh}}(\delta )\) may have size strictly smaller than \(\delta\). This suggests that this test will not improve over the conservative test based on \(c_{n,\sup }(\delta )\) for all values of \(\beta\): We can expect that the test based on (6) will sacrifice some power when compared with the conservative test (2) when the true \(\beta\) is close to \(\beta _{n}^{\max }(\delta )\) or \(\beta _{n}^{\max }(\delta -\eta _{n})\); however, we can often expect a power gain for values of \(\beta\) that are “far away” from \(\beta _{n}^{\max }(\delta )\) and \(\beta _{n}^{\max }(\delta -\eta _{n})\), as we then typically will have that \(c_{n,\eta _{n},\mathrm{Loh}}(\delta )\) is smaller than \(c_{n,\sup }(\delta )\). Hence, each of the two tests will typically have a power advantage over the other in certain parts of the parameter space \(B\).

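It is thus tempting to try to construct a test that has the power advantages of both these tests by choosing as a critical value the smaller one of the two critical values, i.e., by choosing

\[\hat{c}_{n,\eta _{n},\min }(\delta ) =\min \left (c_{n,\sup }(\delta ),c_{n,\eta _{n},\mathrm{Loh}}(\delta )\right ) \tag{8}\]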

as the critical value. While both critical values \(c_{n,\sup }(\delta )\) and \(c_{n,\eta _{n},\mathrm{Loh}}(\delta )\) lead to level \(\delta\) tests, this is unfortunately not the case in general for the test based on the random critical value (8). To see why, note that by construction the critical value (8) satisfies

\[\hat{c}_{n,\eta _{n},\min }(\delta ) \leq c_{n,\sup }(\delta ),\]

and hence can be expected to fall under the wrath of Proposition 1 given above. Thus it can be expected not to deliver a test that has level \(\delta\), but one whose size exceeds \(\delta\). So while the test based on the random critical value proposed in (8) will typically reject more often than the tests based on (2) or on (6), it does so by violating the size constraint. Hence it suffers from the same problems as the parametric bootstrap test (3). [We make the trivial observation that the lower bound (7) also holds if \(\hat{c}_{n,\eta _{n},\min }(\delta )\) instead of \(c_{n,\eta _{n},\mathrm{Loh}}(\delta )\) is used, since \(\hat{c}_{n,\eta _{n},\min }(\delta ) \leq c_{n,\eta _{n},\mathrm{Loh}}(\delta )\) holds.] As a point of interest we note that the construction (8) has actually been suggested in the literature, see McCloskey [10] and Note 4. In fact, McCloskey [10] suggested a random critical value \(\hat{c}_{n,\mathrm{McC}}(\delta )\) which is the minimum of critical values of the form (8) with \(\eta _{n}\) running through a finite set of values; it is thus less than or equal to the individual \(\hat{c}_{n,\eta _{n},\min }\)’s, which exacerbates the size distortion problem even further.

While Proposition 1 shows that tests based on random critical values like \(c_{n,\hat{\beta }_{n}}(\delta )\) or \(\hat{c}_{n,\eta _{n},\min }(\delta )\) will typically not have level \(\delta\), it leaves open the possibility that the overshoot of the size over \(\delta\) may converge to zero as sample size goes to infinity, implying that the test would then be at least asymptotically of level \(\delta\). In sufficiently “regular” testing problems this will indeed be the case. However, for many testing problems where nuisance parameters are present, such as testing post-model-selection, it turns out that this is typically not the case: In the next section we illustrate this by providing a prototypical example where the overshoot does not converge to zero for the tests based on \(c_{n,\hat{\beta }_{n}}(\delta )\) or \(\hat{c}_{n,\eta _{n},\min }(\delta )\), and hence these tests are not level \(\delta\) even asymptotically.

2 An Illustrative Example

In the following we shall, for the sake of exposition, use a very simple example to illustrate the issues involved. Consider the linear regression model

\[y_{t} =\alpha x_{t1} +\beta x_{t2} +\varepsilon _{t}\qquad (t = 1,\ldots,n) \tag{9}\]

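under the “textbook” assumptions that the errors \(\varepsilon _{t}\) are i.i.d. \(N(0,\sigma ^{2})\), \(\sigma ^{2} > 0\), and the nonstochastic \(n \times 2\) regressor matrix \(X\) has full rank (implying \(n > 1\)) and satisfies \(X'X/n \rightarrow Q > 0\) as \(n \rightarrow \infty\). The variables \(y_{t}\), \(x_{ti}\), as well as the errors \(\varepsilon _{t}\) can be allowed to depend on sample size \(n\) (in fact may be defined on a sample space that itself depends on \(n\)), but we do not show this in the notation. For simplicity, we shall also assume that the error variance \(\sigma ^{2}\) is known and equals 1. It will be convenient to write the matrix \((X'X/n)^{-1}\) as

\[(X'X/n)^{-1} = \begin{pmatrix} \sigma _{\alpha,n}^{2} & \sigma _{\alpha,\beta,n} \\ \sigma _{\alpha,\beta,n} & \sigma _{\beta,n}^{2} \end{pmatrix}.\]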

The elements of the limit of this matrix will be denoted by \(\sigma _{\alpha,\infty }^{2}\), etc. It will prove useful to define \(\rho _{n} =\sigma _{\alpha,\beta,n}/(\sigma _{\alpha,n}\sigma _{\beta,n})\), i.e., \(\rho _{n}\) is the correlation coefficient between the least-squares estimators for \(\alpha\) and \(\beta\) in model (9). Its limit will be denoted by \(\rho _{\infty }\). Note that \(\left \vert \rho _{\infty }\right \vert < 1\) holds, since \(Q > 0\) has been assumed.

As in [7] we shall consider two candidate models from which we select on the basis of the data: The unrestricted model denoted by \(U\), which uses both regressors \(x_{t1}\) and \(x_{t2}\), and the restricted model denoted by \(R\), which uses only the regressor \(x_{t1}\) (and thus corresponds to imposing the restriction \(\beta = 0\)). The least-squares estimators for \(\alpha\) and \(\beta\) in the unrestricted model will be denoted by \(\hat{\alpha }_{n}(U)\) and \(\hat{\beta }_{n}(U)\), respectively. The least-squares estimator for \(\alpha\) in the restricted model will be denoted by \(\hat{\alpha }_{n}(R)\), and we shall set \(\hat{\beta }_{n}(R) = 0\). We shall decide between the competing models \(U\) and \(R\) depending on whether \(\vert \sqrt{n}\hat{\beta }_{n}(U)/\sigma _{\beta,n}\vert > c\) or not, where \(c > 0\) is a user-specified cut-off point independent of sample size (in line with the fact that we consider conservative model selection). That is, we select the model \(\hat{M}_{n}\) according to

\[\hat{M}_{n} = \begin{cases} U &\text{if } \vert \sqrt{n}\hat{\beta }_{n}(U)/\sigma _{\beta,n}\vert > c, \\ R &\text{otherwise.} \end{cases}\]

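We now want to test the hypothesis \(H_{0}:\alpha =\alpha _{0}\) versus \(H_{1}:\alpha >\alpha _{0}\) and we insist, for better or worse, on using the test-statistic

\[T_{n}(\alpha _{0}) = \begin{cases} n^{1/2}\left (\hat{\alpha }_{n}(R) -\alpha _{0}\right )/\left (\sigma _{\alpha,n}(1 -\rho _{n}^{2})^{1/2}\right ) &\text{if } \hat{M}_{n} = R, \\ n^{1/2}\left (\hat{\alpha }_{n}(U) -\alpha _{0}\right )/\sigma _{\alpha,n} &\text{if } \hat{M}_{n} = U. \end{cases}\]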

That is, depending on which of the two models has been selected, we insist on using the corresponding textbook test-statistic (for the known-variance case). While this could perhaps be criticized as somewhat simple-minded, it describes how such a test may be conducted in practice when model selection precedes the inference step. It is well known that if one uses this test-statistic and naively compares it to the usual normal-based quantiles acting as if the selected model were given a priori, this results in a test with severe size distortions, see, e.g., [5] and the references therein. Hence, while sticking with T n (α 0) as the test-statistic, we now look for appropriate critical values in the spirit of the preceding section and discuss some of the proposals from the literature. Note that the situation just described fits into the framework of the preceding section with β as the nuisance parameter and \(B = \mathbb{R}\).

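Calculations similar to the ones in [7] show that the finite-sample distribution of \(T_{n}(\alpha _{0})\) under \(H_{0}\) has a density that is given by

\[h_{n,\beta }(u) = \Delta \left (n^{1/2}\beta /\sigma _{\beta,n},c\right )\phi \left (u +\rho _{n}(1 -\rho _{n}^{2})^{-1/2}n^{1/2}\beta /\sigma _{\beta,n}\right ) + \left (1 - \Delta \left (\frac{n^{1/2}\beta /\sigma _{\beta,n} +\rho _{n}u} {(1 -\rho _{n}^{2})^{1/2}}, \frac{c} {(1 -\rho _{n}^{2})^{1/2}}\right )\right )\phi (u),\]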

where \(\Delta (a,b) = \Phi (a + b) - \Phi (a - b)\) and where ϕ and \(\Phi \) denote the density and cdf, respectively, of a standard normal variate. Let H n, β denote the cumulative distribution function (cdf) corresponding to h n, β .
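As a numerical sanity check, the following Python sketch evaluates a density of this mixture form and verifies that it integrates to one. The formula coded here is an assumption: it is the two-component form that follows from the representation of \(T_{n}(\alpha _{0})\) used in the proof of Lemma 7, written in terms of \(\gamma = n^{1/2}\beta /\sigma _{\beta,n}\). The function names and the values of \(\rho\) and \(c\) are illustrative choices, not taken from the paper.

```python
import math
from statistics import NormalDist

STD = NormalDist()  # standard normal: STD.pdf is phi, STD.cdf is Phi


def delta_fn(a: float, b: float) -> float:
    """Delta(a, b) = Phi(a + b) - Phi(a - b), as defined in the text."""
    return STD.cdf(a + b) - STD.cdf(a - b)


def density(u: float, gamma: float, rho: float, c: float) -> float:
    """Assumed null density of T_n(alpha_0), gamma = sqrt(n) * beta / sigma_beta."""
    s = math.sqrt(1.0 - rho * rho)
    # Restricted model selected: a shifted standard normal, weighted by the
    # probability Delta(gamma, c) of selecting the restricted model.
    restricted = delta_fn(gamma, c) * STD.pdf(u + rho * gamma / s)
    # Unrestricted model selected: standard normal density times the
    # conditional probability of selecting U given that T equals u.
    unrestricted = (1.0 - delta_fn((gamma + rho * u) / s, c / s)) * STD.pdf(u)
    return restricted + unrestricted


def integrate(f, lo: float, hi: float, steps: int = 4000) -> float:
    """Composite trapezoidal rule on [lo, hi]."""
    h = (hi - lo) / steps
    total = 0.5 * (f(lo) + f(hi))
    for i in range(1, steps):
        total += f(lo + i * h)
    return total * h


if __name__ == "__main__":
    rho, c = 0.7, 2.0  # illustrative values only
    for gamma in (-3.0, 0.0, 3.0):
        mass = integrate(lambda u: density(u, gamma, rho, c), -12.0, 12.0)
        print(f"gamma = {gamma:+.1f}: total mass = {mass:.6f}")
```

For \(\rho = 0\) the two components add up to \(\phi (u)\) for every \(\gamma\), in line with Remark 9.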

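Now, for given significance level \(\delta\), \(0 <\delta < 1\), let \(c_{n,\beta }(\delta ) = H_{n,\beta }^{-1}(1-\delta )\) as in the preceding section. Note that the inverse function exists, since \(H_{n,\beta }\) is continuous and is strictly increasing as its density \(h_{n,\beta }\) is positive everywhere. As in the preceding section let

\[c_{n,\sup }(\delta ) =\sup _{\beta \in \mathbb{R}}c_{n,\beta }(\delta )\]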

denote the conservative critical value (the supremum is actually a maximum in the interesting case \(\delta \leq 1/2\) in view of Lemmata 6 and 7 in the Appendix). Let \(c_{n,\hat{\beta }_{n}(U)}(\delta )\) be the parametric bootstrap-based random critical value. With \(\eta\) satisfying \(0 <\eta <\delta\), we also consider the random critical value

\[c_{n,\eta,\mathrm{Loh}}(\delta ) =\sup _{\beta \in I_{n}}c_{n,\beta }(\delta -\eta ),\]

where

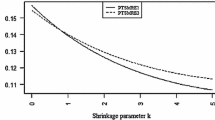

is an 1 −η confidence interval for β. [Again the supremum is actually a maximum.] We choose here η independent of n as in [4, 10, 11] and comment on sample size dependent η below. Furthermore define

Recall from the discussion in Sect. 1 that these critical values have been used in the literature in the contexts of testing post-model-selection, post-moment-selection, or post-model-averaging. Among the critical values c n, sup(δ), \(c_{n,\hat{\beta }_{n}(U)}(\delta )\), c n, η, Loh(δ), and \(\hat{c}_{n,\eta,\min }(\delta )\), we already know that c n, sup(δ) and c n, η, Loh(δ) lead to tests that are valid level δ tests. We next confirm—as suggested by the discussion in the preceding section—that the random critical values \(c_{n,\hat{\beta }_{n}(U)}(\delta )\) and \(\hat{c}_{n,\eta,\min }(\delta )\) (at least for some choices of η) do not lead to tests that have level δ (i.e., their size is strictly larger than δ). Moreover, we also show that the sizes of the tests based on \(c_{n,\hat{\beta }_{n}(U)}(\delta )\) or \(\hat{c}_{n,\eta,\min }(\delta )\) do not converge to δ as n → ∞, implying that the asymptotic sizes of these tests exceed δ. These results a fortiori also apply to any random critical value that does not exceed \(c_{n,\hat{\beta }_{n}(U)}(\delta )\) or \(\hat{c}_{n,\eta,\min }(\delta )\) (such as \(\hat{c}_{n,\mathrm{McC}}(\delta )\) in [10] or \(c_{n,\eta,\mathrm{Loh}^{{\ast}}}(\delta )\)). In the subsequent theorem we consider for simplicity only the case ρ n ≡ ρ, but the result extends to the more general case where ρ n may depend on n.
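To make the four critical values concrete, here is a minimal Python sketch that computes them in the parameterization \(\gamma = n^{1/2}\beta /\sigma _{\beta,n}\) for a given value of \(\hat{\gamma }(U)\). Several ingredients are assumptions rather than the authors' code: the cdf is computed as \(\Phi (q) -\Pr (A) +\Pr (B)\) with the events \(A\) and \(B\) from the proof of Lemma 7, the confidence interval is taken to be the symmetric normal interval, the supremum over \(\mathbb{R}\) is approximated by a maximum over a finite grid, and \(\rho = 0.7\), \(c = 2\) are illustrative values.

```python
import math
from statistics import NormalDist

STD = NormalDist()


def cdf_T(q, gamma, rho, c, panels=80):
    """Assumed null cdf of T_n(alpha_0): Phi(q) - P(A) + P(B)."""
    s = math.sqrt(1.0 - rho * rho)
    lo, hi = -gamma - c, -gamma + c  # band |Z + gamma| <= c
    h = (hi - lo) / panels
    acc = 0.0
    for i in range(panels + 1):  # Simpson's rule for P(A)
        z = lo + i * h
        weight = 1 if i in (0, panels) else (4 if i % 2 else 2)
        acc += weight * STD.pdf(z) * STD.cdf((q - rho * z) / s)
    p_a = acc * h / 3.0
    p_b = (STD.cdf(hi) - STD.cdf(lo)) * STD.cdf(q + rho * gamma / s)
    return STD.cdf(q) - p_a + p_b


def quantile(level, gamma, rho, c):
    """(1 - level)-quantile of T_n(alpha_0) under gamma, by bisection."""
    lo, hi = -15.0, 15.0
    for _ in range(40):
        mid = 0.5 * (lo + hi)
        if cdf_T(mid, gamma, rho, c) < 1.0 - level:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


def grid(lo, hi, n):
    return [lo + (hi - lo) * i / (n - 1) for i in range(n)]


def critical_values(gamma_hat, delta, eta, rho, c):
    """Grid approximations of the four critical values discussed above."""
    # Worst case over the nuisance parameter (finite grid instead of R).
    c_sup = max(quantile(delta, g, rho, c) for g in grid(-8.0, 8.0, 65))
    # Parametric bootstrap: plug in the estimate of gamma.
    c_boot = quantile(delta, gamma_hat, rho, c)
    # Loh-type value: worst case over a 1 - eta interval at level delta - eta.
    half = STD.inv_cdf(1.0 - eta / 2.0)
    interval = grid(gamma_hat - half, gamma_hat + half, 21)
    c_loh = max(quantile(delta - eta, g, rho, c) for g in interval)
    return {"sup": c_sup, "bootstrap": c_boot, "loh": c_loh,
            "min": min(c_sup, c_loh)}


if __name__ == "__main__":
    for gamma_hat in (-3.0, 10.0):
        print(gamma_hat, critical_values(gamma_hat, 0.05, 0.01, 0.7, 2.0))
```

When \(\hat{\gamma }(U)\) is far from the origin, the Loh-type value is close to the standard normal quantile at level \(\delta -\eta\), whereas the conservative value does not depend on the data at all.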

Theorem 2

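Suppose \(\rho _{n} \equiv \rho \neq 0\) and let \(0 <\delta \leq 1/2\) be arbitrary. Then

\[\inf _{n>1}\sup _{\beta \in \mathbb{R}}P_{n,\alpha _{0},\beta }\left (T_{n}(\alpha _{0}) > c_{n,\hat{\beta }_{n}(U)}(\delta )\right ) >\delta . \tag{13}\]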

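Furthermore, for each fixed \(\eta\), \(0 <\eta <\delta\), that is sufficiently small we have

\[\inf _{n>1}\sup _{\beta \in \mathbb{R}}P_{n,\alpha _{0},\beta }\left (T_{n}(\alpha _{0}) >\hat{c}_{n,\eta,\min }(\delta )\right ) >\delta . \tag{14}\]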

Proof

We first prove (14). Introduce the abbreviation \(\gamma = n^{1/2}\beta /\sigma _{\beta,n}\) and define \(\hat{\gamma }(U) = n^{1/2}\hat{\beta }(U)/\sigma _{\beta,n}\). Observe that the density \(h_{n,\beta }\) (and hence the cdf \(H_{n,\beta }\)) depends on the nuisance parameter \(\beta\) only via \(\gamma\), and otherwise is independent of sample size \(n\) (since \(\rho _{n} =\rho\) is assumed). Let \(\bar{h}_{\gamma }\) be the density of \(T_{n}(\alpha _{0})\) when expressed in the reparameterization \(\gamma\). As a consequence, the quantiles satisfy \(c_{n,\beta }(v) =\bar{ c}_{\gamma }(v)\) for every \(0 < v < 1\), where \(\bar{c}_{\gamma }(v) =\bar{ H}_{\gamma }^{-1}(1 - v)\) and \(\bar{H}_{\gamma }\) denotes the cdf corresponding to \(\bar{h}_{\gamma }\). Furthermore, for \(0 <\eta <\delta\), observe that \(c_{n,\eta,\mathrm{Loh}}(\delta ) =\sup _{\beta \in I_{n}}c_{n,\beta }(\delta -\eta )\) can be rewritten as

Now define \(\gamma ^{\max } =\gamma ^{\max }(\delta )\) as a value of \(\gamma\) such that \(\bar{c}_{\gamma ^{\max }}(\delta ) =\bar{ c}_{\sup }(\delta ):=\sup _{\gamma \in \mathbb{R}}\bar{c}_{\gamma }(\delta )\). That such a maximizer exists follows from Lemmata 6 and 7 in the Appendix. Note that \(\gamma ^{\max }\) does not depend on \(n\). Of course, \(\gamma ^{\max }\) is related to \(\beta _{n}^{\max } =\beta _{n}^{\max }(\delta )\) via \(\gamma ^{\max } = n^{1/2}\beta _{n}^{\max }/\sigma _{\beta,n}\). Since \(\bar{c}_{\sup }(\delta ) =\bar{ c}_{\gamma ^{\max }}(\delta )\) is strictly larger than

in view of Lemmata 6 and 7 in the Appendix, we have for all sufficiently small η, 0 < η < δ, that

Fix such an η. Let now ɛ > 0 satisfy \(\varepsilon <\bar{ c}_{\sup }(\delta ) - \Phi ^{-1}(1 - (\delta -\eta ))\). Because of the limit relation in the preceding display, we see that there exists M = M(ɛ) > 0 such that for \(\left \vert \gamma \right \vert > M\) we have \(\bar{c}_{\gamma }(\delta -\eta ) <\bar{ c}_{\sup }(\delta )-\varepsilon\). Define the set

Then on the event \(\left \{\hat{\gamma }(U) \in A\right \}\) we have that \(\hat{c}_{n,\eta,\min }(\delta ) \leq \bar{ c}_{\sup }(\delta )-\varepsilon\). Furthermore, noting that \(P_{n,\alpha _{0},\beta _{n}^{\max }}\left (T_{n}(\alpha _{0}) > c_{n,\sup }(\delta )\right ) = P_{n,\alpha _{0},\beta _{n}^{\max }}\left (T_{n}(\alpha _{0}) >\bar{ c}_{\sup }(\delta )\right ) =\delta\), we have

We are hence done if we can show that the probability in the last line is positive and independent of \(n\). But this probability can be written as follows (see Note 5):

where we have made use of independence of \(\hat{\alpha }(R)\) and \(\hat{\gamma }(U)\), cf. Lemma A.1 in [6], and of the fact that \(n^{1/2}\left (\hat{\alpha }(R) -\alpha _{0}\right )\) is distributed as \(N(-\sigma _{\alpha,n}\rho \gamma ^{\max },\sigma _{\alpha,n}^{2}\left (1 -\rho ^{2}\right ))\) under \(P_{n,\alpha _{0},\beta _{n}^{\max }}\). Furthermore, we have used the fact that \(\left (n^{1/2}\left (\hat{\alpha }(U) -\alpha _{0}\right )/\sigma _{\alpha,n},\right.\) \(\left.\hat{\gamma }(U)\right )^{{\prime}}\) is under \(P_{n,\alpha _{0},\beta _{n}^{\max }}\) distributed as \(\left (Z_{1},Z_{2}\right )^{{\prime}}\) where

which is a non-singular normal distribution, since \(\left \vert \rho \right \vert < 1\). It is now obvious from the final expression in the last but one display that the probability in question is strictly positive and is independent of n. This proves (14).

We turn to the proof of (13). Observe that \(c_{n,\hat{\beta }_{n}(U)}(\delta ) =\bar{ c}_{\hat{\gamma }(U)}(\delta )\) and that

in view of Lemmata 6 and 7 in the Appendix. Choose ɛ > 0 to satisfy \(\varepsilon <\bar{ c}_{\sup }(\delta ) - \Phi ^{-1}(1-\delta )\). Because of the limit relation in the preceding display, we see that there exists M = M(ɛ) > 0 such that for \(\left \vert \gamma \right \vert > M\) we have \(\bar{c}_{\gamma }(\delta ) <\bar{ c}_{\sup }(\delta )-\varepsilon\). Define the set

Then on the event \(\left \{\hat{\gamma }(U) \in B\right \}\) we have that \(c_{n,\hat{\beta }_{n}(U)}(\delta ) =\bar{ c}_{\hat{\gamma }(U)}(\delta ) \leq \bar{ c}_{\sup }(\delta )-\varepsilon\). The rest of the proof is then completely analogous to the proof of (14) with the set A replaced by B. ■
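The size overshoot in (13) can also be seen in a small Monte Carlo experiment. The Python sketch below estimates the null rejection probability of the parametric bootstrap test at one fixed value of \(\gamma\), which is a lower bound for the size. It is only an illustration under stated assumptions: the cdf is the form \(\Phi (q) -\Pr (A) +\Pr (B)\) from the proof of Lemma 7, the bootstrap critical value is obtained by linear interpolation in a precomputed table, and the values \(\gamma = -2\), \(\rho = 0.7\), \(c = 2\), \(\delta = 0.05\), the seed, and all function names are hypothetical choices.

```python
import math
import random
from statistics import NormalDist

STD = NormalDist()


def cdf_T(q, gamma, rho, c, panels=80):
    """Assumed null cdf of T_n(alpha_0): Phi(q) - P(A) + P(B)."""
    s = math.sqrt(1.0 - rho * rho)
    lo, hi = -gamma - c, -gamma + c
    h = (hi - lo) / panels
    acc = 0.0
    for i in range(panels + 1):  # Simpson's rule for P(A)
        z = lo + i * h
        weight = 1 if i in (0, panels) else (4 if i % 2 else 2)
        acc += weight * STD.pdf(z) * STD.cdf((q - rho * z) / s)
    p_b = (STD.cdf(hi) - STD.cdf(lo)) * STD.cdf(q + rho * gamma / s)
    return STD.cdf(q) - acc * h / 3.0 + p_b


def quantile(level, gamma, rho, c):
    """(1 - level)-quantile under gamma, by bisection."""
    lo, hi = -15.0, 15.0
    for _ in range(40):
        mid = 0.5 * (lo + hi)
        if cdf_T(mid, gamma, rho, c) < 1.0 - level:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


def bootstrap_rejection_rate(gamma0, delta, rho, c, draws=20000, seed=0):
    """Monte Carlo estimate of P(T > c_bar_{gamma_hat}(delta)) under gamma0."""
    s = math.sqrt(1.0 - rho * rho)
    lo, hi, n = -8.0, 8.0, 65
    step = (hi - lo) / (n - 1)
    # Table of bootstrap critical values on a grid of gamma values.
    table = [quantile(delta, lo + i * step, rho, c) for i in range(n)]
    rng = random.Random(seed)
    rejections = 0
    for _ in range(draws):
        z, w = rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0)
        gamma_hat = gamma0 + z
        if abs(gamma_hat) > c:  # unrestricted model selected
            t = s * w + rho * z
        else:                   # restricted model selected
            t = w - rho * gamma0 / s
        # Linear interpolation, clamped to the ends of the grid.
        pos = min(max((gamma_hat - lo) / step, 0.0), n - 1.0)
        i = min(int(pos), n - 2)
        frac = pos - i
        crit = (1.0 - frac) * table[i] + frac * table[i + 1]
        if t > crit:
            rejections += 1
    return rejections / draws


if __name__ == "__main__":
    rate = bootstrap_rejection_rate(-2.0, 0.05, 0.7, 2.0)
    print(f"estimated rejection probability at gamma = -2: {rate:.3f}")
```

Because the rejection probability does not depend on \(n\) once it is expressed in terms of \(\gamma\), an estimate above \(\delta\) at a single \(\gamma\) already indicates that the overshoot does not vanish asymptotically.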

Remark 3

(i) Inspection of the proof shows that (14) holds for every \(\eta\), \(0 <\eta <\delta\), that satisfies (15).

(ii) It is not difficult to show that the suprema in (13) and (14) actually do not depend on \(n\).

Remark 4

If we allow η to depend on n, we may choose η = η n → 0 as n → ∞. Then the test based on \(\hat{c}_{n,\eta _{n},\min }(\delta )\) still has a size that strictly overshoots δ for every n, but the overshoot will go to zero as n → ∞. While this test then “approaches” the conservative test that uses c n, sup(δ), it does not respect the level for any finite-sample size. [The same can be said for Loh’s [8] original proposal \(c_{n,\eta _{n},\mathrm{Loh}^{{\ast}}}(\delta )\), cf. footnote 3.] Contrast this with the test based on \(c_{n,\eta _{n},\mathrm{Loh}}(\delta )\) which holds the level for each n, and also “approaches” the conservative test if η n → 0. Hence, there seems to be little reason for preferring \(\hat{c}_{n,\eta _{n},\min }(\delta )\) (or \(c_{n,\eta _{n},\mathrm{Loh}^{{\ast}}}(\delta )\)) to \(c_{n,\eta _{n},\mathrm{Loh}}(\delta )\) in this scenario where η n → 0.

Notes

1. This framework obviously allows for “one-sided” as well as for “two-sided” alternatives (when these concepts make sense) by a proper definition of the test-statistic.

2. While Andrews and Guggenberger [1] do not consider a finite-sample framework but rather a “moving-parameter” asymptotic framework, the underlying idea is nevertheless exactly the same.

3. Loh [8] actually considers the random critical value \(c_{n,\eta _{n},\mathrm{Loh}^{{\ast}}}(\delta )\) given by \(\sup _{\beta \in I_{n}}c_{n,\beta }(\delta )\), which typically does not lead to a level \(\delta\) test in finite samples in view of Proposition 1 (since \(c_{n,\eta _{n},\mathrm{Loh}^{{\ast}}}(\delta ) \leq c_{n,\sup }(\delta )\)). However, Loh [8] focuses on the case where \(\eta _{n} \rightarrow 0\) and shows that then the size of the test converges to \(\delta\); that is, the test is asymptotically level \(\delta\) if \(\eta _{n} \rightarrow 0\). See also Remark 4.

4. This construction is no longer suggested in [11].

5. The corresponding calculation in previous versions of this paper had erroneously omitted the term \(\rho \left (1 -\rho ^{2}\right )^{-1/2}\gamma ^{\max }\) from the expression on the far right-hand side of the subsequent display. This is corrected here by accounting for this term. Alternatively, one could drop the probability involving \(\left \vert \hat{\gamma }(U)\right \vert \leq c\) altogether from the proof and work with the resulting lower bound.

References

1. Andrews, D.W.K., Guggenberger, P.: Hybrid and size-corrected subsampling methods. Econometrica 77, 721–762 (2009)

2. Berger, R.L., Boos, D.D.: P values maximized over a confidence set for the nuisance parameter. J. Am. Stat. Assoc. 89, 1012–1016 (1994)

3. Bickel, P.J., Doksum, K.A.: Mathematical Statistics: Basic Ideas and Selected Topics. Holden-Day, Oakland (1977)

4. DiTraglia, F.J.: Using invalid instruments on purpose: focused moment selection and averaging for GMM. Working Paper, Version November 9, 2011 (2011)

5. Kabaila, P., Leeb, H.: On the large-sample minimal coverage probability of confidence intervals after model selection. J. Am. Stat. Assoc. 101, 619–629 (2006)

6. Leeb, H., Pötscher, B.M.: The finite-sample distribution of post-model-selection estimators and uniform versus non-uniform approximations. Economet. Theor. 19, 100–142 (2003)

7. Leeb, H., Pötscher, B.M.: Model selection and inference: facts and fiction. Economet. Theor. 21, 29–59 (2005)

8. Loh, W.-Y.: A new method for testing separate families of hypotheses. J. Am. Stat. Assoc. 80, 362–368 (1985)

9. Liu, C.-A.: A plug-in averaging estimator for regressions with heteroskedastic errors. Working Paper, Version October 29, 2011 (2011)

10. McCloskey, A.: Powerful procedures with correct size for test statistics with limit distributions that are discontinuous in some parameters. Working Paper, Version October 2011 (2011)

11. McCloskey, A.: Bonferroni-based size correction for nonstandard testing problems. Working Paper, Brown University (2012)

12. Romano, J.P., Shaikh, A., Wolf, M.: A practical two-step method for testing moment inequalities. Econometrica 82, 1979–2002 (2014)

13. Silvapulle, M.J.: A test in the presence of nuisance parameters. J. Am. Stat. Assoc. 91, 1690–1693 (1996) (Correction, ibidem 92 (1997) 801)

14. Williams, D.A.: Discrimination between regression models to determine the pattern of enzyme synthesis in synchronous cell cultures. Biometrics 26, 23–32 (1970)

Appendix

Lemma 5

Suppose a random variable \(\hat{c}_{n}\) satisfies \(\Pr \left (\hat{c}_{n} \leq c^{{\ast}}\right ) = 1\) for some real number \(c^{{\ast}}\) as well as \(\Pr \left (\hat{c}_{n} < c^{{\ast}}\right ) > 0\). Let \(S\) be a real-valued random variable. If for every non-empty interval \(J\) in the real line

holds almost surely, then

The same conclusion holds if in (16) the conditioning variable \(\hat{c}_{n}\) is replaced by some variable w n , say, provided that \(\hat{c}_{n}\) is a measurable function of w n.

Proof

Clearly

the last equality being true, since the first term in the product is zero on the event \(\hat{c}_{n} = c^{{\ast}}\). Now note that the first factor in the expectation on the far right-hand side of the above equality is positive almost surely by (16) on the event \(\left \{\hat{c}_{n} < c^{{\ast}}\right \}\), and that the event \(\left \{\hat{c}_{n} < c^{{\ast}}\right \}\) has positive probability by assumption. ■

Recall that \(\bar{c}_{\gamma }(v)\) has been defined in the proof of Theorem 2.

Lemma 6

Assume ρ n ≡ρ ≠ 0. Suppose 0 < v < 1. Then the map \(\gamma \rightarrow \bar{ c}_{\gamma }(v)\) is continuous on \(\mathbb{R}\) . Furthermore, \(\lim _{\gamma \rightarrow \infty }\bar{c}_{\gamma }(v) =\lim _{\gamma \rightarrow -\infty }\bar{c}_{\gamma }(v) = \Phi ^{-1}(1 - v)\).

Proof

If γ l → γ, then \(\bar{h}_{\gamma _{l}}\) converges to \(\bar{h}_{\gamma }\) pointwise on \(\mathbb{R}\). By Scheffé’s Lemma, \(\bar{H}_{\gamma _{l}}\) then converges to \(\bar{H}_{\gamma }\) in total variation distance. Since \(\bar{H}_{\gamma }\) is strictly increasing on \(\mathbb{R}\), convergence of the quantiles \(\bar{c}_{\gamma _{l}}(v)\) to \(\bar{c}_{\gamma }(v)\) follows. The second claim follows by the same argument observing that \(\bar{h}_{\gamma }\) converges pointwise to a standard normal density for γ → ±∞. ■

Lemma 7

Assume ρ n ≡ρ ≠ 0.

(i) Suppose \(0 < v \leq 1/2\). Then for some \(\gamma \in \mathbb{R}\) we have that \(\bar{c}_{\gamma }(v)\) is larger than \(\Phi ^{-1}(1 - v)\).

(ii) Suppose \(1/2 \leq v < 1\). Then for some \(\gamma \in \mathbb{R}\) we have that \(\bar{c}_{\gamma }(v)\) is smaller than \(\Phi ^{-1}(1 - v)\).

Proof

Standard regression theory gives

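with \(\hat{\alpha }_{n}(R)\) and \(\hat{\beta }_{n}(U)\) being independent; for the latter cf., e.g., [6], Lemma A.1. Consequently, it is easy to see that the distribution of \(T_{n}(\alpha _{0})\) under \(P_{n,\alpha _{0},\beta }\) is the same as the distribution of

\[T' = T'(\rho,\gamma ) = \begin{cases} \sqrt{1 -\rho ^{2}}\,W +\rho Z &\text{if } \vert Z+\gamma \vert > c, \\ W -\rho \gamma /\sqrt{1 -\rho ^{2}} &\text{if } \vert Z+\gamma \vert \leq c, \end{cases}\]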

where, as before, \(\gamma = n^{1/2}\beta /\sigma _{\beta,n}\), and where \(W\) and \(Z\) are independent standard normal random variables.

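We now prove (i): Let \(q\) be shorthand for \(\Phi ^{-1}(1 - v)\) and note that \(q \geq 0\) holds by the assumption on \(v\). It suffices to show that \(\Pr \left (T^{{\prime}}\leq q\right ) < \Phi (q)\) for some \(\gamma\). We can now write

\[\Pr \left (T' \leq q\right ) = \Phi (q) -\Pr (A) +\Pr (B),\qquad A = \left \{\sqrt{1 -\rho ^{2}}\,W +\rho Z \leq q,\ \vert Z+\gamma \vert \leq c\right \},\quad B = \left \{W \leq q +\rho \gamma /\sqrt{1 -\rho ^{2}},\ \vert Z+\gamma \vert \leq c\right \}.\]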

Here, A and B are the events given in terms of W and Z. Picturing these two events as subsets of the plane (with the horizontal axis corresponding to Z and the vertical axis corresponding to W), we see that A corresponds to the vertical band where | Z +γ | ≤ c, truncated above the line where \(W = (q -\rho Z)/\sqrt{1 -\rho ^{2}}\); similarly, B corresponds to the same vertical band | Z +γ | ≤ c, truncated now above the horizontal line where \(W = q +\rho \gamma /\sqrt{1 -\rho ^{2}}\).

We first consider the case where ρ > 0 and distinguish two cases:

Case 1: \(\rho c \leq \left (1 -\sqrt{1 -\rho ^{2}}\right )q\).

In this case the set B is contained in A for every value of γ, with A∖B being a set of positive Lebesgue measure. Consequently, \(\Pr (A) >\Pr (B)\) holds for every γ, proving the claim.

Case 2: \(\rho c > \left (1 -\sqrt{1 -\rho ^{2}}\right )q\).

In this case choose γ so that −γ − c ≥ 0, and, in addition, such that also \((q -\rho (-\gamma - c))/\sqrt{1 -\rho ^{2}} < 0\), which is clearly possible. Recalling that ρ > 0, note that the point where the line \(W = (q -\rho Z)/\sqrt{1 -\rho ^{2}}\) intersects the horizontal line \(W = q +\rho \gamma /\sqrt{1 -\rho ^{2}}\) has as its first coordinate \(Z = -\gamma + (q/\rho )(1 -\sqrt{1 -\rho ^{2}})\), implying that the intersection occurs in the right half of the band where | Z +γ | ≤ c. As a consequence, \(\Pr (B) -\Pr (A)\) can be written as follows:

where

and

Picturing A∖B and B∖A as subsets of the plane as in the preceding paragraph, we see that these events correspond to two triangles, where the triangle corresponding to A∖B is larger than or equal (in Lebesgue measure) to that corresponding to B∖A. Since γ was chosen to satisfy −γ − c ≥ 0 and \((q -\rho (-\gamma - c))/\sqrt{1 -\rho ^{2}} < 0\), we see that each point in the triangle corresponding to A∖B is closer to the origin than any point in the triangle corresponding to B∖A. Because the joint Lebesgue density of (Z, W), i.e., the bivariate standard Gaussian density, is spherically symmetric and radially monotone, it follows that \(\Pr (B\setminus A) -\Pr (A\setminus B) < 0\), as required.

The case ρ < 0 follows because T ′(ρ, γ) has the same distribution as T ′(−ρ, −γ).

Part (ii) follows, since T ′(ρ, γ) has the same distribution as − T ′(−ρ, γ). ■
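The claim of part (i) is easy to check by simulation. The Python sketch below draws from the two-regime representation of \(T'\) in terms of independent standard normals \(W\) and \(Z\), as suggested by the events \(A\) and \(B\) in the proof (this explicit form is an assumption here), and compares the empirical value of \(\Pr (T' \leq q)\) with \(\Phi (q)\). The values \(\rho = 0.7\), \(c = 2\), \(\gamma = -2\), the seed, and the function names are illustrative choices.

```python
import math
import random
from statistics import NormalDist


def simulate_t_prime(gamma, rho, c, draws=20000, seed=1):
    """Draw from the assumed representation T'(rho, gamma)."""
    s = math.sqrt(1.0 - rho * rho)
    rng = random.Random(seed)
    sample = []
    for _ in range(draws):
        w = rng.gauss(0.0, 1.0)
        z = rng.gauss(0.0, 1.0)
        if abs(z + gamma) > c:
            # Unrestricted model selected.
            sample.append(s * w + rho * z)
        else:
            # Restricted model selected: shifted by the omitted-variable bias.
            sample.append(w - rho * gamma / s)
    return sample


def empirical_cdf(sample, q):
    """Fraction of draws not exceeding q."""
    return sum(1 for t in sample if t <= q) / len(sample)


if __name__ == "__main__":
    v = 0.05
    q = NormalDist().inv_cdf(1.0 - v)  # Phi^{-1}(1 - v)
    sample = simulate_t_prime(gamma=-2.0, rho=0.7, c=2.0)
    print(f"empirical P(T' <= q) = {empirical_cdf(sample, q):.3f}, "
          f"Phi(q) = {1.0 - v:.3f}")
```

An empirical value clearly below \(\Phi (q) = 1 - v\) means that the \((1-v)\)-quantile of \(T'\) at this \(\gamma\) exceeds \(\Phi ^{-1}(1 - v)\), which is the statement of part (i).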

Remark 8

If ρ n ≡ ρ ≠ 0 and v = 1∕2, then \(\bar{c}_{0}(1/2) = \Phi ^{-1}(1/2) = 0\), since \(\bar{h}_{0}\) is symmetric about zero.

Remark 9

If ρ n ≡ ρ = 0, then T n (α 0) is standard normally distributed for every value of β, and hence \(\bar{c}_{\gamma }(v) = \Phi ^{-1}(1 - v)\) holds for every γ and v.

© 2017 Springer International Publishing AG

About this chapter

Cite this chapter

Leeb, H., Pötscher, B.M. (2017). Testing in the Presence of Nuisance Parameters: Some Comments on Tests Post-Model-Selection and Random Critical Values. In: Ahmed, S. (eds) Big and Complex Data Analysis. Contributions to Statistics. Springer, Cham. https://doi.org/10.1007/978-3-319-41573-4_4

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-41572-7

Online ISBN: 978-3-319-41573-4

eBook Packages: Mathematics and Statistics (R0)