Abstract

The quickest change detection/isolation (multidecision) problem is of importance for a variety of applications. Efficient statistical decision tools are needed for detecting and isolating abrupt changes in the properties of stochastic signals and dynamical systems, ranging from on-line fault diagnosis in complex technical systems (like networks) to detection/classification in radar, infrared, and sonar signal processing.

1 Introduction

The quickest change detection/isolation (multidecision) problem is of importance for a variety of applications. Efficient statistical decision tools are needed for detecting and isolating abrupt changes in the properties of stochastic signals and dynamical systems, ranging from on-line fault diagnosis in complex technical systems (like networks) to detection/classification in radar, infrared, and sonar signal processing. Early on-line fault diagnosis (detection/isolation) in industrial processes (SCADA systems) helps prevent these processes from suffering more catastrophic failures.

The quickest multidecision detection/isolation problem is the generalization of the quickest changepoint detection problem to the case of \(K-1\) post-change hypotheses. It is necessary to detect the change in distribution as soon as possible and to indicate which hypothesis is true after the change occurs. Both the rate of false alarms and the misidentification (misisolation) rate must be kept within prescribed levels.

2 Problem Statement

Let \(X_1, X_2, \ldots \) denote the series of observations, and let \(\nu \) be the serial number of the last pre-change observation. In the case of multiple hypotheses, there are several possible post-change hypotheses \(\mathcal{H}_j\), \(j=1,2,\ldots ,K-1\). Let \(\mathbb {P}_k^j\) and \(\mathbb {E}_k^j\) denote the probability measure and the expectation when \(\nu =k\) and \(\mathcal{H}_j\) is the true post-change hypothesis, and let \(\mathbb {P}_\infty \) and \(\mathbb {E}_\infty =\mathbb {E}_0^0\) denote the same when \(\nu =\infty \), i.e., there is no change. Let (see [1] for details)

where T is the stopping time, d is the final decision (the index of the accepted post-change hypothesis) and the event \(\{d_r=j\}\) denotes the first false alarm of the j-th type, be the class of detection and isolation procedures for which the average run length (ARL) to false alarm and false isolation is at least \(\gamma >1\). In the case of detection–isolation procedures, the risk associated with the detection delay is defined analogously to Lorden’s worst-worst-case and it is given by [1]

Hence, the minimax optimization problem seeks to

where \({\mathbb {C}}_\gamma \) is the class of detection and isolation procedures with the lower bound \(\gamma \) on the ARL to false alarm and false isolation defined in (1).

Another minimax approach to change detection and isolation is as follows [2, 3]; unlike the definition of the class \({\mathbb {C}}_\gamma \) in (1), where we fixed a priori the changepoint \(\nu =0\) in the definition of false isolation to simplify theoretical analysis, the false isolation rate is now expressed by the maximal probability of false isolation \(\sup _{\nu \ge 0}{\mathbb {P}}^{\ell }_{\nu }(d = j \ne \ell | T > \nu )\). As usual, we measure the level of false alarms by the ARL to false alarm \(\mathbb {E}_{\infty } T\). Hence, define the class

Sometimes Lorden’s worst-worst-case ADD is too conservative, especially for recursive change detection and isolation procedures, and another measure of the detection speed, namely the maximal conditional average delay to detection \({\mathsf {SADD}}(T)=\sup _\nu \mathbb {E}_{\nu }(T-\nu |T>\nu )\), is better suited for practical purposes. In the case of change detection and isolation, the SADD is given by

We require \({\mathsf {SADD}}(\delta )\) to be as small as possible subject to the constraints on the ARL to false alarm and the maximum probability of false isolation. Therefore, this version of the minimax optimization problem seeks to

A detailed description of the developed theory and some practical examples can be found in the recently published book [4].

3 Efficient Procedures of Quickest Change Detection/Isolation

Asymptotic Theory. In this paragraph we recall a lower bound for the worst mean detection/isolation delay over the class \({\mathbb {C}}_\gamma \) of sequential change detection/isolation tests proposed in [1]. First, we start with a technical result on sequential multiple hypotheses tests and then we give an asymptotic lower bound for \({\mathsf {ESADD}}(\delta )\).

Lemma 1

Let \((X_k)_{k\ge 1}\) be a sequence of i.i.d. random variables. Let \(\mathcal{H}_0,\ldots ,\mathcal{H}_{K-1}\) be \(K \ge 2\) hypotheses, where \(\mathcal{H}_i\) is the hypothesis that X has density \(f_i\) with respect to some probability measure \(\mu \), for \(i=0,\ldots ,K-1\) and assume the inequality

to be true.

Let \(\mathbb {E}_i(N)\) be the average sample number (ASN) in a sequential test \((N,\delta )\) which chooses one of the K hypotheses subject to a \(K \times K\) error matrix \(A=\left[ a_{ij}\right] \), where \(a_{ij}=\mathbb {P}_i(\textit{accepting}\;\;\mathcal{H}_j),\;i,j= 0,\ldots ,K-1\).

Let us reparameterize the matrix A in the following manner:

Then a lower bound for the ASN \(\mathbb {E}_i(N)\) is given by the following formula:

for \(i=1,\ldots ,K-1\), where

Theorem 1

Let \((Y_k)_{k \ge 1}\) be an independent random sequence observed sequentially:

The distribution \(P_\ell \) has density \(f_\ell \), \(\ell =0,\ldots ,K-1\). An asymptotic lower bound for \({\mathsf {ESADD}}(\delta )\), which extends the result of Lorden [5] to the multiple-hypothesis case, is:

where

is the K-L information.

Generalized CUSUM Test. The generalized CUSUM (non-recursive) test asymptotically attains the above-mentioned lower bound [1]. Let us introduce the following stopping time and final decision

of the detection/isolation algorithm. The stopping time \(\tilde{N}^\ell \) is responsible for the detection of hypothesis \(\mathcal{H}_\ell \):

The generalized matrix recursive CUSUM test, which also attains the asymptotic lower bound, has been considered in [6, 7]. Let us introduce the following stopping time and final decision

of the detection/isolation algorithm. The stopping time \(\widehat{N}^\ell \) is responsible for the detection of hypothesis \(\mathcal{H}_\ell \):

For some safety-critical applications, a more tractable criterion consists in minimizing the maximum detection/isolation delay:

subject to:

for \(1 \le \ell ,j \ne \ell \le K-1\). An asymptotic lower bound in this case is given by the following theorem [3].

Theorem 2

Let \((Y_k)_{k \ge 1}\) be an independent random sequence observed sequentially:

Then

where \(\rho ^*_{\mathrm {d}}\! =\!\min _{1\! \le j \le K-1}\rho _{j,0}\) and \( \rho ^*_{\mathrm {i}} \!=\!\min _{1 \le \ell \le K-1}\min _{1 \le j \ne \ell \le K-1}\rho _{\ell ,j}\).
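The quantities \(\rho ^*_{\mathrm {d}}\) and \(\rho ^*_{\mathrm {i}}\) can be illustrated numerically. The following minimal sketch assumes Gaussian observations with a common covariance \(\sigma ^2 I\) and hypothesis-dependent means, for which the K-L information is \(\rho _{\ell ,j}=\Vert \theta _\ell -\theta _j\Vert ^2/(2\sigma ^2)\). The function names, the mean vectors and the first-order form \(\max \{\log \gamma /\rho ^*_{\mathrm {d}},\,\log \beta ^{-1}/\rho ^*_{\mathrm {i}}\}\) used for the bound are assumptions of this sketch; see [3] for the exact statement.

```python
import math

def kl_gaussian_mean_shift(theta_l, theta_j, sigma2=1.0):
    """K-L information between N(theta_l, sigma2*I) and N(theta_j, sigma2*I)."""
    return sum((a - b) ** 2 for a, b in zip(theta_l, theta_j)) / (2.0 * sigma2)

def rho_star(means, sigma2=1.0):
    """Return (rho_d, rho_i); means[0] is the pre-change mean, means[1:] the post-change means.

    Requires at least two post-change hypotheses (K >= 3) so that rho_i is defined.
    """
    K = len(means)
    rho = [[kl_gaussian_mean_shift(means[l], means[j], sigma2) for j in range(K)]
           for l in range(K)]
    rho_d = min(rho[j][0] for j in range(1, K))                     # detection
    rho_i = min(rho[l][j] for l in range(1, K)
                for j in range(1, K) if j != l)                     # isolation
    return rho_d, rho_i

def delay_lower_bound(gamma, beta, rho_d, rho_i):
    """Assumed first-order bound max(log(gamma)/rho_d, log(1/beta)/rho_i)."""
    return max(math.log(gamma) / rho_d, math.log(1.0 / beta) / rho_i)

# Hypothetical example: H_0 at the origin, two orthogonal unit mean shifts.
means = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0)]
rho_d, rho_i = rho_star(means)
bound = delay_lower_bound(gamma=1e4, beta=1e-2, rho_d=rho_d, rho_i=rho_i)
```

In this toy configuration the detection term dominates, since the two post-change hypotheses are farther from each other than either is from \(\mathcal{H}_0\).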

Vector Recursive CUSUM Test. If \(\gamma \rightarrow \infty \), \(\beta \rightarrow 0\) and \(\log \gamma \ge \log \beta ^{-1}(1+o(1))\), then the above-mentioned lower bound is attained by the following recursive change detection/isolation algorithm [2]:

where \({N}_r(\ell )\!=\!\inf \left\{ n \ge 1:\min _{0\le j \ne \ell \le K-1}\left[ S_n(\ell ,j)\!-\! h_{\ell ,j}\right] \! \ge \!0\right\} \),

with \(Z_n(\ell ,0)=\log \frac{f_{\ell }(Y_n)}{f_{0}(Y_n)}\), \({g}_0(\ell ,0)=0\) for every \(1 \le \ell \le K-1\) and \(g_n(0,0) \equiv 0\),

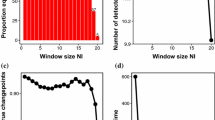
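The stopping rule \(N_r(\ell )\) can be sketched in a few lines for scalar Gaussian observations. The sketch below assumes the standard CUSUM recursion \(g_n(\ell ,0)=\max \{0,\,g_{n-1}(\ell ,0)+Z_n(\ell ,0)\}\), the statistic \(S_n(\ell ,j)=g_n(\ell ,0)-g_n(j,0)\), and thresholds \(h_{\ell ,j}=h_{\mathrm {d}}\) for \(j=0\) and \(h_{\ell ,j}=h_{\mathrm {i}}\) otherwise. These forms, the function name and the test data are assumptions of this illustration and should be checked against [2].

```python
def vector_cusum(observations, means, h_d, h_i, sigma2=1.0):
    """Sketch of a vector recursive CUSUM for scalar Gaussian mean changes.

    means[0] is the pre-change mean, means[1:] the post-change means.
    Returns (stopping time n, decision l), or (None, None) if no alarm is raised.
    """
    K = len(means)
    g = [0.0] * K                      # g[0] is never updated: g_n(0,0) == 0
    for n, y in enumerate(observations, start=1):
        for l in range(1, K):
            # Z_n(l,0) = log f_l(y) / f_0(y) for N(means[l], sigma2) vs N(means[0], sigma2)
            z = ((means[l] - means[0]) * y
                 - (means[l] ** 2 - means[0] ** 2) / 2.0) / sigma2
            g[l] = max(0.0, g[l] + z)  # assumed recursion for g_n(l,0)
        for l in range(1, K):
            # Stop when S_n(l,j) - h_{l,j} >= 0 for every j != l (j = 0 included).
            if all(g[l] - g[j] >= (h_d if j == 0 else h_i)
                   for j in range(K) if j != l):
                return n, l
    return None, None

# Hypothetical data: 20 pre-change samples at 0, then a shift to +2.
alarm = vector_cusum([0.0] * 20 + [2.0] * 10, [0.0, 2.0, -2.0], h_d=5.0, h_i=5.0)
```

With a positive isolation threshold at most one index \(\ell \) can satisfy the stopping condition at a given time, so the decision is unambiguous. Only \(K-1\) scalar statistics are stored, which is what makes the procedure recursive.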

4 Applications to Network Monitoring

In this section, the theoretical results above are illustrated by applying the proposed detection/isolation procedures to the problem of network monitoring.

Let us consider a network composed of r nodes and n mono-directional links, where \(y_\ell \) denotes the volume of traffic on the link \(\ell \) at discrete time k (see details in [8, 9]). For the sake of simplicity, the subscript k denoting the time is omitted now. Let \(x_{i,j}\) be the Origin-Destination (OD) traffic demand from node i to node j at time k. The traffic matrix \(X=\{x_{i,j}\}\) is reordered in the lexicographical order as a column vector \(X=\left[ (x_{(1)},\ldots ,x_{(m)})\right] ^T\), where \(m=r^2\) is the number of OD flows.

Let us define an \(n \times m\) routing matrix \(A=\left[ a_{\ell ,k}\right] \) where \(0 \le a_{\ell ,k} \le 1\) represents the fraction of OD flow k volume that is routed through link \(\ell \). This leads to the linear model

\[Y = A\,X,\]

where \(Y={(y_1,\ldots ,y_n)}^T\) is the vector of Simple Network Management Protocol (SNMP) measurements. Without loss of generality, the known matrix A is assumed to be of full row rank, i.e., \(\mathrm{rank}\,{A}=n\).
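As a small illustration of the link-load model, the sketch below assumes the form \(Y=AX\) implied by the definition of the routing matrix and uses a hypothetical toy network with \(n=2\) links and \(m=3\) OD flows. The function name and all numbers are invented for the example.

```python
def link_loads(routing, od_flows):
    """Return Y = A X for a routing matrix A (list of rows) and an OD vector X."""
    return [sum(a * x for a, x in zip(row, od_flows)) for row in routing]

# Hypothetical toy network: the middle OD flow is split evenly over both links.
A = [[1.0, 0.5, 0.0],
     [0.0, 0.5, 1.0]]
X = [10.0, 4.0, 6.0]
Y = link_loads(A, X)

# An extra volume eps on OD flow k shifts Y by eps times column k of A.
eps, k = 3.0, 1
X_anom = [x + (eps if i == k else 0.0) for i, x in enumerate(X)]
shift = [ya - y for ya, y in zip(link_loads(A, X_anom), Y)]
```

Because there are fewer links than OD flows, X cannot be recovered from Y alone. An anomaly is visible only through the column of A associated with the affected flow.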

The problem consists in detecting and isolating a significant volume anomaly in an OD flow \(x_{i,j}\) by using only SNMP measurements \(y_1,\ldots ,y_n\). In fact, the main problem with the SNMP measurements is that \(n \ll m\). To overcome this difficulty, a parsimonious linear model of non-anomalous traffic has been developed in [10–17].

The derivation of this model includes two steps: (i) description of the ambient traffic by using a spatial stationary model and (ii) linear approximation of the model by using piecewise polynomial splines.

The idea of the spline model is that the non-anomalous (ambient) traffic at each time k can be represented by using a known family of basis functions superimposed with unknown coefficients, i.e., it is assumed that

\[X_k \approx B\mu _k,\]

where the \(m \times q\) matrix B is assumed to be known and \(\mu _k \in \mathbb {R}^q\) is a vector of unknown coefficients such that \(q < n\). Finally, it is assumed that the model residuals together with the natural variability of the OD flows follow a Gaussian distribution, which leads to the following equation:

\[X_k = B\mu _k + \xi _k,\]

where \(\xi _k \sim {\mathcal N}(0,\varSigma )\) is Gaussian noise, with the \(m \times m\) diagonal covariance matrix \(\varSigma =\mathrm{diag}{(\sigma _1^2,\ldots ,\sigma _m^2)}\). The advantages of the detection algorithm based on a parametric model of ambient traffic and its comparison to a non-parametric approach are discussed in [11, 14] (see also [18] for a PCA-based approach). Hence, the link load measurement model is given by the following linear equation:

\[Y_k = A\,X_k = H\mu _k + \zeta _k,\]

where \(Y_k={(y_1,\ldots ,y_n)_k}^T\) and \(\zeta _k \sim {\mathcal N}(0,A\varSigma A^T)\). Without any loss of generality, the resulting matrix \(H=A\,B\) is assumed to be of full column rank. Typically, when an anomaly occurs on OD flow \(\ell \) at time \(\nu +1\) (change-point), the vector \(\theta _\ell \) has the form \(\theta _\ell =\varepsilon \,a(\ell )\), where \(a(\ell )\) is the \(\ell \)-th normalized column of A and \(\varepsilon \) is the intensity of the anomaly. The goal is to detect/isolate the presence of an anomalous vector \(\theta _\ell \), which cannot be explained by the ambient traffic model \(X_k \approx B\mu _k\).

Therefore, after the de-correlation transformation, the change detection/isolation problem is based on the following model with nuisance parameter \(X_k\):

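where H is a full-rank matrix of size \(n \times q\), \(n>q\), and \(\theta (k,\nu )\) is a change occurring at time \(\nu +1\), namely:

\[\theta (k,\nu )={\left\{ \begin{array}{ll} 0 &{} \text {if } k \le \nu , \\ \theta _\ell &{} \text {if } k \ge \nu +1, \end{array}\right. } \qquad 1 \le \ell \le K-1.\]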

This problem is invariant under the group \(G=\{Y \rightarrow g(Y)=Y+HX\}\) (see details in [19]). The invariant test is based on maximal invariant statistics. The solution is the projection of Y on the orthogonal complement \(R(H)^{\bot }\) of the column space R(H) of the matrix H. The parity vector \(Z=W Y\) is a maximal invariant to the group G.
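The nuisance rejection can be checked numerically. The sketch below does not build W explicitly. Instead it computes the projection of Y onto \(R(H)^{\bot }\), which equals \(W^T W Y\) when the rows of W form an orthonormal basis of \(R(H)^{\bot }\) and therefore carries the same information as the parity vector. The Gram-Schmidt construction, the function names and the numerical values are assumptions of this illustration, not the procedure of [19].

```python
def orthonormal_basis(columns):
    """Gram-Schmidt on a list of column vectors (assumed linearly independent)."""
    basis = []
    for v in columns:
        w = list(v)
        for u in basis:
            c = sum(a * b for a, b in zip(u, w))
            w = [a - c * b for a, b in zip(w, u)]
        norm = sum(a * a for a in w) ** 0.5
        basis.append([a / norm for a in w])
    return basis

def reject_nuisance(y, h_columns):
    """Project y onto the orthogonal complement of the span of h_columns."""
    r = list(y)
    for u in orthonormal_basis(h_columns):
        c = sum(a * b for a, b in zip(u, r))
        r = [a - c * b for a, b in zip(r, u)]
    return r

# Hypothetical H with n = 4 rows and q = 2 columns, stored column by column.
H_cols = [[1.0, 1.0, 1.0, 1.0], [1.0, 2.0, 3.0, 4.0]]
y = [1.0, 0.0, 2.0, -1.0]
# Same observation shifted by an arbitrary nuisance term H x, here x = (5, -3).
y_shifted = [yi + 5.0 * a - 3.0 * b for yi, a, b in zip(y, *H_cols)]

r1 = reject_nuisance(y, H_cols)
r2 = reject_nuisance(y_shifted, H_cols)
```

Up to rounding, `r1` and `r2` coincide: adding any vector of the form HX to the observation leaves the projected statistic unchanged, which is the invariance property used above.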

Transformation by W removes the interference of the nuisance parameter X

Hence, the sequential change detection/isolation problem can be re-written as

Theorem 3

Let \((Y_k)_{k \ge 1}\) be the output of the model given by (10) observed sequentially. Then the generalized CUSUM or matrix recursive CUSUM tests attain the lower bound corresponding to the minimax setup:

where \(X^\ell \) (resp. \(X^j\)) corresponds to the hypothesis \({\mathcal H}_\ell \) (resp. \({\mathcal H}_j\)). The vector recursive CUSUM test attains the lower bound

where

References

1. Nikiforov, I.V.: A generalized change detection problem. IEEE Trans. Inf. Theor. 41(1), 171–187 (1995)

2. Nikiforov, I.V.: A simple recursive algorithm for diagnosis of abrupt changes in random signals. IEEE Trans. Inf. Theor. 46(7), 2740–2746 (2000)

3. Nikiforov, I.V.: A lower bound for the detection/isolation delay in a class of sequential tests. IEEE Trans. Inf. Theor. 49(11), 3037–3047 (2003)

4. Tartakovsky, A., Nikiforov, I., Basseville, M.: Sequential Analysis: Hypothesis Testing and Changepoint Detection. CRC Press, Taylor & Francis Group, Boca Raton (2015)

5. Lorden, G.: Procedures for reacting to a change in distribution. Annals Math. Stat. 42, 1897–1908 (1971)

6. Oskiper, T., Poor, H.V.: Online activity detection in a multiuser environment using the matrix CUSUM algorithm. IEEE Trans. Inf. Theor. 48(2), 477–493 (2002)

7. Tartakovsky, A.G.: Multidecision quickest change-point detection: previous achievements and open problems. Sequential Anal. 27, 201–231 (2008)

8. Lakhina, A. et al.: Diagnosing network-wide traffic anomalies. In: SIGCOMM (2004)

9. Zhang, Y. et al.: Network anomography. In: IMC 2005 (2005)

10. Fillatre, L., Nikiforov, I., Vaton, S.: Sequential non-Bayesian change detection-isolation and its application to the network traffic flows anomaly detection. In: Proceedings of the 56th Session of ISI, Lisboa, 22–29 August 2007, pp. 1–4 (special session)

11. Casas, P., Fillatre, L., Vaton, S., Nikiforov, I.: Volume anomaly detection in data networks: an optimal detection algorithm vs. the PCA approach. In: Valadas, R., Salvador, P. (eds.) FITraMEn 2008. LNCS, vol. 5464, pp. 96–113. Springer, Heidelberg (2009)

12. Fillatre, L., Nikiforov, I., Vaton, S., Casas, P.: Network traffic flows anomaly detection and isolation. In: 4th edition of the International Workshop on Applied Probability, IWAP 2008, 7–10 July 2008, Compiègne, pp. 1–6 (invited paper)

13. Fillatre, L., Nikiforov, I., Casas, P., Vaton, S.: Optimal volume anomaly detection in network traffic flows. In: 16th European Signal Processing Conference (EUSIPCO 2008), Lausanne, p. 5, 25–29 August 2008

14. Casas, P., Fillatre, L., Vaton, S., Nikiforov, I.: Volume anomaly detection in data networks: an optimal detection algorithm vs. the PCA approach. In: Valadas, R., Salvador, P. (eds.) FITraMEn 2008. LNCS, vol. 5464, pp. 96–113. Springer, Heidelberg (2009)

15. Casas, P., Vaton, S., Fillatre, L., Nikiforov, I.V.: Optimal volume anomaly detection and isolation in large-scale IP networks using coarse-grained measurements. Comput. Netw. 54, 1750–1766 (2010)

16. Casas, P., Fillatre, L., Vaton, S., Nikiforov, I.: Reactive robust routing: anomaly localization and routing reconfiguration for dynamic networks. J. Netw. Syst. Manage. 19(1), 58–83 (2010)

17. Fillatre, L., Nikiforov, I.: Asymptotically uniformly minimax detection and isolation in network monitoring. IEEE Trans. Signal Process. 60(7), 3357–3371 (2012)

18. Ringberg, H. et al.: Sensitivity of PCA for traffic anomaly detection. In: SIGMETRICS (2007)

19. Fouladirad, M., Nikiforov, I.: Optimal statistical fault detection with nuisance parameters. Automatica 41(7), 1157–1171 (2005)

Acknowledgement

This work was partially supported by the French National Research Agency (ANR) through ANR CSOSG Program (Project ANR-11-SECU-0005).

Copyright information

© 2016 Springer International Publishing Switzerland

About this paper

Cite this paper

Nikiforov, I. (2016). Quickest Multidecision Abrupt Change Detection with Some Applications to Network Monitoring. In: Vishnevsky, V., Kozyrev, D. (eds) Distributed Computer and Communication Networks. DCCN 2015. Communications in Computer and Information Science, vol 601. Springer, Cham. https://doi.org/10.1007/978-3-319-30843-2_10

DOI: https://doi.org/10.1007/978-3-319-30843-2_10

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-30842-5

Online ISBN: 978-3-319-30843-2

eBook Packages: Computer Science (R0)