Abstract

The main goal of this paper is to study sensitivity analysis, with respect to the parameters of the model, in the framework of time-inhomogeneous Lévy processes. This is a slight generalization of recent results of Fournié et al. (Finance Stochast 3(4):391–412, 1999 [9]), El-Khatib and Privault (Finance Stochast 8(2):161–179, 2004 [7]), Bally et al. (Ann Appl Probab 17(1):33–66, 2007 [1]), Davis and Johansson (Stochast Process Appl 116(1):101–129, 2006 [5]), Petrou (Electron J Probab 13(27):852–879, 2008 [12]), Benth et al. (Commun Stochast Anal 5(2):285–307, 2011 [2]) and El-Khatib and Hatemi (J Statist Appl Probab 3(1):171–182, 2012 [8]), using the Malliavin calculus developed by Yablonski (Rocky Mountain J Math 38:669–701, 2008 [16]). This relatively recent theory provides the tools needed to calculate the sensitivities. We provide some simple examples to illustrate the results achieved. In particular, we discuss the time-inhomogeneous versions of the Merton model and the Bates model.


Keywords

- Additive processes

- Time-inhomogeneous Lévy process

- Malliavin calculus

- Integration by parts formula

- Sensitivity analysis

Mathematics Subject Classification 2010

1 Introduction

A trader selling a financial product to a customer usually wants to avoid the risks involved in that product and therefore hedges them. In some cases a static hedge is possible: one can hedge and forget, and the price can moreover be computed from the products used for hedging. For most options, however, this is not possible and a dynamic hedging strategy is required. The sensitivities of the price with respect to the model parameters, the Greeks, are vital inputs in this context.

The Greeks are defined as derivatives of the option price, which can be expressed as an expectation (under the risk-neutral measure) of the discounted payoff. They are traditionally estimated by a finite difference approximation, which contains two sources of error: one from approximating the derivative by a finite difference, and another from the numerical computation of the expectation. In addition, the theoretical convergence rates of finite difference approximations are not attained for discontinuous payoff functions.

Fournié et al. [9] propose a method with faster convergence, which consists in shifting the differential operator from the payoff functional to the diffusion kernel by introducing a weighting function. The main idea is to use the Malliavin integration by parts formula to transform the computation of derivatives by finite difference approximations into the computation of expectations of the form

where the weight \(\pi \) is a random variable and the underlying price process is a Markov diffusion given by:

There have been several studies that attempt to produce similar results for markets governed by processes with jumps. León et al. [10] approximated a jump-diffusion model for a simple Lévy process and hedged a European call option using a Malliavin calculus approach. El-Khatib and Privault [7] considered a market generated by Poisson processes; their setup allows for random jump sizes and, by imposing a regularity condition on the payoff, they use Malliavin calculus on Poisson space to derive weights for Asian options. Bally et al. [1] reduce the problem to a setting in which only ‘finite-dimensional’ Malliavin calculus is required, in the case where the stochastic differential equations are driven by Brownian motion and compound Poisson components. Davis and Johansson [5] developed the Malliavin calculus for simple Lévy processes, which allows them to calculate the Greeks in a jump-diffusion setting satisfying a separability condition. Petrou [12] calculated the sensitivities using Malliavin calculus for markets generated by square integrable Lévy processes, which is an extension of [9]. Benth et al. [2] studied the computation of the deltas in model variation within a jump-diffusion framework with two approaches, the Malliavin calculus technique and the Fourier method. El-Khatib and Hatemi [8] estimated the price sensitivities of a trading position with regard to underlying factors in jump-diffusion models using jump times Poisson noise.

While Lévy processes offer nice features in terms of analytical tractability, the constraints of independence and stationarity of their increments prove to be rather restrictive. On the one hand, the stationarity of the increments of Lévy processes leads to rigid scaling properties for the marginal distributions of returns, which are not observed in empirical time series of returns. On the other hand, from the point of view of risk-neutral modeling, Lévy models allow one to reproduce the volatility smile for a given maturity, but it becomes more complicated to fit several maturities simultaneously. Allowing time-inhomogeneous increments can remedy this, hence the importance of introducing additive processes in financial modeling. Each of the previous papers has its advantages in specific cases. However, all of them except [12] can only treat subclasses of Lévy processes, and [12] is restricted to the time-homogeneous setting.

The objective of this work is to derive stochastic weights in order to compute the Greeks in market models with jumps, where the discontinuities are described by a Poisson random measure with time-inhomogeneous intensity, and then to use different numerical methods to compare the results for simpler time-dependent models. The main tool is the Malliavin calculus for additive processes developed by Yablonski [16], which is briefly presented in the Appendix of the present document for the sake of completeness. Essentially, we introduce the time-inhomogeneity in the jump component of the risky asset price. In particular, we focus on a class of models in which the price of the underlying asset is governed by the following stochastic differential equation:

where \(\mathbb {R}^{d}_{0}:=\mathbb {R}^{d}\setminus \{0_{\mathbb {R}^{d}}\}\), \(x=(x_{i}) _{1\le i\le d}\in \mathbb {R}^{d}\). The functions \(b: \mathbb {R}^{+}\times \mathbb {R}^{d}\longrightarrow \mathbb {R}^{d}\), \(\sigma : \mathbb {R}^{+}\times \mathbb {R}^{d}\longrightarrow \mathbb {R}^{d\times d}\) and \(\varphi : \mathbb {R}^{+}\times \mathbb {R}^{d}\times \mathbb {R}^{d}\longrightarrow \mathbb {R}^{d\times d}\), are continuously differentiable with bounded derivatives. Here

is a d-dimensional standard Brownian motion and

where \(N_{k}, k=1,\dots , d\) are independent Poisson random measures on \([0,T]\times \mathbb {R}_{0}\), \(\mathbb {R}_{0}:=\mathbb {R}_{0}^1\), with time-inhomogeneous Lévy measures \(\nu _{t}^{k}, k=1,\ldots , d\) coming from d independent one-dimensional time-inhomogeneous Lévy processes. The family of positive measures \((\nu _{t}^k) _{1\le k\le d}\) satisfies

and \(\nu ^k_{t}(\{0\}) =0, k=1,\ldots , d\). Let \(b(t,x) =(b_{i}(t,x) ) _{1\le i\le d}\), \(\sigma (t,x) =(\sigma _{ij}(t,x) ) _{1\le i\le d, 1\le j\le d}\) and \(\varphi (t,x,z) =(\varphi _{ik}(t,x,z) ) _{1\le i\le d, 1\le k\le d}\) be the coefficients of (1) in component form. Then \(S_t=(S_i(t) ) _{1\le i\le d}\) in (1) can be equivalently written as

To guarantee a unique strong solution to (1), we assume that the coefficients of (1) satisfy linear growth and Lipschitz continuity, i.e.,

and

for all \(x, y \in \mathbb {R}^{d}\) and \({t\in [0,T]}\), where C and \(K_1\) are positive constants.

We suppose that there exists a family of functions \(\rho _{k} :\mathbb {R} \longrightarrow \mathbb {R}\), \(k=1,\dots , d\) such that

and a positive constant \(K_2\) such that

for all \(x,y\in \mathbb {R}^{d}\), \({t\in [0,T]}\) and \(z_k\in \mathbb {R}\), \(k=1,\dots , d\). As in the homogeneous case, we have the following lemma:

Lemma 1.1

Under the above conditions there exists a unique solution \( (S_{t}) _{t\in [0,T]}\) for (1). Moreover, there exists a positive constant \(C_{0}\) such that

2 Regularity of Solutions of SDEs Driven by Time-Inhomogeneous Lévy Processes

The aim of this section is to prove that, under specific conditions, the solution of a stochastic differential equation belongs to the domain \( \mathbb {D}^{1,2}\) (see Sects. 4.14 and 4.16). With applications in finance in mind, we also provide an explicit expression for the Wiener directional derivative of the solution.

Remark 2.1

The theory developed in the Appendix also holds when our space is generated by a d-dimensional Wiener process and d independent Poisson random measures. However, we need to introduce new notation for the directional derivatives to keep the presentation simple. In the multidimensional case,

will denote a row vector whose jth component \(D^{(j) }_{t,0}\) is the directional derivative with respect to the Wiener process \(W_{j}\), for all \(j=1,\ldots , d\). Similarly, for all \(z=(z_k) _{1\le k\le d}\in \mathbb {R}_{0}^d\) we define the row vector

whose kth component \(D^{(k) }_{t,z_k}\) is the directional derivative with respect to the compensated Poisson random measure \(\widetilde{N}_{k}\), for all \(k=1,\ldots , d\). In what follows, we denote by \(\sigma _{j}\) the jth column vector of \(\sigma \) and by \(\varphi _{k}\) the kth column vector of \(\varphi \).

Theorem 2.2

Let \((S_{t}) _{t\in [0,T]}\) be the solution of (1). Then \(S_{t}\in \mathbb {D}^{1,2}\) for all \(t\in [0,T]\), and we have

1. The Malliavin derivative \(D^{(j) }_{r,0}S_{t}\) with respect to \(W_j\) satisfies the following linear equation:

$$\begin{aligned} D^{(j) }_{r,0}S_{t}= & {} \sum _{i=1}^{d}\int _{r}^{t}\frac{\partial b}{\partial x_i} (u,S_{u-}) D^{(j) }_{r,0}S^{i}_{u-}du+\sigma _{j}(r,S_{r-}) \\&+\sum _{i=1}^{d}\sum _{\alpha =1}^{d}\int _{r}^{t}\frac{\partial \sigma _{\alpha } }{\partial x_i} (u,S_{u-}) D^{(j) }_{r,0}S^{i}_{u-}dW_{\alpha }(u) \\&+\sum _{i=1}^{d}\int _{r}^{t}\int _{\mathbb {R}^{d}_{0}}\frac{\partial \varphi }{\partial x_i} (u,S_{u-},y) D^{(j) }_{r,0}S^{i}_{u-}\widetilde{N}(du,dy), \end{aligned}$$for \(0\le r\le t\) a.e. and \(D^{(j) }_{r,0}S_{t}=0\) a.e. otherwise.

2. For all \(z\in \mathbb {R}^{d}_{0}\), the Malliavin derivative \(D_{r,z}S_{t}\) with respect to \(\widetilde{N}\) satisfies the following linear equation:

$$\begin{aligned} D_{r,z}S_{t}= & {} \int _{r}^{t}D_{r,z}b(u,S_{u-}) du+\int _{r}^{t}D_{r,z}\sigma (u,S_{u-}) dW_{u} \\&+\,\varphi (r,S_{r-},z) +\int _{r}^{t}\int _{\mathbb {R}^{d}_{0}}D_{r,z}\varphi (u,S_{u-},y) \widetilde{N}(du,dy), \end{aligned}$$for \(0\le r\le t\) a.e. and \(D_{r,z}S_{t}=0\) a.e. otherwise.

Proof

1. We consider the Picard approximations \(S_{t}^{n},\;n\ge 0\), given by

$$\begin{aligned} \left\{ \begin{array}{l} S_{t}^{0}=x \\ S_{t}^{n+1}=\displaystyle x+\int _{0}^{t}b(u,S_{u-}^{n}) du+\int _{0}^{t}\sigma (u,S_{u-}^{n}) dW_{u} \\ \ \ \ \ \ \ \ \ \ \ \displaystyle +\int _{0}^{t}\int _{\mathbb {R}^{d}_{0}}\varphi (u,S_{u-}^{n},z) \widetilde{N}(du,dz) . \end{array} \right. \end{aligned}\tag{7}$$

From Lemma 1.1 we know that

$$\begin{aligned} \mathrm {E}\left[ \underset{0\le t\le T}{\sup }|S_{t}^{n}-S_{t}|^{2}\right] \underset{n\rightarrow \infty }{\longrightarrow }0. \end{aligned}$$By induction, we prove that the following statements hold true for all \(n\ge 0\).

Hypothesis (H)

(a) \(S_{t}^{n}\in \mathbb {D}^{1,2}\) for all \({t\in [0,T]}\).

(b) \(\xi _{n}(t) =\underset{0\le r\le t}{\sup }\mathrm {E}\left[ \underset{ r\le u\le t}{\sup }\left| D_{r,0}S_{u}^{n}\right| ^{2}\right] <\infty \).

(c) \(\xi _{n+1}(t) \le \alpha +\beta \int _{0}^{t}\xi _{n}(u) du\) for some positive constants \(\alpha \), \(\beta \) that do not depend on n.

For \(n=0\), it is straightforward to check that (H) is satisfied. Assume that (H) holds for some n; we prove it for \(n + 1\). By Proposition 4.12, \(b(u,S_{u-}^{n}) \), \(\sigma (u,S_{u-}^{n}) \) and \(\varphi (u,S_{u-}^{n},z) \) are in \(\mathbb {D}^{1,2}\). Furthermore,

$$\begin{aligned} D_{r,0}b_{i}(u,S_{u-}^{n})= & {} \sum _{\alpha =1}^{d}\dfrac{\partial b_{i}}{\partial x_{\alpha }} (u,S_{u-}^{n}) D_{r,0}S_{u-}^{n,\alpha }\mathbf{1 }_{\{r\le u\}}, \\ D_{r,0}\sigma _{ij} (u,S_{u-}^{n})= & {} \sum _{\alpha =1}^{d}\dfrac{\partial \sigma _{ij}}{\partial x_{\alpha }} (u,S_{u-}^{n}) D_{r,0}S_{u-}^{n,\alpha }\mathbf{1 }_{\{r\le u\}}, \\ D_{r,0}\varphi _{ik}(u,S_{u-}^{n},z_k)= & {} \sum _{\alpha =1}^{d}\dfrac{\partial \varphi _{ik} }{\partial x_{\alpha }} (u,S_{u-}^{n},z_k) D_{r,0}S_{u-}^{n,\alpha }\mathbf{1 }_{\{r\le u\}}. \end{aligned}$$Since the functions b, \(\sigma \) and \(\varphi \) are continuously differentiable with bounded first derivatives in the second direction and taking into account the conditions (4) and (6) we have

$$\begin{aligned} \left\| D_{r,0}b_{i}(u,S_{u-}^{n}) \right\| ^{2}\le & {} K_{1}\left\| D_{r,0}S_{u-}^{n}\right\| ^{2}, \\ \left\| D_{r,0}\sigma _{ij} (u,S_{u-}^{n}) \right\| ^{2}\le & {} K_{1}\left\| D_{r,0}S_{u-}^{n}\right\| ^{2}, \\ \left\| D_{r,0}\varphi _{ik} (u,S_{u-}^{n},z_{k}) \right\| ^{2}\le & {} K_{2}|\rho _{k}(z_{k}) |^{2}\left\| D_{r,0}S_{u-}^{n}\right\| ^{2}. \end{aligned}\tag{8}$$Consequently, \(\int _{0}^{t}b(u,S_{u-}^{n}) du\), \(\int _{0}^{t}\sigma (u,S_{u-}^{n}) dW_{u}\) and \(\int _{0}^{t}\int _{\mathbb {R}_{0}^{d}}\varphi (u,S_{u-}^{n},z) \widetilde{N}(du,dz) \) are in \(\mathbb {D}^{1,2}\), which implies that \(S_{t}^{n+1}\) belongs to \(\mathbb {D}^{1,2}\), and we have

$$\begin{aligned}&D^{(j) }_{r,0}\int _{0}^{t}b_{i}(u,S_{u-}^{n}) du \ =\ \int _{r}^{t}D^{(j) }_{r,0}b_{i}(u,S_{u-}^{n}) du, \\&D^{(j) }_{r,0}\sum _{\alpha =1}^{d}\int _{0}^{t}\sigma _{i\alpha } (u,S_{u-}^{n}) dW_{\alpha }(u) \ =\ \sigma _{ij} (r,S_{r-}^{n}) +\sum _{\alpha =1}^{d}\int _{r}^{t}D^{(j) }_{r,0}\sigma _{i\alpha } (u,S_{u-}^{n}) dW_{\alpha }(u), \\&D^{(j) }_{r,0}\sum _{k=1}^{d}\int _{0}^{t}\int _{\mathbb {R}_{0}}\varphi _{ik}(u,S_{u-}^{n},z_{k}) \widetilde{N}_{k} (du,dz_{k}) \ =\ \sum _{k=1}^{d}\int _{r}^{t}\int _{\mathbb {R}_{0}}D^{(j) }_{r,0}\varphi _{ik}(u,S_{u-}^{n},z_k) \widetilde{N}_{k}(du,dz_k). \end{aligned}$$Thus

$$\begin{aligned} D^{(j) }_{r,0}S_{t}^{n+1}= & {} \int _{r}^{t}D^{(j) }_{r,0}b(u,S_{u-}^{n}) du+\sigma _{j} (r,S_{r-}^{n}) +\sum _{\alpha =1}^{d}\int _{r}^{t}D^{(j) }_{r,0}\sigma _{\alpha }(u,S_{u-}^{n}) dW_{\alpha }(u) \\&+\sum _{k=1}^{d}\int _{r}^{t}\int _{\mathbb {R}_{0}}D^{(j) }_{r,0}\varphi _{k}(u,S_{u-}^{n},z_{k}) \widetilde{N}_{k} (du,dz_{k}). \end{aligned}$$We conclude that

$$\begin{aligned}&\mathrm {E}\left[ \underset{r\le v\le t}{\sup }|D^{(j) }_{r,0}S_{v}^{n+1}|^{2} \right] \le 4\left\{ \mathrm {E}\left[ \underset{r\le v\le t}{\sup } \left| \int _{r}^{v}D^{(j) }_{r,0}b(u,S_{u-}^{n}) du\right| ^{2}\right] \right. \\&+\,\mathrm {E}\left[ \underset{0\le t\le T}{\sup }|\sigma _{j} (t,S_{t}^{n}) |^{2}\right] +\mathrm {E}\left[ \underset{r\le v\le t}{\sup }\left| \sum _{\alpha =1}^{d}\int _{r}^{v}D^{(j) }_{r,0}\sigma _{\alpha }(u,S_{u-}^{n}) dW_{\alpha }(u) \right| ^{2}\right] \\&+\left. \mathrm {E}\left[ \underset{r\le v\le t}{\sup }\left| \sum _{k=1}^{d}\int _{r}^{v}\int _{\mathbb {R}_{0}}D^{(j) }_{r,0}\varphi _{k}(u,S_{u-}^{n},z_{k}) \widetilde{N}_{k} (du,dz_{k}) \right| ^{2}\right] \right\} . \end{aligned}$$Using the Cauchy–Schwarz and Burkholder–Davis–Gundy inequalities (see [14], Theorem 48 p. 193), there exists a constant \(K>0\) such that

$$\begin{aligned}&\mathrm {E}\left[ \underset{r\le v\le t}{\sup }|D^{(j) }_{r,0}S_{v}^{n+1}|^{2} \right] \le K\left\{ (t-r) \mathrm {E}\left[ \int _{r}^{t}|D^{(j) }_{r,0}b(u,S_{u-}^{n}) |^{2}du\right] \right. \\&+\,\mathrm {E}\left[ \underset{0\le t\le T}{\sup }|\sigma _{j} (t,S_{t}^{n}) |^{2}\right] +\mathrm {E}\left[ \sum _{\alpha =1}^{d}\int _{r}^{t}|D^{(j) }_{r,0}\sigma _{\alpha }(u,S_{u-}^{n}) |^{2}du\right] \\&+\left. \mathrm {E}\left[ \sum _{k=1}^{d}\int _{r}^{t}\int _{\mathbb {R}_{0}}|D^{(j) }_{r,0}\varphi _{k}(u,S_{u-}^{n},z_{k}) |^{2}\nu ^{k} _{u}(dz_k) du\right] \right\} . \end{aligned}$$Hence, by (8),$$\begin{aligned}&\mathrm {E}\left[ \underset{r\le u\le t}{\sup }|D^{(j) }_{r,0}S_{u}^{n+1}|^{2} \right] \le K\mathrm {E}\left[ \underset{0\le t\le T}{\sup }|\sigma _{j} (t,S_{t}^{n}) |^{2}\right] \\&\quad +K\left( K_{1}(T+1) +K_{2}\underset{0\le t\le T}{\sup }\int _{\mathbb {R} _{0}}\sum _{k=1}^{d}|\rho _k(z_k) | ^{2}\nu ^k_{t}(dz_k) \right) \int _{r}^{t}\mathrm {E}\left[ |D^{(j) }_{r,0}S_{u-}^{n}|^{2}\right] du. \end{aligned}$$Then, from (3)

$$\begin{aligned}&\mathrm {E}\left[ \underset{r\le u\le t}{\sup }|D^{(j) }_{r,0}S_{u}^{n+1}|^{2} \right] \le KC\left( 1+\mathrm {E}\left[ \underset{0\le t\le T}{\sup }|S_{t}^{n}|^{2}\right] \right) \\&\quad +K\left( K_{1}(T+1) +K_{2}\underset{0\le t\le T}{\sup }\int _{\mathbb {R} _{0}}\sum _{k=1}^{d}|\rho _k(z_k) | ^{2}\nu ^k_{t}(dz_k) \right) \int _{r}^{t}\mathrm {E}\left[ \underset{r\le v\le u}{\sup }|D^{(j) }_{r,0}S_{v-}^{n}|^{2}\right] du. \end{aligned}$$Consequently

$$\begin{aligned} \xi _{n+1}(t) \le \alpha +\beta \int _{0}^{t}\xi _{n}(u) du, \end{aligned}$$where

$$\begin{aligned} \alpha :=KC\left( 1+\underset{n\in \mathbb {N}}{\sup }\mathrm {E}\left[ \underset{0\le t\le T}{\sup }|S_{t}^{n}|^{2}\right] \right) <\infty \end{aligned}$$and, using (5), we have

$$\begin{aligned} \beta :=K\left( K_{1}(T+1) +K_{2}\underset{0\le t\le T}{\sup }\int _{\mathbb { R}_{0}}\sum _{k=1}^{d}|\rho _k(z_k) | ^{2}\nu ^k_{t}(dz_k) \right) <\infty . \end{aligned}$$By induction, we can easily prove that, for all \(n\in \mathbb {N}\) and \(t\in [0,T]\)

$$\begin{aligned} \xi _{n}(t) \le \alpha \sum _{i=0}^{n}\frac{(\beta t) ^{i}}{i!}. \end{aligned}$$Hence, for all \(n\in \mathbb {N}\) and \(t\in [0,T]\)

$$\begin{aligned} \xi _{n}(t) \le \alpha e^{\beta t}<\infty , \end{aligned}$$which implies that the derivatives of \(S_{t}^{n}\) are bounded in \(\mathrm {L} ^{2}(\varOmega \times [0,T]) \) uniformly in n. Since, in addition, \(S_{t}^{n}\) converges to \(S_{t}\) in \(\mathrm {L}^{2}(\varOmega )\), we deduce that the random variable \(S_{t}\) belongs to \(\mathbb {D}^{1,2}\); applying the chain rule to (1) then yields the linear equation of the first claim.
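For completeness, the induction behind the bound on \(\xi _{n}\) can be spelled out as follows. This sketch uses only (c), the fact that \(\alpha \) and \(\beta \) do not depend on n, and the base case \(\xi _{0}\equiv 0\le \alpha \), which holds because \(S_{t}^{0}=x\) is deterministic. If \(\xi _{n}(u)\le \alpha \sum _{i=0}^{n}\frac{(\beta u)^{i}}{i!}\) for all \(u\in [0,t]\), then

$$\begin{aligned} \xi _{n+1}(t)\le \alpha +\beta \int _{0}^{t}\alpha \sum _{i=0}^{n}\frac{(\beta u)^{i}}{i!}\,du =\alpha +\alpha \sum _{i=0}^{n}\frac{(\beta t)^{i+1}}{(i+1)!} =\alpha \sum _{i=0}^{n+1}\frac{(\beta t)^{i}}{i!}\le \alpha e^{\beta t}. \end{aligned}$$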

2. Following the same steps, we can prove the second claim of the theorem.

As in the classical Malliavin calculus, we associate with the solution of (1) the first variation process \(Y_{t}:=\nabla _{x}S_{t}\). The following proposition provides a simpler expression for \(D_{r,0}S_{t}\).

Proposition 2.3

Let \((S_{t}) _{t\in [0,T]}\) be the solution of (1). Then the derivative satisfies the following equation:

where \((Y_{t}) _{t}\) is the first variation process of \((S_{t}) _{t}\).

Proof

Let \((S_{t}) _{t\in [0,T]}\) be the solution of (1). Then

The \(d\times d\) matrix–valued process \(Y_t\) is given by

with \(\delta _{ii}=1\) and \(\delta _{ij}=0\) if \(i\ne j\). Let \((Z_{t}) _{0\le t\le T}\) be a \(d\times d\) matrix–valued process that satisfies the following equation

By means of Itô’s formula, one can check that

Hence \(Z_{t}Y_{t}=Y_{t}Z_{t}=I_{d}\), where \(I_{d}\) is the identity matrix of size d. As a consequence, for any \(t\ge 0\) the matrix \(Y_{t}\) is invertible and \(Y_{t}^{-1}=Z_{t}\). Applying Itô’s formula again, we obtain

Then the result follows.

2.1 Greeks

For \(n\in \mathbb {N}^{*}\), we define the payoff \( H:=H(S_{t_{1}},S_{t_{2}},\ldots , S_{t_{n}}) \) to be a square integrable function, discounted from maturity T and evaluated at the times \( t_{1},t_{2},\ldots , t_{n}\), with the convention that \(t_{0}=0\) and \(t_{n}=T\). Under a chosen risk-neutral measure \(\mathbb {Q}\) (such a measure is not unique in this setting), the price \(\mathscr {C}(x) \) of the contingent claim, given the initial value x, is expressed as:

In what follows, we assume the following ellipticity condition for the diffusion matrix \(\sigma \).

Assumption 2.4

The diffusion matrix \(\sigma \) satisfies the uniform ellipticity condition:

Using the Malliavin calculus presented in the Appendix, we are able to calculate the Greeks for the one-dimensional process \((S_{t}) _{t\in [0,T]}\) that satisfies equation (1).

2.2 Variation in the Initial Condition

In this section, we provide an expression for the derivatives of the expectation \(\mathscr {C}(x) \) with respect to the initial condition x in the form of a weighted expectation of the same functional.

Let us define the set:

where \(t_{i}\), \(i=1,2,\ldots , n\) are as defined in Sect. 2.1.

Proposition 2.5

Assume that the diffusion matrix \(\sigma \) is uniformly elliptic. Then for all \(a\in T_{n}\),

Proof

Let H be a continuously differentiable function with bounded gradient. Then we can differentiate inside the expectation (see Fournié et al. [9] for details) and we have

where \(\nabla _{i}H(S_{t_{1}},S_{t_{2}},\ldots , S_{t_{n}}) \) is the gradient of H with respect to \(S_{t_{i}}\) for \(i=1,\ldots , n\). For any \(a\in T_{n}\) and \(i=1,\ldots , n\), using (9) we find

From Proposition 4.12 we reach

In the measure \(\pi (du\,dz) \) defined in Sect. 4.12, we replace \(\varDelta \) by 0 and \(\mu (du) \) by the Lebesgue measure du. Then

Using the integration by parts formula (see Sect. 4.14), we have

Moreover, \(\left( a(t) \sigma ^{-1}(t,S_{t-}) Y_{t-}\right) _{0\le t\le T}\) is a predictable process, so the Skorohod integral coincides with the Itô stochastic integral.

Since the family of continuously differentiable functions is dense in \(L^2\), the result holds for any \(H\in L^2\) (see Fournié et al. [9] for details).

2.3 Variation in the Drift Coefficient

Let \(\widetilde{b}:\mathbb {R}^{+}\times \mathbb {R}^{d}\longrightarrow \mathbb {R}^{d}\) be a function such that for every \(\varepsilon \in [-1,1]\), \(\widetilde{b}\) and \(b+\varepsilon \widetilde{b}\) are continuously differentiable with bounded first derivatives in the space directions.

We then define the drift–perturbed process \((S_{t}^{\varepsilon }) _{t}\) as a solution of the following perturbed stochastic differential equation:

We associate with this perturbed process the perturbed price \(\mathscr {C} ^{\varepsilon }(x) \) defined by

Proposition 2.6

Assume that the diffusion matrix \(\sigma \) is uniformly elliptic. Then we have

Proof

We introduce the random variable

The Novikov condition is satisfied since

Hence \(\mathrm {E}_{\mathbb {Q}}[\widetilde{D}_{T}^{\varepsilon }]=1\), and we can define a new probability measure \(\mathbb {Q}^{\varepsilon }\) by its Radon–Nikodym derivative with respect to the risk-neutral probability measure \(\mathbb {Q}\):

By a change of measure, we can write

where

which implies that

2.4 Variation in the Diffusion Coefficient

In this section, we provide an expression for the derivatives of the price \( \mathscr {C}(x) \) with respect to the diffusion coefficient \(\sigma \). We introduce the set of deterministic functions

where \(t_{i}\), \(i=1,2,\ldots , n\) are as defined in Sect. 2.1. Let \(\widetilde{\sigma }:\mathbb {R}^{+}\times \mathbb {R}^{d}\longrightarrow \mathbb {R}^{d\times d}\) be a direction function for the diffusion such that, for every \(\varepsilon \in [-1,1]\), \(\widetilde{\sigma }\) and \(\sigma +\varepsilon \widetilde{\sigma }\) are continuously differentiable with bounded first derivatives in the second direction, satisfy Lipschitz conditions, and satisfy the following assumption:

Assumption 2.7

The diffusion matrix \(\sigma +\varepsilon \widetilde{\sigma }\) satisfies the uniform ellipticity condition for every \(\varepsilon \in [-1,1]\):

We then define the diffusion–perturbed process \((S_{t}^{\varepsilon ,\widetilde{\sigma }}) _{0\le t\le T}\) as a solution of the following perturbed stochastic differential equation:

We also associate with this perturbed process the perturbed price \(\mathscr {C} ^{\varepsilon ,\widetilde{\sigma } }(x) \) defined by

We will need to introduce the variation process with respect to the parameter \(\varepsilon \)

so that \(\frac{\partial S_{t}^{\varepsilon ,\widetilde{\sigma } }}{\partial \varepsilon } =Z_{t}^{\varepsilon ,\widetilde{\sigma } }\). We simply use the notation \(S_{t}\), \(Y_{t}\) and \( Z^{\widetilde{\sigma }}_{t}\) for \(S_{t}^{0,\widetilde{\sigma }}\), \(Y_{t}^{0,\widetilde{\sigma }}\) and \(Z_{t}^{0,\widetilde{\sigma }}\) where the first variation process is given by \(Y_{t}^{0,\widetilde{\sigma }}:=\nabla _{x}S_{t}^{0,\widetilde{\sigma }}\). Next, consider the process \((\beta ^{\widetilde{\sigma }}_{t})_{t\in [0,T]}\) defined by

Proposition 2.8

Suppose that Assumption 2.7 holds. Set

Suppose further that the process \((\sigma ^{-1}(t,S_{t}) Y_{t}\widetilde{\beta }_{t}^{a,\widetilde{\sigma }}\delta _{0}(z) ) _{(t,z) }\) belongs to \( Dom(\delta ) \). Then, for any \(a\in \widetilde{T}_{n}\), we have:

Moreover, if the process \(\left( \beta ^{\widetilde{\sigma }}_{t}\delta _{0}(z) \right) _{t\in [0,T]}\) belongs to \(\mathbb {D}^{1,2}\), then

Proof

Let H be a continuously differentiable function with bounded gradient. Then we can differentiate inside the expectation

Hence

On the other hand we have

From Proposition 2.3, we conclude that

This implies that

Using the duality formula in Sect. 4.14 and taking into account the fact that \((\sigma ^{-1}(t,S_{t}) Y_{t}\widetilde{\beta }_{t}^{a,\widetilde{\sigma }}\delta _{0}(z) ) _{(t,z) }\) belongs to \(Dom(\delta ) \), we reach

2.5 Variation in the Jump Amplitude

To derive a stochastic weight for the sensitivity with respect to the amplitude parameter \(\varphi \), we use the same technique as in Proposition 2.8. To this end, we consider the perturbed process

where \(\varepsilon \in [-1,1]\) and \(\widetilde{\varphi }:\mathbb {R}^{+}\times \mathbb {R}^{d}\times \mathbb {R}^{d}\longrightarrow \mathbb {R}^{d\times d}\) is a continuously differentiable function with bounded first derivative in the second direction. The variation process with respect to the parameter \(\varepsilon \) becomes

We also associate with this perturbed process the perturbed price \(\mathscr {C} ^{\varepsilon ,\widetilde{\varphi } }(x) \) defined by

Hence, the statement of the following proposition is practically identical to Proposition 2.8:

Proposition 2.9

Assume that the diffusion matrix \(\sigma \) is uniformly elliptic and that the process \((\sigma ^{-1}(t,S_{t}) Y_{t}\widetilde{\beta }_{t}^{a,\widetilde{\varphi }}\delta _{0}(z) ) _{(t,z) }\in Dom(\delta ) \). Then, for any \(a\in \widetilde{T}_{n}\), we have:

Moreover, if the process \((\beta ^{\widetilde{\varphi }}_{t}\delta _{0}(z) ) _{t\in [0,T]}\) belongs to \(\mathbb {D}^{1,2}\), then

3 Numerical Experiments

In this section, we provide some simple examples to illustrate the results achieved in the previous section. In particular, we will look at time-inhomogeneous versions of the Merton model and the Bates model.

3.1 Examples

3.1.1 Time-Inhomogeneous Merton Model

We consider a time-inhomogeneous version of the Merton model in which the riskless asset is governed by the equation:

and the evolution of the risky asset is described by:

where

- \(\{W_{t},0\le t \le T\}\) is a standard Brownian motion.

- The process \(\{X_{t},0\le t\le T\}\) is defined by \( X_{t}:=\sum _{j=1}^{N_{t}}Z_{j}\) for all \(t\in [0,T]\), where \( \{N_{t},t\ge 0\}\) is an inhomogeneous Poisson process with intensity function \(\lambda (t) \) and \((Z_{n}) _{n\ge 1}\) is a sequence of i.i.d. square integrable random variables (we set \(\kappa :=\mathrm {E}_{ \mathbb {Q}}[Z_{1}]\)).

- \(\{W_{t},t\ge 0\}\), \(\{N_{t},t\ge 0\}\) and \(\{Z_{n},n\ge 1\}\) are independent.

- r, b, \(\sigma \) and \(\varphi \) are deterministic functions.

We can write

where \(J_{X}(du,dz)\) and \(\widetilde{J}_{X}(du,dz)\) are, respectively, the jump measure and the compensated jump measure of the process X. By Itô’s formula, we have for all \(t\in [0,T]\):

Setting \(A_{t}=\exp (-\int _{0}^{t}r(u) du) \), we conclude that the discounted process \( (A_{t}S_{t}) _{t\in [0,T]}\) is a martingale if and only if the following condition holds:

Hence, for all \(t\in [0,T]\):

The price of a contingent claim \(H(S_{T}) \) is then expressed as

and for all \(t\in [0,T]\), the processes \(Y_{t}\), \(Z_{t}^{\widetilde{\sigma }}\), \( \beta _{t}^{\widetilde{\sigma }}\), \(Z_{t}^{\widetilde{\varphi }}\) and \(\beta _{t}^{ \widetilde{\varphi }}\) are, respectively, given by

Using the general formulae developed in the previous section with \(a(u) =\frac{1}{T}\), we can compute the different Greeks analytically:

For numerical simplicity we suppose that the coefficients \(r>0\), \(\sigma >0\) are real constants and \(\varphi =1\) such that \(\ln (1+Z_{1}) \sim \mathscr {N}(\mu , \delta ^{2}) \) where \(\mu \in \mathbb {R}\) and \(\delta >0\). The intensity function \(\lambda (t) \) is exponentially decreasing given by \( \lambda (t) =a e^{-b t}\) for all \(t\in [0,T]\), where \(a >0\) and \(b >0\).

In this case we have \(\kappa =\mathrm {E}[Z_{1}]=e^{\mu +\frac{\delta ^{2}}{2}}-1\) and the mean–value function of the Poisson process \( \{N_{t},t\ge 0\}\) is \(m(t) =\int _{0}^{t}\lambda (s) ds=\frac{a }{b } \left( 1-e^{-b t}\right) ,\;\;\forall \ t\in [0,T]\).
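The following Python sketch draws the terminal value of this example. Since the martingale condition and the explicit solution are not displayed above, it assumes the standard risk-neutral Merton form with constant r and \(\sigma \) and \(\varphi =1\), namely \(S_T=x\exp \big ((r-\frac{\sigma ^2}{2})T-\kappa m(T)+\sigma W_T\big )\prod _{j=1}^{N_T}(1+Z_j)\); this is also the form consistent with the quantities \(S_n\) used in the series below. Only \(N_T\sim \mathrm{Poisson}(m(T))\) is needed for a terminal payoff, so jump times are not simulated. All function names are hypothetical.

```python
import math
import random


def mean_value(t, a, b):
    """m(t) = (a/b)(1 - exp(-b t)) for the intensity lambda(t) = a exp(-b t)."""
    return a / b * (1.0 - math.exp(-b * t))


def jump_mean(mu, delta):
    """kappa = E[Z_1] when ln(1 + Z_1) ~ N(mu, delta^2)."""
    return math.exp(mu + 0.5 * delta ** 2) - 1.0


def poisson_sample(lam, rng):
    """Knuth's multiplication method; adequate for the small means used here."""
    threshold = math.exp(-lam)
    k, p = 0, rng.random()
    while p > threshold:
        k += 1
        p *= rng.random()
    return k


def sample_terminal(x, r, sigma, T, mu, delta, a, b, rng):
    """One draw of (S_T, W_T) under the assumed risk-neutral Merton dynamics."""
    m_T = mean_value(T, a, b)
    kappa = jump_mean(mu, delta)
    w_T = rng.gauss(0.0, math.sqrt(T))
    n = poisson_sample(m_T, rng)
    # The sum of n i.i.d. N(mu, delta^2) log-jump sizes is N(n mu, n delta^2).
    log_jumps = rng.gauss(n * mu, delta * math.sqrt(n))
    drift = (r - 0.5 * sigma ** 2) * T - kappa * m_T
    return x * math.exp(drift + sigma * w_T + log_jumps), w_T


if __name__ == "__main__":
    rng = random.Random(0)
    # Parameters of Fig. 1; the discounted sample mean should be near x = 100.
    draws = [sample_terminal(100.0, 0.02, 0.10, 1.0, -0.05, 0.01, 1.0, 1.0, rng)[0]
             for _ in range(50_000)]
    print(math.exp(-0.02) * sum(draws) / len(draws))
```

Under the assumed dynamics \(\mathrm{E}_{\mathbb {Q}}[e^{-rT}S_T]=x\), because \(\mathrm{E}[(1+\kappa )^{N_T}]=e^{\kappa m(T)}\) cancels the compensator term, which gives a quick sanity check of the sampler.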

3.1.2 Binary Call Option

We consider a digital call option with strike \(K>0\) and maturity T, i.e. with payoff \(H(S_{T}) =\mathbf {1}_{\left\{ S_{T}\ge K\right\} }\), such that:

The price of a digital option is given by:

Delta: variation in the initial condition

- Delta computed by differentiation under the expectation: by conditioning on the number of jumps, we can express the price as a weighted sum of Black–Scholes prices:

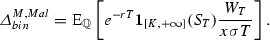

$$\begin{aligned} \mathscr {C}_{bin}^{M}=\sum _{n\ge 0}\frac{e^{-m(T) }(m(T) ) ^{n}}{n!}\mathscr {C} _{bin}^{BS}(0,T,S_{n},K,r,\sigma _{n}) \end{aligned}$$where \(m(T) =\frac{a }{b }(1-e^{-b T}) \), \( S_{n}=x\exp (n(\mu +\frac{\delta ^{2}}{2}) -m(T) \kappa ) \), \( \sigma _{n}^{2}=\sigma ^{2}+n\frac{\delta ^{2}}{T}\) (so that \(\sigma _{n}^{2}T=\sigma ^{2}T+n\delta ^{2}\) is the variance of \(\ln S_T\) given n jumps) and \(\mathscr {C} _{bin}^{BS}(0,T,S_{n},K,r,\sigma _{n}) \) stands for the Black–Scholes price of a digital option.

$$\begin{aligned} \varDelta _{bin}^{M}:=\frac{\partial \mathscr {C}_{bin}^{M}}{\partial x} =\sum _{n\ge 0}\frac{e^{-m(T) }(m(T) ) ^{n}}{n!}\frac{S_{n}}{x}\frac{\partial \mathscr {C}_{bin}^{BS}(0,T,S_{n},K,r,\sigma _{n}) }{\partial S_{n}}. \end{aligned}$$Recall that

$$\begin{aligned} \mathscr {C}_{bin}^{BS}(0,T,S_{n},K,r,\sigma _{n}) =e^{-rT}\mathscr {N}(d_{2,n}) \end{aligned}$$and

$$\begin{aligned} \frac{\partial \mathscr {C}_{bin}^{BS}(0,T,S_{n},K,r,\sigma _{n}) }{\partial S_{n}}=\frac{e^{-rT}}{S_{n}\sigma _{n}\sqrt{T}}\Phi (d_{2,n}) \end{aligned}$$where \(d_{1,n}=\frac{\ln (\frac{S_{n}}{K}) +(r+\frac{\sigma _{n}^{2}}{2}) T}{ \sigma _{n}\sqrt{T}}\), \(d_{2,n}=d_{1,n}-\sigma _{n}\sqrt{T}\) and \(\Phi (z) = \frac{1}{\sqrt{2\pi }}e^{\frac{-z^{2}}{2}}\). Consequently

$$\begin{aligned} \varDelta _{bin}^{M}=\frac{e^{-(rT+m(T) ) }}{x\sqrt{T}}\sum _{n\ge 0}\frac{ (m(T) ) ^{n}}{n!}\frac{\Phi (d_{2,n}) }{\sigma _{n}}. \end{aligned}$$
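A minimal Python sketch of this series for the price and the Delta, truncated at a hypothetical number `n_max` of jumps. It uses \(\sigma _{n}^{2}=\sigma ^{2}+n\delta ^{2}/T\), the value for which \(\sigma _n^2T=\sigma ^2T+n\delta ^2\) equals the conditional variance of \(\ln S_T\) given n jumps (for \(T=1\), as in Fig. 1, this is \(\sigma ^2+n\delta ^2\)). `norm_pdf` plays the role of \(\Phi \) above and `norm_cdf` that of \(\mathscr {N}\).

```python
import math


def norm_cdf(z):
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def norm_pdf(z):
    return math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)


def merton_digital_series(x, K, r, sigma, T, mu, delta, a, b, n_max=60):
    """Price and Delta of the digital call 1{S_T >= K}, series truncated at n_max jumps."""
    m_T = a / b * (1.0 - math.exp(-b * T))
    kappa = math.exp(mu + 0.5 * delta ** 2) - 1.0
    disc = math.exp(-r * T)
    weight = math.exp(-m_T)  # P(N_T = 0)
    price = delta_x = 0.0
    for n in range(n_max + 1):
        if n > 0:
            weight *= m_T / n  # P(N_T = n) obtained from P(N_T = n - 1)
        s_n = x * math.exp(n * (mu + 0.5 * delta ** 2) - m_T * kappa)
        sig_n = math.sqrt(sigma ** 2 + n * delta ** 2 / T)
        d2 = (math.log(s_n / K) + (r - 0.5 * sig_n ** 2) * T) / (sig_n * math.sqrt(T))
        price += weight * disc * norm_cdf(d2)
        # (S_n / x) * dC^BS/dS_n = e^{-rT} phi(d2) / (x sigma_n sqrt(T))
        delta_x += weight * disc * norm_pdf(d2) / (x * sig_n * math.sqrt(T))
    return price, delta_x


# Parameters of Fig. 1.
price, delta_x = merton_digital_series(100.0, 100.0, 0.02, 0.10, 1.0, -0.05, 0.01, 1.0, 1.0)
print(price, delta_x)
```

The Poisson weights are built recursively rather than from factorials, which avoids overflow; with \(m(T)<1\) the neglected tail beyond `n_max` is negligible.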

- Finite difference approximation scheme for Delta:

$$\begin{aligned} \varDelta _{bin}^{M,DF}=\frac{\partial }{\partial x}\mathrm {E}_{\mathbb {Q} }[e^{-rT}H(S_{T}^{x}) ]\simeq \frac{\mathrm {E}_{\mathbb {Q} }[e^{-rT}H(S_{T}^{x+\varepsilon }) ]-\mathrm {E}_{\mathbb {Q} }[e^{-rT}H(S_{T}^{x-\varepsilon }) ]}{2\varepsilon }. \end{aligned}$$
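A Monte Carlo sketch of this central difference, assuming the same risk-neutral terminal law \(S_T=x\exp \big ((r-\frac{\sigma ^2}{2})T-\kappa m(T)+\sigma W_T\big )\prod _{j\le N_T}(1+Z_j)\) as in the series (restated here so the block stands alone). Because \(S_T^{x}=x\,G\) with G independent of x in this model, both shifted prices reuse the same draw of G (common random numbers). The names, the default \(\varepsilon =1\) and the path count are illustrative choices only.

```python
import math
import random


def growth_factor(r, sigma, T, mu, delta, a, b, rng):
    """G = S_T / x under the assumed risk-neutral Merton dynamics (independent of x)."""
    m_T = a / b * (1.0 - math.exp(-b * T))
    kappa = math.exp(mu + 0.5 * delta ** 2) - 1.0
    # N_T ~ Poisson(m(T)) via Knuth's multiplication method.
    n, p, threshold = 0, rng.random(), math.exp(-m_T)
    while p > threshold:
        n += 1
        p *= rng.random()
    log_jumps = rng.gauss(n * mu, delta * math.sqrt(n))
    w_T = rng.gauss(0.0, math.sqrt(T))
    return math.exp((r - 0.5 * sigma ** 2) * T - kappa * m_T + sigma * w_T + log_jumps)


def delta_fd(x, K, r, sigma, T, mu, delta, a, b, eps=1.0, n_paths=100_000, seed=0):
    """Central finite-difference Delta of the digital call with common random numbers."""
    rng = random.Random(seed)
    acc = 0.0
    for _ in range(n_paths):
        g = growth_factor(r, sigma, T, mu, delta, a, b, rng)
        up = 1.0 if (x + eps) * g >= K else 0.0
        down = 1.0 if (x - eps) * g >= K else 0.0
        acc += up - down
    return math.exp(-r * T) * acc / (n_paths * 2.0 * eps)


# Parameters of Fig. 1.
print(delta_fd(100.0, 100.0, 0.02, 0.10, 1.0, -0.05, 0.01, 1.0, 1.0))
```

Only paths with \(S_T\) within about \(\varepsilon \,S_T/x\) of K contribute, so the estimator is noisy for small \(\varepsilon \) and biased for large \(\varepsilon \), which is the trade-off that motivates the Malliavin weights.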

- Global Malliavin formula for Delta:

The stochastic Malliavin weight for the delta is:

$$\begin{aligned} \delta (\omega ) =\int _{0}^{T}\frac{1}{T}\frac{S_{t}}{x\sigma S_{t}}dW_{t}= \frac{W_{T}}{x\sigma T} \end{aligned}$$where \(\omega (t) =a(t) \frac{Y_{t}}{\sigma S_{t}}\), \(Y_{t}=\frac{S_{t}}{x}\) and \(a(t) =\frac{1}{T}\), so that the Delta is \(e^{-rT}\mathrm {E}_{\mathbb {Q}}\left[ H(S_{T}) \frac{W_{T}}{x\sigma T}\right] \).
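A Python sketch of the global Malliavin estimator \(e^{-rT}\mathrm {E}_{\mathbb {Q}}[H(S_T)\,W_T/(x\sigma T)]\). It assumes the same risk-neutral terminal law as the previous sketches (restated so the block stands alone); the weight involves only the Brownian part \(W_T\), so the payoff is never differentiated and the jumps enter only through \(S_T\). Function names are hypothetical.

```python
import math
import random


def terminal_draw(x, r, sigma, T, mu, delta, a, b, rng):
    """(S_T, W_T) under the assumed risk-neutral Merton dynamics."""
    m_T = a / b * (1.0 - math.exp(-b * T))
    kappa = math.exp(mu + 0.5 * delta ** 2) - 1.0
    n, p, threshold = 0, rng.random(), math.exp(-m_T)  # N_T ~ Poisson(m(T)), Knuth
    while p > threshold:
        n += 1
        p *= rng.random()
    log_jumps = rng.gauss(n * mu, delta * math.sqrt(n))
    w_T = rng.gauss(0.0, math.sqrt(T))
    s_T = x * math.exp((r - 0.5 * sigma ** 2) * T - kappa * m_T + sigma * w_T + log_jumps)
    return s_T, w_T


def delta_malliavin_global(x, K, r, sigma, T, mu, delta, a, b, n_paths=100_000, seed=0):
    """e^{-rT} E[1{S_T >= K} W_T / (x sigma T)]."""
    rng = random.Random(seed)
    acc = 0.0
    for _ in range(n_paths):
        s_T, w_T = terminal_draw(x, r, sigma, T, mu, delta, a, b, rng)
        if s_T >= K:
            acc += w_T / (x * sigma * T)  # payoff times Malliavin weight
    return math.exp(-r * T) * acc / n_paths


# Parameters of Fig. 1.
print(delta_malliavin_global(100.0, 100.0, 0.02, 0.10, 1.0, -0.05, 0.01, 1.0, 1.0))
```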

- Localized Malliavin formula for Delta:

Empirical studies have shown that the estimators produced by Malliavin techniques are unbiased; their variance, however, can be large. We adopt the localization technique introduced by Fournié et al. [9], which aims to reduce the variance of the Monte Carlo estimator of the sensitivities by localizing the integration by parts formula around the singularity at K.

Consider the decomposition:

$$\begin{aligned} H(S_{T}) =H_{\varepsilon , loc}(S_{T}) +H_{\varepsilon , reg}(S_{T}). \end{aligned}$$The regular component is defined by:

$$\begin{aligned} H_{\varepsilon , reg}(S_{T}) :=G_{\varepsilon }(S_{T}-K), \end{aligned}$$where \(\varepsilon \) is a localization parameter and the localization function \(G_{\varepsilon }\), which we propose, is given by:

$$\begin{aligned} G_{\varepsilon }(z) =\left\{ \begin{array}{ll} 0; &{} z\le -\varepsilon \\ \frac{1}{2}\left( 1-\frac{z}{\varepsilon }\right) \left( 1+\frac{z}{ \varepsilon }\right) ^{3}; &{} -\varepsilon<z<0 \\ 1-\frac{1}{2}\left( 1+\frac{z}{\varepsilon }\right) \left( 1-\frac{z}{ \varepsilon }\right) ^{3}; &{} 0\le z<\varepsilon \\ 1; &{} z\ge \varepsilon . \end{array} \right. \end{aligned}$$Then

$$\begin{aligned} H_{\varepsilon , reg}(S_{T})= & {} \frac{1}{2}\left( 1-\frac{S_{T}-K}{ \varepsilon }\right) \left( 1+\frac{S_{T}-K}{\varepsilon }\right) ^{3} \mathbf {1}_{\left\{ K-\varepsilon<S_{T}<K\right\} } \\&+\left( 1-\frac{1}{2}\left( 1+\frac{S_{T}-K}{\varepsilon }\right) \left( 1- \frac{S_{T}-K}{\varepsilon }\right) ^{3}\right) \mathbf {1}_{\left\{ K\le S_{T}<K+\varepsilon \right\} } \\&+\,\mathbf {1}_{\left\{ S_{T}\ge K+\varepsilon \right\} }. \end{aligned}$$The localized component is given by:

$$\begin{aligned} H_{\varepsilon , loc}(S_{T}) =H(S_{T}) -H_{\varepsilon , reg}(S_{T}). \end{aligned}$$The Delta computed by the localized Malliavin formula is then given by:

$$\begin{aligned} \varDelta _{LocMall}=e^{-rT}\mathrm {E}_{\mathbb {Q}}\left[ H_{\varepsilon ,loc}(S_{T}) \frac{W_{T}}{x\sigma T}\right] +e^{-rT}\mathrm {E}_{\mathbb {Q}} \left[ H_{\varepsilon , reg}^{\prime }(S_{T}) \frac{S_{T}}{x}\right] . \end{aligned}$$
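The localized formulas use the derivative \(H_{\varepsilon ,reg}^{\prime }(S_{T})=G_{\varepsilon }^{\prime }(S_{T}-K)\), which is not written out explicitly. Differentiating the piecewise definition of \(G_{\varepsilon }\) branch by branch (our own computation, included only as a convenience) gives

$$\begin{aligned} G_{\varepsilon }^{\prime }(z) =\left\{ \begin{array}{ll} \frac{1}{\varepsilon }\left( 1+\frac{z}{\varepsilon }\right) ^{2}\left( 1-\frac{2z}{\varepsilon }\right) ; &{} -\varepsilon<z<0 \\ \frac{1}{\varepsilon }\left( 1-\frac{z}{\varepsilon }\right) ^{2}\left( 1+\frac{2z}{\varepsilon }\right) ; &{} 0\le z<\varepsilon \\ 0; &{} |z|\ge \varepsilon . \end{array} \right. \end{aligned}$$

Both branches equal \(1/\varepsilon \) at \(z=0\) and vanish at \(z=\pm \varepsilon \), so \(G_{\varepsilon }\) is continuously differentiable, \(0\le G_{\varepsilon }^{\prime }\le 1/\varepsilon \), and \(G_{\varepsilon }^{\prime }\) integrates to one over \((-\varepsilon ,\varepsilon )\).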

In Fig. 1 we plot the Delta of a digital option in the simplest time-inhomogeneous Merton model.

Delta of a digital option computed by the global and localized Malliavin-like formulas and by finite differences. The parameters are \(S_0=100\), \(K=100\), \(\sigma =0.10\), \(T=1\), \(r=0.02\), \(\mu =-0.05\), \(\delta =0.01\), \(\varphi =1\); the intensity function \(\lambda \) is exponentially decreasing, \(\lambda (t) =a e^{-b t}\) for all \(t \in [0,T]\), with \(a=1\) and \(b=1\).
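To make the three Delta estimators concrete, here is a minimal Python sketch using only the standard library. It rests on assumptions of ours, not of the text: the terminal price is taken to be \(S_T=x\exp ((r-\sigma ^2/2)T+\sigma W_T-\kappa m(T))\prod _{j\le N_T}(1+Z_j)\) with \(1+Z_j=e^{Y_j}\), \(Y_j\sim N(\mu ,\delta ^2)\), \(\kappa =\mathrm {E}[Z_j]\) and \(\lambda (t)=ae^{-bt}\), which corresponds to \(S_n=xe^{n(\mu +\delta ^2/2)-\kappa m(T)}\) and \(\sigma _n^2=\sigma ^2+n\delta ^2/T\) in the series for \(\varDelta _{bin}^{M}\). The helper names, the localization parameter \(\varepsilon =5\) and the finite-difference step \(h=1\) are illustrative choices.

```python
import math
import random
from statistics import NormalDist

STD_NORMAL = NormalDist()  # .pdf is the density Phi of the text, .cdf is N


def model_constants(mu, delta, a, b, T):
    """m(T) and kappa for the assumed model: lambda(t) = a e^{-bt}, 1 + Z = e^Y, Y ~ N(mu, delta^2)."""
    m = a / b * (1.0 - math.exp(-b * T))           # m(T) = int_0^T a e^{-bt} dt
    kappa = math.exp(mu + 0.5 * delta ** 2) - 1.0  # kappa = E[Z]
    return m, kappa


def poisson(rng, mean):
    """Knuth's multiplication method; adequate for the small means m(T) used here."""
    limit, k, p = math.exp(-mean), 0, rng.random()
    while p > limit:
        k += 1
        p *= rng.random()
    return k


def g_eps(z, eps):
    """Localization function G_eps of the text."""
    u = z / eps
    if u <= -1.0:
        return 0.0
    if u < 0.0:
        return 0.5 * (1.0 - u) * (1.0 + u) ** 3
    if u < 1.0:
        return 1.0 - 0.5 * (1.0 + u) * (1.0 - u) ** 3
    return 1.0


def g_eps_prime(z, eps):
    """Derivative of G_eps; zero outside (-eps, eps)."""
    u = z / eps
    if -1.0 < u < 0.0:
        return (1.0 + u) ** 2 * (1.0 - 2.0 * u) / eps
    if 0.0 <= u < 1.0:
        return (1.0 - u) ** 2 * (1.0 + 2.0 * u) / eps
    return 0.0


def delta_series(x, K, r, sigma, T, mu, delta, a, b, n_max=40):
    """Series formula for Delta_bin^M with S_n, sigma_n implied by the assumed model."""
    m, kappa = model_constants(mu, delta, a, b, T)
    total = 0.0
    for n in range(n_max):
        s_n = x * math.exp(n * (mu + 0.5 * delta ** 2) - kappa * m)
        sig_n = math.sqrt(sigma ** 2 + n * delta ** 2 / T)
        d2 = (math.log(s_n / K) + (r - 0.5 * sig_n ** 2) * T) / (sig_n * math.sqrt(T))
        total += m ** n / math.factorial(n) * STD_NORMAL.pdf(d2) / sig_n
    return math.exp(-(r * T + m)) / (x * math.sqrt(T)) * total


def delta_mc(x, K, r, sigma, T, mu, delta, a, b,
             n_paths=100_000, eps=5.0, h=1.0, seed=1):
    """Global Malliavin, localized Malliavin and central finite-difference estimates."""
    rng = random.Random(seed)
    m, kappa = model_constants(mu, delta, a, b, T)
    sums = {"global": 0.0, "localized": 0.0, "finite_difference": 0.0}
    for _ in range(n_paths):
        w = rng.gauss(0.0, math.sqrt(T))
        log_jumps = sum(rng.gauss(mu, delta) for _ in range(poisson(rng, m)))
        s = x * math.exp((r - 0.5 * sigma ** 2) * T + sigma * w - kappa * m + log_jumps)
        weight = w / (x * sigma * T)                       # Malliavin weight W_T / (x sigma T)
        payoff = 1.0 if s >= K else 0.0
        sums["global"] += payoff * weight
        sums["localized"] += ((payoff - g_eps(s - K, eps)) * weight
                              + g_eps_prime(s - K, eps) * s / x)
        # S_T is proportional to x, so the bumped paths reuse the same random numbers.
        up = 1.0 if s * (x + h) / x >= K else 0.0
        down = 1.0 if s * (x - h) / x >= K else 0.0
        sums["finite_difference"] += (up - down) / (2.0 * h)
    disc = math.exp(-r * T)
    return {key: disc * total / n_paths for key, total in sums.items()}


if __name__ == "__main__":
    params = dict(x=100.0, K=100.0, r=0.02, sigma=0.10, T=1.0,
                  mu=-0.05, delta=0.01, a=1.0, b=1.0)  # parameters of Fig. 1
    print("series:", delta_series(**params))
    print("Monte Carlo:", delta_mc(**params))
```

Under these assumptions the three Monte Carlo estimates should agree with the series value up to sampling error. The global estimator never needs the density of \(S_T\), which is the practical appeal of the Malliavin weight; the finite-difference estimator relies on common random numbers to stay usable for the discontinuous payoff.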

Furthermore, we have

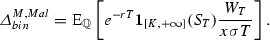

Rho: variation in the drift coefficient

- Rho computed from a derivation under expectation: Recall that

$$\begin{aligned} \mathscr {C}_{bin}^{BS}(0,T,S_{n},K,r,\sigma _{n}) =e^{-rT}\mathscr {N}(d_{2,n}) \end{aligned}$$and

$$\begin{aligned} \frac{\partial \mathscr {C}_{bin}^{BS}(0,T,S_{n},K,r,\sigma _{n}) }{\partial r} =-Te^{-rT}\mathscr {N}(d_{2,n}) +\frac{\sqrt{T}e^{-rT}}{\sigma _{n}}\Phi (d_{2,n}). \end{aligned}$$Hence

$$\begin{aligned} Rho_{bin}^{M}:= & {} \frac{\partial \mathscr {C}_{bin}^{M}}{\partial r} \\= & {} \sum _{n\ge 0}\frac{e^{-m(T) }(m(T) ) ^{n}}{n!}\frac{\partial \mathscr {C} _{bin}^{BS}(0,T,S_{n},K,r,\sigma _{n}) }{\partial r} \\= & {} \sum _{n\ge 0}\frac{e^{-m(T) }(m(T) ) ^{n}}{n!}(-Te^{-rT}\mathscr {N} (d_{2,n}) +\frac{\sqrt{T}e^{-rT}}{\sigma _{n}}\Phi (d_{2,n}) ) \\= & {} Te^{-(rT+m(T) ) }\sum _{n\ge 0}\frac{(m(T) ) ^{n}}{n!}\left( -\mathscr {N} (d_{2,n}) +\frac{\Phi (d_{2,n}) }{\sqrt{T}\sigma _{n}}\right) . \end{aligned}$$

- Finite difference approximation scheme of Rho:

$$\begin{aligned} Rho_{FD}:=\frac{\partial }{\partial r}\mathrm {E}_{\mathbb {Q} }[e^{-rT}H(S_{T}) ]\simeq \frac{\mathrm {E}_{\mathbb {Q}}[e^{-(r+\varepsilon ) T}H(S_{T}^{r+\varepsilon }) ]- \mathrm {E}_{\mathbb {Q}}[e^{-(r-\varepsilon ) T}H(S_{T}^{r-\varepsilon }) ]}{2\varepsilon }. \end{aligned}$$

- Global Malliavin formula for Rho:

$$\begin{aligned} Rho_{GMall}=e^{-rT}\mathrm {E}_{\mathbb {Q}}\left[ \left( \frac{W_{T}}{\sigma } -T\right) \mathbf {1}_{\left\{ S_{T}\ge K\right\} }\right] . \end{aligned}$$

- Localized Malliavin formula for Rho:

$$\begin{aligned} Rho_{LocMall}= & {} e^{-rT}\mathrm {E}_{\mathbb {Q}}\left[ H_{ \varepsilon ,loc}(S_T) \left( \frac{W_{T}}{\sigma }-T\right) \right] \\&+e^{-rT}\mathrm {E}_{\mathbb {Q}}\left[ H^{\prime }_{\varepsilon ,reg}(S_T) TS_T\right] -Te^{-rT}\mathrm {E}_{\mathbb {Q}}\left[ H_{\varepsilon ,reg}(S_T) \right] . \end{aligned}$$

In Fig. 2 we plot the Rho of a digital option in the simplest time-inhomogeneous Merton model.

Rho of a digital option computed by the global and localized Malliavin-like formulas and by finite differences. The parameters are \(S_0=100\), \(K=100\), \( \sigma =0.1\), \(T=1\), \(r=0.03\), \( \mu =-0.05\), \( \delta =0.01\), \(\varphi =1\); the intensity function \( \lambda \) is exponentially decreasing, \(\lambda (t) =a e^{-b t}\) for all \(t \in [0,T]\), with \(a=1\) and \(b=1\).
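The Rho estimators can be sketched in the same way. The Python block below is self-contained, so it repeats small helpers, and it rests on an assumed model of ours: \(S_T=x\exp ((r-\sigma ^2/2)T+\sigma W_T-\kappa m(T))\prod _{j\le N_T}(1+Z_j)\) with \(1+Z_j=e^{Y_j}\), \(Y_j\sim N(\mu ,\delta ^2)\) and \(\lambda (t)=ae^{-bt}\), under which \(S_n\) does not depend on r. In the localized estimator the last term is \(-Te^{-rT}\mathrm {E}_{\mathbb {Q}}[H_{\varepsilon ,reg}(S_T)]\): inserting \(H=H_{\varepsilon ,loc}+H_{\varepsilon ,reg}\) into the global formula and integrating by parts back only on the regular part gives \(\mathrm {E}[H_{\varepsilon ,reg}(W_T/\sigma -T)]=\mathrm {E}[H_{\varepsilon ,reg}^{\prime }TS_T]-T\mathrm {E}[H_{\varepsilon ,reg}]\). Function names and \(\varepsilon =5\) are illustrative.

```python
import math
import random
from statistics import NormalDist

STD_NORMAL = NormalDist()  # .pdf is Phi of the text, .cdf is N


def model_constants(mu, delta, a, b, T):
    """m(T) and kappa = E[Z] for the assumed model (lambda(t) = a e^{-bt}, 1 + Z = e^Y)."""
    m = a / b * (1.0 - math.exp(-b * T))
    kappa = math.exp(mu + 0.5 * delta ** 2) - 1.0
    return m, kappa


def poisson(rng, mean):
    """Knuth's multiplication method; adequate for small means."""
    limit, k, p = math.exp(-mean), 0, rng.random()
    while p > limit:
        k += 1
        p *= rng.random()
    return k


def g_eps(z, eps):
    """Localization function G_eps."""
    u = z / eps
    if u <= -1.0:
        return 0.0
    if u < 0.0:
        return 0.5 * (1.0 - u) * (1.0 + u) ** 3
    if u < 1.0:
        return 1.0 - 0.5 * (1.0 + u) * (1.0 - u) ** 3
    return 1.0


def g_eps_prime(z, eps):
    """Derivative of G_eps; zero outside (-eps, eps)."""
    u = z / eps
    if -1.0 < u < 0.0:
        return (1.0 + u) ** 2 * (1.0 - 2.0 * u) / eps
    if 0.0 <= u < 1.0:
        return (1.0 - u) ** 2 * (1.0 + 2.0 * u) / eps
    return 0.0


def rho_series(x, K, r, sigma, T, mu, delta, a, b, n_max=40):
    """Series formula for Rho_bin^M with S_n, sigma_n implied by the assumed model."""
    m, kappa = model_constants(mu, delta, a, b, T)
    total = 0.0
    for n in range(n_max):
        s_n = x * math.exp(n * (mu + 0.5 * delta ** 2) - kappa * m)
        sig_n = math.sqrt(sigma ** 2 + n * delta ** 2 / T)
        d2 = (math.log(s_n / K) + (r - 0.5 * sig_n ** 2) * T) / (sig_n * math.sqrt(T))
        total += m ** n / math.factorial(n) * (
            -STD_NORMAL.cdf(d2) + STD_NORMAL.pdf(d2) / (math.sqrt(T) * sig_n))
    return T * math.exp(-(r * T + m)) * total


def rho_mc(x, K, r, sigma, T, mu, delta, a, b, n_paths=100_000, eps=5.0, seed=2):
    """Global and localized Malliavin estimates of Rho."""
    rng = random.Random(seed)
    m, kappa = model_constants(mu, delta, a, b, T)
    glob = loc = 0.0
    for _ in range(n_paths):
        w = rng.gauss(0.0, math.sqrt(T))
        log_jumps = sum(rng.gauss(mu, delta) for _ in range(poisson(rng, m)))
        s = x * math.exp((r - 0.5 * sigma ** 2) * T + sigma * w - kappa * m + log_jumps)
        weight = w / sigma - T                       # global weight W_T / sigma - T
        payoff = 1.0 if s >= K else 0.0
        h_reg = g_eps(s - K, eps)
        glob += payoff * weight
        # H_loc * weight + H'_reg * T * S_T - T * H_reg, using dS_T/dr = T S_T
        loc += (payoff - h_reg) * weight + g_eps_prime(s - K, eps) * T * s - T * h_reg
    disc = math.exp(-r * T)
    return {"global": disc * glob / n_paths, "localized": disc * loc / n_paths}


if __name__ == "__main__":
    params = dict(x=100.0, K=100.0, r=0.03, sigma=0.10, T=1.0,
                  mu=-0.05, delta=0.01, a=1.0, b=1.0)  # parameters of Fig. 2
    print("series:", rho_series(**params))
    print("Monte Carlo:", rho_mc(**params))
```

The global weight \(W_T/\sigma -T\) has a standard deviation of order \(1/\sigma \), so with \(\sigma =0.1\) many paths are needed for a tight estimate, which is one motivation for localization.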

Vega: variation in the diffusion coefficient

- Vega computed from a derivation under expectation:

$$\begin{aligned} Vega_{bin}^{M}:= & {} \frac{\partial \mathscr {C}_{bin}^{M}}{\partial \sigma } \\= & {} \sum _{n\ge 0}\frac{e^{-m(T) }(m(T) ) ^{n}}{n!}\frac{\partial \sigma _{n}}{ \partial \sigma }\frac{\partial \mathscr {C}_{bin}^{BS}(0,T,S_{n},K,r,\sigma _{n}) }{\partial \sigma _{n}} \\= & {} \sum _{n\ge 0}\frac{e^{-m(T) }(m(T) ) ^{n}}{n!}\frac{\sigma }{\sigma _{n}} (-e^{-rT}) (\sqrt{T}+\frac{d_{2,n}}{\sigma _{n}}) \Phi (d_{2,n}) \\= & {} -\sigma e^{-(rT+m(T) ) }\sum _{n\ge 0}\frac{(m(T) ) ^{n}}{n!}\left( \frac{ \sigma _{n}\sqrt{T}+d_{2,n}}{\sigma _{n}^{2}}\right) \Phi (d_{2,n}). \end{aligned}$$

- Finite difference approximation scheme of Vega:

$$\begin{aligned} Vega_{FD}:=\frac{\partial }{\partial \sigma }\mathrm {E}_{\mathbb {Q} }[e^{-rT}H(S_{T}^{\sigma }) ]\simeq e^{-rT}\frac{\mathrm {E}_{\mathbb {Q} }[H(S_{T}^{\sigma +\varepsilon }) ]-\mathrm {E}_{\mathbb {Q}}[H(S_{T}^{\sigma -\varepsilon }) ]}{2\varepsilon }. \end{aligned}$$

- Global Malliavin formula for Vega:

$$\begin{aligned} Vega_{GMall}=e^{-rT}\mathrm {E}_{\mathbb {Q}}\left[ \left( \frac{ W_{T}^{2}-\sigma TW_{T}-T}{\sigma T}\right) \mathbf {1}_{\left\{ S_{T}\ge K\right\} }\right] . \end{aligned}$$

- Localized Malliavin formula for Vega:

$$\begin{aligned} Vega_{LocMall} =&e^{-rT}\mathrm {E}_{\mathbb {Q}}\left[ H_{\varepsilon ,loc}(S_{T}) \left( \frac{W_{T}^{2}-\sigma TW_{T}-T}{\sigma T}\right) \right] \\&+e^{-rT}\mathrm {E}_{\mathbb {Q}}\left[ H_{\varepsilon , reg}^{\prime }(S_{T}) \left( W_{T}-\sigma T\right) S_{T}\right] . \end{aligned}$$

In Fig. 3 we plot the Vega of a digital option in the simplest time-inhomogeneous Merton model.

Vega of a digital option computed by global, localized Malliavin like formula and finite difference. The parameters are \(S_0=100\), \(K=100\), \( r=0.02\), \(\sigma =0.20\), \(T=1\), \(r=0.05\), \(\mu =-0.05\), \( \delta =0.01\), \(\varphi =1\), the intensity function \( \lambda \) is exponentially decreasing given by \(\lambda (t) =a e^{-b t}\) for all \(t \in [0,T]\), where \(a=1\) and \(b=1\)
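A matching Vega sketch, again standard-library Python and self-contained, under our assumed model \(S_T=x\exp ((r-\sigma ^2/2)T+\sigma W_T-\kappa m(T))\prod _{j\le N_T}(1+Z_j)\), \(1+Z_j=e^{Y_j}\), \(Y_j\sim N(\mu ,\delta ^2)\), \(\lambda (t)=ae^{-bt}\). In that model \(S_n\) does not depend on \(\sigma \), \(\partial \sigma _n/\partial \sigma =\sigma /\sigma _n\) and \(\partial S_T/\partial \sigma =(W_T-\sigma T)S_T\), which is the factor multiplying \(H_{\varepsilon ,reg}^{\prime }\) in the localized formula. The parameters below (\(S_0=K=100\), \(\sigma =0.20\), \(r=0.02\), \(T=1\), \(\mu =-0.05\), \(\delta =0.01\), \(a=b=1\)) are illustrative.

```python
import math
import random
from statistics import NormalDist

STD_NORMAL = NormalDist()


def model_constants(mu, delta, a, b, T):
    """m(T) and kappa = E[Z] for the assumed model (lambda(t) = a e^{-bt}, 1 + Z = e^Y)."""
    m = a / b * (1.0 - math.exp(-b * T))
    kappa = math.exp(mu + 0.5 * delta ** 2) - 1.0
    return m, kappa


def poisson(rng, mean):
    """Knuth's multiplication method; adequate for small means."""
    limit, k, p = math.exp(-mean), 0, rng.random()
    while p > limit:
        k += 1
        p *= rng.random()
    return k


def g_eps(z, eps):
    """Localization function G_eps."""
    u = z / eps
    if u <= -1.0:
        return 0.0
    if u < 0.0:
        return 0.5 * (1.0 - u) * (1.0 + u) ** 3
    if u < 1.0:
        return 1.0 - 0.5 * (1.0 + u) * (1.0 - u) ** 3
    return 1.0


def g_eps_prime(z, eps):
    """Derivative of G_eps; zero outside (-eps, eps)."""
    u = z / eps
    if -1.0 < u < 0.0:
        return (1.0 + u) ** 2 * (1.0 - 2.0 * u) / eps
    if 0.0 <= u < 1.0:
        return (1.0 - u) ** 2 * (1.0 + 2.0 * u) / eps
    return 0.0


def vega_series(x, K, r, sigma, T, mu, delta, a, b, n_max=40):
    """Series formula for Vega_bin^M; S_n does not depend on sigma in the assumed model."""
    m, kappa = model_constants(mu, delta, a, b, T)
    total = 0.0
    for n in range(n_max):
        s_n = x * math.exp(n * (mu + 0.5 * delta ** 2) - kappa * m)
        sig_n = math.sqrt(sigma ** 2 + n * delta ** 2 / T)
        d2 = (math.log(s_n / K) + (r - 0.5 * sig_n ** 2) * T) / (sig_n * math.sqrt(T))
        total += (m ** n / math.factorial(n)
                  * (sig_n * math.sqrt(T) + d2) / sig_n ** 2 * STD_NORMAL.pdf(d2))
    return -sigma * math.exp(-(r * T + m)) * total


def vega_mc(x, K, r, sigma, T, mu, delta, a, b, n_paths=100_000, eps=5.0, seed=3):
    """Global and localized Malliavin estimates of Vega."""
    rng = random.Random(seed)
    m, kappa = model_constants(mu, delta, a, b, T)
    glob = loc = 0.0
    for _ in range(n_paths):
        w = rng.gauss(0.0, math.sqrt(T))
        log_jumps = sum(rng.gauss(mu, delta) for _ in range(poisson(rng, m)))
        s = x * math.exp((r - 0.5 * sigma ** 2) * T + sigma * w - kappa * m + log_jumps)
        weight = (w * w - sigma * T * w - T) / (sigma * T)  # global Vega weight
        payoff = 1.0 if s >= K else 0.0
        glob += payoff * weight
        # dS_T / dsigma = (W_T - sigma T) S_T multiplies H'_reg in the localized term
        loc += ((payoff - g_eps(s - K, eps)) * weight
                + g_eps_prime(s - K, eps) * (w - sigma * T) * s)
    disc = math.exp(-r * T)
    return {"global": disc * glob / n_paths, "localized": disc * loc / n_paths}


if __name__ == "__main__":
    params = dict(x=100.0, K=100.0, r=0.02, sigma=0.20, T=1.0,
                  mu=-0.05, delta=0.01, a=1.0, b=1.0)
    print("series:", vega_series(**params))
    print("Monte Carlo:", vega_mc(**params))
```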

Alpha: variation in the jump amplitude

- Alpha computed from a derivation under expectation:

$$\begin{aligned} Alpha_{bin}^{M}:= & {} \frac{\partial \mathscr {C}_{bin}^{M}}{\partial \varphi } \\= & {} \sum _{n\ge 0}\frac{e^{-m(T) }(m(T) ) ^{n}}{n!}\frac{\partial S_{n}}{ \partial \varphi }\frac{\partial \mathscr {C}_{bin}^{BS}(0,T,S_{n},K,r,\sigma _{n}) }{\partial S_{n}} \\= & {} \sum _{n\ge 0}\frac{e^{-m(T) }(m(T) ) ^{n}}{n!}\frac{m(T) \kappa S_{n}}{\varphi }\frac{ \partial \mathscr {C}_{bin}^{BS}(0,T,S_{n},K,r,\sigma _{n}) }{\partial S_{n}} \\= & {} \frac{\kappa e^{-(rT+m(T) ) }}{\varphi \sqrt{T}}\sum _{n\ge 0}\frac{(m(T) ) ^{n+1}}{ n!}\frac{\Phi (d_{2,n}) }{\sigma _{n}}. \end{aligned}$$

- Finite difference approximation scheme of Alpha:

$$\begin{aligned} Alpha_{FD}:=\frac{\partial }{\partial \varphi }\mathrm {E}_{\mathbb {Q} }[e^{-rT}H(S_{T}^{\varphi }) ]\simeq e^{-rT}\frac{\mathrm {E}_{\mathbb {Q} }[H(S_{T}^{\varphi +\varepsilon }) ]-\mathrm {E}_{\mathbb {Q}}[H(S_{T}^{\varphi -\varepsilon }) ]}{2\varepsilon }. \end{aligned}$$

- Global Malliavin formula for Alpha:

$$\begin{aligned} Alpha_{GMall}=e^{-rT}\mathrm {E}_{\mathbb {Q}}\left[ \left( \sum _{j=1}^{N_{T}} \frac{Z_{j}}{1+\varphi Z_{j}}-\kappa \frac{a }{b }(-e^{-b T}+1) \right) \frac{W_{T}}{\sigma T}\mathbf {1}_{\left\{ S_{T}\ge K\right\} } \right] . \end{aligned}$$

- Localized Malliavin formula for Alpha:

$$\begin{aligned} Alpha_{LocMall}= & {} e^{-rT}\mathrm {E}_{\mathbb {Q}}\left[ H_{\varepsilon ,loc}(S_{T}) \left( \sum _{j=1}^{N_{T}}\frac{Z_{j}}{1+\varphi Z_{j}}-\kappa \frac{a }{b }(-e^{-b T}+1) \right) \frac{W_{T}}{\sigma T}\right] \\&+\,e^{-rT}\mathrm {E}_{\mathbb {Q}}\left[ H_{\varepsilon , reg}^{\prime }(S_{T}) \left( \sum _{j=1}^{N_{T}}\frac{Z_{j}}{1+\varphi Z_{j}}-\kappa \frac{ a }{b }(-e^{-b T}+1) \right) S_{T}\right] . \end{aligned}$$

In Fig. 4 we plot the sensitivity with respect to the jump size parameter \(\varphi \) of a digital option in the simplest time-inhomogeneous Merton model.

Alpha of a digital option computed by the global and localized Malliavin-like formulas and by finite differences. The parameters are \(S_0=100\), \(K=100\), \(\sigma =0.20\), \(T=1\), \(r=0.02\), \(\mu =-0.05\), \(\delta =0.01\), \(\varphi =1\); the intensity function \( \lambda \) is exponentially decreasing, \(\lambda (t) =a e^{-b t}\) for all \(t \in [0,T]\), with \(a=1\) and \(b=1\).
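For Alpha, the sketch below assumes that the jump amplitude enters as \(S_T=x\exp ((r-\sigma ^2/2)T+\sigma W_T-\varphi \kappa m(T))\prod _{j\le N_T}(1+\varphi Z_j)\) with \(1+Z_j=e^{Y_j}\), \(Y_j\sim N(\mu ,\delta ^2)\) and \(m(T)=\frac{a}{b}(1-e^{-bT})\). This is our assumption; it reproduces the factor \(\sum _{j}Z_j/(1+\varphi Z_j)-\kappa m(T)\) of the weight above, since that factor is \(\partial \log S_T/\partial \varphi \). As an independent reference we add a conditional Monte Carlo estimate, a standard technique that is not taken from the text: given the jumps, \(\log S_T\) is Gaussian, so \(W_T\) can be integrated out exactly and the resulting \(\mathscr {N}(d)\) differentiated in \(\varphi \) in closed form.

```python
import math
import random
from statistics import NormalDist

STD_NORMAL = NormalDist()


def model_constants(mu, delta, a, b, T):
    """m(T) and kappa = E[Z] for the assumed model (lambda(t) = a e^{-bt}, 1 + Z = e^Y)."""
    m = a / b * (1.0 - math.exp(-b * T))
    kappa = math.exp(mu + 0.5 * delta ** 2) - 1.0
    return m, kappa


def poisson(rng, mean):
    """Knuth's multiplication method; adequate for small means."""
    limit, k, p = math.exp(-mean), 0, rng.random()
    while p > limit:
        k += 1
        p *= rng.random()
    return k


def g_eps(z, eps):
    """Localization function G_eps."""
    u = z / eps
    if u <= -1.0:
        return 0.0
    if u < 0.0:
        return 0.5 * (1.0 - u) * (1.0 + u) ** 3
    if u < 1.0:
        return 1.0 - 0.5 * (1.0 + u) * (1.0 - u) ** 3
    return 1.0


def g_eps_prime(z, eps):
    """Derivative of G_eps; zero outside (-eps, eps)."""
    u = z / eps
    if -1.0 < u < 0.0:
        return (1.0 + u) ** 2 * (1.0 - 2.0 * u) / eps
    if 0.0 <= u < 1.0:
        return (1.0 - u) ** 2 * (1.0 + 2.0 * u) / eps
    return 0.0


def alpha_mc(x, K, r, sigma, T, mu, delta, a, b, phi=1.0,
             n_paths=100_000, eps=5.0, seed=4):
    """Global and localized Malliavin Alpha, plus a conditional Monte Carlo reference."""
    rng = random.Random(seed)
    m, kappa = model_constants(mu, delta, a, b, T)
    sums = {"global": 0.0, "localized": 0.0, "conditional": 0.0}
    for _ in range(n_paths):
        w = rng.gauss(0.0, math.sqrt(T))
        zs = [math.exp(rng.gauss(mu, delta)) - 1.0 for _ in range(poisson(rng, m))]
        # d(log S_T)/d(phi) = sum_j Z_j / (1 + phi Z_j) - kappa m(T)
        jump_factor = sum(z / (1.0 + phi * z) for z in zs) - kappa * m
        log_mean = (math.log(x) + (r - 0.5 * sigma ** 2) * T - phi * kappa * m
                    + sum(math.log(1.0 + phi * z) for z in zs))
        s = math.exp(log_mean + sigma * w)
        payoff = 1.0 if s >= K else 0.0
        weight = jump_factor * w / (sigma * T)
        sums["global"] += payoff * weight
        sums["localized"] += ((payoff - g_eps(s - K, eps)) * weight
                              + g_eps_prime(s - K, eps) * jump_factor * s)
        # Given the jumps, P(S_T >= K) = N(d); its phi-derivative is pdf(d) * jump_factor / (sigma sqrt(T)).
        d = (log_mean - math.log(K)) / (sigma * math.sqrt(T))
        sums["conditional"] += STD_NORMAL.pdf(d) * jump_factor / (sigma * math.sqrt(T))
    disc = math.exp(-r * T)
    return {key: disc * total / n_paths for key, total in sums.items()}


if __name__ == "__main__":
    params = dict(x=100.0, K=100.0, r=0.02, sigma=0.20, T=1.0,
                  mu=-0.05, delta=0.01, a=1.0, b=1.0)  # parameters of Fig. 4
    print(alpha_mc(**params))
```

The conditional estimate has a much smaller variance than either Malliavin estimate here, but it exists only because \(\log S_T\) is conditionally Gaussian in this particular assumed model; the Malliavin weights do not need that structure.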

3.1.3 Time-Inhomogeneous Bates Model:

We consider the solution of the stochastic differential equation:

where \((W_{t}^{1},B_{t}) _{t\in [0,T]}\) is a two-dimensional correlated Brownian motion with correlation parameter \(\rho \in ]-1,1[\). The stochastic process \((S^{1}_{t}) \) is the underlying price process and \((V_{t}) \) is the square of the volatility process, which follows a CIR process (see Note 2) with initial value \(v_{0}>0\), long-run mean \(\theta \) and rate of mean reversion \( \kappa \); \(\sigma \) is referred to as the volatility of volatility.
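The model just described can be simulated with a simple Euler scheme. The sketch below is ours and, since the system itself is not reproduced here, assumes that under \(\mathbb {Q}\) \(dS^1_t=rS^1_{t}dt+\sqrt{V_t}S^1_{t}dW^1_t+S^1_{t-}\int (e^z-1)\widetilde{N}(dt,dz)\) and \(dV_t=\kappa (\theta -V_t)dt+\sigma \sqrt{V_t}dB_t\), with \(B=\rho W^1+\sqrt{1-\rho ^2}W^2\), jump intensity \(\lambda (t)=ae^{-bt}\) and Gaussian log-jumps \(z\sim N(\mu _J,\delta _J^2)\). These choices are consistent with the drift \(b^{*}\) and jump coefficient \(\varphi ^{*}\) given below but are not taken from the paper; negative variances are handled by full truncation, one common choice among several. Function names are hypothetical.

```python
import math
import random
from statistics import NormalDist


def poisson(rng, mean):
    """Knuth's multiplication method; adequate for small means."""
    limit, k, p = math.exp(-mean), 0, rng.random()
    while p > limit:
        k += 1
        p *= rng.random()
    return k


def bs_digital(s0, K, r, T, vol):
    """Black-Scholes price e^{-rT} N(d_2) of the digital payoff 1{S_T >= K}."""
    d2 = (math.log(s0 / K) + (r - 0.5 * vol ** 2) * T) / (vol * math.sqrt(T))
    return math.exp(-r * T) * NormalDist().cdf(d2)


def bates_digital_price(s0, K, r, T, v0, kappa, theta, sigma, rho,
                        a=0.0, b=1.0, mu_j=0.0, delta_j=0.0,
                        n_steps=20, n_paths=20_000, seed=5):
    """Monte Carlo digital price in the assumed time-inhomogeneous Bates model (sketch)."""
    rng = random.Random(seed)
    dt = T / n_steps
    m = a / b * (1.0 - math.exp(-b * T))             # int_0^T a e^{-bt} dt
    k_bar = math.exp(mu_j + 0.5 * delta_j ** 2) - 1.0  # E[e^z - 1], jump compensator per unit intensity
    log_k = math.log(K)
    hits = 0
    for _ in range(n_paths):
        log_s, v = math.log(s0), v0
        for _ in range(n_steps):
            v_pos = max(v, 0.0)                        # full truncation keeps sqrt(V) real
            g1, g2 = rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0)
            dw1 = math.sqrt(dt) * g1
            db = math.sqrt(dt) * (rho * g1 + math.sqrt(1.0 - rho ** 2) * g2)  # B = rho W1 + sqrt(1 - rho^2) W2
            log_s += (r - 0.5 * v_pos) * dt + math.sqrt(v_pos) * dw1
            v += kappa * (theta - v_pos) * dt + sigma * math.sqrt(v_pos) * db
        # Jumps are independent of (W1, W2), so their compensated effect on log S_T is added once.
        log_s += sum(rng.gauss(mu_j, delta_j) for _ in range(poisson(rng, m))) - k_bar * m
        if log_s >= log_k:
            hits += 1
    return math.exp(-r * T) * hits / n_paths


if __name__ == "__main__":
    print(bates_digital_price(100.0, 100.0, 0.02, 1.0, v0=0.04, kappa=2.0, theta=0.04,
                              sigma=0.3, rho=-0.5, a=1.0, b=1.0, mu_j=-0.05, delta_j=0.01))
```

With \(\sigma =0\), \(v_0=\theta \) and no jumps the variance stays constant and the price reduces to the Black–Scholes digital price, which gives a quick sanity check of the scheme.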

For all \({t\in [0,T]}\), we define

The process \((W_{t}^{2}) _{t\in [0,T]}\) is a Brownian motion which is independent of \((W_{t}^{1}) _{t\in [0,T]}\). Then, the system of stochastic differential equations can be rewritten in a matrix form

where \(S_{t}=(S^{1}_{t},V_{t}) \), \(W_{t}^{*}=(W_{t}^{1},W_{t}^{2}) ^{*}\), \(b^{*}(t,S_{t-}) =(rS^{1}_{t-},\kappa (\theta -V_{t-}) ) ^{*}\), \(\varphi ^{*} (t,S_{t-},z) =((e^{z}-1) S^{1}_{t-},0) ^{*}\) and

The inverse of \(\sigma \) is

The price of the contingent claim in this setting is expressed as:

Note that by Itô’s formula we have for all \(t\in [0,T]\)

The Rho

In the drift-perturbed process \((S_{t}^{\varepsilon }) _{t}\), the solution of the stochastic differential equation (10), we take \( \widetilde{b}^{*}(t,x) =(x_{1},0) ^{*}\) and we get

From Proposition 2.6, we have

The Delta

The first variation process is given by

where

By Proposition 2.5 we conclude that

Since \(Y_{t-}^{1,1}=\frac{S^{1}_{t-}}{x_{0}}\), taking \(a(t) = \frac{1}{T}\) we get

The Vega

We perturb the original diffusion matrix with \(\widetilde{\sigma }\) to get the perturbed process given by (11) such that

For all \(t\in [0,T]\), the processes \(Z_{t}^{\widetilde{\sigma }}\) and \(\beta _{t}^{\widetilde{\sigma }}\) are, respectively, given by

Using the chain rule (Proposition 4.12) on a sequence of continuously differentiable functions with bounded derivatives approximating \(\sqrt{V_{u}} \), together with Proposition 2.3, we obtain

Thus

Then

Consequently,

The Alpha

We consider the perturbed process

with

For all \(t\in [0,T]\), the processes \(Z_{t}^{\widetilde{\varphi }}\) and \( \beta _{t}^{\widetilde{\varphi }}\) defined above are, respectively, given by

Then

Consequently

4 Malliavin Calculus for Square Integrable Additive Processes

4.1 Additive Processes

Definition 4.1

(see Cont and Tankov [3], Definition 14.1) A stochastic process \((S_{t}) _{t\ge 0}\) on \(\mathbb {R}^{d}\) is called an additive process if it is càdlàg, satisfies \(S_{0}=0\) and has the following properties:

1. Independent increments: for every increasing sequence of times \( t_{0},\ldots , t_{n}\), the random variables \(S_{t_{0}},S_{t_{1}}-S_{t_{0}}, \ldots , S_{t_{n}}-S_{t_{n-1}}\) are independent.

2. Stochastic continuity: \(\forall \ \varepsilon >0\ \text {and}\ \forall \ t\ge 0,\ \lim _{h\rightarrow 0}\mathbb {P} [|S_{t+h}-S_{t}|\ge \varepsilon ]=0.\)

Theorem 4.2

(see Sato [15], Theorems 9.1–9.8) Let \((S_{t}) _{t\ge 0}\) be an additive process on \(\mathbb {R}^{d}\). Then \( S_{t}\) has an infinitely divisible distribution for all t. The law of \( (S_{t}) _{t\ge 0}\) is uniquely determined by its spot characteristics \( (A_{t},\mu _{t},\varGamma _{t}) _{t\ge 0}\):

where

The spot characteristics \((A_{t},\mu _{t},\varGamma _{t}) _{t\ge 0}\) satisfy the following conditions

1. For all t, \(A_{t}\) is a symmetric nonnegative definite \(d\times d\) matrix and \( \mu _{t}\) is a positive measure on \(\mathbb {R}^{d}\) satisfying \(\mu _{t}(\{0\}) =0\) and \(\int _{\mathbb {R}^{d}_0}(|z|^{2}\wedge 1) \mu _{t}(dz) <\infty \).

2. Positiveness: \(A_{0}=0\), \(\mu _{0}=0\), \(\varGamma _{0}=0\) and for all s, t such that \(s\le t\), \(A_{t}-A_{s}\) is a symmetric nonnegative definite \(d\times d\) matrix and \(\mu _{t}(B) \ge \mu _{s}(B) \) for all measurable sets \(B\in \mathscr {B}( \mathbb {R}^{d}) \).

3. Continuity: if \(s\longrightarrow t\) then \(A_{s}\longrightarrow A_{t}\), \(\varGamma _{s}\longrightarrow \varGamma _{t}\) and \(\mu _{s}(B) \longrightarrow \mu _{t}(B) \) for all \(B\in \mathscr {B}(\mathbb {R}^{d}) \) such that \(B\subset \{z:|z|\ge \varepsilon \}\) for some \(\varepsilon >0\).

Conversely, for a family \((A_{t},\mu _{t},\varGamma _{t}) _{t\ge 0}\) satisfying conditions (1), (2) and (3) above, there exists an additive process \((S_{t}) _{t\ge 0}\) with \((A_{t},\mu _{t},\varGamma _{t}) _{t\ge 0}\) as spot characteristics.

Example 1

We consider a class of spot characteristics \((A_{t},\mu _{t},\varGamma _{t}) _{t \ge 0}\) constructed in the following way:

- A continuous matrix-valued function \(\sigma : [0,T]\longrightarrow M_{d\times d}(\mathbb {R}) \) such that \(\sigma _t\) is symmetric for all \(t\in [0,T]\) and verifies \(\int _{0}^{T}\sigma ^{2}_tdt<\infty \).

- A family \((\nu _{t}) _{t\in [0,T]}\) of Lévy measures verifying \( \int _{0}^{T}\left( \int _{\mathbb {R}^{d}_0}(|z|^{2}\wedge 1) \nu _{t}(dz) \right) dt< \infty \).

- A deterministic function with finite variation \(\gamma : [0,T]\longrightarrow \mathbb {R}^d\) (e.g., a piecewise continuous function).

Then the spot characteristics \((A_{t},\mu _{t},\varGamma _{t}) _{t\ge 0}\) defined by

satisfy conditions 1, 2 and 3 and therefore define a unique additive process \((S_{t}) _{t\ge 0}\) with spot characteristics \((A_{t},\mu _{t},\varGamma _{t}) _{t\in [0,T]}\). The triplet \((\sigma _{t}^{2},\nu _{t},\gamma _{t}) _{t\in [0,T]}\) is called the local characteristics of the additive process.

Remark 4.3

Not all additive processes can be parameterized in this way, but we will assume this parametrization in terms of local characteristics in the rest of this paper. In particular, the assumptions above on the local characteristics imply that the process \((S_{t}) _{t\ge 0}\) is a semimartingale, which will allow us to apply the Itô formula.

The local characteristics of an additive process enable us to describe the structure of its sample paths: the positions and sizes of jumps of \( (S_{t}) _{t\ge 0}\) are described by a Poisson random measure on \([0,T]\times \mathbb {R}^{d}\)

with (time-inhomogeneous) intensity given by \(\nu _{t}(dz) dt\):

The compensated Poisson random measure can therefore be defined by:
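For a finite-activity example in which \(\nu _t(dz)=\lambda (t)F(dz)\) for a probability measure F (an assumption made only for this sketch), the points of the Poisson random measure can be sampled by Lewis–Shedler thinning, a standard method that is not described in the text: candidate times come from a homogeneous Poisson process with rate \(\lambda _{\max }\ge \lambda (t)\), and each candidate is kept with probability \(\lambda (t)/\lambda _{\max }\). With \(\lambda (t)=ae^{-bt}\), the intensity used in the numerical examples, the expected number of points on \([0,T]\) is \(m(T)=\frac{a}{b}(1-e^{-bT})\), so the compensated count should average to about zero. The function names are hypothetical.

```python
import math
import random


def thinned_jump_times(rate, rate_max, T, rng):
    """Jump times on [0, T] of a Poisson process with intensity rate(t) <= rate_max (thinning)."""
    times, t = [], 0.0
    while True:
        t += rng.expovariate(rate_max)          # candidate from a homogeneous process of rate rate_max
        if t > T:
            return times
        if rng.random() * rate_max <= rate(t):  # keep with probability rate(t) / rate_max
            times.append(t)


def sample_marked_points(rate, rate_max, T, mark_sampler, rng):
    """Points (t_i, z_i) of a Poisson random measure with intensity rate(t) F(dz) dt."""
    return [(t, mark_sampler(rng)) for t in thinned_jump_times(rate, rate_max, T, rng)]


if __name__ == "__main__":
    a, b, T = 1.0, 1.0, 1.0
    rate = lambda t: a * math.exp(-b * t)       # decreasing, so rate_max = a works
    rng = random.Random(0)
    counts = [len(thinned_jump_times(rate, a, T, rng)) for _ in range(50_000)]
    m = a / b * (1.0 - math.exp(-b * T))
    print("mean compensated count:", sum(counts) / len(counts) - m)
    print(sample_marked_points(rate, a, T, lambda g: g.gauss(-0.05, 0.01), rng))
```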

4.2 Isonormal Lévy Process (ILP)

Let \(\mu \) and \(\nu \) be \(\sigma \)-finite measures without atoms on the measurable spaces \((\mathrm {T},\mathscr {A}) \) and \((\mathrm {T\times X_{0}}, \mathscr {B}) \), respectively.

Define a new measure

on the measurable space \((\mathrm {T\times X},\mathscr {G}) \), where \(\mathrm {X}= \mathrm {X_0}\cup \{\varDelta \}\), \(\mathscr {G}=\sigma (\mathscr {A}\times \{\varDelta \}, \mathscr {B}) \) and \(\delta _{\varDelta }(dz) \) is the measure which gives mass one to the point \(\varDelta \).

We assume that the Hilbert space \(\mathscr {H}=\mathrm {L}^{2}(\mathrm {T\times X },\mathscr {G},\pi ) \) is separable.

Definition 4.4

We say that a stochastic process \(\mathrm {L}=\{\mathrm {L}(h), h\in \mathscr {H} \}\) defined on a complete probability space \((\varOmega ,\mathscr {F},P) \) is an isonormal Lévy process (or Lévy process on \(\mathscr {H}\)) if the following conditions are satisfied:

1. The mapping \(h\longrightarrow L(h) \) is linear.

2. \(\mathrm {E}[e^{ixL(h) }]=\exp (\Psi (x,h) ) \), where

$$\begin{aligned} \Psi (x,h) =\int _{\mathrm {T\times X}}\left( (e^{ixh(t,z) }-1-ixh(t,z) ) {\mathbf {1}}_{\mathrm {X}_{0}}(z) -\frac{1}{2}x^{2}h^{2}(t,z) \mathbf {1}_{\varDelta }(z) \right) \pi (dt,dz). \end{aligned}$$

4.3 Generalized Orthogonal Polynomials (GOP)

Denote by \(\overline{x}=(x_{1},x_{2},\ldots , x_{n},\ldots ) \) a sequence of real numbers. Define a function \(F(z,\overline{x}) \) by

If

then the series in (12) converges for all \(|z|<R(\overline{x}) \), so the function \(F(z,\overline{x}) \) is analytic for \(|z|<R(\overline{x}) \).

Consider an expansion in powers of z of the function \(F(z,\overline{x}) \):

One can easily show the following equalities:

4.4 Examples

1. If \(\overline{x}(h) =(x,\lambda , 0,\ldots , 0,\ldots ) \), then

$$\begin{aligned} F(z,\overline{x}) =\exp \left( zx-\frac{z^{2}}{2}\lambda \right) =\sum _{n=0}^{\infty }H_{n}(x,\lambda ) z^{n}, \end{aligned}$$where \(H_{n}(x,\lambda ) \) are the Hermite polynomials (Brownian case). So

$$\begin{aligned} P_{n}(x,\lambda , 0,\ldots , 0) =H_{n}(x,\lambda ). \end{aligned}$$

2. If \(\overline{x}(h) =(x-t,x,\ldots , x,\ldots ) \), then

$$\begin{aligned} F(z,\overline{x}) =(1+z) ^{x}e^{-tz}=\sum _{n=0}^{\infty }C_{n}(x,t) \frac{z^{n}}{n!}, \end{aligned}$$where \(C_{n}(x,t) \) are the Charlier polynomials (Poissonian case). So

$$\begin{aligned} n!P_{n}(x-t,x,\ldots , x) =C_{n}(x,t). \end{aligned}$$
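The definition of F is not reproduced above. The sketch below assumes \(F(z,\overline{x})=\exp \left( \sum _{k\ge 1}(-1)^{k+1}x_kz^k/k\right) \), the form consistent with both examples: it gives \(\exp (zx-z^2\lambda /2)\) for \((x,\lambda ,0,\ldots )\) and \(x\log (1+z)-tz\) in the exponent for \((x-t,x,x,\ldots )\). Under that assumption \(P_n(\overline{x})\) is the n-th Taylor coefficient of F, and the recursion \(nP_n=\sum _{k=1}^{n}kg_kP_{n-k}\) for the exponential of a power series with coefficients \(g_k\) computes it. The function name is hypothetical.

```python
def gop_coefficients(xs, n_max):
    """Return [P_0, ..., P_{n_max}] for the sequence xs (entries beyond len(xs) are taken as 0).

    Assumes F(z, x) = exp(sum_{k>=1} (-1)^{k+1} x_k z^k / k) = sum_n P_n(x) z^n.
    """
    # Coefficients g_k of the exponent, with g_0 = 0.
    g = [0.0] + [(-1) ** (k + 1) * (xs[k - 1] if k <= len(xs) else 0.0) / k
                 for k in range(1, n_max + 1)]
    p = [1.0] + [0.0] * n_max
    for n in range(1, n_max + 1):
        # Exponential of a power series: n P_n = sum_{k=1}^{n} k g_k P_{n-k}.
        p[n] = sum(k * g[k] * p[n - k] for k in range(1, n + 1)) / n
    return p


if __name__ == "__main__":
    x, lam, t = 1.3, 0.7, 0.5
    # Hermite case: P_2 = (x^2 - lam)/2, P_3 = (x^3 - 3 lam x)/6.
    print(gop_coefficients([x, lam], 3))
    # Charlier case with x = 3: 2! P_2 should equal C_2(x, t) = (x - t)^2 - x.
    xc = 3.0
    p = gop_coefficients([xc - t] + [xc] * 4, 2)
    print(2 * p[2], (xc - t) ** 2 - xc)
```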

4.5 Relationship Between Generalized Orthogonal Polynomials and Isonormal Lévy Process

For \(h\in \mathscr {H}\cap L^{\infty }(T\times X_{0},\mathscr {B},\nu ) \), let \(\overline{x}(h) =(x_{k}(h) ) _{k=1}^{\infty }\) denote the sequence of the random variables such that

Lemma 4.5

Let \(h,g\in \mathscr {H}\cap L^{\infty }(T\times X_{0},\mathscr {B},\nu ) \). Then for all \(n,m\ge 0\) we have \(P_{n}(\overline{x}(h) ), P_{m}(\overline{x} (g) ) \in L^{2}(\varOmega ) \), and

4.6 The Chaos Decomposition

Lemma 4.6

The random variables \(\{e^{L(h) }, h\in \mathscr {H}\cap L^{\infty }(T\times X_0, \mathscr {B},\nu ) \}\) form a total subset of \(L^{2}(\varOmega ,\mathscr {F} ,P) \).

For each \(n\ge 1\) we will denote by \(\mathscr {P}_n\) the closed linear subspace of \(L^{2}(\varOmega ,\mathscr {F},P) \) generated by the random variables \( \{P_{n}(\overline{x}(h) ), h\in \mathscr {H}\cap L^{\infty }(T\times X_0,\mathscr {B} ,\nu ) \}\). \(\mathscr {P}_0\) will be the set of constants. For \(n=1\), \(\mathscr { P}_1\) coincides with the set of random variables \(\{L(h),h\in \mathscr {H}\}\). We will call the space \(\mathscr {P}_n\) the chaos of order n.

Theorem 4.7

The space \(L^{2}(\varOmega ,\mathscr {F},P) \) can be decomposed into the infinite orthogonal sum of the subspaces \(\mathscr {P}_n\):

4.7 The Multiple Integral

Set \(\mathscr {G}_{0}=\left\{ A\in \mathscr {G}|\pi (A) <\infty \right\} \). For any \(m\ge 1\) we denote by \(\mathscr {E}_{m}\) the set of all linear combinations of the following functions \(f\in \mathrm {L}^{2}((T\times X) ^{m}, \mathscr {G}^{m},\pi ^{m}) \)

where \(A_{1},\ldots , A_{m}\) are pairwise disjoint sets in \(\mathscr {G}_{0}\).

The fact that \(\pi \) is a measure without atoms implies that \(\mathscr {E} _{m} \) is dense in \(\mathrm {L}^{2}((T\times X) ^{m}) \) (see, e.g., Nualart [11], pp. 8–9).

For functions of the form (13) we define the multiple integral of order m

Then, by linearity, the definition of \(I_{m}(f) \) extends to all functions \(f\in \mathscr {E} _{m}\) and, by continuity, to all functions \(f\in \mathrm {L} ^{2}((T\times X) ^{m}) \).

The following properties hold:

1. \(I_m\) is linear.

2. \(I_{m}(f) =I_{m}(\widetilde{f}) \), where \(\widetilde{f}\) denotes the symmetrization of f, defined by

$$\begin{aligned} \widetilde{f}(t_{1},x_{1},\ldots , t_{m},x_{m}) =\frac{1}{m!}\sum _{\sigma \in \mathscr {S}_{m}}f(t_{\sigma (1) },x_{\sigma (1) },\ldots , t_{\sigma (m) },x_{\sigma (m) }). \end{aligned}$$

3. $$\begin{aligned} \mathrm {E}\left[ I_{n}(f) I_{m}(g) \right] =\left\{ \begin{array}{ll} 0, &{} n\ne m, \\ m!\left\langle \widetilde{f},\widetilde{g}\right\rangle _{\mathrm {L}^{2}((T\times X) ^{m}) }, &{} n=m. \end{array} \right. \end{aligned}$$
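Symmetrization permutes the pairs \((t_i,x_i)\) jointly, never the times and marks separately. A small Python helper (the name `symmetrize` is hypothetical) makes the definition in property 2 concrete; it averages f over all \(m!\) permutations, so it is only practical for small m.

```python
from itertools import permutations
from math import factorial


def symmetrize(f, m):
    """Return the symmetrization of f, a function of m points p_i = (t_i, x_i)."""
    def f_sym(*points):
        if len(points) != m:
            raise ValueError(f"expected {m} points, got {len(points)}")
        # Average f over every reordering of the (t_i, x_i) pairs.
        total = sum(f(*(points[i] for i in perm)) for perm in permutations(range(m)))
        return total / factorial(m)
    return f_sym


if __name__ == "__main__":
    # Hypothetical kernel of two points: f((t1, x1), (t2, x2)) = t1 * x2.
    f = lambda p1, p2: p1[0] * p2[1]
    f_sym = symmetrize(f, 2)
    print(f_sym((1.0, 2.0), (3.0, 4.0)))  # (1*4 + 3*2) / 2 = 5.0
```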

4.8 Relationship Between Generalized Orthogonal Polynomials and Multiple Stochastic Integrals

Proposition 4.8

Let \(P_{n}\) be the nth generalized orthogonal polynomial and \(\overline{x} (h) =(x_{k}(h) ) _{k=1}^{\infty }\), where \(h\in \cap _{p\ge 2}L^{p}(T\times X_{0},\mathscr {B},\nu ) \cap \mathscr {H}\) and

Then it holds that

where

4.9 Expansion into a Series of Multiple Stochastic Integrals

Corollary 4.9

Any square integrable random variable \(\xi \in L^{2}(\varOmega , \mathscr {F},P) \) can be expanded into a series of multiple stochastic integrals:

Here \(f_{0}=\mathrm {E}[\xi ]\), and \(I_{0}\) is the identity mapping on the constants. Furthermore, this representation is unique provided the functions \( f_{k}\in L^{2}((T\times X) ^{k}) \) are symmetric.

4.10 The Derivative Operator

Let \(\mathscr {S}\) denote the class of smooth random variables such that a random variable \(\xi \in \mathscr {S}\) has the form

where \(f\in \mathrm {C}_{b}^{\infty }(\mathbb {R}^{n})\), \(h_{1},\ldots ,h_{n}\in \mathscr {H}\) and \(n\ge 1\). The set \(\mathscr {S}\) is dense in \(L^{p}(\varOmega ) \) for any \(p\ge 1\).

Definition 4.10

The stochastic derivative of a smooth functional of the form (15) is the \(\mathscr {H}\)–valued random variable \(D\xi =\{D_{t,x}\xi , (t,x) \in T\times X\}\) given by

We will consider \(D\xi \) as an element of \(L^{2}(T\times X \times \varOmega ) \cong L^{2}(\varOmega ;\mathscr {H})\); namely, \(D\xi \) is a random process indexed by the parameter space \(T\times X\).

1. If the measure \(\nu \) is zero or \(h_{k}(t,x) =0\), \(k=1,\ldots , n\), when \( x\ne \varDelta \), then \(D\xi \) coincides with the Malliavin derivative (see, e.g., Nualart [11], Def. 1.2.1, p. 38).

2. If the measure \(\mu \) is zero or \(h_{k}(t,x) =0\), \(k=1,\ldots , n\), when \( x=\varDelta \), then \(D\xi \) coincides with the difference operator (see, e.g., Picard [13]).

4.11 Integration by Parts Formula

Theorem 4.11

Suppose that \(\xi \) and \(\eta \) are smooth functionals and \(h\in \mathscr {H}\). Then

1. $$\begin{aligned} \mathrm {E}[\xi L(h) ]=\mathrm {E}[\left\langle D\xi ;h\right\rangle _{\mathscr {H}}]. \end{aligned}$$

2. $$\begin{aligned} \mathrm {E}[\xi \eta L(h) ]=\mathrm {E}[\eta \left\langle D\xi ;h\right\rangle _{\mathscr {H}}]+\mathrm {E}[\xi \left\langle D\eta ;h\right\rangle _{\mathscr {H}}]+\mathrm {E} [\left\langle D\eta ;h\mathbf {1}_{X_{0}}D\xi \right\rangle _{\mathscr {H}}]. \end{aligned}$$

As a consequence of the above theorem we obtain the following results:

- The expression of the derivative \(D\xi \) given in (16) does not depend on the particular representation of \(\xi \) in (15).

- The operator D is closable as an operator from \(L^2(\varOmega ) \) to \( L^2(\varOmega ;\mathscr {H}) \).

We will denote the closure of D again by D and its domain in \( L^2(\varOmega ) \) by \(\mathbb {D}^{1,2}\).
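Item 1 of Theorem 4.11 is the duality that produces the Malliavin weights of Sect. 3. It can be checked numerically in two special cases that we choose for illustration: (i) a purely Brownian setting with \(h=\mathbf {1}_{[0,T]\times \{\varDelta \}}\), where \(L(h)=W_T\), D is the Malliavin derivative and \(\langle D\xi ,h\rangle _{\mathscr {H}}=Tf^{\prime }(W_T)\) for \(\xi =f(W_T)\), so that \(\mathrm {E}[f(W_T)W_T]=T\mathrm {E}[f^{\prime }(W_T)]\); (ii) a purely Poissonian setting with unit jumps and mean m, where \(L(h)=N-m\), D is the difference operator, and the identity reads \(\mathrm {E}[f(N)(N-m)]=m\mathrm {E}[f(N+1)-f(N)]\). The sketch below evaluates both sides deterministically (trapezoidal quadrature for the Gaussian case, a truncated series for the Poisson case) rather than by simulation.

```python
import math


def gaussian_expectation(g, var, n=20_001, width=12.0):
    """E[g(W)] for W ~ N(0, var), trapezoidal rule on [-width*sd, width*sd]."""
    sd = math.sqrt(var)
    step = 2.0 * width * sd / (n - 1)
    total = 0.0
    for i in range(n):
        w = -width * sd + i * step
        weight = 0.5 if i in (0, n - 1) else 1.0
        total += weight * g(w) * math.exp(-w * w / (2.0 * var))
    return total * step / math.sqrt(2.0 * math.pi * var)


def poisson_expectation(g, mean, n_max=200):
    """E[g(N)] for N ~ Poisson(mean), by summing the probability mass function."""
    prob, total = math.exp(-mean), 0.0
    for k in range(n_max):
        total += prob * g(k)
        prob *= mean / (k + 1)
    return total


if __name__ == "__main__":
    T = 1.0
    # Brownian case: E[sin(W_T) W_T] = T E[cos(W_T)] = T exp(-T/2).
    print(gaussian_expectation(lambda w: math.sin(w) * w, T),
          T * gaussian_expectation(math.cos, T))
    # Poisson case with f(n) = 1/(1+n): E[f(N)(N - m)] = m E[f(N+1) - f(N)].
    m = 1.0 - math.exp(-1.0)
    f = lambda n: 1.0 / (1.0 + n)
    print(poisson_expectation(lambda n: f(n) * (n - m), m),
          m * poisson_expectation(lambda n: f(n + 1) - f(n), m))
```

In the Brownian case this is exactly the mechanism behind weights such as \(W_T/(x\sigma T)\): the derivative of the payoff is traded for a multiplication by \(L(h)\).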

4.12 The Chain Rule

Proposition 4.12

(See Yablonski [16], Proposition 4.8) Suppose \(F=(F_{1},F_{2},\ldots , F_{n}) \) is a random vector whose components belong to the space \(\mathbb {D}^{1,2}\). Let \(\phi \in \mathscr {C}^{1}(\mathbb {R}^{n}) \) be a function with bounded partial derivatives such that \(\phi (F) \in \mathrm {L}^{2}(\varOmega ) \). Then \(\phi (F) \in \mathbb {D}^{1,2}\) and

4.13 The Action of the Operator D via the Chaos Decomposition

Lemma 4.13

It holds that \(P_{n}(\overline{x}(h) ) \in \mathbb {D}^{1,2}\) for all \(h\in \mathscr {H}\cap L^{\infty }(T\times X_{0},\mathscr {B},\nu ) \), \(n=1,2,\ldots \) and

Proposition 4.14

Let \(\xi \in L^{2}(\varOmega , \mathscr {F},P) \) with an expansion \(\xi =\sum _{k=0}^{\infty }I_{k}(f_{k}) \) where \(f_{k}\in L^{2}((T\times X) ^{k}) \) are symmetric for all k. Then \(\xi \in \mathbb {D}^{1,2}\) if and only if

and in this case we have

and

coincides with the sum of the series (14).

4.14 The Skorohod Integral

We recall that the derivative operator D is a closed and unbounded operator defined on the dense subset \(\mathbb {D}^{1,2}\) of \(\mathrm {L} ^{2}(\varOmega ) \) with values in \(\mathrm {L}^{2}(\varOmega ;\mathscr {H}) \).

Definition 4.15

We denote by \(\delta \) the adjoint of the operator D and we call it the Skorohod integral.

The operator \(\delta \) is a closed and unbounded operator on \(\mathrm {L} ^{2}(\varOmega ;\mathscr {H}) \) with values in \(\mathrm {L}^{2}(\varOmega ) \) defined on \( Dom(\delta ) \), where \(Dom(\delta ) \) is the set of processes \(u\in \mathrm {L} ^{2}(\varOmega ;\mathscr {H}) \) such that

for all \(F\in \mathbb {D}^{1,2}\), where c is some constant depending on u.

If \(u\in Dom(\delta ) \), then \(\delta (u) \) is the element of \(\mathrm {L} ^{2}(\varOmega ) \) such that

for any \(F\in \mathbb {D}^{1,2}\).

4.15 The Behavior of \(\delta \) in Terms of the Chaos Expansion

Proposition 4.16

Let \(u\in \mathrm {L}^{2}(\varOmega ;\mathscr {H}) \) with the expansion

Then \(u\in Dom(\delta )\) if and only if the series

converges in \(\mathrm {L}^{2}(\varOmega ) \).

It follows that \(Dom(\delta )\) is the subspace of \(\mathrm {L}^{2}(\varOmega ;\mathscr {H}) \) formed by the processes that satisfy the following condition:

Note that the Skorohod integral is a linear operator with zero mean, i.e. \(\mathbb {E}\left[ \delta (u) \right] =0\) if \(u\in Dom(\delta )\). The following statements establish some properties of \(\delta \).

Proposition 4.17

Suppose that u is a Skorohod integrable process. Let \(F\in \mathbb {D}^{1,2}\) be such that \(\mathrm {E}\left[ \int _{T\times X}\left( F^{2}+(D_{t,z}F) ^{2}\mathbf {1}_{X_0}\right) u(t,z) ^{2}\pi (dt,dz) \right] <\infty \). Then it holds that

provided that one of the two sides of the equality (21) exists.

4.16 Commutativity Relationship Between the Derivative and Divergence Operators

Let \(\mathbb {L}^{1,2}\) denote the class of processes \(u\in \mathrm {L} ^{2}(T\times X\times \varOmega ) \) such that \(u(t,x) \in \mathbb {D}^{1,2}\) for almost all (t, x), and there exists a measurable version of the multiparameter process \(D_{t,x}u(s,y) \) satisfying

Proposition 4.18

Suppose that \(u\in \mathbb {L}^{1,2}\) and, for almost all \((t,z) \in T\times X\), the two-parameter process \(\left( D_{t,z}u(s,y) \right) _{(s,y) \in T\times X} \) is Skorohod integrable, and there exists a version of the process \( \left( \delta (D_{t,z}u(\cdot ,\cdot ) ) \right) _{(t,z) \in T\times X}\) which belongs to \(\mathrm {L}^{2}(T\times X\times \varOmega ) \). Then \(\delta (u) \in \mathbb {D}^{1,2}\), and we have

4.17 The Itô Stochastic Integral as a Particular Case of the Skorohod Integral

Let \(W=\{W_{t},0\le t\le T\}\) be a d-dimensional standard Brownian motion and \(\widetilde{N}\) a compensated Poisson random measure on \( [0,T]\times \mathbb {R}_{0}^d\) with (time-inhomogeneous) intensity measure \(\nu (dt,dx) =\beta _{t}(dx) dt\), where \((\beta _{t}) _{t\in [0,T]}\) is a family of Lévy measures verifying \(\int _{0}^{T}\left( \int _{ \mathbb {R}_{0}^{d}}(\Vert z\Vert ^{2}\wedge 1) \beta _{t}(dz) \right) dt<\infty \). Here \(\mathbb {R}_{0}^{d}:=\mathbb {R}^{d}\setminus \{0\}\) and, for each \(t\in [0,T]\), \(\mathscr {F}_{t}\) is the \(\sigma \)-algebra generated by the random variables

and the null sets of \(\mathscr {F}\).

We denote by \(L_{p}^{2}\) the subset of \(L^{2}(\varOmega ;\mathscr {H}) \) formed by \(( \mathscr {F}_{t}) \)–predictable processes.

Proposition 4.19

\(L_{p}^{2}\subset Dom(\delta )\), and the restriction of the operator \(\delta \) to this space coincides with the usual stochastic integral, that is

Notes

- 1.

This is to ensure that we can find solutions for the weighting functions, since finding them often requires inverting the volatility function.

- 2.

Cox, Ingersoll and Ross model. See [4].

References

[1] Bally, V., Bavouzet, M.-P., Messaoud, M.: Integration by parts formula for locally smooth laws and applications to sensitivity computations. Ann. Appl. Probab. 17(1), 33–66 (2007)

[2] Benth, F.E., Di Nunno, G., Khedher, A.: Robustness of option prices and their deltas in markets modelled by jump-diffusions. Commun. Stochast. Anal. 5(2), 285–307 (2011)

[3] Cont, R., Tankov, P.: Financial Modelling with Jump Processes. Chapman & Hall/CRC, Boca Raton (2004)

[4] Cox, J.C., Ingersoll, J.E., Ross, S.A.: A theory of the term structure of interest rates. Econometrica 53, 385–407 (1985)

[5] Davis, M.H.A., Johansson, M.P.: Malliavin Monte Carlo Greeks for jump diffusions. Stochast. Process. Appl. 116(1), 101–129 (2006)

[6] Di Nunno, G., Øksendal, B., Proske, F.: Malliavin Calculus for Lévy Processes with Applications to Finance. Springer (2008)

[7] El-Khatib, Y., Privault, N.: Computations of Greeks in a market with jumps via the Malliavin calculus. Finance Stochast. 8(2), 161–179 (2004)

[8] El-Khatib, Y., Hatemi, J.A.: On the calculation of price sensitivities with jump-diffusion structure. J. Stat. Appl. Probab. 3(1), 171–182 (2012)

[9] Fournié, E., Lasry, J.-M., Lebuchoux, J., Lions, P.-L., Touzi, N.: Applications of Malliavin calculus to Monte Carlo methods in finance. Finance Stochast. 3(4), 391–412 (1999)

[10] León, J.A., Solé, J.L., Utzet, F., Vives, J.: On Lévy processes, Malliavin calculus and market models with jumps. Finance Stochast. 6, 197–225 (2002)

[11] Nualart, D.: The Malliavin Calculus and Related Topics, 2nd edn. Springer, Berlin Heidelberg (2006)

[12] Petrou, E.: Malliavin calculus in Lévy spaces and applications to finance. Electron. J. Probab. 13(27), 852–879 (2008)

[13] Picard, J.: On the existence of smooth densities for jump processes. Probab. Theory Relat. Fields 105(4), 481–511 (1996)

[14] Protter, P.: Stochastic Integration and Differential Equations. Stochastic Modelling and Applied Probability, vol. 21. Springer, Berlin (2005)

[15] Sato, K.-I.: Lévy Processes and Infinitely Divisible Distributions. Cambridge Studies in Advanced Mathematics, vol. 68. Cambridge University Press, Cambridge (1999)

[16] Yablonski, A.: The calculus of variations for processes with independent increments. Rocky Mountain J. Math. 38, 669–701 (2008)


Copyright information

© 2016 Springer International Publishing Switzerland

About this paper

Cite this paper

Eddahbi, M., Lalaoui Ben Cherif, S.M. (2016). Sensitivity Analysis for Time-Inhomogeneous Lévy Process: A Malliavin Calculus Approach and Numerics. In: Eddahbi, M., Essaky, E., Vives, J. (eds) Statistical Methods and Applications in Insurance and Finance. CIMPA School 2013. Springer Proceedings in Mathematics & Statistics, vol 158. Springer, Cham. https://doi.org/10.1007/978-3-319-30417-5_2


DOI: https://doi.org/10.1007/978-3-319-30417-5_2


Publisher Name: Springer, Cham

Print ISBN: 978-3-319-30416-8

Online ISBN: 978-3-319-30417-5

eBook Packages: Mathematics and Statistics (R0)