Abstract

Although several measures exist for intuitionistic fuzzy sets (IFSs), many unreasonable results produced by such measures can be observed in the literature. The main aim of this paper is to present a new, reliable measure of the amount of knowledge for IFSs. First, we define a new knowledge measure for IFSs and prove some of its properties. We then present a new entropy measure for IFSs as a dual measure to the proposed knowledge measure. Next, we use some examples to illustrate that the proposed measures, though simple in concept and computation, outperform the existing measures. Finally, we use the proposed knowledge measure for IFSs to deal with the data classification problem.

1 Introduction

As a generalization of fuzzy sets, the intuitionistic fuzzy set (IFS) was introduced by Atanassov [1] to deal with the uncertainty of imperfect information. An IFS represents information by a membership degree, a non-membership degree and a hesitancy degree that expresses a lack of information, so it has been found to be more powerful than the fuzzy set (FS) in dealing with vagueness and uncertainty. Many measures [2, 4–6, 10, 13–15, 17, 23] have been proposed to evaluate IFSs. Basically, a measure on IFSs should be able to evaluate the degrees of fuzziness and intuitionism of imperfect information. Among the most interesting measures in IFS theory, the knowledge measure is an essential tool for evaluating the amount of knowledge contained in an IFS. Entropy measures and similarity measures between IFSs can be constructed from a knowledge measure of IFSs.

Entropy, first mentioned by Zadeh in 1965 [26], describes the fuzziness of an FS. To measure the degree of fuzziness of FSs, De Luca and Termini [7] introduced a non-probabilistic entropy, which has also been called a measure of the quantity of information. Kaufmann [11] proposed measuring the degree of fuzziness of a fuzzy set by a metric distance between its membership function and the membership function of its nearest crisp set. Yager [24] suggested an entropy measure expressed by the distance between a fuzzy set and its complement. In 1996, Bustince and Burillo [3] first introduced the notion that entropy on IFSs can be used to evaluate the intuitionism of an IFS. Szmidt and Kacprzyk [18] reformulated De Luca and Termini's axioms and proposed an entropy measure for IFSs based on a geometric interpretation, namely the ratio of the distance from the IFS to the nearer crisp set to its distance from the farther one. Hung and Yang [9] gave their axiomatic definition of the entropy of IFSs using the concept of probability. Vlachos and Sergiadis [21] pointed out that entropy, as a measure of fuzziness, can measure both fuzziness and intuitionism for IFSs. On the other hand, Szmidt et al. [19] emphasized that entropy alone may not be a satisfactory dual measure of knowledge from the viewpoint of decision making, and introduced a new measure of knowledge for IFSs that involves both entropy and the hesitation margin. Dengfeng and Chuntian [8] gave an axiomatic definition of similarity measures between IFSs and proposed similarity measures based on high and low membership functions. Ye [25] proposed cosine and weighted cosine similarity measures for IFSs and applied them to a small medical diagnosis problem. However, Li et al. [12] pointed out that counterintuitive examples in pattern recognition can always be found for these existing similarity measures. Many unreasonable results of other measures for IFSs are also revealed in [19, 25].

The main reason for these unreasonable results of the existing entropy and similarity measures is the lack of a reliable measure of the amount of knowledge carried by IFSs that could be used to measure and compare them. In this paper, we present a new knowledge measure for IFSs that provides reliable results. The proposed measure is evaluated in two ways: by assessing how reasonable its results are, and by comparing its accuracy with that of other measures.

2 Basic Concept of Intuitionistic Fuzzy Sets

For any element x of the universe of discourse X, an intuitionistic fuzzy set A is described by:

$$A = \left\{ \langle x, \mu_A(x), \nu_A(x) \rangle \mid x \in X \right\}, \qquad (1)$$

where \(\mu_A(x)\) denotes the degree of membership and \(\nu_A(x)\) the degree of non-membership of x to A, with \(\mu_A: X \to [0,1]\) and \(\nu_A: X \to [0,1]\) such that \(0 \le \mu_A(x) + \nu_A(x) \le 1\) for all \(x \in X\). To measure the hesitancy of membership of an element to an IFS, Atanassov introduced a third function given by:

$$\pi_A(x) = 1 - \mu_A(x) - \nu_A(x), \qquad (2)$$

which is called the intuitionistic fuzzy index or the hesitation margin. Obviously, \(0 \le \pi_A(x) \le 1\) for all \(x \in X\). If \(\pi_A(x) = 0\) for all \(x \in X\), then \(\mu_A(x) + \nu_A(x) = 1\) and the IFS A reduces to an ordinary fuzzy set. The complement of an IFS A, denoted by \(A^c\), is defined as [1]:

$$A^{c} = \left\{ \langle x, \nu_A(x), \mu_A(x) \rangle \mid x \in X \right\}. \qquad (3)$$

Many measures for IFSs have been presented, such as the well-known measures given by De Luca and Termini [7], Szmidt and Kacprzyk [18], Wang [22] and Zhang [27]. However, Szmidt and Kacprzyk [19] found some problems with the existing distance, entropy and similarity measures. To deal with these situations, Szmidt et al. [20] proposed a measure of the amount of knowledge for IFSs that considers both the entropy and the hesitation margin:

$$K(A) = \frac{1}{n}\sum_{i=1}^{n}\left[1 - \frac{1}{2}\left(E(x_i) + \pi_A(x_i)\right)\right], \qquad (4)$$

where \(E(x_i)\) is the Szmidt–Kacprzyk entropy of the element \(x_i\) [18].

However, this measure also gives unreasonable results because it assigns equal amounts of knowledge to two different IFSs. For example, for the two singleton IFSs \(A = \langle x, 0.5, 0.5 \rangle\) and \(B = \langle x, 0, 0.5 \rangle\), Eq. (4) gives \(K(A) = 0.5\) and \(K(B) = 0.5\). It can well be argued that, from the viewpoint of decision making, the amount of knowledge of \(A = \langle x, 0.5, 0.5 \rangle\) should be greater than that of \(B = \langle x, 0, 0.5 \rangle\). To overcome this drawback, we propose a new measure of the amount of knowledge carried by IFSs.
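The tie between A and B can be reproduced numerically. The sketch below assumes that Eq. (4) has the per-element form \(K = 1 - \frac{1}{2}(E + \pi)\), averaged over the universe, with E the Szmidt–Kacprzyk entropy of Eq. (10). The function names and the representation of an IFS as a list of (μ, ν) pairs are illustrative choices and are not taken from [20].

```python
def hesitation(mu: float, nu: float) -> float:
    """Hesitation margin pi = 1 - mu - nu of a single IFS element."""
    return 1.0 - mu - nu


def entropy_sk(mu: float, nu: float) -> float:
    """Szmidt-Kacprzyk entropy of one element, as in Eq. (10)."""
    pi = hesitation(mu, nu)
    # The denominator equals 1 - min(mu, nu) > 0 for any valid element.
    return (min(mu, nu) + pi) / (max(mu, nu) + pi)


def knowledge_skb(elements) -> float:
    """Assumed form of Eq. (4): mean over (mu, nu) pairs of 1 - (E + pi) / 2."""
    total = 0.0
    for mu, nu in elements:
        total += 1.0 - 0.5 * (entropy_sk(mu, nu) + hesitation(mu, nu))
    return total / len(elements)


A = [(0.5, 0.5)]  # A = <x, 0.5, 0.5>
B = [(0.0, 0.5)]  # B = <x, 0, 0.5>
print(knowledge_skb(A), knowledge_skb(B))  # both evaluate to 0.5
```

For A the entropy term is 1 and the hesitation is 0. For B both are 0.5. The two contributions therefore sum to the same value, which is exactly the drawback described above.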

3 A New Proposed Measure of Knowledge for the Intuitionistic Fuzzy Sets

The knowledge measure of an FS evaluates the distance to the most fuzzy set, i.e. the set whose membership and non-membership grades are both equal to 0.5. Motivated by this idea, we define a new measure of the amount of knowledge for IFSs as follows:

Definition 1

Let A be an IFS in the finite universe of discourse \(X = \{x_1, x_2, \ldots, x_n\}\). The new knowledge measure of A is defined as the normalized Euclidean distance from A to the most intuitionistic fuzzy set \(F = \langle x, 0, 0 \rangle\), i.e. the set with \(\pi_F(x) = 1\) for all \(x \in X\), and is expressed as:

$$K_F(A) = \frac{1}{n\sqrt{2}} \sum_{i=1}^{n} \sqrt{\mu_A^2(x_i) + \nu_A^2(x_i) + \left(1 - \pi_A(x_i)\right)^2}. \qquad (5)$$

Hence, the proposed knowledge measure evaluates the quantity of information of an IFS A as its normalized Euclidean distance from the reference level 0 of information. For example, the knowledge measure equals 1 for crisp sets (\(\mu_A(x_i) = 1\) or \(\nu_A(x_i) = 1\) for every i) and 0 for the most intuitionistic fuzzy set \(F = \langle x, 0, 0 \rangle\).
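Below is a direct transcription of Eq. (5), following the formula used in the proof of Theorem 1. Representing an IFS over a finite universe as a list of (μ, ν) pairs and the name `knowledge_f` are assumptions made for illustration. The hesitation margin is derived as π = 1 − μ − ν.

```python
import math


def knowledge_f(elements):
    """K_F of Eq. (5) for an IFS given as a list of (mu, nu) pairs."""
    total = 0.0
    for mu, nu in elements:
        if mu < 0 or nu < 0 or mu + nu > 1.0 + 1e-12:
            raise ValueError(f"not a valid IFS element: ({mu}, {nu})")
        pi = 1.0 - mu - nu  # hesitation margin
        # Euclidean distance from the point (mu, nu, pi) to F = (0, 0, 1).
        total += math.sqrt(mu**2 + nu**2 + (1.0 - pi) ** 2)
    # Divide by n and by sqrt(2), the largest possible distance to F.
    return total / (len(elements) * math.sqrt(2.0))


crisp = [(1.0, 0.0), (0.0, 1.0), (1.0, 0.0)]
most_intuitionistic = [(0.0, 0.0), (0.0, 0.0)]
print(knowledge_f(crisp))                # 1.0 (up to rounding)
print(knowledge_f(most_intuitionistic))  # 0.0
```

The two calls reproduce the boundary cases stated above: a crisp set gives 1, and the set F gives 0.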

The entropy measure for IFSs, as a dual measure of the amount of knowledge, is defined as:

$$E_F(A) = 1 - K_F(A). \qquad (6)$$

Theorem 1

Let A be an IFS in \(X = \{x_1, x_2, \ldots, x_n\}\). The proposed knowledge measure \(K_F\) of A is based on a metric (the normalized Euclidean distance to F) and satisfies the following axiomatic properties:

(P1) \(K_F(A) = 1\) iff A is a crisp set;

(P2) \(K_F(A) = 0\) iff \(\pi_A(x_i) = 1\) for all \(i = 1, \ldots, n\);

(P3) \(0 \le K_F(A) \le 1\);

(P4) \(K_F(A) = K_F(A^c)\).

Proof

(P1) Since \(\mu_A(x_i) + \nu_A(x_i) + \pi_A(x_i) = 1\), we have \(1 - \pi_A(x_i) = \mu_A(x_i) + \nu_A(x_i)\) and therefore

$$\begin{aligned} K_F(A) = 1 &\Leftrightarrow \sum_{i=1}^{n} \sqrt{\mu_A^2(x_i) + \nu_A^2(x_i) + \left(1 - \pi_A(x_i)\right)^2} = n\sqrt{2} \\ &\Leftrightarrow \sum_{i=1}^{n} \sqrt{\mu_A^2(x_i) + \nu_A^2(x_i) + \left(\mu_A(x_i) + \nu_A(x_i)\right)^2} = n\sqrt{2} \\ &\Leftrightarrow \sum_{i=1}^{n} \sqrt{\left(\mu_A(x_i) + \nu_A(x_i)\right)^2 - \mu_A(x_i)\nu_A(x_i)} = n, \end{aligned}$$

where the last step uses \(\mu^2 + \nu^2 + (\mu + \nu)^2 = 2\left[(\mu + \nu)^2 - \mu\nu\right]\). Since \(\mu_A(x_i), \nu_A(x_i) \ge 0\) and \(\mu_A(x_i) + \nu_A(x_i) \le 1\),

$$0 \le \left(\mu_A(x_i) + \nu_A(x_i)\right)^2 - \mu_A(x_i)\nu_A(x_i) = \mu_A^2(x_i) + \nu_A^2(x_i) + \mu_A(x_i)\nu_A(x_i) \le \left(\mu_A(x_i) + \nu_A(x_i)\right)^2 \le 1,$$

so every square root in the last sum lies in [0, 1], and the sum equals n iff each of them equals 1. Both upper bounds are attained iff \(\mu_A(x_i)\nu_A(x_i) = 0\) and \(\mu_A(x_i) + \nu_A(x_i) = 1\). This holds iff \((\mu_A(x_i) = 1 \text{ and } \nu_A(x_i) = 0)\) or \((\mu_A(x_i) = 0 \text{ and } \nu_A(x_i) = 1)\) for every i, i.e. iff A is a crisp set.

(P2) From Eq. (5) we have

$$\begin{aligned} K_F(A) = 0 &\Leftrightarrow \sum_{i=1}^{n} \sqrt{\mu_A^2(x_i) + \nu_A^2(x_i) + \left(1 - \pi_A(x_i)\right)^2} = 0 \\ &\Leftrightarrow \mu_A(x_i) = 0,\ \nu_A(x_i) = 0,\ \pi_A(x_i) = 1 \quad \forall i \\ &\Leftrightarrow \pi_A(x_i) = 1 \quad \forall i. \end{aligned}$$

The first equivalence holds because a sum of non-negative square roots vanishes only if every term vanishes. The last one holds because \(\pi_A(x_i) = 1\) forces \(\mu_A(x_i) = \nu_A(x_i) = 0\).

(P3) From the proof of (P1), for each i we have

$$\begin{aligned} & 0 \le \sqrt{\left(\mu_A(x_i) + \nu_A(x_i)\right)^2 - \mu_A(x_i)\nu_A(x_i)} \le 1 \\ &\quad \Leftrightarrow 0 \le \sqrt{\mu_A^2(x_i) + \nu_A^2(x_i) + \mu_A(x_i)\nu_A(x_i)} \le 1 \\ &\quad \Leftrightarrow 0 \le \frac{1}{\sqrt 2}\sqrt{2\mu_A^2(x_i) + 2\nu_A^2(x_i) + 2\mu_A(x_i)\nu_A(x_i)} \le 1 \\ &\quad \Rightarrow 0 \le \frac{1}{n\sqrt 2}\sum_{i=1}^{n}\sqrt{\mu_A^2(x_i) + \nu_A^2(x_i) + \left(\mu_A(x_i) + \nu_A(x_i)\right)^2} \le 1, \end{aligned}$$

where the last step averages over i and uses \(2\mu^2 + 2\nu^2 + 2\mu\nu = \mu^2 + \nu^2 + (\mu + \nu)^2\). Since \(1 - \pi_A(x_i) = \mu_A(x_i) + \nu_A(x_i)\), this gives \(0 \le K_F(A) \le 1\), which proves (P3).

(P4) By Eq. (3), \(A^c\) is obtained from A by swapping \(\mu_A(x_i)\) and \(\nu_A(x_i)\), so by Eq. (2) its hesitation margin is unchanged. Since the summand of Eq. (5) is symmetric in \(\mu_A\) and \(\nu_A\), combining Eqs. (5) and (3) gives

$$K_F(A) = \frac{1}{n\sqrt 2}\sum_{i=1}^{n}\sqrt{\mu_A^2(x_i) + \nu_A^2(x_i) + \left(1 - \pi_A(x_i)\right)^2} = \frac{1}{n\sqrt 2}\sum_{i=1}^{n}\sqrt{\nu_A^2(x_i) + \mu_A^2(x_i) + \left(1 - \pi_A(x_i)\right)^2} = K_F(A^c).$$

This completes the proof.
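The properties of Theorem 1 can also be spot-checked numerically. This sketch is a sanity check under random sampling and is not a substitute for the proof. The helpers `random_ifs` and `complement` are hypothetical, and the sampler is an arbitrary choice: it draws μ uniformly from [0, 1) and ν uniformly from [0, 1 − μ).

```python
import math
import random


def knowledge_f(elements):
    """K_F of Eq. (5), written with 1 - pi = mu + nu."""
    total = sum(math.sqrt(mu**2 + nu**2 + (mu + nu) ** 2) for mu, nu in elements)
    return total / (len(elements) * math.sqrt(2.0))


def random_ifs(n, rng):
    """Hypothetical sampler: n elements with mu, nu >= 0 and mu + nu <= 1."""
    elements = []
    for _ in range(n):
        mu = rng.random()
        nu = rng.random() * (1.0 - mu)
        elements.append((mu, nu))
    return elements


def complement(elements):
    """A^c swaps membership and non-membership degrees (Eq. (3))."""
    return [(nu, mu) for mu, nu in elements]


rng = random.Random(0)
for _ in range(1000):
    A = random_ifs(rng.randint(1, 10), rng)
    k = knowledge_f(A)
    assert 0.0 <= k <= 1.0 + 1e-12                      # (P3)
    assert math.isclose(k, knowledge_f(complement(A)))  # (P4)
    for mu, nu in A:
        # Identity used in the proof of (P1).
        lhs = mu**2 + nu**2 + (mu + nu) ** 2
        rhs = 2.0 * ((mu + nu) ** 2 - mu * nu)
        assert math.isclose(lhs, rhs, rel_tol=1e-9, abs_tol=1e-12)

assert math.isclose(knowledge_f([(1.0, 0.0), (0.0, 1.0)]), 1.0)  # (P1), crisp set
assert knowledge_f([(0.0, 0.0)]) == 0.0                           # (P2), pi = 1
print("all checks passed")
```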

A geometric interpretation of the proposed knowledge measure \(K_F\) for IFSs is shown in Fig. 1. The IFS A is mapped into the triangle MNF, where each element x of A corresponds to a point of the triangle with coordinates \((\mu_A(x), \nu_A(x), \pi_A(x))\) satisfying Eq. (2). Point M(1,0,0) represents elements fully belonging to A \((\mu_A(x) = 1)\). Point N(0,1,0) represents elements fully not belonging to A \((\nu_A(x) = 1)\). Point F(0,0,1) represents elements with full hesitation, i.e. we are not able to say whether or not they belong to A. In this way, the whole information about the IFS A can be described by the location of the corresponding point \((\mu_A(x), \nu_A(x), \pi_A(x))\) inside the triangle MNF, or rather by its distance to the point F(0,0,1) (distance c in Fig. 1). Clearly, the shorter the distance c, the smaller the knowledge measure (and the higher the degree of fuzziness). When c = 0, the point coincides with F, which represents elements with zero information \((K_F = 0)\), i.e. the highest degree of fuzziness.
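For a single element, the distance c to F(0, 0, 1) and \(K_F\) differ only by the normalizing factor √2. That factor is the largest possible distance, attained at M and N. The sketch below uses hypothetical helper names and computes c for the vertices and the centroid of the triangle MNF with `math.dist` (Python 3.8+).

```python
import math

F = (0.0, 0.0, 1.0)  # vertex F: full hesitation


def to_point(mu, nu):
    """Map an IFS element to its point (mu, nu, pi) in the triangle MNF."""
    return (mu, nu, 1.0 - mu - nu)


def distance_to_f(mu, nu):
    """Distance c from the element's point to F(0, 0, 1)."""
    return math.dist(to_point(mu, nu), F)


points = {"M": (1.0, 0.0), "N": (0.0, 1.0), "F": (0.0, 0.0), "centroid": (1 / 3, 1 / 3)}
for name, (mu, nu) in points.items():
    c = distance_to_f(mu, nu)
    print(f"{name}: c = {c:.4f}, K_F = c / sqrt(2) = {c / math.sqrt(2.0):.3f}")
```

M and N give c = √2 (K_F = 1), and F gives c = 0 (K_F = 0). The centroid (1/3, 1/3, 1/3) lies in between, with K_F ≈ 0.577.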

4 Comparative Examples

To test the validity and capability of the new measures, some comparative examples are presented in this section.

Example 1

Let us calculate the knowledge measure \(K_F\) for the two singleton IFSs \(A = \langle x, 0.5, 0.5 \rangle\) and \(B = \langle x, 0, 0.5 \rangle\). Applying Eq. (5), we obtain \(K_F(A) = 0.866\) and \(K_F(B) = 0.5\). Thus, \(K_F(A) > K_F(B)\) indicates that the amount of knowledge of A is greater than that of B, whereas the measure of Szmidt et al. [20] gives \(K(A) = K(B) = 0.5\). This result is consistent with intuition: A and B have the same non-membership degree, but A carries additional information about the membership degree. Therefore, the amount of knowledge of A should be greater than that of B.
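The values in Example 1 follow from Eq. (5) by direct arithmetic. The second line additionally assumes that Eq. (4) has the per-element form \(K = 1 - \frac{1}{2}(E + \pi)\), with E the Szmidt–Kacprzyk entropy of Eq. (10):

$$K_F(A) = \frac{1}{\sqrt 2}\sqrt{0.5^2 + 0.5^2 + (1 - 0)^2} = \sqrt{0.75} \approx 0.866, \qquad K_F(B) = \frac{1}{\sqrt 2}\sqrt{0^2 + 0.5^2 + (1 - 0.5)^2} = \frac{\sqrt{0.5}}{\sqrt 2} = 0.5,$$

$$K(A) = 1 - \frac{1}{2}\left(\frac{0.5 + 0}{0.5 + 0} + 0\right) = 0.5, \qquad K(B) = 1 - \frac{1}{2}\left(\frac{0 + 0.5}{0.5 + 0.5} + 0.5\right) = 0.5.$$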

Example 2

In this example we compare our knowledge-based entropy measure with some existing entropy measures. We first recall some widely used entropy measures for IFSs as follows.

(a) Bustince and Burillo [3]:

$${E}_{bb}(A) = \sum_{i=1}^{n} \pi_A(x_i); \qquad (7)$$

(b) Hung and Yang [9]:

$$E_{hc}^{\alpha}(A) = \begin{cases} \dfrac{1}{\alpha - 1}\left[1 - \left(\mu_A^{\alpha} + \nu_A^{\alpha} + \pi_A^{\alpha}\right)\right], & \alpha \ne 1,\ \alpha > 0, \\ -\left(\mu_A \ln \mu_A + \nu_A \ln \nu_A + \pi_A \ln \pi_A\right), & \alpha = 1, \end{cases} \qquad (8)$$

$$E_{r}^{\beta}(A) = \frac{1}{1 - \beta} \ln\left(\mu_A^{\beta} + \nu_A^{\beta} + \pi_A^{\beta}\right), \quad 0 < \beta < 1; \qquad (9)$$

(c) Szmidt and Kacprzyk [18]:

$$E_{sk}(A) = \frac{1}{n}\sum_{i=1}^{n} \frac{\min\left(\mu_A(x_i), \nu_A(x_i)\right) + \pi_A(x_i)}{\max\left(\mu_A(x_i), \nu_A(x_i)\right) + \pi_A(x_i)}; \qquad (10)$$

(d) Vlachos and Sergiadis [21]:

$$E_{vs1}(A) = -\frac{1}{n \ln 2}\sum_{i=1}^{n}\left[\mu_A(x_i)\ln\mu_A(x_i) + \nu_A(x_i)\ln\nu_A(x_i) - \left(1 - \pi_A(x_i)\right)\ln\left(1 - \pi_A(x_i)\right) - \pi_A(x_i)\ln 2\right], \qquad (11)$$

$$E_{vs2}(A) = \frac{1}{n}\sum_{i=1}^{n} \frac{2\mu_A(x_i)\nu_A(x_i) + \pi_A^2(x_i)}{\mu_A^2(x_i) + \nu_A^2(x_i) + \pi_A^2(x_i)}. \qquad (12)$$
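For the single-element IFSs of this example (n = 1), Eqs. (7)–(12) reduce to functions of (μ, ν). The sketch below transcribes them directly. The function names are hypothetical. The convention 0 · ln 0 = 0 is an assumption needed to evaluate (8) and (11) when a degree is zero, as it is for A3 and A4. Tiny negative values of π caused by floating-point rounding are clamped to 0.

```python
import math


def _pi(mu, nu):
    """Hesitation margin, clamped at 0 to absorb floating-point rounding."""
    return max(0.0, 1.0 - mu - nu)


def _xlogx(t):
    """t * ln(t) with the (assumed) convention 0 * ln(0) = 0."""
    return t * math.log(t) if t > 0 else 0.0


def e_bb(mu, nu):  # Eq. (7): the hesitation margin itself
    return _pi(mu, nu)


def e_hc(mu, nu, alpha):  # Eq. (8), alpha > 0
    pi = _pi(mu, nu)
    if alpha == 1:
        return -(_xlogx(mu) + _xlogx(nu) + _xlogx(pi))
    return (1.0 - (mu**alpha + nu**alpha + pi**alpha)) / (alpha - 1.0)


def e_r(mu, nu, beta):  # Eq. (9), 0 < beta < 1
    pi = _pi(mu, nu)
    return math.log(mu**beta + nu**beta + pi**beta) / (1.0 - beta)


def e_sk(mu, nu):  # Eq. (10)
    pi = _pi(mu, nu)
    return (min(mu, nu) + pi) / (max(mu, nu) + pi)


def e_vs1(mu, nu):  # Eq. (11)
    pi = _pi(mu, nu)
    s = _xlogx(mu) + _xlogx(nu) - _xlogx(1.0 - pi) - pi * math.log(2.0)
    return -s / math.log(2.0)


def e_vs2(mu, nu):  # Eq. (12)
    pi = _pi(mu, nu)
    return (2.0 * mu * nu + pi**2) / (mu**2 + nu**2 + pi**2)


A3, A4, A7 = (0.5, 0.0), (0.5, 0.5), (0.4, 0.4)
for alpha in (0.5, 1, 2, 3):
    print(f"E_hc(alpha={alpha}): A3 = {e_hc(*A3, alpha):.4f}, A4 = {e_hc(*A4, alpha):.4f}")
for f in (e_sk, e_vs1, e_vs2):
    print(f"{f.__name__}: A4 = {f(*A4):.4f}, A7 = {f(*A7):.4f}")
```

Each printed pair agrees to the shown precision. The triples (μ, ν, π) of A3 and A4 are permutations of {0.5, 0.5, 0}, which symmetric formulas such as (8) and (9) cannot tell apart. For A4 and A7, the measures (10)–(12) all evaluate to 1.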

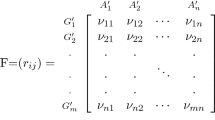

Let us consider seven single-element IFSs: \(A_1 = \langle x, 0.7, 0.2 \rangle\), \(A_2 = \langle x, 0.5, 0.3 \rangle\), \(A_3 = \langle x, 0.5, 0 \rangle\), \(A_4 = \langle x, 0.5, 0.5 \rangle\), \(A_5 = \langle x, 0.5, 0.4 \rangle\), \(A_6 = \langle x, 0.6, 0.2 \rangle\) and \(A_7 = \langle x, 0.4, 0.4 \rangle\). These IFSs are used to compare the recalled entropy measures with our new measure \(E_F\) from Eq. (6). The results for each measure are summarized in the columns of Table 1.

It can be seen that the recalled measures give some unreasonable results (in bold type in Table 1). For instance, the measures \(E_{hc}^{\alpha}\) and \(E_{r}^{\beta}\) of Hung and Yang [9] \((\alpha = 1/2, 1, 2, 3;\ \beta = 1/3, 1/2)\) cannot distinguish the two different IFSs \(A_3 = \langle x, 0.5, 0 \rangle\) and \(A_4 = \langle x, 0.5, 0.5 \rangle\), whose triples \((\mu, \nu, \pi)\) are permutations of each other. The measure \(E_{sk}\) from [18] and the measures \(E_{vs1}\) and \(E_{vs2}\) from [21] give the same entropy for the two different IFSs \(A_4 = \langle x, 0.5, 0.5 \rangle\) and \(A_7 = \langle x, 0.4, 0.4 \rangle\). In turn, \(E_{bb}\) evaluates entropy by the hesitation margin alone and ignores the fuzziness that arises from the relation between the membership and non-membership degrees. Hence it assigns equal entropy to \(A_1 = \langle x, 0.7, 0.2 \rangle\) and \(A_5 = \langle x, 0.5, 0.4 \rangle\), and likewise to \(A_2 = \langle x, 0.5, 0.3 \rangle\), \(A_6 = \langle x, 0.6, 0.2 \rangle\) and \(A_7 = \langle x, 0.4, 0.4 \rangle\). Based on the results of the new entropy measure \(E_F\) (last column), the IFSs can be ranked in order of increasing entropy as follows: \(A_4 \prec A_1 \prec A_5 \prec A_6 \prec A_2 \prec A_7 \prec A_3\). Thus, among the tested sets, the most fuzzy set is \(A_3\) and the sharpest set is \(A_4\).

Among the recalled measures, only Bustince and Burillo's measure [3], \(E_{bb}(A) = \sum_{i=1}^{n} \pi_A(x_i)\), is consistent with this order, and only up to ties, because it is a function of the hesitation margin \(\pi_A\) alone. Therefore, \(E_{bb}\) cannot capture the influence of the relationship between \(\mu_A\) and \(\nu_A\) on the degree of fuzziness. Nevertheless, it indicates the overwhelming importance of the hesitation margin in evaluating the degree of fuzziness of IFSs.
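The ranking can be reproduced under one stated assumption: that Eq. (6) is the dual \(E_F = 1 - K_F\). Any strictly decreasing function of \(K_F\) would give the same order. The dictionary of test sets mirrors the seven IFSs defined above, and the function names are hypothetical.

```python
import math


def knowledge_f(mu, nu):
    """K_F of Eq. (5) for a single-element IFS <x, mu, nu>."""
    pi = 1.0 - mu - nu
    return math.sqrt(mu**2 + nu**2 + (1.0 - pi) ** 2) / math.sqrt(2.0)


def entropy_f(mu, nu):
    """Assumption: Eq. (6) is the dual E_F = 1 - K_F."""
    return 1.0 - knowledge_f(mu, nu)


sets = {
    "A1": (0.7, 0.2), "A2": (0.5, 0.3), "A3": (0.5, 0.0), "A4": (0.5, 0.5),
    "A5": (0.5, 0.4), "A6": (0.6, 0.2), "A7": (0.4, 0.4),
}

# Rank by increasing entropy, i.e. from the sharpest to the most fuzzy set.
ranking = sorted(sets, key=lambda name: entropy_f(*sets[name]))
print(" < ".join(ranking))
for name in ranking:
    mu, nu = sets[name]
    print(f"{name}: E_F = {entropy_f(mu, nu):.3f}, E_bb = {1.0 - mu - nu:.1f}")
```

The first printed line is `A4 < A1 < A5 < A6 < A2 < A7 < A3`, which matches the ranking stated above. The E_bb column shows the ties: 0.1 for A1 and A5, and 0.2 for A2, A6 and A7.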

Example 3

We consider the data classification problem known as “Saturday mornings”, introduced by Quinlan [16] and solved using decision trees by selecting the smallest possible tree. The example is quite small, but it is a challenge for many classification and machine learning methods. The objects to be classified are described by attributes of the weather on Saturday mornings, and each attribute takes values from a set of disjoint linguistic values [16]: Outlook = {sunny, overcast, rain}, Temperature = {cold, mild, hot}, Humidity = {high, normal} and Windy = {true, false}. Each object belongs to one of the two classes C = {P, N}, where P denotes positive and N denotes negative. Quinlan pointed out that the best ranking of the attributes with respect to the amount of knowledge is \(\text{Outlook} \succ \text{Humidity} \succ \text{Windy} \succ \text{Temperature}\).

The representation of the “Saturday mornings” data in terms of IFSs is shown in Table 2. The amounts of knowledge evaluated for the particular attributes are \(K_F(\text{Outlook}) = 0.51\), \(K_F(\text{Temperature}) = 0.25\), \(K_F(\text{Humidity}) = 0.498\) and \(K_F(\text{Windy}) = 0.49\). From the viewpoint of decision making, the best attribute is the most informative one, i.e. the one with the highest amount of knowledge \(K_F\). Therefore, the ranking of the attributes indicated by \(K_F\) is \(\text{Outlook} \succ \text{Humidity} \succ \text{Windy} \succ \text{Temperature}\), which is exactly the ranking obtained by Quinlan [16] and Szmidt et al. [20].
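The selection rule itself is a sort by decreasing \(K_F\). The IFS data of Table 2 are not reproduced here, so this sketch only ranks the attribute values reported above and does not recompute them.

```python
# K_F values reported in the text for the "Saturday mornings" attributes.
reported_kf = {"Outlook": 0.51, "Temperature": 0.25, "Humidity": 0.498, "Windy": 0.49}

# The most informative attribute (highest K_F) ranks first.
order = sorted(reported_kf, key=reported_kf.get, reverse=True)
print(" > ".join(order))  # Outlook > Humidity > Windy > Temperature
```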

5 Conclusion

We have discussed some features of the measures for IFSs existing in the literature and proposed a new knowledge measure for IFSs. The proposed measures have been verified by comparison with existing measures in illustrative examples. The obtained results show that the proposed measure overcomes the drawbacks of the existing measures. The new measure captures the relationship between positive and negative information and the strong influence of a lack of information on the amount of knowledge. Finally, for the data classification problem, the proposed measure gives reasonable results that agree with those reported for other measures.

References

Atanassov, K.T.: Intuitionistic fuzzy sets. Fuzzy Sets Syst. 20, 87–96 (1986)

Boran, F.R., Akay, D.: A biparametric similarity measure on intuitionistic fuzzy sets with applications to pattern recognition. Inf. Sci. 255, 45–57 (2014)

Bustince, H., Burillo, P.: Vague sets are intuitionistic fuzzy sets. Fuzzy Sets Syst. 79(3), 403–405 (1996)

Chen, S.M.: Measures of similarity between vague sets. Fuzzy Sets Syst. 74(2), 217–223 (1995)

Chen, X., Yang, L., Wang, P., Yue, W.: A fuzzy multicriteria group decision-making method with new entropy of interval-valued intuitionistic fuzzy sets. J. Appl. Math. 1, 1–8 (2013)

Davarzani, H., Khorheh, M.A.: A novel application of intuitionistic fuzzy sets theory in medical science: Bacillus colonies recognition. Artif. Intell. Res. 2(2), 1–16 (2013)

De Luca, A., Termini, S.: A definition of a non-probabilistic entropy in the setting of fuzzy sets theory. Inform. Control 20, 301–312 (1972)

Dengfeng, L., Chuntian, C.: New similarity measures of intuitionistic fuzzy sets and application to pattern recognitions. Pattern Recogn. Lett. 23(1–3), 221–225 (2002)

Hung, W.L., Yang, M.S.: Fuzzy entropy on intuitionistic fuzzy sets. Int. J. Intell. Syst. 21(4), 443–451 (2006)

Jing, L., Min, S.: Some entropy measures of interval-valued intuitionistic fuzzy sets and their applications. Adv. Model. Optim. 15(2), 211–221 (2013)

Kaufmann, A.: Introduction to the Theory of Fuzzy Subsets. Academic Press, New York (1975)

Li, Y., Chi, Z., Yan, D.: Similarity measures between vague sets and vague entropy. J. Comput. Sci. 29, 129–132 (2002)

Liang, Z., Shi, P.: Similarity measures on intuitionistic fuzzy sets. Pattern Recogn. Lett. 24(15), 2687–2693 (2003)

Mitchell, H.B.: On the Dengfeng-Chuntian similarity measure and its application to pattern recognitions. Pattern Recogn. Lett. 24(16), 3101–3104 (2003)

Miaoying, T.: A new fuzzy similarity measure based on cotangent function for medical diagnosis. Adv. Model. Optim. 15(2), 151–156 (2013)

Quinlan, J.R.: Induction of decision trees. Mach. Learn. 1, 81–106 (1986)

Song, Y., Wang, X., Lei, L., Xue, A.: A new similarity measure between intuitionistic fuzzy sets and its application to pattern recognition. Appl. Intell. 42, 252–261 (2015)

Szmidt, E., Kacprzyk, J.: Entropy for intuitionistic fuzzy sets. Fuzzy Sets Syst. 118(3), 467–477 (2001)

Szmidt, E., Kacprzyk, J.: A new similarity measure for intuitionistic fuzzy sets: straightforward approaches may not work. In: IEEE Conference on Fuzzy Systems, pp. 481–486 (2007)

Szmidt, E., Kacprzyk, J., Bujnowski, P.: How to measure the amount of knowledge conveyed by Atanassov’s intuitionistic fuzzy sets. Inf. Sci. 257, 276–285 (2014)

Vlachos, I.K., Sergiadis, G.D.: Intuitionistic fuzzy information-application to pattern recognition. Pattern Recogn. Lett. 28(2), 197–206 (2007)

Wang, H.: Fuzzy entropy and similarity measure for intuitionistic fuzzy sets. In: International Conference on Mechanical Engineering and Automation, vol. 10, pp. 84–89 (2012)

Xu, Z., Liao, H.: Intuitionistic fuzzy analytic hierarchy process. IEEE Trans. Fuzzy Syst. 22(4), 749–761 (2014)

Yager, R.R.: On the measure of fuzziness and negation, part I: membership in unit interval. Int. J. Gen. Syst. 5(4), 221–229 (1979)

Ye, J.: Cosine similarity measures for intuitionistic fuzzy sets and their applications. Math. Comput. Model. 53(1–2), 91–97 (2011)

Zadeh, L.A.: Fuzzy sets. Inf. Control 8(3), 338–353 (1965)

Zhang, H.: Entropy for intuitionistic fuzzy sets based on distance and intuitionistic index. Int. J. Uncertainty Fuzziness Knowl. Based Syst. 21(1), 139–155 (2013)

Copyright information

© 2016 Springer International Publishing Switzerland

About this paper

Cite this paper

Nguyen, H. (2016). A New Knowledge Measure of Information Carried by Intuitionistic Fuzzy Sets and Application in Data Classification Problem. In: Wilimowska, Z., Borzemski, L., Grzech, A., Świątek, J. (eds) Information Systems Architecture and Technology: Proceedings of 36th International Conference on Information Systems Architecture and Technology – ISAT 2015 – Part IV. Advances in Intelligent Systems and Computing, vol 432. Springer, Cham. https://doi.org/10.1007/978-3-319-28567-2_19

DOI: https://doi.org/10.1007/978-3-319-28567-2_19

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-28565-8

Online ISBN: 978-3-319-28567-2

eBook Packages: Engineering, Engineering (R0)