Abstract

This paper presents a novel sufficient condition for the existence, uniqueness and global robust asymptotic stability of the equilibrium point for a class of delayed neural networks, derived by using the homeomorphic mapping theorem and Lyapunov stability theory. An important feature of the result is its low computational complexity: the condition can be verified by checking well-known properties of certain classes of matrices.

1 Introduction

In recent years, dynamical neural networks have been widely used to solve various classes of engineering problems such as image and signal processing, associative memory, pattern recognition, parallel computation, control and optimization. In such applications, the equilibrium and stability properties of the network are of great importance to its design. It is known that in the VLSI implementation of neural networks, time delays are unavoidably encountered during the processing and transmission of signals, which may affect the dynamics of the network. Deviations in the network parameters may also affect the stability properties. Therefore, both time delays and parameter uncertainties must be taken into account when studying the stability of neural networks, which leads to the problem of robust stability of delayed neural networks. Recently, many conditions for global robust stability of delayed neural networks have been reported [1–19]. In this paper, we present a new sufficient condition for the global robust asymptotic stability of neural networks with multiple time delays.

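Consider the following neural network model:

\[
\dot{x}_{i}(t)=-c_{i}x_{i}(t)+\sum_{j=1}^{n}a_{ij}f_{j}(x_{j}(t))+\sum_{j=1}^{n}b_{ij}f_{j}(x_{j}(t-\tau_{ij}))+u_{i},\quad i=1,2,...,n \tag{1}
\]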

where n is the number of neurons, \({x}_{i}(t)\) denotes the state of neuron i at time t, \({f_i}({\cdot })\) denotes the activation function of neuron i, \(a_{ij}\) and \(b_{ij}\) denote the connection strengths between neurons j and i at times t and \(t-{\tau _{ij}}\), respectively, \({\tau _{ij}}\) represents the time delays, \(u_i\) is the constant input to neuron i, and \({c}_{i}\) is the charging rate of neuron i.
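To make the roles of these parameters concrete, the following is a minimal simulation sketch. It assumes the model has the standard additive form \(\dot{x}_i(t)=-c_ix_i(t)+\sum_j a_{ij}f_j(x_j(t))+\sum_j b_{ij}f_j(x_j(t-\tau_{ij}))+u_i\), which matches the symbol descriptions above. The function name `simulate`, the forward-Euler scheme, the constant initial history, the shared activation `tanh` and all numerical values are illustrative assumptions, not taken from the paper.

```python
import math


def simulate(c, A, B, tau, u, f, x0, dt=0.01, steps=5000):
    """Forward-Euler integration of the assumed model
        dx_i/dt = -c_i x_i(t) + sum_j a_ij f(x_j(t))
                  + sum_j b_ij f(x_j(t - tau_ij)) + u_i
    with the constant initial history x(t) = x0 for t <= 0.
    Returns the list of states; entry k is the state at time k*dt."""
    n = len(c)
    # Each delay tau_ij expressed as a whole number of time steps.
    lag = [[int(round(tau[i][j] / dt)) for j in range(n)] for i in range(n)]
    history = [list(x0)]
    for k in range(steps):
        x = history[k]
        nxt = []
        for i in range(n):
            dx = -c[i] * x[i] + u[i]
            for j in range(n):
                # Before t = 0 the state is held at x0 (index clamped to 0).
                delayed = history[max(k - lag[i][j], 0)][j]
                dx += A[i][j] * f(x[j]) + B[i][j] * f(delayed)
            nxt.append(x[i] + dt * dx)
        history.append(nxt)
    return history


# Hypothetical two-neuron example (all values are assumptions).
c = [3.0, 3.0]
A = [[0.5, -0.3], [0.2, 0.4]]
B = [[0.3, 0.1], [-0.2, 0.3]]
tau = [[0.5, 1.0], [0.8, 0.3]]
u = [1.0, -1.0]

traj_1 = simulate(c, A, B, tau, u, math.tanh, x0=[2.0, -2.0])
traj_2 = simulate(c, A, B, tau, u, math.tanh, x0=[-5.0, 4.0])
print(traj_1[-1], traj_2[-1])
```

For these particular values the two trajectories end at the same point, which is the behaviour a globally stable network should show regardless of the initial state. This is only a numerical illustration for one parameter choice, not a check of the paper's condition.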

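The parameters \(a_{ij}\), \(b_{ij}\) and \({c}_{i}\) are assumed to satisfy the conditions

\[
\begin{aligned}
C_{I}&:=\{C=\mathrm{diag}(c_{i}):\ 0<\underline{c}_{i}\le c_{i}\le \overline{c}_{i},\ i=1,2,...,n\},\\
A_{I}&:=\{A=(a_{ij})_{n\times n}:\ \underline{a}_{ij}\le a_{ij}\le \overline{a}_{ij},\ i,j=1,2,...,n\},\\
B_{I}&:=\{B=(b_{ij})_{n\times n}:\ \underline{b}_{ij}\le b_{ij}\le \overline{b}_{ij},\ i,j=1,2,...,n\}.
\end{aligned}
\tag{2}
\]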

The activation functions \(f_i\) are assumed to satisfy the condition:

\[
|f_{i}(x)-f_{i}(y)|\le \ell_{i}|x-y|,\quad i=1,2,...,n,\quad \forall x,y\in R,\ x\ne y
\]

where \(\ell _i>0\) denotes a constant. This class of functions is denoted by \(f\in \mathcal{L}\).
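As a small illustration of this function class, the sketch below estimates a Lipschitz constant numerically from difference quotients. The helper name `lipschitz_estimate`, the sampling interval and the choice of `tanh` as the activation are assumptions made for the example. A sampled estimate is only a lower bound on the true constant, so it can suggest a value of \(\ell_i\) but cannot prove it.

```python
import math


def lipschitz_estimate(f, lo=-5.0, hi=5.0, samples=2001):
    """Largest difference quotient |f(b) - f(a)| / (b - a) over adjacent
    points of a uniform grid on [lo, hi]. This is a lower bound on the
    Lipschitz constant of f on that interval, not a certificate."""
    step = (hi - lo) / (samples - 1)
    xs = [lo + k * step for k in range(samples)]
    return max(abs(f(b) - f(a)) / (b - a) for a, b in zip(xs, xs[1:]))


ell = lipschitz_estimate(math.tanh)
print(ell)  # slightly below 1, consistent with |tanh'(x)| <= 1
```

For `tanh` the derivative is bounded by 1, so \(\ell_i=1\) is a valid constant and the sampled estimate approaches it from below.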

The following lemma will play an important role in the proofs:

Lemma 1

[3]: Let A be any real matrix defined by

Let \(x =(x_{1},x_{2}, ..., x_{n})^{T} \) and \(y =(y_{1},y_{2}, ..., y_{n})^{T} \). Then, we have

where \(\beta \) is any positive constant, and

with \(\hat{a}_{ij}=\max \{|\underline{a}_{ij}|, |\overline{a}_{ij}|\},\ i,j=1,2,...,n\).
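One way to see where a bound of this type can come from is the short derivation below. It is a sketch under the assumption that the lemma bounds the bilinear form \(2x^{T}Ay\) for a matrix A whose entries satisfy \(|a_{ij}|\le \hat{a}_{ij}\), with \(p_i=\sum_{j=1}^{n}(\hat{a}_{ji}\sum_{k=1}^{n}\hat{a}_{jk})\); the exact statement in [3] may differ.

```latex
\begin{aligned}
2x^{T}Ay
  &\le \beta\, x^{T}x + \frac{1}{\beta}\,(Ay)^{T}(Ay)
  && \text{since } \Big\|\sqrt{\beta}\,x-\tfrac{1}{\sqrt{\beta}}Ay\Big\|_2^{2}\ge 0, \\
(Ay)^{T}(Ay)
  &= \sum_{j=1}^{n}\Big(\sum_{i=1}^{n}a_{ji}y_{i}\Big)^{2}
   \le \sum_{j=1}^{n}\Big(\sum_{i=1}^{n}\hat{a}_{ji}|y_{i}|\Big)^{2}
  && \text{since } |a_{ji}|\le \hat{a}_{ji}, \\
  &\le \sum_{j=1}^{n}\Big(\sum_{k=1}^{n}\hat{a}_{jk}\Big)
        \Big(\sum_{i=1}^{n}\hat{a}_{ji}\,y_{i}^{2}\Big)
  && \text{by the Cauchy-Schwarz inequality}, \\
  &= \sum_{i=1}^{n}p_{i}\,y_{i}^{2}.
\end{aligned}
```

Combining the first and last lines gives \(2x^{T}Ay\le \beta \sum_{i}x_i^{2}+\frac{1}{\beta}\sum_{i}p_i y_i^{2}\) for any \(\beta>0\), a bound that depends on A only through the entrywise quantities \(\hat{a}_{ij}\).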

2 Global Robust Stability Analysis

In this section, we present the following result:

Theorem 1

For the neural system (1), let the network parameters satisfy (2) and \(f\in \mathcal{L}\). Then, the neural network model (1) is globally robustly asymptotically stable if there exist positive constants \(\alpha \) and \(\beta \) such that

where \(p_i=\sum _{j=1}^{n}\big(\hat{a}_{ji}\sum _{k=1}^{n}\hat{a}_{jk}\big),\ i=1,2,...,n\), \(\hat{a}_{ij}=\max \{|\underline{a}_{ij}|, |\overline{a}_{ij}|\}\) and \(\hat{b}_{ij}=\max \{|\underline{b}_{ij}|, |\overline{b}_{ij}|\}\), \(i,j=1,2,...,n\).
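The quantities \(\hat{a}_{ij}\) and \(p_i\) are cheap to evaluate, which is what keeps the verification cost low. The sketch below computes them from interval bounds; the function names `abs_bound` and `p_vector` and the numerical bounds are hypothetical values chosen for illustration.

```python
def abs_bound(lower, upper):
    """Entrywise max(|lower_ij|, |upper_ij|), i.e. the matrix of a_hat_ij."""
    return [[max(abs(lo), abs(hi)) for lo, hi in zip(row_lo, row_hi)]
            for row_lo, row_hi in zip(lower, upper)]


def p_vector(a_hat):
    """p_i = sum_j a_hat[j][i] * (sum_k a_hat[j][k])."""
    n = len(a_hat)
    row_sums = [sum(row) for row in a_hat]
    return [sum(a_hat[j][i] * row_sums[j] for j in range(n)) for i in range(n)]


# Hypothetical interval bounds for a two-neuron network.
A_lower = [[-1.0, 0.5], [-0.2, -0.8]]
A_upper = [[0.5, 2.0], [0.1, -0.3]]

a_hat = abs_bound(A_lower, A_upper)  # [[1.0, 2.0], [0.2, 0.8]]
p = p_vector(a_hat)                  # approximately [3.2, 6.8]
print(a_hat, p)
```

Here the row sums of `a_hat` are 3.0 and 1.0, so \(p_1=1.0\cdot 3.0+0.2\cdot 1.0\) and \(p_2=2.0\cdot 3.0+0.8\cdot 1.0\). The same helper applied to the bounds on B gives \(\hat{b}_{ij}\).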

Proof

In order to prove the existence and uniqueness of the equilibrium point of system (1), we consider the following mapping associated with system (1):

\[
H(x)=-Cx+Af(x)+Bf(x)+u \tag{3}
\]

Clearly, if \(x^*\) is an equilibrium point of (1), then \(x^*\) satisfies the equilibrium equation of (1):

\[
-Cx^{*}+Af(x^{*})+Bf(x^{*})+u=0
\]

Hence, every solution of \(H(x)=0\) is an equilibrium point of (1). Therefore, for the system defined by (1), there exists a unique equilibrium point for every input vector u if H(x) is a homeomorphism of \(R^n\). Now, let x, \(y\in R^n\) be two vectors such that \(x\ne y\). For H(x) defined by (3), we can write

\[
H(x)-H(y)=-C(x-y)+A(f(x)-f(y))+B(f(x)-f(y)) \tag{4}
\]

For \(f\in \mathcal{L}\), first consider the case where \(x\ne y\) and \({f}({x})-{f}({y})=0\). In this case, we have

\[
H(x)-H(y)=-C(x-y)
\]

from which \(x-y\ne 0\) implies that \({H}({x})\ne {H}({y})\) since C is a positive diagonal matrix. Now consider the case where \(x-y\ne 0\) and \({f}({x})-{f}({y})\ne 0\). In this case, multiplying both sides of (4) by \(2(x-y)^{T}\) results in

\[
2(x-y)^{T}(H(x)-H(y))=-2(x-y)^{T}C(x-y)+2(x-y)^{T}A(f(x)-f(y))+2(x-y)^{T}B(f(x)-f(y)) \tag{5}
\]

We first note the following inequality:

For any positive constant \(\beta \), we can also write

For \(f\in \mathcal{L}\), from Lemma 1, we can write

Hence, in the light of (6)–(8), (5) takes the form:

which is equivalent to

where \(\varepsilon _m=\min \{\varepsilon _i\},\ i=1,2,...,n\). Let \(x-y\ne 0\) and \({\varepsilon _m}>0\). Then,

from which we can conclude that \({H}({x})\ne {H}({y})\) for all \(x\ne y\). In order to show that \(||{H}({x})||\rightarrow \infty \) as \(||{x}||\rightarrow \infty \), we let \(y=0\) in (9), which yields

from which it follows that \(||H(x)-H(0)||_{1} \ge {{\varepsilon _m}}{||x||_2}\). Using the triangle inequality \(||H(x)-H(0)||_{1}\le ||H(x)||_{1}+||H(0)||_{1}\), we obtain \(||H(x)||_{1}\ge {{\varepsilon _m}}{||x||_2}-||H(0)||_{1}\). Since \(||H(0)||_{1}\) is finite, it follows that \(||{H}({x})||\rightarrow \infty \) as \(||{x}||\rightarrow \infty \). Together with the injectivity shown above, this establishes that H(x) is a homeomorphism of \(R^n\), which completes the proof of the existence and uniqueness of the equilibrium point of (1).
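The existence argument is not constructive, but an equilibrium can be located numerically for a given parameter set. The sketch below assumes the mapping has the form \(H(x)=-Cx+Af(x)+Bf(x)+u\), which is what the equilibrium equation of (1) implies, and uses the fixed-point iteration \(x\leftarrow C^{-1}((A+B)f(x)+u)\). This iteration is an assumption of the example, not the paper's method: it converges when the iterated map is a contraction, which holds for the hypothetical values below but is not guaranteed by the theorem's condition in general.

```python
import math


def H(x, c, A, B, u, f):
    """Assumed mapping H(x) = -C x + (A + B) f(x) + u, with C = diag(c)."""
    n = len(x)
    fx = [f(v) for v in x]
    return [-c[i] * x[i]
            + sum((A[i][j] + B[i][j]) * fx[j] for j in range(n))
            + u[i]
            for i in range(n)]


def find_equilibrium(c, A, B, u, f, iters=200):
    """Fixed-point iteration x <- C^{-1} ((A + B) f(x) + u), starting at 0."""
    n = len(c)
    x = [0.0] * n
    for _ in range(iters):
        fx = [f(v) for v in x]
        x = [(sum((A[i][j] + B[i][j]) * fx[j] for j in range(n)) + u[i]) / c[i]
             for i in range(n)]
    return x


# Hypothetical two-neuron parameters (assumptions for illustration).
c = [3.0, 3.0]
A = [[0.5, -0.3], [0.2, 0.4]]
B = [[0.3, 0.1], [-0.2, 0.3]]
u = [1.0, -1.0]

x_star = find_equilibrium(c, A, B, u, math.tanh)
residual = H(x_star, c, A, B, u, math.tanh)
print(x_star, residual)
```

A residual close to zero confirms that `x_star` solves \(H(x)=0\) for this example. Uniqueness is what the homeomorphism argument adds; the iteration alone only exhibits one solution.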

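We will now prove the global asymptotic stability of the equilibrium point of system (1). We first shift the equilibrium point \(x^*\) of system (1) to the origin. The transformation \({z}_{i}(\cdot )={x}_{i}(\cdot )-{x_i^*},\ i=1,2,...,n\), puts system (1) in the form:

\[
\dot{z}_{i}(t)=-c_{i}z_{i}(t)+\sum_{j=1}^{n}a_{ij}g_{j}(z_{j}(t))+\sum_{j=1}^{n}b_{ij}g_{j}(z_{j}(t-\tau_{ij})),\quad i=1,2,...,n \tag{10}
\]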

where \( {g_i}({z}_{i}(\cdot ))={f_i}({z}_{i}(\cdot )+{x_i^*})-{f_i}({x_i^*})\). Note that \(f\in \mathcal{L}\) implies that \(g\in \mathcal{L}\) with

\[
|g_{i}(z_{i})|\le \ell_{i}|z_{i}|,\quad i=1,2,...,n
\]

Since \(z(t)\rightarrow 0\) implies that \(x(t)\rightarrow x^*\), the asymptotic stability of \(z(t)=0\) is equivalent to that of \(x^*\). In order to prove the global asymptotic stability of \(z(t)=0\), we will employ the following positive definite Lyapunov functional:

where \(\alpha \) and \(\gamma \) are some positive constants. The time derivative of the functional along the trajectories of system (10) is obtained as follows

We have

For any positive constant \(\beta \), we can write

From Lemma 1, we obtain:

Since \( |{g_i}{({z}_{i}(t))}|{\le }{\ell _i}|{z}_{i}(t)|,{\,\,}({i=1,2,...,n}) \), (14) can be written as

We also note that

where \(\alpha \) is a positive constant. Using (12), (15) and (16) in (11), we obtain

which can be written as

In (17), \({\gamma }<{{\varepsilon _m} \over n}\) implies that \(\dot{V}({z}(t))\) is negative definite for all \({z}(t)\ne 0\). Now let \({z}(t)=0\). Then, \(\dot{V}({z}(t))\) is of the form:

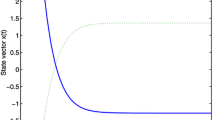

in which \(\dot{V}({z}(t))<0\) if there exists at least one nonzero \({z_j}(t-\tau _{ij})\). This implies that \(\dot{V}({z}(t))=0\) if and only if \({z}(t)=0\) and \({z_j}(t-\tau _{ij})=0\) for all i, j, and \(\dot{V}({z}(t))<0\) otherwise. Also note that V(z(t)) is radially unbounded since \(V({z}(t))\rightarrow \infty \) as \(||{z}(t)||\rightarrow \infty \). Hence, the origin of system (10), or equivalently the equilibrium point of system (1), is globally asymptotically stable.

3 Conclusions

By employing the homeomorphic mapping theorem and the Lyapunov stability theorem, we have derived a new result for the existence, uniqueness and global robust stability of the equilibrium point for neural networks with multiple constant time delays and Lipschitz activation functions. The key contribution of this paper is a new bound involving the upper bounds on the absolute values of the elements of the interconnection matrix, which is given in Lemma 1. The obtained condition is independent of the delay parameters and relates the network parameters of the system to one another in a new way.

References

1. Bao, G., Wen, S., Zeng, Z.: Robust stability analysis of interval fuzzy Cohen-Grossberg neural networks with piecewise constant argument of generalized type. Neural Netw. 33, 32–41 (2012)

2. Deng, F., Hua, M., Liu, X., Peng, Y., Fei, J.: Robust delay-dependent exponential stability for uncertain stochastic neural networks with mixed delays. Neurocomputing 74(10), 1503–1509 (2011)

3. Arik, S.: An improved robust stability result for uncertain neural networks with multiple time delays. Neural Netw. 54, 1–10 (2014)

4. Faydasicok, O., Arik, S.: A new upper bound for the norm of interval matrices with application to robust stability analysis of delayed neural networks. Neural Netw. 44, 64–71 (2013)

5. Guo, Z., Huang, L.: LMI conditions for global robust stability of delayed neural networks with discontinuous neuron activations. Appl. Math. Comput. 215(3), 889–900 (2009)

6. Huang, T.: Robust stability of delayed fuzzy Cohen-Grossberg neural networks. Comput. Math. Appl. 61(8), 2247–2250 (2011)

7. Kao, Y.K., Guo, J.F., Wang, C.H., Sun, X.Q.: Delay-dependent robust exponential stability of Markovian jumping reaction-diffusion Cohen-Grossberg neural networks with mixed delays. J. Franklin Inst. 349(6), 1972–1988 (2012)

8. Kwon, O.M., Park, J.H.: New delay-dependent robust stability criterion for uncertain neural networks with time-varying delays. Appl. Math. Comput. 205(1), 417–427 (2008)

9. Liao, X.F., Wong, K.W., Wu, Z., Chen, G.: Novel robust stability for interval-delayed Hopfield neural networks. IEEE Trans. Circuits Syst. I 48(11), 1355–1359 (2001)

10. Luo, M., Zhong, S., Wang, R., Kang, W.: Robust stability analysis for discrete-time stochastic neural networks systems with time-varying delays. Appl. Math. Comput. 209(2), 305–313 (2009)

11. Pan, L., Cao, J.: Robust stability for uncertain stochastic neural network with delay and impulses. Neurocomputing 94(1), 102–110 (2012)

12. Shen, T., Zhang, Y.: Improved global robust stability criteria for delayed neural networks. IEEE Trans. Circuits Syst. II: Express Briefs 54(8), 715–719 (2007)

13. Wang, Z., Liu, Y., Liu, X., Shi, Y.: Robust state estimation for discrete-time stochastic neural networks with probabilistic measurement delays. Neurocomputing 74(1–3), 256–264 (2010)

14. Wang, Z., Zhang, H., Yu, W.: Robust exponential stability analysis of neural networks with multiple time delays. Neurocomputing 70(13–15), 2534–2543 (2007)

15. Yang, R., Gao, H., Shi, P.: Novel robust stability criteria for stochastic Hopfield neural networks with time delays. IEEE Trans. Syst. Man Cybern. Part B: Cybern. 39(2), 467–474 (2009)

16. Zhang, H., Wang, Z., Liu, D.: Robust stability analysis for interval Cohen-Grossberg neural networks with unknown time-varying delays. IEEE Trans. Neural Netw. 19(11), 1942–1955 (2008)

17. Zhang, Z., Zhou, D.: Global robust exponential stability for second-order Cohen-Grossberg neural networks with multiple delays. Neurocomputing 73(1–3), 213–218 (2009)

18. Zheng, M., Fei, M., Li, Y.: Improved stability criteria for uncertain delayed neural networks. Neurocomputing 98(3), 34–39 (2012)

19. Zhu, S., Shen, Y.: Robustness analysis for connection weight matrices of global exponential stability of stochastic recurrent neural networks. Neural Netw. 38, 17–22 (2013)

© 2015 Springer International Publishing Switzerland

About this paper

Cite this paper

Ozcan, N., Yucel, E., Arik, S. (2015). A Novel Condition for Robust Stability of Delayed Neural Networks. In: Arik, S., Huang, T., Lai, W., Liu, Q. (eds) Neural Information Processing. ICONIP 2015. Lecture Notes in Computer Science(), vol 9491. Springer, Cham. https://doi.org/10.1007/978-3-319-26555-1_31

DOI: https://doi.org/10.1007/978-3-319-26555-1_31

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-26554-4

Online ISBN: 978-3-319-26555-1

eBook Packages: Computer Science (R0)