In the wake of Euclid’s proof of the infinitude of the primes, the question of how the primes were distributed among the integers became central, a question that has intrigued and challenged mathematicians ever since. The sieve of Eratosthenes provided a simple but very inefficient means of identifying which integers were prime, but attempts to find explicit, closed formulas for the nth prime, or for the number π(x) of primes less than or equal to a given number x, proved fruitless. Eventually extensive tables of integers and their least factors were compiled, detailed examination of which suggested that the apparently unpredictable occurrence of primes in the sequence of integers nonetheless exhibited some statistical regularity. In particular, in 1792 Gauss asserted that for large values of x, π(x) was approximately given by \(\dfrac{x} {\ln x}\); six years later, Legendre suggested \(\dfrac{x} {\ln x - 1}\) and (wrongly) \(\dfrac{x} {\ln x - 1.0836}\) as better approximations; and in 1849, in a letter to his student Encke (translated in the appendix to Goldstein 1973), Gauss mentioned his apparently long-held belief that the logarithmic integral
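
$$\displaystyle{ \text{li}(x) =\int _{2}^{x} \dfrac{\mathit{dt}} {\ln t} }$$

gave a still better approximation. Using the notation f(x) ∼ g(x) to denote the equivalence relation defined by \(\lim _{x\rightarrow \infty }\dfrac{f(x)} {g(x)} = 1\), those conjectures may be expressed in asymptotic form by the statements

$$\displaystyle{ \pi (x) \sim \dfrac{x} {\ln x},\qquad \pi (x) \sim \dfrac{x} {\ln x - 1},\qquad \pi (x) \sim \text{li}(x). }$$(PNT)
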
Clearly \(\dfrac{x} {\ln x} \sim \dfrac{x} {\ln x - 1}\), and integration by parts can be used to show that \(\dfrac{x} {\ln x} \sim \text{li}(x)\) as well. For
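
$$\displaystyle{ \text{li}(x) = \dfrac{x} {\ln x} -\dfrac{2} {\ln 2} +\int _{2}^{x} \dfrac{\mathit{dt}} {(\ln t)^{2}} = \dfrac{x} {\ln x} +\int _{e}^{x} \dfrac{\mathit{dt}} {(\ln t)^{2}} + K, }$$
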
where K is a constant. Then since \(1/(\ln t)^{2}\) is less than or equal to 1 on the interval \([e,\sqrt{x}]\) and less than or equal to \(1/(\ln \,\sqrt{x})^{2} = 4/(\ln \,x)^{2}\) on the interval \([\sqrt{x},x]\),
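
$$\displaystyle{ \int _{e}^{x} \dfrac{\mathit{dt}} {(\ln t)^{2}} \leq \int _{e}^{\sqrt{x}}\mathit{dt} +\int _{\sqrt{x}}^{x} \dfrac{4\,\mathit{dt}} {(\ln x)^{2}} <\sqrt{x} + \dfrac{4x} {(\ln x)^{2}}. }$$
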
But also, \(\text{li}(x) \geq (x - 2)/\ln \,x\), since 1∕ln t is decreasing on [2, x]. Hence
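
$$\displaystyle{ \dfrac{x - 2} {x} \leq \dfrac{\ln x} {x} \,\text{li}(x) < 1 + \dfrac{\ln x} {\sqrt{x}} + \dfrac{4} {\ln x} + \dfrac{K\ln x} {x}. }$$
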
It follows immediately by L’Hopital’s rule that \(\lim _{x\rightarrow \infty }\dfrac{\ln x} {x}\text{li}(x) = 1\); that is, \(\text{li}(x) \sim \dfrac{x} {\ln x}\).

The three statements (PNT) are thus logically equivalent. Ipso facto, however, they do not capture the full strength of the conjectures made by Legendre and Gauss, since they do not indicate how accurately each of the three formulas approximates π(x). For that, estimates of the absolute or relative errors involved in those approximations are also needed.
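A quick numerical comparison makes the point about accuracy concrete. The Python sketch below (function names are illustrative, not from the text) counts primes with the sieve of Eratosthenes and prints each approximation next to π(x), including Legendre's variant with the constant 1.0836. It assumes \(\text{li}(x) =\int _{2}^{x}\mathit{dt}/\ln t\), consistent with the bound \(\text{li}(x) \geq (x - 2)/\ln x\) used above, and evaluates it with Simpson's rule after substituting \(t = e^{u}\).

```python
import math

def prime_flags(n):
    """Sieve of Eratosthenes: flags[k] == 1 exactly when k is prime (0 <= k <= n)."""
    flags = bytearray([1]) * (n + 1)
    flags[0] = flags[1] = 0
    for i in range(2, math.isqrt(n) + 1):
        if flags[i]:
            # cross off the multiples of i, starting at i*i
            flags[i * i :: i] = bytearray(len(range(i * i, n + 1, i)))
    return flags

def li(x, steps=10_000):
    """li(x) = integral of dt/ln t from 2 to x, rewritten with t = e^u as the
    integral of e^u/u over [ln 2, ln x] and evaluated by Simpson's rule."""
    a, b = math.log(2.0), math.log(x)
    h = (b - a) / steps                      # steps must be even for Simpson's rule
    f = lambda u: math.exp(u) / u
    total = f(a) + f(b)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * f(a + i * h)
    return total * h / 3

flags = prime_flags(10**6)
for x in (10**4, 10**5, 10**6):
    pi_x = sum(flags[: x + 1])
    approximations = {
        "x/ln x": x / math.log(x),
        "x/(ln x - 1)": x / (math.log(x) - 1),
        "x/(ln x - 1.0836)": x / (math.log(x) - 1.0836),
        "li(x)": li(x),
    }
    print(f"x = {x:>7}   pi(x) = {pi_x}")
    for name, value in approximations.items():
        print(f"  {name:>18} = {value:10.1f}   error {value - pi_x:+9.1f}")
```

At \(x = 10^{6}\) the sieve gives π(x) = 78498. Of the three (PNT) formulas, li(x) has the smallest error there and x∕ln x the largest. Legendre's 1.0836 variant is closer still at this size, which shows that agreement over a finite range cannot settle an asymptotic question.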

The truth of those conjectures, together with error estimates, was finally established in 1896, independently and nearly simultaneously, by Jacques Hadamard and Charles de la Vallée Poussin, using techniques of complex contour integration discussed further below. Their long and complicated proofs of what has ever since been called the Prime Number Theorem were distinct, and have been analyzed in detail in Narkiewicz (2000). Later, proofs were devised that avoided some of the delicate issues involved in contour integration over infinite paths by invoking Norbert Wiener’s Tauberian theory for Fourier integrals; but the arguments remained complex. Eventually, more than 80 years after the original proofs, Donald Newman found a short proof involving integration only over finite contours (Newman 1980); and in the meantime, against the expectation of most of the mathematical community, Atle Selberg and Paul Erdős (partly independently and again nearly simultaneously) published so-called ‘elementary’ proofs, involving no recourse to complex-analytic methods (Selberg 1949; Erdős 1949).

Because of the length and complexity of all but Newman’s proof, the discussion that follows, unlike that in the preceding chapters, does not give full details of the various proofs, but rather focuses on their essential ideas and the differences among them. Readers seeking further details may consult the recent monograph (Jameson 2003), which provides a self-contained presentation of a descendant of the original proofs, as well as Newman’s proof and an elementary proof based on that given in Levinson (1969), all presented at a level accessible to students who have had only basic courses in real and complex analysis. An overview of work on the Prime Number Theorem in the century since its first proofs is given in Bateman and Diamond (1996), while Diamond (1982) and Goldfeld (2004) discuss the history of elementary proofs of the theorem. Narkiewicz (2000) provides a comprehensive history of the overall development of prime number theory up to the time of Hardy and Littlewood, including alternative proofs of many results.

10.1 Steps toward the goal: Prior results of Dirichlet, Chebyshev, and Riemann

As noted in Narkiewicz (2000), p. 49, “the first use of analytic methods in number theory was made by P.G. Dirichlet” during the years 1837–39. In particular, Dirichlet proved that if k and l are relatively prime integers with k < l, then the arithmetic progression {nk + l}, where n ranges over the positive integers, must contain infinitely many primes. In doing so Dirichlet considered the series now named after him \(\left (\text{those of the form}\sum _{n=1}^{\infty }\dfrac{f(n)} {n^{s}} \right )\) and gave an argument that could be adapted to show that if f is a completely multiplicative complex-valued function (that is, f is not identically zero and f(mn) = f(m)f(n) for all integers m and n) and \(\sum _{n=1}^{\infty }\dfrac{f(n)} {n^{s}}\) converges absolutely for some real value \(s_{0}\), then it converges absolutely for all complex s with \(\text{Re}\,s \geq s_{0}\) and

$$\displaystyle{ \sum _{n=1}^{\infty }\dfrac{f(n)} {n^{s}} =\prod _{p\ \text{prime}} \dfrac{1} {1 - f(p)p^{-s}}. }$$

With s restricted to real values, the special case f(n) = 1 is Euler’s product formula (cf. Chapter 7, footnote 6):
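
$$\displaystyle{ \zeta (s) =\sum _{n=1}^{\infty } \dfrac{1} {n^{s}} =\prod _{p\ \text{prime}} \dfrac{1} {1 - p^{-s}} }$$(29)
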
(from which it follows that ζ(s) ≠ 0 for Re s > 1).
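The product formula can be checked numerically for real s. In the Python sketch below (names are illustrative), the series and the product over primes are both truncated at s = 2. Besides f(n) = 1, it uses an extra example that is not from the text: the completely multiplicative function χ₄, the non-principal character modulo 4. Its series at s = 2 is Catalan's constant G ≈ 0.9159655942.

```python
import math

def primes_up_to(n):
    """Primes <= n by the sieve of Eratosthenes."""
    flags = bytearray([1]) * (n + 1)
    flags[0] = flags[1] = 0
    for i in range(2, math.isqrt(n) + 1):
        if flags[i]:
            flags[i * i :: i] = bytearray(len(range(i * i, n + 1, i)))
    return [k for k in range(n + 1) if flags[k]]

def one(n):
    return 1

def chi4(n):
    """Non-principal character mod 4: 0 for even n, +1 if n = 1 mod 4, -1 if n = 3 mod 4."""
    return (0, 1, 0, -1)[n % 4]

def dirichlet_series(f, s, terms):
    """Partial sum f(1)/1^s + f(2)/2^s + ... + f(terms)/terms^s."""
    return sum(f(n) / n**s for n in range(1, terms + 1))

def euler_product(f, s, primes):
    """Truncated product of 1/(1 - f(p) p^-s) over the given primes."""
    result = 1.0
    for p in primes:
        result /= 1 - f(p) / p**s
    return result

PRIMES = primes_up_to(100_000)
CATALAN = 0.915965594177219  # L(2, chi4), Catalan's constant
for name, f, exact in (("f = 1", one, math.pi**2 / 6), ("f = chi4", chi4, CATALAN)):
    series = dirichlet_series(f, 2, 100_000)
    product = euler_product(f, 2, PRIMES)
    print(f"{name:>8}: series {series:.8f}  product {product:.8f}  exact {exact:.8f}")
```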

Nine years later, in the first of two papers on π(x) (Chebyshev 1848), Pafnuty Chebyshev proved that for all integers k > 1 and any constant C > 0, there are infinitely many integers m and n for which

(For further details, see Narkiewicz (2000), pp. 98–102.) Consequently,

for arbitrarily large integers m and n. Therefore, since \(\text{li}(x) \sim \dfrac{x} {\ln x}\), if \(\lim _{x\rightarrow \infty }\dfrac{\pi (x)\ln (x)} {x}\) exists, then it must equal 1 \(\left (\text{that is,}\ \pi (x) \sim \dfrac{x} {\ln \,x}\right )\).

In proving (30), Chebyshev made use of the Gamma function, defined for Re s > 0 by \(\Gamma (s) =\int _{ 0}^{\infty }e^{-t}t^{s-1}\,\mathit{dt}\). In his second paper (Chebyshev 1850), however, he introduced two number-theoretic functions related to π(x), namely
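
$$\displaystyle{ \theta (x) =\sum _{p\leq x}\ln p\qquad \text{and}\qquad \psi (x) =\sum _{p^{k}\leq x}\ln p =\sum _{k=1}^{m}\theta (x^{1/k}), }$$

where m is the largest integer for which \(2^{m} \leq x\), and obtained important bounds on their values using only elementary means. In particular, since ln x is an increasing function, \(\theta (x) \leq \pi (x)\ln x \leq x\ln x\ \text{and}\ \psi (x) \leq \pi (x)\ln x\); and since \(m \leq \ln x/\ln 2\),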

because the maximum value of \(\dfrac{\ln x} {\ln 2} \dfrac{1} {x^{1/6}}\) occurs for \(x = e^{6}\) and is less than 3.2. Thus \(\psi (x) - 4\sqrt{x}\ln \,x \leq \theta (x) \leq \psi (x).\)

Hence if \(\lim _{x\rightarrow \infty }\dfrac{\psi (x)} {x}\) exists, then so does \(\lim _{x\rightarrow \infty }\dfrac{\theta (x)} {x}\), and they are equal. Furthermore, if \(\lim _{x\rightarrow \infty }\dfrac{\theta (x)} {x}\) exists, then so must \(\lim _{x\rightarrow \infty }\dfrac{\pi (x)\ln (x)} {x}\), because for 0 < ε < 1,

so

As already noted, \(\lim _{x\rightarrow \infty }\dfrac{\pi (x)\ln (x)} {x}\) must then equal 1. So to prove the Prime Number Theorem it suffices to prove that either \(\lim _{x\rightarrow \infty }\dfrac{\psi (x)} {x}\) or \(\lim _{x\rightarrow \infty }\dfrac{\theta (x)} {x}\) exists.
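The comparison between θ and ψ can be illustrated numerically. The Python sketch below (names are illustrative) tabulates both functions at the integers up to \(10^{5}\). It builds them from the weights ln p at each prime power \(p^{k}\) and 0 elsewhere. Because θ and ψ are constant on each interval [n, n + 1) while \(4\sqrt{x}\ln x\) increases, checking integer points is enough for the inequality \(\psi (x) - 4\sqrt{x}\ln x \leq \theta (x) \leq \psi (x)\). The printed ratios θ(x)∕x and ψ(x)∕x are the quantities whose limits are at stake.

```python
import math

def mangoldt_table(n):
    """Return (lam, is_prime) for 0 <= k <= n, where lam[k] = ln p if k = p^j
    (p prime, j >= 1) and 0 otherwise, and is_prime[k] flags the primes."""
    is_prime = bytearray([1]) * (n + 1)
    is_prime[0] = is_prime[1] = 0
    for i in range(2, math.isqrt(n) + 1):
        if is_prime[i]:
            is_prime[i * i :: i] = bytearray(len(range(i * i, n + 1, i)))
    lam = [0.0] * (n + 1)
    for p in range(2, n + 1):
        if is_prime[p]:
            pk = p
            while pk <= n:
                lam[pk] = math.log(p)
                pk *= p
    return lam, is_prime

N = 100_000
lam, is_prime = mangoldt_table(N)
theta = [0.0] * (N + 1)  # theta[n] = sum of ln p over primes p <= n
psi = [0.0] * (N + 1)    # psi[n]   = sum of ln p over prime powers p^k <= n
for n in range(1, N + 1):
    theta[n] = theta[n - 1] + (lam[n] if is_prime[n] else 0.0)
    psi[n] = psi[n - 1] + lam[n]

inequality_holds = all(
    psi[n] - 4 * math.sqrt(n) * math.log(n) <= theta[n] <= psi[n]
    for n in range(2, N + 1)
)
# ln x / (ln 2 * x**(1/6)) peaks at x = e^6, where it equals 6 / (e ln 2)
peak = 6 / (math.e * math.log(2))
print("inequality holds up to", N, ":", inequality_holds, "  peak value:", round(peak, 4))
for x in (10**3, 10**4, 10**5):
    print(x, round(theta[x] / x, 4), round(psi[x] / x, 4))
```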

The principal theorem of Chebyshev (1850) was that there exist constants \(C_{1}\), \(C_{2}\), and \(C_{3}\) such that for all x ≥ 2,

Indeed, Chebyshev’s methods (involving Stirling’s formula) showed that \(C_{2}\) can be taken to be 1.1224 and, if x ≥ 37, that \(C_{3}\) can be taken to be 0.73 (see Narkiewicz 2000, p. 111); and from the latter estimate Chebyshev deduced Bertrand’s postulate (that for every x > 1 the interval (x, 2x] contains some prime) as an immediate corollary. For direct calculation shows that Bertrand’s postulate holds for 1 < x < 37, and if it failed for some x ≥ 37, the estimate would imply the contradiction that 1.46x ≤ θ(2x) = θ(x) ≤ 1.13x.
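The finite check that complements Chebyshev's estimate is easy to reproduce for integers. The Python sketch below (names are illustrative) looks for integers n whose interval (n, 2n] contains no prime. By Bertrand's postulate the list it returns is empty, both for the range below 37 and for a much larger one.

```python
import bisect
import math

def primes_up_to(n):
    """Primes <= n by the sieve of Eratosthenes."""
    flags = bytearray([1]) * (n + 1)
    flags[0] = flags[1] = 0
    for i in range(2, math.isqrt(n) + 1):
        if flags[i]:
            flags[i * i :: i] = bytearray(len(range(i * i, n + 1, i)))
    return [k for k in range(n + 1) if flags[k]]

def bertrand_failures(limit):
    """Integers 1 <= n <= limit for which the interval (n, 2n] contains no prime."""
    primes = primes_up_to(2 * limit)
    failures = []
    for n in range(1, limit + 1):
        i = bisect.bisect_right(primes, n)   # index of the first prime > n
        if i == len(primes) or primes[i] > 2 * n:
            failures.append(n)
    return failures

print(bertrand_failures(36))       # the range settled by direct calculation
print(bertrand_failures(10**5))    # a much larger range, for comparison
```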

Without using Stirling’s formula, considerations involving binomial coefficients yield the weaker inequality \(\theta (x) \leq x\ln 4 \approx 1.3863x\). The argument, given in Jameson (2003), p. 36, uses no number-theoretic facts beyond Euclid’s lemma and its corollary that if distinct primes divide n, so does their product. Namely, for any given n the expansion of \((1 + 1)^{2n+1}\) includes the two equal binomial coefficients \(\binom{2n + 1}{n}\) and \(\binom{2n + 1}{n + 1}\), so \(\binom{2n + 1}{n} < 2^{2n} = 4^{n}\). If \(p_{i},\ldots,p_{i+j}\) are all the primes between n + 2 and 2n + 1, inclusive, then none of those primes divides the denominator n! of \(\binom{2n + 1}{n}\), but each of them, and hence the product of all of them, divides its numerator, \(n!\binom{2n + 1}{n}\). Therefore that product must divide \(\binom{2n + 1}{n}\) itself and so cannot exceed \(\binom{2n + 1}{n}\). Consequently,

$$\displaystyle{ \theta (2n + 1) -\theta (n + 1) =\sum _{n+2\leq p\leq 2n+1}\ln p \leq \ln \binom{2n + 1}{n} < n\ln 4. }$$

Then since \(\theta (n) \leq n\ln 4\) for n = 2, 3, 4, we may assume by induction on m that \(\theta (k) \leq k\ln 4\) for k ≤ 2m. The assumption holds for m = 2, and m + 1 < 2m for m ≥ 2. So \(\theta (m + 1) \leq (m + 1)\ln 4\), whence by the last displayed inequality, \(\theta (2m + 1) \leq (2m + 1)\ln 4\); and since 2m + 2 is not prime, \(\theta (2m + 2) =\theta (2m + 1)\). Thus \(\theta (2m + 2) \leq (2m + 1)\ln 4 < (2m + 2)\ln 4\), finishing the induction.
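Both ingredients of this argument can be spot-checked in Python (a sketch; names are illustrative). The first is the pair of facts about \(\binom{2n + 1}{n}\): it is less than \(4^{n}\), and it is divisible by the product of the primes in [n + 2, 2n + 1]. The second is the resulting bound \(\theta (x) \leq x\ln 4\). Because θ is constant between primes while x ln 4 increases, it suffices to test θ at the primes themselves.

```python
import bisect
import math

def primes_up_to(n):
    """Primes <= n by the sieve of Eratosthenes."""
    flags = bytearray([1]) * (n + 1)
    flags[0] = flags[1] = 0
    for i in range(2, math.isqrt(n) + 1):
        if flags[i]:
            flags[i * i :: i] = bytearray(len(range(i * i, n + 1, i)))
    return [k for k in range(n + 1) if flags[k]]

def binomial_step_ok(n, primes):
    """Check C(2n+1, n) < 4^n and that the primes p with n + 2 <= p <= 2n + 1
    all divide C(2n+1, n), hence so does their product."""
    c = math.comb(2 * n + 1, n)
    lo = bisect.bisect_left(primes, n + 2)
    hi = bisect.bisect_right(primes, 2 * n + 1)
    block = math.prod(primes[lo:hi])
    return c < 4**n and c % block == 0

PRIMES = primes_up_to(200_000)
steps_ok = all(binomial_step_ok(n, PRIMES) for n in range(1, 1001))

# theta(x) <= x ln 4: theta jumps only at primes, so test right at each jump
theta, bound_ok = 0.0, True
for p in PRIMES:
    theta += math.log(p)
    bound_ok = bound_ok and theta <= p * math.log(4)
print("binomial facts hold for n <= 1000:", steps_ok)
print("theta(x) <= x ln 4 up to 200000:", bound_ok, "  theta/x at the last prime:", theta / PRIMES[-1])
```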

The inequality (31)(ii) also entails corresponding bounds on π(x). For π(x) can be expressed as the sum \(\sum _{2\leq n\leq x}(f(n)/\ln n)\), where f(n) = ln n if n is prime and 0 otherwise, and Abel summation then gives

$$\displaystyle{ \pi (x) = \dfrac{\theta (x)} {\ln x} +\int _{2}^{x} \dfrac{\theta (t)} {t(\ln t)^{2}}\,\mathit{dt}, }$$

so (31)(ii) yields

On the other hand, integration by parts shows that
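
$$\displaystyle{ \text{li}(x) = \dfrac{x} {\ln x} -\dfrac{2} {\ln 2} +\int _{2}^{x} \dfrac{\mathit{dt}} {(\ln t)^{2}}. }$$
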
Hence

Similar arguments (see Jameson (2003), p. 35) show that for any ε > 0, an \(x_{0}\) must exist such that \((C_{3}-\epsilon )\text{li}(x) \leq \pi (x) \leq (C_{2}+\epsilon )\text{li}(x)\) for all \(x > x_{0}\). The Prime Number Theorem would follow if it could be shown that the bounds in Chebyshev’s estimate can be taken to be \(C_{2} = C_{3} = 1\). But Chebyshev’s methods were inadequate for that task.
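The Abel-summation identity \(\pi (x) = \theta (x)/\ln x +\int _{2}^{x}\theta (t)/(t(\ln t)^{2})\,\mathit{dt}\), on which these bounds rest, can be verified exactly rather than approximately. θ is constant between consecutive primes, and \(-1/\ln t\) is an antiderivative of \(1/(t(\ln t)^{2})\), so the integral reduces to a finite sum. A Python sketch (names are illustrative):

```python
import math

def primes_up_to(n):
    """Primes <= n by the sieve of Eratosthenes."""
    flags = bytearray([1]) * (n + 1)
    flags[0] = flags[1] = 0
    for i in range(2, math.isqrt(n) + 1):
        if flags[i]:
            flags[i * i :: i] = bytearray(len(range(i * i, n + 1, i)))
    return [k for k in range(n + 1) if flags[k]]

def abel_right_side(x, primes):
    """theta(x)/ln x + integral from 2 to x of theta(t) / (t (ln t)^2) dt, for x >= 2.
    On [p, q) between consecutive primes theta is constant, and the integral of
    1/(t (ln t)^2) over [p, q] equals 1/ln p - 1/ln q."""
    ps = [p for p in primes if p <= x]
    theta = integral = 0.0
    for i, p in enumerate(ps):
        theta += math.log(p)                       # value of theta on [p, next prime)
        q = ps[i + 1] if i + 1 < len(ps) else x    # right end of this constant piece
        integral += theta * (1 / math.log(p) - 1 / math.log(q))
    return theta / math.log(x) + integral

PRIMES = primes_up_to(100_000)
for x in (2, 10, 100, 1000.5, 100_000):
    pi_x = sum(1 for p in PRIMES if p <= x)
    print(x, pi_x, abel_right_side(x, PRIMES))
```

The two printed columns agree up to rounding, since the sum telescopes to the number of primes up to x.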

A key breakthrough came a decade later in Bernhard Riemann’s memoir “Ueber die Anzahl der Primzahlen unter einer gegebenen Größe” (Riemann 1860). Riemann began by recalling Euler’s product formula (29), but took s therein to be a complex number and initially defined ζ(s) to be the function of s given by the expressions on each side of Euler’s formula whenever both expressions converged, that is, when Re s > 1. So defined, ζ(s) is analytic on the half-plane Re s > 1, but Riemann went on immediately to extend it to a function analytic on all of \(\mathbb{C}\) except s = 1. To do so he employed the Gamma function (actually, the function \(\Pi (s) = \Gamma (s + 1)\)) together with the substitution t = nx to obtain

as the nth term of the geometric series for \(\Gamma (s)\zeta (s)\). Then

For s ≠ 1 he equated \(e^{-\pi \mathit{si}} - e^{\pi \mathit{si}}\) times the latter integral to the contour integral

“taken from + ∞ to + ∞ in a positive sense” around a region containing “in its interior the point 0 but no other point of discontinuity of the integrand.” He concluded that ζ(s) could then be defined for all s ≠ 1 by the equation

and that so defined ζ(s) would be analytic except for a simple pole at s = 1; that is, \(\zeta (s) - 1/(s - 1)\) would be an entire function.

Riemann noted that for Re s < 0 the same integral could be taken in the reverse direction, surrounding the region exterior to the curve C. It would then be “infinitely small for all s of infinitely large modulus,” and the integrand would be discontinuous only at the points 2n π i. Its value would thus be \(\sum _{n=1}^{\infty }(-n2\pi i)^{s-1}(-2\pi i)\), whence

which reduces to the functional equation

In the remainder of his memoir, Riemann made some assertions concerning π(x) and the non-real zeros of the zeta function — assertions equivalent to the following statements:

(i) The number N(T) of non-real roots ρ of ζ(s) for which \(0 <\text{Im}\,\rho \leq T\) (with multiple roots counted according to their multiplicity) is asymptotically equal to

$$\displaystyle{ \dfrac{T} {2\pi } \ln \,\dfrac{T} {2\pi } -\dfrac{T} {2\pi }. }$$(33)

(ii) It is “very likely” that all non-real zeros of ζ(s) satisfy \(\text{Re}\,s = 1/2\).

(iii) If \(F(x) =\sum _{ n=1}^{\infty }\dfrac{\pi (x^{1/n})} {n} -\dfrac{1} {2k}\) whenever \(x = p^{k}\) for some prime p, and \(F(x) =\sum _{ n=1}^{\infty }\dfrac{\pi (x^{1/n})} {n}\) otherwise, then for x > 1,

$$\displaystyle{ F(x) = \text{li}(x) -\sum _{\rho }\text{li}(x^{\rho }) +\int _{ x}^{\infty } \dfrac{\mathit{dt}} {(t^{2} - 1)t\ln t} -\ln 2, }$$

where ρ ranges over all non-real roots of ζ(s).

Assertions (i) and (iii) were later proved rigorously in von Mangoldt (1895), while the conjecture that all non-real zeros of ζ(s) satisfy \(\text{Re}\,s = 1/2\) is the still unproven Riemann Hypothesis.

Beyond the specific results it contained, three aspects of Riemann’s memoir were seminal for the subsequent proofs of the Prime Number Theorem: the idea of regarding the series \(\sum _{n=1}^{\infty }n^{-s}\) as a function of a complex argument; the extension of that function to a function analytic in the region s ≠ 1, to which Cauchy’s theory of contour integration could be applied; and the revelation that the distribution of prime numbers was intimately related to the location of the non-real zeros of that extended zeta function.
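Riemann's functional equation can be illustrated numerically at points where both sides are known. One standard form of it is \(\zeta (1 - s) = 2(2\pi )^{-s}\cos (\pi s/2)\Gamma (s)\zeta (s)\). At s = 2k it expresses ζ(1 − 2k) through ζ(2k), and the left side is known in closed form: \(\zeta (1 - 2k) = -B_{2k}/(2k)\), where \(B_{m}\) are the Bernoulli numbers. The Python sketch below (names are illustrative) approximates ζ(2k) by a partial sum plus an Euler–Maclaurin tail estimate and checks that the two sides agree. These are concrete values of the continuation to Re s < 0 that Riemann constructed.

```python
import math
from fractions import Fraction

def bernoulli(m):
    """Exact B_0, ..., B_m from sum_{j=0}^{k} C(k+1, j) B_j = 0 for k >= 1 (so B_1 = -1/2)."""
    B = [Fraction(0)] * (m + 1)
    B[0] = Fraction(1)
    for k in range(1, m + 1):
        B[k] = -sum(math.comb(k + 1, j) * B[j] for j in range(k)) / (k + 1)
    return B

def zeta_real(s, terms=100_000):
    """zeta(s) for real s > 1: partial sum plus the Euler-Maclaurin estimate of the tail,
    sum_{n > N} n^-s ~ N^(1-s)/(s-1) - N^-s/2."""
    partial = math.fsum(n ** -s for n in range(1, terms + 1))
    return partial + terms ** (1 - s) / (s - 1) - terms ** -s / 2

def zeta_one_minus_s(s):
    """Right side of zeta(1 - s) = 2 (2 pi)^-s cos(pi s / 2) Gamma(s) zeta(s)."""
    return 2 * (2 * math.pi) ** -s * math.cos(math.pi * s / 2) * math.gamma(s) * zeta_real(s)

B = bernoulli(12)
for k in range(1, 7):
    exact = -B[2 * k] / (2 * k)          # zeta(1 - 2k) = -B_{2k} / (2k)
    print(f"zeta({1 - 2 * k}) = {exact} = {float(exact):.10f};"
          f" functional equation gives {zeta_one_minus_s(2 * k):.10f}")
```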

10.2 Hadamard’s proof

Just a year after receiving his degree of Docteur ès Sciences, Jacques Hadamard published the first of a series of papers concerning properties of the Riemann zeta function. The series culminated in his paper Hadamard (1896b), in which he proved the Prime Number Theorem in the form \(\lim _{x\rightarrow \infty }\dfrac{\theta (x)} {x} = 1\).

Hadamard began that paper by noting that ζ(s) was analytic except for a simple pole at s = 1, and though it had infinitely many zeros with real part between 0 and 1 (a consequence of results in his earlier paper Hadamard 1893), it was non-zero for all s with Re s > 1. His first goal was then to show that ζ(s) was also non-zero for all s with Re s = 1.

To establish that he considered ln(ζ(s)), which by Euler’s product theorem and the Maclaurin series for ln(1 − x) is equal to \(\sum _{k=1}^{\infty }\sum _{\begin{array}{c}p\ \mathit{prime}\end{array}} \dfrac{1} {\mathit{kp}^{\mathit{ks}}}\). He split that sum into two parts, so that
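
$$\displaystyle{ \ln (\zeta (s)) = S(s) +\sum _{k=2}^{\infty }\sum _{p}\dfrac{1} {\mathit{kp}^{\mathit{ks}}},\qquad \text{where}\ S(s) =\sum _{p}\dfrac{1} {p^{s}}, }$$(34)
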
and noted that since (s − 1)ζ(s) is an entire function, it is bounded in any closed neighborhood of s = 1. Thus in any such neighborhood the difference \(\ln (\zeta (s)) -\ln \dfrac{1} {s - 1} =\ln ((s - 1)\zeta (s))\) is a bounded function, so if s approaches 1 from the right along the real axis, ln(ζ(s)) must approach + ∞. But by (34), in any sufficiently small neighborhood of s = 1, ln(ζ(s)) also differs from S(s) by a bounded expression, since the second term on the right of (34) is analytic for Re s > 1∕2. So S(s) also approaches + ∞ as s approaches 1 from the right along the real axis.

On the other hand, consider ln( | ζ(s) | ). By (34), for \(s =\sigma +\mathit{it}\), \(\ln (\vert \zeta (s)\vert ) = \text{Re}\,\ln (\zeta (s))\) differs from
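
$$\displaystyle{ P(\sigma +\mathit{it}) =\sum _{p}\dfrac{\cos (t\ln p)} {p^{\sigma }} = \text{Re}\,S(\sigma +\mathit{it}) }$$

by a bounded function; and if \(\zeta (1 + \mathit{it}_{0}) = 0\) for some \(t_{0}\neq 0\), then \(1 + \mathit{it}_{0}\) must be a simple root of ζ(s), whence \(\ln (\vert \zeta (\sigma +\mathit{it}_{0})\vert )\) must differ from \(\ln (\sigma -1)\) by a bounded function. So \(\ln (\vert \zeta (\sigma +\mathit{it}_{0})\vert )\) and \(P(\sigma +\mathit{it}_{0})\) must both approach −∞ as σ approaches 1 from the right; and since \(\ln (\sigma -1) = -\ln \dfrac{1} {\sigma -1}\) differs from \(-S(\sigma )\) by a bounded function, \(P(\sigma +\mathit{it}_{0})\) must differ from \(-S(\sigma )\) by a bounded function.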

Now suppose \(0 <\rho = 1-\epsilon < 1\), 0 < α < 1, and let \(P_{n}\) and \(S_{n}\) denote the partial sums of P and S for p ≤ n. Hadamard divided the set of primes p ≤ n into two subsets, according to whether or not, for some integer k, the prime p satisfied the inequality \(\vert t_{0}\ln p - (2k + 1)\pi \vert <\alpha\). Writing \(S_{n} = S_{n}^{{\prime}} + S_{n}^{{\prime\prime}}\) and \(P_{n} = P_{n}^{{\prime}} + P_{n}^{{\prime\prime}}\) to correspond to that division, he used elementary inequalities to conclude that there must exist an integer \(N_{\rho }\) such that for all \(n \geq N_{\rho }\), \(\rho _{n}(\sigma ) = S_{n}^{{\prime}}(\sigma )/S_{n}(\sigma ) >\rho\), since otherwise \(P(\sigma +\mathit{it}_{0}) \geq -\theta S(\sigma )\), where \(\theta = 1 -\epsilon +\epsilon \cos \alpha < 1\). Then, by the result of the previous paragraph, for some function F bounded throughout the half-plane Re s > 1∕2, \(-S(\sigma ) + F(\sigma +\mathit{it}_{0}) \geq -\theta S(\sigma )\). That is, \(F(\sigma +\mathit{it}_{0}) \geq (1-\theta )S(\sigma )\), with 1 −θ > 0. But as noted above, \(\lim _{\sigma \rightarrow 1^{+}}S(\sigma ) = +\infty \), so this inequality contradicts the boundedness of F.

The argument just given, which rests on the assumption that \(\zeta (1 + \mathit{it}_{0}) = 0\), applies to any ρ satisfying 0 < ρ < 1. If ρ is further required to satisfy \(\dfrac{1} {1 +\cos (2\alpha )} <\rho < 1\), then similar manipulations of inequalities show that for \(n \geq N_{\rho }\) and \(s =\sigma +i(2t_{0})\),

$$\displaystyle{ P_{n}(s) \geq \Theta \,S_{n}(\sigma ), }$$

with \(\Theta =\rho (1 +\cos (2\alpha )) - 1 > 0\). Hence \(P(s) =\lim _{n\rightarrow +\infty }P_{n}(s) \geq \Theta S(\sigma )\). Once again, \(\lim _{\sigma \rightarrow 1^{+}}S(\sigma ) = +\infty \), so \(P(\sigma +i(2t_{0}))\), and thus also \(\ln \vert \zeta (\sigma +i(2t_{0}))\vert \), must approach + ∞ as σ approaches 1 from the right. But that cannot be, since ζ(s) is analytic at the point \(1 + i(2t_{0})\). The assumption that \(\zeta (1 + \mathit{it}_{0}) = 0\) is thereby refuted.

For the rest of his proof Hadamard drew upon ideas of E. Cahen, who in his doctoral dissertation at the École Normale Supérieure had unsuccessfully attempted to prove the Prime Number Theorem.

Given real numbers a and x, with 0 < x ≠ 1, Cahen had considered the contour integrals

Hadamard considered instead the integrals

where μ > 0, which he denoted by \(J_{\mu }\) and \(\psi _{\mu }\), respectively.

In order to evaluate the integrals \(J_{\mu }\), Hadamard distinguished three cases: μ is an integer n; μ is non-integral and x < 1; or μ is non-integral and x > 1. For his proof of the Prime Number Theorem, however, only the first case was needed (indeed, just the case n = 2), which he established by integrating by parts n − 1 times, using the identity \(1/z^{n} = \dfrac{(-1)^{n-1}} {(n - 1)!} \, \dfrac{d^{n-1}} {\mathit{dz}^{n-1}}(1/z)\). That gave

whence

since von Mangoldt had shown the year before that

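To evaluate the integrals \(\psi _{\mu }\), Hadamard noted that by (29), \(\ln (\zeta (s)) = -\sum _{p}\ln (1 - p^{-s})\), so logarithmic differentiation gives

$$\displaystyle{ -\dfrac{\zeta ^{{\prime}}(s)} {\zeta (s)} =\sum _{p}\dfrac{p^{-s}\ln p} {1 - p^{-s}} =\sum _{n=1}^{\infty }\dfrac{\Lambda (n)} {n^{s}}, }$$(37)

where \(\Lambda \) denotes the von Mangoldt function, defined by \(\Lambda (k) =\ln p\) if \(k = p^{m}\) for some prime p and positive integer \(m\), and \(\Lambda (k) = 0\) otherwise.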

Then for μ = 2,

where by (35) the integrals in the last member are equal to 0 if \(x/p^{k} < 1\) (that is, if \(x^{1/k} < p\)) and equal to \(\ln \left ( \dfrac{x} {p^{k}}\right )\) otherwise. Hence

The double sum in the last term of the equation above is only apparently infinite, since the inner sum is vacuous for \(k >\ln x/\ln 2\). Thus finally

where the brackets above the penultimate summation symbol denote the greatest integer function.

In the second term of (38) the first summation involves no more than \(\ln x/\ln 2\) summands and the second summation no more than \(\sqrt{x}\), the largest of which is that for k = 2. Consequently,

When divided by x, the last term above approaches 0 as x approaches ∞. Hadamard’s final goals were then to show

(i) that \(\lim _{x\rightarrow \infty }\dfrac{1} {x}\sum _{p\leq x}\ln p\ln \left (\dfrac{x} {p}\right ) = 1\)

and

(ii) that (i) implies that \(\lim _{x\rightarrow \infty }\dfrac{\theta (x)} {x} = 1\).
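To get a feel for goal (i), one can compare the prime-only sum with the full weighted sum \(\psi _{2}(x) =\sum _{n\leq x}\Lambda (n)\ln (x/n)\), where Λ is the von Mangoldt function defined above. This weighted sum is what the evaluation of the integrals via (35) produces. In the Python sketch below (names are illustrative; numerical evidence only, not a proof) both ratios to x approach 1. Their difference, which comes from the prime-power terms, is a small fraction of x.

```python
import math

def mangoldt_table(n):
    """lam[k] = ln p if k = p^j (p prime, j >= 1), else 0; also return the prime flags."""
    is_prime = bytearray([1]) * (n + 1)
    is_prime[0] = is_prime[1] = 0
    for i in range(2, math.isqrt(n) + 1):
        if is_prime[i]:
            is_prime[i * i :: i] = bytearray(len(range(i * i, n + 1, i)))
    lam = [0.0] * (n + 1)
    for p in range(2, n + 1):
        if is_prime[p]:
            pk = p
            while pk <= n:
                lam[pk] = math.log(p)
                pk *= p
    return lam, is_prime

def psi2(x, lam):
    """psi_2(x) = sum over n <= x of Lambda(n) ln(x/n)."""
    return sum(lam[n] * math.log(x / n) for n in range(2, int(x) + 1) if lam[n])

def prime_sum(x, is_prime):
    """The sum in goal (i): ln p ln(x/p) over primes p <= x."""
    return sum(math.log(p) * math.log(x / p) for p in range(2, int(x) + 1) if is_prime[p])

lam, is_prime = mangoldt_table(10**6)
for x in (10**4, 10**5, 10**6):
    r_all, r_primes = psi2(x, lam) / x, prime_sum(x, is_prime) / x
    print(f"x = {x:>7}: psi_2(x)/x = {r_all:.5f}, prime sum / x = {r_primes:.5f}")
```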

Hadamard established (ii) via elementary but rather involved \(\epsilon -\delta\) computations, showing that for every ε > 0, \(x - 2\epsilon x \leq \theta (x) \leq x + \dfrac{5\epsilon } {2}x\) for all sufficiently large x. (For details, see Narkiewicz 2000, pp. 202–204.) To prove (i) he showed that \(\lim _{x\rightarrow \infty }\dfrac{\psi _{2}(x)} {x} = 1\), using Cauchy’s integral theorem, von Mangoldt’s theorem justifying Riemann’s asymptotic estimate (33) of the number N(T) of non-real roots of ζ(s), and two results from his earlier paper Hadamard (1896a), namely

(A) If \(\rho _{1},\rho _{2},\ldots\) are the non-real roots of ζ(s) ordered according to increasing absolute value, then \(\sum _{n}1/\vert \rho _{n}\vert ^{2}\) converges,

and

(B) If s ≠ 1 is not a root of ζ(s), then for some constant K

$$\displaystyle{ \dfrac{\zeta ^{{\prime}}(s)} {\zeta (s)} = \dfrac{1} {1 - s} +\sum _{\rho }\left ( \dfrac{1} {s-\rho } + \dfrac{1} {\rho } \right ) + K, }$$(39)

where ρ ranges over all roots of ζ(s), ordered according to increasing absolute value.

It follows from (A) that for any ε > 0 there is an integer M such that \(\sum _{n>M}1/\vert \rho _{n}\vert ^{2} <\epsilon\). Let I be the maximum of \(\vert \text{Im}\,\rho _{n}\vert \) for n ≤ M and R the maximum of \(\vert \text{Re}\,\rho _{n}\vert \) for n ≤ M. Since ζ(s) has no roots ρ with Re ρ ≥ 1, R < 1. Then, given a > 1, to compute

Hadamard considered the infinite family of polygons \(\boldsymbol{\Gamma } = \mathbf{ABGECDFHA}\) defined in terms of a real parameter y as follows (see Figure 10.1): Choose d > I such that no root of ζ(s) lies on the line Im(s) = d, take c to be a real number satisfying R < c < 1, and let C be the point c +di and D the point c −di. For y > d, let A be the point a −yi and B the point a +yi. Finally, fix e < 0, let E be the point e +di and F the point e −di, and denote by G and H the points where the lines Im(s) = ±y intersect the lines from the origin that pass through E and F.

Hadamard used (33) and (39) to show that the integral of \(\dfrac{\zeta ^{{\prime}}(s)} {\zeta (s)} \dfrac{x^{s}} {s^{2}}\) along each of the segments BG and AH approaches 0 as y approaches ∞.

In particular, he concluded from (33) that for any A > 1 and any positive integer λ the number of roots ρ of ζ(s) for which | Im ρ | lies between \(A^{3\lambda }\) and \(A^{3\lambda +3}\) does not exceed \(K\lambda A^{3\lambda }\), where K is a constant. The same bound applies a fortiori to the number of roots for which | Im ρ | lies between \(A^{3\lambda +1}\) and \(A^{3\lambda +2}\). On the other hand, the asymptotic expression for N(T) given in (33) approaches ∞ as λ does, so for sufficiently large λ there must be two consecutive roots \(\rho _{j}\) and \(\rho _{j+1}\) whose imaginary parts \(\beta _{j}\) and \(\beta _{j+1}\) differ by at least \(\dfrac{A^{3\lambda +2} - A^{3\lambda +1}} {K\lambda A^{3\lambda }} = \dfrac{A(A - 1)} {K\lambda }\). Consequently, for \(y_{\lambda } = (\beta _{j} +\beta _{j+1})/2\), any root of ζ(s) must lie above or below the line \(\text{Im}(s) = y_{\lambda }\) by at least \(\dfrac{A(A - 1)} {2K\lambda }\).

That being so, if BG lies along \(\text{Im}\,(s) = y_{\lambda }\), the summation in (39) may be estimated by splitting it into two parts, the first sum ranging over all roots ρ satisfying \(A^{3\lambda } \leq \text{Im}\,\rho \leq A^{3\lambda +3}\) and the second over all other roots. The definition of \(y_{\lambda }\) entails that the first sum is bounded by \(C_{1}y_{\lambda }\ln ^{2}\,y_{\lambda }\) and the second by \(C_{2}y_{\lambda }\ln y_{\lambda }\), for some constants \(C_{1}\) and \(C_{2}\), so \(\vert \zeta ^{{\prime}}(s)/\zeta (s)\vert \) is itself bounded by a constant multiple of \(y_{\lambda }\ln ^{2}y_{\lambda }\). Hence

which approaches 0 as \(y_{\lambda }\) approaches ∞. Similar considerations show that the integral along AH also approaches 0 as \(y_{\lambda }\) approaches ∞.

Cauchy’s integral theorem yields that

is equal to the sum of the residues of the integrand at its singular points within \(\boldsymbol{\Gamma }\): the pole of ζ(s) at s = 1 and the roots of ζ(s) that lie within \(\boldsymbol{\Gamma }\). (The point s = 0, where \(x^{s}/s^{2}\) has a double pole, lies outside \(\boldsymbol{\Gamma }\).) At s = 1 the residue is − x, and by construction, the sum of the residues at the roots inside \(\boldsymbol{\Gamma }\) cannot exceed ε x in absolute value. Thus, writing \(I_{\boldsymbol{\Gamma }} = I_{\mathbf{AB}} + I_{\mathbf{BG}} + I_{\mathbf{GE}} + I_{\mathbf{ECDF}} + I_{\mathbf{FH}} + I_{\mathbf{HA}}\) and taking the limit as λ approaches ∞,

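The quantity \(I_{\mathbf{ECDF}}\) does not depend on λ, since the boundary segment ECDF is fixed regardless of the value of λ. Moreover, Re s ≤ c < 1 at every point of ECDF, so for x ≥ 1, \(\vert x^{s}\vert \leq x^{c}\) there and \(\vert I_{\mathbf{ECDF}}\vert \) is at most a constant multiple of \(x^{c}\). So \(\lim _{x\rightarrow \infty }(I_{\mathbf{ECDF}}/x) = 0\), and to show that \(\lim _{x\rightarrow \infty }(\psi _{2}(x)/x) = 1\), it remains only to show that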

For that, Hadamard noted that for s on GE and FH (regardless of the value of λ, which determines the position of G and H) and any root ρ of ζ(s), the ratio \(\left \vert \dfrac{s-\rho } {\rho } \right \vert \) must be greater than a fixed constant, so by (39), \(\left \vert \dfrac{1} {s} \dfrac{\zeta ^{{\prime}}(s)} {\zeta (s)} \right \vert \) is bounded there. Therefore for some constant K, \(\vert I_{\mathbf{GE}}\vert \) is less than \(K\int _{\mathit{GE}}\vert x^{s}\vert /\vert s\vert \,\mathit{ds}\), a quantity that, since Re s ≤ e < 0 on GE, is bounded for x ≥ 2 independently of both x and λ; and likewise for \(I_{\mathbf{FH}}\). So \(\dfrac{I_{\mathbf{GE}}} {x}\) and \(\dfrac{I_{\mathbf{FH}}} {x}\) both approach 0 as x approaches ∞.

10.3 The proof of de la Vallée Poussin

In a note appended to the end of Hadamard (1896b), Hadamard remarked that while correcting the proofs for that paper he had been notified of de la Vallée Poussin’s paper on the same topic (de la Vallée Poussin 1896), and he acknowledged that their proofs, found independently, had some points in common. In particular, both involved showing that ζ(s) had no roots on the line Re s = 1. But their methods for doing so were entirely different, and Hadamard judged that his own method was simpler.

Indeed, of the twenty-one pages in Hadamard (1896b), only two and a half are devoted to his proof that ζ(s) has no root of the form 1 +β i; and as Hadamard noted, that proof rested on only two simple properties of ζ(s): that its logarithm could be expressed as a series of the form \(\sum a_{n}e^{-\lambda _{n}s}\), for some positive constants \(a_{n}\) and \(\lambda _{n}\), and that ζ itself was analytic on the limiting line of convergence of that series, with the exception of a single simple pole.

In contrast, de la Vallée Poussin’s proof that ζ(s) had no roots on the line Re s = 1 was the subject of the third chapter of his paper de la Vallée Poussin (1896), which took up 18 of its 74 pages. Like Hadamard’s proof, de la Vallée Poussin’s was by contradiction and used the fact that any root \(s = 1 +\beta i\) of ζ(s) would have to be a simple root. But unlike Hadamard’s argument, which rested on boundedness considerations, de la Vallée Poussin’s was based on the uniqueness of certain Fourier series expansions. Specifically, he considered complex-valued functions f(y) of a real variable y that for y > 1 have the form L(y) + P(y), where L(y) denotes a function that approaches a finite limit A as y approaches ∞ and P(y) denotes an infinite series of the form

in which the coefficients \(\alpha _{n}\) do not approach zero as n approaches ∞ and the series \(\sum c_{n}\) and \(\sum d_{n}\) are absolutely convergent. He proved that in any such representation the values A, \(c_{n}\), \(d_{n}\) and \(\alpha _{n}\) are all uniquely determined by f(y). On the other hand, if 1 +β i were a root of ζ(s), he showed by a long and delicate argument that the function \(\dfrac{1 +\cos (\beta \ln y)} {y} \sum _{p<y}\ln p\), where p ranges over primes, would have two distinct representations of the form L(y) + P(y). Salient details of the computations involved are given on pp. 208–214 of Narkiewicz (2000).

Two other differences between the tools used by Hadamard and those employed by de la Vallée Poussin are worth noting.

First, although de la Vallée Poussin referred to Riemann’s functional equation for the ζ-function and observed that it could be used to define ζ(s) throughout \(\mathbb{C} -\{ 1\}\), he did not make use of that extension in his proof of the Prime Number Theorem. Instead, he noted that integration by parts yields

from which it follows that for Re s > 1,

But
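
$$\displaystyle{ \int _{1}^{\infty }\dfrac{\mathit{dx}} {x^{s}} = \dfrac{1} {s - 1}\qquad (\text{Re}\,s > 1), }$$

so

$$\displaystyle{ \zeta (s) = \dfrac{1} {s - 1} +\sum _{n=1}^{\infty }\left ( \dfrac{1} {n^{s}} -\int _{n}^{n+1}\dfrac{\mathit{dx}} {x^{s}}\right ), }$$(40)
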
and since the sum on the right side of (40) converges absolutely for Re s > 0, that equation may be used to define ζ(s) in that half-plane, except for a simple pole at s = 1 with residue 1.
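A short computation shows the continued ζ(s) at work. The Python sketch below assumes that (40) is the representation \(\zeta (s) = 1/(s - 1) +\sum _{n\geq 1}\left (n^{-s} -\int _{n}^{n+1}x^{-s}\,\mathit{dx}\right )\). Each integral is evaluated in closed form and the sum is truncated after N terms; the neglected tail is roughly \(N^{-s}/2\). The sketch recovers ζ(2) = π²∕6 and gives ζ(1∕2) ≈ −1.46, where the original series diverges. It also shows (s − 1)ζ(s) close to 1 near s = 1, in line with the residue 1 at the pole.

```python
import math

def zeta_via_40(s, terms=100_000):
    """zeta(s) for real s > 0, s != 1, from
        zeta(s) = 1/(s-1) + sum_{n>=1} ( n^-s - integral_n^{n+1} x^-s dx ),
    truncated after `terms` terms of the sum."""
    def integral(n):
        # antiderivative of x^-s is x^(1-s)/(1-s)
        return ((n + 1) ** (1 - s) - n ** (1 - s)) / (1 - s)
    return 1 / (s - 1) + math.fsum(n ** -s - integral(n) for n in range(1, terms + 1))

for s in (2.0, 0.5, 1.001):
    z = zeta_via_40(s)
    print(f"s = {s}: zeta(s) ~ {z:.6f},  (s - 1) zeta(s) ~ {(s - 1) * z:.6f}")
```

The truncation error is largest at s = 1∕2, where the tail decays only like \(N^{-1/2}\). A tail correction could be added, but it is omitted here to keep the sketch aligned with (40) as stated.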

Second, in place of the integral \(\psi _{2}(x)\) employed by Hadamard, both in his proof that no \(s = 1 +\beta i\) is a root of ζ(s) and in his proof that π(x) ∼ x∕ln x, de la Vallée Poussin made use of the integral

in which neither u nor v is a pole or zero of ζ(s), y is a real number greater than 1, and a is a real number greater than any of 1, Re u and Re v.

Replacing the fraction \(\zeta ^{{\prime}}(s)/\zeta (s)\) in the integrand of \(I_{u,v}\) by the expression on the right side of (39) and integrating the result, de la Vallée Poussin obtained the identity

where ρ ranges over the roots of ζ(s), ordered according to increasing absolute value.

On the other hand, using (37) and (36), de la Vallée Poussin found that

valid for all u, v whenever y > 1.

Equating those two expressions for \(I_{u,v}(y)\), solving for the quantity in parentheses in (42), setting v = 0, dividing by \(y^{u}\) and letting u approach 1 yields

where the term ln y is obtained by writing \(\dfrac{u} {1 - u}y^{1-u}\) as \(\dfrac{u} {1 - u}[1 + (y^{1-u} - 1)]\) and applying l’Hopital’s Rule to \(\lim _{u\rightarrow 1}\dfrac{y^{1-u} - 1} {1 - u}\).

Recalling the definition of \(\Lambda (n)\), the left member of (43) can be written as \(\sum _{p^{m}<y} \dfrac{\ln p} {p^{m}} -\dfrac{1} {y}\sum _{p^{m}<y}\ln p\). In the right member the fraction \(\dfrac{u} {u - 1}\) can be split up as \(\dfrac{1} {u - 1} + 1\), and both summations there approach zero as y approaches ∞. (In particular, since the real part of any root ρ of ζ(s) has been shown to be less than one, the real part of ρ − 1 must be negative, so each term of the first summation tends to zero as y increases. Those terms are also dominated by the terms of the absolutely convergent series \(\sum _{\rho } \dfrac{1} {\rho (\rho -1)}\), so the whole sum tends to zero as y increases without bound.) Consequently, setting u = s, (43) takes the form

where f(y) approaches 0 as y approaches ∞.

De la Vallée Poussin next noted that the difference \(\sum _{p<y} \dfrac{\ln p} {p - 1} -\sum _{p^{m}<y} \dfrac{\ln p} {p^{m}}\) approaches 0 as y approaches ∞, and proved that \(\dfrac{1} {y}\sum _{p^{m}<y}\ln p -\dfrac{1} {y}\sum _{p<y}\ln p\) does so as well. He also proved that

where γ is Euler’s constant, defined as \(\gamma =\lim _{n\rightarrow \infty }(\sum _{k=1}^{n}1/k -\ln n)\).

Equation (44) can therefore be replaced by

where g(y) approaches 0 as y approaches ∞.

Equation (45) is the key to the final two steps in de la Vallée Poussin’s derivation of the prime number theorem, namely

(i) showing that

$$\displaystyle{ \int _{1}^{x}\dfrac{1} {y}\sum _{p<y}\ln p\ \mathit{dy} = x(1 + h(x)),\text{where}\ \lim _{x\rightarrow \infty }h(x) = 0, }$$(46)

and

(ii) showing that (46) implies that \(\lim _{x\rightarrow \infty }\dfrac{\theta (x)} {x} = 1\).

To establish (46) de la Vallée Poussin integrated (45) from 1 to x and then multiplied each term by 1∕x, thus obtaining

where j(x) approaches 0 as x approaches ∞. He then noted that the first term in the left member of (47) can be rewritten as

The first two terms in the last member of (48) make up the left member of (45), with x in place of y. Moreover, as Franz Mertens had shown in 1874, it follows from Chebyshev’s upper bound (31) for ψ(x) that \(\sum _{p<y} \dfrac{\ln p} {p - 1}\) is less than a constant multiple of ln y. Consequently,

where k(x) approaches 0 as x approaches ∞. Substituting the expression on the right of this equation for the first integral in (47) and carrying out the integration in the right member of (47) then yields

which after cancellation of terms common to both members is (i), with \(h(x) = k(x) - j(x)\).

To show that \(\lim _{x\rightarrow \infty }\dfrac{\theta (x)} {x} = 1\), take ε > 0, and use (46) to evaluate

Dividing (49) by εx gives

and since \(\theta (y) =\sum _{p\leq y}\ln p\), upper and lower bounds on the integrand give

that is,

Since both h(x) and h((1 +ε)x) approach zero as x approaches ∞, (50) shows that

and by replacing x in the latter inequality by \(\dfrac{x} {1+\epsilon }\), it follows that

By l’Hôpital’s rule, \(\lim _{\epsilon \rightarrow 0} \dfrac{\epsilon } {\ln (1+\epsilon )} = 1\), so, letting ε approach 0, finally \(\lim _{x\rightarrow \infty }\dfrac{\theta (x)} {x} = 1\).
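As a purely numerical illustration, not part of de la Vallée Poussin’s argument, the Python sketch below computes θ(x)/x together with the averaged quantity \(\dfrac{1}{x}\int_{1}^{x}\dfrac{1}{y}\sum_{p<y}\ln p\,dy\), that is, 1 + h(x) in (46). The function names are our own; the integral is evaluated exactly by using the fact that the inner sum is a step function.

```python
import math


def primes_up_to(n):
    """Return the primes p <= n (sieve of Eratosthenes)."""
    if n < 2:
        return []
    is_prime = bytearray([1]) * (n + 1)
    is_prime[0] = is_prime[1] = 0
    for p in range(2, math.isqrt(n) + 1):
        if is_prime[p]:
            # Cross out p*p, p*p + p, ... up to n.
            is_prime[p * p :: p] = bytearray(len(range(p * p, n + 1, p)))
    return [k for k in range(n + 1) if is_prime[k]]


def theta_ratio(x):
    """theta(x)/x, where theta(x) is the sum of ln p over primes p <= x."""
    return sum(math.log(p) for p in primes_up_to(x)) / x


def averaged_theta(x):
    """(1/x) * integral from 1 to x of (1/y) * sum_{p<y} ln p dy, for integer x >= 2.

    On each interval (n, n+1) the inner sum equals theta(n), so that piece of
    the integral is exactly theta(n) * ln((n + 1)/n).
    """
    primes = set(primes_up_to(x))
    theta = 0.0
    integral = 0.0
    for n in range(1, x):
        if n in primes:
            theta += math.log(n)
        integral += theta * math.log1p(1 / n)
    return integral / x


if __name__ == "__main__":
    for x in (10**3, 10**4, 10**5):
        print(x, round(theta_ratio(x), 4), round(averaged_theta(x), 4))
```

For x up to 10^5 both ratios should come out close to 1, in line with (46) and the limit just proved; such a table is of course no substitute for the proof.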

10.4 Later refinements

In the wake of Hadamard’s and de la Vallée Poussin’s proofs, various simplifications, generalizations, and improvements of their arguments were developed by Edmund Landau, Franz Mertens, de la Vallée Poussin himself (who in 1899 obtained the error bound \(\vert \pi (x) -\text{li}(x)\vert \leq Kx\,e^{-c\sqrt{\ln x}}\) for some positive constants K and c, a result not bettered for a quarter of a century thereafterFootnote 8), and others. Modern proofs of the Prime Number Theorem that are descendants of the classical ones incorporate many of those refinements and also make use of other tools such as the Riemann-Lebesgue lemma, integral transforms, and Tauberian theorems (discussed further below).

The proof of the Prime Number Theorem given in Jameson (2003) may be taken as an exemplar of such proofs. Here, in outline, is its structure:

1. The comparison test is used to prove Dirichlet’s result that if

$$\displaystyle{ \sum _{n=1}^{\infty }\vert a_{ n}/n^{\alpha }\vert }$$

converges for some real α, then the corresponding Dirichlet series \(\sum _{n=1}^{\infty }a_{n}/n^{s}\) converges absolutely for all \(s =\sigma +\mathit{it}\) with σ ≥ α. In particular, if \(\vert a_{n}\vert \leq 1\) for all n, then \(\sum _{n=1}^{\infty }a_{n}/n^{s}\) converges absolutely when Re s > 1, so \(\sum _{n=1}^{\infty }1/n^{s}\) can be used to define ζ(s) in that region.

2. It is shown that if \(F(s) =\sum _{ n=1}^{\infty }a_{n}/n^{s}\) converges whenever Re s > c, then F(s) is analytic at all points s with Re s > c and \(F^{{\prime}}(s) = -\sum _{n=1}^{\infty }a_{n}\ln n/n^{s}\). In particular, \(\zeta ^{{\prime}}(s) = -\sum _{n=1}^{\infty }\ln n/n^{s}\) for Re s > 1.

3. After defining the Möbius function μ(n) by μ(1) = 1; μ(n) = 0 if \(p^{2}\) divides n for some prime p; and \(\mu (n) = (-1)^{k}\) if \(n = p_{1}p_{2}\ldots p_{k}\) for distinct primes \(p_{1},p_{2},\ldots,p_{k}\), the generalized Euler product identity (stated in footnote 3 above) is invoked and inverted to obtain

$$\displaystyle{ \dfrac{1} {\sum _{n=1}^{\infty }f(n)} =\prod _{p\ \mathit{prime}}(1 - f(p)) =\sum _{ n=1}^{\infty }\mu (n)f(n). }$$

Consequently \(\dfrac{1} {\zeta (s)} =\sum _{ n=1}^{\infty }\dfrac{\mu (n)} {n^{s}}\) for Re s > 1.

4. It is verified that for |s| < 1 the series \(\sum _{n=1}^{\infty }s^{n}/n\) defines an analytic function h(s) that is a logarithm of \(\dfrac{1} {1 - s}\). The Euler product identity then implies that the logarithm of ζ(s) should be given by \(H(s) =\sum _{p\,\mathit{prime}}h(p^{-s}) =\sum _{p\,\mathit{prime}}\sum _{n=1}^{\infty } \dfrac{1} {\mathit{np}^{\mathit{ns}}}\). It is proved that this double series converges when Re s > 1 and that its sum is equal to that of the series \(\sum _{n=1}^{\infty }\dfrac{c(n)} {n^{s}}\), where \(c(n) = 1/m\) if \(n = p^{m}\) for some prime p, and c(n) = 0 otherwise.

5. Consequently, \(H^{{\prime}}(s) = \dfrac{\zeta ^{{\prime}}(s)} {\zeta (s)} = -\sum _{n=1}^{\infty }\dfrac{c(n)\ln n} {n^{s}} = -\sum _{n=1}^{\infty }\dfrac{\Lambda (n)} {n^{s}}\), where \(\Lambda (n)\) is the von Mangoldt function.

6. Abel summation is used to show that for any sequence a(n) and corresponding summation function \(A(x) =\sum _{n\leq x}a(n)\), if X > 1 then

$$\displaystyle{ \sum _{n\leq X}\dfrac{a(n)} {n^{s}} = \dfrac{A(X)} {X^{s}} + s\int _{1}^{X}\dfrac{A(x)} {x^{s+1}} \,\mathit{dx}. }$$

Furthermore, if s ≠ 0, \(A(x)/x^{s}\) approaches 0 as x approaches ∞ and the Dirichlet series \(\sum _{n=1}^{\infty }\dfrac{a(n)} {n^{s}}\) converges, then the Dirichlet integral

$$\displaystyle{ s\int _{1}^{\infty }\dfrac{A(x)} {x^{s+1}} \,\mathit{dx} }$$

converges to the same value. Since ψ(x) is the summation function for \(\Lambda (n)\) and \(\psi (x)/x^{s}\) approaches 0 as x approaches ∞ if Re s > 1, it follows from step 5 that

$$\displaystyle{ \dfrac{\zeta ^{{\prime}}(s)} {\zeta (s)} = -s\int _{1}^{\infty } \dfrac{\psi (x)} {x^{s+1}}\,\mathit{dx}. }$$(51)

7. A simplification due to Edmund Landau is used to extend the domain of definition of ζ(s) without using the functional equation for ζ(s).Footnote 9 Specifically, for Re s > 0, ζ(s) may be defined by

$$\displaystyle{ \zeta (s) = \dfrac{1} {s - 1} + 1 - s\int _{1}^{\infty }\dfrac{x - [x]} {x^{s+1}} \,\mathit{dx}\,, }$$(52)

where [x] denotes the greatest integer not exceeding x, and differentiation under the integral sign shows that \(\zeta (s) - \dfrac{1} {s - 1}\) is analytic at s = 1, so ζ(s) has a simple pole there. Furthermore,

$$\displaystyle{ \lim _{s\rightarrow 1}\left (\zeta (s) - \dfrac{1} {s - 1}\right ) = 1 -\int _{1}^{\infty }\dfrac{x - [x]} {x^{2}} \,\mathit{dx} = \text{Euler's constant}\ \gamma. }$$

8. Hence \(\zeta (s), \dfrac{1} {\zeta (s)}\) and \(\dfrac{\zeta ^{{\prime}}(s)} {\zeta (s)}\) are represented by Laurent series of the forms

$$\displaystyle\begin{array}{rcl} \zeta (s)& =& \dfrac{1} {s - 1} +\gamma +\sum _{n=1}^{\infty }c_{ n}(s - 1)^{n} {}\\ \dfrac{1} {\zeta (s)}& =& (s - 1) -\gamma (s - 1)^{2} +\ldots \quad \text{and} {}\\ \dfrac{\zeta ^{{\prime}}(s)} {\zeta (s)} & =& - \dfrac{1} {s - 1} + a_{0} + a_{1}(s - 1)+\ldots \,, {}\\ \end{array}$$

all converging in some punctured disk centered at s = 1.

9. Since

$$\displaystyle{ \left \vert \int _{N}^{\infty }\dfrac{x - [x]} {x^{s+1}} \,\mathit{dx}\right \vert \leq \int _{N}^{\infty } \dfrac{1} {x^{\sigma +1}}\,\mathit{dx} = \dfrac{1} {\sigma N^{\sigma }}, }$$

Euler’s summation formula for finite sumsFootnote 10 yields

$$\displaystyle{ \zeta (s) =\sum _{ n=1}^{N} \dfrac{1} {n^{s}} + \dfrac{N^{1-s}} {s - 1} - s\int _{N}^{\infty }\dfrac{x - [x]} {x^{s+1}} \,\mathit{dx},\quad \text{so}\quad \vert \zeta (s)\vert \leq \sum _{n=1}^{N} \dfrac{1} {n^{\sigma }} + \dfrac{N^{1-\sigma }} {\vert s - 1\vert } + \dfrac{\vert s\vert } {\sigma N^{\sigma }}. }$$

Straightforward calculations using the last inequality then show that when σ ≥ 1 and t ≥ 2, \(\vert \zeta (\sigma +\mathit{it})\vert \leq \ln t + 4\) and \(\vert \zeta ^{{\prime}}(\sigma +\mathit{it})\vert \leq \dfrac{1} {2}(\ln t + 3)^{2}\). Those inequalities can in turn be used to show that \(1/\vert \zeta (\sigma +\mathit{it})\vert \leq 4(\ln t + 5)^{7}\) for σ > 1 and t ≥ 2. (For details see Jameson 2003, pp. 108–109.)

10. The proof that ζ(s) ≠ 0 when Re s = 1 is carried out by a simplified argument based on Hadamard’s approach and later ideas of de la Vallée Poussin and Franz Mertens. It rests on the trigonometric identity \(0 \leq 2(1+\cos \theta )^{2} = 3 + 4\cos \theta +\cos (2\theta )\). For if a Dirichlet series \(\sum _{n=1}^{\infty }\dfrac{a(n)} {n^{s}}\) with non-negative real coefficients a(n) converges for \(\sigma > \sigma_{0}\) to a function f(s), then for such σ

$$\displaystyle{ 3f(\sigma ) + 4f(\sigma +\mathit{it}) + f(\sigma +2\mathit{it}) =\sum _{ n=1}^{\infty }\dfrac{a(n)} {n^{\sigma }} (3 + 4n^{-\mathit{it}} + n^{-2\mathit{it}}) }$$

has real part

$$\displaystyle{ \sum _{n=1}^{\infty }\dfrac{a(n)} {n^{\sigma }} \text{Re}(3 + 4n^{-\mathit{it}} + n^{-2\mathit{it}}) =\sum _{ n=1}^{\infty }\dfrac{a(n)} {n^{\sigma }} (3 + 4\cos (t\ln n) +\cos (2t\ln n)) \geq 0. }$$

In particular, by step 4 the Dirichlet series for \(\ln (\zeta (s))\) has non-negative coefficients and converges when σ > 1, so for such σ and all t,

$$\displaystyle\begin{array}{rcl} & & 3\ln \zeta (\sigma ) + 4\,\text{Re}\ln \zeta (\sigma +\mathit{it}) + \text{Re}\ln \zeta (\sigma +2\mathit{it}) {}\\ & & \phantom{3\ln \zeta (\sigma ) + 4\,\text{Re}\ln \zeta (\sigma +\mathit{it})} =\ln \left [\zeta (\sigma )^{3}\vert \zeta (\sigma +\mathit{it})\vert ^{4}\vert \zeta (\sigma +2\mathit{it})\vert \right ] \geq 0 {}\\ \end{array}$$

(because \(\text{Re}\,\ln z =\ln \vert z\vert \)), that is

$$\displaystyle{ \zeta (\sigma )^{3}\vert \zeta (\sigma +\mathit{it})\vert ^{4}\vert \zeta (\sigma +2\mathit{it})\vert \geq 1. }$$(53)

Suppose then that \(\zeta (1 + \mathit{it}_{0}) = 0\) for some \(t_{0} \neq 0\). Then

$$\displaystyle\begin{array}{rcl} & & \zeta (\sigma )^{3}\vert \zeta (\sigma +\mathit{it}_{ 0})\vert ^{4}\vert \zeta (\sigma +2\mathit{it}_{ 0})\vert \\ &&\phantom{\zeta (\sigma )^{3}\vert \zeta (\sigma +} = [(\sigma -1)\zeta (\sigma )]^{3}\left (\dfrac{\vert \zeta (\sigma +\mathit{it}_{0})\vert } {\sigma -1} \right )^{4}(\sigma -1)\vert \zeta (\sigma +2\mathit{it}_{ 0})\vert. {}\end{array}$$(54)

But as σ approaches 1+, (σ − 1)ζ(σ) approaches 1, while \(\dfrac{\vert \zeta (\sigma +\mathit{it}_{0})\vert } {\sigma -1}\) approaches \(\vert \zeta ^{{\prime}}(1 + \mathit{it}_{0})\vert \) and \(\zeta (\sigma +2\mathit{it}_{0})\) approaches the finite value \(\zeta (1 + 2\mathit{it}_{0})\). Because of the remaining factor σ − 1, (54) then shows that the product \(\zeta (\sigma )^{3}\vert \zeta (\sigma +\mathit{it}_{0})\vert ^{4}\vert \zeta (\sigma +2\mathit{it}_{0})\vert \) approaches 0, contrary to (53).

11. Cauchy’s integral theorem is used to evaluate three infinite contour integrals, namely

$$\displaystyle{ \dfrac{1} {2\pi i}\int _{c-i\infty }^{c+i\infty }\dfrac{x^{s}} {s^{2}} \,\mathit{ds} = S(x)\ln x\quad \quad \;\text{for}\;x > 0\;\text{and}\;c > 0 }$$(55)

$$\displaystyle{ \dfrac{1} {2\pi i}\int _{c-i\infty }^{c+i\infty } \dfrac{x^{s}} {s(s - 1)}\,\mathit{ds} = (x - 1)S(x)\quad \text{for}\;x > 0\;\text{and}\;c > 1 }$$(56)

$$\displaystyle{ \dfrac{1} {2\pi i}\int _{c-i\infty }^{c+i\infty } \dfrac{x^{s-1}} {s(s - 1)}f(s)\,\mathit{ds} =\int _{ 1}^{x}\dfrac{A(y)} {y^{2}} \,\mathit{dy}\quad \text{for}\;x > 1\;\text{and}\;c > 1\,, }$$(57)

where S(x) denotes the step function equal to 0 for x < 1 and 1 for x ≥ 1, and \(A(x) =\sum _{n\leq x}a(n)\) is the summation function corresponding to a Dirichlet series \(\sum _{n=1}^{\infty }a(n)/n^{s}\) that converges absolutely to f(s) whenever Re s > 1.

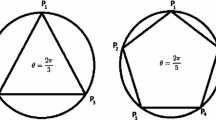

In all three cases the infinite contour integrals are obtained as the limit, as R approaches ∞, of integrals taken along the paths \(\Gamma _{1} = C_{1} \cup L_{R}\) or \(\Gamma _{2} = C_{2} \cup L_{R}\), where C 1 and C 2 are the arcs of the circle of radius R centered at s = 0 that lie, respectively, to the left and right of the vertical line segment L R with endpoints c − t R and c + t R on the circle. (See Figure 10.2.)

Fig. 10.2 The integrand of (55) may be rewritten as

$$\displaystyle{ \dfrac{x^{s}} {s^{2}} = \dfrac{e^{\mathit{ls}}} {s^{2}} = \dfrac{1} {s^{2}}\sum _{n=0}^{\infty }\dfrac{l^{n}s^{n}} {n!}, }$$where l = lnx. Since the exponential series converges uniformly on any closed interval, the integration may then be carried out term by term. For x ≥ 1 the path \(\Gamma _{1}\) is taken, enclosing the pole at s = 0 of x s∕s 2, where the residue is l. For 0 < x < 1 the path \(\Gamma _{2}\) is taken instead, which encloses no poles of x s∕s 2; so that integral is 0. In the first case, | s | = R and \(\vert x^{s}\vert = x^{\sigma } \leq x^{c}\) on C 1, so the integral along C 1 has absolute value less than or equal to \(\dfrac{1} {2\pi } \dfrac{x^{c}} {R^{2}}2\pi R = \dfrac{x^{c}} {R}\), which approaches 0 as R approaches ∞. In the second case, \(x^{\sigma } \leq x^{c}\) on C 2 as well, since for \(0 < x < 1,x^{\sigma }\) decreases as σ increases. The integral along C 2 thus also approaches 0 as R approaches ∞. The proof of (56) is similar, using \(\vert s(s - 1\vert \geq R(R - 1)\) and writing \(\dfrac{x^{s}} {s(s - 1)}\) as \(\dfrac{x^{s}} {s - 1} -\dfrac{x^{s}} {s}\).

To obtain (57), first note that \(x^{s}f(s)=\sum _{n\leq x}a(n)\left (\dfrac{x} {n}\right )^{s}+\sum _{n>x}a(n)\left (\dfrac{x} {n}\right )^{s}\). The first sum is finite, and so can be integrated term by term. By (56), the result is \(\sum _{n\leq x}a(n)S\left (\dfrac{x} {n}\right )\left (\dfrac{x} {n} - 1\right ) =\sum _{n\leq x}a(n)\left (\dfrac{x} {n} - 1\right )\). The second sum, on the other hand, is an analytic function of s that for Re s ≥ c is bounded by \(\sum _{n>x}\vert a(n)\vert (x/n)^{c}\). By Cauchy’s theorem, its integral over \(\Gamma _{2}\) is zero, and by the same reasoning as before, its integral over \(C_{2}\) tends to zero as R approaches ∞. Consequently, after dividing by x,

$$\displaystyle{ \dfrac{1} {2\pi i}\int _{c-i\infty }^{c+i\infty } \dfrac{x^{s-1}} {s(s - 1)}f(s)\,\mathit{ds} =\sum _{n\leq x}a(n)\left ( \dfrac{1} {n} -\dfrac{1} {x}\right ). }$$

By Abel summation applied to the function 1∕y, the last sum is equal to \(\int _{1}^{x}A(y)/y^{2}\,\mathit{dy}\).

12. Finally, the Prime Number Theorem in the form \(\lim _{x\rightarrow \infty }\dfrac{\psi (x)} {x} = 1\) is obtained as a special case of the following much more general result.

Theorem:

Suppose the function f(s) is analytic throughout the region Re s ≥ 1, except perhaps at s = 1, and satisfies the following conditions:

(C1) \(f(s) =\sum _{ n=1}^{\infty }\dfrac{a(n)} {n^{s}}\) converges absolutely when Re s > 1.

(C2) \(f(s) = \dfrac{\alpha } {s - 1} + \alpha _{0} + (s - 1)h(s)\), where h is differentiable at s = 1.

(C3) There is a function P(t) such that \(\vert f(\sigma +\mathit{it})\vert \leq P(t)\) for σ ≥ 1 and \(t \geq t_{0} \geq 1\), and \(\int _{1}^{\infty }P(t)/t^{2}\,\mathit{dt}\) is convergent.

Then \(\int _{1}^{\infty }\dfrac{A(x) -\alpha x} {x^{2}} \,\mathit{dx}\) converges to \(\alpha _{0}-\alpha\), where \(A(x) =\sum _{n\leq x}a(n)\).

If, furthermore, A(x) is increasing and non-negative, then \(\lim _{x\rightarrow \infty }\dfrac{A(x)} {x} =\alpha\).

To prove the first claim, note that \(\dfrac{1} {s - 1} = \dfrac{s} {s - 1} - 1\), so \((s - 1)h(s) = f(s) -\alpha \dfrac{s} {s - 1} - (\alpha _{0}-\alpha )\), and \(\vert (s - 1)h(s)\vert \leq P(t) + \vert \alpha \vert + \vert \alpha _{0}\vert \) when σ ≥ 1 and \(\vert t\vert \geq t_{0}\). Then for x > 1 and c > 1, (55), (56), and (57) give

Careful examination (see Jameson 2003, p. 121) shows that the result just obtained also holds for c = 1. That is,

The path of integration for the contour integral on the left is the vertical line Re s = 1, where \(s = 1 + \mathit{it}\), so that integral may be rewritten as

\(\left (\text{which converges absolutely since}\left \vert \dfrac{h(s)} {s} \right \vert \leq \dfrac{P(t) + \vert \alpha \vert + \vert \alpha _{0}\vert } {t^{2}} \right )\). That the latter integral approaches 0 as x approaches ∞ then follows from the Riemann-Lebesgue lemma, which states that

If ϕ(t) is a continuously differentiable function from \(\mathbb{R}\) to \(\mathbb{C}\) and \(\int _{-\infty }^{+\infty }\vert \phi (t)\vert \,\mathit{dt}\) converges, then the integral

approaches 0 as λ approaches ∞.

The convergence of \(\int _{1}^{\infty }(A(x) -\alpha x)/x^{2}\,\mathit{dx}\) implies the remaining claim (that if A(x) is increasing and non-negative, then \(\lim _{x\rightarrow \infty }\dfrac{A(x)} {x} =\alpha\)). For such convergence means that for any δ > 0, there is an R such that

Then for 0 < δ < 1∕2, the assumption either that \(A(x_{0}) > (1+\delta )x_{0}\) for some \(x_{0} > R\) (and hence that \(A(x) > (1+\delta )x_{0}\) for all \(x \geq x_{0}\), since A(x) is increasing), or that \(A(x_{0}) < (1-\delta )x_{0}\) for some \(x_{0} \geq 2R\), leads to a contradiction. (See Jameson 2003, p. 131.)

The Prime Number Theorem follows from the Theorem by taking \(f(s) = -\dfrac{\zeta ^{{\prime}}(s)} {\zeta (s)}\), which by steps 3, 7, and 10 is analytic throughout Re s ≥ 1 except at s = 1. For the result of step 5 shows that \(f(s) =\sum _{ n=1}^{\infty }\dfrac{\Lambda (n)} {n^{s}}\) (whence (C1) is satisfied); \(\psi (x) =\sum _{n\leq x}\Lambda (n)\) is increasing and non-negative; (C2) holds by the third equation in step 8, with α = 1; and the inequalities found in step 9 show that \(\vert f(s)\vert = \vert f(\sigma +\mathit{it})\vert \leq P(t) = 2(\ln t + 5)^{9}\). Since \(\int _{1}^{\infty }P(t)/t^{2}\,\mathit{dt}\) converges, (C3) is thus also satisfied.
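Several of the numbered steps above can be spot-checked numerically. The Python sketch below is only an illustration under our own choices (hypothetical helper names, truncation points picked arbitrarily). It checks step 3 at s = 2, where \(1/\zeta(2) = 6/\pi^{2}\). It builds Λ(n) from the coefficients c(n) of step 4 as in step 5. It verifies the Abel summation formula of step 6 exactly for a finite sum, evaluating the integral piece by piece. It evaluates Landau’s integral of step 7 to approximate γ. Finally, it encodes the trigonometric identity behind step 10. It proves nothing about ζ(s) on the line Re s = 1.

```python
import math


def mobius_sieve(n):
    """Return mu with mu[k] = μ(k) for 1 <= k <= n (linear sieve); mu[0] is unused."""
    mu = [0] + [1] * n
    is_composite = [False] * (n + 1)
    primes = []
    for i in range(2, n + 1):
        if not is_composite[i]:
            primes.append(i)
            mu[i] = -1
        for p in primes:
            if i * p > n:
                break
            is_composite[i * p] = True
            if i % p == 0:  # p^2 divides i*p
                mu[i * p] = 0
                break
            mu[i * p] = -mu[i]
    return mu


def c_coeff(n):
    """c(n) of step 4: 1/m if n = p**m for a prime p, and 0 otherwise."""
    if n < 2:
        return 0.0
    p = next(d for d in range(2, n + 1) if n % d == 0)  # smallest prime factor
    m = 0
    while n % p == 0:
        n //= p
        m += 1
    return 1.0 / m if n == 1 else 0.0


def von_mangoldt(n):
    """Λ(n) = c(n) ln n, as in step 5."""
    return c_coeff(n) * math.log(n) if n >= 2 else 0.0


def abel_sides(a, X, s):
    """Both sides of the Abel summation formula of step 6, for integer X >= 2.

    A(x) is constant on [n, n+1), so s * ∫_n^{n+1} A(x) x^(-s-1) dx
    equals A(n) * (n^(-s) - (n+1)^(-s)) exactly.
    """
    A = 0.0
    lhs = 0.0
    integral_term = 0.0
    for n in range(1, X + 1):
        A += a(n)
        lhs += a(n) / n**s
        if n < X:
            integral_term += A * (n**-s - (n + 1) ** -s)
    return lhs, A / X**s + integral_term


def landau_gamma(N):
    """1 - ∫_1^N (x - [x])/x^2 dx, which tends to Euler's constant (step 7).

    On [n, n+1) the integrand is (x - n)/x^2, whose integral is
    ln((n+1)/n) - 1/(n+1).
    """
    integral = sum(math.log1p(1 / n) - 1 / (n + 1) for n in range(1, N))
    return 1 - integral


def three_four_one(theta):
    """3 + 4 cos θ + cos 2θ, which equals 2(1 + cos θ)^2 >= 0 (step 10)."""
    return 3 + 4 * math.cos(theta) + math.cos(2 * theta)


if __name__ == "__main__":
    mu = mobius_sieve(10_000)
    print(sum(mu[n] / n**2 for n in range(1, 10_001)), 6 / math.pi**2)  # step 3, s = 2
    print(abel_sides(lambda n: mu[n], 1_000, 2.0))                        # step 6
    print(landau_gamma(200_000))                                          # step 7
```

The truncated Möbius sum differs from \(6/\pi^{2}\) by less than 1/N, and `landau_gamma(N)` equals \(H_{N} - \ln N\) exactly, so it approaches γ at rate about 1/(2N).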

10.5 Tauberian theorems and Newman’s proof

The Theorem stated in the previous section is an example of a so-called Tauberian theorem, broadly defined (as in Edwards 1974, p. 279) as a theorem that “permits a conclusion about one kind of average [in this case, A(x)∕x] given information about another kind of average [here, the integral from 1 to ∞ of \((A(x) -\alpha x)/x^{2}\)].”

The Prime Number Theorem was first deduced from a Tauberian theorem by Edmund Landau in his paper Landau (1908).Footnote 11 Seven years later Hardy and Littlewood gave another such proof (Hardy and Littlewood 1915), and in the 1930s Tauberian theorems based on Norbert Wiener’s methods in the theory of Fourier transforms were employed to deduce the Prime Number Theorem from the non-vanishing of ζ(s) on the line Re s = 1. In particular, the Prime Number Theorem was so deduced using

The Wiener-Ikehara Theorem:

Suppose that f is a non-decreasing real-valued function on [1, ∞) for which \(\int _{1}^{\infty }\vert f(u)\vert u^{-\sigma -1}\,\mathit{du}\) converges for all real σ > 1. If in addition \(\int _{1}^{\infty }f(u)u^{-s-1}\,\mathit{du} =\alpha /(s - 1) + g(s)\) for some real α, where g(s) is the restriction to Re s > 1 of a function that is continuous on Re s ≥ 1, then \(\lim _{u\rightarrow \infty }f(u)/u =\alpha\).

But proofs of the Wiener-Ikehara Theorem are themselves difficult.

In 1935 A.E. Ingham proved another Tauberian theorem that related the Laplace transform \(\int _{0}^{\infty }f(t)e^{-\mathit{zt}}\,\mathit{dt}\) of a function f(t) defined on [0, ∞) to the integral of f(t) itself over that interval.Footnote 12 But that proof, too, was complicated, and was also based on results from Fourier analysis (Ingham 1935). In 1980, however, D.J. Newman found a way to prove a variant of Ingham’s theorem and to derive the Prime Number Theorem from it without resort either to Fourier techniques or to contour integrals over infinite paths.

Newman’s original proof (Newman 1980) was couched in terms of Dirichlet series: he proved that if \(\sum _{n=1}^{\infty }a_{n}n^{-s}\) converges to an analytic function f(s) for all s with \(\text{Re}\,s > 1\), if \(\vert a_{n}\vert \leq 1\) for every n, and if f(s) is also analytic when Re s = 1, then \(\sum _{n=1}^{\infty }a_{n}n^{-s}\) converges for all s with Re s = 1. Subsequent refinements of that proof, as given in Korevaar (1982), Zagier (1997), chapter 7 of Lax and Zalcman (2012), and Jameson (2003), recast it as an alternative proof of Ingham’s Tauberian theorem. The formulation of that result given in Lax and Zalcman (2012) reads:

Let f be a bounded measurable function on [0,∞). Suppose that the Laplace transform

$$\displaystyle{ g(z) =\int _{0}^{\infty }f(t)e^{-\mathit{zt}}\,\mathit{dt}, }$$

which is defined and analytic on the open half plane {z: Re z > 0}, extends analytically to an open set containing {z: Re z ≥ 0}. Then the improper integral \(\int _{0}^{\infty }f(t)\,\mathit{dt} =\lim _{T\rightarrow \infty }\int _{0}^{T}f(t)\,\mathit{dt}\) converges and coincides with g(0), the value of the analytic extension of g at z = 0.

The proof there proceeds as follows:

Say |f(t)| ≤ M for all t ≥ 0. For T > 0 let \(g_{T}(z)\) be the entire function defined by \(\int _{0}^{T}f(t)e^{-\mathit{zt}}\,\mathit{dt}\). The theorem then asserts that \(\lim _{T\rightarrow \infty }\vert g(0) - g_{T}(0)\vert = 0\).

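To establish that, choose R > 0 and then δ(R) > 0 sufficiently small that g is analytic throughout the region \(D =\{ z: \vert z\vert \leq R\ \text{and Re}\ z \geq -\delta (R)\}\). Let \(\Gamma \) be the boundary of D (shown as the solid curve in Figure 10.3), traversed counterclockwise, and consider

$$\displaystyle{ \dfrac{1} {2\pi i}\int _{\Gamma }\left (g(z) - g_{T}(z)\right )e^{zT}\left (1 + \dfrac{z^{2}} {R^{2}}\right )\dfrac{\mathit{dz}} {z}. }$$(58)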

By Cauchy’s theorem, the value of (58) is \(g(0) - g_{T}(0)\). Writing σ = Re z, if σ > 0 then

Also, when | z | = R,

Now let \(\Gamma _{+}\) be the semicircle \(\Gamma \cap \{\text{Re}\,z > 0\}\), let \(\Gamma _{-}\) denote \(\Gamma \cap \{\text{Re}\,z < 0\}\), and let \(\Gamma _{-}^{{\prime}}\) be the semicircle \(\{z: \vert z\vert = R\,\,\text{and Re}\,z < 0\}\). By (59) and (60), for \(z \in \Gamma _{+}\), the absolute value of the integrand in (58) is bounded by \(2M/R^{2}\), so

For \(z \in \Gamma _{-}\), first consider \(g_{T}\). Because \(g_{T}\) is entire, its integral over \(\Gamma _{-}\) is the same as its integral over \(\Gamma _{-}^{{\prime}}\). Since for σ < 0,

it follows from (60) that

Next consider g(z). It is analytic on \(\Gamma _{-}\), so the quantity \(\left \vert g(z)\left (1 + \dfrac{z^{2}} {R^{2}}\right )\dfrac{1} {z}\right \vert \) is bounded on \(\Gamma _{-}\) by some constant K (whose value depends on δ and R). Likewise, \(e^{zT}\) is bounded on \(\Gamma _{-}\), and converges uniformly to 0 on compact subsets of {Re z < 0} as T approaches ∞. Consequently,

Recalling that g(0) − g T (0) is given by the integral in (58), it follows from (61), (63), and (64) that

Since that holds for arbitrarily large values of R, \(\lim _{T\rightarrow \infty }\vert g(0) - g_{T}(0)\vert = 0\).

The Prime Number Theorem in the form \(\lim _{x\rightarrow \infty }\psi (x)/x = 1\) is then deduced, as in the previous section, from the convergence of the improper integral \(\int _{1}^{\infty }[\psi (x) - x]/x^{2}\,\mathit{dx}\). That, in turn, follows from Ingham’s Tauberian theorem by setting \(x = e^{t}\) and taking \(f(t) =\psi (e^{t})e^{-t} - 1\). For, by equation (51) above,

so

Chebyshev’s upper bound for ψ(x) shows that f(t) is bounded, and the Laurent series for \(\zeta ^{{\prime}}(s)/\zeta (s)\) given in step 8 of the previous section, together with the non-vanishing of ζ(s) on the line Re s = 1 established in step 10, shows that the expression in the last member of the equation above can be extended to an analytic function on the half plane Re s ≥ 0. Hence the hypotheses of Ingham’s theorem are satisfied.
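A numerical look at the integral whose convergence drives this argument: the Python sketch below (our own function names) evaluates \(\int_{1}^{X}[\psi(x) - x]/x^{2}\,dx\) exactly for integer X, using the fact that ψ is a step function. By the Theorem of the previous section the limit should be \(\alpha_{0} - \alpha\) with α = 1 and \(\alpha_{0} = -a_{0}\), and a short computation from the first Laurent series in step 8 gives \(a_{0} = \gamma\), so the values should drift toward \(-1-\gamma \approx -1.5772\).

```python
import math


def psi_table(n):
    """Return psi with psi[k] = ψ(k) = sum of Λ(m) over m <= k, for 0 <= k <= n."""
    lam = [0.0] * (n + 1)
    is_composite = bytearray(n + 1)
    for p in range(2, n + 1):
        if is_composite[p]:
            continue
        for multiple in range(p * p, n + 1, p):
            is_composite[multiple] = 1
        power = p
        while power <= n:  # Λ(p^m) = ln p
            lam[power] = math.log(p)
            power *= p
    psi = [0.0] * (n + 1)
    for k in range(1, n + 1):
        psi[k] = psi[k - 1] + lam[k]
    return psi


def chebyshev_integral(X):
    """∫_1^X (ψ(x) - x)/x^2 dx for integer X >= 2, evaluated exactly.

    ψ is constant on [n, n+1), so ∫_n^{n+1} ψ(n)/x^2 dx = ψ(n)(1/n - 1/(n+1)),
    while ∫_1^X x/x^2 dx = ln X.
    """
    psi = psi_table(X)
    step_part = sum(psi[n] * (1 / n - 1 / (n + 1)) for n in range(1, X))
    return step_part - math.log(X)


if __name__ == "__main__":
    gamma = 0.5772156649015329
    for X in (10**3, 10**4, 10**5):
        print(X, chebyshev_integral(X), -1 - gamma)
```

The approach to the limit is slow and irregular, reflecting the fluctuations of ψ(x) − x; the computation illustrates, but does not establish, the convergence.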

10.6 Elementary proofs

In 1909 Edmund Landau published an influential handbook (Landau 1909) that “presented in accessible form nearly everything that was then known about the distribution of prime numbers” (Bateman and Diamond 1996, p. 737). It popularized use of the O-notationFootnote 13 in statements concerning growth rates of functions, and drew attention to the power of complex-analytic methods in number theory.

In particular, in contrast to the elementary methods of Chebyshev, those of complex analysis had yielded the Prime Number Theorem. The question thus arose: Were such methods essential to the proof of that theorem?

Many leading number theorists came to believe that they were. G.H. Hardy, for example, in an address to the Mathematical Society of Copenhagen in 1921, declared

“No elementary proof of the prime number theorem is known, and one may ask whether it is reasonable to expect one. [For] …we know that … theorem is roughly equivalent to … the theorem that Riemann’s zeta function has no roots on a certain line.Footnote 14 A proof of such a theorem, not fundamentally dependent on the theory of functions, [thus] seems to me extraordinarily unlikely.” (Quoted from Goldfeld 2004.)

In 1948, however, Atle Selberg and Paul Erdős, independently but each using results of the other, found ways to prove the Prime Number Theorem without reference to the ζ-function or complex variables and without resort to methods of Fourier analysis. Their proofs, however, were ‘elementary’ only in that technical sense. Indeed, in Edwards (1974) Harold Edwards expresses the widely shared opinion that “Since 1949 many variations, extensions and refinements of [Selberg’s and Erdős’s] elementary proof[s] have been given, but none of them seems very straightforward or natural, nor does any of them give much insight into the theorem.” Furthermore, as noted in Jameson (2003), p. 207, no elementary proof so far devised has given error estimates for the approximation of π(x) by li(x) that are “as strong as that of de la Vallée Poussin.” The interest in such proofs would thus seem to stem primarily from concern for purity of method.

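The basis of most elementary proofs of the Prime Number Theorem is a growth estimate for a logarithmic summation found by Selberg. In one form it states that for x > 1

$$\displaystyle{ \sum _{p\leq x}\ln ^{2}p +\sum _{\mathit{pq}\leq x}\ln p\ln q = 2x\ln x + O(x), }$$(65)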

where p and q denote prime numbers. Other statements equivalent to (65), shown to be so in Diamond (1982), p. 566 and Jameson (2003), pp. 214–215, are

in which the symbol ∗ denotes the Dirichlet convolution operation on arithmetic sequences, defined by \((a {\ast} b)(n) =\sum _{\mathit{jk}=n}a(j)b(k) =\sum _{j\vert n}a(j)b(n/j)\).
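The Dirichlet convolution just defined is easy to compute directly, which makes Selberg’s estimate open to numerical inspection. The Python sketch below (hypothetical helper names) computes \(\Lambda {\ast} \Lambda\) and then the normalized error \(\bigl(\sum_{n\leq x}[\Lambda(n)\ln n + (\Lambda {\ast} \Lambda)(n)] - 2x\ln x\bigr)/x\). We assume the standard form \(\sum_{n\leq x}[\Lambda(n)\ln n + (\Lambda {\ast} \Lambda)(n)] = 2x\ln x + O(x)\) of Selberg’s formula, under which this quantity should stay bounded. A finite computation can only suggest, never prove, an O(x) bound.

```python
import math


def von_mangoldt_table(n):
    """Return lam with lam[k] = Λ(k) for 0 <= k <= n (lam[0] and lam[1] are 0)."""
    lam = [0.0] * (n + 1)
    is_composite = bytearray(n + 1)
    for p in range(2, n + 1):
        if is_composite[p]:
            continue
        for multiple in range(p * p, n + 1, p):
            is_composite[multiple] = 1
        power = p
        while power <= n:  # Λ(p^m) = ln p
            lam[power] = math.log(p)
            power *= p
    return lam


def dirichlet_convolution(a, b, n):
    """Return c with c[m] = (a * b)(m) = sum of a[j] b[k] over jk = m, for 1 <= m <= n."""
    c = [0.0] * (n + 1)
    for j in range(1, n + 1):
        if a[j] == 0:
            continue  # this j contributes nothing
        for k in range(1, n // j + 1):
            c[j * k] += a[j] * b[k]
    return c


def selberg_ratio(x):
    """(sum over n <= x of [Λ(n) ln n + (Λ*Λ)(n)] - 2x ln x) / x, for integer x >= 2."""
    lam = von_mangoldt_table(x)
    conv = dirichlet_convolution(lam, lam, x)
    total = sum(lam[n] * math.log(n) + conv[n] for n in range(1, x + 1))
    return (total - 2 * x * math.log(x)) / x


if __name__ == "__main__":
    for x in (10**3, 10**4, 10**5):
        print(x, round(selberg_ratio(x), 3))
```

As a check on the convolution itself, convolving the constant sequence 1 with Λ should reproduce ln n, since \(\sum_{d\vert n}\Lambda(d) = \ln n\).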

Selberg’s original proof derived the equation \(\lim _{x\rightarrow \infty }\theta (x)/x = 1\) from (66) using a consequence of (66) discovered by Erdős. In particular, denoting \(\liminf \theta (x)/x\) by a and \(\limsup \theta (x)/x\) by A, Selberg deduced from (66) that \(a + A = 2\). Meanwhile, unaware of that fact, Erdős used (66) to show that for any δ > 0 there is a constant K(δ) such that for sufficiently large values of x there are more than K(δ)x∕ln x primes in the interval (x, x + δx). Erdős communicated his proof of that fact to Selberg, who then, via an intricate argument, used Erdős’s result to prove that A ≤ a. Consequently, \(A = a = 1.\) (See Erdős 1949 for details of all those proofs. The proof given in Selberg 1949 is a later, more direct one that does not use Erdős’s result.Footnote 15)

The most accessible elementary proof of the Prime Number Theorem is probably that in Levinson (1969), whose very title (“A motivated elementary proof of the Prime Number Theorem”) suggests that the strategies underlying elementary proofs of that theorem are not perspicuous.Footnote 16 Variants of Levinson’s proof are also given in Edwards (1974) and Jameson (2003). Here, in outline, is the structure of the latter version:

1. The goal is to show that \(\lim _{x\rightarrow \infty }\psi (x)/x = 1\). Toward that end, three related functions whose behavior is easier to study are defined, namely

$$\displaystyle{ R(x) = \left \{\begin{array}{@{}l@{\quad }l@{}} 0 \quad &\mbox{ if $x < 1$}\\ \psi (x) - x\quad &\mbox{ if $x \geq 1$}\end{array} \right.,\quad S(x) =\int _{ 0}^{x}\dfrac{R(t)} {t} \,\mathit{dt}\quad \text{and}\quad W(x) = \dfrac{S(e^{x})} {e^{x}}. }$$

Then \(\lim _{x\rightarrow \infty }\psi (x)/x = 1\) if and only if \(\lim _{x\rightarrow \infty }R(x)/x = 0\).

2. It follows from Chebyshev’s result ψ(x) ≤ 2x that |R(x)| ≤ x for x > 0. Furthermore, \(\int _{1}^{x}R(t)/t^{2}\,\mathit{dt} =\int _{ 1}^{x}\psi (t)/t^{2}\,\mathit{dt} -\ln x\), and Abel summation yields

$$\displaystyle{ \int _{1}^{x}\dfrac{\psi (t)} {t^{2}} \,\mathit{dt} =\sum _{n\leq x}\dfrac{\Lambda (n)} {n} -\dfrac{\psi (x)} {x}. }$$

On the other hand, Mertens in 1874 applied Chebyshev’s bound to obtain that \(\sum _{n\leq x}\Lambda (n)/n =\ln x + O(1)\). (See Jameson 2003, p. 90 for details.) Consequently, applying Chebyshev’s bound once more, \(\int _{1}^{x}R(t)/t^{2}\,\mathit{dt}\) is bounded for x > 1.

3. Since, by step 2, the absolute value of the integrand in the definition of S is bounded by 1, S satisfies the Lipschitz condition \(\vert S(x_{2}) - S(x_{1})\vert \leq x_{2} - x_{1}\) for \(x_{2} > x_{1} > 0\). That, in turn, together with the inequality \(e^{-x} \geq 1 - x\), shows that W likewise satisfies the Lipschitz condition \(\vert W(x_{2}) - W(x_{1})\vert \leq 2(x_{2} - x_{1})\) for \(x_{2} > x_{1} > 0\).

4. The Lipschitz condition on S gives |S(x)| ≤ x for x > 0, that is, \(\left \vert S(x)/x\right \vert \leq 1\) for x > 0. Then |W(x)| ≤ 1 and

$$\displaystyle\begin{array}{rcl} \int _{1}^{x}\dfrac{S(t)} {t^{2}} \,\mathit{dt}& =& \int _{1}^{x} \dfrac{1} {t^{2}}\int _{1}^{t}\dfrac{R(u)} {u} \,\mathit{du}\,\mathit{dt} {}\\ & =& \int _{1}^{x}\dfrac{R(u)} {u} \int _{u}^{x} \dfrac{1} {t^{2}}\,\mathit{dt}\,\mathit{du} {}\\ & =& \int _{1}^{x}\dfrac{R(u)} {u} \left (\dfrac{1} {u} -\dfrac{1} {x}\right )\,\mathit{du} {}\\ & =& \int _{1}^{x}\dfrac{R(u)} {u^{2}} \,\mathit{du} -\dfrac{S(x)} {x} \,, {}\\ \end{array}$$

which is bounded for all x > 1 by the result of step 2 above. Consequently, since the substitution \(t = e^{v}\) turns \(\int _{1}^{e^{x}}S(t)/t^{2}\,\mathit{dt}\) into \(\int _{0}^{x}W(v)\,\mathit{dv}\), the integral \(\int _{0}^{x}W(t)\,\mathit{dt}\) is bounded for all x > 0.

5. Straightforward arguments with inequalities yield the following Tauberian theorem:

$$\displaystyle{ \begin{array}{ll} &\mathit{If }\ A(x) \geq 0,A(x)\ \mathit{is\ increasing\ for}\ x > 1,\ \mathit{and}\ \dfrac{1} {x}\int _{1}^{x}\dfrac{A(t)} {t} \,\mathit{dt} \rightarrow 1\ \mathit{as}\ x \rightarrow \infty, \\ &\mathit{then}\ \dfrac{A(x)} {x} \rightarrow 1\ \mathit{as}\ x \rightarrow \infty.\end{array} }$$

Then since \(S(x)/x = (1/x)\int _{1}^{x}\psi (t)/t\,\mathit{dt} - 1 + 1/x\), taking A(x) = ψ(x) in the Tauberian theorem shows that to prove that ψ(x)∕x → 1 as x → ∞ it suffices to prove that S(x)∕x → 0 as x → ∞.

6. Equivalently, it suffices to show that W(x) → 0 as x → ∞. For that purpose, let

$$\displaystyle{ \alpha =\limsup _{x\rightarrow \infty }\vert W(x)\vert \leq 1\quad \text{and}\quad \beta =\limsup _{x\rightarrow \infty }\dfrac{1} {x}\int _{0}^{x}\vert W(t)\vert \,\mathit{dt}. }$$

Then β ≤ α. Crucially, however,

$$\displaystyle{ \beta =\alpha \quad \text{only if}\quad \alpha = 0; }$$(70)

so to prove the Prime Number Theorem it suffices to show that α ≤ β.

To prove (70), assume α > 0. Since \(\int _{0}^{x}W(t)\,\mathit{dt}\) is bounded for all x > 0, there is a constant B such that \(\vert \int _{x_{1}}^{x_{2}}W(t)\,\mathit{dt}\vert \leq B\) for all \(x_{2} > x_{1} > 0\). Also, by the definition of α, for any a > α there is some \(x_{a}\) such that |W(x)| ≤ a for \(x > x_{a}\). So suppose α < a ≤ 2α and consider \(\int _{x_{1}}^{x_{1}+h}\vert W(t)\vert \,\mathit{dt}\), where \(x_{1} \geq x_{a}\) and h ≥ 2α is to be determined. If W(x) changes sign within the interval \([x_{1}, x_{1} + h]\), the intermediate-value theorem yields the existence of a point z in that interval where W(z) = 0. The Lipschitz condition on W then gives |W(x)| ≤ 2|x − z|, and since h ≥ a, at least one of the points \(z \pm \dfrac{a} {2}\) lies between \(x_{1}\) and \(x_{1} + h\). So either the interval \([z,z + a/2]\) lies within \([x_{1}, x_{1} + h]\) or \([z - a/2,z]\) does. Whichever does, call it I. (If both do, pick one.) Then \(\int _{I}\vert W(x)\vert \,\mathit{dx} \leq \int _{I}2\vert x - z\vert \,\mathit{dx} = a^{2}/4\). The part of \([x_{1},x_{1} + h]\) lying outside I has length \(h - a/2\), and there |W(x)| ≤ a. So, finally,

$$\displaystyle{ \int _{x_{1}}^{x_{1}+h}\vert W(x)\vert \,\mathit{dx} \leq \dfrac{a^{2}} {4} + a\left (h -\dfrac{a} {2}\right ) = a\left (h -\dfrac{a} {4}\right ) < a\left (h - \dfrac{\alpha } {4}\right ). }$$(71)

By choosing h to be the greater of 2α and \(B/\alpha +\alpha /4\), (71) can be ensured to hold as well if W(x) does not change sign within \([x_{1}, x_{1} + h]\), since then \(\int _{x_{1}}^{x_{1}+h}\vert W(x)\vert \,\mathit{dx} = \vert \int _{x_{1}}^{x_{1}+h}W(x)\,\mathit{dx}\vert \leq B < a\left (h - \dfrac{\alpha } {4}\right )\).

To complete the proof, note that for any \(x \geq x_{1} + h\) there is an integer n such that \(x_{a} + \mathit{nh} \leq x < x_{a} + (n + 1)h\). Then

$$\displaystyle\begin{array}{rcl} \int _{0}^{x}\vert W(t)\vert \,\mathit{dt}& \leq &\int _{ 0}^{x_{a} }\vert W(t)\vert \,\mathit{dt} + (n + 1)\left (h - \dfrac{\alpha } {4}\right )a {}\\ & =& C + (n + 1)\left (h - \dfrac{\alpha } {4}\right )a, {}\\ \end{array}$$

where C is constant and n → ∞ as x does. Hence, since x > nh,

$$\displaystyle{\dfrac{1} {x}\int _{0}^{x}\vert W(t)\vert \,\mathit{dt} \leq \dfrac{C} {x} + \left (1 + \dfrac{1} {n}\right )\left (1 - \dfrac{\alpha } {4h}\right )a.}$$

As x → ∞, the right member of that inequality approaches \((1 -\alpha /(4h))a\). Consequently \(\beta \leq (1 -\alpha /(4h))a\) for every a with α < a ≤ 2α, and h does not depend on a. Letting a decrease to α therefore gives \(\beta \leq (1 -\alpha /(4h))\alpha <\alpha\).

-

7.

It is at this point that Selberg’s inequality enters in. In Levinson (1969) and Jameson (2003) that inequality, in the forms (68) and (69), is derived as a corollary to the Tatuzawa-Iseki identity, which states that if F is a function defined on the interval [1, ∞) and \(G(x) =\sum _{n\leq x}F(x/n)\), then for x ≥ 1:

$$\displaystyle{ \sum _{k\leq x}\mu (k)\ln \dfrac{x} {k}G\left (\dfrac{x} {k}\right ) = F(x)\ln x +\sum _{n\leq x}\Lambda (n)F\left (\dfrac{x} {n}\right ), } \tag{72}$$

where μ denotes the Möbius function and \(\Lambda \) the von Mangoldt function.

The Tatuzawa-Iseki identity is a variant of the Möbius inversion formula, which states that under the same hypotheses, \(F(x) =\sum _{n\leq x}\mu (n)G(x/n)\). It is obtained in the following steps:

- multiply the Möbius formula by ln x to get the first term in the right member of (72);
- rewrite the factor \(\ln (x/k)\) in the left member as \(\ln x -\ln k\);
- replace \(G\left (\dfrac{x} {k}\right )\) in \(\sum _{k\leq x}\mu (k)\ln k\,G\left (\dfrac{x} {k}\right )\) by \(\sum _{j\leq x/k}F\left ( \dfrac{x} {\mathit{jk}}\right )\);
- interchange the order of summation in the double sum;
- express the result in terms of \(\Lambda (n)\) by means of the identity \(\sum _{d\mid n}\mu (d)\ln d = -\Lambda (n)\).Footnote 17
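The identity (72) and the Möbius inversion formula can be checked numerically for a sample F. The following Python sketch is only an illustration. The test function F(t) = √t + cos t, the values of x, and all helper names are arbitrary choices made here; they are not taken from Levinson or Jameson.

```python
import math


def mobius_and_mangoldt(N):
    """Sieve the Möbius function mu[n] and the von Mangoldt function lam[n] for 0 <= n <= N."""
    mu = [1] * (N + 1)
    mu[0] = 0
    lam = [0.0] * (N + 1)
    composite = [False] * (N + 1)
    for p in range(2, N + 1):
        if composite[p]:
            continue
        for m in range(2 * p, N + 1, p):
            composite[m] = True
        for m in range(p, N + 1, p):
            mu[m] = -mu[m]              # one sign flip per distinct prime factor
        for m in range(p * p, N + 1, p * p):
            mu[m] = 0                   # divisible by p^2: not squarefree
        pk = p
        while pk <= N:                  # Λ(p^k) = ln p
            lam[pk] = math.log(p)
            pk *= p
    return mu, lam


def G(F, x):
    """G(x) = Σ_{n <= x} F(x/n)."""
    return sum(F(x / n) for n in range(1, math.floor(x) + 1))


def tatuzawa_iseki_lhs(F, x, mu):
    """Left member of (72): Σ_{k <= x} μ(k) ln(x/k) G(x/k)."""
    return sum(mu[k] * math.log(x / k) * G(F, x / k)
               for k in range(1, math.floor(x) + 1) if mu[k] != 0)


def tatuzawa_iseki_rhs(F, x, lam):
    """Right member of (72): F(x) ln x + Σ_{n <= x} Λ(n) F(x/n)  (Λ(1) = 0)."""
    return F(x) * math.log(x) + sum(lam[n] * F(x / n)
                                    for n in range(2, math.floor(x) + 1))


def mobius_inverse(F, x, mu):
    """Möbius inversion: Σ_{n <= x} μ(n) G(x/n), which should reproduce F(x)."""
    return sum(mu[n] * G(F, x / n)
               for n in range(1, math.floor(x) + 1) if mu[n] != 0)


def F(t):
    """An arbitrary test function on [1, ∞)."""
    return math.sqrt(t) + math.cos(t)


mu, lam = mobius_and_mangoldt(1000)
for x in (2.5, 10.0, 97.3, 300.0):
    print(x, tatuzawa_iseki_lhs(F, x, mu), tatuzawa_iseki_rhs(F, x, lam),
          F(x), mobius_inverse(F, x, mu))
```

The two members of (72) agree up to floating-point rounding for every x tried, and the inversion formula recovers F(x). The identity itself places no restriction on F.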

To obtain inequality (68), the Tatuzawa-Iseki identity is applied with \(F(x) = R(x) +\gamma +1\), where γ is Euler’s constant. One proves that then \(\vert G(x)\vert \leq \ln x + 2\) for x ≥ 1. The derivation is completed by invoking the integral test for series together with Chebyshev’s upper bound for ψ(x).
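The bound on G(x) can be probed numerically over a finite range. This Python sketch assumes R(x) = ψ(x) − x, where ψ is Chebyshev's function; R is defined earlier in the chapter, outside this excerpt. It samples x on a grid and also just below each integer, where G(x), which decreases between consecutive integers, takes its lowest values. It is a sanity check, not a proof.

```python
import math

EULER_GAMMA = 0.5772156649015329  # Euler's constant γ (hard-coded; not in the math module)


def chebyshev_psi_table(N):
    """psi[m] = ψ(m) = Σ_{n <= m} Λ(n) for integers 0 <= m <= N."""
    lam = [0.0] * (N + 1)
    composite = [False] * (N + 1)
    for p in range(2, N + 1):
        if composite[p]:
            continue
        for m in range(p * p, N + 1, p):
            composite[m] = True
        pk = p
        while pk <= N:                  # Λ(p^k) = ln p
            lam[pk] = math.log(p)
            pk *= p
    psi = [0.0] * (N + 1)
    for m in range(1, N + 1):
        psi[m] = psi[m - 1] + lam[m]
    return psi


X_MAX = 400
PSI = chebyshev_psi_table(X_MAX)


def F(y):
    """F(y) = R(y) + γ + 1, ASSUMING R(y) = ψ(y) − y; ψ(y) = ψ(floor(y))."""
    return PSI[math.floor(y)] - y + EULER_GAMMA + 1


def G(x):
    """G(x) = Σ_{n <= x} F(x/n)."""
    return sum(F(x / n) for n in range(1, math.floor(x) + 1))


# Grid points in [1, X_MAX], plus points just below each integer.
samples = [1 + 0.5 * i for i in range(2 * (X_MAX - 1) + 1)]
samples += [m - 1e-9 for m in range(2, X_MAX + 1)]

worst_margin = max(abs(G(x)) - (math.log(x) + 2) for x in samples)
print("max of |G(x)| - (ln x + 2) over samples:", worst_margin)
```

A negative printed margin means the inequality held at every sampled point.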

-

8.

Inequality (67) is deduced as a corollary to (68), using Abel summation and Chebyshev’s result that ψ(x) ≤ 2x. Since the integral test implies that \(\sum _{n\leq x}\ln n = x\ln x + O(x)\), a further equivalent of Selberg’s formula is \(\sum _{n\leq x}[\Lambda (n)\ln n + (\Lambda {\ast} \Lambda )(n) - 2\ln n] = O(x)\).
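Selberg's formula in this last form lends itself to a quick numerical illustration. The Python sketch below computes \(\sum _{n\leq x}[\Lambda (n)\ln n + (\Lambda {\ast} \Lambda )(n)]\), evaluating the convolution term as \(\sum _{j\leq x}\Lambda (j)\psi (x/j)\). It then compares the result with 2x ln x and with \(2\sum _{n\leq x}\ln n = 2\ln (x!)\). The values of x are arbitrary. Finite computations only illustrate an O(x) bound and do not prove it.

```python
import math


def mangoldt_table(N):
    """lam[n] = Λ(n) for 0 <= n <= N (ln p if n is a power of the prime p, else 0)."""
    lam = [0.0] * (N + 1)
    composite = [False] * (N + 1)
    for p in range(2, N + 1):
        if composite[p]:
            continue
        for m in range(p * p, N + 1, p):
            composite[m] = True
        pk = p
        while pk <= N:
            lam[pk] = math.log(p)
            pk *= p
    return lam


def selberg_sums(x):
    """For an integer x >= 2 return (total, remainder), where
    total     = Σ_{n<=x} [Λ(n) ln n + (Λ*Λ)(n)]  and
    remainder = total - 2 Σ_{n<=x} ln n."""
    lam = mangoldt_table(x)
    psi = [0.0] * (x + 1)                       # psi[m] = ψ(m)
    for m in range(1, x + 1):
        psi[m] = psi[m - 1] + lam[m]
    a = sum(lam[n] * math.log(n) for n in range(2, x + 1))
    # Σ_{n<=x} (Λ*Λ)(n) = Σ_{jk<=x} Λ(j)Λ(k) = Σ_{j<=x} Λ(j) ψ(x/j)
    b = sum(lam[j] * psi[x // j] for j in range(2, x + 1))
    total = a + b
    return total, total - 2 * math.lgamma(x + 1)   # lgamma(x + 1) = ln(x!)


for x in (10**3, 10**4, 10**5):
    total, rem = selberg_sums(x)
    print(f"x={x:>6}  total/(2x ln x)={total / (2 * x * math.log(x)):.4f}  "
          f"remainder/x={rem / x:.4f}")
```

The ratio to 2x ln x creeps toward 1 only slowly, because the correction is of order x. The remainder divided by x, by contrast, stays essentially constant, as the O(x) statement predicts.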

-

9.

Applying (69) with t in place of x, where 1 ≤ t ≤ x, and dividing by t gives

$$\displaystyle{ R(t)\dfrac{\ln t} {t} + \dfrac{1} {t}\sum _{n\leq t}\Lambda (n)R\left ( \dfrac{t} {n}\right ) = O(1). }$$

Integrating from 1 to x and using | S(t)∕t | ≤ 1 from step 4 then shows that for x ≥ 1,

$$\displaystyle{ S(x)\ln x +\sum _{n\leq x}\Lambda (n)S(x/n) = O(x), } \tag{73}$$

which is (69) with R replaced by S.
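The integration step can be written out as follows. This sketch assumes that \(S(x) =\int _{1}^{x}R(t)/t\,\mathit{dt}\), so that S(1) = 0 and S′(t) = R(t)/t; the definition of S is not repeated in this excerpt. With that reading, integrating each term from 1 to x gives

$$\displaystyle\begin{array}{rcl} \int _{1}^{x}R(t)\dfrac{\ln t} {t} \,\mathit{dt}& =& S(x)\ln x -\int _{1}^{x}\dfrac{S(t)} {t} \,\mathit{dt} = S(x)\ln x + O(x), {}\\ \int _{1}^{x}\dfrac{1} {t}\sum _{n\leq t}\Lambda (n)R\left (\dfrac{t} {n}\right )\mathit{dt}& =& \sum _{n\leq x}\Lambda (n)\int _{n}^{x}R\left (\dfrac{t} {n}\right )\dfrac{\mathit{dt}} {t} =\sum _{n\leq x}\Lambda (n)S\left (\dfrac{x} {n}\right ). {}\\ \end{array}$$

The first line uses integration by parts and | S(t)∕t | ≤ 1. The second interchanges sum and integral and substitutes u = t∕n. Since the integral of the O(1) right member over [1, x] is O(x), adding the two lines yields (73).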

-

10.

Three technical lemmas are now proved, using (68), (69) and the Lipschitz condition on S, respectively. First, there is a constant \(K_{1}\) such that for all x ≥ 1,

$$\displaystyle{ \vert S(x)\vert (\ln x)^{2} \leq \sum _{ n\leq x}[\Lambda (n)\ln n + (\Lambda {\ast} \Lambda )(n)]\left \vert S\left (\dfrac{x} {n}\right )\right \vert + K_{1}x\ln x. } \tag{74}$$

Second,

$$\displaystyle{ \sum _{n\leq x}[\Lambda (n)\ln n + (\Lambda {\ast} \Lambda )(n)]\left \vert S\left (\dfrac{x} {n}\right )\right \vert = 2\sum _{n\leq x}\ln n\left \vert S\left (\dfrac{x} {n}\right )\right \vert + O(x\ln x); } \tag{75}$$

and third, there is a constant \(K_{2}\) such that for all x ≥ 1,

$$\displaystyle{ \sum _{n\leq x}\ln n\left \vert S\left (\dfrac{x} {n}\right )\right \vert \leq \int _{1}^{x}\ln t\left \vert S\left (\dfrac{x} {t} \right )\right \vert \,\mathit{dt} + K_{2}x\,. } \tag{76}$$

-

11.

Together, (74), (75), and (76) imply that there is a constant \(K_{3}\) such that for all x ≥ 1,

$$\displaystyle{ \vert S(x)\vert (\ln x)^{2} \leq 2\int _{ 1}^{x}\ln t\left \vert S\left (\dfrac{x} {t} \right )\right \vert \,\mathit{dt} + K_{3}x\ln x\,, }$$

so that

$$\displaystyle{ \vert W(x)\vert \leq \dfrac{2} {x^{2}}\int _{0}^{x}(x - u)\vert W(u)\vert \,\mathit{du} + \dfrac{K_{3}} {x} \,. } \tag{77}$$

-
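The passage from the first inequality of this step to (77) is a change of variables. As a sketch, assume that \(W(y) = e^{-y}S(e^{y})\); the definition of W lies outside this excerpt. Write x = e^y with y > 0, and substitute t = e^{y−u} in the integral:

$$\displaystyle{ \int _{1}^{e^{y}}\ln t\left \vert S\left (\dfrac{e^{y}} {t} \right )\right \vert \,\mathit{dt} =\int _{0}^{y}(y - u)\,\vert S(e^{u})\vert \,e^{y-u}\,\mathit{du} = e^{y}\int _{0}^{y}(y - u)\vert W(u)\vert \,\mathit{du}. }$$

Also \(\vert S(e^{y})\vert (\ln e^{y})^{2} = e^{y}y^{2}\vert W(y)\vert \) and \(K_{3}e^{y}\ln e^{y} = K_{3}e^{y}y\). Dividing the inequality through by \(e^{y}y^{2}\) therefore gives (77), with y written in place of x.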

12.

Finally, for α and β as defined in step 6, (77) implies that α ≤ β. For, by the definition of β, for every ε > 0 there exists an \(x_{1}\) such that for every \(x \geq x_{1}\), \(\int _{0}^{x}\vert W(t)\vert \,\mathit{dt} \leq (\beta +\epsilon )x\). In order to apply (77), consider \(\int _{0}^{x}(x - u)\vert W(u)\vert \,\mathit{du}\), which may be rewritten as

$$\displaystyle{ \int _{0}^{x}\vert W(u)\vert \int _{ u}^{x}\mathit{dt}\,\mathit{du} =\int _{ 0}^{x}\int _{ 0}^{t}\vert W(u)\vert \,\mathit{du}\,\mathit{dt}. }$$

Then for \(x \geq x_{1}\),

$$\displaystyle{ \int _{x_{1}}^{x}\int _{ 0}^{t}\vert W(u)\vert \,\mathit{du}\,\mathit{dt} \leq \int _{ x_{1}}^{x}(\beta +\epsilon )t\,\mathit{dt} = \dfrac{1} {2}(\beta +\epsilon )(x^{2} - x_{ 1}^{2}). }$$

On the other hand, | W(u) | ≤ 1 for all u by step 4. So

$$\displaystyle{ \int _{0}^{x_{1} }\int _{0}^{t}\vert W(u)\vert \,\mathit{du}\,\mathit{dt} \leq \int _{ 0}^{x_{1} }t\,\mathit{dt} = \dfrac{1} {2}x_{1}^{2}. }$$

Therefore

$$\displaystyle\begin{array}{rcl} \int _{0}^{x}(x - u)\vert W(u)\vert \,\mathit{du}& =& \int _{ 0}^{x_{1} }\int _{0}^{t}\vert W(u)\vert \,\mathit{du}\,\mathit{dt} +\int _{ x_{1}}^{x}\int _{0}^{t}\vert W(u)\vert \,\mathit{du}\,\mathit{dt} {}\\ & \leq & \dfrac{1} {2}x_{1}^{2} + \dfrac{1} {2}(\beta +\epsilon )(x^{2} - x_{1}^{2}) \leq \dfrac{1} {2}(\beta +\epsilon )x^{2} + \dfrac{1} {2}x_{1}^{2}\,. {}\\ \end{array}$$

Then by (77), \(\vert W(x)\vert \leq \beta +\epsilon + \dfrac{x_{1}^{2}} {x^{2}} + \dfrac{K_{3}} {x}\) for \(x \geq x_{1}\). By the definition of α, that means α ≤ β + ε; and since ε can be chosen to be arbitrarily small, α ≤ β. q.e.d.

10.7 Overview

The five proofs of the Prime Number Theorem considered here employ a wide range of methodologies, including analytic continuation, Abel summation, Dirichlet convolution, contour integration, Fourier analysis, Laplace transforms, and Tauberian theorems. They differ from one another in many respects, including the ways in which the domain of definition of ζ(s) is extended and whether or not recourse is made to the functional equation for the ζ-function.

The proofs also exemplify several of the different motivations discussed in Chapter 2. For example, Riemann’s program for proving the Prime Number Theorem by examining the behavior of the complex ζ-function proposed bringing the methods of complex analysis to bear on the seemingly remote field of number theory. The proofs by which Hadamard and de la Vallée Poussin successfully carried out that program may therefore be deemed instances of benchmarking. Hadamard and de la Vallée Poussin gave their proofs independently and nearly simultaneously. Each proof was based upon the same corpus of earlier work (especially Chebyshev’s results) and followed the same basic steps in Riemann’s program: proving that ζ(s) has no roots of the form 1 + it, and then applying complex contour integration to expressions involving \(\zeta ^{{\prime}}/\zeta\). The marked differences between the two proofs are surely attributable to differences in their authors' individual patterns of thought.

In the case of Hadamard, a further motive was that of correcting deficiencies in Cahen’s earlier proof attempt. Indeed, at the beginning of section 12 of his memoir, Hadamard noted explicitly that Cahen had claimed to have proved that θ(x) ∼ x, but that his demonstration was based on an unsubstantiated claim by Stieltjes (who thought he had proved the Riemann Hypothesis). Nonetheless, Hadamard declared, “we will show that the same result can be obtained in a completely rigorous way” via “an easy modification” of Cahen’s analysis.Footnote 18

The modern descendants of those classical proofs illustrate how simplifications, generalizations, and refinements of mathematical arguments gradually evolve and are incorporated into later proofs. Examples include the proof that ζ(s) has no roots with real part equal to one, which was significantly shortened and simplified through the use of a trigonometric identity; the deduction of the Prime Number Theorem from a general Tauberian theorem, which showed it to be a particular instance of a family of such theorems concerning Dirichlet series;Footnote 19 and the use in Newman’s proof of a contour integral that is much more easily evaluated than the classical ones. Newman’s proof also exhibits economy of means with regard to conceptual prerequisites, making it comprehensible to those having only a rudimentary knowledge of complex analysis.
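For concreteness, here is a sketch of how such an identity is used. The text does not name it; the one commonly credited to Mertens is assumed here: 3 + 4 cos θ + cos 2θ = 2(1 + cos θ)² ≥ 0. Applied term by term to the logarithm of the Euler product for σ > 1, it gives

$$\displaystyle{ \ln \left \vert \zeta (\sigma )^{3}\zeta (\sigma +\mathit{it})^{4}\zeta (\sigma + 2\mathit{it})\right \vert =\sum _{p}\sum _{k\geq 1}\dfrac{3 + 4\cos (kt\ln p) +\cos (2kt\ln p)} {k\,p^{k\sigma }} \geq 0, }$$

so the product has absolute value at least 1. Suppose ζ(1 + it) = 0 for some t ≠ 0 and let \(\sigma \rightarrow 1^{+}\). Then ζ(σ)³ grows like (σ − 1)^{−3}, ζ(σ + it)⁴ vanishes at least like (σ − 1)⁴, and ζ(σ + 2it) stays bounded. The product would therefore tend to 0, a contradiction.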

The ‘elementary’ proofs, on the other hand, are no simpler than the classical analytic ones, are generally regarded as less perspicuous (giving little or no insight into why the Prime Number Theorem is true), and do not yield as sharp error bounds as those obtained by analytic means. The esteem nonetheless accorded them by the mathematical community, as reflected in the prizes awarded to their discoverers, may thus be taken as a quintessential manifestation of the high regard mathematicians have for purity of method in and of itself.

Notes

- 1.

Some texts instead define \(\text{li}(x)\) as \(\lim _{\epsilon \rightarrow 0}\big(\int _{0}^{1-\epsilon }1/\ln t\,\mathit{dt} +\int _{ 1+\epsilon }^{x}1/\ln t\,\mathit{dt}\big)\), which adds a constant (approximately 1.04) to li(x) as defined above, but does not affect asymptotic arguments.

- 2.

In part for those proofs, Selberg was awarded a Fields Medal in 1950 and Erdős the 1951 Cole Prize in Number Theory.

- 3.

In fact, for any absolutely convergent series \(\sum _{n=1}^{\infty }f(n)\) of non-zero terms, if f(n) is completely multiplicative and no f(n) = −1, then \(\sum _{n=1}^{\infty }f(n) =\prod _{p\,\mathit{prime}}(1 - f(p))^{-1}\).

- 4.

The result of applying the formula

$$\displaystyle{\sum _{2\leq n\leq x}a(n)g(n) =\Big [\sum _{n\leq x}a(n)\Big]g(x) -\int _{\,2}^{x}\Big[\sum _{ n\leq t}a(n)\Big]\,g^{{\prime}}(t)\,\mathit{dt},}$$

valid whenever a(1) = 0 and g(x) has a continuous derivative on [2, x].

- 5.

A detailed commentary on developments stemming from Riemann’s classic paper is given in Edwards (1974).

- 6.

In the other cases Hadamard used the identity \(1/z^{\mu } = \dfrac{(-1)^{\mu -1}} {\Gamma (\mu )} \, \dfrac{d^{\mu -1}} {\mathit{dz}^{\mu -1}}(1/z)\), together with Cauchy’s integral theorem, to obtain the general formula

$$\displaystyle{ J_{\mu } = \dfrac{1} {2\pi i}\int _{a-\infty i}^{a+\infty i}\dfrac{x^{z}} {z^{\mu }} \,\mathit{dz} = \left \{\begin{array}{@{}l@{\quad }l@{}} 0 \quad &\mbox{ if $x < 1$,} \\ \dfrac{(\ln \,x)^{\mu -1}} {\Gamma (\mu )} \quad &\mbox{ if $x > 1$}. \end{array} \right. }$$

- 7.

In a statement quoted on page 198 of Narkiewicz (2000), de la Vallée Poussin agreed, but nevertheless claimed priority for the proof of that result.

- 8.

Cf. the discussion in Bateman and Diamond (1996), p. 736.

- 9.

According to Bateman and Diamond (1996), p. 737, Landau was the first to prove the PNT without recourse to that functional equation.

- 10.

\(\sum _{n=2}^{N}f(n) =\int _{ 1}^{N}f(x)\,\mathit{dx} +\int _{ 1}^{N}(x - [x])f^{{\prime}}(x)\,\mathit{dx}\).

- 11.

Discussed in detail in Narkiewicz (2000), pp. 298–302.

- 12.

Ingham’s theorem may alternatively be stated in terms of the Mellin transform \(\int _{1}^{\infty }f(t)t^{-s}\,\mathit{dt}\). See, e.g., Korevaar (1982) or Jameson (2003), pp. 124–129.

- 13.

Here \(f(x) = O(g(x))\) for \(x > x_{1} \geq x_{0}\) means that f is eventually dominated by g. That is, f and g are both defined for \(x > x_{0}\), g(x) > 0 for \(x > x_{0}\), and there is a constant K such that | f(x) | ≤ Kg(x) for all \(x > x_{1}\).

- 14.

For as noted in the preceding section, the Wiener-Ikehara Theorem implies that the Prime Number Theorem follows from the absence of zeroes of the ζ-function on the line Re s = 1, a fact that is also implied by the Prime Number Theorem. (See, for example, Diamond 1982, pp. 572–573.)

- 15.

Regrettably, the interaction between Erdős and Selberg in this matter was a source of lasting bitterness between them. Goldfeld (2004) provides a balanced account of the dispute, based on primary sources. As noted there, the issue was not one of priority of discovery, but “arose over the question of whether a joint paper (on the entire proof) or separate papers (on each individual contribution) should appear”.

- 16.

Levinson’s paper won the Mathematical Association of America’s Chauvenet Prize for exposition in 1971. Nevertheless, after reading it, the number theorist Harold Stark commented “Well, Norman tried, but the thing is as mysterious as ever.” (Quoted in Segal 2009, p. 99.)

- 17.

The Möbius inversion formula by itself does not suffice to give the desired bound on R(x), and that, in Levinson’s opinion, accounts for “the long delay in the discovery of an elementary proof” of the Prime Number Theorem. (Levinson 1969, p. 235)

- 18.

“Nous allons voir qu’en modifiant légèrement l’analyse de l’auteur on peut établir le même résultat en toute rigueur.”

- 19.

Three other instances given in Jameson (2003) are \(\sum _{n=1}^{\infty }\dfrac{\mu (n)} {n} = 0\), where μ denotes the Möbius function, \(\sum _{n=1}^{\infty } \dfrac{\mu (n)} {n^{1+\mathit{it}}} = \dfrac{1} {\zeta (1 + \mathit{it})}\), and \(\sum _{n=1}^{\infty }\dfrac{(-1)^{\Omega (n)}} {n} = 0\), where \(\Omega (n)\) denotes the number of prime factors of n, each counted according to its multiplicity.
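The constant mentioned in note 1 is li(2) ≈ 1.045. This assumes that li(x) was defined above as \(\int _{2}^{x}1/\ln t\,\mathit{dt}\); that definition lies outside this excerpt. A short Python sketch evaluates the principal-value integral through the classical series li(x) = γ + ln ln x + Σ_{k≥1} (ln x)^k∕(k·k!), valid for x > 1. The function name is chosen here for illustration.

```python
import math

EULER_GAMMA = 0.5772156649015329  # Euler's constant γ, hard-coded


def li_principal_value(x, terms=60):
    """Principal-value li(x) for x > 1 via γ + ln ln x + Σ_{k>=1} (ln x)^k / (k·k!)."""
    if x <= 1:
        raise ValueError("this series form is used here only for x > 1")
    L = math.log(x)
    total = 0.0
    power_over_fact = 1.0                 # becomes L^k / k! after each update below
    for k in range(1, terms + 1):
        power_over_fact *= L / k
        total += power_over_fact / k
    return EULER_GAMMA + math.log(L) + total


# The principal-value li(x) exceeds ∫_2^x dt/ln t by exactly li(2).
print(f"li(2) = {li_principal_value(2.0):.6f}")
```

Because the series converges rapidly for moderate x, 60 terms are far more than double precision needs here.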

References

Bateman, P., Diamond, H.: A hundred years of prime numbers. Amer. Math. Monthly 103, 729–741 (1996)

Chebyshev, P.: Sur la fonction qui détermine la totalité des nombres premiers inférieurs à une limite donnée. Mémoires des savants étrangers de l’Acad. Sci. St.Pétersbourg 6, 1–19 (1848)

Chebyshev, P.: Mémoire sur les nombres premiers. Mémoires des savants étrangers de l’Acad. Sci. St.Pétersbourg 7, 17–33 (1850)

de la Vallée Poussin, C.: Recherches analytiques sur la théorie des nombres premiers. Ann. Soc. Sci. Bruxelles 20, 183–256 (1896)