Abstract

Structural properties of clusters of galaxies have been routinely used to claim either tension or consistency with the fiducial cosmological hypothesis, known as ΛCDM. However, standard approaches are unable to quantify the preference for one hypothesis over another. We advocate using a ‘weighted’ variant of approximate Bayesian computation (ABC), whereby the parameters of the strong lensing-mass scaling relation, α and β, are treated as the summary statistics. We demonstrate then, for the first time, the procedure for estimating the likelihood for observing α and β under the ΛCDM framework. We employ computer simulations for producing mock samples, and account for variation between samples for modelling the likelihood function. We also consider the effects on the likelihood, and consequential ability to compare competing hypotheses, if only simplistic computer simulations are available.

Access provided by Autonomous University of Puebla. Download conference paper PDF

Similar content being viewed by others

Key words

1 Introduction

Our standard model of cosmology, ΛCDM, is one in which our universe is made up primarily of dark energy, has a large amount of dark matter, and only a small fraction of ordinary matter; it is currently undergoing a period of expansion and an expansion that is accelerating. This model appears to describe the contents and evolution of the universe very well, and has been determined through the analysis of several astrophysical objects and phenomena. One additional dataset with the potential to provide complementary information is the mass-structure of clusters of galaxies [1, 2, 22, 24]. These objects contain hundreds to thousands of galaxies, as well as hot gas and dark matter, and they gravitationally lens and distort the images of more distant galaxies.

Strong lensing efficiencies, as characterised by the effective Einstein radii (denoted θ E ) scale well with the mass of clusters at large over-densities [14]. If any given set of galaxy clusters sample are, in fact, stronger lenses than predicted by the ΛCDM model, they will have larger θ E for a given total mass at low over-densities (e.g., M 500). The earliest studies of similar galaxy-cluster properties revealed a significant difference between the observations and ΛCDM predictions [1, 15]. Thus began the hunt for solutions to the so-called tension with ΛCDM cosmology.

Previous works in the literature have claimed either ‘tension’ or ‘consistency’ with ΛCDM, or insufficient data [6, 11, 18, 21, 23, 27], but do not allow one to compare competing cosmological hypotheses. In the present work, we propose a Bayesian approach to the cosmological test using galaxy cluster strong lensing properties.

2 The Bayesian Framework

A Bayesian approach is advocated, in which one determines the relative preference of two hypothetical cosmological models, C 1 and C 2, in light of the data D, by calculating the Bayes factor B:

where \(\mathcal{L}\) denotes the likelihood of the data assuming a cosmology.

The aim then is to calculate, under one chosen hypothesis: ΛCDM, the likelihood of observing the structural properties of a particular sample of galaxy clusters. This sample is detected using a well-defined selection criteria and all relevant properties have been measured [5, 7, 13, 16, 17, 26, 27].

2.1 Weighted ABC

Achieving the aforementioned goal is non-trivial, because a likelihood function related to the original observables (θ E and M 500) is intractable. This is because: (a) computer simulations are deemed necessary for describing the irregular structure of galaxy clusters, which undergo non-linear structure formation; (b) there are a finite (and relatively small) number of clusters that can be simulated in a reasonable amount of time, and thus the full θ E –M 500 space cannot be sampled. Therefore, this problem is an ideal case for which one may apply a variant of approximate Bayesian computation (ABC) [4, 25]. What we propose is not a likelihood-free approach, however, and rather than rejecting mock samples that are dissimilar to the real data, they are down-weighted. Thus, we refer to the novel approach described below as Weighted ABC.

We assume a power-law relation between the strong lensing and mass proxies, and perform a fitting to the following function in logarithmic spaceFootnote 1:

with parameters α and β, and aim to find the likelihood of observing the scaling relationship. α and β act as summary statistics for the dataset. However, rather than calculating precise values for α and β, one would determine a probability distribution that reflects the degree of belief in their respective values. The relevant fitting procedure is described in Sect. 10.2.2.

Next, we outline how to calculate the likelihood of observing α and β. In the following, ι represents background information such as knowledge of the cluster selection criteria, the method of characterising the Einstein radius, and the assumption that there exists a power-law relation between strong lensing and mass.

-

1.

Computer simulations (see [3, 14, 19, 20]) are run within the framework of a chosen cosmological hypothesis, C. In our case, C represents the assumption that ΛCDM (or specific values for cosmological parameters) is the true description of cosmology.

-

2.

Simulated galaxy clusters are selected according to specified criteria, ideally reflecting the criteria used to select the real clusters.

-

3.

Different on-sky projections of these three-dimensional objects produce different apparent measurements of structural properties. Therefore, we construct a large number of mock samples from these by randomly choosing an orientation-angle for each cluster. Equation (10.2) is fit to each mock sample (See Sect. 10.2.2), to determine a posterior over α and β: P i (α, β | C, ι) denotes the result for the ith of N mock samples. We combine these, to give the probability, \(P(\alpha,\beta \vert C,\iota ) \equiv \sum _{i=1}^{N}P_{i}(\alpha,\beta \vert C,\iota )\), that one would observe the scaling relation {α,β} under the hypothesis C. The result can be interpreted as a likelihood function as a function of data: α and β.

-

4.

Fit Eq. (10.2) to the data to obtain the posterior probability distribution for α and β, P(α, β | ι). The normalised posterior is then interpreted as a single ‘data point’: the distribution represents the uncertainty on the measurement of α and β.

-

5.

Calculate the likelihood, \(\mathcal{L}\), of observing the α–β fit as we did, by integrating over the product of the two aforementioned posteriors—now re-labelled ‘data-point’ and ‘likelihood function’.

The result of integrating the product of P(α, β | C, ι) and P(α, β | ι) for the dataset is mathematically equivalent to integrating the product for each mock separately, then taking the average over all mock samples:

Thus, what we have described above is equivalent to the weighting of each mock sample according to its similarity to the real data, where the metric is the convolution of the two (mock and real) posterior probability distributions P(α, β | ι).

2.2 Summary Statistic Fitting

The summary statistics α and β are parameters of the scaling relation between strong lensing efficiency and total cluster mass [Eq. (10.2)]. The procedure for calculating \(\mathcal{L}\), as described in Sect. 10.2.1, requires one to fit real or mock data to determine the posterior distribution on α and β. We employ the Bayesian linear regression method outlined in [10]. Additionally, we acknowledge that intrinsic scatter is likely to be present, and thus introduce a nuisance parameter, V, which represents intrinsic Gaussian variance orthogonal to the line.

For this subsection, we change notation in order to reduce the subscripts: the mass of the i-th cluster lens as M i , and the scaled Einstein radius as E i . Each data-point is denoted by the vector \(\mathbf{Z}_{i} = [\log M_{i},\log E_{i}]\). Their respective uncertainties (on the logarithms) are denoted \(\sigma _{M}^{2}\) and \(\sigma _{E}^{2}\). Since we assume the uncertainties for Einstein radii and cluster mass are uncorrelated, the covariance matrix, \(\mathbf{S}_{i}\), reduces to:

In the case of a mock sample of simulated clusters, \(\mathbf{S}_{i} = 0\).

Consider now the following quantities: \(\varphi \equiv \arctan \alpha\), which denotes the angle between the line and the x-axis, and \(b_{\perp }\equiv \beta \cos \varphi\) which is the orthogonal distance of the line to the origin. The orthogonal distance of each data-point to the line is:

where \(\hat{\mathbf{v}} = [-\sin \varphi,\cos \varphi ]\) is a vector orthogonal to the line.

Therefore, the orthogonal variance is

Following [10], we calculate the likelihood over the three-dimensional parameter space: \(\varTheta _{1} \equiv \{\alpha,\beta,V \}\):

where K is an arbitrary constant, and the summation is over all clusters in the considered sample.

While we ultimately (aim to) provide the parameter constraints on α and β, flat priors for these tend to unfairly favour large slopes. A more sensible choice is flat for the alternative parameters \(\varphi\) and b ⊥ . We apply a modified Jeffreys prior on V:

This is linearly uniform on V for small values and logarithmically uniform on V for larger values with a turnover, V t , chosen to reflect the typical uncertainties.

Thus, for each Θ 1, we may define an alternative set of parameters \(\varTheta _{2} \equiv \{\varphi,b_{\perp },V \}\), for which the prior is given by:

where π(V ) is given by Eq. 10.8. The prior on Θ 1 is then dependent on the magnitude of the Jacobian of the mapping between the two sets of parameters:

Boundaries on the priors are sufficiently largeFootnote 2: − 8 ≤ β ≤ 8; − 40 ≤ α ≤ 40; 0 ≤ V ≤ V max. V max is chosen to reflect the overall scatter in the data. The posterior is calculated following Bayes’ theorem:

and is normalised. In practice, the posterior distribution was sampled by employing emcee [8], the python implementation of the affine-invariant ensemble sampler for Markov chain Monte Carlo (MCMC) proposed by [9].

As we are interested in the constraints on α and β, we then marginalise over the nuisance parameter, V.

3 Results

In Fig. 10.1, we show the relation between the Einstein radii and the cluster mass M500. The real cluster sample is represented by red circles. For simulated clusters, the situation is more complicated. Since different lines of sight provide a large variation in projected mass distribution, each cluster cannot be associated with an individual Einstein radius, nor a simple Gaussian or log-normal distribution [14]. We therefore measure the Einstein radius for 80 different lines of sight and, for ease of visualisation, describe the distribution of Einstein radii for each simulated cluster by a box-plot.

Strong lensing efficiency, characterised by scaled Einstein radii, θ E,eff, plotted as a function of mass. The range of Einstein radii for simulated clusters are shown by the blue box-plots. The red circles represent the real clusters. The red line marks the maximum a-posteriori fit to observational data, while the thin blue lines mark the fit to 20 randomly chosen mock samples from simulations

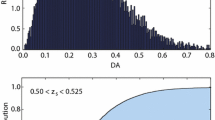

We fit the observational data to the lensing-mass relation and after marginalising out the nuisance parameter, V, present the posterior distribution for α and β, denoted by red contours in the left-hand panel of Fig. 10.2. This fit is reinterpreted as a single ‘data-point’. To estimate the likelihood, as a function of possible data, we employ simulations. Many mock samples are individually fit to the lensing-mass relations; the maximum of the posterior is shown as a blue point and a typical 1-σ error shown as a blue ellipse. By adding the posteriors for each mock sample and renormalising, we estimate the required likelihood function, shown by the blue contours in the right-hand panel of Fig. 10.2. By multiplying by the ‘data-point’ distribution and integrating over the parameter space, we find \(\mathcal{L}\approx 0.3\).

Left: 1-σ and 2-σ constraints on parameters of the strong lensing—mass relation given the real cluster data (red contours), with a maximum a posteriori fit marked by a red circle. Overplotted in blue dots are the best fits to 80 mock observations of simulated galaxy clusters. A typical 1-σ error is shown as a blue ellipse. Right: Same as the middle panel, but the blue circle and curves mark, respectively, the maximum and the 1-σ and 2-σ contours of the likelihood function found by combining all 80 mocks. Ultimately, the likelihood, \(\mathcal{L}\approx 0.3\), is found by convolving the functions marked by the red and blue contours

Note that one cannot comment on whether the likelihood is large or small. Currently, such simulations are only available for the fiducial ΛCDM cosmological model. However, if the same process is repeated for simulations under a different model, then the Bayes factor can be calculated [see Eq. (10.1)] and, after accounting for priors, may (or may not) reveal a preference for one of the cosmologies, in light of this data. Alternative cosmological models may include, for example, those with a different relative dark matter to dark energy ratio, interactions between the two dark components, or a different normalisation for the structure power spectrum.

4 Computational Challenge

The approach described above is an exciting new strategy for calculating the likelihood for observing strong lensing galaxy clusters for a chosen cosmological hypothesis. However, we recognise that the calculation involves running computer simulations that can take months. Computationally ‘cheaper’ simulations ignore several astrophysical processes in the formation of galaxy clusters and it is debatable whether these would be sufficient.

In order to determine the severity of this problem, we repeat the aforementioned procedure using galaxy cluster counterparts from such simulations, at varying levels of complexity and realism, and find that the likelihood, \(\mathcal{L}\), can then vary by a factor of three or four. If the cheaper simulations are employed, then the selection criteria must also be replaced with an alternative compromise. We test this alternative and find that \(\mathcal{L}\) changes by a factor of two.

Our findings suggest that if a model-comparison study was carried out using a simulation based on an alternative cosmological hypothesis and resulting in a Bayes factor of 20 or more [see Eq. (10.1)], then the cheaper simulations (or toy models based on these) would be sufficient. However, in the event that the Bayes factor B is found to be smaller, then the computationally expensive but realistic simulations would be necessary.

Notes

- 1.

The pivot mass 9 × 1014 M ⊙ is chosen to approximate the logarithmic average of the observed and simulated clusters. Similarly, the pivot Einstein radius is chosen to be 20 arcseconds. D d represents the angular diameter distance from an observer on Earth to the galaxy cluster lens, while D ds represents the angular diameter distance from the galaxy cluster lens to a more distant galaxy, in our case chosen to be fixed to a redshift of z = 2.

- 2.

The physically motivated choice of restricting α ≥ 0 is also explored; however, this has very minor effects on the final results despite removing the (small) secondary peak in the marginal posterior on α and β.

- 3.

- 4.

- 5.

- 6.

References

Bartelmann, M., Huss, A., Colberg, J.M., Jenkins, A., Pearce, F.R.: Arc statistics with realistic cluster potentials. IV. Clusters in different cosmologies. A&A 330, 1–9 (1998)

Bartelmann, M., Meneghetti, M., Perrotta, F., Baccigalupi, C., Moscardini, L.: Arc statistics in cosmological models with dark energy. A&A 409, 449–457 (2003). Doi:10.1051/0004-6361:20031158

Bonafede, A., Dolag, K., Stasyszyn, F., Murante, G., Borgani, S.: A non-ideal MHD gadget: simulating massive galaxy clusters. ArXiv e-prints (2011)

Cameron, E., Pettitt, A.N.: Approximate Bayesian computation for astronomical model analysis: a case study in galaxy demographics and morphological transformation at high redshift. MNRAS 425, 44–65 (2012). Doi:10.1111/j.1365-2966.2012.21371.x

Coe, D., Zitrin, A., Carrasco, M., Shu, X., Zheng, W., Postman, M., Bradley, L., Koekemoer, A., Bouwens, R., Broadhurst, T., Monna, A., Host, O., Moustakas, L.A., Ford, H., Moustakas, J., van der Wel, A., Donahue, M., Rodney, S.A., Benítez, N., Jouvel, S., Seitz, S., Kelson, D.D., Rosati, P.: CLASH: three strongly lensed images of a candidate z ≈ 11 galaxy. ApJ 762, 32 (2013). Doi:10.1088/0004-637X/762/1/32

Dalal, N., Holder, G., Hennawi, J.F.: Statistics of giant arcs in galaxy clusters. ApJ 609, 50–60 (2004). Doi:10.1086/420960

Ebeling, H., Barrett, E., Donovan, D., Ma, C.J., Edge, A.C., van Speybroeck, L.: A complete sample of 12 very X-ray luminous galaxy clusters at z > 0. 5. ApJ Lett. 661, L33–L36 (2007). Doi:10.1086/518603

Foreman-Mackey, D., Hogg, D.W., Lang, D., Goodman, J.: emcee: the MCMC hammer. PASP 125, 306–312 (2013). Doi:10.1086/670067

Goodman, J., Weare, J.: Ensemble samplers with affine invariance. App. Math. Comput. Sci. 5(1), 65–80 (2010)

Hogg, D.W., Bovy, J., Lang, D.: Data analysis recipes: fitting a model to data. ArXiv e-prints (2010)

Horesh, A., Maoz, D., Hilbert, S., Bartelmann, M.: Lensed arc statistics: comparison of millennium simulation galaxy clusters to hubble space telescope observations of an X-ray selected sample. MNRAS 418, 54–63 (2011). Doi:10.1111/j.1365-2966.2011.19293.x

Hunter, J.D.: Matplotlib: a 2d graphics environment. Comput. Sci. Eng. 9(3), 90–95 (2007)

Johnson, T.L., Sharon, K., Bayliss, M.B., Gladders, M.D., Coe, D., Ebeling, H.: Lens models and magnification maps of the six hubble Frontier fields clusters. ArXiv e-prints (2014)

Killedar, M., Borgani, S., Meneghetti, M., Dolag, K., Fabjan, D., Tornatore, L.: How baryonic processes affect strong lensing properties of simulated galaxy clusters. MNRAS 427, 533–549 (2012). Doi:10.1111/j.1365-2966.2012.21983.x

Li, G.L., Mao, S., Jing, Y.P., Bartelmann, M., Kang, X., Meneghetti, M.: Is the number of giant arcs in ΛCDM consistent with observations? ApJ 635, 795–805 (2005). Doi:10.1086/497583

Mantz, A., Allen, S.W., Ebeling, H., Rapetti, D., Drlica-Wagner, A.: The observed growth of massive galaxy clusters - II. X-ray scaling relations. MNRAS 406, 1773–1795 (2010). Doi:10.1111/j.1365-2966.2010.16993.x

Medezinski, E., Umetsu, K., Nonino, M., Merten, J., Zitrin, A., Broadhurst, T., Donahue, M., Sayers, J., Waizmann, J.C., Koekemoer, A., Coe, D., Molino, A., Melchior, P., Mroczkowski, T., Czakon, N., Postman, M., Meneghetti, M., Lemze, D., Ford, H., Grillo, C., Kelson, D., Bradley, L., Moustakas, J., Bartelmann, M., Benítez, N., Biviano, A., Bouwens, R., Golwala, S., Graves, G., Infante, L., Jiménez-Teja, Y., Jouvel, S., Lahav, O., Moustakas, L., Ogaz, S., Rosati, P., Seitz, S., Zheng, W.: CLASH: complete lensing analysis of the largest cosmic lens MACS J0717.5+3745 and surrounding structures. ApJ 777, 43 (2013). Doi:10.1088/0004-637X/777/1/43

Meneghetti, M., Fedeli, C., Zitrin, A., Bartelmann, M., Broadhurst, T., Gottlöber, S., Moscardini, L., Yepes, G.: Comparison of an X-ray-selected sample of massive lensing clusters with the MareNostrum Universe ΛCDM simulation. A&A 530, A17+ (2011). Doi:10.1051/0004-6361/201016040

Planelles, S., Borgani, S., Fabjan, D., Killedar, M., Murante, G., Granato, G.L., Ragone-Figueroa, C., Dolag, K.: On the role of AGN feedback on the thermal and chemodynamical properties of the hot intracluster medium. MNRAS 438, 195–216 (2014). Doi:10.1093/mnras/stt2141

Ragone-Figueroa, C., Granato, G.L., Murante, G., Borgani, S., Cui, W.: Brightest cluster galaxies in cosmological simulations: achievements and limitations of active galactic nuclei feedback models. MNRAS 436, 1750–1764 (2013). Doi:10.1093/mnras/stt1693

Sereno, M., Giocoli, C., Ettori, S., Moscardini, L.: The mass-concentration relation in lensing clusters: the role of statistical biases and selection effects. ArXiv e-prints (2014)

Takahashi, R., Chiba, T.: Gravitational lens statistics and the density profile of Dark Halos. ApJ 563, 489–496 (2001). Doi:10.1086/323961

Waizmann, J.C., Redlich, M., Meneghetti, M., Bartelmann, M.: The strongest gravitational lenses: III. The order statistics of the largest Einstein radii. ArXiv e-prints (2014)

Wambsganss, J., Bode, P., Ostriker, J.P.: Giant arc statistics in concord with a concordance lambda cold dark matter universe. ApJ Lett. 606, L93–L96 (2004). Doi:10.1086/421459

Weyant, A., Schafer, C., Wood-Vasey, W.M.: Likelihood-free cosmological inference with type ia supernovae: approximate bayesian computation for a complete treatment of uncertainty. ApJ 764, 116 (2013). Doi:10.1088/0004-637X/764/2/116

Zheng, W., Postman, M., Zitrin, A., Moustakas, J., Shu, X., Jouvel, S., Høst, O., Molino, A., Bradley, L., Coe, D., Moustakas, L.A., Carrasco, M., Ford, H., Benítez, N., Lauer, T.R., Seitz, S., Bouwens, R., Koekemoer, A., Medezinski, E., Bartelmann, M., Broadhurst, T., Donahue, M., Grillo, C., Infante, L., Jha, S.W., Kelson, D.D., Lahav, O., Lemze, D., Melchior, P., Meneghetti, M., Merten, J., Nonino, M., Ogaz, S., Rosati, P., Umetsu, K., van der Wel, A.: A magnified young galaxy from about 500 million years after the Big Bang. Nature 489, 406–408 (2012). Doi:10.1038/nature11446

Zitrin, A., Broadhurst, T., Barkana, R., Rephaeli, Y., Benítez, N.: Strong-lensing analysis of a complete sample of 12 MACS clusters at z > 0. 5: mass models and Einstein radii. MNRAS 410, 1939–1956 (2011). Doi:10.1111/j.1365-2966.2010.17574.x

Acknowledgements

The authors thank Ewan Cameron, Geraint Lewis, Jiayi Liu, Hareth Saad Mahdi, Julio Navarro, Matthias Redlich, Christian Robert, Alexandro Saro, Anja von der Linden and Adi Zitrin for helpful discussions, and Giuseppe Murante for his involvement in running the simulations.

This work has made use of the following open-source software: python,Footnote 3 numpy,Footnote 4 scipy,Footnote 5 matplotlib [12] and cosmolopy.Footnote 6

MK acknowledges a fellowship from the European Commission’s Framework Programme 7, through the Marie Curie Initial Training Network CosmoComp (PITN-GA-2009-238356) and support by the DFG project DO 1310/4-1. SP acknowledges support by the PRIN-INAF09 project “Towards an Italian Network for Computational Cosmology” and by the PRIN-MIUR09 “Tracing the growth of structures in the Universe” as well as partial support by Spanish Ministerio de Ciencia e Innovación (MICINN) (grants AYA2010-21322-C03-02 and CONSOLIDER2007-00050). Simulations have been carried out at the CINECA supercomputing Centre in Bologna (Italy), with CPU time assigned through ISCRA proposals and through an agreement with University of Trieste. This work has been supported by the PRIN-MIUR 2012 grant “Evolution of Cosmic Baryons”, by the PRIN-INAF 2012 grant “Multi-scale Simulations of Cosmic Structure“ and by the INFN “INDARK” grant. We acknowledge support from “Consorzio per la Fisica - Trieste”.

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2015 Springer International Publishing Switzerland

About this paper

Cite this paper

Killedar, M. et al. (2015). A New Strategy for Testing Cosmology with Simulations. In: Frühwirth-Schnatter, S., Bitto, A., Kastner, G., Posekany, A. (eds) Bayesian Statistics from Methods to Models and Applications. Springer Proceedings in Mathematics & Statistics, vol 126. Springer, Cham. https://doi.org/10.1007/978-3-319-16238-6_10

Download citation

DOI: https://doi.org/10.1007/978-3-319-16238-6_10

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-16237-9

Online ISBN: 978-3-319-16238-6

eBook Packages: Mathematics and StatisticsMathematics and Statistics (R0)