Abstract

Emotions serve an important role in learning and performance, yet their role in medical education has been largely overlooked. In this chapter, we examine how multiple research methodologies and measures can be used to detect and analyze emotions within authentic medical learning environments. Our goal is to highlight conceptual, methodological, and practical considerations that should be attended to by researchers, educators, and medical professionals interested in examining the role of emotions within medical education. Findings from our literature review and empirical work suggest that appraisal models that treat emotions as multi-componential (e.g., control-value theory) can provide a fruitful framework for examining links between emotions and learning. In terms of measuring emotions, self-report can be useful with respect to scalability and capturing subjective experience, whereas behavioral and physiological measures provide continuous data streams and are less susceptible to cognitive or memory biases. Other factors researchers and health sciences professionals should take into consideration when selecting a measure of emotion include: efficiency; level of granularity; and person-centered versus group-level analyses. Recent work suggests that multiple measures of emotions can be integrated into affect-aware learning technologies to aid instructional design by detecting, tracing, and modeling emotional processes during learning.

1 Introduction

Theories of achievement emotions (e.g., Pekrun, Elliot, & Maier, 2006) and self-regulated learning (e.g., Boekaerts, 2011; Pintrich, 2000, 2004; Zimmerman, 2000, 2011) suggest that emotions can either enhance or hinder learning, motivation, and performance (Pekrun & Linnenbrink-Garcia, 2014). For instance, positive emotions (e.g., enjoyment) have been linked to more creative and flexible modes of thinking, such as elaboration, critical thinking, and metacognitive monitoring, whereas negative emotions (e.g., anxiety) have been related to more rigid thinking, such as the use of rehearsal strategies (Pekrun, Goetz, Frenzel, Barchfeld, & Perry, 2011; Pekrun, Goetz, Titz, & Perry, 2002). Within medicine and health sciences, however, researchers have largely overlooked the functional role of emotions for learning and performance (McNaughton, 2013). Recently, calls have been made to address this gap (e.g., Artino, 2013; Artino, Holmboe, & Durning, 2012; McConnell & Eva, 2012; O’Callaghan, 2013; Shapiro, 2011). As Croskerry, Abbass, and Wu (2008) argue, there is a need for medical educators to better understand the role of emotions and apply this knowledge to clinical training given the potential impact on decision-making and affect-based errors.

In order to effectively address these calls and empirically examine the role of emotions within medical education, there is a need to critically examine how tools and methods can be used to measure emotions within medical education. With this in mind, we will review a diverse range of measures and methods from fields such as psychology, education, and affective sciences. We will also provide examples from our current research, which examines emotions among medical trainees and experts within authentic learning environments. This includes our empirical work on surgical skills training within operating rooms (e.g., Duffy, Lajoie, Jarrell et al., 2015; Duffy, Lajoie, Azevedo, Pekrun, & Lachapelle, 2014; Duffy, Lajoie, Pekrun, Ibrahim, & Lachapelle, 2015), emergency care training within simulation settings (e.g., Duffy et al., 2013, Duffy, Azevedo, Sun et al., 2015), and diagnostic reasoning training within BioWorld, a computer-based learning environment (e.g., Lajoie, 2009; Lajoie et al., 2013). Although this chapter focuses on medical education, it also has broader implications for research and instruction within other domains of health science education, particularly those professions that involve patient care, team dynamics, complex tasks, and high-stakes situations where a range of emotions are likely to be more strongly elicited and influential for learning and performance. As Leblanc, McConnell, and Monteiro (2015) note: “… health professions education could be enriched by greater understanding of how these emotions can shape cognitive processes in increasingly predictable ways” (p. 265).

In the sections that follow, we discuss issues related to validity and reliability, challenges of collecting and analyzing data, as well as theoretical and conceptual considerations. In order to design advanced learning technologies that are sensitive to the role of emotions for learning and performance, we argue that it is critical to understand the advantages and limitations of various methodological approaches. As such, rather than provide an exhaustive review, the goal of this chapter is to provide a better understanding of considerations when measuring emotions within medical education. We start by providing a theoretical background of emotions as the assumptions and issues therein have important methodological implications.

2 Theoretical and Empirical Background

2.1 The Nature and Structure of Emotions

Emotions are assumed to serve important functions for survival by allowing individuals to react quickly, intensely, and in an adaptive fashion (Plutchik, 1980). Emotions are also important for psychological health and well-being and can impact the surrounding social climate (Izard, 2010). But what is meant by the term emotion? How are emotions generated? And what is their role in learning and performance? Emotions are considered by theorists and researchers to fall within the broader concept of affect, but are distinct from other affective phenomena, such as moods, in that they are more intense, have a clearer object-focus, a more salient cause (linked to an event and situation), and are experienced for a shorter duration (Scherer, 2005; Shuman & Scherer, 2014). A rich history of scholarly interest in emotions (e.g., Darwin, 1872; James, 1890) has produced a number of theories of emotion (for review see Gendron & Barrett, 2009; Gross & Barrett, 2011) including theories of basic emotions (e.g., Ekman, 1992; Ekman & Cordaro, 2011; Izard, 1993), psychological construction (e.g., Barrett, 2009; Russell, 2003), and appraisal theories (e.g., Frijda, 1986; Lazarus, 1991; Scherer, 1984). For the purposes of this review, we focus on componential and appraisal approaches to studying emotions, as these frameworks have gained greater traction within educational psychology.

According to the componential model, emotions are widely considered to be multifaceted in that each emotion is composed of coordinated sets of psychological states that include subjective feeling, as well as cognitive, motivational, expressive, and physiological components (Gross & Barrett, 2011; Pekrun, 2006; Scherer, 2005, 2009). As such, this framework considers emotions to be dynamic and recursive episodes that reflect a continuous change in each of the multiple components over time (Scherer, 2009). According to appraisal models of emotions (for review see Moors, Ellsworth, Scherer, & Frijda, 2013) and related frameworks such as control-value theory (Pekrun, 2006; Pekrun, Frenzel, Goetz, & Perry, 2007; Pekrun & Perry, 2014), subjective cognitive appraisals lie at the heart of emotion generation. Within the control-value framework (Pekrun, 2006), for example, individuals will experience specific emotions depending on whether or not they feel personally in control of activities or outcomes that they perceive to be important to them. For instance, if an activity is perceived to be controllable and is positively valued, then an individual is expected to experience enjoyment (Pekrun, 2006; Pekrun et al., 2007), whereas, if an activity is perceived to be controllable but is negatively valued, then anger is likely to occur (Pekrun, 2006; Pekrun et al., 2007). Within this framework, subjective appraisals of value and control (high versus low) play a key role in predicting the specific emotions experienced at a given point in time. The antecedents for these subjective appraisals are influenced by a host of features in the social environment, such as information related to controllability, autonomy support, quality of instruction, achievement-related expectancies of peers/instructors (i.e., how definitions of success and failure are influenced by social expectations), consequences of achievement, and cultural values related to achievement (Pekrun et al., 2006).
Theoretically, once emotions are activated, they can in turn influence performance through the mediated role of cognition (e.g., working memory, information processing), motivation (e.g., persistence, effort), and self-regulated learning (e.g., monitoring understanding, evaluating progress; Pekrun et al., 2007; Pekrun & Perry, 2014). In the following section we describe empirical work that has examined these relations within the context of learning and achievement.
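The two control-value predictions described above can be written as a simple lookup. The following is a minimal illustrative sketch rather than an implementation of the theory: the function name, the "high"/"low" and "positive"/"negative" labels, and the choice to return `None` for any appraisal pattern not named in the text are all our assumptions.

```python
from typing import Optional

# Only the two appraisal patterns named in the text are encoded here.
# Keys are (perceived control, perceived value) pairs; the labels are
# hypothetical and chosen for illustration.
PREDICTED_EMOTION = {
    ("high", "positive"): "enjoyment",  # controllable, positively valued activity
    ("high", "negative"): "anger",      # controllable, negatively valued activity
}


def predicted_activity_emotion(control: str, value: str) -> Optional[str]:
    """Return the emotion predicted for an appraisal pattern, or None
    when the pattern is not one of the two described in the text."""
    return PREDICTED_EMOTION.get((control, value))


print(predicted_activity_emotion("high", "positive"))
print(predicted_activity_emotion("low", "positive"))
```

Returning `None` for unlisted patterns keeps the sketch from asserting predictions that the passage above does not make.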

2.2 Linking Emotions to Learning and Achievement

Research has demonstrated that positive emotions are generally related to more creative and flexible modes of thinking such as elaboration, critical thinking, and metacognitive monitoring, whereas negative emotions (e.g., anger, anxiety) have been linked with more rigid thinking, such as rehearsal strategies (Pekrun et al., 2002). It has also been found that certain negative emotions (e.g., boredom) may hamper self-regulation, whereas positive emotions are related to facets of self-regulation including goal setting, strategy use, monitoring, and evaluation (Pekrun et al., 2002, 2011). In addition, negative emotions are generally negatively related to achievement whereas positive emotions are positively related to achievement (Pekrun et al., 2002, 2011). Within medical education, for example, there is some evidence to suggest that within classroom settings, positive emotions, such as enjoyment, may be beneficial for achievement (Artino, La Rochelle, & Durning, 2010). However, both positive and negative emotions can focus attention away from the task and lead to task-irrelevant thinking by redirecting attention toward the object of the emotion (Meinhardt & Pekrun, 2003; Pekrun et al., 2006). For example, anxiety can be useful if it leads to increases in effort or studying but ineffective if it interferes with attention (Pekrun et al., 2006). This is consistent with research in medical and health science education that has demonstrated complex relationships between anxiety and performance; in some cases anxiety is positively related to performance (e.g., LeBlanc & Bandiera, 2007; LeBlanc, Woodrow, Sidhu, & Dubrowski, 2008), whereas in others it has a negative relationship (e.g., Harvey, Bandiera, Nathens, & LeBlanc, 2012; Hunziker et al., 2011; LeBlanc et al., 2012; Leblanc, MacDonald, McArthur, King, & Lepine, 2005). As Harvey et al. (2012) suggest, these differential effects may be related to the complexity of the task: during less complex tasks (e.g., suturing) anxiety may serve to enhance attention, whereas during more complex tasks (e.g., trauma resuscitation) anxiety may place excessive demands on limited cognitive resources and impede performance. As such, whether or not a particular emotion is considered adaptive is context-dependent (Pekrun et al., 2006). For example, as McConnell and Eva (2012) suggest based on their review, positive emotions may enhance more global big-picture thinking among health professionals, whereas negative emotions may lead an individual to become detail-oriented, which can be beneficial for tasks demanding such attention. On the other hand, negative emotions may also promote reliance on well-rehearsed and formulaic problem-solving strategies, whereas positive emotions may lead to more cognitive flexibility and adaptation of strategies, which can be more useful when a strategy is ineffective. More broadly, emotions can influence health professionals’ decision-making and performance through their impact on various cognitive processes, such as attention, perception, reasoning, and memory (Leblanc et al., 2015).

Within medicine and health sciences, emotions have typically been assessed in terms of psychological health, burn-out, and well-being (e.g., Chew, Zain, & Hassan, 2013; Dyrbye et al., 2014; Satterfield & Hughes, 2007) rather than their functional role in learning and performance. Research that has examined links between affect-related variables and clinical performance has focused primarily on the role of stress (e.g., Harvey et al., 2012; Leblanc, 2009; LeBlanc et al., 2011; Piquette, Tarshis, Sinuff, Pinto, & Leblanc, 2014), which is typically considered to be a negative state that aligns closely with anxiety (Sharma & Gedeon, 2012). Table 10.1 includes representative examples of studies and measures resulting from a literature search of research examining the role of emotions within medical and health sciences education. These studies range from emergency medicine and technical skills training to clinical reasoning and communication skills training.

As demonstrated in Table 10.1, previous research has typically employed a combination of self-report and physiological measures, particularly to identify stress patterns. The few studies within medical education that have examined a broader range of emotions from a learning and achievement perspective have typically relied on self-report measures (e.g., Artino et al., 2010; Fraser et al., 2012, 2014; Hunziker et al., 2011; Kasman, Fryer-Edwards, & Braddock, 2003). As such, there is a need to consider a more diverse array of emotional states and measures.

3 Measuring Emotions

As the emotion literature grows, there remain several unresolved questions and discussions regarding the nature and structure of emotions, which have methodological and analytical implications. For example: should emotions be characterized as discrete or dimensional? Are they precipitated by cognitive appraisals or are they largely automated responses? Are they universal or context-specific? Are they distinct from other psychological states? Despite these unresolved conceptual issues, emotion research continues to move forward, as do methodological and analytic developments. Perhaps, as Picard (2010) and other affective computing researchers (Calvo & D’Mello, 2012) and neuroscience researchers (Immordino-Yang & Christodoulou, 2014) have suggested, data collected from advanced emotion-detection technologies and methodologies may provide insights that allow researchers to overcome conceptual hurdles and enrich theoretical models. To assess the utility of these methods for medical education, however, it is important to first consider approaches to organizing the array of emotion measures.

3.1 Organizing and Classifying Emotion Measures

Emotion measures and their corresponding analytic techniques can be organized based on conceptual features and methodological approaches. For example, from a conceptual standpoint, emotions can be grouped according to valence: positive emotions (pleasant valence) versus negative emotions (unpleasant valence); physiological arousal: activating versus deactivating (Pekrun et al., 2002, 2006); and action tendency: whether the emotion is associated with a tendency to approach stimuli (e.g., anger, excitement) or avoid stimuli (e.g., anxiety; Frijda, 1986, 1987; Mulligan & Scherer, 2012). Emotions can also be classified according to the object or event focus, which refers to the focus of the attention (Pekrun & Linnenbrink-Garcia, 2014; Shuman & Scherer, 2014). For example, outcome emotions relate to the outcome of an event (e.g., anticipating success on an exam), whereas activity emotions relate to the task at hand (e.g., frustration during problem-solving; Pekrun, 1992; Pekrun et al., 2002). A distinction can also be made based on temporal generality of an emotion: state emotions (occur for a specific activity at a specific point of time) or trait emotions (recurring emotions that relate to a specific activity or outcome; Pekrun et al., 2011). Emotions can also be organized based on taxonomies, which consist of discrete emotions (e.g., frustration) that are likely to be activated during specific activities or within specific contexts (e.g., academic emotions).

In addition to these conceptual groupings, emotion measures can also be organized based on the methodological approaches employed. For example, emotion measures can be distinguished according to the type of data channel used (e.g., self-report, behavioral, physiological), the frequency of data points (e.g., one point in time versus continuous process measures), and the time of administration in relation to the event of interest (e.g., concurrent versus retrospective). Overall, these systems of organizing emotions can be used to identify underlying assumptions, as well as the benefits and limitations of each measure. These conceptual and methodological features will be taken into consideration in the following review of emotion measures, which we organize according to the type of data channel (self-report, behavioral, physiological). Table 10.2 contains a summary of each data channel, including example measures, advantages, limitations, and applications for medical education.

3.2 Self-Report Measures

Self-report measures of emotions include data channels that rely on individuals’ perceptions and communication of emotional states, typically collected through questionnaires and interviews. Given that emotions are considered to be subjective experiences (Shuman & Scherer, 2014) that can be verbally communicated (Pekrun & Linnenbrink-Garcia, 2014), self-reports will likely continue to be widely used (Shuman & Scherer, 2014). Questionnaires are typically designed to measure frequency and intensity of emotions, whereas interviews can be used to further explore participants’ perceptions, experiences, and antecedents related to the onset of emotional states. These types of measures vary greatly in terms of breadth and structure, such as single versus multi-item scales, open-ended questions versus structured ratings, state versus trait perspectives, and retrospective versus concurrent reporting (Pekrun & Bühner, 2014). On the surface, self-reports seem to solely assess the subjective feeling component of emotions yet questionnaires and interviews can also be designed to measure other emotion components by designing questions or scale items that include physiological, cognitive, motivational, and expressive responses corresponding to each unique emotion (Pekrun & Bühner, 2014).

Questionnaires can be administered in several ways, including traditional paper-based formats, as well as electronic and web-based questionnaires accessible through computers and Wi-Fi-enabled handheld mobile devices, such as tablets and smartphones (see Fig. 10.1). These electronic questionnaires afford the flexibility to unobtrusively measure emotions in diverse environments. They also allow responses to be reviewed in real-time, which can be useful for identifying key emotional events to examine further during follow-up interviews or to feed into adaptive learning systems.

However, there are several unresolved questions regarding the ideal frequency and timing of administration. For instance: how often should a questionnaire be administered to provide a representative picture of concurrent emotions during learning? Should the deployment of questionnaires be aligned to key events or set time intervals? Whereas time-based sampling captures the trajectory of emotions across different phases of learning, event-based sampling can reveal sources of emotions (Turner & Trucano, 2014). Decisions regarding administration will largely depend on the nature of the learning environment and research questions. For instance, researchers may aim to capture salient emotions experienced during learning or they may be interested in linking emotions to specific events, such as the deployment of self-regulated learning processes (e.g., cognitive, metacognitive, motivational) or instructor prompts and feedback. Furthermore, some naturalistic learning environments may require that the questionnaire be administered less frequently to reduce interruptions to the learning activity or performance task at hand.

Experience sampling methods (ESM; Csikszentmihalyi & Larson, 1987; Hektner, Schmidt, & Csikszentmihalyi, 2007) have been used to provide an unobtrusive and representative sample of individuals’ emotions in naturalistic settings (e.g., Becker, Goetz, Morger, & Ranellucci, 2014; Goetz et al., 2014; Nett, Goetz, & Hall, 2011) by prompting participants to respond to questions at random time points over a specific interval (e.g., over the course of a day, week, or month). This technique has also been used within medical settings to measure trainees’ emotional experiences (e.g., Kasman et al., 2003). Although this method is intended to capture a representative selection of emotional experiences, it falls short of providing a continuous measure of emotional states at a fine-grained (second-to-minute) level of temporality. More recently, emote-aloud methods have been used to prompt participants to communicate their emotional states during learning in a manner similar to think-aloud protocols (Craig, D’Mello, Witherspoon, & Graesser, 2008), thus capturing an online trace of emotions and potentially state-transitions as they occur in real-time. However, further work is needed to determine whether this approach is cognitively taxing for participants, whether it is susceptible to social desirability biases or individual variability in verbosity, and how it differs from other speech and paralinguistic channels used to assess emotions (described below).
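To make the contrast between set time intervals and the random prompting used in experience sampling concrete, the following is a minimal sketch of how the two prompt schedules might be generated. It is not taken from any of the studies cited; the function names, the whole-minute resolution, and the optional seed are assumptions made for illustration.

```python
import random
from datetime import datetime, timedelta


def fixed_interval_prompts(start, end, every_minutes):
    """Time-based sampling: one prompt at each set interval after start,
    up to and including end."""
    step = timedelta(minutes=every_minutes)
    prompts = []
    t = start + step
    while t <= end:
        prompts.append(t)
        t += step
    return prompts


def random_prompts(start, end, n_prompts, seed=None):
    """Experience-sampling style: n_prompts distinct random whole-minute
    time points in [start, end), returned in chronological order.
    Raises ValueError if the window has fewer minutes than n_prompts."""
    rng = random.Random(seed)  # seed only makes a schedule reproducible
    total_minutes = int((end - start).total_seconds() // 60)
    offsets = sorted(rng.sample(range(total_minutes), n_prompts))
    return [start + timedelta(minutes=m) for m in offsets]


# Example: one training day from 09:00 to 17:00.
day_start = datetime(2024, 1, 8, 9, 0)
day_end = datetime(2024, 1, 8, 17, 0)
print(fixed_interval_prompts(day_start, day_end, every_minutes=120))
print(random_prompts(day_start, day_end, n_prompts=5, seed=1))
```

Event-based sampling is not shown because it depends on how key events are logged in a given learning environment; in either schedule, the number of prompts would need to be weighed against the interruption each prompt causes.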

In terms of the range of emotions detected using self-report measures, the education literature has been largely dominated by a focus on anxiety (Zeidner, 2014). This is also the case for medical and health sciences education as evidenced by the use of the State-Trait Anxiety Inventory (Spielberger, Gorsuch, Lushene, Vagg, & Jacobs, 1983) across a number of studies (e.g., Arora et al., 2010; van Dulmen et al., 2007; Harvey et al., 2012; Leblanc et al., 2005; Meunier et al., 2013; Piquette et al., 2014). Although these scales have provided important insights into the role of stress in learning, they are complicated by issues with discriminant validity: namely, they appear to assess multiple negative emotions, such as shame and hopelessness, rather than providing a pure measure of anxiety (Pekrun & Bühner, 2014). More recently, the Achievement Emotions Questionnaire (AEQ; Pekrun et al., 2002, 2011) has been used to capture a broader range of emotions expected to be elicited within achievement contexts, which according to Pekrun and Linnenbrink-Garcia (2014, p. 260) can be defined as: “activities or outcomes that are judged according to competence-related standards of quality.” Several studies (e.g., Goetz, Pekrun, Hall, & Haag, 2006; Pekrun et al., 2006, 2009, 2011; Pekrun, Elliot, & Maier, 2009; Pekrun, Goetz, Daniels, Stupnisky, & Perry, 2010) have used the AEQ to assess trait and state emotions (e.g., hope, pride, enjoyment, relief) across various facets of academic achievement settings (e.g., learning and test-taking) at various points in time (e.g., before, during, after the activity). This measure has also been used to assess course-related emotions for medical students (e.g., Artino et al., 2010).

In our research within medical education (e.g., Duffy et al., 2014; Duffy, Lajoie, Jarrell et al., 2015; Duffy, Lajoie, Pekrun et al., 2015), we have used Likert-scale questionnaires and semi-structured interviews to assess state emotions before, during, and after learning and performance activities across authentic learning environments (simulation training, surgical settings, and computer-based learning environments). We have found questionnaires to be relatively efficient to administer and analyze across a range of learning environments, whereas interviews can be conducted retrospectively to provide rich contextual information regarding the antecedents, object-focus, and role of emotions. Collectively, these self-report measures allow us to examine participants’ perceptions and subjective experience of emotions.

Within computer-based medical learning environments (e.g., Duffy, Lajoie, Jarrell et al., 2015; Lajoie et al., 2013), we have embedded time-triggered questionnaires to measure activity emotions as they occur at multiple points during the session. These concurrent measures are considered to be more valid (Mauss & Robinson, 2009) as they are less susceptible to memory biases and do not appear to interfere with learning within this environment. In contrast, within team-based simulation and surgical settings (Duffy et al., 2013; Duffy, Lajoie, Pekrun et al., 2015; Duffy, Azevedo, Sun et al., 2015), we have opted to measure self-reported activity emotions retrospectively (via questionnaires and interviews) given that administering questionnaires during the activity could significantly interfere with performance and group dynamics within these achievement-oriented environments.

To conduct follow-up interviews, we have integrated both retrospective (Ericsson & Simon, 1993) and cognitive interviewing techniques (Muis, Duffy, Trevors, Ranellucci, & Foy, 2014). In addition to gaining information about the nature of emotional experiences, these methods allow us to assess convergent validity (i.e., whether there is alignment between interview and questionnaire responses), construct validity (i.e., whether interpretations and responses to questionnaire items align with theoretical assumptions), and face validity (i.e., whether the questionnaire presents a representative range of emotions experienced and is presented in a way that is clear). Eye-tracking and video replay can also be integrated into retrospective interviewing protocols to align participants’ recall of emotional states with cued learning events (see Van Gog & Jarodzka, 2013).

From analyses of questionnaire data collected from medical trainees within surgical settings (i.e., conducting a vein harvest procedure within the operating room) and computer-based learning environments (i.e., conducting a diagnostic reasoning task within BioWorld to solve a virtual case), we have found that the more intensely experienced emotions across these environments include: curiosity, compassion, happiness, relaxation, confusion, pride, and relief. The salience of these emotions varies according to prior experience (e.g., high versus low), type of task/environment (e.g., technical skills within the operating room versus diagnostic reasoning skills within computer-based learning environments), and time of response relative to learning or performance (e.g., before, during, after task; Duffy, Lajoie, Jarrell et al., 2015). From analyses of interview responses, we have discovered that the emotion “disgust” is perceived as less relevant than other emotions. These interviews also illuminated the recurring theme of group dynamics in emotion generation within team environments.

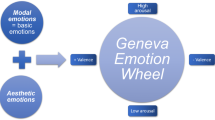

To collect these data, we have developed a scale (Medical Emotion Questionnaire), which consists of emotion taxonomies specifically selected for their relevance to medical education. For example, within most medical education environments, it is likely that achievement emotions (e.g., anxiety, enjoyment; Pekrun et al., 2011), social emotions (e.g., compassion, gratitude; Fisher & van Kleef, 2010; Weiner, 2007), and epistemic or knowledge emotions (e.g., confusion, curiosity; Muis et al., 2015; Pekrun & Stephens, 2011) are activated given the inherent performance expectations, social dynamics, and knowledge-based nature of medicine. Basic emotions (e.g., fear, anger, sadness; Ekman, 1972, 1992) may also play a role within high-stakes medical education environments, such as simulations and naturalistic settings (e.g., surgical and emergency rooms) given that crisis responses might generate what are considered to be more automated and psychologically primitive or elemental emotions (Tracy & Randles, 2011). Our approach is consistent with Shuman and Scherer’s (2014) recommendation to include multi-emotion measures, as well as previous work that has adapted self-report measures to include taxonomies of emotions that are more context-sensitive (e.g., Zentner, Grandjean, & Scherer, 2008) given that emotions are considered to be domain-specific and may vary according to the educational environment (D’Mello, 2013; Goetz, Frenzel, Pekrun, Hall, & Lüdtke, 2007; Goetz et al., 2006) and sociocultural context (Turner & Trucano, 2014). These developments prompt new questions, such as whether the emotions included are strictly orthogonal (represent independent and unique emotions) or whether they represent several clusters or families of related emotions that share similar components (e.g., sadness and disappointment; Mauss & Robinson, 2009; Shuman & Scherer, 2014).

A key drawback to using self-report measures is that they are susceptible to several validity threats including cognitive and memory biases. Participants may forget the emotional state they are asked to recall or construct a false memory of an emotion to explain their performance. It is also not clear whether participants’ interpretation of self-report questions aligns with researchers’ assumptions or whether they possess adequate emotional awareness to monitor and effectively describe their emotions. This latter concern may be particularly relevant within medical domains, as it has been suggested that the culture of medicine encourages students to disconnect from their emotions to the extent that they may have difficulty recognizing and processing emotional states (Shapiro, 2011). As previously mentioned, another limitation is that self-reports are not always feasible to administer concurrently during performance tasks within high-stakes environments as the nature of these measures interrupts the task itself. As such, it is worth complementing questionnaire data using less intrusive methods; in the following section, we discuss behavioral measures of emotion.

3.3 Behavioral Measures

There is a wide array of behavioral measures, which rely on overt actions, movements, and mannerisms to detect emotional states. Although these measures directly assess the expressive component of emotions, it is argued that these expressions correspond to specific emotional states. Typically, behavioral measures are used to identify the frequency of emotions, although intensity can also be assessed depending on the coding methods used (Reisenzein, Junge, Studtmann, & Huber, 2014).

Facial expressions are one of the more commonly used behavioral channels for emotion detection and typically rely on video recordings of facial expressions as they occur and change over time. Coding systems developed to detect these emotional states typically rely on facial movements. While some coding methods (e.g., Kring & Sloan, 2007) focus exclusively on sets of muscle movements that are postulated to represent theoretically meaningful emotions, the Facial Action Coding System (Ekman & Friesen, 1978; Ekman, Friesen, & Hager, 2002) includes a broad range of action units (micro motor muscle movements), and as such, is designed to provide a more objective approach to coding. The FACS approach may also be well suited to natural, subtle expressions given that the facial action unit combinations can result in thousands of possible facial expressions (Zeng, Pantic, Roisman, & Huang, 2009). Automated systems for emotion detection can help to reduce human resources needed to manually code emotions (Azevedo et al., 2013; Zeng et al., 2009). For example, automatic facial recognition software, such as Noldus FaceReader™ is designed to classify basic emotions automatically (typically post-hoc) and has been used within a host of studies to detect and trace emotion (e.g., Craig et al., 2008; Pantic & Rothkrantz, 2000).

Speech provides another behavioral channel commonly used to detect emotions through discourse patterns and paralinguistic/vocal features (e.g., intonation, pitch, speech rate, amplitude/loudness) (e.g., D’Mello & Graesser, 2010, 2012). Previous research has found that combinations of multiple speech features can help to detect specific emotional states (Mauss & Robinson, 2009). Software programmes also exist for analyzing linguistic and paralinguistic content (Zeng et al., 2009). These programmes share similar limitations to facial recognition software in that they are often restricted to detecting basic emotions (e.g., happiness, sadness, disgust, fear, anger, surprise), which may not capture the full range or types of emotions activated within educational settings (Zeng et al., 2009). These programmes are also based on databases of deliberately displayed (i.e., posed) expressions and, as such, are better equipped to detect extreme or prototypical expressions rather than the natural spontaneous expressions we would expect to see in most learning environments (Zeng et al., 2009).

Recent trends have also included analysis of other behavioral features, such as body language and posture (e.g., D’Mello, Dale, & Graesser, 2012; D’Mello & Graesser, 2009). Compared to facial expression and speech features, which appear to be better equipped to predict valence and arousal dimensions of emotions respectively, specific body postures appear to provide markers for distinct emotional states that cannot be detected as reliably with other behavioral data channels (Mauss & Robinson, 2009). For example, research has shown that there is a link between pride and increased posture, and embarrassment and reduced posture (Keltner & Buswell, 1997; Tracy & Robins, 2004, 2007). An upright posture has also been observed during states of confusion (D’Mello & Graesser, 2010).

In our research within medical education, we have collected facial expression data, as well as body language, and speech/vocal features during computer-based learning and simulation training using video and audio recordings (Duffy et al., 2013; Duffy, Lajoie, Jarrell et al., 2015; Duffy, Azevedo, Sun et al., 2015). Although we have not collected this data to the same extent within naturalistic settings (e.g., operating rooms, clinical settings) given the practical challenges of installing equipment, we have conducted field observations that rely on similar behavioral indices. Overall, we have found that these data channels are relatively inconspicuous and allow participants to complete learning and performance tasks without interruption. They also allow us to detect emotions without reliance on participants’ knowledge and unbiased communication of their emotional states. Collectively, these behavioral measures provide a concurrent trace that allows us to measure the onset and transition of emotional expressions as they occur in real-time. However, the analyses of this data can be time and labor intensive, particularly when human coding methods are employed.

We have found that computer-based learning environments are ideal for facial expression detection; high-quality video recordings can be captured using a mounted camera given that these sessions are typically conducted with one participant at a time and involve relatively stationary behavior. However, this may pose challenges when using a multimodal approach. For instance, if we instruct participants to sit upright and refrain from covering their face with their hands (to maintain a consistent view of the face), this interferes with the ability to collect recordings of natural body movements, such as leaning towards the computer when curious. Similarly, using think-alouds or emote-alouds for speech and paralinguistic analysis can interfere with the accuracy of facial expression detection as the coding process is reliant on facial muscle movements, including the mouth, which may change as an artifact of speech rather than facial expression. Segmenting videos for reliable expressions can be time-consuming; fortunately, facial recognition software provides probabilities of classified emotional states, so classifications that fall below a chosen probability threshold can be verified manually. Figure 10.2 illustrates automated classification of emotion (in this case happiness) using facial expression recognition software during learning within a computer-based learning environment. Moment-to-moment fluctuations in emotion intensity and valence can be displayed over time, as well as a summary of proportions of expressed emotions during the entire learning session. Detailed log files are produced to analyze variations in emotional states and emotion recognition accuracy.
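
The verification and summary steps described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the `(timestamp, label, probability)` tuple format, the `summarize_frames` helper, and the 0.6 cut-off are hypothetical and do not reflect the export format or defaults of any particular software package.

```python
from collections import Counter

def summarize_frames(frames, min_confidence=0.6):
    """Split classified frames into accepted and to-be-verified sets.

    frames: list of (timestamp_s, label, probability) tuples
            (hypothetical format, not a real software export).
    min_confidence: assumed cut-off below which a human coder
            should check the classification.
    Returns (proportions of accepted labels, frames needing review).
    """
    to_verify = [f for f in frames if f[2] < min_confidence]
    accepted = [f for f in frames if f[2] >= min_confidence]
    counts = Counter(label for _, label, _ in accepted)
    total = sum(counts.values())
    proportions = {label: n / total for label, n in counts.items()} if total else {}
    return proportions, to_verify

# Invented example values, for illustration only.
frames = [
    (0.0, "neutral", 0.91),
    (0.5, "happy", 0.88),
    (1.0, "happy", 0.42),
    (1.5, "neutral", 0.75),
    (2.0, "surprised", 0.55),
]
proportions, to_verify = summarize_frames(frames)
print(proportions)   # share of each accepted label across the session
print(to_verify)     # low-probability frames flagged for manual coding
```

In practice the cut-off would need to be chosen and justified for the specific software and sample, and the flagged frames would go to trained coders rather than being discarded.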

Simulation environments allow for more natural body movements and speech behaviors but the issue of multimodal interference still exists. The increased mobility and dynamic nature of this environment also introduces new challenges, particularly within team-based simulations. For instance, movement can interfere with the ability to consistently capture facial expressions, and the distance between the camera(s) and individual team members does not provide ideal conditions for facial recognition software due to lack of precision and inconsistent facial views. Within these settings we have opted to code facial expressions, body language, and speech by coding each team member individually and collapsing across the group for analyses and comparative purposes (e.g., team leader versus team members; Duffy, Azevedo, Sun et al., 2015). Emotion events can be segmented according to the onset and endpoint of an emotional cue (as opposed to pre-determined time segments), which is linked to a behavioral channel (e.g., facial expression, body language). Neutral can be included to provide a baseline or resting state (no positive or negative valence) and allow for a continuous stream of emotion codes. To establish validity and reliability, we have used multiple coders, subject-matter experts’ analysis, and theoretically driven coding schemes. This process brings to light several considerations, including: the number of data channels needed to make grounded inferences, whether certain data channels are more reliable than others, segmentation of emotional events (time-based versus event-based), the degree of intensity needed to classify an emotion as present versus absent, the co-occurrence of multiple emotions, conflicting evidence from different data channels, and as Järvenoja, Volet, and Järvelä (2013) have discussed, the occurrence of socially shared emotional events that appear at the group rather than individual level.
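
To make the segmentation and reliability ideas above concrete, the following Python sketch collapses a stream of codes (with neutral as the baseline) into event-based segments and computes agreement between two coders. Cohen's kappa is used here as one common agreement statistic; it is an assumption for illustration, not necessarily the statistic used in the studies cited. The function names and the coded values are likewise hypothetical.

```python
from collections import Counter

def to_events(codes, step_s=1.0):
    """Collapse per-interval codes into (label, onset_s, end_s) events.

    Consecutive identical codes are merged, so each event runs from the
    onset of a cue to its endpoint rather than over a fixed time window.
    """
    events = []
    for i, label in enumerate(codes):
        start = i * step_s
        if events and events[-1][0] == label:
            events[-1] = (label, events[-1][1], start + step_s)
        else:
            events.append((label, start, start + step_s))
    return events

def cohens_kappa(coder_a, coder_b):
    """Chance-corrected agreement between two coders on the same intervals."""
    n = len(coder_a)
    observed = sum(a == b for a, b in zip(coder_a, coder_b)) / n
    freq_a, freq_b = Counter(coder_a), Counter(coder_b)
    expected = sum(freq_a[c] * freq_b[c] for c in freq_a) / (n * n)
    if expected == 1.0:  # both coders used a single identical code throughout
        return 1.0
    return (observed - expected) / (1 - expected)

# Invented codes for one team member, one code per second.
coder_a = ["neutral", "neutral", "anxiety", "anxiety", "neutral", "pride"]
coder_b = ["neutral", "neutral", "anxiety", "neutral", "neutral", "pride"]

events = to_events(coder_a)
kappa = cohens_kappa(coder_a, coder_b)
print(events)
print(round(kappa, 3))
```

Including neutral in the code stream keeps the events contiguous, which is what allows the per-second codes to be merged without gaps.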

One advantage of human coding within these environments is that coders can be trained to detect subtle and natural changes in emotion by analyzing microexpressions (Matsumoto & Hwang, 2011) or including contextual cues (e.g., baseline behavior, events preceding emotional event, peer responses, changes over time). This in situ idiographic approach can boost ecological validity by detecting variations that are unique to an individual, group, or task. Another benefit of human coders is that they can employ adapted coding schemes that include a range of emotion taxonomies and corresponding behavioral indices that are context- and domain-sensitive. As previously mentioned, most of the automated computer-based coding systems classify basic emotions exclusively. Recent advances in machine learning techniques (e.g., use of log files and eye-tracking for contextual cues) and facial recognition software (e.g., including databases composed of naturalistic facial expressions and learner-centered emotions) may help to address these limitations (Zeng et al., 2009).

Although behavioral measures obviate several limitations of self-report data, they are still influenced by the broader culture of a given domain. As Mauss and Robinson (2009) note, various factors such as social dynamics, feedback, and success/failure perceptions may affect the type and nature of facial expressions displayed, which suggests that expressions may be highly context-sensitive, rather than a one-to-one indicator of specific emotional states (Zeng et al., 2009). For example, in certain situations a smile may be indicative of embarrassment rather than happiness (Mauss & Robinson, 2009). Within medicine, it may be the case that expressions are masked or displayed in a different manner than in other educational environments. In particular, social desirability may reduce emotion expression within medical cultures as participants may consciously or unconsciously conceal observable displays of emotions that they are currently experiencing. Medical students may learn through training and socialization that communicating and displaying one’s emotions (whether explicitly or implicitly) is inappropriate (O’Callaghan, 2013; Shapiro, 2011). This may be the reason why behavioral measures of emotions have been less commonly used within medical and health sciences education research, compared to self-report and physiological measures. In the following section, we discuss physiological measures that are less vulnerable to these potential validity threats.

3.4 Physiological Measures

Physiological measures of emotion rely on responses of the central and autonomic nervous systems. Central nervous system (CNS) measures attempt to link brain regions to emotions and commonly employ electroencephalogram (EEG) or neuroimaging methods. EEGs record electrical activity to identify which hemispheres (left versus right) and brain regions (e.g., frontal region) are activated during emotional states, whereas neuroimaging technologies (e.g., functional magnetic resonance imaging, positron emission tomography) can identify activation of more precise brain regions (e.g., amygdala) by measuring changes in blood flow and metabolic activity (Mauss & Robinson, 2009). There are several advantages to these types of devices. Similar to behavioral measures, they do not require accurate self-reports or interruption from activities. They also are less susceptible to impression management. These channels provide a fine-grained continuous trace that captures the dynamic nature of physiological arousal as it occurs in real time (Calvo & D’Mello, 2012).

In general, while these measures of the CNS show promise, it is likely that emotional states involve complex networks or systems of brain activity rather than isolated regions; as such, further work is needed to identify circuits of activation (Mauss & Robinson, 2009). At this stage, correlates between brain states and emotions are largely limited to discriminating between positive/negative valence and approach/avoidance action tendency dimensions of emotion rather than discrete emotional states (Mauss & Robinson, 2009) although progress is being made towards linking brain regions to specific emotions, as demonstrated in research linking fear to the amygdala (Murphy, Nimmo-Smith, & Lawrence, 2003). Furthermore, the methods employed to date typically involve provoking a prototypical or extreme emotional state by presenting sensational stimuli in highly controlled experimental settings rather than capturing the more subtle range of naturally occurring emotions likely to be experienced within academic and achievement settings.

In contrast to CNS measures, peripheral physiological measures of emotions rely on indices of the autonomic nervous system, which is responsible for managing activation and relaxation functions of the human body (Öhman, Hamm, & Hugdahl, 2000). Various peripheral physiological measures and affective devices have been used to track emotion-related patterns, including salivary cortisol tests, electrodermal activity (EDA)/galvanic skin conductance, and heart rate/cardiovascular responses (e.g., Azenberg & Picard, 2014; Matejka et al., 2013; Poh, Swenson, & Picard, 2010; Sharma & Gedeon, 2012). These types of measures, particularly salivary cortisol levels and heart rate, have also been used within medicine and health sciences research to measure stress-related states similar to anxiety during learning and performance (e.g., Arora et al., 2010; Clarke, Horeczko, Cotton, & Bair, 2014; van Dulmen et al., 2007; Harvey et al., 2012; Hunziker et al., 2012; Meunier et al., 2013; Piquette et al., 2014). However, given the diverse functions of the autonomic nervous system, it can be difficult to isolate fluctuations that are solely related to emotions rather than other activities of the autonomic nervous system acting in parallel (e.g., digestion, homeostasis; Hunziker et al., 2012; Kreibig & Gendolla, 2014; Mauss & Robinson, 2009). Moreover, across measures, these channels appear to exclusively measure the physiological component of emotions (high versus low arousal) rather than differentiate between specific emotions (e.g., anger versus fear; Mauss & Robinson, 2009). Practically speaking, this also makes it difficult to determine whether an increase in physiological activity represents a negative or a positive emotional state (e.g., anxiety or excitement).

In our research within medical education, we have used EDA sensors to measure emotion-related physiological arousal before, during, and after learning and performance activities within simulation training, surgical settings, and computer-based learning environments (Duffy et al., 2013, 2014; Duffy, Lajoie, Pekrun et al., 2015). We have opted to use the Affectiva Q™ sensor bracelet (see Fig. 10.3 and Note 2) within surgical settings as it is portable, noninvasive, and inconspicuous (Poh et al., 2010).

Within computer-based learning environments, we have used the Biopac™ sensor, which requires attachment of electrodes to the skin and a stationary transmitter. In both cases, these devices are arguably better able to isolate emotion-related arousal compared to other physiological measures (e.g., heart rate), which are influenced by non-affective factors to a greater extent (Kreibig & Gendolla, 2014). These devices can also be used across a range of environments, thus capturing more spontaneous and natural fluctuations compared to brain imaging techniques (Picard, 2010; Poh et al., 2010).

In our research, we have linked physiological traces to key events and other data channels (e.g., self-report) by recording time-stamped log files within computer-based learning environments and time-stamped field notes within naturalistic settings (see Fig. 10.4). Using this approach, we have observed fluctuations in arousal in response to key events during and after surgical procedures.
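
One simple way to link a physiological trace to time-stamped events is to average the signal within each event window. The Python sketch below illustrates the idea under stated assumptions: the `mean_eda_by_event` helper, the sample format, the event names, and all values are hypothetical and invented for illustration; they are not data from the studies cited.

```python
from statistics import mean

def mean_eda_by_event(samples, events):
    """Average skin conductance within each time-stamped event window.

    samples: list of (time_s, microsiemens) readings (hypothetical format).
    events:  list of (name, start_s, end_s) taken from a log file or
             field notes; windows are treated as [start, end).
    Returns {event name: mean conductance, or None if no samples fall inside}.
    """
    result = {}
    for name, start, end in events:
        values = [v for t, v in samples if start <= t < end]
        result[name] = mean(values) if values else None
    return result

# Invented readings and event windows, for illustration only.
samples = [(0, 2.0), (10, 2.2), (20, 3.4), (30, 3.6), (40, 2.4), (50, 2.6)]
events = [("baseline", 0, 20), ("procedure_a", 20, 40), ("procedure_b", 40, 60)]

summary = mean_eda_by_event(samples, events)
print(summary)
```

A fuller analysis would typically also account for individual baselines and signal artifacts before comparing events, which this sketch deliberately leaves out.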

Fig. 10.4 Electrodermal activity data over time within surgical environment (Duffy, Lajoie, Pekrun et al., 2015)

For example, Fig. 10.4 illustrates a medical trainee’s arousal level heightening at the beginning of a more complex technical procedure (vein harvest), reducing during a less complex procedure (suturing), and increasing again during a follow-up interview as they recall emotions experienced during the procedure. One challenge we have encountered is that certain EDA devices require a baseline before engaging in the learning activity. Although this is feasible to obtain within lab-based settings, it is more challenging to obtain within naturalistic settings. Furthermore, individuals often possess different baseline measures of arousal/sweat conductance, so exercise or cognitively taxing tasks may be needed to reach a sufficient threshold for activation (Poh et al., 2010). In addition, researchers commonly place the EDA sensor on the palm or forearm, which is not always feasible in clinical settings where hand-washing practices may interfere with device functionality. However, recent research suggests other sites on the body may provide viable options for measurement (Fedor & Picard, 2014).

Another significant challenge is that at this stage discrete emotions are not clearly or consistently differentiated through physiological patterns (Mauss & Robinson, 2009). One approach for analysis is to focus exclusively on reporting levels of arousal rather than emotions. Another approach is to examine how physiological signatures correspond to changes in emotional states using a multimodal approach (Immordino-Yang & Christodoulou, 2014). For instance, if an individual reports or displays expressions of enjoyment, we can examine their physiological data at that precise moment to identify patterns of arousal that may differentiate this emotion from other emotional states using intra- and inter-individual analyses. It is also possible that a particular emotion (e.g., boredom) could produce different types of physiological signatures depending on whether an individual is experiencing mixed emotions (e.g., boredom and anger; Larsen & McGraw, 2011) or different subtypes of a particular emotion (e.g., indifferent boredom versus reactant boredom; Goetz et al., 2014). This type of work may help us to re-examine assumptions about the underlying physiological components of emotions.

At an analytical level, all emotion measures described may involve comparisons across a host of factors, including the following: (1) types of tasks (e.g., diagnostic reasoning, surgical procedures, emergency resuscitation); (2) key events during an activity or task (e.g., novel versus routine procedures); (3) learning environments (e.g., computer-based learning environments, simulation centers, real-world clinical environments, operating rooms); (4) time of response (e.g., before, during, after task); and (5) experience level (e.g., novice versus expert). Comparing across these factors may help to illuminate which emotions in particular are more frequently or intensely experienced, which can be used as an index for identifying the most relevant or influential emotions within medical education.

4 Discussion

In this section we return to the role of emotion measures in learning. Specifically, we discuss how the emotion measures and detection technologies reviewed can be used to improve learning and instruction through the development of affect-sensitive instruction and learning technologies. These new-generation advanced learning technologies embody the importance of employing reliable emotion measurement techniques.

Data collected from emotion measures can help to promote effective instruction in several ways. One way is to examine self-report responses and behavioral expressions at the individual or group level to gauge whether specific pedagogical approaches and learning activities prompt a positive emotional reaction (e.g., curiosity, enjoyment). For example, after an emergency care simulation, asking learners to report how they feel can help instructors to determine whether the complexity, novelty, and high-stakes features of a scenario were overwhelming or engaging. At the individual level, detecting negative emotions (e.g., anxiety, boredom) through more subtle channels (e.g., facial expression) can be useful to determine if scaffolding or remediation is needed depending on the intensity and persistence of the emotional state. For example, confusion may be beneficial for the deeper levels of learning involved in complex medical tasks or concepts given that it may serve as a catalyst for conceptual change (D’Mello, Lehman, Pekrun, & Graesser, 2012; Graesser, D’Mello, & Strain, 2014). However, unproductive persistent confusion that is coupled with low motivation or underachievement may require intervention to address misconceptions or disparities in prior knowledge and skill level. To determine whether a particular emotion serves to hinder or enhance learning and performance, emotion data can also be measured and analyzed in relation to other self-regulated learning processes (e.g., cognitive, metacognitive, motivational) and performance measures (Boekaerts, 2011; Efklides, 2011; Zimmerman, 2011). Identifying the sources or antecedents precipitating a particular emotional state can also help to inform instructional practices and the design of learning activities.
In line with control-value theory (Pekrun, 2006), instructors can help to reduce unproductive emotional states (e.g., fear, anger) by increasing the fidelity and relevance of learning tasks (value) and providing learners with greater autonomy and opportunity to seek help when needed (control). Emotion measures can also help learners to effectively monitor and regulate their own emotions by tracking their emotional states. This self-reflective process can provide an opportunity for learners to develop their meta-level awareness of their own emotions and reactions (Chambers, Gullone, & Allen, 2009; Webb, Miles, & Sheeran, 2012). This is particularly important within medical and health sciences education (Shapiro, 2011), as trainees must learn to regulate their emotions during learning and performance tasks, as well as effectively recognize and respond to the emotional states of patients and team members (e.g., recognize sadness; convey empathy) while avoiding burn-out. Several studies, for instance, have highlighted the importance of effective stress-coping strategies to manage negative emotional states within surgical and emergency medicine settings (e.g., Arora et al., 2009; Hassan et al., 2006; Hunziker et al., 2013; Wetzel et al., 2010). One way that emotion regulation can be integrated into medical and health sciences training is through the use of video-based patient cases, which can be used to display, track, and monitor emotional expressions during mock patient–clinician discussions (Lajoie et al., 2012, 2014).

Although emotion can be detected using the measures previously described, scalable, mobile, and unobtrusive advanced technologies in particular (e.g., facial and speech recognition software, mobile and Wi-Fi-enabled self-reports, EDA devices) can help educators to view the temporal and dynamic nature of emotions and emotion regulation as they occur in real time. This allows for a greater degree of sensitivity and precision in adapting learning environments according to emotional states. Moreover, these measures can be embedded within technology-rich learning environments, such as simulations, serious games, and intelligent tutoring systems to create affect-aware learning technologies (Calvo & D’Mello, 2012; D’Mello & Graesser, 2010) that detect and trace each individual learner’s emotional states in real time. For example, Fig. 10.5 provides an annotated illustration of the technologies used in our research to detect emotional states during learning within BioWorld, a computer-based learning environment designed to scaffold medical students’ diagnostic reasoning skills as they solve virtual cases.

Several of these types of affect-aware learning technologies (e.g., AutoTutor; Craig et al., 2008; D’Mello & Graesser, 2012) combine multiple emotion data channels through machine learning techniques to detect learners’ emotional states and respond accordingly by adapting the tutor’s affective expression, the nature of scaffolding (e.g., frequency of prompts/feedback), or the learning environment to promote more adaptive emotional states (Zeng et al., 2009). It may be the case, for example, that if the learner is experiencing certain negative emotions (e.g., frustration, anxiety) the agent may scaffold regulation to help the learner monitor and manage their emotions. They might also use information about emotional states to strategically induce specific emotions (e.g., confusion, curiosity, surprise) by increasing content complexity or introducing conflicting perspectives when learners are unengaged or bored (Calvo & D’Mello, 2012; D’Mello et al., 2012; Markey & Loewenstein, 2014). Within these environments, multimodal approaches can help to improve the probability of accurately detecting emotional states (Mauss & Robinson, 2009) by providing complementary traces of emotion components (e.g., physiological, expressive, subjective) and reducing limitations unique to each data channel (e.g., lighting for facial, auditory noise for speech). This approach has been implemented in several studies but the relative importance or weight that should be given to each channel is unclear (Zeng et al., 2009).
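
The question of how much weight each channel should receive can be illustrated with a simple weighted-average (late fusion) sketch in Python. This is a minimal illustration under stated assumptions, not the method used by AutoTutor or any other system cited here: the `fuse` function, the channel names, the equal weights, and the probabilities are all hypothetical.

```python
def fuse(channel_probs, weights):
    """Combine per-channel emotion probabilities by weighted averaging.

    channel_probs: {channel: {emotion: probability}} for the channels
                   that produced usable data at this moment.
    weights:       {channel: weight}; how to set these is an open
                   question, so equal weights are assumed below.
    Weights are renormalized over the channels actually present, so a
    channel can be dropped (e.g., poor lighting) without rescaling by hand.
    """
    total_w = sum(weights[c] for c in channel_probs)
    fused = {}
    for channel, probs in channel_probs.items():
        for emotion, p in probs.items():
            fused[emotion] = fused.get(emotion, 0.0) + weights[channel] * p / total_w
    return fused

# Invented per-channel estimates, for illustration only.
channel_probs = {
    "face": {"confusion": 0.6, "boredom": 0.4},
    "speech": {"confusion": 0.3, "boredom": 0.7},
    "posture": {"confusion": 0.8, "boredom": 0.2},
}
weights = {"face": 1.0, "speech": 1.0, "posture": 1.0}

fused = fuse(channel_probs, weights)
best = max(fused, key=fused.get)
print(fused, best)
```

Changing the weights changes which emotion wins when channels disagree, which is why the lack of consensus on channel weighting matters for detection accuracy.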

Of course, these affect-aware learning technologies are still vulnerable to the same limitations of emotion measures as previously described. New challenges also arise. In some cases, researchers include blends of emotional-cognitive-motivational states (e.g., flow, engagement) in their work (e.g., Calvo & D’Mello, 2012; D’Mello, 2013). This can be challenging from a conceptual standpoint as it is not always clear that what is being detected, traced, and managed is truly emotion. This may explain why the accuracy of emotion detection within affect-aware learning technologies ranges widely and in some cases is only slightly better than chance (Graesser et al., 2014). With this in mind, both researchers and educators should consider the strengths and weaknesses of measures before integrating them into advanced learning technologies as there is currently no consensus as to the most valid and reliable measure of emotions (D’Mello, 2013; Gross & Barrett, 2011; Mauss & Robinson, 2009). Despite such challenges, these recent developments, which call on diverse interdisciplinary traditions from psychology, education, linguistics, and computer science (Picard, 2010; Zeng et al., 2009), show great potential for the use of emotion measures in advanced learning technologies, including those designed for medical education.

… Affect detection and affect generation techniques are progressing to a point where it is feasible for these systems to be integrated in next-generation advanced learning technologies. These systems aim to improve learning outcomes by detecting and responding to affective states which are harmful to learning and/or inducing affect states that might be beneficial to learning.

(Calvo & D’Mello, 2012, p. 89)

5 Conclusion

Emotions play an important role in learning and performance, yet this construct has been largely overlooked in medical education. A critical review of emotion measures and their relevance for medical education may help to propel this research forward. In this chapter, we have discussed a variety of approaches to measuring emotions in the affective sciences, which includes technologies developed from computer science and methods used in educational psychology. This includes advanced technologies used to collect data (e.g., Wi-Fi-enabled tablets for more efficient self-report measures, mobile emotion-detection devices for physiological measures), analyze data (e.g., software for analyzing facial expression and speech), and provide contextual information (e.g., log files, eye-tracking). We have also identified advantages and disadvantages for commonly used data channels, including self-report, behavioral, and physiological measures, based on our research and a review of the literature. For example, self-report measures are efficient and well suited for group comparisons across a variety of settings but may be susceptible to cognitive and memory biases. On the other hand, behavioral channels can provide an objective and nonintrusive measure of emotion expressions but may be time-intensive to analyze if conducted manually and may not be feasible in naturalistic clinical settings if high-quality videos or consistent views of subjects are required, as is the case for automated facial expression analyses. Physiological data channels are not susceptible to attempts to conceal emotions (e.g., social desirability, impression management tactics) and provide a continuous stream of data to allow for analyses of temporal fluctuations; however, at this stage they are less capable of differentiating discrete emotional states (e.g., anxiety versus excitement) and better suited for identifying intensity of arousal levels (high versus low arousal).

Medical culture, and the corresponding socialization processes it promotes throughout training, is likely to influence the types of emotions experienced and the way in which they are expressed across these unique learning environments. As such, in our review, we have given special consideration to medical education when discussing emotion measures and have provided examples of our own deliberations, challenges, and decisions from this burgeoning area of research. However, these findings also have implications for other health science professions involved in patient care, such as nursing, ambulatory services, physical therapy, and social work. For example, we have discussed the future of emotion detection and how these measures are being used to improve instruction through their integration into affect-aware learning technologies, which can be broadly applied to training and education programmes across health science fields. By detecting, tracing, and scaffolding emotional processes throughout the learning trajectory, instructors may be better equipped to prepare trainees for professional practice. Ultimately, decisions about which measures to select will depend on a host of factors, including: research and educational goals, access to emotion-detection technologies, and practical challenges of the context (e.g., classroom versus real-world training); however, we hope these discussions help to illuminate methodological considerations and provide a blueprint for future work in this area.

Notes

1. Although the componential approach uses the term emotional episodes to capture the dynamic nature of emotions (see Scherer, 2009; Shuman & Scherer, 2014), we have opted to use the term emotional states as it aligns more closely with the terminology used more commonly in questionnaires (e.g., AEQ; Pekrun et al., 2011) and self-regulated learning models (e.g., Azevedo et al., 2013).

2. Image for illustrative purposes only. The manufacturer’s user manual suggests placing the device on the inside of the wrist.

References

Arora, S., Sevdalis, N., Aggarwal, R., Sirimanna, P., Darzi, A., & Kneebone, R. (2010). Stress impairs psychomotor performance in novice laparoscopic surgeons. Surgical Endoscopy, 24, 2588–2593.

Arora, S., Sevdalis, N., Nestel, D., Tierney, T., Woloshynowych, M., & Kneebone, R. (2009). Managing intraoperative stress: What do surgeons want from a crisis training program? The American Journal of Surgery, 197, 537–543.

Artino, A. R. (2013). When I say … emotion in medical education. Medical Education, 47, 1062–1063.

Artino, A. R., Holmboe, E. S., & Durning, S. J. (2012). Control-value theory: Using achievement emotions to improve understanding of motivation, learning, and performance in medical education. Medical Teacher, 34, 148–160.

Artino, A. R., La Rochelle, J. S., & Durning, S. J. (2010). Second year medical students’ motivational beliefs, emotions, and achievement. Medical Education, 44, 1203–1212.

Azenberg, Y., & Picard, R. (2014). Feel: A system for frequent event and electrodermal activity labeling. IEEE Journal of Biomedical and Health Informatics, 18, 266–277.

Azevedo, R., Harley, J., Trevors, G., Duffy, M., Feyzi-Behnagh, R., Bouchet, F., & Landis, R. S. (2013). Using trace data to examine the complex roles of cognitive, metacognitive, and emotional self-regulatory processes during learning with multi-agents systems. In R. Azevedo, & V. Aleven (Eds.), International handbook of metacognition and learning technologies (pp. 427–449). New York, NY: Springer.

Barrett, L. F. (2009). The future of psychology: Connecting mind to brain. Perspectives on Psychological Science, 4, 326–339.

Becker, E. S., Goetz, T., Morger, V., & Ranellucci, R. (2014). The importance of teachers’ emotions and instructional behavior for their students’ emotions—An experience sampling analysis. Teaching and Teacher Education, 43, 15–26.

Boekaerts, M. (2011). Emotions, emotion regulation, and self-regulation of learning. In B. Zimmerman & D. Schunk (Eds.), Handbook of self-regulation of learning and performance (pp. 408–425). New York, NY: Routledge.

Calvo, R. A., & D’Mello, S. K. (2012). Frontiers of affect-aware learning technologies. IEEE Intelligent Systems, 27, 86–89.

Chambers, R., Gullone, E., & Allen, N. B. (2009). Mindful emotion regulation: An integrative review. Clinical Psychology Review, 29, 560–572.

Cheung, R. Y., & Au, T. K. (2011). Nursing students’ anxiety and clinical performance. Journal of Nursing Education, 50, 286–289.

Chew, B. H., Zain, A. M., & Hassan, F. (2013). Emotional intelligence and academic performance in first and final year medical students: A cross-sectional study. BMC Medical Education, 13, 1–10.

Clarke, S., Horeczko, T., Cotton, D., & Bair, A. (2014). Heart rate, anxiety and performance of residents during a simulated critical clinical encounter: A pilot study. BMC Medical Education, 14, 1–8.

Craig, S. D., D’Mello, S., Witherspoon, A., & Graesser, A. (2008). Emote-aloud during learning with AutoTutor: Applying the facial action coding system to cognitive-affective states during learning. Cognition & Emotion, 22, 777–788.

Croskerry, P., Abbass, A. A., & Wu, A. W. (2008). How doctors feel: Affective issues in patients’ safety. The Lancet, 372, 1205–1206.

Csikszentmihalyi, M., & Larson, R. (1987). Validity and reliability of the experience sampling method. Journal of Nervous and Mental Disease, 175, 526–536.

D’Mello, S. (2013). A selective meta-analysis on the relative incidence of discrete affective states during learning with technology. Journal of Educational Psychology, 105, 1082–1099.

D’Mello, S. K., Dale, R. A., & Graesser, A. C. (2012). Disequilibrium in the mind, disharmony in the body. Cognition & Emotion, 26, 362–374.

D’Mello, S. K., & Graesser, A. C. (2009). Automatic detection of learners’ emotions from gross body language. Applied Artificial Intelligence, 23(2), 123–150.

D’Mello, S. K., & Graesser, A. C. (2010). Multimodal semi-automated affect detection from conversational cues, gross body language, and facial features. User Modeling and User-Adapted Interaction, 20(2), 147–187.

D’Mello, S. K., & Graesser, A. C. (2012). Dynamics of affective states during complex learning. Learning and Instruction, 22, 145–157.

D’Mello, S., Lehman, B., Pekrun, R., & Graesser, A. (2012). Confusion can be beneficial for learning. Learning and Instruction, 29, 153–170.

Darwin, C. (1872). The expression of the emotions in man and animals. London, England: John Murray.

Duffy, M., Azevedo, R., Sun, N., Griscom, S., Stead, V., Crelinsten, L., … Lachapelle, K. (2015). Team regulation in a simulated medical emergency: An in-depth analysis of cognitive, metacognitive, and affective processes. Instructional Science, 43, 401–426.

Duffy, M., Azevedo, R., Wiseman, J., Sun, N., Dhillon, I., Griscom, S., … Maniatis, T. (2013, August). Co-regulating critical care cases: Examining regulatory processes during team-based medical simulation training. Paper presented at the biennial meeting of the European Association for Research on Learning and Instruction, Munich, Germany.

Duffy, M., Lajoie, S., Azevedo, R., Pekrun, R., & Lachapelle, K. (2014, May). Examining emotional states and surgical skills among medical trainees. Poster presented at the Annual Learning Environments Across the Disciplines meeting (Emerging Scholar Consortium), Montreal, Canada.

Duffy, M., Lajoie, S., Jarrell, A., Pekrun, R., Azevedo, R., & Lachapelle, K. (2015, April). Emotions in medical education: Developing and testing a scale of emotions across medical learning environments. Paper presented at the annual meeting of the American Educational Research Association, Chicago, IL.

Duffy, M., Lajoie, S., Pekrun, R., Ibrahim, M., & Lachapelle, K. (2015, March). Simulation versus operating room: Examining relations between emotional states, learning processes, and technical skills performance across training environments. Poster presented at the annual meeting of the Consortium of American College of Surgeons Accredited Education Institutes, Chicago, IL.

Dyrbye, L. N., West, C. P., Satele, D., Boone, S., Tan, L., Sloan, J., & Shanafelt, T. D. (2014). Burnout among U.S. medical students, residents, and early career physicians relative to the general U.S. population. Academic Medicine, 89, 443–451.

Efklides, A. (2011). Interactions of metacognition with motivation and affect in self-regulated learning: The MASRL model. Educational Psychologist, 46(1), 6–25.

Ekman, P. (1972). Universals and cultural differences in facial expressions of emotion. In J. K. Cole (Ed.), Nebraska symposium on motivation, 1971 (pp. 207–283). Lincoln, NE: University of Nebraska Press.

Ekman, P. (1992). An argument for basic emotions. Cognition & Emotion, 6, 169–200.

Ekman, P., & Cordaro, D. (2011). What is meant by calling emotions basic. Emotion Review, 3, 364–370.

Ekman, P., & Friesen, W. V. (1978). Facial action coding system: A technique for the measurement of facial movement. Palo Alto, CA: Consulting Psychologists Press.

Ekman, P., Friesen, W. V., & Hager, J. C. (2002). Facial action coding system. Salt Lake City, UT: Research Nexus, Network Research Information.

Ericsson, K., & Simon, H. (1993). Protocol analysis: Verbal reports as data (2nd ed.). Cambridge, MA: MIT Press.

Fedor, S., & Picard, R. (2014, September). Ambulatory EDA: Comparisons of bilateral forearm and calf locations. Poster presented at the 54th Annual Meeting of the Society for Psychophysiological Research, Atlanta, GA.

Fischer, A. H., & van Kleef, G. A. (2010). Where have all the people gone? A plea for including social interaction in emotion research. Emotion Review, 2, 208–211.

Fraser, K., Huffman, J., Ma, I., Sobczak, M., McIlwrick, J., Wright, B., & McLaughlin, K. (2014). The emotional and cognitive impact of unexpected simulated patient death: A randomized controlled trial. Chest, 145, 958–963.

Fraser, K., Ma, I., Teteris, E., Baxter, H., Wright, B., & McLaughlin, K. (2012). Emotion, cognitive load and learning outcomes during simulation training. Medical Education, 46, 1055–1062.

Frijda, N. H. (1986). The emotions. New York, NY: Cambridge University Press.

Frijda, N. H. (1987). Emotion, cognitive structure, and action tendency. Cognition & Emotion, 1, 115–143.

Gendron, M., & Barrett, L. F. (2009). Reconstructing the past: A century of ideas about emotion in psychology. Emotion Review, 1, 316–339.

Goetz, T., Frenzel, A., Hall, N. C., Nett, U. E., Pekrun, R., & Lipnevich, A. A. (2014). Types of boredom: An experience sampling approach. Motivation and Emotion, 38, 401–419.

Goetz, T., Frenzel, A. C., Pekrun, R., Hall, N. C., & Lüdtke, O. (2007). Between- and within-domain relations of students’ academic emotions. Journal of Educational Psychology, 99, 715–733.

Goetz, T., Pekrun, R., Hall, N., & Haag, L. (2006). Academic emotions from a social-cognitive perspective: Antecedents and domain specificity of students’ affect in the context of Latin instruction. British Journal of Educational Psychology, 76, 289–308.

Graesser, A., D’Mello, S., & Strain, A. (2014). Emotions in advanced learning technologies. In R. Pekrun & L. Linnenbrink-Garcia (Eds.), International handbook of emotions in education (pp. 289–310). New York, NY: Routledge.

Gross, J. J., & Barrett, L. F. (2011). Emotion generation and emotion regulation: One or two depends on your point of view. Emotion Review, 3, 8–16.

Harvey, A., Bandiera, G., Nathens, A. B., & LeBlanc, V. R. (2012). Impact of stress on resident performance in simulated trauma scenarios. The Journal of Trauma and Acute Care Surgery, 72, 497–503.

Hassan, I., Weyers, P., Maschuw, K., Dick, B., Gerdes, B., Rothmund, M., & Zielke, A. (2006). Negative stress-coping strategies among novices in surgery correlate with poor virtual laparoscopic performance. British Journal of Surgery, 93, 1554–1559.

Hektner, J. M., Schmidt, J. A., & Csikszentmihalyi, M. (2007). Experience sampling method: Measuring the quality of everyday life. Thousand Oaks, CA: Sage.

Hunziker, S., Laschinger, L., Portmann-Schwarz, S., Semmer, N. K., Tschan, F., & Marsch, S. (2011). Perceived stress and team performance during a simulated resuscitation. Intensive Care Medicine, 37, 1473–1479.

Hunziker, S., Pagani, S., Fasler, K., Tschan, F., Semmer, N. K., & Marsch, S. (2013). Impact of a stress coping strategy on perceived stress levels and performance during a simulated cardiopulmonary resuscitation. BMC Emergency Medicine, 13, 8. doi:10.1186/1471-227X-13-8.

Hunziker, S., Semmer, N. K., Tschan, F., Schuetz, P., Mueller, B., & Marsch, S. (2012). Dynamics and association of different acute stress markers with performance during a simulated resuscitation. Resuscitation, 83, 572–578.

Immordino-Yang, M. H., & Christodoulou, J. A. (2014). Neuroscientific contributions to understanding and measuring emotions in educational contexts. In R. Pekrun & L. Linnenbrink-Garcia (Eds.), International handbook of emotions in education (pp. 607–624). New York, NY: Routledge.

Izard, C. E. (1993). Four systems for emotion activation: Cognitive and noncognitive processes. Psychological Review, 100, 68–90.

Izard, C. E. (2010). The many meanings/aspects of emotion: Definitions, functions, activation, and regulation. Emotion Review, 2, 363–370.

James, W. (1890). The principles of psychology. New York, NY: Dover.

Järvenoja, H., Volet, S., & Järvelä, S. (2013). Regulation of emotions in socially challenging learning situations: An instrument to measure the adaptive and social nature of the regulation process. Educational Psychology: An International Journal of Experimental Educational Psychology, 33, 31–58.

Kasman, D. L., Fryer-Edwards, K., & Braddock, C. H. (2003). Educating for professionalism: Trainees’ emotional experiences on IM and pediatrics inpatient wards. Academic Medicine, 78, 730–741.

Keitel, A., Ringleb, M., Schwartges, I., Weik, U., Picker, O., Stockhorst, U., & Deinzer, R. (2011). Endocrine and psychological stress responses in a simulated emergency situation. Psychoneuroendocrinology, 36, 98–108.

Keltner, D., & Buswell, B. (1997). Embarrassment: Its distinct form and appeasement functions. Psychological Bulletin, 122(3), 250–270.

Kreibig, S. D., & Gendolla, G. H. E. (2014). Autonomic nervous system measurement of emotion in education and achievement settings. In R. Pekrun & L. Linnenbrink-Garcia (Eds.), International handbook of emotions in education (pp. 625–642). New York, NY: Routledge.

Kring, A. M., & Sloan, D. M. (2007). The facial expression coding system (FACES): Development, validation, and utility. Psychological Assessment, 19, 210–224.

Lajoie, S. P. (2009). Developing professional expertise with a cognitive apprenticeship model: Examples from avionics and medicine. In K. A. Ericsson (Ed.), Development of professional expertise: Toward measurement of expert performance and design of optimal learning environments (pp. 61–83). New York, NY: Cambridge University Press.

Lajoie, S. P., Cruz-Panesso, I., Poitras, E., Kazemitabar, M., Wiseman, J., Chan, L. K., & Hmelo-Silver, C. (2012). Can technology foster emotional regulation in medical students? An international case study approach. In M. Cantoia, B. Colombo, A. Gaggioli, & B. Girani De Marco (Eds.), Proceedings of the 5th biennial meeting of the EARLI Special Interest Group 16 Metacognition (p. 148). Milano, Italy.

Lajoie, S. P., Hmelo-Silver, C., Wiseman, J., Chan, L. K., Lu, J., Khurana, C., … Kazemitabar, M. (2014). Using online digital tools and videos to support international problem-based learning. Interdisciplinary Journal of Problem-Based Learning, 8. Retrieved from http://dx.doi.org/10.7771/1541-5015.1412.

Lajoie, S., Poitras, E., Naismith, L., Gauthier, G., Summerside, C., & Kazemitabar, M. (2013). Modelling domain-specific self-regulatory activities in clinical reasoning. In H. C. Lane, K. Yacef, J. Mostow, & P. Pavlik (Eds.), Lecture Notes in Computer Science: Vol. 7926. Artificial Intelligence in Education (pp. 632–635). Berlin, Germany: Springer.

Larsen, J. T., & McGraw, P. (2011). Further evidence for mixed emotions. Journal of Personality and Social Psychology, 100, 1095–1110.

Lazarus, R. S. (1991). Emotion and adaptation. New York, NY: Oxford University Press.

LeBlanc, V. R. (2009). The effects of acute stress on performance: Implications for health professions education. Academic Medicine, 84, 25–33.

LeBlanc, V. R., & Bandiera, G. W. (2007). The effects of examination stress on the performance of emergency medicine residents. Medical Education, 41, 556–564.

LeBlanc, V. R., MacDonald, R. D., McArthur, B., King, K., & Lepine, T. (2005). Paramedic performance in calculating drug dosages following stressful scenarios in a human patient simulator. Prehospital Emergency Care, 9, 439–444.

LeBlanc, V. R., Manser, T., Weinger, M. B., Musson, D., Kutzin, J., & Howard, S. K. (2011). The study of factors affecting human and systems performance in healthcare using simulation. Simulation in Healthcare, 6, 24–29.

LeBlanc, V. R., McConnell, M. M., & Monteiro, S. D. (2015). Predictable chaos: A review of the effects of emotions on attention, memory and decision-making. Advances in Health Sciences Education, 20, 265–282.

LeBlanc, V. R., Regehr, C., Tavares, W., Scott, A. K., Macdonald, R., & King, K. (2012). The impact of stress on paramedic performance during simulated critical events. Prehospital and Disaster Medicine, 27, 369–374.

LeBlanc, V. R., Woodrow, S. I., Sidhu, R., & Dubrowski, A. (2008). Examination stress leads to improvements on fundamental technical skills for surgery. The American Journal of Surgery, 196, 114–119.

Markey, A., & Loewenstein, G. (2014). Curiosity. In R. Pekrun & L. Linnenbrink-Garcia (Eds.), International handbook of emotions in education (pp. 228–245). New York, NY: Routledge.

Matejka, M., Kazzer, P., Seehausen, M., Bajbouj, M., Klann-Delius, G., Menninghaus, W., … Prehn, K. (2013). Talking about emotion: Prosody and skin conductance indicate emotion regulation. Frontiers in Psychology, 4, 1–11.

Matsumoto, D., & Hwang, H. S. (2011). Evidence for training the ability to read microexpressions of emotion. Motivation and Emotion, 35(2), 181–191.

Mauss, I. B., & Robinson, M. D. (2009). Measures of emotion: A review. Cognition & Emotion, 23, 209–237.

McConnell, M. M., & Eva, K. W. (2012). The role of emotion in the learning and transfer of clinical skills and knowledge. Academic Medicine, 87, 1316–1322.

McNaughton, N. (2013). Discourse(s) of emotion within medical education: The ever-present absence. Medical Education, 47(1), 71–79.

Meinhardt, J., & Pekrun, R. (2003). Attentional resource allocation to emotional events: An ERP study. Cognition & Emotion, 17, 477–500.