Abstract

This paper describes the application of complex-valued artificial neural networks (CANN) to the inverse problem of identifying the elastic and dissipative properties of solids. The additional information for the inverse problem is provided by the components of the displacement vector measured on the boundary of a body that performs harmonic oscillations at the first resonant frequency. In this paper, the displacement measurements are simulated by finite element (FE) calculations in ANSYS. In the numerical example, we focus on how the accuracy of identifying the elastic modulus and the quality factor of the material depends on the number and location of the measurement points, on the architecture of the neural network and on the duration of training, which is conducted with the RProp and QuickProp algorithms.

Keywords

- Complex-valued artificial neural network (CANN)

- Identification of mechanical properties

- Finite element method

- ANSYS

- Inverse problem

1 Introduction

Artificial neural networks (ANN) [1] are widely used in many areas of science and industry. One of their applications in mechanics is the solution of coefficient inverse problems of identifying the elastic [2–6] and dissipative [7–11] properties of solids. In the early 1990s, complex-valued artificial neural networks (CANN) were proposed in the works of T. Nitta [12, 13], and they have since been widely used in various applications [14–16]. The parameters of a CANN (weights, threshold values, inputs and outputs) are complex numbers; such networks are used in many fields of modern technology, such as optoelectronics, filtering, imaging, speech synthesis, computer vision, remote sensing, quantum devices, and spatio-temporal analysis of physiological neural devices and systems. The application of CANN in mechanics is a new research area that has developed over the last few years.

This paper describes the application of CANN to the coefficient inverse problem of identifying the elastic (Young’s modulus) and dissipative (quality factor) properties of solids. The additional information for solving this inverse problem is provided by the components of the displacement vector measured at a discrete set of points on the boundary of a body that performs harmonic oscillations in the vicinity of its first resonant frequency. The displacement measurements are simulated by finite element (FE) calculations in ANSYS. In the numerical example below, the accuracy of identifying the mechanical properties of the material is studied as a function of the number and location of the measurement points, the architecture of the neural network and the duration of training (which is conducted with the complex back-propagation (CBP) algorithm).

2 Formulation of Identification Inverse Problems for Mechanical Properties

In the plane problem of elasticity theory, steady-state harmonic oscillations with angular frequency ω of a rectangular region (a × b) are considered (Fig. 12.1). The rectangle is fixed along its left boundary \( S_u \), a vertical force \( F_0 \) is applied at the lower right corner, and the rest of the right boundary \( S_t \) is free of mechanical stresses. The mechanical properties of the material are described by Young’s modulus E, Poisson’s ratio ν and the quality factor Q.

The vibration equations of the solid have the form [18]:

The boundary conditions assume a force applied at point 2 (Fig. 12.1) and homogeneous conditions on the rest of the boundary:

The additional information used to solve the inverse problem consists of the displacements measured at points 2–5 (Fig. 12.1):

Here \( \sigma_{kj} \) and \( \varepsilon_{kj} \) are the components of the stress and strain tensors, respectively, and ρ is the density. The elastic coefficients correspond to an isotropic body: \( c_{11} = c_{22} = \lambda + 2\mu \), \( c_{12} = c_{21} = \lambda \), \( c_{44} = \mu \), where λ and μ are the Lamé coefficients. The applied vibration frequency ω is matched to the first resonance frequency, computed without taking the dissipation of mechanical energy into account. The coefficients α and β characterize dissipation and are calculated according to the method of [17].
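A small numerical sketch of the relations just stated, in Python. It assumes the plane-strain reading of \( c_{11} = \lambda + 2\mu \), and it assumes that α and β are Rayleigh (mass- and stiffness-proportional) damping coefficients, for which the damping ratio at angular frequency ω is \( \zeta = \alpha/(2\omega) + \beta\omega/2 \) and \( Q = 1/(2\zeta) \). The way a target Q is split into α and β here (α = 0, all damping stiffness-proportional) is a hypothetical choice for illustration, not the method of [17]; the numerical values are likewise illustrative.

```python
import math

def lame(E: float, nu: float):
    """Lamé coefficients (lambda, mu) of an isotropic material."""
    lam = E * nu / ((1.0 + nu) * (1.0 - 2.0 * nu))
    mu = E / (2.0 * (1.0 + nu))
    return lam, mu

def plane_coefficients(E: float, nu: float):
    """Plane-strain coefficients c11 = c22 = lambda + 2 mu, c12 = c21 = lambda, c44 = mu."""
    lam, mu = lame(E, nu)
    return {"c11": lam + 2.0 * mu, "c22": lam + 2.0 * mu,
            "c12": lam, "c21": lam, "c44": mu}

def rayleigh_quality_factor(alpha: float, beta: float, omega: float) -> float:
    """Q = 1 / (2 zeta), with Rayleigh damping ratio zeta = alpha / (2 omega) + beta * omega / 2."""
    return 1.0 / (alpha / omega + beta * omega)

def beta_for_quality_factor(Q: float, omega: float) -> float:
    """Hypothetical split: alpha = 0, so beta alone yields quality factor Q at omega."""
    return 1.0 / (Q * omega)

# Illustrative values only (not from the paper): E = 200 GPa, nu = 0.3, Q = 50,
# first resonance assumed at 1 kHz.
coeffs = plane_coefficients(200e9, 0.3)
omega1 = 2.0 * math.pi * 1000.0
beta = beta_for_quality_factor(50.0, omega1)
print(coeffs["c11"], rayleigh_quality_factor(0.0, beta, omega1))
```

Because Q depends on ω for fixed α and β, the coefficients have to be chosen for the frequency at which the body is excited, which is why the excitation frequency is tied to the first resonance.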

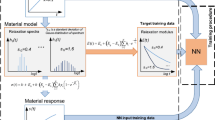

3 Structure of CANN

Figure 12.2 shows the structure of the CANN used to solve the coefficient inverse problem of identifying the elastic (Young’s modulus) and dissipative (quality factor) properties of solids.

The CANN consists of three layers: the input layer, the hidden layer and the output layer. Each layer consists of one or more nodes, shown as small circles in Fig. 12.2. The lines between the nodes indicate the flow of information from one node to the next (with weights \( W \) and \( \breve{W} \)).
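The layered structure can be sketched as a forward pass in plain Python with built-in complex numbers. This is a minimal illustration, assuming that every hidden and output neuron forms the complex weighted sum of its inputs, adds its complex threshold and applies the split sigmoid (12.5) separately to the real and imaginary parts; the dictionary keys and function names are hypothetical and are not taken from the authors' program.

```python
import math
import random

def sigmoid(t: float) -> float:
    # Logistic function F(t) = 1 / (1 + exp(-t)), written to avoid overflow for large |t|.
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)

def split_sigmoid(z: complex) -> complex:
    # F_c(z) = F(Re z) + i F(Im z), cf. (12.5).
    return complex(sigmoid(z.real), sigmoid(z.imag))

def layer(weights, thresholds, inputs):
    # Each neuron forms sum(w * x) + threshold in complex arithmetic, then applies F_c.
    return [split_sigmoid(sum(w * x for w, x in zip(row, inputs)) + th)
            for row, th in zip(weights, thresholds)]

def init_network(n_in, n_hidden, n_out, seed=0):
    # Real and imaginary parts drawn uniformly between -1 and 1, cf. training step (1).
    rng = random.Random(seed)
    rand_c = lambda: complex(rng.uniform(-1, 1), rng.uniform(-1, 1))
    return {
        "W_hidden": [[rand_c() for _ in range(n_in)] for _ in range(n_hidden)],  # row m: breve W_lm
        "theta": [rand_c() for _ in range(n_hidden)],                            # hidden thresholds
        "W_out": [[rand_c() for _ in range(n_hidden)] for _ in range(n_out)],    # row n: W_nm
        "lam": [rand_c() for _ in range(n_out)],                                 # output thresholds
    }

def forward(net, inputs):
    # Returns the hidden outputs H_m and the network outputs O_n.
    hidden = layer(net["W_hidden"], net["theta"], inputs)
    return hidden, layer(net["W_out"], net["lam"], hidden)

# A 2-4-1 network fed with two complex displacement amplitudes (placeholder values).
net = init_network(2, 4, 1)
hidden, out = forward(net, [0.3 + 0.1j, -0.2 + 0.5j])
print(out[0])  # real and imaginary parts both lie in (0, 1)
```

Keeping \( W \) and \( \breve{W} \) as nested lists keeps every complex multiplication visible; an array library would replace the inner sums by matrix-vector products.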

The network is trained in the following way.

(1) The first step in training a network is to initialize the network connection weights with small random values in the range (−1.0, 1.0).

(2) The input patterns (\( X_1 = X_{1r} + iX_{1i} \), \( X_2 = X_{2r} + iX_{2i} \), …, \( X_n = X_{nr} + iX_{ni} \)) on which the network is to be trained are presented at the input layer, and the network is run forward to see what output pattern it actually produces.

(3) The actual output pattern is compared with the desired output pattern for the corresponding input pattern. The differences between the actual and desired patterns form the error pattern:

$$ Y_{n} = \sum_{m} W_{nm} X_{m} = x + iy = z, $$ (12.4)

$$ O_{n} = F_{c}(z) = F(x) + iF(y) = \frac{1}{1 + \exp(-x)} + i\,\frac{1}{1 + \exp(-y)}, $$ (12.5)

$$ E_{p} = \frac{1}{2}\sum_{n=1}^{N} \left| T_{n} - O_{n} \right|^{2} = \frac{1}{2}\sum_{n=1}^{N} \left( \left| \mathrm{Re}(T_{n}) - \mathrm{Re}(O_{n}) \right|^{2} + \left| \mathrm{Im}(T_{n}) - \mathrm{Im}(O_{n}) \right|^{2} \right), $$ (12.6)

where \( W_{nm} \) is the (complex-valued) weight connecting neurons n and m, \( X_m \) is the (complex-valued) input signal from neuron m, F is the sigmoid function, \( E_p \) is the squared error for pattern p, \( O_n \) are the output values of output neuron n, and \( T_n \) is the target pattern.

(4) The error pattern is used to adjust the weights of the output layer so that the error is reduced the next time the same pattern is presented at the inputs:

$$ W_{nm} = W_{nm} + \Delta W_{nm} = W_{nm} + \overline{H_{m}}\,\Delta\lambda_{n}, $$ (12.7)

$$ \Delta\lambda_{n} = \varepsilon \left\{ \mathrm{Re}[\delta^{n}]\,(1 - \mathrm{Re}[O_{n}])\,\mathrm{Re}[O_{n}] + i\,\mathrm{Im}[\delta^{n}]\,(1 - \mathrm{Im}[O_{n}])\,\mathrm{Im}[O_{n}] \right\}, $$ (12.8)

where \( \Delta\lambda_n \) is the correction to the threshold value \( \lambda_n \) of output neuron n, \( \delta^{n} = T_n - O_n \) is the difference (error) between the target pattern and the actual output, ε is the learning rate, and \( H_m \) are the output values of hidden neuron m.

(5) The weights of the hidden layer are then adjusted in a similar way; the errors produced by the neurons of the output layer are used to form the error pattern for the hidden layer:

$$ \breve{W}_{lm} = \breve{W}_{lm} + \Delta\breve{W}_{lm} = \breve{W}_{lm} + \overline{I_{l}}\,\Delta\theta_{m}, $$ (12.9)

$$ \begin{aligned} \Delta\theta_{m} = {} & \varepsilon \Big\{ (1 - \mathrm{Re}[H_{m}])\,\mathrm{Re}[H_{m}] \sum_{n} \big( \mathrm{Re}[\delta^{n}]\,(1 - \mathrm{Re}[O_{n}])\,\mathrm{Re}[O_{n}]\,\mathrm{Re}[W_{nm}] + \mathrm{Im}[\delta^{n}]\,(1 - \mathrm{Im}[O_{n}])\,\mathrm{Im}[O_{n}]\,\mathrm{Im}[W_{nm}] \big) \Big\} \\ & - i\,\varepsilon \Big\{ (1 - \mathrm{Im}[H_{m}])\,\mathrm{Im}[H_{m}] \sum_{n} \big( \mathrm{Re}[\delta^{n}]\,(1 - \mathrm{Re}[O_{n}])\,\mathrm{Re}[O_{n}]\,\mathrm{Im}[W_{nm}] - \mathrm{Im}[\delta^{n}]\,(1 - \mathrm{Im}[O_{n}])\,\mathrm{Im}[O_{n}]\,\mathrm{Re}[W_{nm}] \big) \Big\}, \end{aligned} $$ (12.10)

where \( \breve{W}_{lm} \) is the weight between input neuron l and hidden neuron m, \( I_l \) are the output values of input neuron l, and \( \Delta\theta_m \) is the correction to the threshold value \( \theta_m \) of hidden neuron m.

(6) The network is trained by presenting each input pattern in turn at the inputs, propagating it forward and the error backward, and then proceeding to the next input pattern. This cycle is repeated many times. Training stops when either of two conditions occurs: the maximum number of epochs (repetitions) is reached, or the error falls below an acceptable training error threshold.
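Steps (1) to (6) can be condensed into the following Python sketch of the complex back-propagation updates (12.7) to (12.10) with per-pattern (online) updates, the split sigmoid (12.5), the squared error (12.6) taken with the factor 1/2, and both stopping criteria of step (6). The learning rate ε (`eps`), the tolerance, the epoch limit and all names are assumptions for illustration; the RProp and QuickProp variants mentioned in the abstract are not shown.

```python
import math
import random

def sigmoid(t: float) -> float:
    # Logistic function F(t) = 1 / (1 + exp(-t)), written to avoid overflow.
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)

def split_sigmoid(z: complex) -> complex:
    # F_c(z) = F(Re z) + i F(Im z), as in (12.5).
    return complex(sigmoid(z.real), sigmoid(z.imag))

def init_network(n_in, n_hidden, n_out, seed=0):
    # Step (1): real and imaginary parts drawn uniformly between -1 and 1.
    rng = random.Random(seed)
    rc = lambda: complex(rng.uniform(-1, 1), rng.uniform(-1, 1))
    return {"Wb": [[rc() for _ in range(n_in)] for _ in range(n_hidden)],  # Wb[m][l] = breve W_lm
            "theta": [rc() for _ in range(n_hidden)],                      # hidden thresholds
            "W": [[rc() for _ in range(n_hidden)] for _ in range(n_out)],  # W[n][m] = W_nm
            "lam": [rc() for _ in range(n_out)]}                           # output thresholds

def forward(net, I):
    # Step (2): hidden outputs H_m and network outputs O_n for input pattern I.
    H = [split_sigmoid(sum(w * x for w, x in zip(row, I)) + th)
         for row, th in zip(net["Wb"], net["theta"])]
    O = [split_sigmoid(sum(w * h for w, h in zip(row, H)) + lm)
         for row, lm in zip(net["W"], net["lam"])]
    return H, O

def pattern_error(O, T):
    # Step (3): E_p = 1/2 * sum_n |T_n - O_n|^2, cf. (12.6).
    return 0.5 * sum(abs(t - o) ** 2 for t, o in zip(T, O))

def train_step(net, I, T, eps):
    """Steps (2)-(5) for one pattern; returns E_p measured before the update."""
    H, O = forward(net, I)
    # Re and Im parts of delta^n, each times the sigmoid derivative F(1 - F).
    a = [(t - o).real * (1 - o.real) * o.real for t, o in zip(T, O)]
    b = [(t - o).imag * (1 - o.imag) * o.imag for t, o in zip(T, O)]
    d_lam = [eps * complex(an, bn) for an, bn in zip(a, b)]              # (12.8)
    d_theta = []                                                         # (12.10), old W
    for m, h in enumerate(H):
        col = [row[m] for row in net["W"]]
        s_re = sum(an * w.real + bn * w.imag for an, bn, w in zip(a, b, col))
        s_im = sum(an * w.imag - bn * w.real for an, bn, w in zip(a, b, col))
        d_theta.append(eps * (1 - h.real) * h.real * s_re
                       - 1j * eps * (1 - h.imag) * h.imag * s_im)
    for n, dl in enumerate(d_lam):                                       # (12.7)
        net["lam"][n] += dl
        net["W"][n] = [w + h.conjugate() * dl for w, h in zip(net["W"][n], H)]
    for m, dt in enumerate(d_theta):                                     # (12.9)
        net["theta"][m] += dt
        net["Wb"][m] = [w + x.conjugate() * dt for w, x in zip(net["Wb"][m], I)]
    return pattern_error(O, T)

def train(net, patterns, eps=0.5, max_epochs=20000, tol=1e-4):
    # Step (6): stop after max_epochs or when the mean pattern error falls below tol.
    for epoch in range(1, max_epochs + 1):
        err = sum(train_step(net, I, T, eps) for I, T in patterns) / len(patterns)
        if err < tol:
            break
    return epoch, err
```

All hidden-layer corrections are computed before any output weight changes, so (12.10) uses the same weights \( W_{nm} \) that produced the error; updating \( W_{nm} \) first would mix old and new weights.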

After training, a stable network structure is established. The network can then be used to predict output values from new input values, i.e. to solve the coefficient inverse problem of identifying the elastic and dissipative properties of solids.

4 Application of CANN to Solve Coefficient Inverse Problem for Identification of Elastic (Young’s Modulus) and Dissipative (Quality Factor) Properties of Solids

To identify Young’s modulus and the quality factor, the CANN described above was used with these two quantities as output data. As input data, we used the displacement amplitudes (12.3) measured on the surface of the solid. To generate the input and output data for training the CANN, the first step of the computation was the calculation of the natural resonance frequencies, followed by the determination of the steady-state oscillations of the solid, with dissipation of mechanical energy, at these frequencies or in their neighborhood.
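The ANSYS model itself cannot be reproduced here, but the two-stage procedure (undamped natural frequency first, then the damped steady-state response at or near it) can be illustrated on a hypothetical single-degree-of-freedom analogue with stiffness proportional to E and viscous damping chosen so that the quality factor is Q. All parameter names and ranges below are illustrative assumptions. At exact resonance the complex amplitude reduces to \( u = -iQF/k \), which shows why the measured displacement carries information about Q as well as E.

```python
import math
import random

def natural_frequency(k: float, m: float) -> float:
    # Stage 1: undamped natural (resonance) angular frequency.
    return math.sqrt(k / m)

def steady_state_amplitude(k, m, Q, omega, F=1.0):
    # Stage 2: complex amplitude u of m u'' + c u' + k u = F exp(i omega t),
    # with viscous damping c = sqrt(k m) / Q, i.e. quality factor Q at resonance.
    c = math.sqrt(k * m) / Q
    return F / (k - m * omega ** 2 + 1j * omega * c)

def make_patterns(n, E_range=(1.0e3, 2.0e3), Q_range=(20.0, 100.0),
                  stiffness_per_E=1.0, m=1.0, detuning=0.0, seed=0):
    """Hypothetical toy data set of (displacement amplitude, E + iQ) pairs."""
    rng = random.Random(seed)
    patterns = []
    for _ in range(n):
        E, Q = rng.uniform(*E_range), rng.uniform(*Q_range)
        k = stiffness_per_E * E                              # assumed: stiffness proportional to E
        omega = natural_frequency(k, m) * (1.0 + detuning)   # at or near resonance
        patterns.append((steady_state_amplitude(k, m, Q, omega), complex(E, Q)))
    return patterns

patterns = make_patterns(2000)
```

A nonzero `detuning` mimics excitation in the neighborhood of the resonance rather than exactly at it.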

Figure 12.1 shows the four points 2, 3, 4 and 5 at which the displacement amplitudes were measured. The displacement measurements are simulated by finite element calculations in ANSYS. Table 12.1 presents the input and output data for the “measurement” at point 2.

Depending on the number of measurement points and frequencies of the “measurement”, the CANN has the following configurations:

(i) for one measurement point and one frequency, the network has two input values, \( U_{2r} + iU_{2i} \) and \( V_{2r} + iV_{2i} \), and one output value, \( E + iQ \);

(ii) for one measurement point and three frequencies, the network has six input values, \( U_{1r} + iU_{1i} \), \( V_{1r} + iV_{1i} \), \( U_{2r} + iU_{2i} \), \( V_{2r} + iV_{2i} \), \( U_{3r} + iU_{3i} \), \( V_{3r} + iV_{3i} \), and one output value, \( E + iQ \);

(iii) for three measurement points and one frequency, the network has six input values, \( U_{1r} + iU_{1i} \), \( V_{1r} + iV_{1i} \), \( U_{2r} + iU_{2i} \), \( V_{2r} + iV_{2i} \), \( U_{3r} + iU_{3i} \), \( V_{3r} + iV_{3i} \), and one output value, \( E + iQ \).
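The configurations above can be assembled from measured complex amplitudes as in the following sketch. Because each part of the split-sigmoid output (12.5) lies in (0, 1), the targets E and Q have to be scaled into that interval before training; the linear min-max scaling, its margin and its bounds used here are assumptions for illustration, not the normalization reported by the authors.

```python
def scale(value: float, lo: float, hi: float, margin: float = 0.1) -> float:
    # Map [lo, hi] linearly onto [margin, 1 - margin], inside the sigmoid range (0, 1).
    return margin + (1.0 - 2.0 * margin) * (value - lo) / (hi - lo)

def unscale(s: float, lo: float, hi: float, margin: float = 0.1) -> float:
    # Inverse of scale().
    return lo + (s - margin) * (hi - lo) / (1.0 - 2.0 * margin)

def encode_target(E, Q, E_bounds, Q_bounds):
    # One complex output neuron: real part carries E, imaginary part carries Q.
    return complex(scale(E, *E_bounds), scale(Q, *Q_bounds))

def decode_output(o, E_bounds, Q_bounds):
    # Recover (E, Q) from a network output o.
    return unscale(o.real, *E_bounds), unscale(o.imag, *Q_bounds)

def encode_inputs(measurements):
    """Flatten (U, V) complex amplitude pairs, one pair per point or per frequency,
    into the input vector U_1, V_1, U_2, V_2, ...: 2 inputs for configuration (i),
    6 for (ii) and (iii)."""
    return [z for U, V in measurements for z in (U, V)]

# Illustrative bounds (assumed): E in [100, 300] GPa, Q in [10, 200].
E_bounds, Q_bounds = (1.0e11, 3.0e11), (10.0, 200.0)
target = encode_target(2.0e11, 50.0, E_bounds, Q_bounds)
```

The margin keeps targets away from 0 and 1, where the sigmoid derivative \( F(1 - F) \) in (12.8) and (12.10) vanishes and learning would stall.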

The results of the computer experiments, conducted with a CANN on 2000 patterns, of which 1800 were used for training and 200 for testing, are shown in Tables 12.2, 12.3, 12.4 and 12.5.
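The 1800/200 division of the 2000 patterns can be reproduced with a seeded shuffle, as sketched below; the paper does not state how the test patterns were selected, so the random split and the seed are assumptions.

```python
import random

def split_patterns(patterns, n_train=1800, seed=0):
    """Shuffle a copy of the patterns and split it into training and test sets."""
    shuffled = list(patterns)
    random.Random(seed).shuffle(shuffled)
    return shuffled[:n_train], shuffled[n_train:]

# 2000 pattern indices -> 1800 for training, 200 for testing.
train_set, test_set = split_patterns(range(2000))
```

Fixing the seed makes the split, and therefore the test-set errors, reproducible between runs with different architectures.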

The training time of the CANN for different numbers of epochs (Table 12.3) is shown in Fig. 12.3. The training and testing times for CANNs with different numbers of neurons in the hidden layer are shown in Fig. 12.4. The relationship between training and testing times for CANNs with different architectures is shown in Fig. 12.5 (data from Table 12.5).

Figures 12.6, 12.7, 12.8 and 12.9 show the results of testing the CANN with architecture 2-4-1 (see Table 12.2, row 3) on 200 patterns. The circles represent the test data E, and the triangles and squares represent the forecast data \( E_c \) obtained with the CANN. The relative errors \( \delta_E \) and \( \delta_Q \) are calculated as \( \delta_E = |E_c - E|/E \) and \( \delta_Q = |Q_c - Q|/Q \), where \( Q_c \) is the forecast quality factor. Figures 12.6 and 12.7 show the corresponding triangles and squares for an identification error not exceeding 20 %.

Similar results for an error threshold of 10 % are shown in Figs. 12.8 and 12.9.
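The relative errors \( \delta_E \), \( \delta_Q \) and the 20 % and 10 % thresholds translate directly into a count of acceptable forecasts. The Python sketch below assumes the forecasts and test values are available as (predicted, true) pairs; the sample numbers are made up for illustration and are not results from the paper.

```python
def relative_error(predicted: float, true: float) -> float:
    # delta = |X_c - X| / X for a positive true value X (E or Q).
    return abs(predicted - true) / abs(true)

def fraction_within(pairs, threshold):
    """Share of (predicted, true) pairs whose relative error does not exceed threshold."""
    errors = [relative_error(p, t) for p, t in pairs]
    return sum(e <= threshold for e in errors) / len(errors)

# Made-up (E_c, E) pairs in Pa; relative errors 0.05, 0.25 and 0.15.
pairs = [(2.1e11, 2.0e11), (1.5e11, 2.0e11), (2.3e11, 2.0e11)]
print(fraction_within(pairs, 0.20), fraction_within(pairs, 0.10))
```

Applying the same function to the \( (Q_c, Q) \) pairs gives the corresponding share for the quality factor.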

Figure 12.10 shows the identification results as a function of the number of test data, in the form of two curves: the solid curves correspond to the required data and the dashed curves to the forecast data obtained with the CANN. The training error (12.6) over 20,000 epochs is plotted in Fig. 12.11.

5 Conclusion

In this paper, we developed a method for determining the elastic and dissipative properties of a material from harmonic oscillation data at the resonance frequency, based on a combination of the finite element method and CANN. The results of the experiments showed that a CANN with one of the following architectures:

- 2 input neurons, 4 hidden neurons and 1 output neuron (2-4-1), or

- 6 input neurons, 6 hidden neurons and 1 output neuron (6-6-1)

gives the best identification results. The time required for CANN training was also estimated in this paper. The developed method and computer program could also be used to determine the dissipative properties at other frequencies (not only the first one), as well as for anisotropic elastic solids.

References

[1] S. Haykin, Neural Networks: A Comprehensive Foundation, 2nd edn. (Prentice Hall, New Jersey, 1998)

[2] A.A. Krasnoschekov, B.V. Sobol, A.N. Soloviev, A.V. Cherpakov, Russ. J. Nondestruct. Test. 6, 67 (2011)

[3] S.W. Liu, J.H. Huang, J.C. Sung, C.C. Lee, Comput. Methods Appl. Mech. Eng. 191(4), 2831 (2002)

[4] H. Temurtas, F. Temurtas, Expert Syst. Appl. 38(4), 3446 (2011)

[5] V. Khandetsky, I. Antonyuk, NDT&E Int. 35, 483 (2002)

[6] Y.G. Xu, G.R. Liu, Z.P. Wu, X.M. Huang, Int. J. Solids Struct. 38(8), 5625 (2001)

[7] P. Korczak, H. Dyja, E. Łabuda, J. Mater. Process. Technol. 80–81(8), 481 (1998)

[8] T. Mira, S. Zoran, L. Uros, Polymer 48(8), 5340 (2007)

[9] J. Ghaisari, H. Jannesari, M. Vatani, Adv. Eng. Softw. 45(3), 91 (2012)

[10] P. Iztok, T. Milan, K. Goran, Expert Syst. Appl. 39(4), 5634 (2012)

[11] Y. Sun, W. Zeng, Y. Han, X. Ma, Y. Zhao, P. Guo, G. Wang, Comput. Mater. Sci. 60(7), 239 (2012)

[12] T. Nitta, in Proceedings of the International Joint Conference on Neural Networks (IEEE, Nagoya, 1993), p. 1649

[13] T. Nitta, Neural Netw. 10, 1391 (1997)

[14] A. Hirose (ed.), Complex-Valued Neural Networks: Theories and Applications. The Series on Innovative Intelligence (World Scientific, Singapore, 2003)

[15] C. Li, X. Liao, J. Yu, J. Comput. Syst. Sci. 67, 623 (2003)

[16] T. Nitta, Complex-Valued Neural Networks: Utilizing High-Dimensional Parameters (Information Science Reference, New York, 2009)

[17] A.V. Belokon, A.V. Nasedkin, A.N. Soloviev, Appl. Math. Mech. 66(3), 491 (2005)

[18] W. Nowacki, Theory of Elasticity (MIR Publishers, Moscow, 1976) (in Russian)

Acknowledgments

This work was partially supported by the European Framework Program (FP-7) “INNOPIPES” (Marie Curie Actions, People), grant # 318874, and by the Russian Foundation for Basic Research (grants # 13-01-00196_a, # 13-01-00943_a).

Copyright information

© 2014 Springer International Publishing Switzerland

About this paper

Cite this paper

Soloviev, A.N., Giang, N.D.T., Chang, SH. (2014). Determination of Elastic and Dissipative Properties of Material Using Combination of FEM and Complex Artificial Neural Networks. In: Chang, SH., Parinov, I., Topolov, V. (eds) Advanced Materials. Springer Proceedings in Physics, vol 152. Springer, Cham. https://doi.org/10.1007/978-3-319-03749-3_12

DOI: https://doi.org/10.1007/978-3-319-03749-3_12

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-03748-6

Online ISBN: 978-3-319-03749-3

eBook Packages: Physics and Astronomy (R0)