Abstract

Autism spectrum disorder (ASD) is a neurodevelopmental disorder with a high prevalence in males. Most studies rely on predominantly male samples, which may bias our understanding of females with ASD. Gender differences have been found in the ASD population in both diagnostic characteristics and social cognitive performance, but the reason remains unclear. To explore whether these gender differences stem from pathology specific to ASD or from differences in social attention between males and females, we collected behavioral and eye movement data from 48 typically developing (TD) adults during a facial emotion discrimination task. We compared behavioral performance, gaze patterns, and pupillary responses during emotional face processing between males and females. Results showed that the two groups had similar accuracy for facial emotion discrimination, but males took longer to respond. In addition, males preferred the mouth region while processing emotional faces. In contrast, females preferred to fixate on the eye region and had greater pupillary responses to this region than males. These findings suggest that males and females differ in their visual processing strategies and sensitivity to emotional faces, which may extend to the ASD population.

1 Introduction

Autism spectrum disorder (ASD) is a neurodevelopmental disorder that is more common in males, with a prevalence roughly four times that in females [1]. As a result, existing studies include too few females with ASD, which makes it difficult to accurately evaluate the potential effect of gender on symptom presentation. The nature of gender differences in ASD therefore remains poorly understood, and further research is warranted.

Gender differences are not only reflected in the diagnostic characteristics of ASD. Regarding social cognition, individuals with ASD generally show impaired attention to social stimuli, especially faces, which has been well demonstrated by eye-tracking technology [2]. Individuals with ASD fixate less on the core features of emotional faces, especially the eye region [3, 4], leading researchers to speculate that abnormal visual processing may be a major reason for poor emotional discrimination in ASD, which in turn aggravates their difficulty in interpreting facial expressions. Existing research has found that visual attention to faces is also modulated by gender in ASD: females focus more on faces in social scenes than males [5]. Gender effects are also found in typically developing (TD) individuals: females are more sensitive to emotional faces than males, and they continue to perform better in emotion recognition as age increases [6, 7]. Therefore, it remains to be studied whether the gender differences in the visual processing of emotional faces are due to the pathology of ASD or to differences in social attention between males and females.

To provide direct evidence on this question, we used eye-tracking technology, designed a dynamic facial emotion discrimination task, and recruited TD adults as participants. We compared behavioral performance, visual fixation patterns, and pupillary responses to emotional faces between genders to explore whether the visual processing of emotional faces is modulated by gender.

2 Method

2.1 Participants

Fifty-one TD university students participated in our study. Three participants were excluded due to poor data quality, leaving 48 participants for further analysis. All participants had normal or corrected-to-normal vision. Of the participants, 24 were male, ranging in age from 19 to 29 years (M = 22.65, SD = 2.27), and 24 were female, ranging in age from 19 to 28 years (M = 22.74, SD = 2.29). A two-sample t-test found no significant difference in age between males and females. This research was approved by the Ethics Committee of Tianjin Children’s Hospital, and all participants provided written informed consent.

2.2 Stimuli and Experimental Paradigm

Face stimuli were chosen from the Karolinska Directed Emotional Faces (KDEF) database [8] and comprised emotional facial photos of 10 male and 10 female actors.

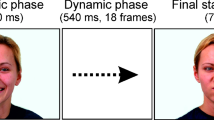

The stimuli covered four emotions (neutral, happy, sad, and angry) and three viewing angles: 0° (left or right), 45° (left or right), and 90°. All photos were the same size (width: 17° visual angle, height: 23° visual angle), and the critical facial features were standardized to the same location. We designed two orders of stimulus presentation, as shown in Fig. 1: order 1 presents the face photos from 0° to 90° and back to 0°, and order 2 presents them from 90° to 0° and back to 90°. This dynamic stimulus presentation imitates the way faces are viewed in daily life, improving the ecological validity of the experiment.

2.3 Procedure

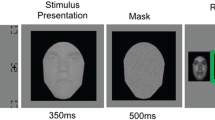

The experiment was conducted in a quiet, softly lit room. Participants were seated comfortably approximately 60 cm from a 27-inch monitor with a resolution of 1920 × 1080 pixels. Eye movement data were recorded with a Tobii Pro Spectrum eye tracker at a sampling rate of 1200 Hz. Before the experiment, participants completed a 5-point calibration in the Tobii Pro Lab software. Once participants fully understood the emotion discrimination task, the experimenter started the experiment by pressing the space key.
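To make the viewing geometry concrete, the following minimal Python sketch converts the reported stimulus size (17° × 23° of visual angle) into on-screen pixels at the reported 60 cm viewing distance. It is an illustration, not the authors' procedure: the function name is hypothetical, the 16:9 aspect ratio is an assumption inferred from the 1920 × 1080 resolution, and the stimulus is assumed to be centred on the line of sight.

```python
import math


def visual_angle_to_pixels(angle_deg, distance_cm, screen_width_cm, screen_width_px):
    """Convert a visual angle (centred on the line of sight) to an extent in pixels."""
    # Physical size subtended by the angle at the given viewing distance.
    size_cm = 2 * distance_cm * math.tan(math.radians(angle_deg) / 2)
    # Square pixels are assumed, so one horizontal scale factor serves both axes.
    return size_cm * screen_width_px / screen_width_cm


# Assumption: the 27-inch diagonal has a 16:9 aspect ratio.
DIAGONAL_CM = 27 * 2.54
SCREEN_WIDTH_CM = DIAGONAL_CM * 16 / math.hypot(16, 9)

width_px = visual_angle_to_pixels(17, 60, SCREEN_WIDTH_CM, 1920)
height_px = visual_angle_to_pixels(23, 60, SCREEN_WIDTH_CM, 1920)
print(round(width_px), round(height_px))
```

Under these assumptions, each photo occupies roughly 576 × 784 pixels, that is, most of the screen height.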

The experiment consisted of 5 blocks with a total of 80 trials. In each trial, a cartoon character was first presented for 3 s to attract participants’ visual attention to the centre of the screen. Then five photos of the same actor showing the same emotion at different face angles were presented in a specific order (as shown in Fig. 1), each lasting 2 s, for a total of 10 s. Participants were required to discriminate the emotion shown by the actor within the 10 s presentation and to respond at the moment of recognition by clicking the corresponding icon on the screen with the mouse. Response time and correctness were recorded, and participants received no feedback on their performance. Across the 80 trials, the two presentation orders and the four emotion conditions were balanced in number and presented in random order. Eye movement data were recorded throughout each block.
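The trial structure described above can be sketched in Python as follows. This is a hedged illustration rather than the authors' implementation: the intermediate 45° steps in each angle sequence, the equal block size of 16 trials, and the names `ORDERS` and `build_blocks` are all assumptions, and the assignment of actors to trials is omitted because the text does not specify it.

```python
import random
from collections import Counter

EMOTIONS = ["neutral", "happy", "sad", "angry"]
# Assumed angle sequences: the text gives the end points and five photos per
# trial, so the intermediate 45-degree steps are inferred.
ORDERS = {1: [0, 45, 90, 45, 0], 2: [90, 45, 0, 45, 90]}
FIXATION_S = 3  # cartoon character shown before the faces
PHOTO_S = 2     # duration of each face photo


def build_blocks(n_trials=80, n_blocks=5, seed=0):
    """Return shuffled (order, emotion) trials, balanced overall, split into blocks."""
    cells = [(order, emotion) for order in ORDERS for emotion in EMOTIONS]
    if n_trials % len(cells) or n_trials % n_blocks:
        raise ValueError("n_trials must divide evenly into cells and blocks")
    trials = cells * (n_trials // len(cells))
    random.Random(seed).shuffle(trials)
    size = n_trials // n_blocks
    return [trials[i:i + size] for i in range(0, n_trials, size)]


blocks = build_blocks()
cell_counts = Counter(trial for block in blocks for trial in block)
trial_duration_s = FIXATION_S + PHOTO_S * len(ORDERS[1])
print(len(blocks), set(cell_counts.values()), trial_duration_s)
```

With 2 orders × 4 emotions, the 80 trials give 10 trials per condition. In this sketch the balance holds across the whole session, not within each block.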

2.4 Data Analysis

Data were exported for offline analysis in MATLAB. For the behavioral data, the average accuracy and the average response time (for correctly discriminated trials only) of the 48 participants were calculated for each of the two presentation orders and four emotion conditions.

For the eye movement data, we calculated two features: the preference index and the pupil diameter variation. First, taking the nose as the boundary, we divided each face stimulus into an eye region of interest (ROI) and a mouth ROI. We then calculated the proportional fixation time for each ROI and subtracted the proportional fixation time of the mouth from that of the eye to obtain the preference index. A positive value means the participant fixated more on the eyes; a negative value means the participant fixated more on the mouth. For the pupil diameter variation, we selected the pupil data recorded during fixations on the eye ROI. The pupil diameter during the first 3 s of the trial was used as the baseline and was subtracted from the pupil diameter during the facial photos, giving the pupil diameter variation. We averaged the two features for each presentation order and emotion condition.
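The two eye movement features can be sketched in Python as below. The sketch rests on assumptions that the text leaves open: proportional fixation time is taken relative to the summed fixation time on the two ROIs, and pupil diameter is summarized by the arithmetic mean. The function names are hypothetical, and the authors' MATLAB code may differ.

```python
from statistics import mean


def preference_index(eye_time, mouth_time):
    """Proportional eye fixation time minus proportional mouth fixation time."""
    total = eye_time + mouth_time  # assumed denominator: fixation time on both ROIs
    if total <= 0:
        raise ValueError("no fixation time recorded on either ROI")
    return eye_time / total - mouth_time / total


def pupil_diameter_variation(baseline_samples, eye_roi_samples):
    """Mean pupil diameter during eye-ROI fixations minus the mean baseline diameter."""
    return mean(eye_roi_samples) - mean(baseline_samples)


# Illustrative values only (not taken from the study).
print(preference_index(6.0, 2.0))    # positive: more time on the eyes
print(preference_index(1.0, 3.0))    # negative: more time on the mouth
print(pupil_diameter_variation([3.0, 3.2], [3.5, 3.7]))
```

With this denominator the index is bounded between -1 (mouth only) and 1 (eyes only), which keeps it comparable across trials with different total fixation times.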

We used the behavioral and eye movement features as dependent variables, gender as the between-group variable, and order (1 and 2) and emotion condition (neutral, happy, sad, angry) as within-group variables. Because the dependent variables were not normally distributed, statistical analysis was performed using generalized estimating equations (GEE) with a significance level of 0.05, and multiple comparisons were adjusted using the Bonferroni method.
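As a brief illustration of the Bonferroni adjustment mentioned above, the Python sketch below multiplies each raw p-value by the number of comparisons and caps the result at 1. It covers only the correction step, not the GEE model itself, and the raw p-values are hypothetical placeholders rather than results from this study.

```python
from itertools import combinations

EMOTIONS = ["neutral", "happy", "sad", "angry"]


def bonferroni_adjust(p_values):
    """Multiply each p-value by the number of comparisons, capped at 1."""
    m = len(p_values)
    return [min(1.0, p * m) for p in p_values]


# Four emotion conditions give six pairwise comparisons.
pairs = list(combinations(EMOTIONS, 2))
# Hypothetical raw p-values, one per pair, for illustration only.
raw_p = [0.004, 0.0001, 0.02, 0.0005, 0.03, 0.2]
adjusted = dict(zip(pairs, bonferroni_adjust(raw_p)))
significant = [pair for pair, p in adjusted.items() if p < 0.05]
print(significant)
```

Comparing the adjusted p-values against 0.05 is equivalent to comparing the raw p-values against 0.05 divided by the number of comparisons.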

3 Results

3.1 Behavioral Results

For accuracy, only the main effect of emotion was significant (p < 0.001). There was no significant difference in accuracy between males (M = 93.45, SD = 3.89) and females (M = 93.55, SD = 3.79). Figure 2 shows that the happy condition had the highest accuracy (M = 98.07, SD = 3.88), followed by the neutral condition (M = 96.61, SD = 4.20). The angry condition was third (M = 91.92, SD = 7.67) and was significantly less accurate than the happy (p < 0.001) and neutral (p = 0.001) conditions. The sad condition (M = 87.06, SD = 8.95) was the lowest and was significantly lower than the happy (p < 0.001), neutral (p < 0.001), and angry (p = 0.001) conditions.

Response time was influenced by order, emotion, and gender, as shown in Fig. 3. The statistical analysis showed that the main effects of order (p < 0.001), emotion (p < 0.001), and gender (p = 0.034), as well as the order*emotion interaction (p < 0.001), were significant. Further analysis found that males took longer to discriminate emotions than females, regardless of the stimuli. For emotion, when the stimuli were presented in order 1, the response time was lowest for happy (M = 1.57, SD = 0.42), followed by neutral (M = 2.00, SD = 0.97) and angry (M = 2.26, SD = 0.80), and was highest for sad (M = 2.51, SD = 0.74). All pairwise differences among these four emotions were significant. When the stimuli were presented in order 2, the response time for the happy condition (M = 2.19, SD = 0.82) was the lowest and was significantly lower than for the neutral (p < 0.001), sad (p < 0.001), and angry (p < 0.001) conditions. There were no significant differences among the remaining three emotions (neutral: M = 3.47, SD = 1.23; sad: M = 3.42, SD = 1.05; angry: M = 3.47, SD = 1.08).

Unexpectedly, response times were significantly lower in order 1 than in order 2, regardless of emotion. This may be because the 0° faces were presented first in order 1, providing more emotional information and helping participants discriminate quickly.

Overall, males and females had similar discrimination accuracy in the task, but males took longer. In addition, both groups discriminated happy faces accurately and quickly, whereas the two negative emotions, especially sad, were discriminated less accurately and more slowly.

3.2 Preference Index Results

To explore how males and females fixate on emotional faces, we calculated the preference index to reflect the difference in fixation preference between the two groups; the results are shown in Fig. 4. Statistical analysis showed that the main effects of gender (p = 0.002), order (p < 0.001), and emotion (p < 0.001) were all significant, and there was a significant gender*emotion interaction (p = 0.006). As Fig. 4 shows, the preference index of males was significantly lower than that of females in every emotion condition (neutral: p = 0.001; happy: p = 0.001; sad: p = 0.002; angry: p = 0.012). This result indicates that during visual scanning of emotional faces, males tend to focus on the mouth region and females tend to focus on the eye region. Both males and females tended to focus more on the mouth region for happy faces and more on the eye region for negative emotional faces. In addition, the preference index was significantly higher in order 1 (p < 0.001), suggesting that viewing the 0° face first may increase subsequent attention to the eye region.

3.3 Pupil Diameter Variation Results

Pupil diameter can reflect participants’ physiological responses to emotional faces. To further investigate gender differences, we calculated the pupil diameter variation for the eye region, as shown in Fig. 5. Statistical analysis found that the main effects of gender (p = 0.026) and emotion (p = 0.021) were significant, as was the order*emotion*gender interaction (p = 0.003). Further analysis found that the pupil diameter variation of males was smaller than that of females. In order 1, the male pupil diameter variation in the eye region of neutral and sad faces was significantly lower than that of females (neutral: p = 0.017; sad: p = 0.019). In order 2, the male pupil diameter variation in the eye region of happy and angry faces was significantly lower than that of females (happy: p = 0.006; angry: p = 0.026). Overall, females responded more strongly to emotional faces than males, suggesting greater sensitivity to the emotional information conveyed by the eyes.

4 Discussion

This study identifies differences in behavioral and visual performance between TD males and females in a facial emotion discrimination task. Specifically, we found that males required more time than females to achieve similar discrimination accuracy. Further, males preferred to focus on the mouth region and showed a weaker pupillary response to the eye region, whereas females preferred to view the eye region and showed a stronger pupillary response to it.

Attention to the eye region is particularly important for face processing. In our study, females paid more attention to the eyes than males, suggesting that females are more efficient at capturing facial information. According to neuroimaging studies, females show greater limbic system activation during the facial perception stage, which facilitates primary emotion processing, whereas males tend toward a more analytical style of emotion processing, which may be slower at the perception stage [9]. Meanwhile, the limbic system structurally overlaps with the central autonomic network (CAN), which can modulate the pupillary response through the noradrenergic sympathetic pathway [10]. Therefore, females may experience emotions with greater intensity when viewing emotional faces, have higher emotional arousal, and eventually show larger pupil dilation.

Overall, we found clear gender effects on visual processing strategies for emotional faces and on sensitivity to the emotional information conveyed by the eyes. In subsequent studies, we will include clinical data to verify whether this gender effect extends to the ASD population.

5 Conclusions

The current work focused on gender effects on emotional face processing. We report three main findings: (A) males required more time than females to achieve similar emotion discrimination accuracy; (B) males preferred to fixate on the mouth region while processing emotional faces, whereas females preferred the eye region; and (C) females had greater pupillary responses to the eye region than males. These findings indicate that TD females have stronger social attention to emotional faces and are more sensitive to the emotional information conveyed by the eyes. In the future, we will verify these results in an ASD group and compare the ASD and TD groups.

References

1. Maenner, M.J., Shaw, K.A., Bakian, A.V., et al.: Prevalence and characteristics of autism spectrum disorder among children aged 8 years - Autism and Developmental Disabilities Monitoring Network, 11 sites, United States, 2018. MMWR Surveill. Summ. 70(11), 1–16 (2021). https://doi.org/10.15585/MMWR.SS7011A1

2. Chita-Tegmark, M.: Social attention in ASD: A review and meta-analysis of eye-tracking studies. Res. Dev. Disabil. 48, 79–93 (2016). https://doi.org/10.1016/j.ridd.2015.10.011

3. Wieckowski, A.T., White, S.W.: Eye-gaze analysis of facial emotion recognition and expression in adolescents with ASD. J. Clin. Child Adolesc. Psychol. 46(1), 110–124 (2017). https://doi.org/10.1080/15374416.2016.1204924

4. Bochet, A., Franchini, M., Kojovic, N., et al.: Emotional versus neutral face exploration and habituation: An eye-tracking study of preschoolers with autism spectrum disorders. Front. Psychiatry 11(January), 1–13 (2021). https://doi.org/10.3389/fpsyt.2020.568997

5. Harrop, C., Jones, D., Zheng, S., et al.: Visual attention to faces in children with autism spectrum disorder: Are there sex differences? Mol. Autism 10(1), 1 (2019). https://doi.org/10.1186/s13229-019-0276-2

6. Kraines, M.A., Kelberer, L.J.A., Wells, T.T.: Sex differences in attention to disgust facial expressions. Cogn. Emot. 31(8), 1692–1697 (2017). https://doi.org/10.1080/02699931.2016.1244044

7. Abbruzzese, L., Magnani, N., Robertson, I.H., et al.: Age and gender differences in emotion recognition. Front. Psychol. 10(OCT), (2019). https://doi.org/10.3389/fpsyg.2019.02371

8. Lundqvist, D., Flykt, A., Öhman, A.: The Karolinska Directed Emotional Faces - KDEF, CD ROM from Department of Clinical Neuroscience, Psychology section. Karolinska Inst. (1998)

9. Whittle, S., Yücel, M., Yap, M.B.H., et al.: Sex differences in the neural correlates of emotion: Evidence from neuroimaging. Biol. Psychol. 87(3) (2011). https://doi.org/10.1016/j.biopsycho.2011.05.003

10. Ferencová, N., Višňovcová, Z., Bona Olexová, L., et al.: Eye pupil - a window into central autonomic regulation via emotional/cognitive processing. Physiol. Res. 70, S669–S682 (2021). https://doi.org/10.33549/physiolres.934749

Acknowledgment

This research was supported by the Tianjin Key Technology R&D 21JCYBJC00360.

Copyright information

© 2024 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper

Zhang, L., Wang, J., Xue, H., Liu, S., Ming, D. (2024). Gender Modulates Visual Attention to Emotional Faces: An Eye-Tracking Study. In: Wang, G., Yao, D., Gu, Z., Peng, Y., Tong, S., Liu, C. (eds) 12th Asian-Pacific Conference on Medical and Biological Engineering. APCMBE 2023. IFMBE Proceedings, vol 103. Springer, Cham. https://doi.org/10.1007/978-3-031-51455-5_31

DOI: https://doi.org/10.1007/978-3-031-51455-5_31

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-51454-8

Online ISBN: 978-3-031-51455-5

eBook Packages: Engineering, Engineering (R0)