Abstract

The demand for spectrum is rising by the day as the number of consumers using it increases. However, spectrum coverage is constrained to a certain area depending on the local population. Researchers therefore proposed allocating secondary users to the spectrum when primary users are absent. To support this, a scheme known as spectrum sensing was introduced, in which the primary user’s presence is detected using a variety of procedures. The device used for this process is called a cognitive radio. Spectrum sensing involves gathering signal features from the spectrum and setting a threshold based on those values. With this threshold, the final block in the cognitive radio decides whether the primary user is present or not. The techniques used in spectrum sensing include energy detection, matched filtering, and correlation. These techniques suffer from a reduced probability of detection and involve a complex sensing process. To overcome these drawbacks, an optimal signal is constructed from the original signal, and the spectrum is sensed using this optimal signal. This process provides better results in terms of the probability of detection. To extend the scope of the research, entropy features are extracted and used to train an LSTM-based deep learning architecture. The trained network is tested with a hybrid feature, which is a combination of power-optimized features and entropy features. This process derives the spectrum status along with the accuracy and loss curves. The proposed method reduces the complexity of sensing the spectrum and produces an accuracy of 99.9% and a probability of detection of 1 at low PSNR values, outcomes that compare favorably with cutting-edge techniques.



Keywords

- Spectrum sensing

- long short-term memory (LSTM)

- Deep learning architecture

- Cognitive radio

- Optimized features, and Hybrid features

- Artificial Intelligence

- Entropy

1 Introduction

The electromagnetic spectrum consists of a range of frequencies at which energy is emitted in the form of waves or photons. This spectrum comprises different types of waves, namely visible light, radio waves, gamma rays, X-rays, etc. The waves in the electromagnetic spectrum take the form of periodic variations in electric and magnetic fields [1]. They travel through the air at a speed equivalent to the speed of light. The frequencies vary according to the source that produces the waves. The full range of frequencies, from low to high, together forms the electromagnetic spectrum.

This spectrum is continuously monitored to determine whether the primary user is present or not. Through this detection, it is possible to identify when the primary user is absent and to reallocate the spectrum to a secondary user. Spectrum sensing involves a variety of techniques, including energy detection, autocorrelation, and matched filtering. Researchers have focused on different techniques to obtain a better probability of detection. Spectrum sensing can be done using a cognitive radio.

Cognitive radio (CR) is a wireless communication device that can intelligently detect the unused spectrum in the communication channels and make it possible for the secondary user to utilize the unused spectrum without interfering with the primary user. In this technique, the energy of the spectrum is detected, primarily based on measuring the noise, and compared with a threshold.

In general, growth in technology has led to a rise in spectrum consumption, which in turn leads to spectrum scarcity. Providing communication without delay creates an urgent requirement for spectrum. Cognitive radio, an intelligent and adaptive device, was therefore developed to detect the unused spectrum in the communication channel and transmit the secondary user's information without interfering with the primary user. For this detection process, spectrum sensing is performed to detect the existence of the primary user. The spectrum sensing approach involves various techniques, each of which requires a threshold. The primary user is present if the observed energy is substantially higher than this threshold; otherwise, the user is not present.

2 Literature Survey

The autocorrelation-based spectrum sensing method [11] takes N samples from the received signal and calculates the correlations between time-shifted samples at lag zero and lag one. To identify the user’s presence, these correlation values are compared with the threshold value. Since the noise is uncorrelated with the signal, detection is simple. However, this process requires a greater number of samples and consumes more sensing time.
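The lag-zero and lag-one correlation test described above can be sketched as follows. This is a minimal illustration, not the method of [11]: the test statistic (the ratio of the lag-one to the lag-zero autocorrelation), the biased estimator, and the function names are all assumptions made for the example.

```python
def lag_autocorrelation(samples, lag):
    """Biased sample autocorrelation of a real-valued sequence at a given lag."""
    n = len(samples)
    return sum(samples[i] * samples[i + lag] for i in range(n - lag)) / n


def autocorrelation_detect(samples, threshold):
    """Return (decision, statistic), where statistic = r(1) / r(0).

    White noise is uncorrelated across samples, so the ratio stays near zero
    when only noise is present. A correlated primary-user signal pushes the
    ratio up. The ratio form of the statistic is an assumption of this sketch.
    """
    r0 = lag_autocorrelation(samples, 0)
    r1 = lag_autocorrelation(samples, 1)
    statistic = r1 / r0 if r0 > 0 else 0.0
    return statistic > threshold, statistic
```

Because the estimate of the ratio is noisy for short windows, a usable threshold needs many samples, which matches the drawback of longer sensing time noted above.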

Matched filter spectrum sensing [9] convolves N samples with previously saved data describing the user’s signal characteristics, and the convolved samples are compared with the threshold. Based on the result, the existence of the primary user is recognized. However, this process requires prior knowledge of the primary user's signal properties, which is impractical.

To overcome the above problems, an energy detection-based spectrum sensing scheme [4, 6] has been put forward. Energy detection involves the dynamic selection of a threshold, which can be carried out in various ways.

The dynamic threshold is generated from the Discrete Fourier Transform of the samples of the received signal. In this work, gradient-based updates are used to set the threshold value, which also minimizes the sensing error caused by the presence of noise. However, this method shows poor results at low SNR [13].

Then, for threshold estimation at low SNR, an image binarization technique was implemented. The threshold is fixed to a certain value based on the values from past iterations, the number of samples, the false alarm probability, and the SNR. This process involves more sensing time.

Then, to reduce the sensing time, the threshold is generated based on two parameters so as to meet the requirements on the probability of detection and the probability of false alarm. These are the constant false alarm rate approach, used to find the probability of false detection in N trials, and the constant detection rate approach, used to find the probability of true detection in N trials. Based on these two probabilities, the threshold is generated. This approach gives poor results in a fading environment [2].

Then, in the double thresholding technique [3, 12], two thresholds are evaluated to discover the presence of the primary user. If the measured energy is below the first threshold, the user is not present in the spectrum, and if the energy is above the second threshold, the user is present in the spectrum. However, this work failed to explain how the two thresholds were estimated.
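The two-threshold decision rule can be written as a short sketch. The text does not say what happens when the energy falls between the two thresholds, so the "uncertain" outcome below is an assumption of this example, as are the function and parameter names.

```python
def double_threshold_decision(energy, lower, upper):
    """Classify a measured energy against two thresholds.

    Returns "absent" below the lower threshold and "present" above the upper
    threshold. Energies in between are reported as "uncertain", which is an
    assumption of this sketch, not a rule taken from the cited works.
    """
    if lower > upper:
        raise ValueError("lower threshold must not exceed upper threshold")
    if energy < lower:
        return "absent"
    if energy > upper:
        return "present"
    return "uncertain"
```

For example, with hypothetical thresholds of 90 and 110, an energy of 80 is reported as absent, 120 as present, and 100 as uncertain.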

Then, in the constant false alarm rate approach [8], the false detection is restricted to a fixed value, and the probability of detection can increase with this reduction in false detection. The threshold is determined entirely by the amount of noise in the received signal. However, this strategy falls short of explaining how the noise in the received signal was measured. These techniques reveal the various ways of sensing the spectrum, but each has its drawbacks.

To reduce these drawbacks and to sense the presence of the primary user, a novel algorithm based on a deep learning architecture is proposed. This architecture consists of a series of layers; it is trained with entropy and optimized power features and then tested, as explained in the next sections.

The paper is organized as follows: Sect. 3 covers related work for the proposed method, Sect. 4 presents the proposed deep learning architecture for spectrum sensing, and Sect. 5 describes the results and discussion of the proposed process.

3 Related Works

a) Utilizing the Dynamic Threshold Technique for Noise Estimation

This technique senses the spectrum by considering the energy of the signal present in it. The spectrum may contain both signal energy values and noise energy values; these are acquired from the spectrum and compared with a threshold. The difficult part of this technique is fixing the threshold appropriately. To fix this threshold, the noise energy values are considered here. The formulation of this process is as follows:

$$ B_{0} : y_{i} \left( n \right) = w_{i} \left( n \right) $$(1)

$$ B_{1} : y_{i} \left( n \right) = x_{i} \left( n \right) + w_{i} \left( n \right) $$(2)

Here \({B}_{0}\) and \({B}_{1}\) are the hypotheses for the absence and presence of the signal, respectively. The term x indicates the signal coefficients and w indicates the noise coefficients. From these hypotheses, a fixed threshold is calculated from the noise. The detection threshold \({\lambda }_{D}\) [8] is given by

$$ \lambda_{D} = \sigma_{w}^{2} \left( {Q^{ - 1} \left( {P_{fa} } \right)\sqrt {2N} + N} \right) $$(3)

where \({\sigma }_{w}^{2}\) is the noise variance, \({Q}^{-1}\) is the inverse of the Q-function \(Q(x) = \frac{1}{\sqrt{2\pi}}\int\limits_{x}^{\infty} \exp\left( \frac{-a^{2}}{2} \right) da\), \(P_{fa}\) is the probability of false alarm, and N is the number of samples in the signal.

Through this process, the threshold is estimated, and the presence of the user is determined by comparing the threshold with the energy values as follows:

$$ {\text{Energy}} > \lambda_{D} ;\; B_{1} $$(4)

$$ {\text{Energy}} < \lambda_{D} ;\; B_{0} $$(5)

where \( {\text{Energy}} = \sum\nolimits_{n = 1}^{N} \left( y_{i} \left( n \right) \right)^{2} \).
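The threshold of Eq. (3) and the decision rule of Eqs. (4) and (5) can be sketched in a few lines. This is an illustrative sketch, not the authors' implementation: it assumes the noise variance is already known, computes the energy from the received samples, and uses hypothetical function names. The inverse Q-function is obtained from the standard normal inverse CDF, since Q(x) = 1 - Φ(x).

```python
import math
from statistics import NormalDist


def q_inverse(p):
    """Return x such that Q(x) = p, where Q is the Gaussian tail probability."""
    # Q(x) = 1 - Phi(x), so Q^{-1}(p) = Phi^{-1}(1 - p); requires 0 < p < 1.
    return NormalDist().inv_cdf(1.0 - p)


def detection_threshold(noise_variance, p_fa, n_samples):
    """Threshold of Eq. (3): sigma_w^2 * (Q^{-1}(P_fa) * sqrt(2N) + N)."""
    return noise_variance * (q_inverse(p_fa) * math.sqrt(2.0 * n_samples) + n_samples)


def signal_energy(samples):
    """Sum of squared magnitudes of the received samples in the window."""
    return sum(abs(s) ** 2 for s in samples)


def primary_user_present(samples, noise_variance, p_fa):
    """Decide B1 (True) when the energy exceeds the threshold, else B0 (False)."""
    threshold = detection_threshold(noise_variance, p_fa, len(samples))
    return signal_energy(samples) > threshold
```

Lowering the target false alarm probability raises the threshold, so fewer noise-only windows are flagged as occupied, at the cost of missing weaker signals.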

b) In AWGN channel

The above process is first considered over the AWGN channel [7]. The additive white Gaussian noise channel is used to model line-of-sight communication; it is formed by the linear addition of white noise with a constant spectral density. The bit error rate is high for this type of channel. Hence, we considered the same process in Rayleigh fading channels.

c) In Rayleigh fading channel

This type of channel is considered for long-distance communications. The bit error rate for this type of channel is low when compared to the AWGN channel [10]. In this channel, the coefficients are formed by passing a signal through a channel in which the magnitude of the signal varies randomly according to the Rayleigh distribution. Hence, to reduce the bit error rate and to support long-distance communications, the spectrum is sensed using the Rayleigh fading channel [14].
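A Rayleigh-distributed magnitude can be generated as the magnitude of two independent zero-mean Gaussian components. The sketch below applies such a gain to each sample and then adds Gaussian noise. It assumes flat fading with an independent gain per sample and real-valued signals, and the function names are hypothetical; the paper does not specify its channel simulation at this level of detail.

```python
import math
import random


def rayleigh_gain(rng, scale=1.0):
    """Draw one Rayleigh-distributed gain with the given scale parameter."""
    # The magnitude of two independent N(0, scale^2) components is Rayleigh.
    in_phase = rng.gauss(0.0, scale)
    quadrature = rng.gauss(0.0, scale)
    return math.hypot(in_phase, quadrature)


def rayleigh_channel(signal, noise_std, rng, scale=1.0):
    """Scale each sample by a fresh Rayleigh gain, then add Gaussian noise."""
    return [
        rayleigh_gain(rng, scale) * x + rng.gauss(0.0, noise_std)
        for x in signal
    ]
```

Passing a seeded `random.Random` instance as `rng` keeps a simulation run repeatable.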

d) Using Optimized features

In this process, the input signal samples are taken and the power of those samples is calculated. These power values are then optimized using the formulation below [15]. The optimized features help reduce the complexity of sensing the spectrum and calculating the probability of detection.

$$ {\text{Inputpow}} = \left| {X_{k} } \right|^{2} $$(6)

The peak values are detected using the formula

$$ {\text{Peak}}_{{{\text{Val}}}} = 10\log \left( {\frac{{\max \left( {\left| {X_{k} } \right|^{2} } \right)}}{{\min \left( {\left| {X_{k} } \right|^{2} } \right)}}} \right) $$(7)
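The power and peak-value computations of Eqs. (6) and (7) can be sketched as below. The base of the logarithm is not stated in the text; base 10 (a decibel ratio) is assumed here, and the function names are hypothetical.

```python
import math


def input_power(samples):
    """Per-sample power |X_k|^2, as in Eq. (6). Works for real or complex input."""
    return [abs(x) ** 2 for x in samples]


def peak_value_db(samples):
    """Ratio of maximum to minimum sample power, as in Eq. (7).

    Assumes a base-10 logarithm, so the result is in decibels.
    """
    power = input_power(samples)
    lowest = min(power)
    if lowest == 0:
        raise ValueError("minimum power is zero, so the ratio is undefined")
    return 10.0 * math.log10(max(power) / lowest)
```

For instance, samples with magnitudes 1 and 10 have powers 1 and 100, giving a peak value of 20 dB under the base-10 assumption.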

e) Using Machine Learning Algorithms

The spectrum is also sensed using machine learning algorithms, namely linear SVM, quadratic SVM, k-nearest neighbor, etc. These extract different kinds of features from the spectrum and train the stated models to sense the spectrum [5]. These methods are complex because they involve a complex training procedure, and their accuracy and probability of detection are also low.

4 Methodology

The proposed methodology senses the spectrum to detect the user's presence using a deep learning process. Figure 1 displays the block diagram of the suggested technique.

a) Procedure

- The signal taken from the spectrum is optimized using the optimization algorithm described previously.

- These optimized values are taken as features.

- These features are saved in the form of .mat files: signal + noise, which indicates the primary user's presence, and noise only, which indicates the primary user's absence.

- The network is trained on these .mat files using deep learning techniques such as a convolutional neural network.

- The convolutional neural architecture and its layers are given below. This process increases the probability of detection and indicates the primary user's presence.

b) Proposed Algorithm

The algorithm for the proposed methodology is as follows:

c) Entropy Calculation

Entropy is a measure of the randomness in the signal and gives information about the noise coefficients and signal coefficients. It describes the statistical properties of the signal obtained from the spectrum. Hence, the entropy features are considered the main features for training the network.
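One common way to turn this idea into a feature is the spectral entropy: the Shannon entropy of the signal's normalized power spectrum. The sketch below is an assumption about how such a feature could be computed, not the authors' exact procedure. It uses a direct O(N²) DFT to stay dependency-free and normalizes the result by log2(N) so it lies between 0 and 1.

```python
import cmath
import math


def power_spectrum(samples):
    """Squared magnitude of the DFT of the samples (direct O(N^2) evaluation)."""
    n = len(samples)
    spectrum = []
    for k in range(n):
        coeff = sum(
            samples[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n)
        )
        spectrum.append(abs(coeff) ** 2)
    return spectrum


def spectral_entropy(samples):
    """Normalized Shannon entropy of the power spectrum, in the range [0, 1]."""
    if len(samples) < 2:
        raise ValueError("need at least two samples")
    power = power_spectrum(samples)
    total = sum(power)
    if total == 0:
        raise ValueError("signal has zero power")
    # Treat the normalized power distribution as a probability distribution.
    probs = [p / total for p in power]
    entropy = -sum(p * math.log2(p) for p in probs if p > 0)
    return entropy / math.log2(len(probs))
```

A flat spectrum, which is what white noise approaches on average, gives a value near 1, while a signal whose power is concentrated in a few frequency bins gives a lower value.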

d) Deep learning architecture

The proposed deep learning architecture is given as follows.

In the deep learning process, various types of detection, classification, and prediction can be achieved by forming an architecture specific to an application. In the proposed method, the features are a sequence of values. Hence, the input layer is the Sequence Input Layer. This layer is the first layer in the architecture, and the remaining layers follow in order, as shown in Fig. 2.

e) LSTM Layer

LSTM stands for long short-term memory. The LSTM layer is a recurrent layer used for training on ordered data taken from the sequence input layer. This layer consists of small units called LSTM cells, which learn the data in the forward direction. As the data enter the cells, the cells decide whether each piece of information is useful for training and, based on this, accept or reject it. If useful data are rejected, the output will be inexact. Hence, the data should be preprocessed and presented in an ordered form.

This layer is the most complex in the entire deep learning architecture because it learns according to the given input.
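The accept-or-reject behaviour of an LSTM cell comes from its gates. The sketch below shows one time step of a standard LSTM cell in plain Python: the input and forget gates control what enters and stays in the cell state, and the output gate controls what is exposed as the hidden state. The parameter layout and names are assumptions made for illustration; a deep learning framework would normally supply this layer.

```python
import math


def sigmoid(z):
    """Logistic function, mapping any real value into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def lstm_step(x, h_prev, c_prev, params):
    """One forward step of a standard LSTM cell.

    x is the input vector, h_prev and c_prev are the previous hidden and cell
    states. params maps each gate name ("input", "forget", "output",
    "candidate") to a tuple (W, U, b): input weights, recurrent weights, bias.
    """
    def affine(gate):
        W, U, b = params[gate]
        return [
            sum(w * xi for w, xi in zip(W[j], x))
            + sum(u * hi for u, hi in zip(U[j], h_prev))
            + b[j]
            for j in range(len(b))
        ]

    i_gate = [sigmoid(z) for z in affine("input")]      # how much new data to accept
    f_gate = [sigmoid(z) for z in affine("forget")]     # how much old state to keep
    o_gate = [sigmoid(z) for z in affine("output")]     # how much state to expose
    cand = [math.tanh(z) for z in affine("candidate")]  # proposed new content

    c = [f * cp + i * g for f, cp, i, g in zip(f_gate, c_prev, i_gate, cand)]
    h = [o * math.tanh(cj) for o, cj in zip(o_gate, c)]
    return h, c
```

Running `lstm_step` over the feature sequence one element at a time, feeding each returned `h` and `c` into the next call, gives the forward pass of the layer.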

f) Fully Connected Layer

The data derived from the previous layers are multiplied by the weight matrix of the fully connected layer, and then a bias vector is added. There may be one or more fully connected layers, which follow the convolutional (and down-sampling) layers. All neurons in this layer, as the name indicates, connect to all features obtained from the previous layer. This layer combines all the features learned by the previous layers across the image (local information) to detect the broader patterns. For classification, the last fully connected layer combines all the features obtained from the previous layers. Hence, the output size specified for the last fully connected layer equals the number of classes.

By specifying the related name-value pair arguments, we can change the learning rate and regularization parameters for this layer. If these options are not changed, the training framework uses the parameters specified by the global training options. The fully connected layer multiplies its input by the weight matrix W and adds a bias vector b. When the input is a sequence (e.g., in an LSTM network), the fully connected layer acts independently on each time step.
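The operation of a fully connected layer, including its per-step behaviour on a sequence, can be sketched as follows. The function names are hypothetical, and the weights in any real network would be learned rather than supplied by hand.

```python
def fully_connected(x, W, b):
    """Compute W x + b for one input vector x, with W given as a list of rows."""
    return [sum(w * xi for w, xi in zip(row, x)) + bj for row, bj in zip(W, b)]


def fully_connected_sequence(sequence, W, b):
    """Apply the same weights and bias independently to every time step."""
    return [fully_connected(x, W, b) for x in sequence]
```

With two output rows in `W`, each time step is mapped to two scores, one per class (primary user present or absent).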

g) Output Layers: Softmax and Classification Layers

The softmax layer applies a softmax function to its input. The classification layer computes the cross-entropy loss for multi-class classification problems. For classification problems, the final fully connected layer must be followed by a softmax layer and then a classification layer. The softmax function is also known as the normalized exponential and can be regarded as a multiclass generalization of the logistic sigmoid function. Typical classification networks require that the classification layer come after the softmax layer. In the classification layer, trainNetwork takes the values from the softmax function. Hence, with the architecture designed above, the training and testing for spectrum sensing are carried out.
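The softmax function and the cross-entropy loss for a single example can be sketched as below. This is a generic illustration of the two operations, with hypothetical function names, not the internals of any particular framework's output layers.

```python
import math


def softmax(scores):
    """Normalized exponential: maps raw scores to probabilities that sum to 1."""
    # Subtracting the maximum keeps exp() from overflowing on large scores.
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(probs, target_index):
    """Cross-entropy loss of one example given the index of its true class."""
    return -math.log(probs[target_index])
```

With two classes, softmax reduces to the logistic sigmoid of the difference between the two scores, which is the sense in which it generalizes the sigmoid.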

5 Results and Discussion

For deep learning-based spectrum sensing, the probability of detection is presented through accuracy and loss graphs, which are given below.

Case I

If the user selects the input value as a = 1, then

Figs. 3 and 4 above reveal that the presence of the user is detected using the deep learning process. Since we are simulating the results, the input values are supplied directly; in real time, the values would be derived from the spectrum.

As we consider both entropy values and optimized power values as features, the entropy values of the data are visualized in the form of histograms, which are shown in Figs. 5 and 6. The spectral entropy (SE) of a signal is a measure of its spectral power distribution. The concept is based on the Shannon entropy from information theory. The SE treats the normalized power distribution of the signal in the frequency domain as a probability distribution and calculates its Shannon entropy. In this context, this property can be useful for feature extraction in fault detection and diagnosis.

As entropy measures the randomness in the data, it is considered one of the features for spectrum sensing. Fig. 7 shows that the entropy values of the data in the presence of the user are low when compared to the entropy values of the data in the absence of the user. The graph shows that the entropy values for the spectrum with only noise are close to 1 J/K, while those for the spectrum with both data and noise are around -8 J/K.

Case II

If we select the input as 2, so that the spectrum is occupied by the user, the results appear as follows.

Fig. 8 above shows the training accuracy and loss values for each epoch/iteration in the presence of the user. Fig. 9, which indicates the presence of the user, is as follows:

Then the entropy values are calculated for the presence and absence of the user, as in the above case.

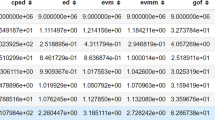

Considering all the above results, the accuracy of the training and testing process reaches 99.9% using the deep learning architecture within the initial learning iterations. This reveals that the probability of detection reaches 1 at low SNR values, compared to the previous value of 0.9 at the same SNR values, as shown in Table 1. Moreover, in this process, an acknowledgment is displayed for the presence or absence of the user.

6 Conclusions

In the proposed method, the entropy features were extracted and used to train an LSTM-based deep learning architecture. This architecture was created using different layers, as explained. The trained network was tested with hybrid features, which were a combination of power-optimized features and entropy features. This process helped to derive the spectrum status along with the accuracy and loss curves. In the experimental results, the proposed method reduced the complexity of sensing the spectrum, with an accuracy of 99.9% and a probability of detection of 1 at low PSNR values, results that compare favorably with state-of-the-art methods.

In the future, we can extend this process to real-time implementation by constructing a fully equipped cognitive radio.

References

1. Centre for Remote Imaging, Sensing and Processing (CRISP). https://crisp.nus.edu.sg/~research/tutorial/em.htm. Accessed 13 Feb 2023

2. Electronic notes, ‘Rayleigh Fading’. https://www.electronics-notes.com. Accessed 13 Feb 2023

3. Wu, J., Luo, T., Yue, G.: An energy detection algorithm based on double-threshold in cognitive radio systems. In: International Conference on Information Science and Engineering, pp. 493–496. Zhanjiajie (2009)

4. Maleki, S., Pandharipande, A., Leus, G.: Two-degree spectrum sensing for cognitive radios. In: IEEE International Conference on Acoustics, Spe. and Sig. Proc., IEEE, pp. 2946–2949, Texas, USA (2010)

5. Plata, D.M.M., Reatiga, A.G.A.: Evaluation of strength detection for spectrum sensing based at the dynamic desire of detection threshold. J. Procedia Eng. 35, 135–143 (2012)

6. Zhang, X., Chai, R., Gao, F.: Matched cleanout based spectrum sensing and energy degree detection for the cognitive radio network. In: IEEE Global Conference on Signal and Information Processing, IEEE, pp. 1267–1270, Atlanta (2014)

7. Sutanu, G.: Performance analysis based on a comparative study between multipath Rayleigh fading and AWGN channel in the presence of various interference. Int. J. of Mob. Netw. Commun. Telematics, 4, 15–22 (2014)

8. Subramaniam, S., Reyes, H., Kaabouch, N.: Spectrum occupancy measurement: an autocorrelation based scanning approach using USRP. In: IEEE Wireless and Microwave Technology Conference on IEEE, pp. 1–5, Barcelona (2015)

9. Al-Badrawi, M.H., Kirsch, N.J.: An EMD-based double threshold detector for spectrum sensing in cognitive radio networks. In: Vehicular Technology Conference. IEEE, pp. 1–5. Glasgow, UK (2015)

10. Sai Suneel, A., Prasanthi, K.: Multiple input multiple output cooperative communication technique using for spectrum sensing in cognitive radio network. In: IEEE Int. Conference on Signal Processing Communication Power and Embedded System, IEEE, pp. 2052–2063, Chennai (2016)

11. Arjoune, Y., Mrabet, Z.E., Ghazi, H.E., Tamtaoui, A.: Spectrum sensing: Enhanced energy detection technique based on noise measurement. In: IEEE 8th Annual Computing and Communication Workshop and Conference, IEEE, pp. 828–834, Las Vegas (2018)

12. Arjoune, Y., Kaabouch, N.: On spectrum sensing, a machine learning method for cognitive radio systems. In: IEEE International Conference on Electro Information Technology IEEE, pp. 333–338. Ecuador (2019)

13. Sai Suneel, A., Shiyamala, D.S.: A novel energy detection of spectrum based on noise measurement a review. J. Adv. Res. Dyn. Control Syst. 11, 870–873 (2019)

14. Sai Suneel, A., Shiyamala, D.S.: Dynamic threshold selection through noise variance for spectrum sensing. Int. J. Eng. Adv. Tech. 8, 23–234 (2019)

15. Sai Suneel, A., Shiyamala, S.: Peak detection based energy detection of a spectrum under Rayleigh fading noise environment. J. Ambient Intell. Humanized Comput. 12, 4237–4245 (2021)


© 2023 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper

Suneel, A.S., Shiyamala, S. (2023). An Innovative AI Architecture for Detecting the Primary User in the Spectrum. In: Chaubey, N., Thampi, S.M., Jhanjhi, N.Z., Parikh, S., Amin, K. (eds) Computing Science, Communication and Security. COMS2 2023. Communications in Computer and Information Science, vol 1861. Springer, Cham. https://doi.org/10.1007/978-3-031-40564-8_16


DOI: https://doi.org/10.1007/978-3-031-40564-8_16


Publisher Name: Springer, Cham

Print ISBN: 978-3-031-40563-1

Online ISBN: 978-3-031-40564-8

eBook Packages: Computer Science, Computer Science (R0)