Abstract

Extraction of features from healthcare devices requires the efficient deployment of high-sample rate components. A wide variety of deep learning models are proposed for this task, and each of these models showcases non-uniform performance & complexity levels when applied to real-time scenarios. To overcome such issues, this text proposes the design of a deep-learning-based bioinspired model that assists in improving the feature extraction capabilities of healthcare device sets. The model uses Elephant Herding Optimization (EHO) for real-time control of data rate & feature-extraction process. The collected samples are processed via an Augmented 1D Convolutional Neural Network (AOD CNN) model, which assists in the identification of different healthcare conditions. The accuracy of the proposed AOD CNN is optimized via the same EHO process via iterative learning operations. Due to adaptive data-rate control, the model is capable of performing temporal learning for the identification of multiple disease progressions. These progressions are also evaluated via the 1D CNN model, which can be tuned for heterogeneous disease types. Due to the integration of these methods, the proposed model is able to improve classification accuracy by 2.5%, while reducing the delay needed for data collection by 8.3%, with an improvement in temporal disease detection accuracy of 5.4% when compared with standard feature classification techniques. This assists in deploying the model for a wide variety of clinical scenarios.



1 Introduction

The Internet of Things (IoT) is crucial to many aspects of contemporary life [1]. Smart cities, intelligent healthcare, and intelligent monitoring are some examples of IoT applications. Because of the many ways they may improve patient care, artificial intelligence (AI) and the IoT have seen explosive growth in the healthcare industry. Together they offer a broad variety of image processing, machine learning, and deep learning approaches, as well as solutions that assist service providers and patients in improving healthcare outcomes. One of the most exciting and potentially fruitful fields of medical research is the examination of medical images [2]. Methods [3] have been investigated for a wide variety of medical imaging applications, ranging from image acquisition to image processing to the assessment of prognoses. When scans from medical healthcare devices are analysed, huge volumes of quantitative data may be acquired and retrieved for use in a variety of diagnostic applications. Scanners for medical equipment are becoming more important diagnostic instruments in many hospitals and other healthcare institutions [4]. Such scans are inexpensive and have enormous therapeutic benefits in the diagnosis of certain infectious lung disorders [5], such as pneumonia, tuberculosis (TB), early lung cancer, and, most recently, COVID-19. The COVID-19 pandemic has spread around the globe since the disease was first identified in December 2019. The respiratory virus that causes this illness represents a considerable risk to the health of the general population.
In March 2020, the World Health Organization (WHO) declared the outbreak a pandemic because of how quickly it had spread. More than 50 million confirmed cases of COVID-19 have been reported worldwide by the WHO. To monitor the spread of the virus and help put a stop to it, the medical community is investigating the most advanced treatments and technologies available. When evaluating COVID-19, it is usual practice to take into account symptoms, indications, and tests related to pneumonia, in addition to scans from various medical devices [6]. The identification of COVID-19 requires the use of medical equipment scanners [7]. These scans contain a great deal of information about the health of the patient. In spite of this, one of the most difficult aspects of a doctor’s job is to reach reasonable conclusions from the information available. Overlapping tissue structures make scans produced by medical equipment far more difficult to interpret. Examining a lesion may be challenging if there is little contrast between the lesion and the surrounding tissue, or if the site of the lesion overlaps with the ribs or pulmonary blood vessels. Even for a physician with years of expertise, it might be challenging to differentiate between tumours that at first glance seem to be quite different from one another or to detect particularly intricate nodules. As a result, the examination of lung disease using medical device scans can produce false negatives. The appearance of white spots or viruses on medical device scans is another possible obstacle for the radiologist, as it may lead to the incorrect diagnosis of illnesses such as pneumonia and pulmonary diseases such as COVID-19.
Researchers are increasingly employing computer vision, machine learning, and deep learning to interpret medical imagery, such as scans from medical devices for infectious illnesses [8]. This trend is expected to continue in the near future. These approaches may assist medical professionals, as they have the potential to automatically identify infection in scans of medical healthcare equipment. This would improve the testing procedure and reduce the amount of labour required. Early detection of illness with these approaches contributes to an overall reduction in death rates. Using a smart healthcare system enabled by the IoT, it is possible to identify infectious illnesses such as COVID-19 and pneumonia in scans of medical healthcare devices. This system was conceptualized as a direct consequence of the enhanced performance achieved by preceding systems. Transfer learning is combined with two different deep learning architectures, namely VGG-19 [9] and Inception-V3 [10]. Because these architectures have previously been trained on the ImageNet data set, it is possible for them to use the concept of multiple-feature fusion and selection [11]. In the course of our investigation, we made use of a method for object categorisation that is analogous to the multiple feature fusion and selection strategy presented in [12, 13]. In a range of applications, such as object classification and medical imaging, this concept has been put into practice by merging numerous feature vectors into a single feature vector or space via Support Vector Machines (SVM) [14, 15]. Feature selection can then be used to remove unnecessary features from the fused vector sets.
As a consequence, a variety of deep learning models have been developed for this purpose. When applied to real-world settings, each of these models demonstrates widely varying levels of performance and complexity. Following a survey of related work in Sect. 2, Sect. 3 of this text proposes a deep-learning-based bioinspired model that addresses these challenges and enhances the ability of healthcare device sets to extract features. In Sect. 4, the performance of the proposed model is assessed, and its accuracy and latency are compared with those of state-of-the-art approaches for feature representation and classification. The concluding section provides context-specific observations about the proposed model and offers some suggestions as to how it might be improved further for use in clinical settings.

2 Literature Survey

The study of medical images is considered one of the most interesting and potentially fruitful areas of medical research [2, 3]. Methods have been researched for a wide range of medical imaging applications, from the acquisition of images through their processing to the evaluation of prognoses, with the aim of improving diagnostic precision. Huge amounts of quantitative data may be acquired and retrieved when analyzing scans from medical and healthcare devices, and this information may then be put to use in a number of diagnostic applications. Scanners are increasingly being used as diagnostic devices in a variety of hospitals and other healthcare facilities [4]. They are cost-effective and deliver considerable therapeutic advantages in the identification of numerous infectious lung illnesses, including pneumonia, tuberculosis (TB), early lung cancer, and, most recently, COVID-19; this list is not exhaustive. The COVID-19 pandemic has spread over the whole world since the disease was identified in December 2019. The respiratory virus that causes this potentially lethal disease poses a significant threat to the health of the general population. The World Health Organization (WHO) determined in March 2020 that the outbreak had developed to the point where it could be categorized as a pandemic [5].
This conclusion was reached as a direct result of the rapidity with which the illness had spread. According to the WHO, more than 50 million confirmed cases of COVID-19 have been found in countries all over the world. To keep track of the virus’s progression and halt its further spread, the scientific community is researching the most innovative therapies and instruments available. When analyzing COVID-19, it is standard procedure to consider scans from a variety of medical equipment in addition to the signs, symptoms, and tests associated with pneumonia [6]. Scanners designed specifically for use in medical settings are required in order to achieve a positive identification of COVID-19 [7]. These scans contain a wealth of information that is directly applicable to the patient’s current condition. Despite this, one of the most challenging components of a doctor’s work is to make sound judgments based on the available data. Because different tissue structures overlap, it is much more challenging for medical professionals to analyze scans provided by medical equipment. It may be difficult to evaluate a lesion, or even to determine whether one is present, if there is little contrast between the lesion and the surrounding tissue, or if the position of the lesion overlaps with the ribs or pulmonary blood vessels.
Even for a physician who has spent a lot of time working in the field, it may be difficult to differentiate between tumors that, at first glance, appear to be reasonably distinct from one another, or to recognize nodules that are extremely complex. As a consequence, false negatives can occur when medical equipment scans are used to assess patients’ lung health. A further potential challenge for the radiologist is the appearance of white spots or viruses on scans of medical devices, which may lead to an incorrect diagnosis of illnesses such as pneumonia and lung disorders like COVID-19. Researchers are quickly turning to computer vision, machine learning, and deep learning in order to interpret medical imaging, such as images from medical devices for infectious diseases [8], and this trend is expected to continue. These approaches, which have the capacity to automatically detect infection in scans of medical healthcare equipment, may be valuable to medical professionals. They would reduce the amount of labor required while also enhancing the testing process. The use of these techniques for the diagnosis of diseases at an early stage results in a general reduction in the number of fatalities.
Using a smart healthcare system made possible by the Internet of Things (IoT), it is feasible to discover infectious diseases such as COVID-19 and pneumonia in scans of medical healthcare equipment. The improved performance achieved by earlier systems had a direct impact on the development of this system. Transfer learning is used in conjunction with two distinct deep learning architectures, namely VGG-19 [9] and Inception-V3 [10]. Since these architectures were first trained on the ImageNet data set [11], it is very probable that they integrate the idea of multiple-feature fusion and selection. Throughout the course of our inquiry, we classified objects using a method that is similar to the multiple feature fusion and selection methodology described in [12, 13]. Support Vector Machines (SVM) have been applied to a variety of applications, including object classification and medical imaging [14, 15], and have also been used to combine many feature vectors into a single feature vector or space. Using feature selection, it is also feasible to exclude from the fused vector sets any features that are not required for the task at hand.
As a consequence, a wide variety of deep learning models suited to this task have been developed. When applied to real-world circumstances, each of these models demonstrates noticeably different levels of performance and complexity.

3 Design of the Proposed Deep-Learning-Based Bioinspired Model to Improve Feature Extraction Capabilities of Healthcare Device Sets

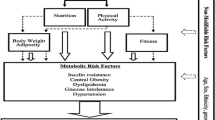

Based on the review of existing methods for feature extraction, it can be observed that a wide variety of deep learning models are proposed for this task, and each of these models showcases non-uniform performance & complexity levels when applied to real-time scenarios. To overcome such issues, this section proposes the design of a deep-learning-based bioinspired model that assists in improving the feature extraction capabilities of healthcare device sets. The design of the proposed model is depicted in Fig. 1, where it can be observed that the model uses Elephant Herding Optimization (EHO) for real-time control of the data rate & feature-extraction process. The collected samples are processed via an Augmented 1D Convolutional Neural Network (AOD CNN) model, which assists in the identification of different healthcare conditions. The accuracy of the proposed AOD CNN is optimized by the same EHO process through iterative learning operations. Due to adaptive data-rate control, the model is capable of performing temporal learning for the identification of multiple disease progressions. These progressions are also evaluated via the 1D CNN model, which can be tuned for heterogeneous disease types.

From the flow of this model, it can be observed that the model initially collects large data samples from different sources, and then optimizes collection rates via an EHO process. This process assists in the estimation of deep learning hyperparameters, which enables continuous accuracy improvements under real-time use cases.

The EHO model works as per the following process:

- Initialize the following optimizer parameters:
  - Total Herds that will be configured (\({N}_{h}\))
  - Total iterations for which these Herds will be reconfigured (\({N}_{i}\))
  - Rate at which Herds will learn from each other (\({L}_{r}\))
- To initialize the optimization model, generate \({N}_{h}\) Herd Configurations as per the following process:
  - From the collected sample sets, select \(N\) samples as per Eq. 1,

    $$N=STOCH\left({L}_{r}*{N}_{s}, {N}_{s}\right) \tag{1}$$

    where \({N}_{s}\) represents the number of collected & scanned sample sets.
  - Based on the values of these samples, estimate Herd fitness via Eq. 2,

    $${f}_{h}=\frac{{t}_{p}}{{t}_{p}+{t}_{n}}\sum\nolimits_{i=1}^{N}\frac{D{R}_{i}}{N} \tag{2}$$

    where \(D{R}_{i}\) represents the data rate during the collection of the sample, while \({t}_{p}\) & \({t}_{n}\) represent its true positive and negative rates.
  - Estimate such configurations for all Herds, and then calculate the Herd fitness threshold via Eq. 3,

    $${f}_{th}=\frac{1}{{N}_{h}}\sum\nolimits_{i=1}^{{N}_{h}}{f}_{{h}_{i}}*{L}_{r} \tag{3}$$

- Once the Herds are generated, scan them for \({N}_{i}\) iterations as per the following process:
  - Do not modify the Herd if \({f}_{h}>{f}_{th}\)
  - Otherwise, modify the Herd configuration as per Eq. 4,

    $$N\left(New\right)=N\left(Old\right)\cup N\left(Matriarch\right) \tag{4}$$

    where \(N(New)\) represents the feature vectors present in the new Herd configuration, \(N(Old)\) represents its old features, and \(N(Matriarch)\) represents the feature sets present in the Matriarch Herd, which is estimated via Eq. 5,

    $$H\left(Matriarch\right)=H\left(Max\left(\bigcup\nolimits_{i=1}^{{N}_{h}}{f}_{{h}_{i}}\right)\right) \tag{5}$$

    where \(H\) represents the Herd Configurations.
  - At the end of every iteration, estimate Herd fitness via Eq. 2, and update the fitness threshold levels.
- Once all iterations are completed, find the Herd with maximum fitness, and use its configuration for the collection of data samples.

Configurations of Herds with maximum fitness levels are used to estimate the Data Rate for the collection of healthcare samples. These samples are processed by a 1D CNN Model that can be observed in Fig. 2, where a combination of Convolutional layers (for feature augmentations), Max Pooling layers (for selection of high variance features), Drop Out layers (for removal of low variance features), and Fully Connected Neural Networks (FCNNs) based on SoftMax activation can be observed for different use cases.
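The EHO steps of Eqs. 1 to 5 can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the authors' implementation: `STOCH(a, b)` is read here as a uniform random integer between its two arguments, each sample is represented as a hypothetical `(data_rate, outcome)` pair with `outcome` equal to `"tp"` or `"tn"`, and \({t}_{p}\), \({t}_{n}\) are taken as counts of those outcomes inside a Herd. The function names `stoch`, `herd_fitness`, `fitness_threshold`, and `eho` are illustrative only.

```python
import random


def stoch(low, high, rng):
    """Assumed reading of STOCH(a, b): a uniform random integer in [a, b]."""
    return rng.randint(max(1, int(low)), int(high))


def herd_fitness(herd, samples):
    """Eq. 2: t_p / (t_p + t_n) multiplied by the mean data rate of the Herd."""
    tp = sum(1 for i in herd if samples[i][1] == "tp")
    tn = sum(1 for i in herd if samples[i][1] == "tn")
    if not herd or tp + tn == 0:
        return 0.0
    mean_rate = sum(samples[i][0] for i in herd) / len(herd)
    return tp / (tp + tn) * mean_rate


def fitness_threshold(fitness, l_r):
    """Eq. 3: average of the Herd fitness values scaled by the learning rate."""
    return sum(f * l_r for f in fitness) / len(fitness)


def eho(samples, n_h, n_i, l_r, seed=0):
    """Return (best Herd as a set of sample indices, its fitness)."""
    rng = random.Random(seed)
    n_s = len(samples)
    # Eq. 1: every Herd holds N randomly selected sample indices.
    herds = [set(rng.sample(range(n_s), stoch(l_r * n_s, n_s, rng)))
             for _ in range(n_h)]
    fitness = [herd_fitness(h, samples) for h in herds]
    f_th = fitness_threshold(fitness, l_r)
    for _ in range(n_i):
        # Eq. 5: the Matriarch is the Herd with maximum fitness.
        matriarch = herds[fitness.index(max(fitness))]
        # Eq. 4: Herds not above the threshold are merged with the Matriarch.
        herds = [h if f > f_th else h | matriarch
                 for h, f in zip(herds, fitness)]
        # Re-estimate fitness (Eq. 2) and the threshold (Eq. 3).
        fitness = [herd_fitness(h, samples) for h in herds]
        f_th = fitness_threshold(fitness, l_r)
    best = fitness.index(max(fitness))
    return herds[best], fitness[best]
```

Calling `eho(samples, n_h=4, n_i=5, l_r=0.5)` on a list of such pairs returns the indices of the fittest Herd; the mean data rate of those samples could then serve as the collection rate, which is one possible reading of how the selected configuration is used.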

The model initially augments the collected feature sets via Eq. 6, and uses them to find feature variance via Eq. 7, which assists in the estimation of high-density features for better accuracy under real-time use cases,

where \(m, a\) represent the sizes of the different convolutional windows and their strides, \(FS\) represents the feature sets collected from different devices, and \(ReLU\) represents a Rectified Linear Unit, which is used for activation of these features. These features are processed by an adaptive data-rate controlled variance estimator, which evaluates feature variance via Eq. 7,

where \(DR\) is the data rate selected by the EHO process. The selected features are classified as per Eq. 8, where SoftMax activation is used to identify the output class,

where \({N}_{f}\) represents the total number of features extracted by the CNN layers, while \({w}_{i}\) & \({b}_{i}\) represent their respective weight & bias levels. The output class represents the progression for the given disease type, and the model can be trained for multiple use cases. Due to the use of these adaptive techniques, the proposed model is able to improve classification performance for different disease types. This performance can be observed in the next section of this text.
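The layer types named above (convolution with ReLU activation, max pooling, and a fully connected layer with SoftMax) can be illustrated with a generic forward pass. The sketch below is an assumption-laden example in plain Python, not the AOD CNN itself: it does not reproduce Eqs. 6 to 8, it omits the data-rate controlled variance estimator and Drop Out, and the function names `conv1d_relu`, `max_pool`, `softmax`, and `classify` are hypothetical.

```python
import math


def relu(x):
    """Rectified Linear Unit: negative inputs are clipped to zero."""
    return x if x > 0.0 else 0.0


def conv1d_relu(signal, kernel, stride=1):
    """Valid 1D convolution (cross-correlation form) followed by ReLU."""
    m = len(kernel)
    return [relu(sum(signal[s + j] * kernel[j] for j in range(m)))
            for s in range(0, len(signal) - m + 1, stride)]


def max_pool(values, size=2):
    """Non-overlapping max pooling; a trailing partial window is dropped."""
    return [max(values[i:i + size])
            for i in range(0, len(values) - size + 1, size)]


def softmax(logits):
    """SoftMax with the maximum subtracted for numerical stability."""
    peak = max(logits)
    exps = [math.exp(z - peak) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def classify(features, weights, biases):
    """Fully connected layer plus SoftMax; returns (class index, probabilities)."""
    logits = [sum(f * w for f, w in zip(features, row)) + b
              for row, b in zip(weights, biases)]
    probs = softmax(logits)
    return probs.index(max(probs)), probs
```

A signal would pass through `conv1d_relu` and `max_pool` before `classify`, with one weight row and one bias per output class. In a trained model the kernel, weights, and biases are learned; here they are plain arguments so that each step can be inspected in isolation.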

4 Result Analysis and Comparison

The proposed model initially collects data samples from different sources, and uses the accuracy of classifying these samples to estimate efficient data collection rates. These rates are used to optimize the performance of the Convolutional Neural Network (CNN), thereby assisting in the efficient estimation of different disease types and their progression levels. The performance of this model was estimated on the Abdominal and Direct Fetal ECG Database (https://physionet.org/content/adfecgdb/1.0.0/), the AF Termination Challenge Database (https://physionet.org/content/aftdb/1.0.0/), and the ANSI/AAMI EC13 Test Waveforms (https://physionet.org/content/aami-ec13/1.0.0/), which assisted in the estimation of Heart Disease, Terminal Cancers, and Brain Disease progression levels. These sets were combined to form a total of 75k records, out of which 80% were used for training, while 20% each were used for testing & validation purposes. Based on this strategy, the accuracy of prediction was estimated and compared with VGG Net [9], Incep Net [10], & SVM [14], which use similar scenarios. This accuracy can be observed in Table 1, where it is tabulated with respect to the Number of Evaluation Data Points (NED).

Based on this evaluation and Fig. 3, it can be observed that the proposed model improves classification accuracy by 6.5% when compared with VGG Net [9], 3.9% when compared with Incep Net [10], and 2.3% when compared with SVM [14] across a wide range of scenarios. This is due to the use of EHO and 1D CNN for classification, both of which improve accuracy for clinical use cases. Similarly, the accuracy of temporal analysis can be observed from Table 2.

Based on this assessment and Fig. 4, it can be observed that the proposed model achieves higher temporal analysis accuracy than VGG Net [9], Incep Net [10], and SVM [14], by 5.9%, 4.50%, and 1.88%, respectively. This improvement in temporal accuracy for clinical use cases is due to the combination of EHO for adaptive data rate control and 1D CNN for classification.

Similarly, the delay needed for classification can be observed from Table 3 as follows,

Based on this evaluation and Fig. 5, it can be observed that the proposed model improves classification speed by 10.5%, 12.4%, and 15.5% when compared with VGG Net [9], Incep Net [10], and SVM [14], respectively. This improvement in classification time for clinical use cases may be due to the use of EHO for adaptive data rate control in combination with 1D CNN. These results, validated across different scenarios, indicate that the proposed model can be applied in a variety of real-time clinical scenarios.

5 Conclusion

The proposed model initially collects data samples from different sources and uses the accuracy of classifying these samples to estimate efficient data collection rates. These rates are used to optimize the performance of the Convolutional Neural Network (CNN), thereby assisting in the efficient estimation of different disease types and their progression levels. Based on accuracy analysis, it can be observed that the proposed model improves classification accuracy, which is due to the use of EHO & 1D CNN for classification. Based on temporal accuracy analysis, the proposed model also improves temporal classification accuracy, which is due to the use of EHO for adaptive data rate control & 1D CNN for classification. Based on delay analysis, the proposed model improves classification speed, again due to the use of EHO for adaptive data rate control together with 1D CNN. Taken together, these results indicate that the proposed model is useful for a wide variety of real-time clinical use cases. In future, the performance of the proposed model must be validated on larger data samples, and can be improved via integration of Transformer Models, Long Short-Term Memory (LSTM) Models, and Adversarial Networks, which may enhance temporal analysis performance under different use cases.

References

1. Bhuiyan, M.N., Rahman, M.M., Billah, M.M., Saha, D.: Internet of things (IoT): a review of its enabling technologies in healthcare applications, standards protocols, security, and market opportunities. IEEE Internet Things J. 8(13), 10474–10498 (2021)

2. Ray, P.P., Dash, D., Salah, K., Kumar, N.: Blockchain for IoT-based healthcare: background, consensus, platforms, and use cases. IEEE Syst. J. 15(1), 85–94 (2020)

3. Ren, J., Li, J., Liu, H., Qin, T.: Task offloading strategy with emergency handling and blockchain security in SDN-empowered and fog-assisted healthcare IoT. Tsinghua Sci. Technol. 27(4), 760–776 (2021)

4. Haghi, M., et al.: A flexible and pervasive IoT-based healthcare platform for physiological and environmental parameters monitoring. IEEE Internet Things J. 7(6), 5628–5647 (2020)

5. Kumar, A., Krishnamurthi, R., Nayyar, A., Sharma, K., Grover, V., Hossain, E.: A novel smart healthcare design, simulation, and implementation using healthcare 4.0 processes. IEEE Access 8, 118433–118471 (2020)

6. Xu, L., Zhou, X., Tao, Y., Liu, L., Yu, X., Kumar, N.: Intelligent security performance prediction for IoT-enabled healthcare networks using an improved CNN. IEEE Trans. Industr. Inf. 18(3), 2063–2074 (2021)

7. Pathak, N., Deb, P.K., Mukherjee, A., Misra, S.: IoT-to-the-rescue: a survey of IoT solutions for COVID-19-like pandemics. IEEE Internet Things J. 8(17), 13145–13164 (2021)

8. Vedaei, S.S., et al.: COVID-SAFE: an IoT-based system for automated health monitoring and surveillance in post-pandemic life. IEEE Access 8, 188538–188551 (2020)

9. Ahmed, I., Jeon, G., Chehri, A.: An IoT-enabled smart health care system for screening of COVID-19 with multi layers features fusion and selection. Computing, 1–18 (2022)

10. Pal, M., et al.: Symptom-based COVID-19 prognosis through AI-based IoT: a bioinformatics approach. BioMed Res. Int. (2022)

11. Yang, Z., Liang, B., Ji, W.: An intelligent end–edge–cloud architecture for visual IoT-assisted healthcare systems. IEEE Internet Things J. 8(23), 16779–16786 (2021)

12. Saha, R., Kumar, G., Rai, M.K., Thomas, R., Lim, S.J.: Privacy ensured e-healthcare for fog-enhanced IoT based applications. IEEE Access 7, 44536–44543 (2019)

13. Bao, Y., Qiu, W., Cheng, X.: Secure and lightweight fine-grained searchable data sharing for IoT-oriented and cloud-assisted smart healthcare system. IEEE Internet Things J. 9(4), 2513–2526 (2021)

14. Zhang, Y., Sun, Y., Jin, R., Lin, K., Liu, W.: High-performance isolation computing technology for smart IoT healthcare in cloud environments. IEEE Internet Things J. 8(23), 16872–16879 (2021)

15. Chanak, P., Banerjee, I.: Congestion free routing mechanism for IoT-enabled wireless sensor networks for smart healthcare applications. IEEE Trans. Consum. Electron. 66(3), 223–232 (2020)


© 2023 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper

Reddy, V.S., Debasis, K. (2023). DBFEH: Design of a Deep-Learning-Based Bioinspired Model to Improve Feature Extraction Capabilities of Healthcare Device Sets. In: Chaubey, N., Thampi, S.M., Jhanjhi, N.Z., Parikh, S., Amin, K. (eds) Computing Science, Communication and Security. COMS2 2023. Communications in Computer and Information Science, vol 1861. Springer, Cham. https://doi.org/10.1007/978-3-031-40564-8_11


DOI: https://doi.org/10.1007/978-3-031-40564-8_11


Publisher Name: Springer, Cham

Print ISBN: 978-3-031-40563-1

Online ISBN: 978-3-031-40564-8

eBook Packages: Computer Science, Computer Science (R0)