Abstract

This study examines the detection of information related to small and large equity styles. Using a novel database of magazines targeting institutional investors, the institutional media, we compare the performance of dictionary-based and supervised machine learning algorithms (Naïve Bayes and support vector machine). Our three main findings are (1) restricted word lists are the most efficient approach, (2) bigram term frequency matrices are the best weighting scheme for algorithms, and (3) Naïve Bayes exhibits overfitting while support vector machine delivers encouraging results. Overall, our results provide material to construct small-cap and large-cap coverage indexes from specialized financial media.

Access provided by Autonomous University of Puebla. Download chapter PDF

Similar content being viewed by others

Keywords

1 Introduction

In their stock allocation process, some investors first allocate their funds between several categories before selecting individual stocks. Those categories are called “styles,” and this investment behavior is called “style investing” (Barberis & Shleifer, 2003). Empirical studies (Froot & Teo, 2008; Kumar, 2009) have documented the importance of investor preference shifts between styles across time (small vs. large, value vs. growth). Change in style preferences provokes important inflow into one style to the detriment of its counterpart. Experts’ recommendations within media are one potential major variable influencing the choice of investors between extreme styles. In this study, we document the most appropriate textual methodology to detect small and large style information within specialized advising magazines.

Investors cannot find directly or explicitly information about equity style investing into traditional financial newspapers (The Wall Street Journal, Dow Jones Newswires) or social network platforms (Twitter, StockTwits). Information in these media sources is often related at stock level or with a broader market vision. Therefore, we explore a new source of information to address our problem, namely, an institutional media database, which we created. It is composed of nine magazines targeting institutional investors, asset managers, advisers, and financial consultants. These magazines provide information focusing on different asset classes with an emphasis on strategic allocation. This way of relating financial market information is in accordance with style investing foundation, i.e., investors shifting preferences between assets sharing opposite characteristics. To our knowledge, no other publication suggests this type of analysis with a corpus targeting professional investors.

Whether existing textual analysis methodologies in finance are transposable to our problem is worth being addressed. The first and most widespread application is sentiment analysis, where researchers translate a qualitative piece of information into a score indicating polarity conveyed. Many sentiment indexes have been developed to study different asset classes (stock, housing, commodities, etc.) using dedicated source of information (newspapers, social media, company filings, etc.). These sentiment indicators exist with an important variation of methodologies applied in different context. For example, measuring sentiment should be different between a more formal newspaper content and informal social network language. In this study, we summarize major methodologies applied in sentiment analysis. We find that existing literature mainly uses word lists (i.e., dictionaries) or supervised machine learning algorithms. But many existing publications lack comparison between the two main solutions leaving a room for discussion. One comparison within an identical sample was documented by Henry and Leone (2016). They find similar performance between word lists and Naïve Bayes algorithms applied to earnings announcement. We formulate our detection problem as a classification task distinguishing between news with style content (i.e., style news) and news without style content (i.e., neutral news). We document the limits of dictionary-based, Naïve Bayes, and support vector machine classifiers, compare their performance, and provide recommendations on the optimal methodology to detect small and large style content.

We encounter three main challenges to detect style information in institutional media. First, we lack labelled data and have to proceed to manual annotation by ourselves. This task is required to train supervised machine learning algorithms so that they learn from data. In contrast to our difficulty, recent studies on social network now find annotated data available (Oliveira et al., 2016; Renault, 2017). This increasing amount of self-generated data improves algorithm efficiency. Second, style information does not necessarily constitute news headlines but mainly represents secondary information. The way that information is presented increases the difficulty of manual annotation. Third, style content is disseminated across various financial topics such as financial markets, retirement planning, or mutual funds industry-related news. In order to adapt our annotation process, we manually select neutral news and use dictionary detection for style news. This semi-automatic labelling process is only possible since dictionary-based approach delivers high precision: news classified within style class contains in vast majority style content.

We find promising results from support vector machine algorithms, especially with a term document matrix composed of bigrams. This methodology delivers balanced performance, limiting false detection (neutral news classified as style news) and missed detection (style news classified as neutral news). Naïve Bayes fails to limit false detection and is extremely sensitive to the size of annotated sample. Naïve Bayes is also more susceptible to overfitting, strongly modeling labelled data but weakly generalizing to an extended corpus such as the overall institutional media database. Compared to supervised algorithms, dictionary-based approach delivers the best performance. Restricted unigram and bigram lists with implicit stock content outperform supervised algorithms. The absence of existing labelled data is detrimental to the training phase of such algorithms.

Our research contributes to the literature on textual analysis in finance. We provide a critical analysis of the capacity of current methodologies to detect style information. We compare the use of human word lists with supervised machine learning algorithms. The results of our work provide style coverage indexes with applications focused on investor behavior, fund flows, and the importance of expert advices in the financial press.

The remainder of the paper is organized as follows. Section 2 discusses main methodologies applied in the financial domain. Section 3 introduces the institutional media database. Section 4 describes the different approaches selected to detect style investing-related content and the evaluation of their performance. Section 5 reports the empirical results. Section 6 concludes.

2 Literature Review

In this section, we present a review of existing classification methodologies applied to financial applications. As such, a classification methodology consists of identifying the class of a piece of information (word, sentence, or text), i.e., reducing the content of information to one or several categories. Most publications covered in our work concern sentiment analysis where the information is classified into positive or negative content. Table 1 summarizes publications covered in this review.

The usage and creation of expert dictionaries in financial publications is important. Tetlock (2007) uses the Harvard IV-4 psychosocial dictionary (hereafter H4N) to study the role of newspapers in the stock market. He counts the number of word occurrences from the 77 predetermined H4N categories. He finds that the “negative,” “weak,” “fail,” and “fall” (i.e., pessimism factor) categories mostly influence stock market prices. In their seminal paper, Loughran and McDonald (2011, hereafter LM) develop financial word lists to better capture domain-specific information. They prove that many words from Harvard General Inquirer are misclassified in the financial context. A concrete example lies in the title of their paper, “When is a liability not a liability?”. The word liability is erroneously considered as negative by common dictionaries while exhibiting no valence in a financial context. Negative and positive word lists created by LM better capture tone content in financial information such as earnings filings. Using the LM dictionary, Garcia (2013) extends Tetlock (2007) findings: both positive and negative tones in media have predictive power on market returns, especially during recession (high uncertainty period). He confirms the importance of selecting an adapted dictionary to the financial domain.

Other word lists have been created subsequent to LM financial dictionary. Larcker and Zakolyukina (2012) analyze conference call transcripts to detect deceptive narrative from CEO and CFO. They manually form several word lists including general knowledge, emotions, hesitations, personal references, and shareholder value. False narratives contain more extreme positive emotion and references to general knowledge but less shareholder value terms. Soo (2018) creates an index for housing sentiment by quantifying tone in different local newspapers in the United States. He adapted four listed categories from Harvard IV-4 psychosocial dictionary: “Increase” and “Rise” (resp. “Decrease” and “Fall”) for positive (resp. negative) sentiment. He manually removes misclassified words and expands lists with all inflections of remaining words. He shows that housing sentiment has higher potential to explain house price variations than a cluster of economic variable (such as rents, employment, or interest rates).

Baker et al. (2016) search terms related to economy (“economic” or “economy”), uncertainty (“uncertain” or “uncertainty”), and policy (“Congress,” “deficit,” “Federal Reserve,” “legislation,” “regulation,” or “White House”) within US newspapers. To define the policy list, authors conduct an audit consisting of 12,009 article readings. They compare human classification with automatic word detection from an enlarged word list (15 terms). They form the policy final list by minimizing coding misclassification (in comparison to manual labelling). The audit process was highly time-consuming, involving the construction of an extensive annotation guide and the participation of a research team. This work leads to the construction of an economic policy uncertainty index allowing further research about policy implications on financial markets. For example, authors show that policy uncertainty affects stock price volatility and reduces investment and employment in certain sectors. Finally, Loughran and McDonald extend their approach to other word list applications. Bodnaruk et al. (2015) study firm financial constraint with a list of related words. Based on their word list, they are able to predict firm liquidity events (such as dividend omission) from financial variables, proving again that textual analysis helps develop new pertinent (and low correlated) variables of interest. Loughran et al. (2019) manually create lists affecting oil prices. Their approach goes beyond the creation of two positive and negative lists. They define a third list of keywords whose effect on oil prices depends on an accompanied modifier word (such as “fall” or “surge”). They find investor overreaction to oil news.

Statistical algorithms are mainly used in absence of a reference dictionary or to alleviate limited size of restricted word lists. Antweiler and Frank (2004) were the first to construct a sentiment index with a Naïve Bayes methodology. Their model correctly classify more than 80% of 1000 messages manually labelled as {Buy, Sell, Hold}. They show that talk from social media has an effect on trading volume and volatility, opening path for applications in event studies and insider trading. Das and Chen (2007) insist on web talk ambiguity and the difficulty to correctly classify message boards (even for humans). They suggest the combination of five different intuitive algorithms computationally efficient in such a way that they do not require important optimization. They find that a majority voting among algorithms delivers higher sentiment accuracy and reduces false positive.Footnote 1 Li (2010) annotated manually 30,000 sentences from 13 million included in forward-looking statements from firms’ earnings filings. He trained a multi-class Naïve Bayes algorithms to categorize sentence tone (“positive,” “neutral,” “negative,” and “uncertain”) and content (“revenue,” “cost,” “profits,” “operations,” “liquidity,” “investing,” “financing,” “litigation,” “employees,” “regulation,” “accounting,” “others”). By applying an N-fold cross-validation test, the algorithm correctly predicts sentence classes with an accuracy above 60%. He advocates that in absence of an adapted dictionary, researchers should rely on machine learning classifier instead of using general word lists.

From these pioneering studies, we learn that there is a compromise between sample training size and time spent for annotations. Moreover, existing dictionaries do not adjust to the informal content of Internet messages. But the recent evolution of social networks brings one green light to researchers: self-reported annotations. Oliveira et al. (2016) automatically construct dictionaries dedicated to StockTwits. From 350,000 users’ labelled posts, they identify unigrams and bigrams co-occurring with bullish and bearish stance. They estimate co-occurrence with information gain, pointwise mutual information, and TF-IDF measures. Their automated methodology produces high classification rate outperforming classical dictionaries, which are not suitable to microblogging data. Renault (2017) attributes a sentiment weight to unigrams and bigrams by counting their occurrences in bearish and bullish posts from StockTwits. His methodology produces higher out-of-sample accuracy than LM and H4N dictionaries. Exploring nearly 60 million posts, he shows that sentiment is a predictor of intraday stock return, mainly driven by novice traders. These findings provide a direct empirical evidence of noise trading.

All previous studies require subjective interpretation: lists of words are constructed from experts’ knowledge, while automatic labelling from microblogs still requires manual deletion or reclassification to improve algorithm efficiency.Footnote 2 Some researchers avoid intentionally any manual classification in their methodology. Jegadeesh and Wu (2013) use a regression-based approach to estimate word positive and negative weightings using market reaction to 10-K filings. They depart from the hypothesis that all words are equally relevant and suggest that market reaction should objectively determine this relative importance. They regress word content of 10-K filings on firms’ abnormal returns during the releasing day: word coefficients representing a positive or negative power. They find that word power weightings differ significantly from proportional and TF-IDF weightings. They proved that the word power approach is robust while using combination of dictionaries and omitting some word from these dictionaries. Their approach gets rid of word classification subjectivity but requires to identify a market reaction to the information studied. Manela and Moreira (2017) apply a support vector regression (hereafter SVR) procedure where unigrams and bigrams from The Wall Street Journal were regressed on implied volatility index (VIX). The advantage of SVR is the reduction of term matrix into its most impactful constituents permitting a regression setting (otherwise impossible with OLS). They find that high news implied volatility index (NVIX) predicts above-average stock returns. Using WordNet to classify word in categories, they find that wars and government are driving the variation of equity risk premium. This last example demonstrates that all research tend usually to blend objective and subjective components.

3 Institutional Media Corpus

Several media groups deliver financial information through their portfolio of magazines. Their general mission statement includes production of trusted information targeting financial decision-makers (such as investment managers, advisers, pension trustees, and financial intermediaries). We identified nine different pension funds and institutional magazines from five well-known financial media groups: they form our institutional media corpus. Table 2 presents a description of institutional media and the related magazines. Although it might differ among our magazine sample, most of the readers are institutional investors, asset managers, advisers, and consultants. For instance, the Global Fund Media reach 43,000 active readers, and more than 60% have position in buy-side industry (fund managers, institutional investors, and advisers). The remaining readers occupy various positions in financial services. PLANSPONSOR magazine has 35,000 subscribers from corporate and plan pension-related positions.

The structure of information within our institutional media corpus is similar to newspapers content. News released in institutional media cover a broad range of topics such as macro-economics, market analysis, expert insight, and strategic portfolio allocation. Equity-related information is essentially discussed at category-based level, i.e., emerging market and developed market equities, equity investment style, or industry sectors. This departs from the information provided from conventional newspapers such as The Wall Street Journal, Dow Jones News, or other leading newspapers. Those media are featuring news on individual firms rather than opinions, recommendations, or performance analysis at a more aggregate level regarding equity style investment. One may have aggregated information from individual companies with regard to equity style portfolio. But two caveats are in order: first, this would assume investors are able to process all this information and, second, style rankings among companies are time-varying.

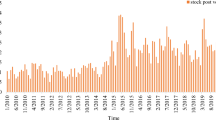

We use Scrapy library from PythonFootnote 3 to collect news content from institutional media websites. Each news collected is converted in a plain text file with the following information: name of magazine, date, title, author, section, and textual content. We gather 108,638 news from January 1996 to June 2018 from the 9 identified magazines. PLANSPONSOR is the first contributor followed by Wealth Adviser, Euromoney and Institutional Investor. AlphaQ, Institutional Asset Manager, and Financial Advisor provide only recent releases but were nonetheless integrated in the corpus. More than 70% occurences of news are collected within the period ranging from 2009 to 2017. Table 3 present descriptive statistics of our database.

Figure 1 illustrates a news from institutional media corpus after collection. The title “What to expect from the markets in 2018” does not refer directly to equity styles. The author relates vision of managers for the coming year. While the article may certainly hold strategic content, it is not guaranteed that the author will speak about small or large styles. In this case, they are mentioned only in one sentence. With this example, we highlight that style information is often secondary topic within institutional media. In addition to the absence of existing annotation and the broad diversity of topics within institutional media, the way information is presented makes the detection of style information a challenge.

4 Methodology

We reformulate the detection of style information from institutional media as a classification problem. We intend to classify news into two categories: news containing style-related information (style class) or no style information (neutral class). One news potentially cover any one or two different style information. Since we want to disaggregate the information content by style, we will perform this classification for small- and large-cap styles separately. We investigate two approaches (1) dictionary-based and (2) a selection of machine learning methods. This methodology section will present our methodologies and the evaluation of their performance. Finally, we will discuss how we handle the construction of manually labelled sample to train machine learning algorithms.

4.1 Dictionary Approach

Dictionary-based method detects words (unigrams) and group of two words (bigrams) defining style investment strategies. Dictionary entries for style information include:

-

Small style: “microcap(s),” “micro cap(s),” “smallcap(s),” “small cap(s),” “midcap(s),” “mid cap(s)”

-

Large style: “large cap(s),” “mega cap(s)”

The occurrence of each dictionary term is searched for in the news. If one news holds one (or several) terms in the lists above, the news belongs to the referred style class. The news is otherwise classified as neutral. While applying dictionary-based detection, we choose to restrain pre-processing steps to (1) removing {urls, special characters, and numbers}, (2) tokenization, and (3) lowering words. Since dictionary terms are nouns, we search for singular and plural forms.

4.2 Machine Learning Approaches

We apply supervised learning algorithms to detect style investment news. They are specifically adapted to classification problems when the number of classes is well defined. In supervised learning, the algorithms require a training phase, using a sub-sample of manually labelled data called training sample. Once algorithms are trained, they can perform classification on the overall corpus.

We select two different supervised methodologies for our work: Naïve Bayes (hereafter NB) and support vector machine (hereafter SVM). We choose NB since it is often used as the reference methodology in the literature (Antweiler & Frank, 2004; Das & Chen, 2007; Li, 2010; Henry & Leone, 2016). We complement the research by investigating SVM potentiality. Each method aims to solve the following problem: determine the class i of a news j containing n words. Considering each investment style separately, this is a binary classification problem where a news belongs to the style class or neutral class.

NB approach estimates the probability score of each word to belong to each class. It then predicts the best class based on the probability that words in the news belong to the class:

where w1, w2, …, wn are words in the news j and class i refers to style or neutral news. This method makes two fundamental assumptions: (1) conditional independence (words occur independently from each other) and (2) positional independence (words have equal probabilities of occurring at all positions).

The SVM approach projects all news j in a space with n dimensions corresponding to each word. In this configuration, news j represents a point in the multi-dimensional word space. The algorithm then creates a boundary to separate the instances of each class i with the following rule: maximize the distance between the boundary and points from each class. SVM is an optimization method that produces the optimal boundary between components of each class. This boundary is constructed using linear or more complex kernel functions. We apply a linear kernel in this study.

We perform more in-depth pre-processing for machine learning algorithms than for the dictionary approach. We restrain vocabulary size to maximize algorithms efficiency. Our pre-processing steps include (1) removing {urls, special characters, and numbers}, (2) tokenization, (3) POS-tagging to select only {noun, verb, and adjective}, (4) lowering, (5) removing stop words, and (6) deleting term with less than three characters. Our pre-processing reduces around 40% of vocabulary size from 24,000 to 15,000 words.

4.3 Performance Assessment

Performance measurements are computed by comparing the actual class of news to the classification provided by the different methods. In our binary classification problem, we get four different results:

-

True positive (TP): Style news is correctly classified within style class.

-

False positive (FP): Neutral news is wrongly classified within style class.

-

True negative (TN): Neutral news is correctly classified within neutral class.

-

False negative (FN): Style news is wrongly classified within neutral class.

We estimate precision, recall, and F-score for dictionary-based and machine learning methods:

-

Precision: \( p=\frac{\textrm{TP}}{\textrm{TP}+\textrm{FP}} \)

-

Recall: \( r=\frac{\textrm{TP}}{\textrm{TP}+\textrm{FN}} \)

-

Balanced F-score: \( F=\frac{2\ast p\ast r}{p+r} \)

Precision is the fraction of actual “style” news among news classified within “style” class by the method. Recall is the fraction of “style” news correctly retrieved among all existing style news. For example, suppose there exists 20 “style” news within a sample and the method classifies 10 news within style class. If eight of ten news classified within style class are really “style” news, precision is 0.8 (or 80%). And as the method has correctly retrieved 8 of 20 “style” news but fail to find the 12 remaining ones, recall is 0.4 (40%). We estimate the overall performance of the method with a balanced F-score.

We will promote the method exhibiting the best F-score. To construct the most efficient style coverage indexes, we intend to retrieve almost all style information included in our corpus, i.e., define a tolerable recall threshold. Moreover, we want to be sure that the retrieved information concerns style investing, i.e., we will maximize precision for accepted recall threshold.

4.4 Data Labelling, Training Sample, and Cross-Validation

The main benefit of dictionary-based approach is its high precision, attributed to the fact that terms are manually crafted. However, this method often exhibits poor recall since it is unlikely that a dictionary would contain all possible relevant terms. Machine learning methods, on the other hand, alleviate the issue of low recall since they can learn new instances of a given class, if properly trained. It is well-known that increasing the training data size results in more accurate methods.

We started our labelling task by parsing news titles in search of equity-related information. As such, we try to maximize manual detection of style news: we find more than 100 news referring to each style. We complete our sample with at least 100 neutral news. This initial labelled sample informed us on the performance of dictionary-based approach. We observe that style information represents a minority class within our corpus, i.e., there exists important imbalance between classes where neutral information is dominant. While increasing labelled sample size, we encounter high difficulty to find additional style news. Our manual labelling task became a tedious process.

To overcome this issue, we propose a semi-automatic labelling approach: we use dictionary detections as labelled data in order to avoid searching scarce style information. As previously mentioned, dictionaries exhibit high precision, i.e., detected style news are mainly true positives. Dictionary detections represent adequate candidates for labelled style data. We do not encounter difficulty to manually label neutral information since it represents the dominant class.

We annotate 800 neutral news and select 800 style news from our dictionary detections to form a balanced training sample. We document performance improvement related to the increasing size of training sample from 400 to 1600 news (equally weighted between style and neutral classes). We estimate performance scores of each algorithm with a fivefold cross-validation. We divide the training sample into five equally sized partitions. We use four partitions to train algorithms and estimate performance scores with the remaining fifth partition. We repeat the process five times by alternatively using each partition to assess performance (see Fig. 2). We compute average performance scores from these five estimations.

Finally, we extend our research to different integration of textual content, considering different term weighting estimations. First, we use word frequency as a base case. Second, we train algorithms with term frequency-inverse document frequency estimation. This evaluation adjusts word frequency by its number of observation across news. Such weighting scheme integrates the fact that words are more common than others. Third, we use bigram frequency to explore potential performance improvements as documented in Oliveira et al. (2016) and Renault (2017).

5 Results

5.1 Performance of Style Dictionaries

We proceed to a performance assessment of our dictionary approach described in Sect. 4.1. Our results are summarized in Table 4. Small and large dictionaries have, respectively, balanced F-scores of 98 and 97%. They exhibit high precision and recall. Small-cap and large-cap terms defined in our dictionaries are so specific to the investments in style equity that we only find one false detection for each style (FP):

-

“The fund invests in high-yield (‘junk’) bonds, foreign securities, emerging markets, liquidity risk, and small- and mid-cap issuers” [bond information].

-

Non-US equities saw a median return of −4.80%, slightly ahead the Russell Developed ex-US Large Cap Index result of −5.79% [ex term, excluding large caps].

While we do not totally discard false detections, they remain exceptions. Second, small and large dictionaries detect the majority of related news. We find, respectively, four (resp. six) false negatives (FN) for small (resp. large) style. Dictionaries mainly fail to detect the information for two reasons: the presence of the other style in the sentence and the presence of the word “company” replacing the term “cap” from dictionary bigrams.

-

“…investing in both small and large-cap based investment trusts” [fail to detect small information]

-

“…as well as exposure to international and domestic large- and mid-cap equities” [fail to detect large information]

-

“…dividends paid by large companies are more secure than those paid by small and medium sized companies, broadly speaking” [fail to detect small and large information]

We do not suggest the integration of terms such as “small company” or “large company” in dictionaries. The informational content is not specific to investments related to stocks and adds many false detections (FP). We do not suggest either adding extended n-gram to detect the opposite style. “Small” and “large” terms have too broad meanings. The power of small-cap and large-cap terms lies in their implicit stock content and their restricted size (unigram or bigram). To be exhaustive, we provide the reader with an Appendix presenting all false-positive and false-negative dictionary detection.

Table 4 shows that small-cap and large-cap terms are so specific to style investments that they are almost present when there is related style information within news. While we construct very restricted lists, our dictionaries are strongly efficient to detect small and large style information. We, respectively, find 2319 small news and 1705 large news within our institutional media corpus of 108,638 news. This represents 1 to 2% of news confirming our previous observation: style information is relatively scarce among institutional media. The main drawback of our approach is the selection and size of our manual labelled sample. In the next section, we test the robustness of our results by investigating if our dictionaries fail to detect style news classified as such by machine learning algorithms.

5.2 Performance of Machine Learning Algorithms

In this section, we focused all tests and results on small style detection. Table 5 summarizes performance of Naïve Bayes and support vector machine approaches. We observe that SVM are more efficient than NB with term frequency weighting: F1 scores reach 84% for SVM compared to 76% for NB. Precision of NB is relatively low (from 62 to 66%): this algorithm tends to wrongly classified neutral news within style class. This is not the case with SVM which exhibits higher precision scores. We find that increasing sample size slightly increases precision of NB term frequency algorithm. Surprisingly, SVM precision is decreasing from 85 to 81% with increasing sample size. We attribute this observation to two possible reasons: the random selection of our training sample and the difficulty to construct a frontier clearly separating the two classes. First, while constructing our training sample, we randomly select 800 news among dictionary detection. This sample differs between each algorithm and therefore impacts their performances. Second, SVM frontier between classes becomes more difficult to construct with increasing number of news present in the multi-dimensional space. If SVM frontier is sensitive to increasing size, we should find similar trend regarding recall scores. However, recall scores seem more stable. We conclude that precision score discrepancy is more related to our random sampling selection.

Considering alternative term document matrices, bigram construction highlights important performance improvements. NB and SVM precision increases strikingly, while recall decreases slightly, leading to higher balanced F1 scores. These results indicate that bigrams add information to NB and SVM models. They are in accordance with observations in Sect. 5.1: bigrams hold more information and are more discriminant than unigrams. Oliveira et al. (2016) confirm our findings with their approach to automatically create sentiment lexicons. They advocate that bigrams present a better sentiment score than unigrams. We do not find the same evidence using term frequency-inverse document frequency weighting algorithms. NB precision, already its weakness, is decreasing to 60% leading to more false positives.

We document an additional performance measure, i.e., small class holdout accuracy. This measure represents the fraction of small news detected by the dictionary-based methodology and correctly classified as small by the algorithms. To compute the measure, we ask the algorithm to classify small news that were not used during the training phase. The overall procedure is presented in Fig. 3. Algorithms correctly classify between 80 and 99% of small news detected by our dictionary approach. As such, NB approach is more efficient to classify small news than SVM. As such, NB tends to retrieve more small news (higher recall) but with less precision.

Training procedure for Naïve Bayes and support vector machine algorithms. This figure illustrates training and performance evaluation for NB and SVM algorithms. We train algorithms with balanced class samples selected from our semi-automatic approach. We compute different in-sample performance scores: precision, recall, balanced F1-score and holdout accuracy

While all measurements discussed indicate the best in sample performing method, they still not guarantee that algorithms will correctly retrieve style news in a sizable sample such as our institutional media corpus. It is possible that trained algorithms model too precisely the training data while not generalizing to new data. This problem is known as overfitting. We investigate out-of-sample performance by asking algorithms to classify all institutional media corpus news and record the number of small news afterwards. We expect this measure to be similar to the number of small news classified by dictionary-based methodology, i.e., 2319 small news detected. We find that NB approach classifies too much news as small in the institutional media corpus (from 22,098 to 43,356). The number of small news classified is 10–18 times more important than dictionary-based method. This suggests that we have an overfitting problem: term frequency algorithms model too precisely the training sample but fail to generalize to the institutional media corpus. SVM is more immune to overfitting, and it classifies between 4569 and 15,855 news as small depending on the algorithm.

The most promising algorithm is the SVM with bigram weighting scheme: it is more effective in out-of-sample classification than its NB counterpart. SVM trained with bigrams classify 4569 news (NB, respectively, 22,098 news) within a small class in the overall corpus. This algorithm is a potential substitute of our dictionary-based methodology. While dictionary methodology exhibits higher performance, measures are estimated from a smaller sample of manually annotated data (see Table 4. in Sect. 5.1). This manual annotation process may be biased. We may have inadvertently selected small news which contains dictionary terms and fail to select small news that do not contain those terms. In this case, we artificially inflate dictionary performance. We investigate this potential problem by comparing dictionary methodology and SVM using bigram term document matrix. We try to find if dictionary-based methodology fails to capture small news. We randomly read 100 news classified as small by SVM but classified as neutral by dictionary methodology. If the majority of news are really small news, our manual annotation process is biased, and the SVM approach is the best proposed methodology. On the contrary, if those news are mainly neutral ones, we will prefer the dictionary methodology. None of the news we read concern small-cap investments: dictionary-based methodology is still the best approach. We summarize our results in three points. First, best machine learning methodology (bigram SVM) captures 80% of small news detected by dictionary-based methodology (holdout accuracy). Second, news only detected as small by SVM are mainly false positive. Third, performance in dictionary-based section is reliable. We conclude that dictionary-based methodology will produce the most accurate small coverage indexes within institutional media.

6 Conclusions

This paper presents an original classification problem, detecting style content within a novel source of information, the institutional media corpus. We observe three main difficulties in our task: (1) the absence of labelled data, (2) an important class imbalance between neutral and style content, and (3) a diversity of topics within the corpus. This combination of problems makes the manual labelling task difficult for the minority style class.

We find that restricted word lists are the most promising methodology to detect small- and large-cap style information within institutional media corpus. Dictionaries composed of only five different terms (micro-, small-, mid-, large-, and mega-cap) efficiently capture style information. The explicit stock content of these terms avoids false detection (high precision) but also limits failed detection (acceptable recall). In comparison, supervised machine learning algorithms exhibit high recall but low precision scores. This comparison confirms that subjective handed crafted lists are more precise than high recall machine learning algorithms. One drawback of algorithms is their failure to generalize to the overall institutional media corpus. However, support vector machine trained with a bigram term frequency matrix presents encouraging results in alleviating this overfitting problem. One possible improvement for this method is the use of an alternative to linear kernel.

We delimit our research to the comparison of main methodologies applied in sentiment analysis. We do not cover any advanced techniques such as unsupervised learning (clustering, LDA, etc.) or deep learning (neural network), leaving a room for further comparison. Moreover, we do not extend our research to other equity style investments (growth vs. value, momentum vs. contrarian) due to manual labelling constraint. We expect that new source of data will fill the gap in the future, such as it exists user self-reported sentiment on social media. Data augmentation using generative model and semi-supervised learning are also alternative solutions.

Finally, this study opens a path for further research around media coverage and attention related to equity style investments. As such, style detections from dictionaries can be aggregated to construct small-cap and large-cap coverage indexes. With an appropriate fund flow analysis, researchers can investigate the potential impact of style information on institutional investors’ behavior.

Notes

- 1.

- 2.

Ibid

- 3.

References

Antweiler, W., & Frank, M. Z. (2004). Is all that talk just noise? The information content of internet stock message boards. Journal of Finance, 59(3), 1259–1294. https://doi.org/10.1111/j.1540-6261.2004.00662.x

Baker, S. R., Bloom, N., & Davis, S. J. (2016). Measuring economic policy uncertainty. Quarterly Journal of Economics, 131, 1593–1636.

Barberis, N., & Shleifer, A. (2003). Style investing. Journal of Financial Economics, 68(2), 161–199. https://doi.org/10.1016/S0304-405X(03)00064-3

Bodnaruk, A., Loughran, T., & McDonald, B. (2015). Using 10-K text to gauge financial constraints. Journal of Financial and Quantitative Analysis, 50, 623–646.

Das, S. R., & Chen, M. Y. (2007). Yahoo! For Amazon: Sentiment extraction from small talk on the web. Management Science, 53(9), 1375–1388. https://doi.org/10.1287/mnsc.1070.0704

Froot, K. A., & Teo, M. (2008). Style investing and institutional investors. Journal of Financial and Quantitative Analysis, 43, 883–906.

Garcia, D. (2013). Sentiment during recessions. Journal of Finance, 68(3), 1267–1300. https://doi.org/10.1111/jofi.12027

Henry, E., & Leone, A. J. (2016). Measuring qualitative information in capital markets research: Comparison of alternative methodologies to measure disclosure tone, 91(1), 153–178. https://doi.org/10.2308/accr-51161

Jegadeesh, N., & Wu, D. (2013). Word power: A new approach for content analysis. Journal of Financial Economics, 110, 712–729.

Kumar, A. (2009). Dynamic style preferences of individual investors and stock returns. Journal of Financial and Quantitative Analysis, 44(03), 607. https://doi.org/10.1017/S0022109009990020

Larcker, D. F., & Zakolyukina, A. A. (2012). Detecting deceptive discussions in conference calls. Journal of Accounting Research, 50, 495–540.

Li, F. (2010). The information content of forward-looking statements in corporate filings – A naïve Bayesian machine learning approach. Journal of Accounting Research, 48(5), 1049–1102.

Loughran, T. I. M., & Mcdonald, B. (2011). When is a liability not a liability? Textual analysis, dictionaries, and 10-Ks, 66(1), 35–65.

Loughran, T., McDonald, B., & Pragidis, I. (2019). Assimilation of oil news into prices. International Review of Financial Analysis, 63, 105–118.

Manela, A., & Moreira, A. (2017). News implied volatility and disaster concerns. Journal of Financial Economics, 123, 137–162.

Oliveira, N., Cortez, P., & Areal, N. (2016). Stock market sentiment lexicon acquisition using microblogging data and statistical measures. Decision Support Systems, 85, 62–73. https://doi.org/10.1016/j.dss.2016.02.013

Renault, T. (2017). Intraday online investor sentiment and return patterns in the U.S. stock market. Journal of Banking and Finance, 84, 25–40. https://doi.org/10.1016/j.jbankfin.2017.07.002

Soo, C. K. (2018). Quantifying sentiment with news media across local housing markets. Review of Financial Studies, 31, 3689–3719.

Tetlock, P. C. (2007). Giving content to investor sentiment: The role of media in the stock market published by: Wiley for the American Finance Association Stable URL: http://www.jstor.org/stable/4622297. The role of media in the stock market giving content to investor sentiment, 62(3), 1139–1168.

Author information

Authors and Affiliations

Corresponding authors

Editor information

Editors and Affiliations

Appendix: Dictionary Approach: False Positives and False Negatives (Exhaustive List)

Appendix: Dictionary Approach: False Positives and False Negatives (Exhaustive List)

Rights and permissions

Copyright information

© 2023 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this chapter

Cite this chapter

Gillain, C., Ittoo, A., Lambert, M. (2023). Detecting Equity Style Information Within Institutional Media. In: Alphonse, P., Bouaiss, K., Grandin, P., Zopounidis, C. (eds) Essays on Financial Analytics. Lecture Notes in Operations Research. Springer, Cham. https://doi.org/10.1007/978-3-031-29050-3_8

Download citation

DOI: https://doi.org/10.1007/978-3-031-29050-3_8

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-29487-7

Online ISBN: 978-3-031-29050-3

eBook Packages: Business and ManagementBusiness and Management (R0)