Abstract

In this work we present an “out-of-the-box” application of Machine Learning (ML) optimizers to an industrial optimization problem. We introduce a piecewise polynomial model (spline) for fitting \(\mathcal {C}^k\)-continuous functions, which can be deployed in a cam approximation setting. We then use the gradient descent optimization context provided by the machine learning framework TensorFlow to optimize the model parameters with respect to approximation quality and \(\mathcal {C}^k\)-continuity, and we evaluate the available optimizers. Our experiments show that the problem solution is feasible using TensorFlow gradient tapes and that AMSGrad and SGD show the best results among the available TensorFlow optimizers. Furthermore, we introduce a novel regularization approach to improve SGD convergence. Although experiments show that remaining discontinuities after optimization are small, we can eliminate these errors using the presented algorithm, which has impact only on the affected derivatives in the local spline segment.

Stefan Huber and Hannes Waclawek are supported by the European Interreg Österreich-Bayern project AB292 KI-Net and the Christian Doppler Research Association (JRC ISIA).


1 Introduction

When discussing the potential application of Machine Learning (ML) in industrial settings, we primarily think of applying various ML methods and models per se. These methods, from neural networks to simple linear classifiers, rely on gradient descent optimization. This is why ML frameworks come with a variety of gradient descent optimizers that perform well on a diverse set of problems and have received significant improvements in academia and practice over the past decades.

Industry is full of classical numerical optimization problems. Instead of using an entire ML framework in an industrial context, we can therefore directly harness the modern optimizers that lie at the heart of ML methods and apply them to industrial numerical optimization tasks. One of these tasks is cam approximation, i.e., fitting a continuous function to a number of input points such that it has properties favorable for cam design. One way to achieve these properties is a gradient-based approach, where an objective function allows minimizing user-definable losses. Servo drives like B&R Industrial Automation’s ACOPOS series process cam profiles as piecewise polynomial functions (splines). With the goal of using the findings of this paper as a basis for cam approximation in future work, we therefore lay the groundwork for polynomial approximation with a \(\mathcal {C}^k\)-continuous piecewise polynomial spline model using the gradient descent optimization provided by the machine learning framework TensorFlow. The continuity class \(\mathcal {C}^k\) denotes the set of k-times continuously differentiable functions \(\mathbb {R}\rightarrow \mathbb {R}\). Continuity is important in cam design because discontinuities cause forces that are only constrained by the mechanical construction of machine parts. This leads to excessive wear and vibrations, which we aim to prevent. Although our approach is motivated by cam design, it is generally applicable.

The contribution of this work is manifold:

1. “Out-of-the-box” application of ML optimizers in an industrial setting.
2. A \(\mathcal {C}^k\)-spline approximation method with a novel gradient regularization.
3. An evaluation of TensorFlow optimizer performance for a well-known problem.
4. Non-convergence of optimizers using exponential moving averages, like Adam, is documented in the literature [2]. Our experiments confirm that this non-convergence extends to the presented optimization setting.
5. An algorithm to strictly establish continuity with impact only on the affected derivatives in the local spline segment.

The Python libraries and Jupyter Notebooks used to perform our experiments are available under an MIT license at [5].

Prior work. There is extensive prior work on neural networks for function approximation [1] and on the use of gradient descent optimizers for B-spline curves [3]. There are also non-scientific texts on gradient descent optimization for polynomial regression. However, to the best of our knowledge, there is no thorough evaluation of gradient descent optimizers for \(\mathcal {C}^k\)-continuous piecewise polynomial spline approximation.

2 Gradient Descent Optimization

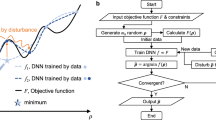

TensorFlow provides a mechanism for automatic gradient computation using a so-called gradient tape. This mechanism allows us to directly use the diverse range of gradient-based optimizers offered by the framework and to implement custom training loops. We implemented our own training loop in which we (i) obtain the gradients for a loss expression \(\ell \), (ii) optionally apply some regularization to the gradients, and (iii) supply the optimizer with the gradients. This requires a computation of \(\ell \) that allows the gradient tape to track the operations applied to the model parameters in the form of TensorFlow variables.

2.1 Spline Model

Many servo drives used in industrial applications, like B&R Industrial Automation’s ACOPOS series, use piecewise polynomial functions (splines) as a base model for cam follower displacement curves. This requires a corresponding spline model in TensorFlow. Let us consider n samples at \(x_1 \le \dots \le x_n \in \mathbb {R}\) with respective values \(y_i \in \mathbb {R}\). We ask for a spline \(f :I \rightarrow \mathbb {R}\) on the interval \(I = [x_1, x_n]\) that approximates the samples well and fulfills some additional domain-specific properties, like \(\mathcal {C}^k\)-continuity or \(\mathcal {C}^k\)-cyclicity (see Note 1). Let us denote by \(\xi _0 \le \dots \le \xi _m\) the polynomial boundaries of f, where \(\xi _0 = x_1\) and \(\xi _m = x_n\). With \(I_i = [\xi _{i-1}, \xi _i]\), the spline f is modeled by m polynomials \(p_i :I \rightarrow \mathbb {R}\) that agree with f on \(I_i\) for \(1 \le i \le m\). Each polynomial

\[ p_i(x) = \sum _{j=0}^{d} \alpha _{i,j} \, x^j \qquad (1) \]

is determined by its coefficients \(\alpha _{i,j}\), where d denotes the degree of the spline and its polynomials. That is, the \(\alpha _{i,j}\) are the trainable parameters of the spline model. We investigate the convergence of these parameters with respect to different loss functions, specifically the \(L_2\)-approximation error and \(\mathcal {C}^k\)-continuity, using different TensorFlow optimizers (see details below). Figure 1 depicts the principles of the presented spline model.
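To make the model concrete, the following NumPy sketch evaluates a spline stored as an \(m \times (d+1)\) coefficient array, assuming the monomial form \(p_i(x) = \sum_j \alpha_{i,j} x^j\) in global x-coordinates. The function name `eval_spline` and the convention that a sample lying exactly on an inner boundary \(\xi_i\) is evaluated with the right-hand polynomial are our own illustrative assumptions, not taken from the authors' code.

```python
import numpy as np


def eval_spline(x, xi, alpha):
    """Evaluate the spline f at the points x (hypothetical helper).

    xi    -- boundaries xi_0 <= ... <= xi_m, shape (m + 1,)
    alpha -- alpha[i, j] is the coefficient of x**j in segment i (0-based),
             shape (m, d + 1)
    """
    x = np.asarray(x, dtype=float)
    m = alpha.shape[0]
    # Segment index per sample: a point on an inner boundary goes to the
    # right-hand segment, and x == xi_m is assigned to the last segment.
    seg = np.clip(np.searchsorted(xi, x, side="right") - 1, 0, m - 1)
    # Horner scheme, using the coefficients of each sample's own segment
    result = np.zeros_like(x)
    for j in range(alpha.shape[1] - 1, -1, -1):
        result = result * x + alpha[seg, j]
    return result


# Two linear pieces on [0, 2]: p_1(x) = x and p_2(x) = 2 - x
xi = np.array([0.0, 1.0, 2.0])
alpha = np.array([[0.0, 1.0], [2.0, -1.0]])
values = eval_spline([0.5, 1.0, 1.5, 2.0], xi, alpha)  # values: 0.5, 1.0, 0.5, 0.0
```

Storing all coefficients in one dense array mirrors the idea of a single trainable tensor of \(\alpha_{i,j}\).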

2.2 Loss Function

In order to establish \(\mathcal {C}^k\)-continuity, cyclicity, and periodicity, and to allow for curve fitting via least squares approximation, we introduce the cost function

\[ \ell = \lambda \, \ell _2 + (1 - \lambda ) \, \ell _\text {CK}, \qquad 0 \le \lambda \le 1. \qquad (2) \]

By adjusting the value of \(\lambda \) in equation (2), we can put more weight on either the approximation quality or the \(\mathcal {C}^k\)-continuity optimization target. The approximation error \(\ell _2\) is the least-squares error, made invariant to the number of data points and the number of polynomial segments by the following definition:

We assign a value \(\ell _\text {CK}\) to the amount of discontinuity in our spline function by summing up discontinuities at all \(\xi _i\) across relevant derivatives as

We make \(\ell _\text {CK}\) in equation (4) invariant to the number of polynomial segments by applying an equilibration factor \(\frac{1}{m - 1}\), where \(m - 1\) is the number of inner boundary points \(\xi _1, \dots , \xi _{m-1}\), i.e., excluding \(\xi _0\) and \(\xi _m\). This loss \(\ell _\text {CK}\) extends naturally to \(\mathcal {C}^k\)-cyclicity and periodicity for cam profiles (see Note 2).
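The loss can be sketched in NumPy as follows. The exact normalizations of equations (3) and (4) are not reproduced in this text, so the sketch makes two labeled assumptions: \(\ell_2\) is the plain mean squared error over the n samples, and \(\ell_\text{CK} = \frac{1}{m-1}\sum_{i=1}^{m-1}\sum_{j=0}^{k}\delta_{i,j}^2\) with \(\delta_{i,j} = p^{(j)}_{i+1}(\xi_i) - p^{(j)}_i(\xi_i)\), the non-cyclic case of Note 2 with squared jumps. Function names are hypothetical.

```python
import numpy as np
from numpy.polynomial import polynomial as P


def derivative_jumps(xi, alpha, k):
    """delta[b, j] = p_{b+2}^{(j)}(xi_{b+1}) - p_{b+1}^{(j)}(xi_{b+1}) (1-based p),
    i.e. the jump of the j-th derivative at each inner boundary, j = 0..k."""
    m = alpha.shape[0]
    delta = np.zeros((m - 1, k + 1))
    for b in range(m - 1):  # boundary xi[b + 1] joins segments b and b + 1 (0-based)
        for j in range(k + 1):
            left = P.polyval(xi[b + 1], P.polyder(alpha[b], j))
            right = P.polyval(xi[b + 1], P.polyder(alpha[b + 1], j))
            delta[b, j] = right - left
    return delta


def spline_loss(x, y, xi, alpha, k, lam):
    """Assumed form of l = lam * l2 + (1 - lam) * lCK; requires m >= 2 segments."""
    x, y = np.asarray(x, dtype=float), np.asarray(y, dtype=float)
    m = alpha.shape[0]
    seg = np.clip(np.searchsorted(xi, x, side="right") - 1, 0, m - 1)
    fx = np.array([P.polyval(xv, alpha[s]) for xv, s in zip(x, seg)])
    l2 = np.mean((fx - y) ** 2)                                   # assumption: mean over samples
    lck = np.sum(derivative_jumps(xi, alpha, k) ** 2) / (m - 1)   # assumption: squared jumps
    return lam * l2 + (1.0 - lam) * lck


# p_1(x) = x on [0, 1] and p_2(x) = 1 + x on [1, 2]: value jumps by 1, slope matches
xi = np.array([0.0, 1.0, 2.0])
alpha = np.array([[0.0, 1.0], [1.0, 1.0]])
jumps = derivative_jumps(xi, alpha, k=1)
```

Because \(\lambda\) only blends two non-negative terms, \(\lambda = 1\) reduces the sketch to a pure least-squares fit and \(\lambda = 0\) to a pure continuity objective.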

2.3 TensorFlow Training Loop

The gradient tape environment of TensorFlow offers automatic differentiation of our loss function defined in equation (2). This requires a computation of \(\ell \) that allows for tracking the operations applied to \(\alpha _{i,j}\) through the usage of TensorFlow variables and arithmetic operations, see Listing 1.1.

In this training loop, we first calculate the loss according to equation (2) in a gradient tape context in lines 5 and 6, and then obtain the gradients of that loss in line 7 via the automatic differentiation of the gradient tape. We then apply, in line 8, the regularization introduced in Sect. 3.1 and supply the optimizer with the gradients in line 9.
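Listing 1.1 itself is not reproduced in this text. As a stand-in, here is a minimal, self-contained sketch of a loop with the same three steps (i) to (iii); it is not the authors' listing. It assumes TensorFlow 2.x with Keras optimizers, the loss form \(\ell = \lambda\ell_2 + (1-\lambda)\ell_\text{CK}\) with mean squared error and squared derivative jumps, unit-width segments with polynomial centers at the segment midpoints (cf. Sect. 3.2), and a toy sine target. The per-degree vector `grad_scale` is a hypothetical placeholder for the regularization vector R of Sect. 3.1 (uniform weights here), and the learning rate of 0.01 is simply a value chosen for this toy.

```python
import math

import numpy as np
import tensorflow as tf

# Toy setup (assumed): m unit-width segments on [0, m], degree d, C^k target
m, d, k, lam = 4, 5, 2, 0.9
xi = np.linspace(0.0, m, m + 1)
centers = 0.5 * (xi[:-1] + xi[1:])  # polynomial centers at segment midpoints
x = np.linspace(0.0, m, 200)
seg = np.clip(np.searchsorted(xi, x, side="right") - 1, 0, m - 1)


def as_f32(a):
    return tf.constant(np.asarray(a, dtype=np.float32))


def deriv_basis(t, j):
    """Rows b such that b @ a is the j-th derivative of sum_l a_l * t**l."""
    t = np.atleast_1d(np.asarray(t, dtype=float))
    b = np.zeros((t.size, d + 1))
    for l in range(j, d + 1):
        b[:, l] = math.factorial(l) / math.factorial(l - j) * t ** (l - j)
    return b


# Constant tensors: only alpha changes during training
y = as_f32(np.sin(x))
onehot = as_f32(np.eye(m)[seg])                        # (n, m) segment selector
basis = as_f32(deriv_basis(x - centers[seg], 0))       # (n, d+1) powers of x - c_i
left = as_f32(np.stack([deriv_basis(xi[1:-1] - centers[:-1], j) for j in range(k + 1)]))
right = as_f32(np.stack([deriv_basis(xi[1:-1] - centers[1:], j) for j in range(k + 1)]))

alpha = tf.Variable(tf.zeros((m, d + 1)))      # trainable coefficients alpha_{i,j}
grad_scale = tf.fill([d + 1], 1.0 / (d + 1))   # placeholder for R (entries sum to 1)
optimizer = tf.keras.optimizers.Adam(learning_rate=0.01, amsgrad=True)


def loss_fn():
    f = tf.reduce_sum(basis * tf.matmul(onehot, alpha), axis=1)   # f(x_n)
    l2 = tf.reduce_mean((f - y) ** 2)
    jumps = (tf.einsum("jbl,bl->jb", right, alpha[1:])
             - tf.einsum("jbl,bl->jb", left, alpha[:-1]))         # jumps at inner boundaries
    lck = tf.reduce_sum(jumps ** 2) / (m - 1)
    return lam * l2 + (1.0 - lam) * lck


initial_loss = float(loss_fn())
for epoch in range(500):
    with tf.GradientTape() as tape:
        loss = loss_fn()                                  # (i) loss on tracked operations
    grads = tape.gradient(loss, [alpha])
    grads = [g * grad_scale for g in grads]               # (ii) optional regularization
    optimizer.apply_gradients(list(zip(grads, [alpha])))  # (iii) optimizer step
final_loss = float(loss_fn())
```

All derivative evaluations at the boundaries are linear in \(\alpha\), so they are precomputed as constant matrices and the tape only has to track a few matrix products.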

3 Improving Spline Model Performance

In order to improve the convergence behavior of the model defined in Sect. 2.1, we introduce a novel regularization approach and investigate the effects of input data scaling and of shifting the polynomial centers. In a cam design context, discontinuities remaining after the optimization procedure lead to forces and vibrations that are only constrained by the cam-follower system’s mechanical design. To prevent such discontinuities, we propose an algorithm to strictly establish continuity after optimization.

3.1 A Degree-Based Regularization

With the polynomial model described in equation (1), terms of higher order have a greater impact on the result (for \(|x| > 1\)). Consequently, the gradients with respect to higher-order coefficients are larger, which impairs convergence behavior; our experiments confirm this effect. We propose a degree-based regularization approach that mitigates this impact by effectively shifting the optimization of higher-degree coefficients to later epochs. We do this by introducing a gradient regularization vector \(R = (r_0, \dots , r_d)\), where

The regularization is then applied by multiplying each gradient value \(\frac{\partial \ell }{\partial \alpha _{i,j}}\) with \(r_j\). Since the entries \(r_j\) of R sum up to 1, this effectively acts as an equilibration of all gradients per polynomial \(p_i\).

This approach effectively makes the sum of gradients degree-independent. Experiments show that it allows for higher learning rates with non-adaptive optimizers like SGD and enables the use of SGD with Nesterov momentum, which does not converge without the proposed regularization. This yields faster convergence and lower remaining losses for non-adaptive optimizers. The advantage of the regularization diminishes as the number of epochs grows, and it is larger for polynomials of higher degree, say, \(d \ge 4\).
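The defining formula for \(r_j\) is not reproduced in this text, so the following sketch only shows the mechanics: it computes the raw least-squares gradient of a single polynomial on unscaled inputs, which grows with the degree j, and multiplies entry j by \(r_j\). The concrete choice \(r_j \propto 1/(j+1)\), normalized to sum to 1, is purely hypothetical and is not the authors' R; the helper names are illustrative as well.

```python
import numpy as np


def mse_gradient(alpha, x, y):
    """Gradient of mean((p(x) - y)**2) w.r.t. the monomial coefficients alpha_j."""
    V = np.vander(x, len(alpha), increasing=True)  # V[n, j] = x_n**j
    return 2.0 * V.T @ (V @ alpha - y) / len(x)


def degree_regularize(grad, r):
    """Multiply each gradient entry of degree j by r_j; works for one polynomial
    (shape (d+1,)) or a whole spline (shape (m, d+1)) via broadcasting."""
    r = np.asarray(r, dtype=float)
    if not np.isclose(r.sum(), 1.0):
        raise ValueError("entries of R are expected to sum to 1")
    return grad * r


# Hypothetical R for d = 3: weights proportional to 1 / (j + 1), summing to 1
d = 3
r = 1.0 / np.arange(1, d + 2)
r /= r.sum()

x = np.linspace(0.0, 8.0, 50)  # unscaled inputs: most samples have |x| > 1
raw = mse_gradient(np.zeros(d + 1), x, np.ones_like(x))
reg = degree_regularize(raw, r)
print(np.abs(raw))  # grows with the degree j
print(np.abs(reg))  # higher degrees damped relative to lower ones
```

Because the scaling happens on the gradients rather than in the loss, it can be dropped into step (ii) of a gradient-tape loop without changing the model itself.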

3.2 Practical Considerations

Experiments show that, using the training parameters outlined in Sect. 4, SGD optimization has a certain radius of convergence along the x-axis around the polynomial center. Shifting each polynomial center to the mean of its segment allows segments with larger x-values to converge. We can implement this by extending the polynomial model defined in equation (1) as

If the input data is scaled such that every polynomial segment spans an interval of length 1, SGD optimization converges with this approach in all our experiments for all \(0 \le \lambda \le 1\). For example, for a spline consisting of 8 polynomial segments, we scale the input data such that \(I = [0, 8]\). We skip the back-transformation that production code would perform.
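The extended, center-shifted model is not reproduced in this text. The following sketch illustrates the scaling and shifting under two stated assumptions: the boundaries are equidistant, and each center \(c_i\) is the midpoint of its segment, so that \(p_i(x) = \sum_j \alpha_{i,j}(x - c_i)^j\). Both helper names are hypothetical.

```python
import numpy as np


def to_segment_coords(x, x_min, x_max, m):
    """Map x from [x_min, x_max] to [0, m] so that m equidistant segments have
    unit width; return (scaled x, segment index, offset from the segment center)."""
    s = (np.asarray(x, dtype=float) - x_min) * m / (x_max - x_min)
    seg = np.clip(np.floor(s).astype(int), 0, m - 1)  # x == x_max joins the last segment
    t = s - (seg + 0.5)                                # centered coordinate in [-0.5, 0.5]
    return s, seg, t


def eval_centered(t, seg, alpha):
    """p_i(x) = sum_j alpha[i, j] * (x - c_i)**j with c_i the segment midpoint (assumed)."""
    powers = np.asarray(t)[:, np.newaxis] ** np.arange(alpha.shape[1])
    return np.sum(alpha[seg] * powers, axis=1)


# 8 segments over raw inputs in [0, 100], scaled to I = [0, 8]
s, seg, t = to_segment_coords([0.0, 45.0, 100.0], 0.0, 100.0, 8)
print(s, seg, t)  # offsets t stay within [-0.5, 0.5] regardless of the raw x-range
```

Since \(|x - c_i| \le 0.5\) after this transformation, every power \((x - c_i)^j\) is at most 1 in magnitude, which keeps the gradients of higher-degree coefficients from dominating.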

3.3 Strictly Establishing Continuity After Optimization

In order to strictly establish \(\mathcal {C}^k\)-continuity after optimization, i.e., to eliminate any remaining \(\ell _\text {CK}\), we apply corrective polynomials that enforce \(\delta (\xi _i) = 0\) at all \(\xi _i\). The following method requires a spline degree \(d \ge 2k + 1\), so that each corrected polynomial remains of degree at most d. Let

\[ m_j = \frac{1}{2} \left( p^{(j)}_i(\xi _i) + p^{(j)}_{i+1}(\xi _i) \right) \]

denote the mean j-th derivative of \(p_i\) and \(p_{i+1}\) at \(\xi _i\) for all \(0 \le j \le k\). Then there is a unique polynomial \(c_i\) of degree at most \(2k+1\) whose j-th derivative is 0 at \(\xi _{i-1}\) and \(m_j - p^{(j)}_i(\xi _i)\) at \(\xi _i\) for all \(0 \le j \le k\). Likewise, there is a unique polynomial \(c_{i+1}\) whose j-th derivative is \(m_j - p^{(j)}_{i+1}(\xi _i)\) at \(\xi _i\) and 0 at \(\xi _{i+1}\). The corrected polynomials \(p^*_i = p_i + c_i\) and \(p^*_{i+1} = p_{i+1} + c_{i+1}\) then possess identical derivatives \(m_j\) at \(\xi _i\) for all \(0 \le j \le k\), while their derivatives up to order k at \(\xi _{i-1}\) and \(\xi _{i+1}\) remain unaltered. Since the corrections have only local impact, we can apply them at each \(\xi _i\) independently. This is a nice property in contrast to natural splines or methods using B-splines as discussed in [3].
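The correction step can be sketched in NumPy by solving the two Hermite interpolation problems directly as small linear systems. This is an illustrative implementation under our own naming (`hermite_poly`, `derivs`, `correct_boundary` are hypothetical), working with monomial coefficients in global coordinates (lowest degree first); it handles one boundary \(\xi_i\) at a time.

```python
import math

import numpy as np
from numpy.polynomial import polynomial as P


def hermite_poly(a, b, da, db):
    """Unique polynomial of degree <= 2k+1 whose j-th derivative equals
    da[j] at a and db[j] at b for j = 0..k (coefficients lowest first)."""
    k = len(da) - 1
    n = 2 * k + 2
    conditions = [(a, j) for j in range(k + 1)] + [(b, j) for j in range(k + 1)]
    A = np.zeros((n, n))
    for row, (t, j) in enumerate(conditions):
        for l in range(j, n):
            A[row, l] = math.factorial(l) / math.factorial(l - j) * t ** (l - j)
    return np.linalg.solve(A, np.concatenate([da, db]))


def derivs(c, t, k):
    """[c(t), c'(t), ..., c^(k)(t)] for monomial coefficients c."""
    return np.array([P.polyval(t, P.polyder(c, j)) for j in range(k + 1)])


def correct_boundary(p_left, p_right, xi_prev, xi_mid, xi_next, k):
    """Make p_left and p_right C^k-continuous at xi_mid without changing their
    derivatives up to order k at xi_prev and xi_next."""
    dl, dr = derivs(p_left, xi_mid, k), derivs(p_right, xi_mid, k)
    mean = 0.5 * (dl + dr)  # m_j in the text
    zero = np.zeros(k + 1)
    c_left = hermite_poly(xi_prev, xi_mid, zero, mean - dl)
    c_right = hermite_poly(xi_mid, xi_next, mean - dr, zero)
    return P.polyadd(p_left, c_left), P.polyadd(p_right, c_right)


# x on [0, 1] and 1 + x on [1, 2]: value jump at x = 1; k = 1 needs degree >= 3
p_left, p_right = np.array([0.0, 1.0]), np.array([1.0, 1.0])
q_left, q_right = correct_boundary(p_left, p_right, 0.0, 1.0, 2.0, k=1)
print(derivs(q_left, 1.0, 1), derivs(q_right, 1.0, 1))  # both approx [1.5, 1.0]
```

In the example, the linear pieces become cubics, which illustrates why the method needs \(d \ge 2k+1\).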

4 Experimental Results

In a first step, we investigated the mean squared error loss by setting \(\lambda = 1\) in our loss function defined in equation (2) for a single polynomial, which revealed a learning rate of 0.1 as a reasonable setting. We then ran tests with the available TensorFlow optimizers listed in [4] and compared their outcomes. SGD with momentum, Adam, Adamax, and AMSGrad show the lowest losses, with a declining tendency even after 5000 epochs. However, the training curves of Adamax and Adam exhibit recurring phases of instability every \(\sim 500\) epochs. Non-convergence of these optimizers is documented in the literature [2], and our experiments confirm that it also extends to our optimization setting. Using the AMSGrad variant of Adam eliminates this behavior with comparable remaining loss levels. With these results in mind, we chose SGD with Nesterov momentum as the non-adaptive and AMSGrad as the adaptive optimizer for all further experiments, in order to work with optimizers from both paradigms.

The AMSGrad optimizer performs better on the \(\lambda = 1\) optimization target; however, SGD is competitive. The loss curves of these optimizer candidates, as well as the instabilities in the Adam loss curve, are shown in Fig. 2. An overview of all evaluated optimizers is given in our GitHub repository [5].

With our degree-based regularization approach introduced in Sect. 3.1, SGD with momentum converges more quickly and we are able to use Nesterov momentum, which was not possible otherwise. We achieved the best results with an SGD momentum of 0.95 and AMSGrad parameters \(\beta _1 = 0.9\), \(\beta _2 = 0.999\), and \(\epsilon = 10^{-7}\). On that basis, we investigated our general spline model by sweeping \(\lambda \) from 1 to 0. Experiments show that both optimizers are able to generate nearly \(\mathcal {C}^2\)-continuous results across the observed \(\lambda \)-range while at the same time delivering favorable approximation results. The discontinuities that remain for the algorithm introduced in Sect. 3.3 to correct are small.
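The two optimizer candidates can be instantiated with the Keras optimizer classes listed in [4]; AMSGrad is exposed as the `amsgrad` flag of `Adam`. This is a configuration sketch only: the momentum, \(\beta\), and \(\epsilon\) values are the ones reported above, while using the learning rate of 0.1 (found for a single polynomial) for both optimizers is our assumption.

```python
import tensorflow as tf

# Non-adaptive candidate: SGD with Nesterov momentum
sgd = tf.keras.optimizers.SGD(learning_rate=0.1, momentum=0.95, nesterov=True)

# Adaptive candidate: AMSGrad, i.e. Adam with the amsgrad flag set
amsgrad = tf.keras.optimizers.Adam(
    learning_rate=0.1, beta_1=0.9, beta_2=0.999, epsilon=1e-7, amsgrad=True
)
```

Either object can then be used in a custom gradient-tape loop via its `apply_gradients` method.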

Using \(\mathcal {C}^2\)-splines of degree 5, AMSGrad again performs better than SGD. For all tested \(0< \lambda < 1\), SGD and AMSGrad manage to produce splines of low loss within 10 000 epochs: SGD reaches \(\ell \approx 10^{-4}\) and AMSGrad reaches \(\ell \approx 10^{-6}\). Given an application-specific tolerance, we may already stop after a few hundred epochs.

5 Conclusion and Outlook

We have presented an “out-of-the-box” application of ML optimizers for the industrial optimization problem of cam approximation. The model introduced in Sect. 2.1 and extended by practical considerations in Sect. 3.2 allows for fitting \(\mathcal {C}^k\)-continuous splines, which can be deployed in a cam approximation setting. Our experiments documented in Sect. 4 show that the problem solution is feasible using TensorFlow gradient tapes and that AMSGrad and SGD show the best results among the available TensorFlow optimizers. Our gradient regularization approach introduced in Sect. 3.1 improves SGD convergence and allows the use of SGD with Nesterov momentum. Although experiments show that remaining discontinuities after optimization are small, we can eliminate these errors using the algorithm introduced in Sect. 3.3, which has impact only on the affected derivatives in the local spline segment.

Additional terms in \(\ell \) can accommodate further domain-specific goals. For instance, we can reduce oscillations in f by penalizing the strain energy \(\int _{I} f''(x)^2 \, \mathrm {d}x\).
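For a piecewise polynomial, the strain energy \(\int_I f''(x)^2\,\mathrm{d}x\) can be computed exactly, segment by segment, because each \(p_i''^2\) is again a polynomial. A short NumPy sketch (the function name is hypothetical; coefficients are monomial, lowest degree first, in global coordinates):

```python
import numpy as np
from numpy.polynomial import polynomial as P


def strain_energy(xi, alpha):
    """Sum over segments of the integral of p_i''(x)**2 over [xi_{i-1}, xi_i]."""
    total = 0.0
    for i, coeffs in enumerate(alpha):
        second = P.polyder(coeffs, 2)                          # p_i''
        antiderivative = P.polyint(P.polymul(second, second))  # integral of p_i''**2
        total += P.polyval(xi[i + 1], antiderivative) - P.polyval(xi[i], antiderivative)
    return total


# f(x) = x**3 on [0, 2], split into two segments: integral of (6x)**2 from 0 to 2
xi = np.array([0.0, 1.0, 2.0])
alpha = np.array([[0.0, 0.0, 0.0, 1.0]] * 2)
energy = strain_energy(xi, alpha)  # 96.0
```

Since the result is a quadratic form in the coefficients, such a term could be added to \(\ell\) with its own weight and differentiated like the other loss terms.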

In our experiments outlined in the previous section, we started with all polynomial coefficients initialized to zero to investigate convergence. To improve convergence speed in future experiments, we can start with the \(\ell _2\)-optimal spline and let our method minimize the overall goal \(\ell \).
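The text does not define the \(\ell_2\)-optimal spline further. One plausible reading, assumed here, is the minimizer of \(\ell_2\) alone (\(\lambda = 1\)), which decouples into an independent least-squares fit per segment. The sketch below computes such a warm start with `numpy.polynomial.polynomial.polyfit`; the helper name is hypothetical, and each segment needs at least d + 1 samples.

```python
import numpy as np
from numpy.polynomial import polynomial as P


def l2_warm_start(x, y, xi, d):
    """Independent least-squares fit of a degree-d polynomial per segment
    (coefficients lowest degree first); each segment needs >= d + 1 samples."""
    x, y = np.asarray(x, dtype=float), np.asarray(y, dtype=float)
    m = len(xi) - 1
    seg = np.clip(np.searchsorted(xi, x, side="right") - 1, 0, m - 1)
    alpha = np.zeros((m, d + 1))
    for i in range(m):
        mask = seg == i
        alpha[i] = P.polyfit(x[mask], y[mask], d)
    return alpha


# Samples of a discontinuous piecewise polynomial: x**2 on [0, 1), 3 - x on [1, 2]
x = np.linspace(0.0, 2.0, 41)
y = np.where(x < 1.0, x**2, 3.0 - x)
start = l2_warm_start(x, y, np.array([0.0, 1.0, 2.0]), d=2)  # approx [[0, 0, 1], [3, -1, 0]]
```

The resulting coefficients would then initialize the trainable \(\alpha_{i,j}\) instead of zeros, leaving the optimizer to trade approximation quality for continuity.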

The flexibility of our method regarding the underlying polynomial model allows the use of different function types. For example, an orthogonal basis of Chebyshev polynomials could improve convergence behavior compared to classical monomials.
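One way to see why a Chebyshev basis might help: plain gradient descent on a least-squares loss converges at a rate governed by the condition number of \(V^\top V\), where V holds the basis functions evaluated at the samples. The sketch compares monomials and Chebyshev polynomials of degree 5 on the same uniformly spaced samples in [-1, 1]. Chebyshev polynomials are orthogonal only with respect to the weight \(1/\sqrt{1-t^2}\), so on uniform samples they are merely close to orthogonal; the comparison is illustrative, not a result from the paper.

```python
import numpy as np
from numpy.polynomial import chebyshev as C

d = 5
t = np.linspace(-1.0, 1.0, 100)                # samples of one segment, mapped to [-1, 1]
V_mono = np.vander(t, d + 1, increasing=True)  # columns t**j
V_cheb = C.chebvander(t, d)                    # columns T_j(t)

# cond(V.T @ V) equals cond(V)**2, so a smaller cond(V) means faster plain GD
cond_mono = np.linalg.cond(V_mono)
cond_cheb = np.linalg.cond(V_cheb)
print(f"monomial: {cond_mono:.1f}, Chebyshev: {cond_cheb:.1f}")
```

Switching the basis only changes the matrix V, so the rest of the training pipeline, including the regularization and correction steps, would need the derivative formulas of the new basis but no other changes.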

Notes

1. By \(\mathcal {C}^k\)-cyclicity we mean that \(f^{(i)}(x_1) = f^{(i)}(x_n)\) for \(1 \le i \le k\). If this additionally holds for \(i=0\), then we have \(\mathcal {C}^k\)-periodicity.

2. In (4), change \(m - 1\) to m and generalize \(\delta _{i,j} = p^{(j)}_{1 + (i \bmod m)}(\xi _{i \bmod m}) - p^{(j)}_i(\xi _i)\). For cyclicity we ignore the case \(j=0\) when \(i=m\), but not for periodicity.

References

[1] Adcock, B., Dexter, N.: The gap between theory and practice in function approximation with deep neural networks. SIAM J. Math. Data Sci. 3(2), 624–655 (2021). https://doi.org/10.1137/20M131309X

[2] Reddi, S.J., Kale, S., Kumar, S.: On the convergence of Adam and beyond. CoRR abs/1904.09237 (2019). https://doi.org/10.48550/arXiv.1904.09237

[3] Sandgren, E., West, R.L.: Shape optimization of cam profiles using a B-spline representation. J. Mech. Trans. Autom. Design 111(2), 195–201 (1989). https://doi.org/10.1115/1.3258983

[4] TensorFlow: Built-in optimizer classes (2022). https://www.tensorflow.org/api_docs/python/tf/keras/optimizers. Accessed 28 Feb 2022

[5] Waclawek, H., Huber, S.: Spline approximation with TensorFlow gradient descent optimizers for use in cam approximation (2022). https://github.com/hawaclawek/tf-for-splineapprox. Accessed 31 May 2022


Copyright information

© 2022 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper

Huber, S., Waclawek, H. (2022). \(\mathcal {C}^k\)-Continuous Spline Approximation with TensorFlow Gradient Descent Optimizers. In: Moreno-Díaz, R., Pichler, F., Quesada-Arencibia, A. (eds) Computer Aided Systems Theory – EUROCAST 2022. EUROCAST 2022. Lecture Notes in Computer Science, vol 13789. Springer, Cham. https://doi.org/10.1007/978-3-031-25312-6_68


DOI: https://doi.org/10.1007/978-3-031-25312-6_68


Publisher Name: Springer, Cham

Print ISBN: 978-3-031-25311-9

Online ISBN: 978-3-031-25312-6

eBook Packages: Computer Science, Computer Science (R0)