Abstract

Intelligent optimization algorithms are increasingly applied to big data analytics in order to explore the potential value of big data more effectively. However, the data in this field are often high-dimensional, and the performance of an optimization algorithm degrades dramatically as the dimension increases. Yin-Yang-Pair Optimization (YYPO) is a lightweight single-objective optimization algorithm that is competitive with other algorithms while having significantly lower computational time complexity. Nevertheless, it tends to fall into local optima and lacks an elite mechanism, which results in unsatisfactory performance on high-dimensional problems. To improve the performance of YYPO on high-dimensional problems in big data analytics, this paper proposes an improved Yin-Yang-Pair Optimization based on an elite strategy and an adaptive mutation method, namely CM-YYPO. First, a crossover operator with an elite strategy is introduced, which records the individual best position found by point P1 as the elite. After the splitting stage, the elite is used to cross-perturb point P1 to improve the global search performance of YYPO. Subsequently, a mutation operator with an improved adaptive mutation method is applied to point P1 to improve the local search performance of YYPO. The proposed CM-YYPO is evaluated on the 28 test functions used in the Single-Objective Real-Parameter Algorithm competition of the Congress on Evolutionary Computation 2013. Its performance is compared with that of YYPO, YYPO-SA1, YYPO-SA2, A-YYPO, and four other well-performing single-objective optimization algorithms: Salp Swarm Algorithm, Sine Cosine Algorithm, Grey Wolf Optimizer, and Whale Optimization Algorithm. The experimental results show that the proposed CM-YYPO achieves more stable optimization capability and higher computational accuracy on high-dimensional problems, which makes it a promising approach for high-dimensional problems in big data analytics.

1 Introduction

In the era of big data, more and more information is being recorded, collected and stored. Through big data analytics, people can discover and summarize the laws hidden behind the data, which can improve the efficiency of the system, as well as predict and judge future trends [1]. To fully exploit the value of big data and solve a series of technical problems, a growing number of intelligent optimization algorithms are applied to this field [2,3,4].

Yin-Yang-Pair Optimization (YYPO), proposed by Punnathanam et al. [5] in 2016, is a lightweight single-objective optimization algorithm. Its advantages are that it has few setup parameters and low time complexity. However, the original YYPO has two disadvantages: (1) it tends to converge prematurely when solving complex optimization problems [6]; (2) the quality of candidate solutions during exploration is poor because the algorithm lacks elites [7].

Several improvements to the basic YYPO algorithm have been proposed. Punnathanam et al. [8] proposed Adaptive Yin-Yang-Pair Optimization (A-YYPO), which makes the probabilities of one-way splitting and D-way splitting in YYPO a function of the problem dimension. Xu et al. [6] introduced chaos search into YYPO to improve its global exploration ability and a backward learning strategy to improve its local exploitation ability. Li et al. [9] proposed Yin-Yang-Pair Optimization-Simulated Annealing (YYPO-SA), based on a simulated annealing strategy, which is further divided into YYPO-SA1 and YYPO-SA2 according to the switching strategy.

In big data analytics, the data are often high-dimensional [10], and the search space grows dramatically as the dimension increases. However, none of the improvements above addresses the lack of elites. Without information interaction with the global best solution, the search of YYPO becomes significantly less efficient. Therefore, this paper proposes an improved Yin-Yang-Pair Optimization algorithm based on an elite strategy and an adaptive mutation method (CM-YYPO).

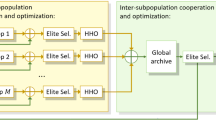

The proposed CM-YYPO integrates a crossover operator and a mutation operator into YYPO, as follows. (1) In the crossover stage, an elite strategy is adopted to enhance the global optimization ability of the algorithm. (2) In the mutation stage, an improved adaptive mutation probability is introduced to improve the ability of the algorithm to escape local optima. (3) Only D-way splitting is used, to improve the performance of YYPO on high-dimensional problems.

The rest of this paper is organized as follows. Section 2 describes the YYPO algorithm. Section 3 presents the CM-YYPO algorithm proposed in this paper. Section 4 analyzes CM-YYPO experimentally on benchmark test functions. Section 5 concludes the work.

2 Yin-Yang-Pair Optimization (YYPO)

The idea behind YYPO comes from Chinese philosophy: the algorithm uses two points (P1 and P2), representing Yin and Yang, to balance local exploitation and global exploration. The points P1 and P2 are the centers of the hyperspheres explored in the variable space, with radii δ1 and δ2, respectively. δ1 tends to decrease periodically, whereas δ2 tends to increase.

YYPO normalizes all decision variables (between 0 and 1) and scales them appropriately according to the variable boundaries when performing fitness evaluation. Imin, Imax, α are three user-defined parameters, where Imin and Imax are the minimum value and maximum value of the archive count I, and α is the scaling factor of the radius.
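The normalization described above can be illustrated with a minimal sketch. The function names `to_problem_space` and `evaluate`, the sphere objective, and the use of a single scalar bound for every variable are assumptions made for illustration; they are not taken from the paper.

```python
import numpy as np

def to_problem_space(x_norm, lower, upper):
    """Map a point from the normalized range [0, 1] to [lower, upper]."""
    x_norm = np.asarray(x_norm, dtype=float)
    return lower + x_norm * (upper - lower)

def evaluate(x_norm, objective, lower, upper):
    """Scale a normalized point to the variable bounds, then evaluate it."""
    return objective(to_problem_space(x_norm, lower, upper))

def sphere(x):
    """Illustrative objective only (assumption): sum of squares."""
    return float(np.sum(x ** 2))

# The search itself works on normalized points; scaling happens only here.
scaled = to_problem_space([0.0, 0.5, 1.0], -100.0, 100.0)
value = evaluate([0.5, 0.5], sphere, -100.0, 100.0)
```

Keeping the points normalized means the radii δ1 and δ2 have the same meaning for every decision variable, whatever its original bounds.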

2.1 Splitting Stage

Points P1 and P2 go through the splitting stage in turn, each together with its corresponding radius (δ1 or δ2). One of the following two splitting methods is selected with equal probability.

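One-way splitting:

$$S_j^j = P^j + r \times \delta, \qquad S_{D+j}^j = P^j - r \times \delta, \qquad j = 1, 2, \ldots, D \tag{1}$$

D-way splitting:

$$S_k^j = \begin{cases} P^j + r \times (\delta/\sqrt{2}), & \text{if } B_k^j = 1 \\ P^j - r \times (\delta/\sqrt{2}), & \text{otherwise} \end{cases} \qquad k = 1, 2, \ldots, 2D, \quad j = 1, 2, \ldots, D \tag{2}$$

In Formulas (1) and (2), S is a 2D × D matrix consisting of 2D identical copies of the point P, whose j-th decision variable is written P^j; B is a matrix of 2D random binary strings of length D (each binary string in B is unique); the subscript k denotes the point number and the superscript j denotes the number of the decision variable that is modified; δ is the search radius of the point being split; r is a random number between 0 and 1.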

In both splitting methods, variables that leave the bounds are replaced by random values in the interval [0, 1]. The 2D newly generated points are then evaluated separately, and the point that underwent splitting is replaced by the one with the best fitness.
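The splitting stage can be sketched as follows. This is a minimal illustration rather than the authors' implementation: the step sizes (r × δ for one-way splitting and r × δ/√2 for D-way splitting) follow the rules of the original YYPO paper [5] and are assumed here, a fresh r is drawn for every modified variable, lower fitness is taken to be better, and the names `split` and `unique_binary_rows` are hypothetical.

```python
import numpy as np

def unique_binary_rows(n_rows, d, rng):
    """Draw n_rows distinct random binary strings of length d."""
    seen, rows = set(), []
    while len(rows) < n_rows:
        row = rng.integers(0, 2, size=d)
        key = row.tobytes()
        if key not in seen:          # keep only strings not drawn before
            seen.add(key)
            rows.append(row)
    return np.array(rows)

def split(point, delta, fitness, rng, d_way=True):
    """Generate 2D candidates around `point` and return the fittest one."""
    d = point.size
    s = np.tile(point, (2 * d, 1))   # 2D identical copies, shape (2D, D)
    if d_way:
        # Every variable of every copy moves; the binary matrix picks the sign.
        b = unique_binary_rows(2 * d, d, rng)
        step = rng.random((2 * d, d)) * delta / np.sqrt(2.0)
        s = np.where(b == 1, s + step, s - step)
    else:
        # Copy j moves variable j up; copy D + j moves variable j down.
        j = np.arange(d)
        s[j, j] += rng.random(d) * delta
        s[d + j, j] -= rng.random(d) * delta
    # Out-of-bounds variables are replaced by random values in [0, 1].
    out = (s < 0.0) | (s > 1.0)
    s[out] = rng.random(np.count_nonzero(out))
    f = np.array([fitness(x) for x in s])
    best = int(np.argmin(f))         # minimization is assumed
    return s[best].copy(), float(f[best])
```

Note that the returned point replaces the split point even when it is worse than the original; in this sketch, as in the description above, only the 2D new points compete.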

2.2 Archive Stage

The archive stage begins after the required number of archive updates has been completed. At this point, the archive contains 2I points, namely the two points (P1 and P2) added in each update before the splitting stage. If the best point in the archive is fitter than point P1, it is swapped with P1. After that, if the best point in the archive is fitter than point P2, P2 is replaced by it. Next, the search radii (δ1 and δ2) are updated using the following equations.

$$\delta_1 = \delta_1 - \frac{\delta_1}{\alpha}, \qquad \delta_2 = \delta_2 + \frac{\delta_2}{\alpha} \tag{3}$$

At the end of the archive stage, the archive is cleared, a new value of I for the number of archive updates is randomly generated in the specified range [Imin, Imax], and the archive counter is set to 0.
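The archive stage can be sketched as below. This is an illustrative sketch under stated assumptions: the radius update δ1 ← δ1 − δ1/α and δ2 ← δ2 + δ2/α is the rule of the original YYPO paper [5], lower fitness is treated as better, the archive is represented as a list of (point, fitness) pairs, and the function name `archive_stage` is hypothetical.

```python
import numpy as np

def archive_stage(p1, f1, p2, f2, archive, delta1, delta2,
                  alpha, i_min, i_max, rng):
    """Run one archive stage; `archive` is a list of (point, fitness) pairs."""
    # Swap P1 with the best archived point if that point is fitter.
    best = min(range(len(archive)), key=lambda i: archive[i][1])
    if archive[best][1] < f1:
        old_p1 = (p1, f1)
        p1, f1 = archive[best]
        archive[best] = old_p1
    # Replace P2 by the best point now in the archive if it is fitter.
    best = min(range(len(archive)), key=lambda i: archive[i][1])
    if archive[best][1] < f2:
        p2, f2 = archive[best]
    # Radius update (assumed rule): delta1 shrinks, delta2 grows.
    delta1 = delta1 - delta1 / alpha
    delta2 = delta2 + delta2 / alpha
    # Clear the archive, draw a new I in [i_min, i_max], reset the counter.
    archive.clear()
    new_i = int(rng.integers(i_min, i_max + 1))
    counter = 0
    return p1, f1, p2, f2, delta1, delta2, new_i, counter
```

Because P1 is swapped rather than overwritten, the old P1 stays in the archive and can still become the new P2 in the second step.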

3 Improved Yin-Yang Pair Optimization (CM-YYPO)

3.1 Crossover Operator with Elite Strategy

The splitting method of YYPO determines the quality of new solutions generated by the algorithm. Before entering the archive stage, point P1 is restricted only by radius and lacks information interaction with the global optimal solution. When solving high-dimensional problems, the optimization effect is not satisfactory due to the lack of elites.

Therefore, in this paper, the individual best position of P1 is taken as the elite and recorded as Pbest. Pbest is initialized to the better of P1 and P2. In the early iterations, points P1 and P2 are exchanged frequently; although P2 is sometimes better than P1, the exchanged points are very likely to be only locally optimal. For this reason, Pbest is updated only in the crossover and mutation stages, which delays the tendency to fall into local optima.

During each iteration, the point P1 is cross-perturbed by Pbest in an attempt to produce a higher-quality solution. There are various crossover methods, of which uniform arithmetic crossover is given by:

$$Y = \lambda Y_a + (1 - \lambda) Y_b \tag{4}$$

In Formula (4), Ya and Yb represent the two parent chromosomes; Y is the offspring chromosome generated by crossover; λ is a constant belonging to [0, 1].
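As a small worked example, the standard arithmetic crossover Y = λ·Ya + (1 − λ)·Yb can be written as follows. The function name `arithmetic_crossover`, the parent values, and the choice of which parent is weighted by λ are assumptions for illustration; λ = 0.4 is the value used later in the experiments.

```python
import numpy as np

def arithmetic_crossover(y_a, y_b, lam):
    """Blend two parents component-wise with a constant weight lam."""
    y_a = np.asarray(y_a, dtype=float)
    y_b = np.asarray(y_b, dtype=float)
    return lam * y_a + (1.0 - lam) * y_b

# Illustrative parents (assumption), both inside the normalized range.
parent_a = [0.2, 0.8]
parent_b = [0.6, 0.4]
child = arithmetic_crossover(parent_a, parent_b, lam=0.4)
# 0.4 * 0.2 + 0.6 * 0.6 = 0.44 and 0.4 * 0.8 + 0.6 * 0.4 = 0.56,
# so the child is approximately [0.44, 0.56].
```

For any λ in [0, 1], every component of the offspring lies between the corresponding components of the two parents.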

This crossover method confines the offspring to the region between the two parents, which limits the search range and prevents the offspring from exploring beyond the parents. To enable the offspring to jump out of the solution region enclosed by Pbest and P1, an extended arithmetic crossover method [11] is used in this paper to expand the range of λ. At the same time, the influence of the fitness value is added to the crossover stage to improve the chance of generating high-quality offspring. In this work, the crossover operation is performed according to the following equation to produce the offspring P1*.

In Formula (5), fP1 and fPbest are the fitness values of point P1 and point Pbest, respectively. F(fP1, fPbest) is calculated according to Formula (6).

At the end of the crossover stage, the parents are updated in turn according to the fitness value of the offspring. For example, if the point P1* is fitter than P1, P1 is replaced with P1*; otherwise P1 is not updated. In addition, a counter n records the number of times Pbest has not been updated: if Pbest is updated, n is reset to 0; otherwise, n = n + 1.
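The bookkeeping at the end of the crossover stage can be sketched as below. It assumes that lower fitness is better and that Pbest is replaced by the offspring under the same "fitter than" rule that the text gives for P1; the function name `update_after_crossover` is hypothetical.

```python
def update_after_crossover(child, f_child, p1, f_p1, pbest, f_pbest, n):
    """Update P1, Pbest and the stagnation counter n after crossover."""
    # P1 is replaced only if the offspring is fitter (minimization assumed).
    if f_child < f_p1:
        p1, f_p1 = child, f_child
    # Pbest follows the same rule (assumption); n counts missed updates.
    if f_child < f_pbest:
        pbest, f_pbest = child, f_child
        n = 0
    else:
        n += 1
    return p1, f_p1, pbest, f_pbest, n
```

An offspring can therefore improve P1 without improving Pbest, in which case n still increases.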

3.2 Mutation Operator with Adaptive Mutation Probability

If Pbest is updated in the crossover stage, the algorithm proceeds directly to the next phase. Otherwise, the point P1 mutates with a certain probability. In this paper, the adaptive mutation probability formula shown below is used.

In Formula (7), c is the control parameter, which determines the range of the mutation probability; t is the current iteration number; n is the number of times Pbest has not been updated. As Formula (7) indicates, the mutation probability pm increases as n increases, because the longer Pbest goes without being updated, the more likely it is that the algorithm has fallen into a local optimum. Further, pm gradually decreases as t increases, to ensure the convergence of the algorithm.

When the mutation probability is satisfied, the point P1 is mutated according to the following equation.

In Formula (8), P1** denotes the point after mutation and j denotes the number of the decision variable that is modified.

P1 and Pbest are then updated in the same way as in the crossover stage, with one difference: if Pbest is not updated, n remains unchanged; otherwise, n is set to 0.
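The control flow of the mutation stage can be sketched as follows. The sketch deliberately leaves out Formulas (7) and (8): the mutation probability `pm` is passed in by the caller, and `mutate` is a caller-supplied callable standing in for the mutation equation. Both, together with the function name `mutation_stage` and the assumption that lower fitness is better, are illustrative and not taken from the paper.

```python
import numpy as np

def mutation_stage(p1, f_p1, pbest, f_pbest, n, pbest_updated,
                   pm, mutate, fitness, rng):
    """Mutate P1 with probability pm unless crossover already updated Pbest."""
    # Skip mutation if Pbest was updated in the crossover stage,
    # or if the random draw does not satisfy the mutation probability.
    if pbest_updated or rng.random() >= pm:
        return p1, f_p1, pbest, f_pbest, n
    candidate = mutate(p1, rng)      # stand-in for the mutation equation
    f_candidate = fitness(candidate)
    if f_candidate < f_p1:           # minimization is assumed
        p1, f_p1 = candidate, f_candidate
    if f_candidate < f_pbest:
        pbest, f_pbest = candidate, f_candidate
        n = 0                        # reset only when Pbest improves
    # Unlike the crossover stage, n is left unchanged on a miss.
    return p1, f_p1, pbest, f_pbest, n
```

Leaving n unchanged on a failed mutation means each iteration adds at most one to the counter, through the crossover stage.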

3.3 Splitting Method

In YYPO, one-way splitting and D-way splitting are each selected with a probability of 0.5. However, multidirectional search has been found to be more effective than one-way search on high-dimensional problems [8]. Therefore, only D-way splitting is used in this paper.

3.4 Computational Complexity

In this section, big-O notation is used to analyze the computational complexity of a single iteration. CM-YYPO performs the crossover stage in every iteration, whereas mutation occurs only when the mutation probability is satisfied. The time complexity is therefore analyzed for the worst case, in which both crossover and mutation are performed in each iteration.

Crossover and mutation share four operations: (a) compute a new point; (b) scale the new point for fitness evaluation; (c) update P1; (d) update Pbest. In addition, the mutation probability must be calculated at the beginning of the mutation stage. The computational complexities are as follows.

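Since the computational complexity of the original YYPO is O(D²), and the added crossover and mutation operations act on a single D-dimensional point and therefore cost at most O(D), the computational complexity of CM-YYPO is as follows.

$$O(D^2) + O(D) = O(D^2)$$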

Thus, CM-YYPO is a polynomial-time algorithm with the same time complexity as YYPO for a single iteration. Hence, the proposed CM-YYPO remains a lightweight optimization algorithm.

4 Validation and Discussion

4.1 Experimental Results

To test the performance of CM-YYPO, the 28 test functions used in the single-objective real-parameter algorithm competition of the IEEE Congress on Evolutionary Computation (CEC) 2013 [12] are adopted in this paper. They comprise 5 unimodal functions (f1-f5), 15 basic multimodal functions (f6-f20) and 8 composition functions (f21-f28). The search range of every test function is uniformly set to [-100, 100].

In the experiments, CM-YYPO is compared with YYPO, YYPO-SA1, YYPO-SA2, A-YYPO and four representative single-objective optimization algorithms: Salp Swarm Algorithm (SSA), Sine Cosine Algorithm (SCA), Grey Wolf Optimizer (GWO), and Whale Optimization Algorithm (WOA). The three user-defined parameters (Imin, Imax, α) are set according to [5]. The parameters of CM-YYPO were not rigorously tuned and were set intuitively to λ = 0.4 and c = 0.01. The parameters of the simulated annealing mechanism in YYPO-SA1 and YYPO-SA2 are set according to [9]. SSA, SCA, GWO and WOA all use the parameter values from their original papers, with the population size uniformly set to 100.

For fairness, all algorithms are run independently 51 times. The number of iterations for YYPO and its improved variants is set to 2500. The number of iterations (iter) for the remaining algorithms depends on the dimension (D) and the population size (pop):

4.2 Performance Analysis of CM-YYPO

Tables 1–3 give the test results of all algorithms for 28 test functions in 10, 30, and 50 dimensions, respectively. The terms “mean” and “std. Dev.” are used to refer to the mean and standard deviation of the error obtained over the 51 runs. A smaller mean value indicates a better average performance of the algorithm, and a smaller standard deviation means that the algorithm is more stable.
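The two statistics reported in the tables can be computed as in the sketch below. The function name `summarize_errors` and the sample values are illustrative assumptions, and the population standard deviation (`ddof=0`) is an assumed choice, since the text does not say which estimator was used.

```python
import numpy as np

def summarize_errors(best_values, f_optimum):
    """Return the mean and standard deviation of the error over all runs."""
    # Error of a run = best objective value found minus the known optimum.
    errors = np.asarray(best_values, dtype=float) - f_optimum
    # ddof=0 (population standard deviation) is an assumption.
    return float(errors.mean()), float(errors.std(ddof=0))

# Illustrative values only (assumption): three runs on a function
# whose known optimum is taken to be -1400.
mean_err, std_err = summarize_errors([-1399.0, -1397.0, -1398.0], -1400.0)
```

With real results, `best_values` would hold the 51 final objective values of one algorithm on one test function.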

As Table 1 shows, CM-YYPO ranked first eleven times on the 10-dimensional test functions: on two unimodal functions, seven multimodal functions, and two composition functions. Its performance on the multimodal functions is significantly better than that of the other algorithms, while on the composition functions it is slightly worse than YYPO. CM-YYPO ranked first most often; YYPO-SA1 was next, but ranked first only five times. This shows that the improvement strategy proposed in this work greatly improves performance on low-dimensional problems.

As Table 2 shows, CM-YYPO ranked first seventeen times on the 30-dimensional test functions: on three unimodal functions, eight multimodal functions, and six composition functions. It has the best optimization performance on all three types of test functions, and its ability to solve the composition functions in particular is significantly improved. This shows that CM-YYPO has a clear advantage in solving high-dimensional problems.

As Table 3 shows, CM-YYPO ranked first seventeen times on the 50-dimensional test functions: on four unimodal functions, eight multimodal functions, and five composition functions. As the dimensionality increases further, CM-YYPO still maintains a better search capability than the other algorithms.

The crossover stage of CM-YYPO leads to unsatisfactory performance on f5 and f11. However, the solving ability on f5 improves significantly at dimension 50. It can be concluded that CM-YYPO performs stably and well on high-dimensional problems.

To verify the convergence performance of CM-YYPO, eleven representative test functions are selected. Figure 1 shows the convergence curves of all algorithms in 50 dimensions. The horizontal axis indicates the percentage of the maximum number of iterations, and the vertical axis indicates the average fitness value at the current iteration.

Fig. 1 shows that CM-YYPO converges faster on the unimodal functions (f2, f3 and f4), and that on the multimodal functions (f7, f9, f12, f18 and f20) its tendency to fall into local optima is slowed down. For the more difficult composition functions (f23, f25 and f27), the convergence speed and solution accuracy of CM-YYPO are also better than those of the other algorithms.

In summary, the perturbation by elites effectively improves the quality of the candidate solutions during the search process, and the mutation operation on the candidate solutions increases the probability of escaping local optima.

5 Conclusions

In this paper, crossover and mutation operators are added to the search mechanism of YYPO for high-dimensional optimization problems, and the splitting method is also modified. According to the comparison results on the test functions, the proposed CM-YYPO not only overcomes the drawback of premature convergence but also greatly improves the solving ability in each dimension. In solving high-dimensional problems in particular, its search performance is clearly better than that of the other algorithms. However, optimization problems in big data analytics involve more than high dimensionality; they often have multiple optimization objectives. How to extend CM-YYPO effectively to multi-objective optimization problems remains worth exploring.

References

1. Li, G., Cheng, X.: Research status and scientific thinking of big data. Bulletin of Chinese Academy Sci. 6, 647–657 (2012)

2. Bacardit, J., Llorà, X.: Large-scale data mining using genetics-based machine learning. Wiley Interdisciplinary Reviews: Data Mining Knowledge Discovery 3(1), 37–61 (2013)

3. Chen, Y., Miao, D., Wang, R.: A rough set approach to feature selection based on ant colony optimization. Pattern Recogn. Lett. 31(3), 226–233 (2010)

4. Samariya, D., Ma, J.: A new dimensionality-unbiased score for efficient and effective outlying aspect mining. Data Science Eng. 7(2), 120–135 (2022)

5. Punnathanam, V., Kotecha, P.: Yin-Yang-pair optimization: a novel lightweight optimization algorithm. Eng. Appl. Artif. Intell. 54, 62–79 (2016)

6. Xu, Q., Ma, L., Liu, Y.: Yin-Yang-pair optimization algorithm based on chaos search and intricate operator. J. Computer Appl. 40(08), 2305–2312 (2020)

7. Wang, W., et al.: An orthogonal opposition-based-learning Yin–Yang-pair optimization algorithm for engineering optimization. Engineering with Computers (4), (2021)

8. Punnathanam, V., Kotecha, P.: Adaptive Yin-Yang-Pair Optimization on CEC 2016 functions. In: 2016 IEEE Region 10 Conference (TENCON), pp. 2296–2299 (2016)

9. Li, D., Liu, Q., Ai, Z.: YYPO-SA: novel hybrid single-object optimization algorithm based on Yin-Yang-pair optimization and simulated annealing. Application Research of Computers 38(07), 2018–2024 (2021)

10. Guo, P., et al.: Computational intelligence for big data analysis: current status and future prospect. Journal of Software 26(11), 3010–3025 (2015)

11. Chen, X., Yu, S.: Improvement on crossover strategy of real-valued genetic algorithm. Acta Electron. Sin. 31(1), 71–74 (2003)

12. Liang, J., et al.: Problem Definitions and Evaluation Criteria for the CEC 2013 Special Session on Real-Parameter Optimization, pp. 281–295 (2013)

Acknowledgments

This work has been supported by the National Natural Science Foundation of China (No. 61602162).

Copyright information

© 2023 The Author(s), under exclusive license to Springer Nature Switzerland AG

Cite this paper

Xu, H., Ding, M., Lu, Y., Ye, Z. (2023). An Improved Yin-Yang-Pair Optimization Algorithm Based on Elite Strategy and Adaptive Mutation Method for Big Data Analytics. In: Li, B., Yue, L., Tao, C., Han, X., Calvanese, D., Amagasa, T. (eds) Web and Big Data. APWeb-WAIM 2022. Lecture Notes in Computer Science, vol 13421. Springer, Cham. https://doi.org/10.1007/978-3-031-25158-0_1

DOI: https://doi.org/10.1007/978-3-031-25158-0_1

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-25157-3

Online ISBN: 978-3-031-25158-0

eBook Packages: Computer Science, Computer Science (R0)