Abstract

Usually when noise effects are considered with respect to well-being and health, A-weighted sound pressure level indicators are analyzed. However, several decades ago researchers started to use measurement methods to quantify auditory sensations in more detail. Later the soundscape pioneer Murray Schafer described acoustics and psychoacoustics as the cornerstones to understanding the physical properties of sound and the way sound is perceived. This approach emphasized that all aspects of soundscape are related to perception. Psychoacoustic data are considered for a more comprehensive evaluation of acoustic environments that goes beyond the simplified use of sound level indicators. Moreover, a key consideration is that acoustic environments are perceived binaurally by humans. Thus, measurement equipment that collects spatial information about the acoustic environments is increasingly being applied in soundscape investigations and consequently is suggested in soundscape standards. Following the soundscape concept, all measurements and analyses must reflect the way soundscape is perceived by people in the appropriate context. This insight led to an increase in research and applications of psychoacoustic measurements to understand the effects of acoustic environments on humans in more detail. Although the general value of psychoacoustics is broadly acknowledged in soundscape research, several research questions remain that must be addressed to fully understand the relevance of psychoacoustic properties in different environments and contexts.

Keywords

- Acoustic environments

- Artificial head

- Auditory perception

- Binaural hearing

- Environmental noise

- Masking

- Psychoacoustics

- Psychoacoustic parameters

- Soundscape assessment

6.1 Introduction

More than 40 years ago, Schultz (1978) published a dose-effect relationship that could be used for predicting community annoyance due to transportation noise of all kinds. His predictor of community reactions offered the prospect of a technical rationale for environmental noise regulations and, due to his influence, non-acoustic factors became equally important in determining a community’s reaction to noise (Fidell 2003). Though the U.S. Federal Interagency Committee on Noise (FICON), the Environmental Protection Agency (EPA), and other groups had set up research projects to study annoyance caused by environmental noise, there were still no explanations for large differences in the relative rates of annoyance recorded for different communities with the same noise levels. Thus, accurate predictors of potential annoyance remained elusive.

Since 2002, Directive 2002/49/EC relating to the assessment and management of environmental noise (the Environmental Noise Directive: END) has been the main instrument used in the European Union to identify critical noise pollution levels and to trigger necessary actions both at the Member State level and at a European-wide level. The action areas on which END focuses include: (1) the determination of exposure to environmental noise, (2) ensuring that information on environmental noise and its effects is made available to the public for preventing and reducing environmental noise where necessary, and (3) the preservation of environmental sound quality where it is good. Since environmental noise can be persistent and inescapable, a significant proportion of the population suffers from long-term exposure. Thus, the quantification of the related burden of diseases from environmental noise, including reduction in the number of years of healthy living, is an emerging challenge for policy makers (WHO 2014).

Environmental noise investigations based mostly on measurements and calculations of A-weighted sound pressure level did not allow accurate predictions of human responses to the noise. In most cases the A-weighted equivalent continuous sound level (LAeq) or the day-evening-night average sound level (LDEN), with level adjustments for evening (+5 dB) and night (+10 dB), is considered more appropriate in the context of environmental noise exposure (e.g., ISO 1996-1 2016). However, with those approaches, the description, classification, and assessment of environmental noise are based on a single parameter related to one property of sound, its level; the psychoacoustic characteristics of the soundscape and the auditory sensations it elicits are completely neglected. Therefore, the need for measurements mimicking the way humans perceive sounds was increasingly acknowledged and the inclusion of more signal parameters related to psychoacoustic properties of sounds was considered. The insight that measurements and assessments must be guided by the way humans typically perceive the soundscape under scrutiny promoted the increasing use of binaural measurement systems and psychoacoustic analyses in soundscape research (Engel et al. 2021).
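The two level indicators named above can be sketched in a few lines. The following is a minimal illustration, assuming equally long measurement intervals for the equivalent level and the default 12 h day, 4 h evening, and 8 h night periods of the END for LDEN; the function names `leq` and `lden` are chosen here for illustration only.

```python
import math

def leq(levels_db):
    """Energy-equivalent level (dB) of equally long intervals.

    The levels are converted to relative energies, averaged, and
    converted back to dB. Assumes every interval has the same duration.
    """
    mean_energy = sum(10 ** (level / 10) for level in levels_db) / len(levels_db)
    return 10 * math.log10(mean_energy)

def lden(l_day, l_evening, l_night, hours=(12, 4, 8)):
    """Day-evening-night level with +5 dB (evening) and +10 dB (night) adjustments.

    `hours` holds the assumed durations of the day, evening, and night periods.
    """
    h_day, h_evening, h_night = hours
    energy = (h_day * 10 ** (l_day / 10)
              + h_evening * 10 ** ((l_evening + 5) / 10)
              + h_night * 10 ** ((l_night + 10) / 10))
    return 10 * math.log10(energy / (h_day + h_evening + h_night))

# Example: a quiet night still dominates once the +10 dB adjustment is applied.
example_lden = lden(l_day=60.0, l_evening=55.0, l_night=50.0)
```

Both functions reduce an entire exposure history to one number, which is exactly the simplification the paragraph above criticizes: no information about spectral content, temporal structure, or direction survives the averaging.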

To understand the complexity of human responses to environmental noise, the transformation of the sound event (the physical event) into a hearing event (the perceptual event) must be considered. There are several different aspects influencing this transformation. First, the physical aspect of the sound event includes the change in the sound from a sound source to the human ears depending on the direction of incidence. Second, the psychoacoustical aspect, how the inner ear processes sound, is dependent on the time structure and frequency distribution. The third aspect, the psychological cognitive aspect, involves the context, kinds of information, individual expectations, and the attitude of the listener toward the sound that influence how the sound event is classified and interpreted (Genuit 2002). Thus, the accurate description of environmental noise requires not only the utilization of measurements capable of replicating the physical mechanisms involved in human hearing but also the application of extensive knowledge about binaural signal processing, psychoacoustics, and the cognitive aspects of human sound perception.

6.2 Listening to Acoustic Environments

The acoustic environment is a distinct component of the sensory experience of humans. “A Soundscape consists of events heard not objects seen” (Schafer 1994).

6.2.1 Spatial Hearing

One of the most relevant aspects of the human auditory system is its ability to process the differences in information provided by the left and right ears. This binaural signal processing is essential for spatial hearing, which can provide source direction and distance, and is advantageous for pattern recognition and localization of different sources (Blauert 1996). Sound source localization is possible both horizontally and vertically, although the mechanisms involved vary along the corresponding planes (Shaw 1997; Fay and Popper 2005). In the horizontal plane, localization is based on the evaluation of interaural differences (i.e., the differences in level and phase between the signals reaching the left and right ears). Sound waves originating from sources located outside of the median plane (having a lateral offset) travel paths of different lengths toward the left and right ears of the listener, which results in different times of arrival (i.e., delayed on one side). The human brain can interpret these delays (less than one millisecond) as directional information. These differences in arrival time, typically denoted as interaural time differences (ITDs), reach their maximum when the sound source is located directly to the left or right of the listener. The ITD cues are considered most important for horizontal sound source localization and work especially well at low frequencies, where the auditory system is able to detect pure phase differences between both ear signals. In addition to ITDs, acoustic shadowing caused by the head, shoulders, and torso of the listener introduces interaural level differences (ILDs) for signal components with small wavelengths (i.e., at higher frequencies). The combination of ITDs and ILDs is used by the human auditory system for horizontal sound source localization.
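The order of magnitude of the ITD can be checked with a simple geometric model. The sketch below uses the classical rigid-sphere (Woodworth) approximation, which is not taken from this chapter; the head radius of 8.75 cm and the speed of sound of 343 m/s are assumed typical values, and the formula is only meant for azimuths between 0° and 90°.

```python
import math

def itd_woodworth(azimuth_deg, head_radius_m=0.0875, speed_of_sound=343.0):
    """Approximate interaural time difference (s) for a distant source.

    Rigid-sphere approximation: ITD = (r / c) * (theta + sin(theta)),
    with theta the azimuth in radians (0 = front, 90 = fully lateral).
    Head radius and speed of sound are assumed illustrative values.
    """
    theta = math.radians(azimuth_deg)
    return head_radius_m / speed_of_sound * (theta + math.sin(theta))

# ITD in microseconds for a few azimuths between front and side.
itd_us = {az: round(itd_woodworth(az) * 1e6) for az in (0, 30, 60, 90)}
```

Under these assumptions the ITD is zero for frontal incidence and grows to roughly two thirds of a millisecond for a fully lateral source, consistent with the statement that the delays stay below one millisecond.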

Sounds from sources located along the median plane of the human head will provide equal stimulation to the left and right ears (Blauert 1974). Nonetheless, humans are capable of localizing sound sources in the median plane, for example, distinguishing the sound source location in front from behind by moving the head to cause left and right differences. In addition, the frequency spectrum of the received sound varies with the direction of the sound source due to the direction-dependent filtering of sound caused by human anatomy: the shape of the auricles, head, shoulders, and upper body of the listener. These spectral differences can be interpreted by the brain as directional information because certain distortion patterns are associated with specific directions (Blauert 1996).

These direction-dependent modifications imposed on the received sound are often summarized as a pair of filters for each horizontal and vertical direction of incidence known as the head-related transfer function (HRTF). Unlike conventional sound measurement systems comprised of a microphone with an omnidirectional, flat frequency response, the sound pressure level (SPL) measured at the eardrums shows frequency-dependent variations between +15 and −30 dB related to different directions of sound incidence. These spectral modifications are introduced through interactions of the sound field with the anatomy of the listener and can be categorized as direction-dependent modifications, such as diffractions, reflections, and shadowing, as well as direction-independent alterations observed in the form of resonances. The spectral pattern induced by this directional filtering is illustrated in Fig. 6.1.

Spectral pattern induced by the filtering properties of the human anatomy on sound arriving at the left ear. Frequency-dependent variations between –30 and +15 dB can be observed for different directions of sound incidence. The sound source was in the horizontal plane passing through the ears, and at constant radius from the centre-point between the ears. It circled counterclockwise as seen from above looking down, starting from the center-front direction at 0 degrees and continuing full circle back to 0 degrees. The vertical stripes in the illustration are instrumentation artifacts due to the use of fixed source positions with a resolution of 10 degrees. (Adapted from Genuit and Sottek 2010)
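Treating the HRTF as a pair of filters means that a binaural signal can be sketched as one source signal convolved with a left-ear and a right-ear impulse response. The example below is a toy illustration only: the two impulse responses are hypothetical placeholders (a delayed, slightly smoothed right-ear response for a source on the left), not measured HRTF data, and `render_binaural` is a name chosen here.

```python
import numpy as np

def render_binaural(mono, hrir_left, hrir_right):
    """Filter a mono signal with a pair of head-related impulse responses.

    Returns an array of shape (2, n) holding the left and right ear signals.
    Both impulse responses must have the same length.
    """
    left = np.convolve(mono, hrir_left)
    right = np.convolve(mono, hrir_right)
    return np.stack([left, right])

# Hypothetical toy impulse responses for a source on the left side:
# the left ear receives the sound directly, the right ear receives it
# 30 samples later and low-pass smoothed (a crude stand-in for head shadow).
hrir_left = np.zeros(64)
hrir_left[0] = 1.0
hrir_right = np.zeros(64)
hrir_right[30] = 0.3
hrir_right[31] = 0.3

click = np.zeros(128)
click[0] = 1.0
ears = render_binaural(click, hrir_left, hrir_right)
```

Measured HRTFs differ for every direction of incidence and contain the detailed spectral patterns shown in Fig. 6.1; the toy pair above only reproduces the basic idea of an interaural delay combined with a frequency-dependent level difference.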

One of the major advantages of binaural signal processing is illustrated in Figs. 6.2 and 6.3. In this example, two spatially distributed loudspeakers (−60° vs. +60° from the center) emit different signals: white noise (left loudspeaker) and diesel engine noise (right loudspeaker). Two different “receivers” were used. An omnidirectional microphone was placed at the same position (i.e., distance and height) from the sound sources as the paired microphones of an artificial head. With the omnidirectional microphone, the diesel engine noise from the right loudspeaker was masked by the white noise masker playing on the left side (Fig. 6.2). In contrast to this, the artificial head experiment shows that due to the filtering properties of human hearing, the diesel engine noise becomes identifiable in the spectrum of the right ear signal (Fig. 6.3). This means a human being could hear and distinguish the sound from the diesel engine.

Sound reception by an omnidirectional microphone for two different sources at two different locations. The panels depict the spectrograms of white noise (left) and diesel engine noise (middle) placed at different positions (−60° and +60°, respectively) in azimuth. The panel on the right shows the spectrogram from the omnidirectional microphone recording. The dB scale along the bottom of each panel is color-coded with blue representing the lowest level (SPL), pink-red intermediate levels, and yellow the highest levels (up to 60 dB) at the frequencies shown on the vertical scales

Example of the unmasking of spatially distributed sound sources due to the directional filtering properties of human hearing. Same sound presentation as shown in Fig. 6.2 but received by an artificial head. The figures show the spectrograms from the received sounds at the left (left panel) and right (right panel) ears. Within the right ear the diesel engine noise is clearly visible. Sound pressure level (dB SPL) and frequency scales are identical to Fig. 6.2

For the binaural condition, the diesel engine noise is effectively released from masking by white noise from the left (cf. Flanagan and Watson 1966). Comparing the panels in Figs. 6.2 and 6.3 reveals that pre-, post-, and simultaneous masking properties will be different for the binaural condition when the masker and the masked signal have different directions of incidence. The human auditory system is able to detect single sound sources in a complex soundscape with several sound sources at different locations. It is therefore possible that a sound source with a lower level but with a specific, discernible pattern in the time and/or frequency domain could contribute significantly to the overall perceived annoyance.

Humans exploit the capabilities offered by binaural signal processing to enhance speech intelligibility in noisy environments (vom Hövel 1984). In the case of complex auditory environments comprised of several spatially distributed sound sources, further advantages are given by the capacity to direct auditory attention toward individual sound sources. In a noisy environment, speech intelligibility can vary by 12 dB depending on sound incidence, meaning that the level of a sound source could be decreased by up to 12 dB for specific source locations without any influence on the detectability. This specific capability of human hearing was observed and described long ago by Cherry (1953) but was considered only later in soundscape research.

Another relevant feature of the human auditory system is its high simultaneous resolution in the frequency and time domains, which is complemented by a high dynamic range comprising more than 120 dB. For ordinary signal analyzers using Fourier analysis (e.g., FFT), the product of spectral resolution and temporal resolution equals 1 or higher; increasing the resolution in one domain leads to a decreased resolution in the other domain. Psychoacoustic investigations have shown that the product of temporal and spectral resolutions amounts to 0.3 for the human auditory system, meaning that humans use a high-frequency resolution and, at the same time, can perceive fast temporal variations such as short amplitude or frequency changes (Genuit 1992a).
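The trade-off for block-based Fourier analysis follows directly from the block length. As a minimal sketch, assuming an unweighted block of N samples at sampling rate fs, the temporal resolution is taken as the block duration N/fs and the spectral resolution as the bin spacing fs/N; the function name is chosen here for illustration.

```python
def fft_resolution(fs_hz, n_samples):
    """Return (block duration in s, bin spacing in Hz, their product)."""
    delta_t = n_samples / fs_hz   # time span covered by one analysis block
    delta_f = fs_hz / n_samples   # spacing between neighboring FFT bins
    return delta_t, delta_f, delta_t * delta_f

# Longer blocks sharpen the frequency axis and blur the time axis.
for n in (512, 4096, 32768):
    dt, df, product = fft_resolution(48000.0, n)
    print(f"N={n:6d}  dt={dt * 1000:7.2f} ms  df={df:7.2f} Hz  product={product:.2f}")
```

Whatever block length is chosen, the product stays at 1, which is the limit the paragraph contrasts with the value of about 0.3 reported for the human auditory system.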

These observations illustrate the remarkable performance of human binaural sound signal processing capabilities, which are still difficult to match by current technical devices and analysis methods. They also exemplify the value of binaural measurement systems for the evaluation of complex auditory environments for which an analysis based on human perception is essential. Only binaural recording in combination with calibrated, equalized playback headphones guarantees signals for the listener that are comparable to the signals the listener would hear in the original situation.

6.2.2 Aurally Accurate Measurements

The use of binaural measurement systems is well-established in fields concerned with sound quality for which the employment of human-related sound measurements is indispensable (e.g., sound design for household appliances and automobiles). In these applications, the generation of pleasant sound experiences and the reduction of annoyances for customers is generally achieved after performing binaural measurements capable of capturing all perceptually relevant characteristics of sound-generating elements (e.g., the tires, engine, transmission, or brakes in an automobile) located at different spatial positions around the listener. Through this approach, annoying sound-emitting components can be identified and modified accordingly to elicit positive responses by the product users, leading to a higher level of customer satisfaction.

The binaural measurement system accurately simulates the acoustically relevant components of the human ear and thus is able to achieve binaural recordings of sound events that are aurally accurate. These recordings include all the features of human sound perception related to spatial hearing. Existing regulations and standards in the context of environmental noise measurements are often incompatible with the soundscape approach and the use of binaural measurement systems. Nonetheless, a few general recommendations and guidelines are available for the practical execution of soundscape measurements, for example, the height of the microphones should be chosen according to the actual or expected position and height of the receiver (Genuit and Fiebig 2014). For guidance on how to perform acoustic measurements in soundscape investigations, the technical specification ISO/TS 12913-2 (2018) provides detailed information (see Sect. 6.2.3).

6.2.2.1 Binaural Recording

Binaural measurement systems are designed to mimic the directional filtering properties of human anatomy in a representative and reproducible way. The relevance of this aspect can be illustrated by considering a typical stereo microphone arrangement in which two omnidirectional microphones are placed at a distance that replicates the span between the left and right human ears. While this approach will also produce two signals with differences in time and level, these differences are applied equally for all frequencies due to the absence of natural signal filtering structures (auricles, head, shoulders). All acoustically relevant parts of the human anatomy involved in the generation of the binaural input to the brain are included in a binaural measurement system and contribute to the directional filtering. Thus, special attention must be paid to the appropriate positioning and dimensioning of the anatomical components in order to create differences in time and level between the left and right ear that vary over frequency. Therefore, a binaural measurement system and a typical stereo microphone arrangement do not record the same signals.

The exact design of a binaural measurement system is particularly relevant for the position of the artificial auricles (pinnae) in relation to the head and shoulder elements, as positioning errors become apparent in the direction-dependent part of the transfer function and cannot be corrected after the measurement. Similarly, the angles of inclination and the design of the artificial cavum conchae also have a significant influence on the HRTFs.

As a result of studies on the influence of different components of the human anatomy on the sound recorded at the ears, it is possible to develop artificial heads with a simplified but mathematically accurate geometry without the loss of relevant directional information (Genuit 1984). Artificial head measurement systems can produce directional filtering patterns comparable to those generated by human anatomy and can record binaural signals with a high dynamic range. Moreover, artificial head dimensions comply with international specifications and standards as their free-field transfer functions and directional patterns are in accordance with the IEC 959 report (IEC 1990).

The fundamental principle of binaural technology includes aurally accurate recording, analysis, and reproduction. Herein, two signals, recorded by the left and right ear microphones of an artificial head, are transformed by means of equalization into signals compatible with recordings from conventional omnidirectional measurement microphones. These signals can be analyzed with typical signal analyzers to determine, for example, the level, the third-octave spectrum, and other parameters. These equalized signals can even be used for a loudspeaker playback system. Of course, the sound reproduction with loudspeakers cannot reproduce the same spatial impression as the playback using headphones, but at least the timbre is comparable.

Through the process of equalization, different components of the directional filtering introduced by the artificial head are reversed in accordance with the characteristics of the sound field present at the time of recording. The purpose of this equalization can be easily illustrated for a free-field (i.e., reflection-free) sound condition, when a sound source emitting a signal with a flat spectrum is placed directly in front of the artificial head at a large enough distance (i.e., in the far-field). Under this condition, the corresponding sound field equalization can be determined by performing a measurement with the artificial head followed by an equivalent measurement with a calibrated microphone. The free-field equalization is then determined by subtracting and inverting the spectra obtained from both measurements.
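The free-field case can be sketched with magnitude spectra in dB. The example below is a simplified illustration that ignores phase: the equalization curve is taken as the band-wise difference between the reference microphone spectrum and the artificial head spectrum, so that adding it to the head spectrum restores the reference. The band levels and function names are hypothetical.

```python
import numpy as np

def free_field_equalization_db(head_spectrum_db, reference_spectrum_db):
    """Equalization curve (dB per band) that maps the head spectrum onto the reference."""
    head = np.asarray(head_spectrum_db, dtype=float)
    reference = np.asarray(reference_spectrum_db, dtype=float)
    return reference - head

def apply_equalization_db(spectrum_db, equalization_db):
    """Apply an equalization curve to a magnitude spectrum given in dB."""
    return np.asarray(spectrum_db, dtype=float) + np.asarray(equalization_db, dtype=float)

# Hypothetical band levels for a flat-spectrum source in front of the head:
# the reference microphone sees a flat spectrum, the artificial head shows
# boosts caused by its outer-ear resonances.
reference_db = np.array([60.0, 60.0, 60.0, 60.0])
head_db = np.array([60.0, 63.0, 72.0, 66.0])

eq_db = free_field_equalization_db(head_db, reference_db)
equalized_db = apply_equalization_db(head_db, eq_db)
```

A curve obtained this way is only valid for the sound field and direction under which it was measured, which is why different equalizations exist for different sound field conditions.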

In addition to equalizers for the well-defined free-field and diffuse-field conditions, an equalization independent of direction (ID) was introduced. The ID equalization compensates for the direction-independent part of the artificial head’s transfer function (Genuit 1992b), which is caused by resonances at the auricle cavity (cavum conchae) and the ear canal. For the case of an artificial head that is based on a mathematically describable, simplified geometry, the ID components can be determined precisely for the purpose of equalization.

6.2.2.2 Binaural Reproduction

On the reproduction side, the recorded signals are corrected (i.e., equalized) once again by applying appropriate filters for playback. This is done with the intention of eliminating unwanted distortions introduced by the sound reproduction system. In addition, the signals are equalized to recreate the original sound pressure signals at the ear canals of the listener as if the listener had been present during the recording in the original sound situation. This means that an accurate reproduction of binaural recordings is only possible through the employment of a calibrated and equalized playback device. Artificial head recordings are usually reproduced through headphones as these provide better separation between the left and right ear signals and simplify control with respect to frequency and level. As no exact specifications for the transmission characteristic of headphones are available, special hardware (or software) is used to calibrate and equalize the individual headphones in such a way that the reproduced ear signals are comparable to the ear signals at the original sound field with respect to level and spectrum. It is highly recommended that adequate (calibrated and equalized) playback devices be used to reproduce the noise situations with a high degree of realism and to produce valid, reliable results (Genuit 2018).

A correct reproduction over loudspeakers can be achieved by employing systems capable of compensating for the unwanted crosstalk between each of the ear signals to the contralateral ear; however, to realize an adequate reproduction, a significant increase in complexity must be tackled compared to headphone playback.

Binaural measurements are of particular importance whenever a sound environment is to be reproduced accurately at a different time or in a different location, for example, in the case of further examination of the sounds in laboratory listening tests (Genuit and Fiebig 2006). Whenever evaluations under laboratory conditions are performed, the use of binaural recordings becomes indispensable. Through binaural recordings, “copies” of an acoustic environment are generated as close as possible to human perceptions, providing advantages with regard to the archiving and re-experiencing of acoustic sceneries, which also simplifies the comparability and analyses of different sound environments.

6.2.3 Aurally Accurate Measurements According to ISO/TS 12913-2

Given that in soundscape investigations the receiver is usually a person, the measurement height can be narrowed down to typical heights of humans. This clearly contrasts with the conventional noise measurement position according to ISO 1996-2 (ISO 2017) for which the microphone position is determined to be 0.5 m in front of an open window, and differs from the “noise maps” principle, for which the SPL calculations are related to a height of 4 m (ISO 2017). These measurement positions are obviously not typical receiver positions and constitute simple conventions for regulatory purposes. Therefore, those measurement points are not suitable for a soundscape study that aims to consider the human perception of sounds in context.

Regarding measurement time intervals, soundscape measurements should cover all variations caused by prominent sound sources, classifiable in soundscape-related terms (signals, soundmarks, or keynote sounds) as introduced by Schafer (1994). These prominent sound sources or events need not be energetically prominent; consideration must be given to whether they attract attention beyond their contribution in sound pressure level or possess a particular meaning for the local community. With respect to measurement duration, soundscape measurements must be long enough to sufficiently encompass all emission situations needed to obtain a representative, comprehensive depiction of the complete soundscape. This means that all relevant, typical sound events and sound sources must be recorded (Fiebig and Genuit 2011).

Additionally, stationary measurements are highly recommended as any movement of the measurement system and interactions of the measurement device with the person performing the measurement could potentially cause unwanted noise that does not represent the measured soundscape. For artificial head measurement systems, the use of a tripod is recommended. Outside recordings require the use of windscreens (Fig. 6.4). In general, equalization of the binaural measurement systems must be chosen with respect to the specific sound field of the investigated soundscape. The time signals of the binaural recordings must be digitally stored and sampled at a sampling rate equal to or higher than 44.1 kHz to preserve all spectral features relevant for human hearing.

San Martín et al. (2019) and Sun et al. (2018) suggested the use of multichannel recording techniques such as ambisonics in the context of soundscape. Although developed in the 1970s, ambisonics has gained prominence recently since YouTube, Oculus VR, and Facebook adopted it as a standard for their 360-degree videos (see also Brambilla and Fiebig, Chap. 7). This technique can provide an alternative to binaural recordings in the context of laboratory studies of soundscapes (Davis et al. 2014) if the semantic aspects of user experience are similar in the original soundscape and its reproduction (Guastavino et al. 2005).

According to the ISO/TS 12913-2 (2018), other measurement systems like microphone arrays and surround recordings are not recommended as those systems are not yet fully standardized (Hong et al. 2017). Although those recording technologies offer some advantages, the lack of standardization makes it difficult to perform aurally accurate analyses to compute psychoacoustic parameters and indicators (cf. ISO/TS 12913-2 (2018)).

6.3 Psychoacoustic Analysis of Acoustic Environments

6.3.1 Introduction to Psychoacoustics

The discipline of psychoacoustics deals with the quantitative link between physical stimuli and their corresponding hearing sensations (Fastl and Zwicker 2007). In essence, psychoacoustic research attempts to describe sound perception mechanisms in terms of specific parameters using elaborated models. This means that mathematical descriptions are derived from measured relationships between stimulus (physics) and response (perception). By investigating different aspects of human auditory sensations, comprehensive models can be developed describing the manner of human noise perception and signal processing (Sottek 1993). Some common and established psychoacoustic parameters include loudness, sharpness, roughness, fluctuation strength, and tonality. Table 6.1 presents a list of basic psychoacoustic parameters and short descriptions of their meanings.

Loudness, a psychoacoustic parameter introduced several decades ago (e.g., Zwicker et al. 1957; Zwicker 1958), considers the basic human processing phenomenon associated with the sensation of volume. Loudness includes signal processing effects such as spectral contribution and sensitivity (i.e., frequency weighting), masking (post and simultaneous), the interactions within critical bands, and nonlinearities. The unit of loudness is the sone. The computation of this psychoacoustic parameter can be performed using the model developed by Zwicker (1982), standardized in the German standard DIN 45631/A1 (2010) and in the international standard ISO 532-1 (2017), or using the model proposed by Moore and Glasberg (Moore et al. 1997; Glasberg and Moore 2006), which is standardized in the American National Standard ANSI S3.4-2007 (ANSI 2007) and the ISO 532-2 (2017). By applying algorithms related to human auditory processes, the psychoacoustic parameter of loudness offers advantages over the A-weighted sound pressure level (Fastl and Zwicker 2007): the psychoacoustic parameter shows a much better correspondence with loudness sensation than the LAeq (Bray 2007). The parameter loudness goes far beyond simple sound-level indicators (Genuit 2006) and the advantage of loudness compared to A-weighted sound-pressure-level indicators becomes even clearer when the superposition of sounds is considered. For example, when sounds with different spectral shapes are combined, the A-weighted SPL is unable to predict the perceived loudness. Evaluating loudness becomes even more complicated when tones are added to noise. Lastly, Hellman and Zwicker (1987) showed that the A-weighted SPL can even be inversely related to loudness and annoyance.

6.3.2 Psychoacoustic Analysis of Acoustic Environments

Figure 6.5 illustrates the analysis of loudness for three simple noises with identical A-weighted SPLs (LAeq). Since the psychoacoustic loudness values can be interpreted as a ratio-scaled quantity, the values illustrate the great mismatch between the psychoacoustic loudness indicator and the A-weighted time-averaged SPL. The loudness values in sones can be directly compared by their differences or ratios: a loudness value twice another loudness value means that this sound is perceived as twice as loud as the other sound. Although the broadband noise is perceived as twice as loud as the narrowband noise, the sound level indicator does not indicate any difference.

Another relevant phenomenon of human perception is also illustrated in Fig. 6.5. Although the depicted European police siren sound has the same time-averaged A-weighted SPL as the synthetic (broadband and narrowband noise) sounds, the time-varying pattern of the siren sound produces loudness values that reach or surpass the representative single value of 32.8 sone only in very few instances. The result of this loudness analysis can be explained by the fact that the cognitive stimulus integration of humans is complex, meaning that humans do not simply average their sensation levels over time (Stemplinger 1999; Fiebig 2015). Using the statistical mean of a time-variant loudness analysis would lead to results that are too low in comparison to perceived and judged overall loudness (Fastl 1991). Thus, the percentile loudness N5, indicating the loudness value reached or exceeded in 5% of the total time, expresses the perceived overall loudness more adequately and should be determined in accordance with DIN 45631/A1 (2010) and ISO 532-1 (2017). In general, the difference between the high and low loudness percentile values is an indicator for environmental noise quality (Genuit 2006). Greater loudness fluctuations indicate a strong unsteadiness with respect to loudness. Such loudness variations usually attract more attention than less-varying noise.
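The percentile step itself is simple once a loudness-versus-time curve is available. The sketch below assumes such a curve has already been computed by a standardized loudness model and only illustrates how N5 differs from the arithmetic mean; the loudness values are hypothetical, and the exact procedure prescribed by the standards should be taken from DIN 45631/A1 and ISO 532-1 themselves.

```python
import numpy as np

def percentile_loudness(loudness_sone, exceed_percent=5.0):
    """Loudness value reached or exceeded in `exceed_percent` of the time.

    N5 corresponds to the 95th percentile of the loudness samples.
    """
    return float(np.percentile(loudness_sone, 100.0 - exceed_percent))

# Hypothetical loudness-time curve: a steady 10 sone background that is
# interrupted by loud events of 40 sone during 10% of the time.
loudness = np.array([10.0] * 90 + [40.0] * 10)

n5 = percentile_loudness(loudness)        # dominated by the loud events
mean_loudness = float(np.mean(loudness))  # pulled down by the quiet background
```

For this hypothetical curve the mean stays close to the background loudness, whereas N5 reflects the loud events, which is the behavior the paragraph describes for overall loudness judgments.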

There is strong evidence that physiological reactions to noise correlate better with the loudness parameter than with the sound pressure level. As an example, Jansen and Rey (1962) showed that the finger pulse amplitude, an autonomous physiological reaction measured after exposure to different sounds, can vary strongly with the same sound pressure levels. The variances can be explained on the basis of the differences in the psychoacoustic parameter loudness (Genuit and Fiebig 2007).

Similarly, the psychoacoustic parameter sharpness, related to the perceived spectral emphasis of a signal toward high frequencies, is a potential predictor for determining the pleasantness or annoyance of sounds, as it has been observed that sensory pleasantness decreases with increasing sharpness (Fastl and Zwicker 2007). One method for the calculation of the psychoacoustic parameter sharpness is defined in the German standard DIN 45692 (DIN 2009). However, in addition to the algorithm implemented in DIN 45692, other methods for the calculation of the parameter sharpness are also available, including the approaches introduced by Aures (1985) and von Bismarck (1974). Generally, the DIN 45692 standard and von Bismarck calculation methods produce similar sharpness results and are not dependent on the total loudness. In contrast, the sharpness computation according to Aures (1985) will increase in sharpness value for a constant spectral shape as loudness increases due to the coupling of the sharpness impression to the total loudness introduced by this method. The unit of sharpness is the acum.
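
The independence from total loudness follows from the structure of the calculation: sharpness is a weighted first moment of the specific loudness pattern over the critical-band rate, divided by the total loudness. The Python sketch below illustrates this. The weighting constants are those commonly quoted for DIN 45692 and should be checked against the standard before use; the two specific loudness patterns are hypothetical and are not the output of a loudness model.

```python
import numpy as np

def weight_din(z):
    """Weighting g(z): 1 up to 15.8 Bark, rising exponentially above (assumed constants)."""
    z = np.asarray(z, dtype=float)
    return np.where(z <= 15.8, 1.0, 0.15 * np.exp(0.42 * (z - 15.8)) + 0.85)

def sharpness(specific_loudness, z, k=0.11):
    """Weighted first moment of specific loudness over Bark, divided by total loudness."""
    dz = z[1] - z[0]
    total = np.sum(specific_loudness) * dz
    moment = np.sum(specific_loudness * weight_din(z) * z) * dz
    return float(k * moment / total)

z = np.arange(0.1, 24.05, 0.1)  # critical-band rate in Bark

# Hypothetical specific loudness patterns (arbitrary units per Bark)
low_pattern = np.exp(-0.5 * ((z - 8.0) / 3.0) ** 2)    # emphasis at low frequencies
high_pattern = np.exp(-0.5 * ((z - 18.0) / 3.0) ** 2)  # emphasis at high frequencies

s_low = sharpness(low_pattern, z)
s_high = sharpness(high_pattern, z)
s_low_louder = sharpness(3.0 * low_pattern, z)  # same spectral shape, three times the loudness
```

Scaling the whole pattern leaves the result unchanged because the factor cancels between numerator and denominator. A loudness-dependent weighting, as in the Aures approach, removes this cancellation.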

Figure 6.6 shows the different psychoacoustic results from two simple signals: white noise and pink noise. Both have the same A-weighted sound pressure level, but the loudness of the pink noise is higher than the loudness of the white noise, and the white noise produces a higher sharpness value.

Analysis of white noise and pink noise with respect to sound pressure level (left), loudness (middle), and sharpness (right). White noise is random noise that has a constant power spectral density, whereas pink noise has equal power per octave. This means that pink noise has less energy in the higher frequency range. Both signals have the same A-weighted sound pressure level (left), but pink noise has 17% higher loudness according to ISO 532-1 (middle) and more than 20% less sharpness (computed by means of DIN 45692) in comparison to white noise (right). The x-axis shows the time in seconds
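
The spectral difference between the two test signals can be checked numerically. The following Python sketch generates both noises and sums their power in octave bands. The generation method (shaping a white spectrum by 1/sqrt(f)), the sampling rate, and the band centres are assumptions chosen for illustration and do not describe how the signals in the figure were produced.

```python
import numpy as np

def make_noise(n_samples, kind, rng):
    """Return unit-variance white or pink noise (pink via 1/sqrt(f) spectral shaping)."""
    spectrum = np.fft.rfft(rng.standard_normal(n_samples))
    if kind == "pink":
        freqs = np.fft.rfftfreq(n_samples)
        freqs[0] = freqs[1]  # avoid division by zero at DC
        spectrum = spectrum / np.sqrt(freqs)  # power then falls as 1/f
    signal = np.fft.irfft(spectrum, n=n_samples)
    return signal / np.std(signal)

def octave_band_power(signal, fs, centers):
    """Sum the power spectrum within each octave band around the given centre frequencies."""
    power = np.abs(np.fft.rfft(signal)) ** 2
    freqs = np.fft.rfftfreq(len(signal), d=1.0 / fs)
    return np.array([
        power[(freqs >= fc / np.sqrt(2)) & (freqs < fc * np.sqrt(2))].sum()
        for fc in centers
    ])

fs = 48000
rng = np.random.default_rng(0)
centers = [250, 500, 1000, 2000, 4000, 8000]

white = make_noise(10 * fs, "white", rng)
pink = make_noise(10 * fs, "pink", rng)

white_db = 10 * np.log10(octave_band_power(white, fs, centers))
pink_db = 10 * np.log10(octave_band_power(pink, fs, centers))

white_slope = np.diff(white_db)  # change in band power per octave, about +3 dB
pink_slope = np.diff(pink_db)    # change in band power per octave, about 0 dB
```

White noise gains about 3 dB per octave band because each band is twice as wide as the previous one, whereas the pink noise band powers stay roughly constant. This is the reduced high-frequency content that goes along with its lower sharpness.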

The question of which sound is less annoying or produces a higher perceived sound quality can only be answered by listening tests and statistical analyses. Usually, people prefer the louder but less sharp sound, as listening tests with synthetic signals show (Fiebig 2015).

Now the question arises as to which sound (white vs. pink noise) humans perceive as “better.” Is the signal property loudness more important with respect to the sound quality than the signal property sharpness, or quite the opposite? This can only be answered by a statistical analysis of data from a jury evaluation test. A paired comparison listening test was conducted in which participants were asked to judge which sound (pink versus white noise) caused higher annoyance for the listener. Figure 6.7 shows how the perceived annoyance of the two signals changes as the sound pressure level of the pink noise is increased step by step. When both signals had the same sound pressure level, but pink noise had higher loudness, only 28% of the test participants judged the pink noise as more annoying than the white noise. That means that most participants preferred the pink noise to the white noise although the loudness of the pink noise was higher. Evidently, in this comparison sharpness contributed more strongly to the annoyance than loudness. Only after increasing the level of the pink noise by 10 dB did nearly all participants judge the annoyance level caused by the pink noise to be higher than the annoyance caused by the white noise.

Percentage of responses from 14 participants stating in a paired comparison test that pink noise was more annoying than white noise. The level of the pink noise was varied from equal to the level of the white noise up to 10 dB above it (x-axis). The white noise was consistently presented at 70 dB(A) (Fiebig 2015)

In a real soundscape the context is very important, as acknowledged in the definition of the term soundscape in ISO 12913-1 (2014). In the laboratory with test signals, sound with higher sharpness normally has a negative correlation with the overall perceived sound quality. It is very important to distinguish between the terms sound character and sound quality. Sound character represents basic attributes (sensory properties) of sound events. Sound quality perception includes non-acoustic factors that influence the interpretation of sound and is affected by context, cognition, expectations, experiences, and interactions (Blauert and Jekosch 1997).

Comparison of a relatively ugly urban place with a lot of traffic versus a beautiful park with a fountain might lead to a judgment for greater pleasantness of the park despite the higher sharpness of the fountain sound compared to the traffic noise. However, if two fountains are compared, both interpreted as pleasant sources, the less sharp fountain sound (maybe due to the fountain design) is assigned a higher sound quality (Galbrun and Ali 2012). Figure 6.8 illustrates the remarkable sharpness differences that can occur in the context of environmental noises. The fountain has a higher overall level, less loudness, but 50% more sharpness; however, most people prefer the soundscape around the fountain instead of the urban location. Fiebig (2015) gives a more detailed discussion about cognitive stimulus integration in the context of auditory sensations and sound perceptions (see Fiebig, Chap. 2).

Analyses for two environmental sounds. Traffic at an urban square (green curves) has lower sound pressure level (left panel), but higher loudness (ISO 532-1) (middle panel) and less sharpness (DIN 45692) (right panel) than the fountain (red curves). The sound quality of the fountain was evaluated by participants in a case study as more pleasant than the urban square sounds

Other psychoacoustic parameters, such as roughness and fluctuation strength, are descriptors for the human perception of temporal effects and can be indicators for the annoyance caused or the perceived “aggressiveness” of sounds, although the interpretation of the results given by these psychoacoustic analyses strongly depends on the type of sound and the source being investigated. From the physical point of view, the psychoacoustic parameters roughness and fluctuation strength are similar; they are related to modulations (both amplitude modulations and frequency modulations). However, slow modulations (e.g., modulation frequencies below 20 Hz) produce the sensation of a sound with fluctuations; in contrast, fast modulations (e.g., modulation frequencies clearly above 20 Hz) produce a sensation of an unclean, rough sound. Figure 6.9 provides an illustration of signals with variations in roughness and fluctuation strength. A combination of a 1 kHz tone with 996 Hz and 1004 Hz tones results in a slow modulation of 4 Hz, which is perceived as a fluctuation (measurement unit: vacil). Fluctuations are used especially for warning signals because they attract greater attention from the listener. The combination of three tones leading to a fast modulation of 70 Hz produces a sound that is perceived as a rougher 1 kHz tone because human hearing cannot separate the three tones and perceives only a disturbing 1 kHz tone. This is described as roughness (measurement unit: asper). Roughness is important for sound design, and the evaluation of roughness is strongly dependent on the context, the kind of product producing the sound, and the expectations of the listener.

Comparison of three signals: 1 kHz tone (blue, top); 1 kHz tone with 996 Hz and 1004 Hz tones (red, middle); 1 kHz tone with 930 Hz and 1070 Hz tones (pink, bottom). All signals have the same sound pressure level (left panel) but have differences in loudness (second panel) and great differences in roughness (third panel) and fluctuation strength (fourth panel) according to ECMA-418-2
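
The link between the three-tone signals and their modulation frequency can be verified numerically: a carrier with two symmetric side tones at a spacing of fm is identical to the carrier amplitude-modulated at fm. The Python sketch below builds both signals and reads the modulation frequency from the envelope. The side-tone amplitude, the sampling rate, and the function names are assumptions, and the envelope is obtained with a plain FFT-based analytic signal rather than with any method from ECMA-418-2.

```python
import numpy as np

def three_tone(fc, fm, fs, duration, side_amp=0.5):
    """Carrier at fc plus two side tones at fc - fm and fc + fm (assumed amplitudes)."""
    t = np.arange(int(fs * duration)) / fs
    carrier = np.cos(2 * np.pi * fc * t)
    sides = side_amp * (np.cos(2 * np.pi * (fc - fm) * t)
                        + np.cos(2 * np.pi * (fc + fm) * t))
    return t, carrier + sides

def envelope(signal):
    """Magnitude of the analytic signal, computed by zeroing negative frequencies."""
    n = len(signal)
    weights = np.zeros(n)
    weights[0] = 1.0
    if n % 2 == 0:
        weights[n // 2] = 1.0
        weights[1:n // 2] = 2.0
    else:
        weights[1:(n + 1) // 2] = 2.0
    return np.abs(np.fft.ifft(np.fft.fft(signal) * weights))

def modulation_frequency(signal, fs):
    """Frequency of the strongest component in the mean-free envelope."""
    env = envelope(signal)
    env = env - env.mean()
    spectrum = np.abs(np.fft.rfft(env))
    freqs = np.fft.rfftfreq(len(env), d=1.0 / fs)
    return float(freqs[np.argmax(spectrum)])

fs = 8000
t, slow = three_tone(1000, 4, fs, 2.0)    # 996 Hz, 1000 Hz, 1004 Hz
_, fast = three_tone(1000, 70, fs, 2.0)   # 930 Hz, 1000 Hz, 1070 Hz

slow_fm = modulation_frequency(slow, fs)  # modulation in the fluctuation range
fast_fm = modulation_frequency(fast, fs)  # modulation in the roughness range
```

With these settings the envelope of the first signal varies at 4 Hz and that of the second at 70 Hz, which places them on either side of the approximate 20 Hz boundary between fluctuation strength and roughness.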

The tonality parameter (measurement unit: tu) describes the sensation that is related to the proportion of prominent tones or narrowband components in a signal. The newest methods for the determination of the tonality parameter are standardized in the international standards ECMA-74 (2019) and ECMA-418-2 (2020). The standards include a psychoacoustic-based tonality computation algorithm that considers relevant aspects of human auditory perception, such as hearing thresholds and masking, to determine the perceptual relevance and prominence of tonal components in a signal (Becker et al. 2019). Figure 6.10 demonstrates the importance of tonality in an example with the sound of a large, widely used passenger airplane as it “takes off.” In this example, the tones were synthetically removed. This effect is clearly audible even though all other parameters are unchanged (Table 6.2).

Human hearing quickly adapts to stationary signals but remains very sensitive to fluctuations and intermittent noise, as well as to prominent, salient noise events. Therefore, peak values and relative changes can be significant with respect to auditory perception. The use of percentile values of a parameter that has been measured over time can reveal the magnitude of fluctuations and variations (Genuit 2006). For example, if large differences are observed between values in the 5th and 95th percentiles of a measurement interval, strong fluctuations of the considered parameter are detected, which would suggest a dynamic sound situation.

In addition to the basic psychoacoustic parameters described in this section, the Relative Approach parameter developed by Genuit (1996), which is related to the detection and perception of patterns in acoustic signals, provides information about obtrusive and attention-attracting noise features. The Relative Approach analysis simulates the ability of human hearing to adapt to stationary sounds and to react to variations and patterns within the time and frequency structure of a sound. An example is shown in Fig. 6.11 in which two vehicles have pass-by events with the same sound pressure levels (LAeq). The Relative Approach analysis shown identifies the pattern of diesel “knocking” in the second pass-by event, which is perceived as more annoying by most people.

Comparison of Relative Approach analysis results for two vehicle engine noises. The analysis result of the gasoline engine noise is shown on the left side, and the analysis result of the diesel engine noise is displayed on the right side. Both noises possess the same A-weighted SPL. The typical “knocking” noise patterns of a diesel engine can be clearly seen in the result of the Relative Approach analysis (right)

By considering different aspects of human (binaural) signal processing through psychoacoustic analyses, pleasant and unpleasant features of sound can be identified. The first and most relevant step toward achieving meaningful results from the acoustic analysis of sound environments is to move away from indicators based on simple energy averaging (i.e., SPL values) and to adopt more detailed (psycho)acoustic parameters that consider different acoustic properties of sound events. This entails the determination of psychoacoustic parameters capable of detecting temporal and spectral patterns that are relevant to human perception. The application of well-established psychoacoustic analysis methods can advance soundscape evaluations and considerably improve perceptual assessments of environmental sound quality and the expected impacts with regard to annoyance (Genuit and Fiebig 2006). Moreover, advanced parameters, such as the Relative Approach (Genuit 1996), must be further developed to improve the characterization of environmental sound conditions.

6.3.3 Psychoacoustics in Soundscape

An important step toward improving the characterization of acoustic environments and obtaining meaningful results from noise annoyance investigations is to pose specific questions about the acoustic environment under investigation. For example, in the case of complaints about noise, it might be relevant to start by asking which of the existing sound sources are causing the discomfort and are considered responsible for the noise annoyance by the person concerned. Similarly, signal attributes, such as modulations or specific patterns in the time or frequency domains, should be examined for their potential as sources of irritation. Moreover, informative features about the annoyance and the necessity (or lack thereof) of the noise should be questioned, just as the attitude and expectations of the listener should be examined (Genuit 2003). The answers to these questions will help to identify suitable acoustic analyses and appropriate measurement methods that will lead to improved and goal-oriented investigations of an acoustic environment (Berglund and Nilsson 2006). In addition, binaural recordings are often combined with psychoacoustic analysis to determine the reasons behind the annoyance. Through these processes, sound adjustments can be performed and evaluated in a perceptually relevant way before any major and potentially expensive modifications of the environment (e.g., in structural or mechanical elements) are performed.

Psychoacoustics is used frequently to develop better noise maps of certain areas and to describe acoustic properties of the area beyond the sound level distribution (Kang et al. 2016). Genuit et al. (2008) generated psychoacoustic maps of a small urban park, Nauener Platz, in Berlin. The maps of Nauener Platz showed that some psychoacoustic parameters behave differently compared to the sound pressure level. For example, in the center of the urban park the SPL dropped down due to the large distance to the roads. However, parameters like sharpness or roughness remained almost constant over the whole urban park area.

Hong and Jeon (2017) developed loudness and sharpness maps of Seoul alongside maps that indicated the audibility of certain sound sources and showed that consideration of a variety of parameters was advantageous for the determination of soundscape quality. Montoya-Belmonte and Navarro (2020) used a sensor network to determine loudness, sharpness, and a psychoacoustic annoyance map for a university campus in Spain. They concluded that the psychoacoustic annoyance measurement was better correlated with loudness in the locations they considered, and sharpness was only of minor importance. Those efforts reflect the increased interest in psychoacoustic parameters in soundscape investigations around the world.

Although the psychoacoustic approach for acoustic environment analysis has provided valuable information, those studies only partially cover the investigation of the sensory and mental representations of the typical sounds in urban spaces (Yang 2019). Without asking how residents feel about their surroundings and investigating the visual elements of the location under study, the results obtained from psychoacoustic analysis alone are not sufficient. Aspects such as local expectations, suitability, or acceptability of sound in their respective contexts cannot be sufficiently answered without knowledge of human responses to the locations under consideration. Psychoacoustics can analyze in detail the acoustic composition of a soundscape and the signal properties that elicit specific auditory sensations; however, a comprehensive interpretation of the results requires feedback from the listeners.

A thorough study of the acoustical properties and psychoacoustic characteristics of soundscapes is an important part of understanding the perception of the acoustic environment in context and can serve as a starting point for the classification of soundscapes. Through these analyses, acoustical properties can be identified in detail that are common across multiple locations (e.g., urban environments, urban parks, residential areas). Identification of site-specific patterns and noise features within a soundscape will continue to be necessary. The inclusion of macroscopic and microscopic analyses is required to capture the overall sound impression created by soundscape. Those combined analyses also are needed to recognize and interpret sound events that may cause strong positive or negative reactions and feelings (Schulte-Fortkamp and Nitsch 1999). The macro-level analysis is defined by descriptions embedding the noise events into the comparable soundscapes of streets, places, and urban areas. The micro-level is related to the analysis of noise events based on psychoacoustic parameters (Schulte-Fortkamp and Nitsch 1999).

In contrast to conventional environmental noise measurement regulations, the focus in soundscape investigation lies in recording and analyzing environmental sound with all relevant sound sources as perceived by individuals in context. The separation of the contributions of the different sound sources might be relevant for analytical or legal reasons and for regulatory purposes in noise policy, but the examination and assessment of the acoustic environment as a whole remains indispensable for a thorough understanding of a soundscape.

Measurement guidelines for comprehensive soundscape studies must cover both dimensions of measurements by persons and measurements by instruments (see Botteldooren, De Coensel, Aletta, and Kang, Chap. 8). In a practical sense, soundwalks, questionnaires, and explorative interviews can be used to complement the acoustical measurements and psychoacoustic analyses of soundscapes.

Psychoacoustic parameters and perceptual, visual, or contextual indicators are used to predict all kinds of soundscape-related descriptors. For example, Lionello et al. (2020) studied prediction models from the acoustic literature that measured the experience of soundscape in terms of tranquility, arousal, valence, pleasantness, or sound quality. They observed that a great variety of acoustic and psychoacoustic indicators were used and applied in prediction models. For example, Brambilla et al. (2013) used sharpness and roughness for the affective dimension chaotic versus calm. Aletta and Kang (2016, 2018) used loudness, fluctuation strength, and roughness, among other parameters, to predict vibrancy. Çakır Aydın and Yılmaz (2016) developed a Sound Quality Index based on loudness, sharpness, and roughness to predict pleasantness of sound. Lionello et al. (2020) pointed out that those parameters did not systematically lead to great prediction accuracy. In addition, those indicators were often combined with other parameters. Ongoing research must continue to investigate which psychoacoustic parameters are of significant value and which parameters are less closely related to the perception and assessment of acoustic environments. Although the limitations of psychoacoustics to predict human responses to soundscapes are not completely understood, the advantages of using psychoacoustic analyses beyond data from simple level indicators are indisputable.

6.4 Benefits and Limitations of Psychoacoustics in the Context of Soundscape

While the overall noise measured at a specific location can be analyzed in terms of several acoustical parameters, the annoyance or pleasantness level of a complex soundscape composed of several sound sources cannot be determined solely from the values obtained through such analyses. Even if the acoustic contribution of a single sound source to the overall noise does not appear significant in a physical sense, the influence of this sound source on the soundscape can be relevant perceptually. This can be explained based on how perceptual “attention” influences sound processing. Thus, to better understand the perception and evaluation of soundscapes, studies must include evaluation of typical attention processes of individuals and the possible factors that influence the (listening) focus on specific sound sources in complex environments. Selective auditory attention processes in perceiving complex (acoustic) environments continue to be very relevant research subjects (Fiebig 2015).

Auditory attention allows human beings to focus their mental resources on a particular stream of interest while ignoring others. According to de Coensel and Botteldooren (2010), most theories on attention rely on a concept that there is an interplay between bottom-up (saliency-based) and top-down (voluntary) mechanisms in a competitive selection process. For example, Knudsen (2007) discussed evidence that the perceived signal strength is influenced by bottom-up salience filters and at the same time is modulated by top-down control. In the context of soundscape, it seems crucial to understand those selection activities to be able to predict individual responses to multi-source environments more appropriately.

Assessment methods that provide different degrees of context may be applied in soundscape investigations, depending on the projected type of investigation and the resources available. These methodologies may range from evaluations on-site (e.g., by means of a soundwalk), which provide a complete range of sensory and environmental aspects, to listening tests in a laboratory environment, where there is better control over the stimuli presented and greater reproducibility (Hermida Cadena et al. 2017).

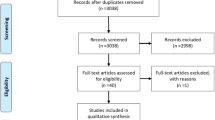

Although psychoacoustic parameters are frequently applied in soundscape investigations, they are computed with a great variety of methods and implementations. This variety limits the comparability of the results and impedes meta-analyses of the behavior of psychoacoustic parameters in soundscape studies. Engel et al. (2021) conducted a comprehensive literature review of soundscape investigations and found that almost 30% of publications report the data and results without specific details about the computation methods and/or standards used to determine certain psychoacoustic parameters. Although ISO/TS 12913-2 (2018) states that the computation methods and/or standards used to perform the psychoacoustic analysis of the binaural measurements need to be documented, often the methods and standards for calculating the psychoacoustic parameters are not properly reported. The lack of detailed scientific reporting limits the comparability of study results and impedes progress in understanding the link between psychoacoustic properties of acoustic environments and their corresponding perception and assessment.

Another area of research that lacks consensus concerns how to represent values of time-variant noises to accurately describe overall auditory impressions and sound perceptions. Frequently different average values or percentile values are applied to quantify the psychoacoustic properties of dynamic acoustic environments. The ISO/TS 12913-3 (2019) suggests that average and percentile values can account for variation over time for certain signal properties. For example, the quotient of the loudness N5 (loudness exceeded in 5% of the time interval) and loudness N95 (loudness exceeded in 95% of the time interval) may be an indicator of the level of loudness variability (ISO/TS 12913-3 2019). However, according to Engel et al. (2021), analyzing related research outcomes cannot provide a clear direction with regard to established and acknowledged (percentile) values that correspond to an overall impression of specific auditory sensations. Fiebig (2015) concluded optimistically that predictions of overall assessments of sound perceptions are possible with fair accuracy based on indicators derived from psychoacoustic profiles that represent proxies of momentary perceptual levels. However, further research is needed to derive valid, representative, single values of time-variant noises, which are common components of soundscapes.
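
The N5/N95 quotient mentioned above is straightforward to compute once a loudness-versus-time trace is available. The Python sketch below uses two hypothetical traces (one nearly steady, one strongly fluctuating) generated with assumed values; it illustrates only the percentile arithmetic and makes no claim about which quotient corresponds to a given overall impression.

```python
import numpy as np

def loudness_variability(loudness):
    """Quotient N5 / N95 of a loudness-versus-time trace."""
    n5 = np.percentile(loudness, 95)   # loudness exceeded in 5 % of the time
    n95 = np.percentile(loudness, 5)   # loudness exceeded in 95 % of the time
    return float(n5 / n95)

rng = np.random.default_rng(1)
samples = np.arange(3000)

# Hypothetical traces in sone: small random variation around 20 sone,
# with and without a slow 10 sone swing superimposed.
steady = 20.0 + rng.normal(0.0, 0.5, samples.size)
fluctuating = steady + 10.0 * np.sin(2 * np.pi * samples / 300)

steady_ratio = loudness_variability(steady)
fluctuating_ratio = loudness_variability(fluctuating)
```

The nearly steady trace yields a quotient close to 1, while the fluctuating trace yields a clearly larger value. How such quotients map onto perceived variability remains the open question noted in this section.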

6.5 Summary

In this chapter, the need for psychoacoustic measurement in soundscapes is discussed. The mechanisms of human binaural hearing, which involve the directional filtering of sound introduced by human anatomy and the combined processing of signals to the left and right ears, are introduced with regard to the soundscape approach. Information encoded in the differences between the two ear signals (e.g., ITDs and ILDs) is used to determine the position of individual sound sources and binaural hearing provides many advantages for the identification and discrimination of individual sound sources in complex acoustic environments. These advantages include the suppression of noise and the capacity to focus on individual sound sources (due to improved signal-to-noise ratio) as well as the ability to identify spatial distribution, speed, and direction of movement of sound sources. Based on this assertion, calibrated binaural measurement systems are required in soundscape research, for which the perception and evaluation of environmental noise is a main concern. Further psychoacoustic evaluation through multi-channel systems is also desirable.

Binaural listening and the intricate signal processing involved in human hearing provide the advantage of source focusing, which, in combination with spatial perception and the ability to assess the direction and speed of any movement of sound sources, directly influences the perception and evaluation of environmental noise. The involvement of human perception in the evaluation of soundscapes, therefore, is particularly relevant and can only be realized with data collection methods comprising the full capabilities of the human auditory system.

Nonetheless, even if a psychoacoustic approach to the evaluation of a sound environment can aid in the interpretation of acoustic measurements and reveal critical and relevant components of a soundscape, relying solely on values obtained through psychoacoustic parameter estimation to make assertions about complex acoustic environments would disregard the significance of the emotional components of human perception. It is only through the combination of perceptual evaluation methods, which consider the context, expectations, and attitudes of the listener with psychoacoustic analyses, that the outcomes from soundscape studies can become more insightful. More relevant acoustic measurements can be performed by employing binaural measurement systems instead of single or even stereophonic microphone measurement systems (that are incapable of recreating relevant filtering properties of human anatomy). Acoustic measurement procedures described in current standards, such as ISO 1996-2, do not provide a good basis for the establishment of soundscape measurements since they do not consider the human listener as a receiver. In this chapter, the importance of suitable assessment methods that consider a broad range of sensory and environmental aspects has been stressed.

Future soundscape research must consider the need for aurally accurate measurements and psychoacoustic analyses with the distinct purpose of archiving and re-experiencing different acoustic environments. Future studies should also consider the relevance of documenting and analyzing the occurrence of a variety of sound sources since focusing on certain sound sources can change the overall assessment of soundscapes.

A common basis of measurement and data collection procedures that reflect the approach to soundscape research presented in this chapter is provided by the ISO/TS 12913-2 (2018), which has been introduced with the goal of improving the comparability and compatibility of future soundscape investigations. While this technical specification provides common ground with regard to data collection and reporting, the adoption of any soundscape standard should not limit the flexibility and interdisciplinary characteristics of the soundscape approach.

Compliance with Ethics Requirements

Klaus Genuit declares that he has no conflict of interest.

Brigitte Schulte-Fortkamp declares that she has no conflict of interest.

André Fiebig declares that he has no conflict of interest.

References

Aletta F, Kang J (2016) Descriptors and indicators for soundscape design: vibrancy as an example. Internoise, Hamburg

Aletta F, Kang J (2018) Towards an urban vibrancy model: a soundscape approach. Int J Environ Res Public Health 15(8):1712. https://doi.org/10.3390/ijerph15081712

ANSI S3.4 2007 Procedure for the computation of loudness of steady sounds

Aures W (1985) Berechnungsverfahren für den sensorischen Wohlklang beliebiger Schallsignale. Acta Acust Acust 59:130–141

Becker J, Sottek R, Lobato T (2019) Progress in tonality calculation. In: ICA 2019, Aachen

Berglund B, Nilsson ME (2006) On a tool for measuring soundscape quality in urban residential areas. Acta Acust Acust 92(6):938–944

Blauert J (1974) Räumliches Hören. Hirzel, Stuttgart

Blauert J (1996) Spatial hearing: the psychophysics of human sound localization. MIT Press, Cambridge

Blauert J, Jekosch U (1997) Sound-quality evaluation – a multi-layered problem. Acta Acust Acust 83(5):747–753

Brambilla G, Maffei L, Gabriele M, Gallo V (2013) Merging physical parameters and laboratory subjective ratings for the soundscape assessment of urban squares. J Acoust Soc Am 134:782–790. https://doi.org/10.1121/1.4768792

Bray WR (2007) Behavior of psychoacoustic measurements with time-varying signals. In: Noise-Con 2007, Reno

Bronkhorst AW, Plomp R (1988) The effect of head-induced interaural time and level differences on speech intelligibility in noise. J Acoust Soc Am 83(4):1508–1516. https://doi.org/10.1121/1.395906

Çakır Aydın D, Yılmaz S (2016) Assessment of sound environment pleasantness by sound quality metrics in urban spaces. TU A|Z J Fac Archit 13(2):87–99

Cherry EC (1953) Some experiments on the recognition of speech, with one and with two ears. J Acoust Soc Am 25(5):975–979

Davies WJ, Bruce NS, Murphy JE (2014) Soundscape reproduction and synthesis. Acta Acust Acust 100(2):285–292

De Coensel B, Botteldooren D (2010) A model of saliency-based auditory attention to environmental sound. In: Proceedings of international congress on acoustics 2010, Sydney, Australia

DIN 45631/A1 (2010) Calculation of loudness level and loudness from the sound spectrum – Zwicker method – amendment 1: calculation of the loudness of time-variant sound. Beuth Verlag, Berlin

DIN 45692 (2009) Measurement technique for the simulation of the auditory sensation of sharpness. Beuth Verlag, Berlin

ECMA (2019) ECMA-74 – measurement of airborne noise emitted by information technology and telecommunications equipment, Geneva

ECMA-418-2 (2020) Psychoacoustic metrics for ITT-equipment, part 2: models based on human perception, Geneva

Engel MS, Fiebig A, Pfaffenbach C, Fels J (2021) A review of the use of psychoacoustic indicators on soundscape studies. Curr Pollution Rep 7:359. https://doi.org/10.1007/s40726-021-00197-1

Fastl H (1991) Beurteilung und Messung der äquivalenten Dauerlautheit, Z. f. Lärmbekämpf, vol 38. Springer, pp 98–103. Düsseldorf, Germany

Fastl H, Zwicker E (2007) Psychoacoustics. Springer, Berlin

Fay RR, Popper AN (2005) Introduction to sound source localization. In: Popper AN, Fay RR (eds) Sound source localization, Springer handbook of auditory research, vol 25. Springer, New York. https://doi.org/10.1007/0-387-28863-5_1

Fidell S (2003) The Schultz curve 25 years later: a research perspective. J Acoust Soc Am 114(6):3007–3015

Fiebig A (2015) Cognitive stimulus integration in the context of auditory sensations and sound perceptions (Dissertation), Technische Universität Berlin, epubli, Berlin

Fiebig A, Genuit K (2011) Applicability of the soundscape approach in the legal context. In: DAGA 2011, Düsseldorf, Germany

Flanagan JL, Watson BJ (1966) Binaural unmasking of complex signals. J Acoust Soc Am 40:456–468. https://doi.org/10.1121/1.1910096

Galbrun L, Ali TT (2012) Perceptual assessment of water sounds for road traffic noise masking. In: Acoustics 2012, Nantes

Genuit K (1984) Ein Modell zur Beschreibung der Außenohrübertragungseigenschaften. TH Aachen, Aachen

Genuit K (1992a) Sound quality, sound comfort, sound design—why use artificial head measurement technology? In: Daimler-Benz JRC (ed) Stuttgart

Genuit K (1992b) Standardization of binaural measurement technique. J Phys IV Proc 02:C1-405–C1-407. https://doi.org/10.1051/jp4:1992187

Genuit K (1996) Objective evaluation of acoustic quality based on a relative approach. In: Internoise 1996, Liverpool, pp 3233–3238

Genuit K (2002) Sound quality aspects for environmental noise. In: Internoise 2002, Dearborn, pp 1242–1247

Genuit K (2003) How to evaluate noise impact. In: Euronoise 2003, Naples, pp 1–4

Genuit K (2006) Beyond the A-weighted level. In: Internoise 2006, Honolulu, pp 1321–1327

Genuit K (2018) Standardization of soundscape: request of binaural recording. In: Proceedings of Euronoise, pp 2451–2458

Genuit K, Fiebig A (2006) Psychoacoustics and its benefit for the soundscape approach. Acta Acust Acust 92:1–7

Genuit K, Fiebig A (2007) Environmental noise: is there any significant influence on animals? J Acoust Soc Am 122:3082. https://doi.org/10.1121/1.2943007

Genuit K, Fiebig A (2014) The measurement of soundscapes – is it standardizable? In: Internoise 2014, pp 3502–3510

Genuit K, Sottek R (2010) Das menschliche Gehör und Grundlagen der Psychoakustik. In: Genuit K (ed) Sound-Engineering im Automobilbereich. Springer, Berlin, ISBN: 978-3-642-01415-4

Genuit K, Schulte-Fortkamp B, Fiebig A (2008) Psychoacoustic mapping within the soundscape approach. In: Proceedings of Internoise 2008, Shanghai, China

Glasberg BR, Moore BCJ (2006) Prediction of absolute thresholds and equal-loudness contours using a modified loudness model. J Acoust Soc Am 120:585–588. https://doi.org/10.1121/1.2214151

Guastavino C, Katz BFG, Polack JD, Levitin DJ, Dubois D (2005) Ecological validity of soundscape reproduction. Acta Acust Acust 91(2):333–341

Hellman R, Zwicker E (1987) Why can a decrease in dB(A) produce an increase in loudness? J Acoust Soc Am 82:1700–1705. https://doi.org/10.1121/1.395162

Hermida Cadena LF, Lobo Soares AC, Pavón I, Bento Coelho JL (2017) Assessing soundscape: comparison between in situ and laboratory methodologies. Noise Mapp 4:57–66. https://doi.org/10.1515/noise-2017-0004

Hong JY, Jeon JY (2017) Exploring spatial relationships among soundscape variables in urban areas: a spatial statistical modelling approach. Landsc Urban Plan 157:352–353

Hong JY, He J, Lam B, Gupta R, Gan WS (2017) Spatial audio for soundscape design: recording and reproduction. Appl Sci 7:627

IEC 959 (1990) Provisional head and torso simulator for acoustic measurements on air conduction hearing aids

ISO 532-1 (2017) Acoustics—methods for calculating loudness—part 1: Zwicker method, Geneva

ISO 532-2 (2017) Acoustics—methods for calculating loudness—part 2: Moore-Glasberg method, Geneva

ISO 12913-1 (2014) Acoustics — Soundscape — Part 1: Definition and conceptual framework, Geneva

ISO 1996-1 (2016) Acoustics — Description, measurement and assessment of environmental noise — Part 1: Basic quantities and assessment procedures, Geneva

ISO 1996-2 (2017) Acoustics — Description, measurement and assessment of environmental noise — Part 2: Determination of sound pressure levels, Geneva

ISO/TS 12913-2 (2018) Acoustics — Soundscape — Part 2: Data collection and reporting requirements, Geneva

ISO/TS 12913-3 (2019) Acoustics — Soundscape — Part 3: Data analysis, Geneva

Jansen G, Rey P-Y (1962) Der Einfluss der Bandbreite eines Geräusches auf die Stärke vegetativer Reaktionen. Int Zeitschrift für Angew Physiol Einschl Arbeitsphysiologie 19:209–217. https://doi.org/10.1007/BF00697117

Kang J, Schulte-Fortkamp B, Fiebig A, Botteldooren D (2016) Mapping of soundscape. In: Kang J, Schulte-Fortkamp B (eds) Soundscape and the built environment. Taylor & Francis incorporating Spon, London

Knudsen EI (2007) Fundamental components of attention. Annu Rev Neurosci 30:57–78. https://doi.org/10.1146/annurev.neuro.30.051606.094256

Lionello M, Aletta F, Kang J (2020) A systematic review of prediction models for the experience of urban soundscapes. Appl Acoust 170:107479

Montoya-Belmonte J, Navarro JM (2020) Long-term temporal analysis of psychoacoustic parameters of the acoustic environment in a university campus using a wireless acoustic sensor network. Sustainability 12:7406. https://doi.org/10.3390/su12187406

Moore BCJ, Glasberg BR, Baer T (1997) A model for the prediction of thresholds, loudness, and partial loudness. J Audio Eng Soc 45:224–240

San Martín R, Arana M, Ezcurra A, Valencia A (2019) Influence of recording technology on the determination of binaural psychoacoustic indicators in soundscape investigations. In: Proceedings Internoise, Madrid, Spain 2019

Schafer RM (1994) The soundscape: our sonic environment and the tuning of the world. Destiny Books

Schulte-Fortkamp B, Nitsch W (1999) On soundscapes and their meaning regarding noise annoyance measurements. In: Internoise 1999, Fort Lauderdale, Florida

Schultz TJ (1978) Synthesis of social surveys on noise annoyance. J Acoust Soc Am 64:377–405

Shaw E (1997) In: Gilkey RH, Anderson TR (eds) Binaural and spatial hearing in real and virtual environments. Lawrence Erlbaum, Mahwah, pp 25–48

Sottek R (1993) Modelle zur Signalverarbeitung im menschlichen Gehör. RWTH, Aachen

Stemplinger IM (1999) Beurteilung, Messung und Prognose der Globalen Lautheit von Geräuschimmissionen. Technische Universität München

Sun K, Botteldooren D, De Coensel B (2018) Realism and immersion in the reproduction of audio-visual recordings for urban soundscape evaluation. In: Proceedings of the 47th international congress and exposition on noise control engineering, Chicago, USA

vom Hövel H (1984) Zur Bedeutung der Übertragungseigenschaften des Aussenohrs sowie des binauralen Hörsystems bei gestörter Sprachübertragung. RWTH, Aachen

von Bismarck G (1974) Sharpness as an attribute of the timbre of steady sounds. Acta Acust Acust 30:159–172

World Health Organization (2014) Burden of disease from environmental noise. In: Quantification of healthy life years lost in Europe. WHO Regional Office for Europe, Bonn, ISBN 978 92 89002295

Yang M (2019) Prediction model of soundscape assessment based on semantic and acoustic/psychoacoustic factors. J Acoust Soc Am 145:1753. https://doi.org/10.1121/1.5101420

Zwicker E (1958) Über psychologische und methodische Grundlagen der Lautheit. Acustica 8:237–258

Zwicker E (1982) Psychoakustik. Springer

Zwicker E, Flottorp G, Stevens SS (1957) Critical band width in loudness summation. J Acoust Soc Am 29:548–557. https://doi.org/10.1121/1.1908963

Copyright information

© 2023 Springer Nature Switzerland AG

About this chapter

Cite this chapter

Genuit, K., Schulte-Fortkamp, B., Fiebig, A. (2023). Psychoacoustics in Soundscape Research. In: Schulte-Fortkamp, B., Fiebig, A., Sisneros, J.A., Popper, A.N., Fay, R.R. (eds) Soundscapes: Humans and Their Acoustic Environment. Springer Handbook of Auditory Research, vol 76. Springer, Cham. https://doi.org/10.1007/978-3-031-22779-0_6

DOI: https://doi.org/10.1007/978-3-031-22779-0_6

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-22778-3

Online ISBN: 978-3-031-22779-0

eBook Packages: Biomedical and Life Sciences (R0)