Abstract

Flower classification and recognition is an exciting research area because many flower classes share similar colour, shape and texture features. Most existing flower classification systems combine visual features extracted from flower images with supervised or unsupervised learning methods, and the classification accuracy of these approaches is moderate. Hence, there is a demand for a robust and accurate system that automatically classifies flower images at a larger scale. In this paper, a flower image classification method based on selected deep features and a Multiclass SVM is proposed, which uses the pre-trained Convolutional Neural Network (CNN) AlexNet as a feature extractor. First, flower image features are extracted from the fully connected layers of AlexNet, and the most informative features are then selected using the minimum Redundancy Maximum Relevance (mRMR) algorithm. Finally, a Multiclass Support Vector Machine (MSVM) classifier is used for classification. The proposed scheme minimizes the computationally intensive task of training the CNN and reduces the effort required to extract low-level features. Classification accuracies of 98.3% and 97.7% are observed on the KL University Flower (KLUF) dataset and the Flower 17 dataset, respectively. The proposed transfer learning based method outperforms existing deep learning based classification methods in terms of accuracy.


Keywords

- Convolutional Neural Network

- Deep learning

- Flower classification

- Support Vector Machine

- Minimum Redundancy Maximum Relevance

1 Introduction

There are numerous species of flowers around the world. Flowers are in great demand in the pharmaceutical, cosmetic, floriculture and food industries. Accurate identification of flowers is essential in applications such as flower patent analysis, field observation, plant identification, the floriculture industry and research on medicinal plants. Manual classification of flowers is time consuming, cumbersome and less accurate. Automating flower classification is therefore essential, but it is challenging because of the high similarity among classes [1]. Flower species show inter-class similarity and intra-class dissimilarity: deformation of flowers, lighting and climatic conditions, and variations in viewpoint cause large intra-class variations [2]. Because of these problems, flower recognition has become a challenging research topic in recent years.

In [3], it was noted that most hand-crafted approaches describe images using image gradients, textures and/or colours. As a result, there is a large gap between these low-level representations and the high-level semantics, which leads to low classification accuracy. Deep learning has been found to produce accurate image classification results.

It has been shown that features extracted from a pre-trained CNN can be used directly as a general-purpose image representation. Compared with traditional feature extraction methods, deep features extracted by deep learning methods represent the information content of massive image data effectively. In [4], the authors observed that deep learning techniques achieve a higher degree of accuracy than classical machine learning methods. Problems such as image classification and identification are efficiently tackled by deep learning approaches. Commonly used deep learning networks include the Stacked AutoEncoder [5], the Restricted Boltzmann Machine [6], the Deep Belief Network [7] and the Convolutional Neural Network (CNN). Deep CNNs [8] are the most effective of these for image classification.

In this paper, a CNN-based technique is presented that classifies flower images using deep features extracted from the fully connected layers of AlexNet [9]. This yields a 9192-dimensional feature vector (4096 features each from f6 and f7, and 1000 features from f8). Discriminative features are then selected by ranking them with the minimum Redundancy Maximum Relevance (mRMR) algorithm [10]. A Support Vector Machine (SVM) [11] with a linear kernel is employed to classify the flower images. The experiments are performed on the Flower 17 dataset of the Oxford Visual Geometry Group [12] and on the KLUF dataset [13]. The use of a deep CNN ensures robustness, eliminates the need for hand-crafted features and improves classification accuracy.

The rest of the paper is organized as follows: Sect. 2 highlights the related work on image classification, Sect. 3 contains the outline of the proposed method, Sect. 4 describes the datasets used and Sect. 5 provides experimental results. Finally, we conclude the paper in Sect. 6.

2 Related Work

Image classification is an active research topic in computer vision, and several approaches have been proposed to perform it automatically. Until the 1990s, image classification relied on hand-picked features. Computer vision and image processing based classification techniques combine several features extracted from images to improve classification accuracy. Commonly used features for image classification are colour, texture, shape and some statistical information.

In [14], the authors developed and tested a visual vocabulary representing colour, shape and texture to distinguish one flower from another. They found that combining these vocabularies yields better classification accuracy than any individual vocabulary. However, the accuracy reported by this approach, 71.76%, is quite low.

The authors of [15] developed an approach for learning the discriminative power-invariance trade-off for classification. Base descriptors were optimally combined in a kernel learning framework, giving better classification results. Although this approach can handle diverse classification problems, its classification accuracy remained limited.

An improved averaging combination (IAC) method based on simple averaging combination was proposed in [16]. Dominant set clustering was used to evaluate the discriminative power of features, and the most powerful features were added to the averaging combination one by one in descending order. The authors report that their method is faster; however, its classification accuracy is not satisfactory.

Conventional flower image classification methods lack robustness and accuracy because they rely on hand-crafted features that might not generalize: a technique that works on one flower dataset is not guaranteed to work on a different flower dataset.

Automated feature extraction is essential for improving classification accuracy, and deep learning techniques are very effective at extracting features from a large number of images. In [17], a flower classification model based on saliency detection and the VGG-16 deep neural network was proposed. Stochastic gradient descent was used to update the network weights, and transfer learning was used to optimize the model. A classification accuracy of 91.9% was reported on the Oxford Flower-102 dataset. In [18], AlexNet, GoogleNet, VGG16, DenseNet and ResNet were compared for classification of the Kaggle flowers dataset. VGG16 achieved the highest classification accuracy of 93.5%, but the time complexity of this method was high. In [19], a generative adversarial network was combined with ResNet-101 transfer learning, and stochastic gradient descent was used to optimize the training of the flower classifier. Using the Oxford Flower-102 dataset, the authors reported an accuracy of 90.7%.

The authors of [20] used the f6 and f7 layers of AlexNet and the f6 layer of the VGG16 model for deep feature extraction. Features were selected with the mRMR feature selection algorithm, and an SVM classifier was employed to classify the flower images, with a reported classification accuracy of 96.1%. This approach needs more time to extract deep features because it uses two pre-trained networks, i.e. AlexNet and VGG16. In [21], the authors combined an improved AlexNet Convolutional Neural Network (CNN) with Histogram of Oriented Gradients (HOG) and Local Binary Pattern (LBP) descriptors for feature extraction, and used Principal Component Analysis (PCA) for dimension reduction. Experiments on the Corel-1000, OT and FP datasets yielded a classification accuracy of 96%.

In [22], the authors extracted deep CNN features using VGG19, and handcrafted features using the SIFT, SURF and ORB algorithms and the Shi-Tomasi corner detector. The deep and handcrafted features were then fused, and the fused features were classified using several machine learning classifiers, i.e. Gaussian Naïve Bayes, Decision Tree, Random Forest and eXtreme Gradient Boosting (XGBClassifier). Random Forest on the fused features provided the highest classification accuracy. The Caltech-101 dataset was used by the authors.

In [23], a hybrid classification approach for COVID-19 images was proposed that combines CNNs with a swarm-based feature selection algorithm (the Marine Predators Algorithm) to select the most relevant features. Promising classification accuracies were obtained, and the authors concluded that their approach could be applicable to other image classes as well.

The authors of [24] compared the performance of a CNN-based model using VGG16 and Inception with a traditional image classification model using Oriented FAST and Rotated BRIEF (ORB) features and an SVM. Transfer learning was used to improve the accuracy of medical image classification, and the experiments using transfer learning achieved satisfactory results on chest X-ray images. In [25], the authors used data augmentation for flower images and the Softmax function for classification, and observed a classification accuracy of 92%.

An attractive attribute of pre-trained CNNs as feature extractors is their robustness, and the network does not need to be retrained. The motivation for using a deep CNN model for flower classification is that feature learning in CNNs is highly automated, which avoids the complexity of extracting the various features needed by traditional classifiers. Hence, we are motivated to use a deep feature extraction approach for flower classification. AlexNet [9], ResNet [26], GoogleNet [27] and VGG 16 [28] are some of the available pre-trained networks for image classification. AlexNet [9] was pre-trained on one million images, so its feature values are simple, whereas the other CNNs were trained on more than 15 million images, giving rise to more complex feature values at the fully connected layers. In AlexNet, the fully connected layers provide discriminant features suitable for an SVM classifier. This is the reason for selecting AlexNet as the feature extractor in the proposed work.

The review of related work shows that deep learning based techniques tackle the image classification problem efficiently, but there is scope for improving classification accuracy. In this paper, we present a simple approach for flower classification using deep features extracted from the fully connected layers of AlexNet. Feature ranking and selection are performed to avoid redundant features, and an MSVM is then used to classify the flower images. The proposed work is presented below.

3 Proposed Work

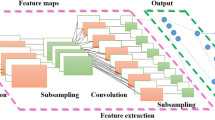

The proposed work consists of three stages: deep feature extraction using AlexNet [9], feature ranking and selection using the mRMR algorithm [10], and classification using an MSVM classifier [11]. The block diagram of the proposed flower classification system is shown in Fig. 1.

3.1 Feature Extraction Using AlexNet

AlexNet has eight layers: the first five are convolutional layers and the remaining three are fully connected layers. The rectified linear unit (ReLU) activation is used in each of these layers except the output layer, which speeds up training. Dropout layers in AlexNet reduce the problem of overfitting. The network was trained on the ImageNet dataset with one thousand image classes.
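The two mechanisms mentioned above can be illustrated with a small, stdlib-only Python sketch. It is not taken from any AlexNet implementation: `relu` and `inverted_dropout` are hypothetical helper names, and the sketch uses inverted dropout (surviving activations scaled by 1/(1 − p) during training), which is what most modern frameworks do. The original AlexNet instead kept all units at test time and multiplied their outputs by 0.5.

```python
import random


def relu(values):
    """Rectified linear unit applied element-wise: max(0, v)."""
    return [max(0.0, v) for v in values]


def inverted_dropout(values, p, rng):
    """Training-time dropout: zero each activation with probability p and
    scale the kept ones by 1 / (1 - p), so the expected value is unchanged.
    At test time the activations would be passed through untouched."""
    if not 0.0 <= p < 1.0:
        raise ValueError("p must be in [0, 1)")
    keep = 1.0 - p
    return [v / keep if rng.random() >= p else 0.0 for v in values]


rng = random.Random(0)  # fixed seed so the dropout mask is reproducible
hidden = relu([-2.0, -0.5, 0.0, 1.5, 3.0])
print(hidden)  # [0.0, 0.0, 0.0, 1.5, 3.0]
print(inverted_dropout(hidden, 0.5, rng))  # each entry is 0.0 or doubled
```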

In AlexNet, the size of each input image is 227 × 227 × 3. The first convolutional layer has 96 filters of size 11 × 11 with a stride of 4. The size of the output feature map is calculated as

\[ \text{Output feature map size} = \left\lfloor \frac{\text{Input image size} - \text{Filter size}}{\text{Stride}} \right\rfloor + 1 \]

Hence, since (227 − 11)/4 + 1 = 55, the output feature map is 55 × 55 × 96.

Five convolution operations with different numbers and sizes of filters and strides are performed. At the end of the fifth convolution, the feature map is 13 × 13 × 256.
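The feature map sizes quoted above can be checked with the output-size formula. The sketch below extends it with a padding term, floor((W − F + 2P)/S) + 1, and traces a 227 × 227 × 3 input through the convolution and max-pooling layers. The padding and pooling settings (3 × 3 max pooling with stride 2, padding 2 for conv2 and 1 for conv3 to conv5) are assumptions taken from the original AlexNet configuration [9]; some library implementations use different filter counts, and the local response normalization layers are omitted because they do not change the shape.

```python
def conv_output_size(size, kernel, stride=1, padding=0):
    """Spatial output size of a convolution or pooling window:
    floor((size - kernel + 2 * padding) / stride) + 1."""
    return (size - kernel + 2 * padding) // stride + 1


# (layer, number of filters or None for pooling, kernel, stride, padding).
# Assumed settings of the original AlexNet [9].
ALEXNET_LAYERS = [
    ("conv1", 96, 11, 4, 0),
    ("pool1", None, 3, 2, 0),
    ("conv2", 256, 5, 1, 2),
    ("pool2", None, 3, 2, 0),
    ("conv3", 384, 3, 1, 1),
    ("conv4", 384, 3, 1, 1),
    ("conv5", 256, 3, 1, 1),
]


def trace_shapes(size=227, channels=3, layers=ALEXNET_LAYERS):
    """Return (layer, height, width, channels) after each layer for a square input."""
    shapes = []
    for name, filters, kernel, stride, padding in layers:
        size = conv_output_size(size, kernel, stride, padding)
        if filters is not None:  # pooling keeps the number of channels
            channels = filters
        shapes.append((name, size, size, channels))
    return shapes


for name, height, width, depth in trace_shapes():
    print(f"{name}: {height} x {width} x {depth}")
# conv1: 55 x 55 x 96
# pool1: 27 x 27 x 96
# conv2: 27 x 27 x 256
# pool2: 13 x 13 x 256
# conv3: 13 x 13 x 384
# conv4: 13 x 13 x 384
# conv5: 13 x 13 x 256
```

Applying the final 3 × 3, stride-2 max pooling to the 13 × 13 × 256 map gives 6 × 6 × 256 = 9216 values, which form the input of the fully connected layer f6.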

The number of filters generally increases with the depth of the network (96, 256, 384, 384 and 256 in the five convolutional layers), resulting in more features, while the filter size decreases (11 × 11, then 5 × 5, then 3 × 3). The spatial size of the feature maps shrinks with depth because of the stride of the first convolution and the max-pooling layers. The fully connected layers f6 and f7 have 4096 neurons each, and the last layer, f8, is the output layer with 1000 neurons.

In the proposed approach, the deep CNN features extracted from the fully connected layers of AlexNet are used. The concatenated feature vector has 9192 elements (4096 features each from f6 and f7, and 1000 features from f8). Reducing the number of features saves computation cost and time; hence, the mRMR algorithm [10] is used in this work to select distinct features by ranking them, as explained in the following subsection. The proposed method is simple, and no pre-processing of the input images is needed.
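The sketch below shows one way to keep track of where each element of the 9192-dimensional vector comes from, so that a ranked feature list can later be reported per layer (for example the 10 + 10 + 800 split reported in Sect. 5). The helper names and the idea of applying a fixed quota per layer are illustrative assumptions; the paper does not specify how its per-layer counts were obtained, and no real activations are computed here.

```python
# Fully connected layers of AlexNet used as features, in concatenation order.
LAYER_DIMS = [("f6", 4096), ("f7", 4096), ("f8", 1000)]


def layer_slices(layer_dims=LAYER_DIMS):
    """Return {layer: (start, end)} slices in the concatenated vector and its length."""
    slices, start = {}, 0
    for name, dim in layer_dims:
        slices[name] = (start, start + dim)
        start += dim
    return slices, start


def concatenate(activations, layer_dims=LAYER_DIMS):
    """Concatenate per-layer activation lists (f6, then f7, then f8) into one vector."""
    vector = []
    for name, dim in layer_dims:
        values = activations[name]
        if len(values) != dim:
            raise ValueError(f"{name}: expected {dim} values, got {len(values)}")
        vector.extend(values)
    return vector


def layer_of(index, slices):
    """Name of the layer that a global feature index belongs to."""
    for name, (start, end) in slices.items():
        if start <= index < end:
            return name
    raise IndexError(index)


def select_with_quotas(ranking, quotas, layer_dims=LAYER_DIMS):
    """Hypothetical helper: walk a ranked list of global feature indices
    (best first) and keep at most quotas[layer] indices from each layer."""
    slices, _ = layer_slices(layer_dims)
    kept = {name: [] for name in quotas}
    for index in ranking:
        name = layer_of(index, slices)
        if name in kept and len(kept[name]) < quotas[name]:
            kept[name].append(index)
    return kept


slices, total = layer_slices()
print(total)   # 9192
print(slices)  # {'f6': (0, 4096), 'f7': (4096, 8192), 'f8': (8192, 9192)}

# A made-up ranking (highest index first) stands in for an mRMR ranking.
kept = select_with_quotas(range(total - 1, -1, -1), {"f6": 10, "f7": 10, "f8": 800})
print({name: len(idx) for name, idx in kept.items()})  # {'f6': 10, 'f7': 10, 'f8': 800}
```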

3.2 Feature Ranking and Selection: Minimum Redundancy Maximum Relevance Algorithm

Using all the available features in the model leads to drawbacks such as high computation cost, over-fitting and difficulty in understanding the model. Therefore, distinct features should be selected, which leads to faster computation and more accurate classification of flowers.

In this paper, a filter method called mRMR [10] is used because of its computational efficiency and its ability to remove redundant features while keeping the features that are relevant to the model.

The mRMR algorithm treats each attribute as a separate random variable and uses the mutual information \(I(X,Y)\) between two such variables to measure their level of similarity:

\[ I(X,Y) = \sum_{x}\sum_{y} p(x,y)\,\log\frac{p(x,y)}{p_1(x)\,p_2(y)} \tag{1} \]

where \(p(x,y)\) represents the joint probability distribution function of X and Y, and \(p_1(x)\) and \(p_2(y)\) represent the marginal probability distribution functions of the random variables X and Y, respectively.

To simplify the computation, each attribute \(f_i\) is represented as the vector of its values over the N samples, \(f_i = [f_i^1, f_i^2, f_i^3, \ldots, f_i^N]\), and is treated as a discrete random variable. The mutual information between the i-th and j-th attributes is then \(I(f_i, f_j)\), where i, j = 1, 2, …, d and d is the number of features.
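As an illustration of the mutual information definition above, the following sketch computes I(X, Y) for two discrete variables from paired samples, using empirical frequencies for p(x, y), p1(x) and p2(y) and the natural logarithm, so the result is in nats. The function name is hypothetical. CNN activations are continuous, so in practice they would first have to be discretized (for example by binning); that step, and the choice of bins, is an assumption not covered here.

```python
import math
from collections import Counter


def mutual_information(x, y):
    """Plug-in estimate of I(X, Y) in nats for two equal-length sequences
    of discrete values, using empirical joint and marginal frequencies."""
    if len(x) != len(y) or not x:
        raise ValueError("x and y must be non-empty and of equal length")
    n = len(x)
    joint = Counter(zip(x, y))       # counts for p(x, y)
    px, py = Counter(x), Counter(y)  # counts for p1(x) and p2(y)
    mi = 0.0
    for (a, b), count in joint.items():
        p_ab = count / n
        mi += p_ab * math.log(p_ab / ((px[a] / n) * (py[b] / n)))
    return mi


print(mutual_information([0, 0, 1, 1], [0, 0, 1, 1]))  # log 2, about 0.693
print(mutual_information([0, 0, 1, 1], [0, 1, 0, 1]))  # 0.0 (independent)
```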

Let S be the set of selected features and |S| the number of selected features. The first condition for selecting the best features, called the minimum redundancy condition, is given by

\[ \min W_I, \qquad W_I = \frac{1}{|S|^2} \sum_{f_i, f_j \in S} I(f_i, f_j) \tag{2} \]

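The other condition, called the maximum relevance condition, is given by

\[ \max V_I, \qquad V_I = \frac{1}{|S|} \sum_{f_i \in S} I(h, f_i) \tag{3} \]

where h denotes the target class variable.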

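The two conditions are commonly combined in one of two simple ways, the mutual information difference (MID) and mutual information quotient (MIQ) criteria:

\[ \max \left( V_I - W_I \right) \tag{4} \]

\[ \max \left( V_I / W_I \right) \tag{5} \]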

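A search algorithm is required to select the best set of features. First, the feature with the highest relevance is selected according to Eq. (3). At each subsequent step, the candidate feature with the highest mRMR score, i.e. the best trade-off between relevance to the class variable h and mean redundancy with the features already selected, is added to the selected feature set S. For the MID criterion this step is

\[ \max_{f_j \notin S} \left[ I(h, f_j) - \frac{1}{|S|} \sum_{f_i \in S} I(f_j, f_i) \right] \]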
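The incremental search can be sketched in Python as follows. This is a minimal, unoptimized illustration of the MID variant of mRMR on already-discretized features, written for this explanation rather than taken from the implementation used in the paper; `mrmr_mid`, `mid_score` and their arguments are hypothetical names. It recomputes pairwise mutual information at every step, which would need caching (or a dedicated mRMR library) for 9192 features.

```python
import math
from collections import Counter


def mutual_information(x, y):
    """Plug-in estimate of I(X, Y) in nats for two discrete sequences."""
    n = len(x)
    joint, px, py = Counter(zip(x, y)), Counter(x), Counter(y)
    return sum(
        (c / n) * math.log((c / n) / ((px[a] / n) * (py[b] / n)))
        for (a, b), c in joint.items()
    )


def mid_score(j, selected, features, relevance):
    """MID score: relevance I(h, f_j) minus mean redundancy with the selected set S."""
    redundancy = sum(mutual_information(features[j], features[i]) for i in selected)
    return relevance[j] - redundancy / len(selected)


def mrmr_mid(features, labels, k):
    """Greedy mRMR selection with the MID criterion.

    features: list of d feature columns, each a list of N discrete values
    labels:   list of N class labels (the class variable h)
    Returns the indices of the k selected features in selection order."""
    relevance = [mutual_information(f, labels) for f in features]
    # First feature: maximum relevance (ties go to the lowest index).
    selected = [max(range(len(features)), key=lambda j: relevance[j])]
    remaining = [j for j in range(len(features)) if j != selected[0]]
    while remaining and len(selected) < k:
        best = max(remaining, key=lambda j: mid_score(j, selected, features, relevance))
        selected.append(best)
        remaining.remove(best)
    return selected


labels = [0, 0, 1, 1, 2, 2, 3, 3]
high_bit = [0, 0, 0, 0, 1, 1, 1, 1]       # informative about the label
duplicate = list(high_bit)                 # same information, fully redundant
noisy_low_bit = [0, 0, 1, 1, 0, 0, 1, 0]   # less relevant but complementary
print(mrmr_mid([high_bit, duplicate, noisy_low_bit], labels, k=3))  # [0, 2, 1]
```

The duplicate column is ranked last even though it is individually as relevant as the first one, because its redundancy with the already selected feature cancels its relevance. Replacing the difference in `mid_score` with a quotient would give the MIQ variant.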

3.3 Multi-class Support Vector Machine

The Support Vector Machine is an effective tool that is widely used in image classification [11]. The basic idea of an SVM classifier is to find the separating hyperplane between two classes that has the largest margin to the training samples closest to it. SVM was originally formulated as a binary classifier. Multiclass classification with SVMs is done by breaking the multiclass problem into smaller binary sub-problems, using either the one-versus-one or the one-versus-all strategy. One-versus-one classifiers separate one class from another single class, whereas one-versus-all classifiers separate one class from all other classes.

Let C1, C2,…, Cn be n number of classes.

Let S1, S2, …, Sm be the support vectors of the above classes.

In general,

where each class Ci is represented by a set of support vectors Sj, and the corresponding classifier separates the i-th class from all other classes.

The discriminant features obtained in the second stage help the SVM to classify the flower images.
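To make the one-versus-one and one-versus-all decompositions described in this subsection concrete, the sketch below counts how many binary SVMs each strategy needs for the two datasets and shows how their decision values w·x + b would be combined into a single class label. The weight vectors and biases in the example are made-up numbers rather than trained SVMs, the function names are hypothetical, and which decomposition the proposed system uses is not assumed here.

```python
def num_binary_classifiers(n_classes, strategy):
    """Number of binary SVMs needed for n_classes under each decomposition."""
    if strategy == "one-vs-all":
        return n_classes
    if strategy == "one-vs-one":
        return n_classes * (n_classes - 1) // 2
    raise ValueError(f"unknown strategy: {strategy}")


def decision_value(w, b, x):
    """Signed linear SVM decision value w.x + b."""
    return sum(wk * xk for wk, xk in zip(w, x)) + b


def predict_one_vs_all(x, models):
    """models[i] = (w_i, b_i) separates class i from all other classes;
    the predicted class is the one with the largest decision value."""
    scores = [decision_value(w, b, x) for w, b in models]
    return max(range(len(scores)), key=scores.__getitem__)


def predict_one_vs_one(x, pairwise, n_classes):
    """pairwise[(i, j)] = (w, b); a non-negative decision value votes for i,
    a negative one for j. The class with the most votes wins."""
    votes = [0] * n_classes
    for (i, j), (w, b) in pairwise.items():
        votes[i if decision_value(w, b, x) >= 0 else j] += 1
    return max(range(n_classes), key=votes.__getitem__)


for n in (17, 30):  # Flower 17 and KLUF
    print(n, num_binary_classifiers(n, "one-vs-all"), num_binary_classifiers(n, "one-vs-one"))
# 17 17 136
# 30 30 435

ova = [([1.0, 0.0], 0.0), ([0.0, 1.0], 0.0), ([-1.0, -1.0], 0.0)]
print(predict_one_vs_all([2.0, 0.5], ova))  # 0
```

One-versus-all needs far fewer binary classifiers, while each one-versus-one classifier is trained on only two classes and therefore on fewer samples.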

4 Dataset

We have used publicly available Flower 17 [12] and KLUF [13] datasets in this work.

4.1 Flower 17 Dataset [12]

This dataset consists of 1360 flower images of 17 categories (buttercup, colts’ foot, daffodil, daisy, dandelion, fritillary, iris, pansy, sunflower, windflower, snowdrop, lilyvalley, bluebell, crocus, tigerlily, tulip, and cowslip). There are 80 images in each category.

The FLOWERS17 dataset from the Visual Geometry Group at the University of Oxford is a challenging dataset. Its images show large variations in scale, pose and illumination, and the dataset has high intra-class variation as well as inter-class similarity. The flower categories were deliberately chosen to have some ambiguity in each aspect. For example, some classes cannot be distinguished on colour alone (e.g. dandelion and buttercup), and others cannot be distinguished on shape alone (e.g. daffodils and windflower). Buttercups and daffodils get confused by colour, colts' feet and dandelions by shape, and buttercups and irises by texture. The variation within classes and the small differences between categories make the dataset challenging, so handcrafted feature extraction techniques are insufficient for describing these images. Sample images from the Flower 17 dataset are shown in Fig. 2.

Fig. 2. Sample images from the Flower 17 dataset [12]

4.2 KLUF Dataset [13]

The KL University Flower (KLUF) dataset consists of 3000 images from 30 flower categories, with 100 images in each category. Sample images from a few categories of this dataset are shown in Fig. 3.

Fig. 3. Sample images from the KLUF dataset [13]

5 Experimental Results

Flower image classification is evaluated using different combinations of features extracted from the fully connected layers f6, f7 and f8 of AlexNet. The convolutional layers provide low-level features, whereas the fully connected layers provide high-level features that are useful for flower image classification; hence, features from the fully connected layers are used. f6 and f7 provide 4096 features each and f8 provides 1000 features, giving 9192 features in total. As mentioned in the previous section, so many features increase the computational burden and cause storage problems. Therefore, feature selection is performed, and the selected features are used to train the multiclass SVM classifier. Classification accuracy is tested for various combinations of the number of features taken from f6, f7 and f8. The number of features taken from f6 and f7 has almost no effect on classification accuracy. The classification results show that 800 features from f8 are better suited to the flower classification problem than features from f6 and f7. Deep features from the f8 layer of the pre-trained AlexNet are sufficient to classify the flower images efficiently, so there is no need to use features from other pre-trained networks. The novelty of our method lies in improving accuracy by integrating deep features with a selection criterion followed by multiclass classification.

Classification accuracy was compared for 75–25% and 60–40% training–testing splits of the Flower 17 dataset.
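A per-class split keeps all 17 flower classes equally represented in the training and testing sets. The sketch below uses a hypothetical helper, `stratified_split`, and is not necessarily how the experiments were split; it shows the image counts that the 75–25% and 60–40% splits imply for Flower 17 (17 classes × 80 images).

```python
import random
from collections import defaultdict


def stratified_split(labels, train_fraction, seed=0):
    """Return (train_indices, test_indices) such that every class contributes
    round(train_fraction * class_size) images to the training set."""
    by_class = defaultdict(list)
    for index, label in enumerate(labels):
        by_class[label].append(index)
    rng = random.Random(seed)  # fixed seed: the split is reproducible
    train, test = [], []
    for label in sorted(by_class):
        indices = list(by_class[label])
        rng.shuffle(indices)
        cut = round(train_fraction * len(indices))
        train.extend(indices[:cut])
        test.extend(indices[cut:])
    return train, test


flower17_labels = [c for c in range(17) for _ in range(80)]  # 17 classes x 80 images
for fraction in (0.75, 0.60):
    train, test = stratified_split(flower17_labels, fraction)
    print(fraction, len(train), len(test))
# 0.75 1020 340
# 0.6 816 544
```

For KLUF (30 classes × 100 images) the same helper gives 2250/750 and 1800/1200 training/testing images.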

Table 1 shows the effect of the number of features selected from the fully connected layers of AlexNet on classification accuracy. These results were obtained using 5-fold cross-validation.
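For reference, a stratified 5-fold partition of the kind described above can be sketched as follows. The helper `stratified_k_fold` is hypothetical and deals each class's images to the folds in turn without shuffling; the exact fold construction used in the experiments is not stated in the paper.

```python
from collections import defaultdict


def stratified_k_fold(labels, k=5):
    """Split sample indices into k (train, test) pairs.

    Samples of each class are dealt to the folds in turn, so every fold gets
    (almost) the same number of images per class. The order is not shuffled
    here; in practice the indices would usually be shuffled within each
    class first."""
    fold_of = []
    seen = defaultdict(int)
    for label in labels:
        fold_of.append(seen[label] % k)
        seen[label] += 1
    folds = []
    for f in range(k):
        test = [i for i, g in enumerate(fold_of) if g == f]
        train = [i for i, g in enumerate(fold_of) if g != f]
        folds.append((train, test))
    return folds


labels = [c for c in range(17) for _ in range(80)]  # Flower 17
for train, test in stratified_k_fold(labels):
    print(len(train), len(test))  # 1088 272 for each of the 5 folds
```

Each image is used for testing exactly once, and every Flower 17 fold holds 16 test images per class.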

With the proposed approach, the highest classification accuracies of 97.7% and 97.8% were obtained on the Flower 17 and KLUF datasets, respectively, when 800 features were selected in total. The experimental results show that the features obtained from f8 are the most important for improving classification accuracy: selecting more features from f8 gives better accuracy, whereas reducing the number of features from f7 and f6 has very little effect. It is also noticed that splitting the datasets into 75% training and 25% testing images gives better accuracy than a 60% training and 40% testing split, as given in Table 2.

The classification accuracy for each flower type is given in Table 3. Comparing the confusion matrices for different numbers of features shows that most images of every flower class were classified correctly, except for class 14 (crocus). This class has a very wide intra-class variation, which is why more features from f8, f7 and f6 are required to classify it correctly.
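Per-class accuracy of the kind reported in Table 3 can be read off a confusion matrix: for each true class, the number of correctly classified test images divided by the number of test images of that class. The 3-class matrix in the example is invented for illustration and is not taken from the paper's results, and the function names are hypothetical.

```python
def per_class_accuracy(confusion):
    """confusion[i][j] = number of test images of true class i predicted as class j.
    Returns the diagonal entry divided by the row total for each class."""
    accuracies = []
    for i, row in enumerate(confusion):
        total = sum(row)
        accuracies.append(row[i] / total if total else float("nan"))
    return accuracies


def overall_accuracy(confusion):
    """Fraction of all test images that lie on the diagonal."""
    correct = sum(confusion[i][i] for i in range(len(confusion)))
    return correct / sum(sum(row) for row in confusion)


def hardest_classes(confusion, names, n=1):
    """Names of the n classes with the lowest per-class accuracy."""
    ranked = sorted(zip(per_class_accuracy(confusion), names))
    return [name for _, name in ranked[:n]]


confusion = [
    [20, 0, 0],
    [1, 18, 1],
    [0, 4, 16],
]
print(per_class_accuracy(confusion))  # [1.0, 0.9, 0.8]
print(overall_accuracy(confusion))    # 0.9
print(hardest_classes(confusion, ["daisy", "iris", "crocus"]))  # ['crocus']
```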

The proposed classification results are compared with a few existing state-of-the-art approaches in Table 4. Nilsback et al. [14] obtained a much lower classification accuracy of 71.76% using colour, shape and texture features of flower images. The authors of [15] obtained an improved classification accuracy of 82.55% by learning the best possible trade-off for classification from base features with different levels of invariance (such as no invariance, rotation invariance, scale invariance and affine invariance) and combining the corresponding base kernels. However, the feature selection in this method was poor.

Wei et al. [16] used hand-picked features: the HOG shape descriptor, Bag of SIFT, Local Binary Patterns, the Gist descriptor, the self-similarity descriptor, Gabor filters and gray-value histograms. Clustering, ranking and averaging combination of these features yielded a classification accuracy of 86.1%. Although this accuracy is satisfactory, the approach is very cumbersome because it relies on manual feature extraction.

In [3], the authors used first-, second- and third-layer semantic modelling, which provided an accuracy of 87.06%, but the accuracy did not increase when more layers were added.

In [20], 800 features from AlexNet {fc6 + fc7} and VGG16 {fc6} are needed to achieve a classification accuracy of 96.1%.

The proposed deep feature based classification with feature ranking and selection outperforms the approaches mentioned above, giving accuracies of 98.3% (KLUF) and 97.7% (Flower 17) with 820 features (10 from AlexNet f6, 10 from f7 and 800 from f8). Selecting more features from the f8 layer improves classification accuracy.

The feature selection strategy in the proposed work yields discriminative features, which lead to better classification accuracy with the multiclass SVM classifier.

Figure 4 compares the classification accuracy of existing approaches and the proposed method.

6 Conclusion

In this paper, an accurate and efficient flower classification system based on deep feature extraction using AlexNet is proposed. To select relevant features, feature ranking is performed with the mRMR algorithm. The fully connected layer f8 is found to provide more prominent image features than f6 and f7 for the flower classification problem. A multiclass SVM classifier is then used for classification. The classification accuracy of the proposed method is studied for different numbers of features selected from the fully connected layers. Classification accuracies of 97.7% and 98.3% were observed on the Flower 17 and KLUF datasets, respectively, which are considerably higher than those of the existing methods compared. The proposed approach is efficient and can be useful in flower patent search as well as in the identification of flowers for medicinal use.

The main contribution of this research is a method that combines a deep architecture with a conventional classification algorithm and a suitable feature selection algorithm to achieve higher accuracy than existing works. The proposed method could also be applied to other applications that share similar challenges with flower classification; evaluating its performance on different datasets is left for future work.

The classification accuracy of a few classes is lower, which reduces the overall accuracy; this is a limitation of the proposed method. Combining AlexNet f8 features with features extracted from other deep CNN models may improve the classification accuracy for all flower classes in the dataset.

References

[1] Mukane, S.M., Kendule, J.A.: Flower classification using neural network based image processing. IOSR J. Electron. Commun. Eng. (IOSR-JECE) 7, 80–85 (2013)

[2] Guru, D.S., Sharath, Y.H., Manjunath, S.: Texture features and KNN in classification of flower images. IJCA Spec. Issue ‘Recent Trends Image Process. Pattern Recogn.’ RTIPPR 21–29 (2010)

[3] Zhang, C., Li, R., Huang, Q., Tian, Q.: Hierarchical deep semantic representation for visual categorization. Neurocomputing 257, 88–96 (2017)

[4] Zhu, L., Li, Z., Li, C., Wu, J., Yue, J.: High performance vegetable classification from images based on AlexNet deep learning model. Int. J. Agric. Biol. Eng. 11, 217–223 (2018). https://www.ijabe.org

[5] Liu, G., Bao, H., Han, B.: A stacked autoencoder-based deep neural network for achieving gearbox fault diagnosis. Adv. Math. Methods Pattern Recogn. Appl. 2018, 1–10 (2018). https://doi.org/10.1155/2018/5105709

[6] Upadhya, V., Sastry, P.S.: An overview of restricted Boltzmann machines. J. Indian Inst. Sci. 99(2), 225–236 (2019). https://doi.org/10.1007/s41745-019-0102-z

[7] Hinton, G.E.: Deep belief networks. Scholarpedia 4, 5947 (2009). https://doi.org/10.4249/scholarpedia.5947

[8] LeCun, Y., Bengio, Y., Hinton, G.: Deep learning. Nature 521, 436–444 (2015)

[9] Krizhevsky, A., Sutskever, I., Hinton, G.E.: ImageNet classification with deep convolutional neural networks. In: Advances in Neural Information Processing Systems (2012)

[10] Peng, H., Long, F., Ding, C.: Feature selection based on mutual information: criteria of max-dependency, max-relevance, and min-redundancy. IEEE Trans. Pattern Anal. Mach. Intell. 27(8), 1226–1238 (2005)

[11] Arun Kumar, M., Gopal, M.: A hybrid SVM based decision tree. Pattern Recogn. 43, 3977–3987 (2010)

[12] Visual Geometry Group: Flower Datasets Home Page (2009). http://www.robots.ox.ac.uk/~vgg/data/flowers/

[13] Prasad, M.V.D., et al.: An efficient classification of flower images with convolutional neural networks. Int. J. Eng. Technol. 7(1.1), 384–391 (2018)

[14] Nilsback, M.-E., Zisserman, A.: A visual vocabulary for flower classification. In: Proceedings of CVPR, pp. 1447–1454 (2006)

[15] Varma, M., Ray, D.: Learning the discriminative power-invariance trade-off. In: Proceedings of ICCV, pp. 1–8 (2007)

[16] Wei, Y., Wang, W., Wang, R.: An improved averaging combination method for image and object recognition. In: Proceedings of ICMEW, pp. 1–6 (2015)

[17] Rongxin, L., Li, Z., Liu, J.J.: Flower classification and recognition based on significance test and transfer learning. In: 2021 IEEE International Conference on Consumer Electronics and Computer Engineering (ICCECE 2021) (2021)

[18] Cengıl, E., Çinar, A.: Multiple classification of flower images using transfer learning. In: 2019 International Artificial Intelligence and Data Processing Symposium (IDAP), pp. 1–6 (2019). https://doi.org/10.1109/IDAP.2019.8875953

[19] Li, X., Lv, R., Yin, Y., Xin, K., Liu, Z., Li, Z.: Flower image classification based on generative adversarial network and transfer learning. In: IOP Conference Series: Earth and Environmental Science, vol. 647, p. 012180 (2021). https://doi.org/10.1088/1755-1315/647/1/012180

[20] Cıbuk, M., Budak, U., Guo, Y., Ince, M.C., Sengur, A.: Efficient deep features selections and classification for flower species recognition. Meas. J. Int. Meas. Confed. 137, 7–13 (2019). https://doi.org/10.1016/j.measurement.2019.01.041

[21] Shakarami, A., Tarrah, H.: An efficient image descriptor for image classification and CBIR. Int. J. Light Electron. Opt. 214, 164833 (2020). https://doi.org/10.1016/j.ijleo.2020.164833

[22] Bansal, M., Kumar, M., Sachdeva, M., Mittal, A.: Transfer learning for image classification using VGG19: Caltech-101 image data set. J. Ambient Intell. Humaniz. Comput. 2021, 1–12 (2021). https://doi.org/10.1007/s12652-021-03488-z

[23] Talaat, A., Yousri, D., Ewees, A., Al-qaness, M.A.A., Damasevicius, R., Elaziz, M.E.A.: COVID-19 image classification using deep features and fractional-order marine predators’ algorithm. Sci. Rep. 10(1), 15364 (2020). https://doi.org/10.1038/s41598-020-71294-2

[24] Yadav, S.S., Jadhav, S.M.: Deep convolutional neural network based medical image classification for disease diagnosis. J. Big Data 6(1), 1–18 (2019). https://doi.org/10.1186/s40537-019-0276-2

[25] Tian, M., Chen, H., Wang, Q.: Flower identification based on deep learning. J. Phys. Conf. Ser. 1237, 022060 (2019). https://doi.org/10.1088/1742-6596/1237/2/022060

[26] He, K., Zhang, X., Ren, S., Sun, J.: Deep residual learning for image recognition. In: 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), pp. 770–778 (2016). https://doi.org/10.1109/CVPR.2016.90

[27] Szegedy, C., et al.: Going deeper with convolutions. In: 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), pp. 1–9 (2015). https://doi.org/10.1109/CVPR.2015.7298594

[28] Simonyan, K., Zisserman, A.: Very deep convolutional networks for large-scale image recognition. In: International Conference on Learning Representations (2015)


Copyright information

© 2022 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper

Banwaskar, M.R., Rajurkar, A.M., Guru, D.S. (2022). Selected Deep Features and Multiclass SVM for Flower Image Classification. In: Guru, D.S., Y. H., S.K., K., B., Agrawal, R.K., Ichino, M. (eds) Cognition and Recognition. ICCR 2021. Communications in Computer and Information Science, vol 1697. Springer, Cham. https://doi.org/10.1007/978-3-031-22405-8_28


DOI: https://doi.org/10.1007/978-3-031-22405-8_28


Publisher Name: Springer, Cham

Print ISBN: 978-3-031-22404-1

Online ISBN: 978-3-031-22405-8
