Abstract

Over the last decade, the fintech industry has witnessed fast growth, where online or digital lending has appeared as an alternative and flexible form of financing in addition to popular forms of financing, such as term loans, and expedited the entire lending process. High-tech online platforms have provided better user experiences and attracted more consumers and SMBs (small and medium-sized businesses). Partnerships and collaborations have also been forged between financial startups and incumbents or traditional lending institutions to further differentiate products and services to niche markets and accelerate the way of innovations. With the evolving landscape of the fintech sector, risk management tools such as credit scoring have become essential to assess credit risks such as default or delinquency based on debtor credit history or status. Many fintech companies are relatively young and sometimes serve only a small portfolio with a relatively scarce delinquency history. How can they predict default risk when making financing decisions on new applications? In this paper, we document a framework of leveraging supplemental samples of consumer or business credit information from the credit bureau that can be augmented with fintech applications for credit scoring based on theoretical and empirical studies of credit application data from a Canadian online auto leasing corporation. We also provide and compare credit scoring modeling solutions utilizing interpretable AI and machine learning methods such as logistic regression, decision tree, neural network, and XBGoost.

Access provided by Autonomous University of Puebla. Download conference paper PDF

Similar content being viewed by others

Keywords

1 Introduction

Between 2010 and 2020 about 20 fintech hubs have been formulated around the globe with strong growth trends and activities or investments [1]. Canada has become home to several of the leading hubs benefiting from good quality of national regulation and mature business environment, attracting a strong talent pool and embracing technology innovation [2]. Consumers and SMBs are now taking advantage of innovation capabilities of fintechs with more flexible financing terms and programs, accelerated customer experiences from digital banking and fast and transparent funding opportunities with less fees and costs. Traditional credit risk assessment tools such as credit scoring conditional on applicants’ previous credit history and current credit status are still commonly used by lending fintechs to assess borrowers’ creditworthiness and delinquency risk or to make prediction of their probability of default after application. Various generic credit scores such as credit bureau scores or FICO score [3] have existed for many years to evaluate consumer or commercial credit risk and almost become universal rules of standard for underwriting. They can be easily acquired by fintech financers from credit bureaus at the point of applications and usually have good and robust performance in helping risk judgment. Sometimes customized credit scores have also been developed by lenders based on their own existing credit portfolios that make better prediction or risk classification.

One observation with this approach is that for fintechs with relatively short history of establishment, small portfolios of customers from niche markets and prudent credit decision process or market strategies, the scarcity of delinquency history or low portfolio default rate has sometimes caused generic credit scores difficult to build and unreliable overtime even they have been built. This could also be contributed by the lack of funded applications in the case of commercial credit seekers such as SMBs. Section 2 proposes a framework of supplementary sampling of consumers or businesses with similar credit quality and their proxy credit trades from credit bureau reported by various partner lenders, which is based on application credit bureau scores and default rate distributions of original fintech applications. It can be used to build credit scoring models tailored to specific fintech companies to overcome aforementioned model data issues.

In the era of big data, interpretable AI and machine learning have gained more popularity over the years and become almost ubiquitous in credit scoring literature. While traditional approach logistic regression has still been widely applied in industry practices due to its simplicity, robustness and parsimonious form and easy interpretability for regulatory purposes, many sophisticated generic classification algorithms have also been implemented in credit risk modeling and proven to provide better risk prediction and validation results in cases such as e-commerce or retail banking [4, 5]. Section 3 reviews several credit risk modeling techniques including logistic regression, decision tree, constrained neural network and XGBoost for consumer or commercial credit scoring. Section 4 compares their performances based on real data analysis of application and proxy samples from a Canadian fintech company and credit bureau.

2 Supplementary Sampling

It has been well known that credit scoring model exploiting only booked applications might introduce selection bias due to reasons such as cherry picking in credit decisioning process [6]. Many reject inference methods and strategies have been evaluated under various assumptions of missing mechanism, which include statistical inference based on regression models or reclassification, variations of Heckman’s 2-step sample bias correction procedure and nonparametric methods, and recently proposed machine learning methods such as SMOTE and graph-based semi-supervised learning algorithm [7]. This paper does not focus on these reject inference methods, instead we take an approach of utilizing supplementary samples and using proxy trade performances for rejects or non approvals as documented by Barakova et al. (2013) [8]. A proxy trade is a trade from similar credit product but funded by other lenders. Using massive credit bureau database, rejected or non-funded applications from a specific lender can be identified if they were able to get funds from other competitors and their performances on similar trades can also be extracted for risk modeling.

Building a credit scoring model on small application data can be unstable or unrealistic even when there could exist abundant credit attributes at the point of application due to the problem of overfitting or curse of dimensionality [9]. Independent consumers or SMBs with similar credit quality measured by credit bureau scores and their proxy consumer or commercial credit trades from the similar credit products funded by other lenders can be extracted and augmented to the original fintech credit applications so that enough bad observations and sufficient model sample size can be achieved. We prove that under certain assumptions of distributions of application credit scores, credit attributes and default rate, the conditional probability estimation of default can be unbiased using the augmented samples from both fintech applications and proxy population.

2.1 Notations

We introduce mathematical notations for credit scoring modeling as following: Let \(X=\{X_{1},...,X_{p}\}\) be the available credit attributes from credit bureau at point of application, these are usually composed of hundreds or even thousands of predictive attributes related to applicant’s credit characteristics such as payment or delinquency history in various credit products or trades, e.g., credit card, line of credit, installment loans, mortgages or telco trades; derogatory public records such as charge-off, collection or bankruptcy. They can also be related to applicant’s credit appetite such as trade balance, credit limit or utilization and credit history such as number of trades opened and their ages or new credit.

Let \(S=\{S_{1},...,S_{s}\}\) be credit scores at point of application, they could be multiple bureau risk scores, or bankruptcy scores and FICO scores.

Let \(Y=\{0,1\}\) be the indicator of default or non-default of a credit trade or loan in a performance window, e.g. 12 or 24 months after application.

Let \(Z=\{F,P\}\) be the indicator of whether an application is from a specific fintech and funded by the fintech itself or by a competitor after reject inference, or an independent proxy trade from similar credit product or portfolio booked by other lenders reported to credit bureau, P represents that the trade is a proxy, F represents a trade related to a specific fintech.

Let \(P(Y=1|X,S,Z=F)\) or \(P(Y=1|X,S,F)\) be the conditional probability of default of fintech applications given application credit bureau scores and credit attributes that we want to estimate using credit scoring models.

2.2 Theories

Theorem 1

Assuming that the distribution of applicants credit attributes related to a credit trade given default status and the same application credit bureau scores is independent of whether the trade is from a specific fintech or an independent proxy trade from credit bureau report pool, i.e., \(P(X|Y,S,F) = P(X|Y,S,P) = P(X|Y,S)\), if a random sample from the proxy population has the same joint distribution of default and application credit scores as that of the applications from a specific fintech, i.e., \(P(Y,S|P) = P(Y,S|F),Y=1\ or\ 0\), then we have the conditional probability of default as following:

Corollary 1

Under the same assumption as in Theorem 1, if a random sample from the proxy population has the same conditional credit score distribution P(S|Y, P) and marginal default distribution P(Y|P) as the fintech applications, i.e., \(P(S|Y,P) = P(S|Y,F)\) and \(P(Y|P) = P(Y|F)\), then we have the conditional probability of default as following:

Corollary 2

Under the same assumption as in Theorem 1, if a random sample from the proxy population has the same conditional default probability distribution P(Y|S, P) and the marginal application credit scores distribution P(S|P) as the fintech applications, i.e.,\(P(Y|S,P) = P(Y|S,F)\) and \(P(S|P) = P(S|F)\), then we have the conditional probability of default as following:

Proof

See proof in Appendix.

2.3 Sampling Strategies

Based on Theorem 1 and corollaries, we propose three strategies of proxy sampling for credit scoring modeling of Fintechs’ applications:

Let N be the total number of funded fintech applications and reject inferences.

Let \(I(Y_{i})\) be the indicator of applications being default or not default.

Let \(I(S_{ik})\) be the indicator of the ith application falling into a score band k. If there exists multiple application scores from the credit bureau, the score band could be extended to a grid.

Let \(I(Y_{i}, S_{ik})\) be the indicator of the ith application falling into an application credit score band k and being default or not default.

Strategy 1. Stratified sampling based on joint distribution of default and application credit scores P(Y, S|F):

-

1.

Find the empirical joint distribution of default and application credit scores \(\hat{P}(Y,S_{k}|F)=\frac{\sum _{i=1}^{N} I(Y_{i},S_{ik})}{N}\), where \(Y_{i}=1\) or 0.

-

2.

Break the proxy population into strata based on combination of application scores bands and default status, draw a stratified sample from proxy population using proportional rate at each stratum according to \(\hat{P}(Y,S_{k}|F)\).

Strategy 2. Stagewise sampling based on marginal default distribution P(Y|F) and posterior conditional distribution of application credit scores given default status P(S|Y, F):

-

1.

Find the empirical marginal default rate \(\hat{P}(Y|F)= \frac{\sum _{i=1}^{N}I(Y_{i})}{N}\), \(Y_{i}=1\) or 0 and the empirical posterior conditional distribution of application credit scores given default status \(\hat{P}(S_{k}|Y,F)=\frac{\sum _{i=1}^{N}I(Y_{i},S_{ik})}{\sum _{i=1}^{N}I(Y_{i})}\), where \(Y_{i}=1\) or 0.

-

2.

Do a two stage sampling from proxy population by breaking the sample into default or not default according to empirical marginal default distribution \(\hat{P}(Y|F)\) from step 1 at the 1st stage, then draw a stratified sample using proportional rate at each stratum according to \(\hat{P}(S_{k}|Y,F)\).

Strategy 3. Stagewise sampling based on marginal application credit score distribution P(S|F) and posterior conditional distribution of default given application credit scores \(P(Y=1|S,F)\):

-

1.

Find the empirical marginal distribution of application credit score \(\hat{P}(S_{k}|F)=\frac{\sum _{i=1}^{N}I(S_{ik})}{N}\), where \(S_{ik}\) falls in score band k and the empirical posterior conditional distribution of default given application credit scores \(\hat{P}(Y|S_{ik},F)=\frac{\sum _{i=1}^{N}I(Y_{i},S_{ik})}{\sum _{i=1}^{N}I(S_{ik})}\), where \(Y_{i}=1\) or 0.

-

2.

Do a two stage sampling from proxy population by breaking the sample into score bands or grids according to empirical marginal score distribution \(\hat{P}(S_{k}|F)\) from step 1 at the 1st stage, then draw a stratified sample using proportional rate at each stratum according to \(\hat{P}(Y|S_{k},F)\).

It is worth to notice that using the same estimation methods of empirical distributions of default and application credit scores, the three sampling strategies are essentially equivalent.

3 Techniques of Credit Scoring

A wide variety of credit scoring techniques have been used to build credit scoring models. Hand and Henley (1997) [10] offer an excellent review of the statistical techniques used in building credit scoring models. Abdou and Pointon (2011) [11] have extensively reviewed both of the traditional and advanced techniques of credit scoring. In this paper we mainly investigate and compare four of the credit scoring techniques:

Logistic regression is a type of statistical regression analysis often used to predict the outcome of a binary target variable, e.g., default or not, conditional on a set of independent predictive variables such as the credit attributes and credit scores, it is assumed the probability of default is linked to the predictive credit attributes through a logit function [12].

Decision tree is a type of classification methods based on recursive partitioning of predictive attributes or features depending on the information gain or purity measures such as entropy or GINI after splits [13]. There have been variations of decision trees, such as CART or CHAID based on different types of target variables or splitting criteria. They are a convenient tool that can automatically handle large amounts of predictive attributes and feature selection.

XGBoost provides a regularizing gradient boosting framework for various computing languages through an open-source library [14]. The method contains rounds of iteration that create a weighted summation of learners or ensemble through gradient descent search that minimizes the empirical loss function [15]. It’s a process of merging all weak classifiers together to have a model with better performance [4]. Sometimes it can also suffer from imbalanced data [16, 17]. XGBoost is a slightly different version of boosting in that the optimization is not directly based on gradient descent but based on approximation. Like decision trees, XGBoost provides a convenient feature selection process through iteration and often achieves higher accuracy than a single decision tree. However, it also sacrifices the intrinsic interpretability of decision tree diagrams through aggregation of multiple tree learners.

Constraint Neural Network or NDT is a refined version of neural networks with additional constraints such as monotonic relationship between predictive attributes and target variable. A set of credit attributes are fed into multiple layers of neuron nodes through activation functions and output the final prediction through the last hidden layer. By adding monotonic constraints, it offers more interpretability for regulatory purposes and creates logical reason codes for credit decisioning while still maintaining the machine learning structure and the accuracy from artificial intelligence [18]. The invention is credited to Turner M., Jordan, L. and Joshua, A. (2021) [19], it requires stringent feature selection and normalization of data before training.

4 Empirical Studies

4.1 Data Source and Sample Facts

Auto lease application data submitted between Nov 2015 and Dec 2019 from a recently established Canadian online auto leasing corp has been analyzed. Bad or default of an auto lease is defined as 90dpd+ or worse in 24 months post application. There are 47,818 consumer applications, out of which 31,754 consumers have been funded by the fintech lender, 152 of them are defaults (0.48% bad rate); 9,093 consumer applications can also be qualified as commercial SMBs, out of which 6,035 commercial applications have been funded by the fintech lender, 33 of them are defaults (0.55% bad rate).

For the rest of non-funded applications, reject inferences are performed based on auto lease trades from credit bureau consumer or commercial trade pools opened within 2 months after the original submission of fintech application, only 559 of non-funded consumer applications can be found opened a consumer auto lease with 9 defaults (1.6% bad rate); 156 of non-funded commercial applications can be found opened a commercial auto lease with 3 defaults (1.9% bad rate).

Three benchmark consumer credit scores and three commercial credit scores have been selected from bureau: ERS2 or Equifax Risk Score predicts consumer tendency of delinquency; BNI3 or Bankruptcy Navigation Indicator predicts consumer tendency of bankruptcy; BCN9 or FICO8 score is the Fair Issac consumer credit score. BFRS2 or bankruptcy financial risk score predicts tendency of businesses to go bankruptcy in commercial trades; FTDS2 or financial trade delinquency score predicts tendency of businesses being delinquent in financial commercial trades; CDS2 or commercial delinquency score predicts tendency of businesses being delinquent in industry commercial trades.

For the consumer applications, stratified sampling strategy 2 from only ERS2 score band strata has been performed due to the lack of bad observations in some of the combined score grids, which resulted in an independant supplementary proxy sample of 104,922 consumer auto leases with 3,888 defaults (non-defaults were down-sampled 1 out of 10, 0.38% weighted bad rate). For the commercial applications, the entire commercial proxy population of 150K commercial auto leases (1.10% bad rate) has been used without further stratified sampling because of very limited fintech observations and the fintech’s business strategy and risk tolerance or appetite.

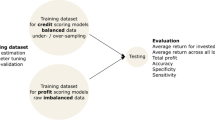

4.2 Model Development and Comparisons

The fintech applications and proxy samples are matched at bureau and appended consumer and commercial credit scores and attributes at the point of application separately based on their individual categories. There are around 2K trended or static consumer credit attributes and 800 commercial credit attributes available for modeling. Before passing them to modeling, several procedures have been completed to ensure the modeling quality including data integration and cleansing, segmentation analysis, data filtering and transformation. The consumer applications have been splitted based on a credit attribute related to the worst ever rating of all credit trades from a CART tree, which results in two segments of ever 30dpd or worse, or intuitively clean or dirty.

In the model development stage, the full samples are divided into 70% training and 30% validation set for consumer and commercial applications individually. Four credit risk modeling techniques have been utilized including: logistic regression, decision tree, XGBoost and constraint neural network (NDT). The related feature selection methods for the four procedures are as following:

Logistic Regression utilizes stepwise selection according to attribute statistical significance from maximum likelihood estimation after prescreening of credit attributes. To cope with collinearity, sometime VIF (variance inflation factor) filtering has also been applied after model fit to reduce any model attributes that are highly correlated with other predictors.

Decision tree and XGBoost automatically select attributes at each partition or iteration based on information gain or variable importance when optimizing the loss functions such as impurity or negative likelihood etc. It is similar to stepwise selection in some sense but it would not update the previous selection and estimation when new attribute entered through iteration.

Constrained neural network (NDT) does not provide automatic feature selection, instead it requires preselection of attributes before model fitting. Top 20 to 50 attributes selected from XGBoost have been used based on attribute importance. Further reduction or addition of attributes seem not to improve the model performances.

The generic algorithms from machine learning and artificial intelligence usually requires tuning of hyperparameters that regularize the model fit, such as the number of features, tree depth, leaf size, learning rate, number of iterations, number of nodes, number of hidden layers, L1 and L2 regularization etc. Combination of these hyperparameters and grid search have been tested to find the best fits through cross validation.

For model interpretability, the credit attributes relative importance from the algorithms can be easily extracted.

4.3 Model Evaluation

We have used the following common performance measures for credit scoring model including:

KS (Kolmogorov-Smirnov) test statistic: It measures the separation ability of classification, which captures the maximum difference in the cumulative distributions of the good/bad samples. Larger KS represents better separation.

GINI or AUROC: The two metrics measure the discriminatory power of the classification models. The GINI Coefficient is the summary statistic of the Cumulative Accuracy Profile (CAP) chart. A ROC curve shows the trade-off between true positive rate (TPR) and false positive rate (FPR) across different decision thresholds. The AUROC is calculated as the area under the ROC curve. GINI is equal to 2AUROC-1. Larger GINI represents better discrimination.

Gains or Lift: A gain or lift chart graphically represents the improvement that a model provides when compared against a random guess. Gain is the ratio between the cumulative number of bad observations up to a decile to the total number of bad observations in the data. Lift is the ratio of the number of bad observations up to kth decile using the model to the expected number of bads up to kth decile based on a random or benchmark model.

Risk ordering and accuracy: As model prediction improves, the bad rate should improve in an orderly and predictable fashion. The estimated probability of bad decreases when the default risk decreases, so the observed bad rate should decrease as well. Z-statistics can compare the actual and predicted bad rate for each of the decile ranks so the direction of estimation discrepancies can be checked.

The model performance has been assessed on both training set and validation set by sample categories of the full (Proxy+Fintech+RI), fintech applications (Fintech+RI) and fintech funded, by applicant type of consumer or commercial and by combined or segments. The detailed comparison can be found in Table 1, Fig. 1 and 2. Key findings of validation include:

-

Customized new scoring models almost always outperform the three benchmark bureau scores regardless of the sample categories in the validation.

-

For consumer applications, XGBoost significantly outperforms the other three methods in the clean segment of Fintech applications, also has best GINI in combined segments validation. Decision tree has the best separation KS or gains at the 1st decile in the full validation data of Fintech applications. Logistic regression has the best separation KS or gains in the dirty segment of Fintech applications. NDT can have better seperation in clean segment.

-

For commercial applications, there is more volatility in their performances in the fintech applications: generic algorithms always outperform logistic regression and the benchmark scores in the sample of Fintech+RI, however logistic regression and they sometimes underperform some of the benchmarks in the fintech booked applications. Decision tree seems to provide more stable separation and discriminatory power and XGBoost has the highest gains in the 3rd decile.

-

For the consumer applications, the new scores seem to slightly underestimate the bad rate with most of the Z-statistics being positive but not severe (\({<}\)1.96) and the risk ordering is in the right direction. For commercial applications, the new scores seem to slightly overestimate the bad rate with most of the Z-statistics being negative for fintech applications due to the higher bad rate in the proxy sample. This problem does not appear to be severe for generic algorithms, but more severe for logistic regression so further calibration may be needed even though the risk ordering is in the right direction.

5 Conclusion

Supplemental samples are effective and useful for both reject inference and predictive modeling in credit scoring. By leveraging the tailored supplemental samples, we have demonstrated that traditional credit scoring tools and innovative generic algorithms, which are from machine learning and interpretable AI, can both be utilized to assess default risk for fintech loan applications when the original volume and number of bad observations are small and not sufficient for modeling. They can provide significantly better performances than the existing benchmark scores. Generic algorithms can sometimes overfit so additional out-of-time validations need to be required for further investigation in practice.

References

Accenture: Collaborating to win in Canada’s Fintech ecosystem, Accenture 2021 Canadian Fintech Report (2021). https://www.accenture.com/_acnmedia/PDF-149/Accenture-Fintech-report-2020.pdf

Goulard, B., Lake, K.T., Reynolds M.: Canadian Fintech Review, Torys LLP, November 2021. https://www.torys.com/our-latest-thinking/publications/2021/11/canadian-fintech-review

Fair-Isaac: FAQs-About-FICO-Scores-Canada-2019.pdf (2019). https://www.ficoscore.com/ficoscore/pdf/FAQs-About-FICO-Scores-Canada-2019.pdf

Tian, Z.Y., Xiao, J.L., Feng H.N., Wei, Y.T.: Credit risk assessment based on gradient boosting decision tree. In: 2019 International Conference on Identification, Information and Knowledge in the Internet of Things (IIKI2019)

Ma, Z., Hou, W., Zhang, D.: A credit risk assessment model of borrowers in P2P lending based on BP neural network. PLoS ONE 16(8), e0255216 (2021). https://doi.org/10.1371/journal.pone.0255216

Hand, D.J.: Reject inference in credit operations: theory and methods. In: The Handbook of Credit Scoring, pp. 225–240. Glenlake Publishing Company (2001). /art00177

Kang, Y., Cui, R., Deng, J., Jia, N.: A novel credit scoring framework for auto loan using an imbalanced-learning-based reject inference. In: 2019 IEEE Conference on Computational Intelligence for Financial Engineering & Economics (CIFEr), pp. 1–8. IEEE (2019)

Barakova, I., Glennon, D., Palvia, A.: Sample selection bias in acquisition credit scoring models: an evaluation of the supplemental-data approach. J. Credit Risk 9, 77–117 (2013)

Surrya, P.D., Radcliffea, N.J.: Why size does matter in credit scoring. In: Proceedings of Credit Scoring and Credit Control V, Edinburgh (1997) (1997)

Hand, D.J., Henley, W.E.: Statistical classification methods in consumer credit scoring: a review. J. R. Stat. Soc. A 160, 523–541 (1997)

Abdou, H., Pointon, J.: Credit scoring, statistical techniques and evaluation criteria: a review of the literature. Intell. Syst. Account. Finance Manag. 18(2–3), 59–88 (2011)

Hosmer, D.W., Lemeshow, S.: Applied Logistic Regression, 2nd edn. Wiley, New York (2000)

Breiman, L., Friedman, J.H., Olshen, R.A., Stone, C.J.: Classification and Regression Trees. The Wadsworth, Belmont (1984)

Chen, T., Guestrin, C.: XGBoost: a scalable tree boosting system. In: Krishnapuram, B., Shah, M., Smola, A.J., Aggarwal, C.C., Shen, D., Rastogi, R. (eds.) Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, San Francisco, CA, USA, 13–17 August 2016, pp. 785–794. ACM (2016). arXiv:1603.02754. https://doi.org/10.1145/2939672.2939785

Hastie, T., Tibshirani, R., Friedman, J.H.: Boosting and additive trees. In: Hastie, T., Tibshirani, R., Friedman, J.H (eds.) The Elements of Statistical Learning, 2nd edn., pp. 337–384. Springer, New York (2009). https://doi.org/10.1007/978-0-387-84858-7_10. ISBN 978-0-387-84857-0

Buja, A., Stuetzle, W., Shen, Y.: Loss functions for binary class probability estimation: structure and applications, Technical report, The Wharton School, University of Pennsylvania, January 2005

Shen, Y.: Loss functions for binary classification and class probability estimation, Ph.D. dissertation, The Wharton School, University of Pennsylvania (2005)

McBurnett, M., Sembolini, F., Turner, M., Jordan, L., Hamilton, H., Torres, S.R.: Comparative Analysis of Machine Learning Credit Risk Model Interpretability: Model Explanations, Reasons for Denial and Routes for Score Improvements, Credit Scoring and Credit Control XVII, University of Edinburgh, UK, August 26 2021 (2021)

Turner, M., Jordan, L., Joshua, A.: Machine-learning techniques for monotonic neural networks, Equifax, US patent 11010669 (2021)

Acknowledgements

The authors report no conflicts of interest. The authors alone are responsible for the content and writing of the paper.

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Appendix. Proof of Theroem and Corollaries

Appendix. Proof of Theroem and Corollaries

Proof of Theorem 1: According to Bayes’ rules, we have the conditional probability of default given applications from a specific fintech:

In the above equation, the joint distribution of credit attributes and credit scores given applications from a specific fintech can be expanded as:

we also have the conditional probability of default given an independent proxy trade in a proxy sample from credit bureau:

And the joint distribution of application credit attributes and credit scores given an independent proxy trade in a proxy sample from credit bureau can be expanded as:

Hence under the assumption \(P(X|Y,S,F) = P(X|Y,S,P) = P(X|Y,S)\), if there exists a proxy sample that has the same joint distribution of probability of default and credit scores at the point of application as that of a trade related to a specific fintech, then the conditional probabilities of default from proxy or fintech are equivalent.

Proof of Corollary 1: Notice that according to Bayes’ rules and Theorem 1, we have the conditional joint distributions in Eq. (4) and (5):

By plugging (8) back into Eqs. (4)–(7), we have the desired result.

Proof of Corollary 2: Notice that according to Bayes’ rules and Theorem 1, we have the conditional joint distributions in Eq. (4) and (5):

By plugging (9) back into Eqs. (4)–(7), we have the desired result.

Rights and permissions

Copyright information

© 2022 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper

Shen, Y. (2022). Application of Supplemental Sampling and Interpretable AI in Credit Scoring for Canadian Fintechs: Methods and Case Studies. In: Chen, W., Yao, L., Cai, T., Pan, S., Shen, T., Li, X. (eds) Advanced Data Mining and Applications. ADMA 2022. Lecture Notes in Computer Science(), vol 13725. Springer, Cham. https://doi.org/10.1007/978-3-031-22064-7_1

Download citation

DOI: https://doi.org/10.1007/978-3-031-22064-7_1

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-22063-0

Online ISBN: 978-3-031-22064-7

eBook Packages: Computer ScienceComputer Science (R0)