Abstract

Falsification is the basis for testing existing hypotheses, and a great danger is posed when results incorrectly reject our prior notions (false positives). Though nonparametric and nonlinear exploratory methods of uncovering coupling provide a flexible framework to study network configurations and discover causal graphs, multiple comparisons analyses make false positives more likely, exacerbating the need for their control. We aim to robustify the Gaussian Processes Convergent Cross-Mapping (GP-CCM) method through Variational Bayesian Gaussian Process modeling (VGP-CCM). We alleviate computational costs of integrating with conditional hyperparameter distributions through mean field approximations. This approximation model, in conjunction with permutation sampling of the null distribution, permits significance statistics that are more robust than permutation sampling with point hyperparameters. Simulated unidirectional Lorenz-Rossler systems as well as mechanistic models of neurovascular systems are used to evaluate the method. The results demonstrate that the proposed method yields improved specificity, showing promise to combat false positives.

1 Introduction

Coupling measures derived from data allow the discovery of system characteristics and information flow when the experiment does not permit intervention in the physical process. Traditionally, pairwise coupling metrics such as mutual information or correlation are used to assess coupling between systems [1], for example functional connectivity in the brain [2]. Dynamical system methods have also been popular for uncovering coupling, notably Granger causality and its nonparametric extension, transfer entropy [3,4,5]. In its standard form, however, Granger causality assesses linear relationships through multivariate autoregressive models [4], though extensions have been made for assessing nonlinear coupling through kernel methods [6]. Nonetheless, such methods have been shown to report spurious detections of causality, i.e. false positives [7, 8]. Ruling out false positives is imperative in hypothesis-driven analysis to avoid improper conclusions based on a priori expectations [9]; in causal analysis, the prior notion (the null hypothesis) is that there is no causal relationship between two variables.

Convergent cross-mapping (CCM) was introduced to account for cases in which synergistic nonlinear coupling emerges from complex dynamical systems [10]. Such coupling can be detected because CCM assesses the history of the state space holistically and nonparametrically, rather than analyzing only the predictive power of lagged values as Granger causality does. Formally, CCM suggests that, in deterministic systems, there should be high correlation between cross-mapped predictions and observations when a state is mapped from the state space reconstruction (as in [11]) of a putative variable to the state space reconstruction of a caused variable [10]. Subsequent refinements introduced Gaussian processes on the premise that the reconstructed state space is uncertain. Prior Gaussian process mean and covariance functions were therefore applied to optimize the free parameters of state space reconstruction [12], to analyze residuals in cross-mapping [13], or to assess a probability ratio [14]. This paper builds on the last of these methods.

Gaussian process models are characterized by mean and covariance functions, the latter often referred to as the kernel [15]. Though they provide nonparametric inference models for a posteriori analysis of random processes conditioned on observed data, they are sensitive to the hyperparameters of the kernel function that describes the covariance between observations. Point estimates of the hyperparameters are typically obtained by maximizing the marginal likelihood of the observations. However, these point estimates may yield degenerate null distributions when the null is generated by permutation sampling for hypothesis testing, as seen empirically in [14] and in other contexts of Bayesian modeling [16]. We thus propose placing prior distributions on the hyperparameters and then obtaining an approximate posterior distribution conditioned on the observations (which a priori are zero-mean Gaussian processes) using variational Bayesian methods; we refer to the resulting method as Variational Gaussian Process Convergent Cross-Mapping (VGP-CCM). This method avoids expensive Markov chain Monte Carlo integration over conditional distributions [17]. With the approximate posterior, we integrate the effects of the hyperparameters out of the coupling statistics and derive nondegenerate null distributions for hypothesis testing. We test this refinement on unidirectionally coupled Lorenz-Rossler systems and on neurovascular systems.

2 Materials and Methods

2.1 Cross Mapping

A central tenet of nonlinear state space analysis of a single time series, which we denote with lower case letters such as x, is state space reconstruction. A time series of one variable of a system is viewed as a projection of the system's topology onto a single dimension (focusing on the reals, \(\mathbb {R}^{N\times 1}\), where N is the number of time points). Delay-coordinate embedding maps of this time series, \(\phi (x_i)= \{x_i, x_{i-\tau },x_{i-2\tau },...,x_{i-m\tau }\}\) for i in \(1..N-\tau m\), where m is an "embedding dimension" and \(\tau \) the delay time, can reconstruct a state space that is isomorphic to the generating system's structure in the sense of differential geometry [11, 18]. Cross-mapping exploits this theoretical feature of reconstructed state spaces, together with the fact that time series are low-dimensional projections of systems. A state space reconstructed from a putative variable, \(\phi (x)\), should be independent of a caused variable, \(\phi (y)\): it contains no dynamical information about the caused variable, so predictions of the caused variable from the putative variable's state are independent of it. Conversely, a prediction of the putative variable from the caused variable's state space should not be independent of the putative variable, because the reconstructed geometry of the caused variable must be topologically consistent with that of the putative variable in order to predict itself. Convergent cross-mapping in its original form approaches this problem as a regression task, performed in particular by simplex regression [10, 19].
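As an illustration of the delay-coordinate map \(\phi\), the following minimal sketch builds the embedded state vectors with NumPy. The function name `delay_embed` and the toy series are assumptions made for this example and are not taken from the authors' code.

```python
import numpy as np

def delay_embed(x, m, tau):
    """Return rows phi(x_i) = [x_i, x_{i-tau}, ..., x_{i-m*tau}].

    The output has N - m*tau rows and m + 1 columns.
    """
    x = np.asarray(x, dtype=float)
    n_rows = x.size - m * tau
    if n_rows <= 0:
        raise ValueError("time series too short for this m and tau")
    # Column j holds the series delayed by j*tau samples.
    cols = [x[(m - j) * tau : (m - j) * tau + n_rows] for j in range(m + 1)]
    return np.column_stack(cols)

# Toy series 0, 1, ..., 9 so that the delays are easy to read off.
x = np.arange(10.0)
phi = delay_embed(x, m=2, tau=3)
print(phi.shape)  # (4, 3)
print(phi[0])     # [6. 3. 0.]
```

Each row is one reconstructed state; a kernel evaluated on pairs of rows is how the embedding would enter a Gaussian process covariance.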

2.2 Gaussian Process Convergent Cross Mapping

Introduced in [14], GP-CCM uses a probability ratio to determine the most likely coupling direction based on spatial analysis in state space. It does so by incorporating a delay-coordinate map into the kernel function that defines the a priori covariance function of the Gaussian process, i.e. \(Cov(x_i,x_j) = K(\phi (x_i),\phi (x_j))\). In other words, rather than assessing predictive power through regression analysis, GP-CCM takes a probabilistic approach that expresses the cross-mapping power as a multivariate probability distribution. Let X and Y be the stochastic processes that generate the observed time series x and y, respectively. Probability functions for X and Y are derived by posterior analysis, conditioning a joint Gaussian process on the observed time series y and x, respectively. Let \(\tilde{X}\) and \(\tilde{Y}\) denote the null distributions for uncoupled processes (e.g. obtained through permutation sampling). Then, the null GP-CCM probability ratio test would be:

\[ \tilde{\kappa}_k = \frac{P(\tilde{Y}|X;\theta_x)}{P(\tilde{X}|Y;\theta_y)} \tag{1} \]

Here \(\theta _x\) and \(\theta _y\) are considered nonrandom point values, \(\tilde{\kappa }\) is a function of the null distribution, and k is the permutation sample iteration. For the sake of conciseness, we shall abuse notation and write, e.g., P(X|Y) for a conditioned distribution, e.g. \(P(X|Y=y)\), for the rest of this paper.

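Information Theoretic Results In [14], the maximum a posteriori (MAP) values of the distributions \(P(\tilde{Y}|X;\theta _x)\) and \(P(\tilde{X}|Y;\theta _y)\) were used to provide scalar results. Given the form of a normal distribution with dimensionality d, mean \(\mu \) and covariance \(\varSigma \):

\[ P(X) = (2\pi)^{-\frac{d}{2}}\,|\varSigma|^{-\frac{1}{2}}\exp\Big(-\tfrac{1}{2}(X-\mu)^{T}\varSigma^{-1}(X-\mu)\Big) \tag{2} \]

The MAP is attained at \(X = \mu \), where the exponential equals one, thus:

\[ MAP(P(X)) = (2\pi)^{-\frac{d}{2}}\,|\varSigma|^{-\frac{1}{2}} \tag{3} \]

Taking the negative logarithm shows that this is equivalent to the differential entropy of a Gaussian distribution, \(H(X)=\frac{d}{2}\log (2\pi e)+\frac{1}{2}\log |\varSigma |\), up to an offset of \(\frac{d}{2}\):

\[ -\log MAP(P(X)) = \frac{d}{2}\log(2\pi) + \frac{1}{2}\log|\varSigma| = H(X) - \frac{d}{2} \tag{4} \]

Let \(K_k(\tilde{X}, \tilde{Y})=\log \Big(\frac{MAP(P(\tilde{Y}|X;\theta _x))}{MAP(P(\tilde{X}|Y;\theta _y))}\Big)\); since the offsets cancel, we then obtain:

\[ K_k(\tilde{X},\tilde{Y}) = H(\tilde{X}|Y;\theta_y) - H(\tilde{Y}|X;\theta_x) \tag{5} \]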

In information-theoretic terms, our probability ratio test can be interpreted as the difference between the amount of information X provides given that we were informed of Y a priori and the amount of information Y provides given that we were informed of X a priori. Caused variables should provide minimal information to the causing variable's state space, thus \(H(X|Y)<H(Y|X)\) if X drives Y.
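The relation between the MAP value of a Gaussian and its differential entropy can be checked numerically. The sketch below is an illustration only: the two covariance matrices are hypothetical stand-ins for the posterior covariances of P(Y|X) and P(X|Y), and the function names are assumptions rather than the authors' implementation.

```python
import numpy as np

def gaussian_entropy(cov):
    """Differential entropy (in nats) of a d-dimensional Gaussian."""
    d = cov.shape[0]
    sign, logdet = np.linalg.slogdet(cov)
    if sign <= 0:
        raise ValueError("covariance must be positive definite")
    return 0.5 * d * np.log(2.0 * np.pi * np.e) + 0.5 * logdet

def log_map_density(cov):
    """Log of the Gaussian density evaluated at its mean (the MAP value)."""
    d = cov.shape[0]
    _, logdet = np.linalg.slogdet(cov)
    return -0.5 * d * np.log(2.0 * np.pi) - 0.5 * logdet

def coupling_statistic(cov_y_given_x, cov_x_given_y):
    """K = log MAP(P(Y|X)) - log MAP(P(X|Y)) = H(X|Y) - H(Y|X)."""
    return log_map_density(cov_y_given_x) - log_map_density(cov_x_given_y)

# Hypothetical posterior covariances, chosen only for illustration.
cov_y_given_x = np.diag([2.0, 2.0, 2.0])
cov_x_given_y = np.diag([0.5, 0.5, 0.5])
K = coupling_statistic(cov_y_given_x, cov_x_given_y)
print(K)  # negative here, i.e. H(X|Y) < H(Y|X)
```

With these stand-in values the statistic is negative, which corresponds to the case \(H(X|Y)<H(Y|X)\) described above.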

Standardizing Results and Null Distributions N samples of \(K_k(\tilde{X},\tilde{Y})\) can be generated from N permutations (N here denotes the number of permutations). The values are normalized by the number of samples in the time series and then passed through a hyperbolic tangent function to standardize them to the interval (-1, 1). From the permutation samples, an empirical cumulative distribution is derived, and a one-sided test is performed to see whether the probability of the causal statistic under the null distribution is less than a threshold \(\alpha \), often chosen to be 0.05. Concretely, to test whether X GP-CCM causes Y, the following is calculated:

\[ p = \frac{1}{N}\sum_{k=1}^{N} U\big(K(X,Y) - K_k(\tilde{X},\tilde{Y})\big) \tag{6} \]

where U is the Heaviside function, which is 1 when the measured statistic for the actual observations, K(X, Y), is greater than the permutation sample \(K_k(\tilde{X},\tilde{Y})\), and 0 otherwise.
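The standardization and the one-sided permutation test can be sketched as follows. This is an illustrative reading of the procedure: the p-value is taken as the fraction of permutation samples that the observed statistic exceeds, and the numerical values are synthetic stand-ins rather than GP-CCM outputs.

```python
import numpy as np

def standardize(k_raw, n_time_points):
    """Normalize by the series length, then squash into (-1, 1) with tanh."""
    return np.tanh(np.asarray(k_raw, dtype=float) / n_time_points)

def empirical_p_value(k_obs, k_null):
    """Mean of the Heaviside step U(k_obs - k_null) over the null samples."""
    k_null = np.asarray(k_null, dtype=float)
    return float(np.mean(k_obs > k_null))

rng = np.random.default_rng(0)
n_time_points = 500

# Stand-in values: 30 null statistics and one observed statistic.
k_null = standardize(rng.normal(0.0, 50.0, size=30), n_time_points)
k_obs = standardize(-400.0, n_time_points)

p = empirical_p_value(k_obs, k_null)
significant = p < 0.05
print(p, significant)
```

With only 30 permutations the smallest nonzero p-value is 1/30, so the resolution of the test at \(\alpha = 0.05\) is coarse; more permutations give a finer empirical distribution.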

2.3 Variational Gaussian Process Convergent Cross Mapping

Variational Approximation of the Hyperparameter Distributions The proposed method, Variational Gaussian Process Convergent Cross-Mapping (VGP-CCM), treats the hyperparameters as random effects to be integrated out of the model via Monte Carlo integration. It uses a proposed a posteriori distribution of the hyperparameters, \(Q(\theta _x|X)\), that approximates the true a posteriori distribution \(P(\theta _x|X)\) and whose independence structure makes the integration computationally feasible, rather than resorting to more expensive methods such as Gibbs sampling from the true posterior [20].

To elaborate, the hyperparameters in eq. 1 are point estimates with no uncertainty. However, studies have shown that point estimates may not be stable in a variational sense and can correspond to models that overfit [21]. The calculus of variations provides a method for finding models that exhibit minimal "action" under perturbations of their function. We use this framework to find distributions over our hyperparameters from which we can draw samples and subsequently integrate the effects of the hyperparameters out of the model. We modify eq. 1 accordingly:

\[ \tilde{\kappa}_k = \frac{\int P(\tilde{Y}|X,\theta_x)\,P(\theta_x|X)\,d\theta_x}{\int P(\tilde{X}|Y,\theta_y)\,P(\theta_y|Y)\,d\theta_y} \tag{7} \]

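The hyperparameter posterior distributions tend to be intractable to compute, as they depend on the marginal distributions P(X) or P(Y) (the model evidence), respectively:

\[ P(\theta_x|X) = \frac{P(X|\theta_x)\,P(\theta_x)}{P(X)}, \qquad P(X) = \int P(X|\theta_x)\,P(\theta_x)\,d\theta_x \tag{8} \]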

Instead, we consider a simpler model \(Q(\theta _x)\) that approximates the posterior \(P(\theta _x|X)\) using a mean-field approximation. This removes the dependence of the sampling on X while minimizing the statistical distance, in the Kullback-Leibler sense [22], from the true posterior distribution conditioned on X. In other words, we substitute a simple distribution \(Q(\theta _x)\) with independence structure for \(P(\theta _x|X)\) in eq. (7) to permit computationally efficient Monte Carlo integration:

\[ \tilde{\kappa}_k \approx \frac{\int P(\tilde{Y}|X,\theta_x)\,Q(\theta_x)\,d\theta_x}{\int P(\tilde{X}|Y,\theta_y)\,Q(\theta_y)\,d\theta_y} \tag{9} \]
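To make the Monte Carlo integration concrete, the sketch below averages a Gaussian process likelihood over draws of the kernel hyperparameters from a factorized log normal Q. It is a simplified stand-in under stated assumptions: it uses a plain zero-mean GP marginal likelihood with a squared exponential kernel rather than the GP-CCM conditional distributions, and the parameters of Q, the noise variance, and the toy data are all hypothetical.

```python
import numpy as np

def sq_exp_kernel(a, b, amplitude, length):
    """Squared exponential kernel between 1-D input arrays a and b."""
    d2 = (a[:, None] - b[None, :]) ** 2
    return amplitude**2 * np.exp(-0.5 * d2 / length**2)

def gp_log_likelihood(t, y, amplitude, length, noise_var=1e-2):
    """Log density of y under a zero-mean GP evaluated at inputs t."""
    cov = sq_exp_kernel(t, t, amplitude, length) + noise_var * np.eye(t.size)
    chol = np.linalg.cholesky(cov)
    alpha = np.linalg.solve(chol, y)
    return (-0.5 * alpha @ alpha
            - np.log(np.diag(chol)).sum()
            - 0.5 * t.size * np.log(2.0 * np.pi))

def sample_lognormal(mu, sigma, rng, size):
    """Reparameterized draw: theta = exp(mu + sigma * eps), eps ~ N(0, 1)."""
    return np.exp(mu + sigma * rng.standard_normal(size))

rng = np.random.default_rng(1)
t = np.linspace(0.0, 5.0, 40)
y = np.sin(t)

# Mean-field Q: amplitude and length scale are drawn independently.
n_draws = 10
amps = sample_lognormal(0.0, 0.3, rng, n_draws)
lens = sample_lognormal(0.0, 0.3, rng, n_draws)
log_liks = np.array([gp_log_likelihood(t, y, a, l) for a, l in zip(amps, lens)])

# Log of the Monte Carlo average (1/S) * sum_s P(y | theta_s), computed stably.
log_marginal = np.logaddexp.reduce(log_liks) - np.log(n_draws)
print(log_marginal)
```

Because Q factorizes, each hyperparameter is sampled on its own, which is what keeps the integration cheap compared with sampling from the joint true posterior.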

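The best approximation Q can be found by maximizing the evidence lower bound (ELBO), which follows from the KL divergence between the approximate and the true posterior:

\[ KL\big(Q(\theta_x)\,\|\,P(\theta_x|X)\big) = E_{\theta_x \sim Q}\big[\log Q(\theta_x)\big] - E_{\theta_x \sim Q}\big[\log P(X,\theta_x)\big] + \log P(X) \tag{10} \]

As the KL divergence is nonnegative and the evidence does not depend on Q, minimizing the KL divergence is equivalent to maximizing the ELBO. This can be seen by multiplying both sides by -1 and moving the evidence, log(P(X)), to the left hand side:

\[ \log P(X) - KL\big(Q(\theta_x)\,\|\,P(\theta_x|X)\big) = E_{\theta_x \sim Q}\big[\log P(X|\theta_x)\big] - KL\big(Q(\theta_x)\,\|\,P(\theta_x)\big) \tag{11} \]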

Thus our objective to obtain the optimal hyperparameter distributions \(Q(\theta _x)\) is to maximize the above ELBO. The next question becomes how to choose the form of our distribution \(Q(\theta _x)\).

Approximate Posterior Form The form of the approximate posterior distribution is left to the practitioner and their domain knowledge. In this paper, we choose a mean-field approximation that factorizes as \(Q(\theta ) = \prod _{k=1}^KQ(\theta _k)\) for K hyperparameters. \(\theta \) comprises the hyperparameters \(\sigma \) and l of a squared exponential kernel with automatic relevance determination [23], and the inducing pseudo-points z of a sparse kernel [24]. The kernel parameters must remain strictly positive, and we need a distribution that can be reparameterized so that samples can be drawn and gradients computed automatically [25] and for which the KL divergence is easy to derive; we therefore choose log normal distributions as their priors. The inducing points instead follow a normal distribution. Analytic expressions can be derived for the KL divergences in the ELBO, and the log normal \(log\mathcal {N}(\mu ,\sigma )\) and normal \(\mathcal {N}(\mu ,\sigma )\) distributions [25] share the same expression for the KL divergence.
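The statement that log normal and normal distributions share one KL expression can be checked numerically. The sketch below assumes \(\sigma\) denotes a standard deviation and uses arbitrary illustrative parameters: it compares the closed-form KL divergence between two univariate normals with a Monte Carlo estimate of the KL divergence between the two corresponding log normal distributions.

```python
import math
import numpy as np

def kl_normal(mu_q, sigma_q, mu_p, sigma_p):
    """KL( N(mu_q, sigma_q^2) || N(mu_p, sigma_p^2) ) in closed form."""
    return (math.log(sigma_p / sigma_q)
            + (sigma_q**2 + (mu_q - mu_p) ** 2) / (2.0 * sigma_p**2)
            - 0.5)

def log_pdf_lognormal(theta, mu, sigma):
    """Log density of a log normal with log-scale mean mu and std sigma."""
    return (-np.log(theta) - math.log(sigma) - 0.5 * math.log(2.0 * math.pi)
            - 0.5 * ((np.log(theta) - mu) / sigma) ** 2)

# Arbitrary parameters for Q (first pair) and the prior (second pair).
mu_q, sigma_q, mu_p, sigma_p = 0.3, 0.5, 1.0, 1.0
closed_form = kl_normal(mu_q, sigma_q, mu_p, sigma_p)

# Monte Carlo estimate of KL between the two log normal distributions.
rng = np.random.default_rng(0)
theta = np.exp(mu_q + sigma_q * rng.standard_normal(200_000))
mc_estimate = np.mean(log_pdf_lognormal(theta, mu_q, sigma_q)
                      - log_pdf_lognormal(theta, mu_p, sigma_p))
print(closed_form, mc_estimate)
```

The two numbers agree up to sampling error because the KL divergence is invariant under the invertible map \(\theta \mapsto \log \theta\) applied to both distributions.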

Note that if the prior on the hyperparameters were chosen as independent point distributions, i.e. \(P(\theta _x)=\delta (\theta _x-\mu _p)\), the optimal distribution \(Q(\theta _x)\) would collapse to a single mode at \(\mu _p\). This recovers the original formulation of GP-CCM in eq. (1) when \(\mu _p\) is chosen as the maximum marginal likelihood solution of \(P(X;\theta _x)\).

The prior hyperparameter values are an ansatz based on the data. We standardize the data to zero mean and unit variance, thus the covariance amplitude \(\sigma \) has parameters \(\mu _{\sigma }=\textrm{exp}(1)\) and \(\sigma _{\sigma }=1\). The length factor l of the kernel has \(\mu _{l}=\textrm{exp}(1)\), \(\sigma _l=1\). The inducing points have a prior mean equal to a randomly selected subset of the observations, with \(\sigma _z=1\). The calculus of variations could be used to derive a coordinate ascent method for obtaining the optimal distribution Q [26]. Instead, we maximize the ELBO using stochastic gradient ascent as in [25], with automatic differentiation in PyTorch [27], to avoid deriving the update equations manually. The expectation \(E_{\theta _x \sim Q}(P(X|\theta _x))\) is approximated by 10 draws of \(\theta _x\) from \(Q(\theta _x)\) [25]. Furthermore, gradient clipping was used to limit the effect of the variance of the stochastic gradient [28]. The final result from VGP-CCM comes from applying eq. (5) to the distributions in eq. (7). Code is publicly available in Python on the author's GitHub profile.

2.4 Synthetic Data

Unidirectionally Coupled Lorenz and Rossler We simulate Rossler (Y) and Lorenz (X) systems with nonlinear unidirectional coupling defined by either \(\epsilon _x\) or \(\epsilon _y\) and diffusion dynamics defined by a 6d Wiener process W. The joint system has the following form:

The coupling values were chosen as \(\epsilon =\{\epsilon _x,\epsilon _y\}=\{(0,0),(2,0),(4,0),(0,0.2),(0,0.5)\}\). Thirty realizations of the dynamical system were drawn, and permutation analysis was then performed on each to obtain a p-value. The parameter values of the system, listed in Table 1, are chosen to induce deterministic chaos.

Unidirectionally Coupled Neurovascular Responses The previous system exhibits highly oscillatory behavior owing to its chaotic nature, where nearby states may follow drastically divergent trajectories. We also simulate signals that are less oscillatory but still emerge from nonlinear phenomena, as seen in the neurovascular signals observed in neuroscientific studies that exploit hemodynamics, such as functional magnetic resonance imaging (fMRI) [29]. Following [29], the neurovascular signal (x) generated by stimuli is approximated by a bilinear state equation

\[ \dot{x} = \Big(A + \sum_{j} u_j B^{j}\Big)x + Cu \]

where the A matrix describes the autonomous undriven dynamics of the system (here treated as a diagonal matrix with values −1), u are the stimuli of a condition (the element \(u_j\) is 0 when the jth event is not occurring and 1 when it is occurring), and \(B^j\) describes the interconnectivity between voxels of interest (i.e. \(\begin{bmatrix}\rho _{11}&{}\rho _{12}\\ 0&{}\rho _{22}\end{bmatrix}\) for voxel 1 causing voxel 2, where each \(\rho _{ij}\) is an i.i.d. random variable \(\rho _{ij} \sim \mathcal {N}(0,1)\)). The B matrices do not change in time; rather, they are realized once for each simulated time course. C describes which voxels the stimulus condition interacts with (in this paper, simulated as a diagonal matrix). In our simulations, we have three conditions, \(u_1\), \(u_2\) and \(u_3\). \(u_1\) and \(u_2\) are events that occur 10 times each over a period of 1000 s; each event lasts 6 s, with events spaced at intervals of 1 min. \(u_1\) invokes voxel 1 to voxel 2 interactions via a random variable, \(\rho ^1_{12} \sim \mathcal {N}(0,1)\), while \(u_2\) does not invoke interaction between the voxels, i.e. \(\rho ^2_{12} = 0\). \(u_3\) is a binomial point process with \(p=0.3\) that describes random neural activity eliciting no functional interconnectivity between voxels 1 and 2, i.e. \(\rho ^3_{12}=0\). It serves as background noise on top of which the other activity occurs.
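A bilinear state equation of the form \(\dot{x} = (A + \sum_j u_j B^j)x + Cu\), as used in dynamic causal modeling [29, 32], can be integrated with a simple forward Euler scheme. The sketch below is an illustration under several assumptions that are not from the paper: the step size, the event onset offsets, the restriction to two stimulus conditions (so that C can be a 2 by 2 diagonal matrix), and the omission of the background process \(u_3\).

```python
import numpy as np

def simulate_bilinear(A, B, C, u, dt):
    """Forward Euler integration of dx/dt = (A + sum_j u_j B^j) x + C u.

    A: (n, n), B: (J, n, n), C: (n, J), u: (T, J). Returns x of shape (T, n).
    """
    n_steps, n_states = u.shape[0], A.shape[0]
    x = np.zeros((n_steps, n_states))
    for t in range(1, n_steps):
        effective = A + np.tensordot(u[t - 1], B, axes=1)  # (n, n)
        dx = effective @ x[t - 1] + C @ u[t - 1]
        x[t] = x[t - 1] + dt * dx
    return x

rng = np.random.default_rng(0)
dt, duration = 0.1, 1000.0            # assumed step size in seconds
n_steps = int(duration / dt)
time = np.arange(n_steps) * dt

# Two boxcar conditions: 10 events of 6 s each, one event per minute.
u = np.zeros((n_steps, 2))
for j, offset in enumerate((0.0, 30.0)):   # hypothetical onset offsets
    for k in range(10):
        onset = offset + 60.0 * k
        u[(time >= onset) & (time < onset + 6.0), j] = 1.0

A = -np.eye(2)                                  # undriven dynamics
B = np.zeros((2, 2, 2))
B[0] = np.triu(rng.standard_normal((2, 2)))     # u_1: includes rho_12 term
B[1] = np.diag(rng.standard_normal(2))          # u_2: rho_12 = 0
C = np.eye(2)

x = simulate_bilinear(A, B, C, u, dt)
print(x.shape)  # (10000, 2)
```

The neural states returned here would then be passed through the hemodynamic equations of [29] to obtain flow, volume, and deoxyhemoglobin signals; that stage is not sketched.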

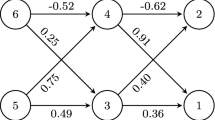

After the neural state equations, regional blood flow f, blood volume v, and deoxyhemoglobin concentration q are simulated using the parameters and mechanistic equations proposed in [29]. The nonlinear response of deoxyhemoglobin, q, is the neurovascular variable observed in fMRI. Its emergence from nonlinear equations provides a complex, nonlinearly saturating signal whose causality may be affected by regional parameters. This makes traditional causal methods hard to apply and calls for dynamical systems characterizations [30], which are vital in connectivity analysis of psychophysiological data. We simulate q with observational white noise added to reduce the SNR to 5 dB. Figure 1 shows an example simulation of these interactions.
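Corrupting a signal to a target SNR can be sketched as follows. The example assumes that SNR is defined as the ratio of signal variance to noise variance, expressed in decibels, and uses a sinusoid as a stand-in for the simulated deoxyhemoglobin signal; neither choice is stated in the paper.

```python
import numpy as np

def add_white_noise(signal, snr_db, rng):
    """Add zero-mean Gaussian noise scaled to the requested SNR in dB."""
    signal = np.asarray(signal, dtype=float)
    signal_power = np.mean((signal - signal.mean()) ** 2)
    noise_power = signal_power / 10.0 ** (snr_db / 10.0)
    noise = rng.normal(0.0, np.sqrt(noise_power), size=signal.shape)
    return signal + noise

rng = np.random.default_rng(0)
clean = np.sin(np.linspace(0.0, 20.0 * np.pi, 5000))   # stand-in signal
noisy = add_white_noise(clean, snr_db=5.0, rng=rng)

# Achieved SNR, which should be close to the 5 dB target.
achieved = 10.0 * np.log10(np.var(clean) / np.var(noisy - clean))
print(achieved)
```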

2.5 Statistical Analysis

For VGP-CCM, we generate the null distribution of the coupling statistic using Eq. (2), substituting \(P(\theta _x|X)\) and \(P(\theta _y|Y)\) with our approximate distributions \(Q(\theta _x)\) and \(Q(\theta _y)\) respectively. \(\tilde{Y}\) and \(\tilde{X}\) are sampled from 30 permutations of the original time series X and Y respectively. GP-CCM, on the other hand, has its null distribution generated from eq. (1) with its hyperparameters obtained as the maximum a posteriori point estimate from Q obtained in Eq. (5). An \(\alpha =0.05\) is used as the threshold for significance in all tests when applied to eq. (6). Given the Rossler and Lorenz equations each have 3 variables, we perform 9 comparisons (\(X_i\rightarrow Y_1, X_i\rightarrow Y_2, X_i\rightarrow Y_3\) for i in 1...3). Thus, we have 270 tests for each coupling value. We then calculate the specificity, a measure of proneness to type I errors, as \(\frac{\text {N correctly accepted }H_0}{\text {N correctly accepted }H_0 + N \text { incorrectly rejected }H_0}\) [31]. This same procedure is applied to the neurovascular system, except 100 time series are realized, thus 100 tests for coupling results are performed.
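The test count and the specificity measure can be written out explicitly: 3 Lorenz variables times 3 Rossler variables gives 9 comparisons, and 9 comparisons over 30 realizations gives 270 tests. In the sketch below the function name and the tally of one false rejection are hypothetical, chosen only to show the arithmetic.

```python
def specificity(n_correctly_accepted, n_incorrectly_rejected):
    """Fraction of true-null tests in which H0 was correctly retained."""
    total = n_correctly_accepted + n_incorrectly_rejected
    if total == 0:
        raise ValueError("no true-null tests to score")
    return n_correctly_accepted / total

n_realizations = 30
n_comparisons = 3 * 3                      # X_i -> Y_j for i, j in 1..3
n_tests = n_realizations * n_comparisons   # 270

# Hypothetical tally: one false rejection among the 270 tests.
spec = specificity(n_tests - 1, 1)
print(n_tests, round(100 * spec, 1))  # 270 99.6
```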

3 Results

Table 2 displays the significance rates for the various coupling values of the unidirectionally coupled Lorenz-Rossler systems and for the neurovascular system. For zero coupling, VGP-CCM rejects the null hypothesis in only 3 out of 270 tests, whereas GP-CCM rejects the null hypothesis in all but 4 of its tests. Furthermore, VGP-CCM is more conservative than GP-CCM in claiming significant coupling at low coupling values, so VGP-CCM very rarely made a false directionality claim. For detecting Rossler driving Lorenz, VGP-CCM reports a specificity of 99.6% while GP-CCM reports a specificity of 58.3%. For Lorenz driving Rossler, GP-CCM and VGP-CCM both report a specificity of 100%. Pooled over both directions, VGP-CCM has a specificity of 99.8% compared to GP-CCM's 79.1%. Similarly, for the neurovascular systems, the proposed VGP-CCM provides higher specificity, at 94% compared to GP-CCM's 75%.

Figure 2 displays cumulative distributions for a simulated Lorenz system driving a Rossler system, for coupling values \(\epsilon =\{(0,0)\}\) between variables X0 and Y0. The cumulative distribution reveals a robust null distribution when the approximate posterior is used. In contrast, fixing the hyperparameters and permuting only the samples yields a degenerate distribution with a collapsed mode, as seen with zero coupling between the systems.

Fig. 2. Example null distributions and p-values for zero coupling from the Lorenz system to the Rossler system using the original GP-CCM method (left) and the proposed variational GP-CCM method (right). The maximum a posteriori result for the nonpermuted time series is shown in red. It can be seen that VGP-CCM properly provides nonsignificant results while GP-CCM does not.

4 Discussion

We propose the VGP-CCM framework, which extends GP-CCM with a variational approximation to the posterior distribution of the hyperparameters, with the aim of obtaining a more parsimonious distribution for testing the null hypothesis of no coupling. Tests were performed on simulated unidirectionally coupled Lorenz-Rossler systems, in which the coupling between the variables of the system of equations can be controlled, to determine whether GP-CCM and VGP-CCM suffer from false positives in the significance tests and at what coupling strength either can make significance statements. Neurovascular systems were also simulated to illustrate VGP-CCM's efficacy at uncovering coupling in nonlinear signals that oscillate more slowly than the chaotic dynamics of the Lorenz-Rossler system.

From the results over 30 realizations, as seen in Table 2, VGP-CCM improves markedly on GP-CCM, with roughly 20 percentage points greater specificity (99.8% versus 79.1%). Furthermore, the simulations of the neurovascular system demonstrate that the proposed variational extension remains robust even for slowly oscillating signals such as deoxyhemoglobin signals, again with a substantial increase in specificity. This result on the neurovascular system is also notable because the proper directionality was inferred even with intermittent forcing, as described in Sect. 2.4. As anticipated in the introduction, the degenerate nature of the GP-CCM null distribution, seen in Fig. 2, allowed too much liberty to make claims of significance, even in the wrong direction. VGP-CCM is promising in that, even at low coupling, it very rarely makes a claim in the wrong direction. This gives researchers confidence that, if the raw statistic is high in value but not significant, more realizations may need to be collected, as seen for the low coupling values in Table 2.

The prior distributions used in this study were admittedly naive and uninformative, though they eased computation, allowed means and variances to be reparameterized with unit Gaussian sample draws for automatic differentiation, and imposed domain constraints. In studies where domain knowledge of how samples should correlate a priori is available, we suggest using it, for example through a kernel derived from mechanistic dynamic equations (e.g. time constants in linear ordinary differential equations, as in dynamic causal modeling [32], or temperature in thermodynamic heat systems [33]). Otherwise, a separate labeled dataset can be used to infer optimal distributions through cross-validation. Further directions may consider generative models, such as neural networks, for the posterior distribution conditioned on data [17, 23].

From this study, we can conclude that integrating the hyperparameters out of the GP-CCM probability ratio may yield a more parsimonious statistic that gives robust null distributions for hypothesis testing. The computational burden is reduced by avoiding Markov chain Monte Carlo integration over the true conditional a posteriori distribution, opting instead for variational approximations of the hyperparameter distribution with independent mean-field structure. The robustness was verified on simulated data from unidirectionally coupled Lorenz-Rossler systems, where VGP-CCM achieved much higher specificity than GP-CCM. Further studies will apply this methodology to real-world data, such as recordings from neurophysiological systems.

References

1. Stankovski, T., Pereira, T., McClintock, P.V.E., Stefanovska, A.: Coupling functions: universal insights into dynamical interaction mechanisms. Rev. Mod. Phys. 89, 045001 (2017)

2. Friston, K.J.: Functional and effective connectivity: a review. Brain Connectivity 1(1), 13–36 (2011)

3. Granger, C.: Investigating causal relations by econometric models and cross-spectral methods. Econometrica 37(3), 424–38 (1969)

4. Schreiber, T.: Measuring information transfer. Phys. Rev. Lett. 85, 461–464 (2000)

5. Barnett, L., Barrett, A.B., Seth, A.K.: Granger causality and transfer entropy are equivalent for gaussian variables. Phys. Rev. Lett. 103, 238701 (2009)

6. Marinazzo, D., Pellicoro, M., Stramaglia, S.: Kernel method for nonlinear granger causality. Phys. Rev. Lett. 100, 144103 (2008)

7. Smirnov, D.A., Bezruchko, B.P.: Spurious causalities due to low temporal resolution: towards detection of bidirectional coupling from time series. 100, 10005 (Oct 2012)

8. Smirnov, D.A.: Spurious causalities with transfer entropy. Phys. Rev. E 87, 042917 (2013)

9. Popper, K.R.: Science as falsification. Conjectures Refutations 1(1963), 33–39 (1963)

10. Sugihara, G., May, R., Ye, H., Hsieh, C.-H., Deyle, E., Fogarty, M., Munch, S.: Detecting causality in complex ecosystems. Science 338(6106), 496–500 (2012)

11. Takens, F.: Detecting strange attractors in turbulence. In: Rand, D., Young, L.-S. (eds.) Dynamical Systems and Turbulence, Warwick 1980, pp. 366–381. Springer, Berlin, Heidelberg (1981)

12. Feng, G., Yu, K., Wang, Y., Yuan, Y., Djurić, P.M.: Improving convergent cross mapping for causal discovery with gaussian processes. In: ICASSP 2020 - 2020 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), pp. 3692–3696 (2020)

13. Feng, G., Quirk, J.G., Djurić, P.M.: Detecting causality using deep gaussian processes. In: 2019 53rd Asilomar Conference on Signals, Systems, and Computers, pp. 472–476 (2019)

14. Ghouse, A., Faes, L., Valenza, G.: Inferring directionality of coupled dynamical systems using gaussian process priors: application on neurovascular systems. Phys. Rev. E 104, 064208 (2021)

15. Rasmussen, C.E., Williams, C.K.I.: Gaussian Processes for Machine Learning (Adaptive Computation and Machine Learning). The MIT Press (2005)

16. Chung, Y., Gelman, A., Rabe-Hesketh, S., Liu, J., Dorie, V.: Weakly informative prior for point estimation of covariance matrices in hierarchical models. J. Educ. Behav. Stat. 40(2), 136–157 (2015)

17. Blei, D.M., Kucukelbir, A., McAuliffe, J.D.: Variational inference: a review for statisticians. J. Am. Stat. Assoc. 112(518), 859–877 (2017)

18. Sauer, T., Yorke, J.A., Casdagli, M.: Embedology. J. Stat. Phys. 65, 579–616 (1991)

19. Sugihara, G., May, R.M.: Nonlinear forecasting as a way of distinguishing chaos from measurement error in time series. Nature 344(6268), 734–741 (1990)

20. Geman, S., Geman, D.: Stochastic relaxation, Gibbs distributions, and the Bayesian restoration of images. IEEE Trans. Pattern Anal. Mach. Intell. 6, 721–741 (1984)

21. Beal, M.J.: Variational Algorithms for Approximate Bayesian Inference. Ph.D. thesis, Gatsby Computational Neuroscience Unit, University College London (2003)

22. Kullback, S., Leibler, R.A.: On information and sufficiency. Ann. Math. Stat. 22(1), 79–86 (1951)

23. MacKay, D.J.C.: Bayesian Methods for Backpropagation Networks, pp. 211–254. Springer, New York, NY (1996)

24. Snelson, E., Ghahramani, Z.: Sparse gaussian processes using pseudo-inputs. In: Proceedings of the 18th International Conference on Neural Information Processing Systems, NIPS'05, (Cambridge, MA, USA), pp. 1257–1264. MIT Press (2005)

25. Kingma, D.P., Welling, M.: Auto-encoding variational Bayes. In: 2nd International Conference on Learning Representations, ICLR 2014, Banff, AB, Canada, April 14–16, 2014, Conference Track Proceedings (2014)

26. Lee, S.Y.: Gibbs sampler and coordinate ascent variational inference: a set-theoretical review. Commun. Stat. - Theory Methods. 1–21 (2021)

27. Paszke, A., Gross, S., Chintala, S., Chanan, G., Yang, E., DeVito, Z., Lin, Z., Desmaison, A., Antiga, L., Lerer, A.: Automatic differentiation in PyTorch (2017)

28. Kim, Y., Wiseman, S., Miller, A.C., Sontag, D.A., Rush, A.M.: Semi-amortized variational autoencoders. In: Dy, J.G., Krause, A. (eds.) ICML. Proceedings of Machine Learning Research, vol. 80, pp. 2683–2692. PMLR (2018)

29. Stephan, K.E., Weiskopf, N., Drysdale, P.M., Robinson, P.A., Friston, K.J.: Comparing hemodynamic models with DCM. Neuroimage 38(3), 387–401 (2007)

30. Friston, K.: Dynamic causal modeling and granger causality comments on: the identification of interacting networks in the brain using fMRI: model selection, causality and deconvolution. Neuroimage 58(2–2), 303 (2011)

31. Gaddis, G.M., Gaddis, M.L.: Introduction to biostatistics: part 3, sensitivity, specificity, predictive value, and hypothesis testing. Ann. Emerg. Med. 19(5), 591–597 (1990)

32. Friston, K., Harrison, L., Penny, W.: Dynamic causal modelling. NeuroImage 19(4), 1273–1302 (2003)

33. Berline, N., Getzler, E., Vergne, M.: Heat Kernels and Dirac Operators. Springer (1996)

34. Papoulis, A., Pillai, S.U.: Probability, Random Variables, and Stochastic Processes, 4th edn. McGraw Hill, Boston (2002)

Acknowledgments

The research leading to these results has received partial funding from the European Commission - Horizon 2020 Program under grant agreement No. 813234 of the project "RHUMBO", and from the Italian Ministry of Education and Research (MIUR) in the framework of the CrossLab project (Departments of Excellence).

© 2023 The Author(s), under exclusive license to Springer Nature Switzerland AG

Ghouse, A., Valenza, G. (2023). Inferring Parsimonious Coupling Statistics in Nonlinear Dynamics with Variational Gaussian Processes. In: Cherifi, H., Mantegna, R.N., Rocha, L.M., Cherifi, C., Miccichè, S. (eds) Complex Networks and Their Applications XI. COMPLEX NETWORKS 2016 2022. Studies in Computational Intelligence, vol 1077. Springer, Cham. https://doi.org/10.1007/978-3-031-21127-0_31

DOI: https://doi.org/10.1007/978-3-031-21127-0_31

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-21126-3

Online ISBN: 978-3-031-21127-0
