Abstract

This chapter explores the use of quality statistical tools in the development of diagnostic tests for SARS-CoV-2 and the metrological parameters recommended to laboratories for guaranteeing the quality assurance of their tests, according to ISO/IEC 17025. Tools such as validation, uncertainty estimation, and proficiency testing are presented. The chapter also discusses why applying them matters in the current scenario, their prospects, and how rarely they appear in the tests developed and made available.



1 Introduction

The current global pandemic caused by severe acute respiratory syndrome coronavirus 2 (SARS-CoV-2) is considered a constant challenge for global public health. Diagnostic methods, or in vitro tests, developed for detecting the virus and diagnosing the infection enable a quick and effective response in a crisis. They contribute to the screening, diagnosis, follow-up/treatment of patients, and recovery/epidemiological surveillance [1]. Their performance must be qualified and reported so that their compliance with legislation can be assessed. However, the currently available data have revealed a mismatch between the performance criteria and the existing or reported information on methods/tests/devices obtained from the metrology tools applied. The performance characteristics of a method must therefore be defined so that it is scientifically consistent under the conditions of its adoption [1]. A set of checks should thus be applied to the method through different metrological tools (e.g., validation, uncertainty estimation, and proficiency testing) to ensure that the tests are suitable for, and compliant with, their application.

This chapter addresses the importance of correctly applying metrology tools to ensure both the quality and the reliability of the results of SARS-CoV-2 detection methods. The analysis is based on a review and evaluation of such tools, and their best practices are highlighted.

2 SARS-CoV-2

Emerging and re-emerging infectious diseases are regarded as ongoing challenges for global public health. Among such diseases, the infection caused by severe acute respiratory syndrome coronavirus 2 (SARS-CoV-2), formerly known as the novel coronavirus (2019-nCoV), has been declared a global pandemic. SARS-CoV-2 is a new zoonotic agent that emerged in Wuhan, China, in December 2019. It causes respiratory, digestive, and systemic manifestations that make up the clinical picture of a disease called COVID-19 (Coronavirus Disease 2019). The virus spread to humans from an animal host. Regarding its origin, SARS-CoV-2 is known to be 96% identical to a bat coronavirus; it is thought to have spread through intermediate hosts and now spreads from human to human (Fig. 1) [2, 3].

Potential transmission cycles of SARS-CoV-2 (based on Ahmad et al. [3])

SARS-CoV-2 is a β-coronavirus that uses angiotensin-converting enzyme 2 (ACE2) as its receptor for cell attachment and subsequent replication [4]. It is transmitted by various means, e.g., aerosols (coughing/sneezing), contact (e.g., fomites, handshakes, kissing, and hugging), and possibly sexual contact [5]. COVID-19 can be asymptomatic or present with symptoms such as runny nose, fever, cough, and diarrhea and, in more advanced cases, severe pneumonia [6].

The virus has a high transmission rate. It causes an acute respiratory syndrome that ranges from mild cases (approximately 80%) to very severe ones (between 5 and 10%) with respiratory failure, which require specialized treatment in intensive care units (ICUs). The fatality rate is variable, mainly according to age group [2, 5].

An early diagnosis of COVID-19 is essential for the identification of cases and control of the pandemic [7]. Suspected cases can be confirmed by SARS-CoV-2 ribonucleic acid amplification molecular tests, immunological tests for antibody detection, clinical presentation, and clinical-epidemiological investigation [8].

2.1 Diagnostic Tests for SARS-CoV-2 Detection

Diagnostic methods developed for confirming diagnoses and better estimating the spread of SARS-CoV-2 have emerged in the pandemic as essential tools. They are used for monitoring cases at the population level, understanding the immune response, and assessing the exposure of individuals and their possible immunity against reinfection [7]. Many of these tests, particularly rapid tests, are simple, usually require no equipment, and show the result within a few minutes (10–30 min on average, depending on the type of test applied) [9].

The choice among diagnostic methods for detecting SARS-CoV-2 infection should consider the purpose of detection, since their characteristics vary according to the context of infection (e.g., timing of symptoms and type of sample). Their use can support anything from clinical decision-making to the development of a health surveillance strategy [10, 11]. Among other aspects, several factors must also be defined (Fig. 2) [12]:

- the individuals to be tested;
- the clinical stage of their disease;
- the samples to be used; and
- the minimum acceptable requirements for clinical performance.

Testing in the context of SARS-CoV-2 disease. Reprinted from European Commission [13]

The tests can be classified into two groups:

- Tests that detect the presence of the virus (RNA and antigen tests), which support the diagnosis of patients with symptoms compatible with COVID-19.
- Tests that detect the body's immune response against SARS-CoV-2 (antibody tests), which identify previous infections or, in the presence of the virus, current infections [12, 13].

Technologies based on polymerase chain reaction (PCR) and high-throughput sequencing are commonly used in the molecular approach. They amplify or sequence viral nucleic acids and detect the virus in respiratory samples [14]; gene targets include E, S, and ORF1ab. Rapid diagnostic tests (RDTs) detect antigens or antibodies. Antibody-based tests use enzyme-linked immunosorbent assays (ELISA) or other immunoassay technologies to detect antibodies and identify whether a patient has been previously infected. IgG, IgM, and IgA antibodies related to SARS-CoV-2 infection are detected by qualitative methodology [7, 15]. Antibody tests use blood, plasma, or serum samples. Antigen-based tests, in contrast, detect viral antigens in respiratory samples and diagnose an active infection; the spike (S) and nucleocapsid (N) proteins are the main target antigens [7, 14].

Several other laboratory parameters assist in monitoring the infection. Apart from the aforementioned methods, radiological imaging such as computed tomography (CT) monitors chest changes throughout an infection. Inflammatory biomarkers [e.g., interleukin-6 (IL-6)] have also been detected in patients with COVID-19 [8, 12].

In addition to playing an essential and effective role in a crisis, diagnostic tests contribute to a rapid response in patient triage, diagnosis, monitoring/treatment, and recovery/epidemiological surveillance [13]. Several commercially available diagnostic tests for SARS-CoV-2 have received authorization for use from national and international regulatory agencies [10].

Manufacturers of diagnostic methods for SARS-CoV-2 should evaluate the performance of the test device, usually through performance studies. They should report the performance parameters in the instructions for use and in the technical documentation of the device, so that the compliance of the test specifications with the legislation can be assessed. Furthermore, after a diagnostic test has been commercialized, its performance should be validated to support public health decision-making, especially in the context of the current crisis [9, 16].

A European Commission working document on the current performance of COVID-19 test methods and devices [13] has shown a clear mismatch. On one side is the existing or reported quality assurance and information about the tests/devices; on the other are performance criteria based on good analytical practice principles and corresponding international standards such as ISO/IEC 17025 [16] and ISO 15189 [17]. The study identified a current need to ensure that the performance characteristics of a test method are understood and that the method is scientifically sound under the conditions of its use. This requires a set of checks on the analytical method, such as validation.

3 Quality Assurance

Quality assurance was considered quite revolutionary for laboratories a few years ago, since it underpinned laboratory credibility and proved effective in increasing the reliability of results [18]. It has since been adopted in the daily processes of laboratory management, and several organizations have implemented quality management systems in their routine [19].

The concept of quality is mainly associated with the reliability and traceability of analytical results in laboratories. A quality management system provides tools for managing the factors that may affect the quality of laboratory results. These tools are applied according to documented procedures, so that they are used properly and consistently [18, 19].

In clinical laboratories, a quality control system comprises all the systematic actions necessary to provide reliable results that satisfy patients’ needs and avoid errors [20]. Ensuring quality in diagnostic tests requires regular testing of quality control samples alongside patient samples. The quality control results must also be compared with previously established statistical limits [17].
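As a minimal illustration of comparing quality control results with known statistical limits, the Python sketch below derives a centre line and a standard deviation from a baseline series of control results. It then flags each new result using Levey-Jennings limits: a warning beyond ±2 s and a rejection beyond ±3 s, corresponding to the Westgard 1-2s and 1-3s rules. The function names, the choice of rules, and the example Ct values of a positive control are hypothetical. They are not procedures prescribed by ISO 15189 or by this chapter.

```python
from statistics import mean, stdev


def control_limits(baseline):
    """Centre line and standard deviation from a stable baseline of QC results."""
    return mean(baseline), stdev(baseline)


def check_qc(value, centre, sd):
    """Classify a single QC result against Levey-Jennings limits.

    |value - centre| > 3 sd -> "reject"  (Westgard 1-3s rule)
    |value - centre| > 2 sd -> "warning" (Westgard 1-2s rule)
    otherwise               -> "accept"
    """
    deviation = abs(value - centre)
    if deviation > 3 * sd:
        return "reject"
    if deviation > 2 * sd:
        return "warning"
    return "accept"


# Hypothetical Ct values of a positive control from ten previous runs.
baseline = [28.1, 28.4, 27.9, 28.2, 28.0, 28.3, 28.1, 27.8, 28.2, 28.0]
centre, sd = control_limits(baseline)
for new_ct in (28.1, 28.5, 29.0):
    print(new_ct, check_qc(new_ct, centre, sd))  # accept, warning, reject
```

In practice a laboratory would usually combine several control rules and base the limits on its own validated baseline data. The two rules here only show the principle.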

ISO/IEC 17025:2017 [16] is among the specific standards that regulate the implementation of a quality management system in laboratories; it is flexible enough for any type of laboratory and is applied by many routine laboratories. In contrast, GLP (Good Laboratory Practice) [21] focuses on each study performed. It covers the organizational processes and conditions under which non-clinical environmental health and safety studies are planned, performed, monitored, recorded, archived, and reported. As shown in Fig. 3, research laboratories also apply quality principles and practices to guarantee the quality of their activities.

Major goals and quality systems from different kinds of laboratories, classified according to their activities. Reprinted from Valcárcel and Ríos [22]

NBR ISO/IEC 17025:2017 [16] sets management requirements for the functioning of the quality management system and the administration of the laboratory. Under these requirements, laboratories must assure their customers that they provide data of the expected quality. They do so through tools related to the technical requirements, which are considered essential for the reliability and traceability of the results and are linked to each other in the Analytical Quality Assurance Cycle (AQAC) [23] (see Fig. 4).

Analytical quality assurance cycle (AQAC) with CRM and PT concepts. Modified from Olivares et al. [24]

I. Method validation: refers to the evaluation of a method’s suitability for an intended use;

II. Uncertainty estimation: the confidence level of a result is evaluated with data obtained in the validation stage; and

III. Quality control: refers to a continuous evaluation of the validity of results after the validation and uncertainty estimation stages. The method is continuously monitored, and the data obtained are incorporated into the validation data.

After a method has been evaluated (during validation) and the confidence level of its results has been obtained (by estimating the uncertainty), quality control is applied to demonstrate that the method provides reliable results in each test batch. The use of “calibrated equipment” and “certified standards” helps prove the reliability and traceability of the results and sustains the application of validation, uncertainty estimation, and quality control [19, 25].

Quality control can be applied at either the inter-laboratory or the intra-laboratory level. Inter-laboratory control employs periodically organized proficiency tests (PT), which help control traceability to a given standard. Intra-laboratory control uses reference materials (RM) or certified reference materials (CRM) [26].

3.1 Qualitative and Quantitative Methods

Analytical methods can be classified as quantitative or qualitative. A quantitative method establishes the amount of the analyte through a numerical value with the appropriate units [23, 24]. A qualitative method, in contrast, classifies a sample based on its physical, chemical, or biological properties. Measuring instruments and test kits can provide a binary response by detecting changes that indicate the presence or absence of, for example, a microorganism in a given sample. Detection can be direct (mass or volume) or indirect (color, absorbance, impedance, etc.) [23, 24].

Semi-quantitative methods of analysis lie between qualitative and quantitative ones; they provide an approximate answer regarding the quantity of an analyte and usually assign a test sample to a class (e.g., the concentration can be high, medium, low, or very low) [27].

Before a qualitative method is used to analyze samples, its performance parameters must be studied, analogous to those analyzed during the validation of quantitative methods [27, 28]. Qualitative methods indicate the presence or absence of a particular analyte in a sample. The results of a qualitative analysis are therefore binary responses such as present/absent, positive/negative, or yes/no. The parameters generally evaluated for qualitative methods (Table 1) are specificity, sensitivity, precision, false-positive rate, and false-negative rate. For quantitative methods, repeatability and reproducibility are assessed instead.
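The rates listed above are usually computed from a 2 × 2 contingency table that cross-classifies test results against a reference result (for example, a reference RT-PCR, which is an assumption here). The Python sketch below follows that convention, and the class and field names are illustrative. The definitions used are the common ones and may differ in detail from those in Table 1:

- sensitivity = TP/(TP + FN)
- specificity = TN/(TN + FP)
- false-negative rate = FN/(TP + FN)
- false-positive rate = FP/(TN + FP)

```python
from dataclasses import dataclass


@dataclass
class ContingencyTable:
    """Counts of test results cross-classified against a reference result."""
    tp: int  # reference positive, test positive
    fn: int  # reference positive, test negative
    fp: int  # reference negative, test positive
    tn: int  # reference negative, test negative


def performance(t: ContingencyTable) -> dict:
    """Return sensitivity, specificity and the false-response rates."""
    ref_pos = t.tp + t.fn  # samples that are positive by the reference
    ref_neg = t.tn + t.fp  # samples that are negative by the reference
    return {
        "sensitivity": t.tp / ref_pos,
        "specificity": t.tn / ref_neg,
        "false_negative_rate": t.fn / ref_pos,
        "false_positive_rate": t.fp / ref_neg,
    }


# Hypothetical validation panel: 100 reference-positive, 200 reference-negative samples.
print(performance(ContingencyTable(tp=95, fn=5, fp=2, tn=198)))
```

With these hypothetical counts, sensitivity is 0.95 and specificity is 0.99, so the false-negative and false-positive rates are their complements, 0.05 and 0.01.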

Quantitative methods are described by a well-established set of performance characteristics. When these are compared with those of qualitative methods, only the limit of detection (LOD) has essentially the same meaning for both, although concepts related to selectivity are also important for both. The major features of qualitative methods are measures of “correctness” (i.e., indications of false response rates), which have no direct counterpart in quantitative methods [29].
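For qualitative molecular tests, one widely used empirical way to express the LOD is the lowest tested concentration at which a high proportion of replicates is detected, often at least 95 % (e.g., 19 of 20). The Python sketch below implements that hit-rate rule and assumes that every concentration at or above the LOD must also meet the criterion. The function name, the default rate, and the example dilution series are hypothetical. Probit-based estimates are a common alternative that is not shown here.

```python
def lod_by_hit_rate(replicates, min_rate=0.95):
    """Lowest concentration from which every higher tested level meets min_rate.

    replicates maps concentration -> non-empty list of booleans (True = detected).
    Returns None if even the highest concentration fails the criterion.
    """
    lod = None
    # Walk down from the highest concentration; stop at the first failing level.
    for conc in sorted(replicates, reverse=True):
        hits = replicates[conc]
        if sum(hits) / len(hits) >= min_rate:
            lod = conc
        else:
            break
    return lod


# Hypothetical dilution series (copies/mL) with 20 replicates per level.
series = {
    1000: [True] * 20,
    500: [True] * 20,
    250: [True] * 19 + [False],
    125: [True] * 15 + [False] * 5,
}
print(lod_by_hit_rate(series))  # prints 250
```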

Qualitative analyses have gained importance in laboratories and several sectors, since they are fast, objective, low-cost, and simple. They also minimize errors owing to the shorter interval between sampling and analysis, and they are frequently selected for screening [27].

A qualitative analysis (Fig. 5) requires both specification of the property and assessment of the suitability of the analysis for the intended use. The reporting of a qualitative analytical result must be supported by valid procedures and an adequate quality control of the test, and the way the results are reported depends on the purpose of the analysis and the recipient of the report [30].

Qualitative analysis process: (1) problem description, (2) method development and (3) validation, (4) tests on unknown items checked through quality control, and (5) reporting of results (Based on EURACHEM/CITAC Guide (2021) [30])

The aforementioned analyses can be implemented in several analytical areas. For example, rapid testing methods, including qualitative tests, detect microbiological contaminants, heavy metals, pesticides, foreign bodies, mycotoxins, allergens, and other analytes in foods [27].

3.2 Method Validation

The quality of a method must comply with national and international regulations in all areas of analysis. Therefore, a laboratory must take appropriate steps to provide high-quality data. These include the use of validated methods of analysis, internal quality control procedures, proficiency testing, and accreditation to an International Standard such as ISO/IEC 17025 [20, 23].

During the development of a method or test, its performance characteristics must be evaluated for optimization, and it must undergo preliminary validation studies [25] to establish criteria for its performance parameters. Validation is confirmation, through the provision of objective evidence, that the requirements for a specific intended use or application have been fulfilled. It thus proves the method's applicability for a particular purpose [16].

Method validation is an essential component of the measures implemented by a laboratory for the production of reliable analytical data, as shown in Table 2.

The validation process consists of evaluating the performance characteristics of a method and comparing them with the analytical requirements [16]. Therefore, before implementing tests, the laboratory must prove that it can properly operate a standardized method and provide objective evidence that the specific requirements for an intended use have been met. It must also define the validation parameters and criteria that best demonstrate the suitability of the method for that use [25].

Figure 6 illustrates the general process of validating qualitative methods, which involves both experimental and non-experimental steps. If the final result is satisfactory, the method can be considered “validated”; otherwise, it is necessary to return to the previous steps [32].

General validation process of a qualitative method: (1) Conversion of the client's information needs (e.g., threshold limit and % reliability required) into the expected characteristics of the analytical information provided by the qualitative method; (2) A priori selection of the qualitative method (e.g., standard, standard modified or developed by the laboratory) most appropriate to solve the analytical problem; (3) Selection of the key characteristics of the qualitative method; (4) Establishment of the set of experimental processes for the determination of the selected characteristics of the method; and (5) Comparison of the characteristics in step (4) with those established a priori (i.e., confirmation of the fitness for the purpose of the qualitative method). (Based on Cárdenas and Valcárcel [32])
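Step (5) of the process above compares the determined characteristics with those established a priori. It can be sketched in Python as a simple check of each characteristic against a minimum or maximum limit. The characteristic names and limits below are hypothetical placeholders, not acceptance criteria proposed in this chapter or by any regulator. In practice they would come from step (1), the client's information needs.

```python
def fit_for_purpose(observed, criteria):
    """Compare observed method characteristics with a priori criteria.

    criteria maps a characteristic name to ("min", limit) or ("max", limit).
    Returns (validated, failures); validated is True only if every criterion is met.
    """
    failures = []
    for name, (kind, limit) in criteria.items():
        value = observed.get(name)
        if value is None:
            failures.append(f"{name}: not determined")
        elif kind == "min":
            if value < limit:
                failures.append(f"{name}: {value} below required {limit}")
        elif kind == "max":
            if value > limit:
                failures.append(f"{name}: {value} above allowed {limit}")
        else:
            raise ValueError(f"unknown criterion type {kind!r} for {name}")
    return (not failures, failures)


# Hypothetical acceptance criteria and observed validation results.
criteria = {
    "sensitivity": ("min", 0.95),
    "specificity": ("min", 0.98),
    "false_positive_rate": ("max", 0.02),
}
observed = {"sensitivity": 0.93, "specificity": 0.99, "false_positive_rate": 0.01}
print(fit_for_purpose(observed, criteria))
```

A non-empty failure list corresponds to the case where the method is not yet fit for purpose and the earlier steps are revisited.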

If an existing method has been modified towards meeting specific requirements, or if an entirely new method has been developed, the laboratory must ensure their performance characteristics meet the requirements of the intended analytical operations. Regarding modified methods (standardized or not) or those developed by a laboratory, a series of parameters defined by the laboratory must be evaluated for ensuring the suitability of the method for the intended use [33].

The main characteristics of the methods during the validation process are selectivity, limit of detection (LOD) and limit of quantification (LOQ), working range, analytical sensitivity, trueness (bias, recovery), precision (repeatability, intermediate precision, and reproducibility), measurement uncertainty, and ruggedness (robustness) [25].

Harmonized IUPAC [34], AOAC [35], and EURACHEM [25] protocols, among others, describe how validation studies must be conducted and how the results must be analyzed for quantitative methods. The validation of qualitative methods is an important bottleneck for the recognition of the competence of laboratories. Several publications on validation procedures for qualitative methods are available in the literature. Unlike for quantitative methods, however, no harmonized document has established the parameters to be evaluated in each process [27, 36]. A specific approach to this topic can be found in Trullols et al.’s “Validation of qualitative analytical methods” [27].

3.3 Uncertainty Estimation

An assessment of the risk of misclassification is recommended in the development of any test procedure; therefore, a laboratory is commonly expected to establish or have access to information on the risks of incorrect results [25]. Regarding standardized test procedures established by groups outside the laboratory and suitable for an intended purpose, access to performance data may be limited or even non-existent [16].

The aforementioned evaluation can provide a detailed test specification, and relevant factors under the control of the laboratory often satisfy the requirements of a test procedure [25]. The evaluation may involve demonstrating that the uncertainty of the control parameters and the performance of the test are adequate for the ultimate objective of the testing [37].

According to the International Vocabulary of Metrology (VIM) [31], measurement uncertainty is a non-negative parameter characterizing the dispersion of the quantity values being attributed to a measurand, based on the information used.

Expressing an uncertainty usually involves an interval about the measurement result. This interval is expected to encompass a large fraction of the distribution of values that could reasonably be attributed to the quantity subject to measurement [37]. The method should readily provide an interval whose coverage probability or level of confidence realistically corresponds to the level required for assessing and expressing the measurement uncertainty [38].
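For a quantitative result, the interval described above is commonly obtained following the GUM approach. Independent standard uncertainties are combined in quadrature into a combined standard uncertainty u_c. This is multiplied by a coverage factor k to give the expanded uncertainty U = k·u_c; k = 2 corresponds to a coverage probability of approximately 95 % when the distribution is approximately normal.

The Python sketch below rests on several assumptions:

- the components are uncorrelated;
- they are already expressed in the units of the result;
- their sensitivity coefficients equal one.

The component values and their labels are hypothetical.

```python
import math


def from_rectangular(half_width):
    """Standard uncertainty of a quantity known only to lie within +/-half_width."""
    return half_width / math.sqrt(3)


def combined_standard_uncertainty(components):
    """Root sum of squares of uncorrelated standard uncertainties (same units)."""
    return math.sqrt(sum(u * u for u in components))


def expanded_uncertainty(components, k=2.0):
    """Expanded uncertainty U = k * u_c; k = 2 gives roughly 95 % coverage."""
    return k * combined_standard_uncertainty(components)


# Hypothetical components: repeatability, calibrator value, and a tolerance of +/-0.3.
components = [0.3, 0.4, from_rectangular(0.3)]
u_c = combined_standard_uncertainty(components)
print(f"u_c = {u_c:.3f}, U (k = 2) = {expanded_uncertainty(components):.3f}")
```

The same arithmetic does not carry over directly to qualitative (binary) results, which is one reason their uncertainty is discussed separately below.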

Laboratories are currently not expected to assess or report uncertainties associated with qualitative analysis results. However, some standards, such as ISO/IEC 17025 [16] and ISO 15189 [17], require laboratories to ensure that they can obtain valid qualitative and quantitative analysis results and that they are aware of their reliability. When necessary, such laboratories report limitations for interpreting results and accurately answer customers’ questions about reliability [28].

The assessment of uncertainties associated with quantitative parameters or analysis results has been the subject of considerable efforts [1]. On the other hand, uncertainties in qualitative analysis have received much less attention due to challenges for the establishment of uncertainty parameters associated with the method of analysis [39].

Despite the wide variety of metrics that express uncertainty in qualitative results, only limited consensus has been reached on which to use [5]. The most basic way to quantify the performance of a qualitative analysis is to calculate rates of correct and false results. A “positive” result is classified as a true positive or a false positive, and a “negative” result as a true negative or a false negative. However, such rates can be expressed relative either to the total number of cases of a specific type (e.g., all truly positive samples) or to the total number of results of a given type (e.g., all positive results) [30].
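Whether a rate is related to the number of samples of a given true state or to the number of results of a given type makes a large difference in practice. The Python sketch below applies both kinds of denominator to the same hypothetical counts: a panel of 1000 samples, 50 of them truly positive. Relative to the true state, it gives the false-negative and false-positive rates. Relative to the reported results, it gives the positive and negative predictive values, whose complements are the proportions of positive and negative results that are false. The function name and counts are illustrative assumptions.

```python
def rates_by_denominator(tp, fp, tn, fn):
    """False-response rates relative to true state vs. relative to reported result."""
    return {
        # Denominators: numbers of truly positive / truly negative samples.
        "false_negative_rate": fn / (tp + fn),
        "false_positive_rate": fp / (fp + tn),
        # Denominators: numbers of reported positive / reported negative results.
        "positive_predictive_value": tp / (tp + fp),
        "negative_predictive_value": tn / (tn + fn),
    }


# Hypothetical panel: 50 truly positive and 950 truly negative samples.
r = rates_by_denominator(tp=45, fp=19, tn=931, fn=5)
print(r)
print("share of positive results that are false:", 1 - r["positive_predictive_value"])
```

Here only 2 % of the truly negative samples test positive, yet 19 of the 64 positive results (about 30 %) are false. The denominator therefore needs to be stated whenever such rates are reported.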

“Assessment of performance and uncertainty in qualitative chemical analysis” [30], a recently published guide produced by a joint Eurachem/CITAC working group, is based on experiences from several analytical fields through performance and qualitative uncertainty analyses and provides some performance alternatives to express the quality of qualitative analytical results (see Table 3).

3.4 Proficiency Testing

The laboratory must implement a quality assurance (QA) system that includes monitoring its performance through comparison with the results of other laboratories, when available and appropriate, to control the validity of its measurements [26, 30]. This is an important aspect of the requirements for accredited laboratories and for those seeking accreditation [16].

Participation in a proficiency test (PT) complements a laboratory’s internal quality control (IQC) procedures, since it provides an additional external measure of the laboratory’s measurement capability [26, 30].

Proficiency tests are interlaboratory studies used as tools for the external evaluation and demonstration of the reliability of laboratory analytical results. They help identify failures and enable corrective or preventive actions to be taken. Moreover, participation in them is one of the items required for laboratory accreditation under ISO/IEC 17025:2017 [16].

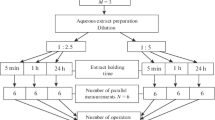

According to ISO/IEC 17043 [40], the proficiency testing provider must follow some steps, such as instruction to participants, handling of PT items, distribution, data analysis, and evaluation, as shown in Fig. 7.

Steps of a proficiency test according to ISO/IEC 17043:2011 [40]

Interlaboratory comparison ensures that a validated method whose uncertainty has been estimated continues to work satisfactorily. In principle, method validation and internal quality control are sufficient to ensure a method’s accuracy; in practice, however, they are often not perfect [41]. During method validation, unknown influences can interfere with the measurement process, and certified reference materials (CRMs) are not available in several industries [27]. Given these factors, it is difficult to establish the traceability of results, and unidentified sources of error may be present in the measurement process. Proficiency testing has the advantage of giving participants an external and independent assessment of the accuracy of their results [42].

In a general context, some of the benefits of PTs are [40]:

- Laboratory performance evaluation and continuous monitoring;
- Evidence of reliable results and identification of problems related to the testing system;
- Possible corrective and/or preventive actions;
- Evaluation of the efficiency of internal controls;
- Determination of performance characteristics and method validation; and
- Standardization of market-facing activities and recognition of test results at national and international levels.

The development and application of PT involve a series of steps, with different approaches chosen according to the matrix and analytes to be evaluated [24]. These include:

- assignment of values by consensus or as a reference value calculated by different strategies;
- performance evaluation (z-score, zeta score, etc.);
- graphical methods (Youden plot, histograms, etc.); and
- evaluation of the stability and homogeneity of PT items, among others.
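The z-score and zeta score mentioned above can be sketched in Python as follows. Here the assigned value is taken as the consensus median of the participants' results. The standard deviation for proficiency assessment, σ_pt, is taken as the scaled median absolute deviation (MADe = 1.483 × MAD), one of the simple robust estimators described in ISO 13528. ISO 13528 also describes other options, such as Algorithm A or a fitness-for-purpose σ_pt, which are not shown. The interpretation limits follow common PT practice:

- |z| ≤ 2: satisfactory;
- 2 < |z| < 3: questionable;
- |z| ≥ 3: unsatisfactory.

The example Ct values are hypothetical.

```python
import math
from statistics import median


def consensus(results):
    """Robust consensus: median as assigned value, MADe as sigma_pt."""
    x_pt = median(results)
    mad = median([abs(x - x_pt) for x in results])
    return x_pt, 1.483 * mad


def z_score(x, x_pt, sigma_pt):
    """z = (x - x_pt) / sigma_pt."""
    return (x - x_pt) / sigma_pt


def zeta_score(x, u_x, x_pt, u_xpt):
    """zeta = (x - x_pt) / sqrt(u(x)^2 + u(x_pt)^2), using standard uncertainties."""
    return (x - x_pt) / math.sqrt(u_x ** 2 + u_xpt ** 2)


def classify(score):
    """Common interpretation of z or zeta scores."""
    a = abs(score)
    if a <= 2.0:
        return "satisfactory"
    if a < 3.0:
        return "questionable"
    return "unsatisfactory"


# Hypothetical Ct values reported by seven participants for one PT item.
results = [31.0, 30.5, 31.2, 30.8, 31.1, 34.0, 30.9]
x_pt, sigma_pt = consensus(results)
for x in results:
    z = z_score(x, x_pt, sigma_pt)
    print(f"{x:5.1f}  z = {z:6.2f}  {classify(z)}")
```

The median and MADe are insensitive to the outlying result (34.0), which is why a robust consensus is often preferred when the assigned value comes from participants' data.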

Using PT or other external control schemes in quality control has three benefits for the laboratory. It can verify the effectiveness of its internal quality control, obtain an external reference for the accuracy of its results, and compare its results with those of other laboratories. Among the several parameters calculated for assessing the quality of a laboratory’s performance is the homogeneity of the results from the participating laboratories [16, 40].

ISO/IEC 17043 [40] describes requirements for the competence of PT providers, and ISO 13528 [42] provides statistical approaches to PT data, including data from qualitative methods. Practical information on how to select, use, and interpret PT schemes can be found in the Eurachem Guide [25]. However, no fully appropriate scheme has yet been developed for emerging fields of analysis or rare applications.

4 Application of Quality Statistical Tools in Tests for the Detection of SARS-CoV-2

Quality control, one of the tools most closely related to metrology [19], involves a set of measurement operations that ensure the products manufactured by a company meet the technical specifications required for their introduction to the market [20].

An increasing number of SARS-CoV-2 RNA tests (mostly RT-PCR) and tests for antibodies against the coronavirus (mostly immunoassays) have been reported, whereas only a small number of antigen tests are available [13].

The pandemic crisis caused by SARS-CoV-2 and the need for tests that diagnose the virus have required adequate evaluations (i.e., validations) of the performance of the emerging test methods. They have also required protocols that standardize the specification of the tests' quality, safety, and efficacy. Such protocols involve validating the methods and determining their uncertainty to ensure public health safety during their use. Furthermore, proficiency tests developed in the public domain enable laboratories to prove their competence in performing the tests.

Among the main metrological quality tools are validation, uncertainty estimation, and proficiency testing, as discussed above. Figure 8 displays a complete set of measures to be taken by laboratories to achieve high-quality results. Apart from validated and/or standardized methods, effective measures include:

- internal quality control (IQC) procedures (use of reference materials (RMs), control charts, etc.);
- participation in proficiency tests;
- accreditation to an international standard, usually ISO/IEC 17025 [16]; and
- registration of the test with regulatory agencies such as ANVISA [23].

Different levels of quality validation categories for analyses of diagnostic methods for SARS-CoV-2. Adapted from Olivares and Lopes [23]

SARS-CoV-2 diagnostic tests have been performed worldwide in laboratories with extensive experience and technical capacity for nucleic acid amplification testing. The commercialized tests have been validated and approved by regulatory agencies. However, few data on their efficacy in large-scale implementation are currently available.

A survey analyzed 64 SARS-CoV-2 diagnostic tests registered with the Agência Nacional de Vigilância Sanitária (ANVISA) [10] (Fig. 9). It found that no standardization of the performance parameters had been established to ensure the quality of their results, which poses risks to public health.

Main performance parameters used in tests for the diagnosis of SARS-CoV-2 in Brazil, according to ANVISA (2020) [10]

Most parameters reported by the manufacturers (e.g., sensitivity and specificity) showed discrepancies and followed no standard. Low sensitivity of a diagnostic test leads to false negatives, which particularly affects cases involving asymptomatic individuals.
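One point worth keeping in mind when comparing reported sensitivities is that an estimate from a small panel carries wide statistical uncertainty. The Python sketch below computes a Wilson score confidence interval for a proportion. This is one standard method for binomial proportions, not necessarily the one used by manufacturers or by ANVISA, and the panel sizes are hypothetical. A sensitivity of 95 % observed in 19 of 20 positive samples is compatible with a true sensitivity of roughly 76 % to 99 %. The same 95 % observed in 190 of 200 samples narrows this to roughly 91 % to 97 %.

```python
import math


def wilson_interval(successes, n, z=1.96):
    """Wilson score interval for a binomial proportion (z = 1.96 -> about 95 %)."""
    if n <= 0:
        raise ValueError("n must be positive")
    p = successes / n
    z2 = z * z
    denom = 1 + z2 / n
    centre = (p + z2 / (2 * n)) / denom
    half_width = z * math.sqrt(p * (1 - p) / n + z2 / (4 * n * n)) / denom
    return centre - half_width, centre + half_width


# Same observed sensitivity (95 %) from two hypothetical panel sizes.
for detected, positives in ((19, 20), (190, 200)):
    low, high = wilson_interval(detected, positives)
    print(f"{detected}/{positives}: 95 % CI {low:.3f} to {high:.3f}")
```

The Wilson interval is chosen here instead of the simple normal-approximation interval because the latter behaves poorly for proportions close to 0 or 1, which is where sensitivity and specificity estimates usually lie.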

A study by the European Commission [13] on the current performance of COVID-19 test methods and devices revealed an urgent need for adequate assessment (i.e., validation) of the performance of both existing and emerging test methods. This applies whether they target SARS-CoV-2 viral RNA, antigens, or antibodies [1].

We can conclude that no standardization has been established for assessing the quality of these tests and that no data or parameters on their measurement uncertainty are available.

Regarding the proficiency tests currently available worldwide, only one PT has been identified for the diagnosis of COVID-19 in Brazil, and only seven international tests (offered by private companies) have been reported. Given the importance and urgency of diagnostic methods for COVID-19, it is crucial to develop a public and widely applicable PT for laboratory comparisons and assurance of results. Such a PT could also serve as a model for other qualitative PTs in emerging disease diagnoses, ensuring interlaboratory testing reliability.

New protocols for validation, uncertainty calculation, and development of proficiency assays must be implemented not only for the current scenario. They are also needed for future SARS-CoV-2 tests that diagnose the immunity obtained after infection or vaccination. Once the issues of reliability and evaluation have been clarified, diagnostic tests will be an essential tool for developing strategies against SARS-CoV-2 and diseases that may emerge.

5 Conclusions and Future Perspectives

This chapter has discussed statistical quality tools for the quality control of diagnostic tests for SARS-CoV-2.

Among such tools, validation proves that an analytical method is suitable for its purpose, thus ensuring that routine analyses produce consistent values when compared with a reference value. A method is considered validated when it has been evaluated against a series of established parameters and has achieved the expected performance. These parameters include specificity and selectivity, linearity, working range, precision (including repeatability), detection limit, quantification limit, accuracy, and robustness.

An uncertainty estimate expressing the confidence in each test result must also be established. Laboratories must be aware of the reliability of their qualitative results so that, when necessary, they can report the limitations on interpreting those results and respond accurately to customers’ questions about reliability.
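One common way to attach an uncertainty statement to qualitative results is to report a confidence interval for the error rates estimated during validation, such as the false negative or false positive rate. The Python sketch below uses the Wilson score interval for a binomial proportion; this is one standard choice among several (the Clopper-Pearson exact interval is another), not necessarily the approach prescribed by a given guideline, and the counts are hypothetical.

```python
import math


def wilson_interval(successes: int, trials: int, z: float = 1.96) -> tuple[float, float]:
    """Wilson score interval for a binomial proportion (z = 1.96 gives ~95 % coverage)."""
    if trials <= 0:
        raise ValueError("trials must be positive")
    if not 0 <= successes <= trials:
        raise ValueError("successes must lie between 0 and trials")
    p_hat = successes / trials
    z2 = z * z
    denom = 1 + z2 / trials
    centre = (p_hat + z2 / (2 * trials)) / denom
    half_width = (z / denom) * math.sqrt(p_hat * (1 - p_hat) / trials + z2 / (4 * trials**2))
    # Clamp to [0, 1] to absorb floating-point rounding at the boundaries.
    return max(0.0, centre - half_width), min(1.0, centre + half_width)


if __name__ == "__main__":
    # Hypothetical validation counts.
    print("false negative rate (8/100):", wilson_interval(8, 100))
    print("false positive rate (0/100):", wilson_interval(0, 100))
```

For 8 false negatives among 100 reference-positive samples, the interval spans roughly 4 % to 15 %, and even 0 false positives among 100 reference-negative samples leaves an upper bound of about 3.7 %. Observing no errors in a finite panel therefore does not demonstrate an error rate of zero, which is one reason laboratories should be able to state the limitations of their qualitative results.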

Moreover, well-characterized reference (control) materials that simulate real patient samples, as well as reference test methods, should be inventoried, verified, or established so that the performance of different tests can be compared against the required quality standards. Additional proficiency testing exercises must also be organized so that laboratories can demonstrate their competence in COVID-19 testing.

Despite the importance of applying statistical tools to diagnostic tests for SARS-CoV-2 detection, no standardized requirements for them have been established in the registration process with regulatory bodies. This chapter has therefore evaluated the application of statistical tools (validation, uncertainty estimation, and proficiency testing) to the development and monitoring of such tests. Beyond diagnostics, the transmission cycles of SARS-CoV-2 must be understood, and mechanisms for preventing and mitigating transmission must be developed for future risk scenarios involving emerging zoonotic diseases, including the current one.

References

Eurachem. Trends & challenges in ensuring quality in analytical measurements, Book of Abstracts, 01, https://www.eurachem.org/images/stories/workshops/2021_05_QA/pdf/abstracts/Book_of_Abstracts_final.pdf (2021)

D. Wang, B. Hu, C. Hu, F. Zhu, X. Liu, J. Zhang, B. Wang, H. Xiang, Z. Cheng, Y. Xiong, Y. Zhao, Y. Li, X. Wang, Z. Peng, Clinical characteristics of 138 hospitalized patients with 2019 novel coronavirus-infected pneumonia in Wuhan, China. JAMA 323, 1061–1069 (2020). https://doi.org/10.1001/jama.2020.1585

T. Ahmad, M. Khan, T.H. Musa, S. Nasir, J. Hui, D.K. Bonilla-Aldana, A.J. Rodriguez-Morales, COVID-19: zoonotic aspects. Travel Med. Infect. Dis. 36, 101607 (2020). https://doi.org/10.1016/j.tmaid.2020.101607

M.A. Shereen, S. Khan, A. Kazmi, N. Bashir, R. Siddique, COVID-19 infection: Origin, transmission, and characteristics of human coronaviruses. J. Adv. Res. 24, 91–98 (2020). https://doi.org/10.1016/j.jare.2020.03.005

A. Patrì, L. Gallo, M. Guarino, G. Fabbrocini, Sexual transmission of severe acute respiratory syndrome coronavirus 2 (SARS-CoV-2): a new possible route of infection? J. Am. Acad. Dermatol. 82, e227 (2020). https://doi.org/10.1016/j.jaad.2020.03.098

B.D. Kevadiya, J. Machhi, J. Herskovitz, M.D. Oleynikov, W.R. Blomberg, N. Bajwa, D. Soni, S. Das, M. Hasan, M. Patel, A.M. Senan, S. Gorantla, J.E. McMillan, B. Edagwa, R. Eisenberg, C.B. Gurumurthy, S.P.M. Reid, C. Punyadeera, L. Chang, H.E. Gendelman, Diagnostics for SARS-CoV-2 infections. Nat. Mater. 20, 593–605 (2021). https://doi.org/10.1038/s41563-020-00906-z

Y. Pan, X. Li, G. Yang, J. Fan, Y. Tang, J. Zhao, X. Long, S. Guo, Z. Zhao, Y. Liu, H. Hu, H. Xue, Y. Li, Serological immunochromatographic approach in diagnosis with SARS-CoV-2 infected COVID-19 patients. J. Infect. 81, e28–e32 (2020). https://doi.org/10.1016/j.jinf.2020.03.051

N.N.T. Nguyen, C. McCarthy, D. Lantigua, G. Camci-Unal, Development of diagnostic tests for detection of SARS-CoV-2. Diagnostics. 10, 1–28 (2020). https://doi.org/10.3390/diagnostics10110905

ANVISA: Information Note - Use of quick tests – Covid-19. 2507, 1–9 (2020)

ANVISA: Accuracy of diagnostic tests registered with ANVISA for COVID-19. 1–35 (2020)

B. Flower, J.C. Brown, B. Simmons, M. Moshe, R. Frise, R. Penn, R. Kugathasan, C. Petersen, A. Daunt, D. Ashby, S. Riley, C.J. Atchison, G.P. Taylor, S. Satkunarajah, L. Naar, R. Klaber, A. Badhan, C. Rosadas, M. Khan, N. Fernandez, M. Sureda-Vives, H.M. Cheeseman, J. O’Hara, G. Fontana, S.J.C. Pallett, M. Rayment, R. Jones, L.S.P. Moore, M.O. McClure, P. Cherepanov, R. Tedder, H. Ashrafian, R. Shattock, H. Ward, A. Darzi, P. Elliot, W.S. Barclay, G.S. Cooke, Clinical and laboratory evaluation of SARS-CoV-2 lateral flow assays for use in a national COVID-19 seroprevalence survey. Thorax 75, 1082–1088 (2020). https://doi.org/10.1136/thoraxjnl-2020-215732

V. Tiwari, M. Kumar, A. Tiwari, B.M. Sahoo, S. Singh, S. Kumar, R. Saharan, Current trends in diagnosis and treatment strategies of COVID-19 infection. Environ. Sci. Pollut. Res. 28, 64987–65013 (2021). https://doi.org/10.1007/s11356-021-16715-z

European Commission, Current performance of COVID-19 test methods and devices and proposed performance criteria (2020), pp. 1–32. https://ec.europa.eu/docsroom/documents/40805. Accessed 30 June 2020

X. Li, M. Geng, Y. Peng, L. Meng, S. Lu, Molecular immune pathogenesis and diagnosis of COVID-19. J. Pharm. Anal. 10, 102–108 (2020). https://doi.org/10.1016/j.jpha.2020.03.001

Z. Li, Y. Yi, X. Luo, N. Xiong, Y. Liu, S. Li, R. Sun, Y. Wang, B. Hu, W. Chen, Y. Zhang, J. Wang, B. Huang, Y. Lin, J. Yang, W. Cai, X. Wang, J. Cheng, Z. Chen, K. Sun, W. Pan, Z. Zhan, L. Chen, F. Ye, Development and clinical application of a rapid IgM-IgG combined antibody test for SARS-CoV-2 infection diagnosis. J. Med. Virol. 92, 1518–1524 (2020). https://doi.org/10.1002/jmv.25727

ABNT NBR ISO/IEC 17025:2017, General requirements for the competence of testing and calibration laboratories (2017)

ISO 15189, Clinical laboratories: Requirements for quality and competence (2015)

I.R.B. Olivares, Quality management in laboratories. Publishing company Átomo, Campinas, SP (2019)

ISO 9001: Quality management systems (2021)

M.C. de Souza, A.L. Korzenowski, F.A. de Medeiros, C.S. ten Caten, R. Herzer, Standards for quality management in clinical analysis laboratories. Espacios. 37, 363–368 (2016)

OECD Environment Directorate, Chemicals Group and Management Committee, OECD series on principles of good laboratory practice and compliance monitoring. Ann. Ist. Super. Sanita. 33, 1–172 (1997)

M. Valcárcel, A. Ríos, Quality assurance in analytical laboratories engaged in research and development activities. Accredit. Qual. Assur. 8, 78–81 (2003)

I.R.B. Olivares, F.A. Lopes, Essential steps to providing reliable results using the analytical quality assurance cycle. TrAC—Trends Anal. Chem. 35, 109–121 (2012). https://doi.org/10.1016/j.trac.2012.01.004

I.R.B. Olivares, G.B. de Souza, A.R. de Araujo Nogueira, V.H.P. Pacces, P.A. Grizotto, P.S. da Silva Gomes Lima, R.M. Bontempi, Trends in the development of proficiency testing for chemical analysis : focus on food and environmental matrices. Accredit. Qual. Assur. (2021). https://doi.org/10.1007/s00769-021-01487-3

Eurachem Guide, The fitness for purpose of analytical methods: a laboratory guide to method validation and related topics, 2nd edn. (2014)

Eurachem Guide, Selection, use and interpretation of proficiency testing (PT) schemes by laboratories (2000)

E. Trullols, I. Ruisánchez, F.X. Rius, Validation of qualitative analytical methods. TrAC—Trends Anal. Chem. 23, 137–145 (2004). https://doi.org/10.1016/S0165-9936(04)00201-8

C. de Souza Gondim, O.A.M. Coelho, R.L. Alvarenga, R.G. Junqueira, S.V.C. de Souza, An appropriate and systematized procedure for validating qualitative methods: its application in the detection of sulfonamide residues in raw milk. Anal. Chim. Acta. 830, 11–22 (2014). https://doi.org/10.1016/j.aca.2014.04.050

S.L.R. Ellison, T. Fearn, Characterising the performance of qualitative analytical methods: statistics and terminology. TrAC—Trends Anal. Chem. 24, 468–476 (2005). https://doi.org/10.1016/j.trac.2005.03.007

QAWG: Assessment of performance and uncertainty in qualitative chemical analysis, Draft 03/2021 (2021)

National Institute of Metrology, Quality and Technology (INMETRO), International vocabulary of metrology (2012)

S. Cárdenas, M. Valcárcel, Analytical features in qualitative analysis. TrAC—Trends Anal. Chem. 24, 477–487 (2005). https://doi.org/10.1016/j.trac.2005.03.006

National Institute of Metrology, Quality and Technology (INMETRO): DOQCGCRE-089 (2017)

M. Thompson, S.L.R. Ellison, R. Wood, Harmonized guidelines for single-laboratory validation of methods of analysis (IUPAC technical report). Pure Appl. Chem. 74, 835–855 (2002). https://doi.org/10.1351/pac200274050835

B. Pöpping, J. Boison, S. Coates, C. Von Holst, R. MacArthur, R. Shillito, P. Wehling, AOAC international guidelines for validation of qualitative binary chemistry methods. J. AOAC Int. 97, 1492–1495 (2014). https://doi.org/10.5740/jaoacint.BinaryGuidelines

C.S. Gondim, R.G. Junqueira, S.V.C. Souza, Trends in implementing the validation of qualitative methods of analysis (Tendências em validação de métodos de ensaios qualitativos). Rev. Inst. Adolfo Lutz. 70, 433–447 (2011)

ISO/IEC Guide 98-4:2012, Uncertainty of measurement, Part 4: Role of measurement uncertainty in conformity assessment (International Organization for Standardization, 2012)

ABNT ISO/IEC Guide 98-3, Uncertainty of measurement, Part 3: Guide to the expression of uncertainty in measurement (2016)

B.L. Mil’man, L.A. Konopel’ko, Uncertainty of qualitative chemical analysis: general methodology and binary test methods. J. Anal. Chem. 59, 1129–1130 (2004). https://doi.org/10.1023/B:JANC.0000049712.88066.e7

ABNT NBR ISO/IEC 17043:2011, Conformity assessment: General requirements for proficiency testing. 46 (2011)

A.K. Ávila, L.J.R. Pereira, P.L.S. Ferreira, P.R.G. Couto, R.S. Couto, T.O. Araujo, R.M.H. Borges, Proficiency testing: A powerful tool for national laboratories (2004)

ISO 13528:2015, Statistical methods for use in proficiency testing by interlaboratory comparison (2015)

V. Haselmann, M.K. Özçürümez, F. Klawonn, V. Ast, C. Gerhards, R. Eichner, V. Costina, G. Dobler, W.J. Geilenkeuser, R. Wölfel, M. Neumaier, Results of the first pilot external quality assessment (EQA) scheme for anti-SARS-CoV2-antibody testing. Clin. Chem. Lab. Med. 58, 2121–2130 (2020). https://doi.org/10.1515/cclm-2020-1183

Acknowledgements

The authors are grateful to CAPES (001 and Pandemias 8887.504861/2020-00) and FAPESP (no. 2020/01238-4) for their financial support and groups MeDiCo and RQA Labs for all support and assistance during the development of this research.

© 2023 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this chapter

Cite this chapter

de Oliveira Rodrigues, C., Olivares, I.R.B. (2023). Application of Quality Statistical Tools for the Evaluation of Diagnostic Tests for SARS-CoV-2 Detection. In: Crespilho, F.N. (eds) COVID-19 Metabolomics and Diagnosis. Springer, Cham. https://doi.org/10.1007/978-3-031-15889-6_8

DOI: https://doi.org/10.1007/978-3-031-15889-6_8

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-15888-9

Online ISBN: 978-3-031-15889-6

eBook Packages: Chemistry and Materials Science, Chemistry and Material Science (R0)