Abstract

Density-based clustering methods can detect clusters of arbitrary shape. Most traditional clustering methods require the number of clusters as a parameter, but this information is usually not available. Moreover, some density-based clustering methods cannot estimate local density accurately: when the density of a given point is estimated, each neighbor of the point should carry a different importance. To solve these problems, a new density-based clustering method is proposed based on K-nearest neighbor density estimation and shared nearest neighbors. It assigns different weights to the K-nearest neighbors of a given point and redefines the local density. In addition, a new clustering process is introduced: the number of shared nearest neighbors between the given point and the higher-density points is calculated first, so that the cluster to which the given point belongs can be identified, and the remaining points are allocated according to their distance to the nearest higher-density point. With this clustering process, the proposed method can automatically discover the number of clusters. Experimental results on synthetic and real-world datasets show that the proposed method achieves the best performance in most cases compared with K-means, DBSCAN, CSPV, DPC, and SNN-DPC.



1 Introduction

Clustering [1,2,3,4] is an important process in pattern recognition, machine learning, and other fields. It can be used as an independent tool to gain insight into the data distribution and can be applied in image processing [3,4,5,6], data mining [3], intrusion detection [7, 8], and bioinformatics [9]. Without any prior knowledge, clustering methods assign points to different clusters according to their similarity, such that points in the same cluster are similar to each other while points in different clusters have low similarity. Clustering methods are divided into several categories [10]: density-based, centroid-based, model-based, and grid-based clustering methods.

Common centroid-based clustering methods include K-means [11] and K-medoids [12]. This type of method performs clustering by judging the distance between each point and the cluster centers. Therefore, these methods can only identify spherical or nearly spherical clusters and need the number of clusters as prior knowledge [13].

Density-based clustering methods can identify clusters of arbitrary shape and are not sensitive to noise [2]. Common and representative density-based clustering methods include DBSCAN [14], OPTICS [15], and DPC [16]. DBSCAN defines a density threshold using a neighborhood radius Eps and a minimum number of points Minpts, and on this basis distinguishes core points from noise points. As an effective extension of DBSCAN, OPTICS only needs the value of Minpts and generates an augmented cluster ordering that represents the density-based clustering structure of the data. DPC, proposed by Rodriguez and Laio [16], is based on two assumptions: a cluster center is surrounded by neighbors with lower local density, and the distance between a cluster center and any point with higher local density is relatively large. It can effectively identify high-density centers [16].

At present, most density-based clustering methods still have drawbacks. DBSCAN-like methods can produce good clustering results, but they depend on the distance threshold [17]. To avoid this dependence, ARKNN-DBSCAN [18] and RNN-DBSCAN [19] redefine the local density of points using the number of reverse nearest neighbors. Hou et al. [20] proposed a criterion for recognizing cluster centers based on the relative density relationship, which is less affected by the density kernel and by density differences. IDDC [21] uses a relative density based on K-nearest neighbors to estimate the local density of points. CSPV [22] is a potential-based clustering method that replaces density with the potential energy calculated from the distribution of all points. The one-step clustering process in some methods may lead to continuity errors: once a point is incorrectly assigned, more points may be assigned incorrectly as a consequence [23]. To solve this problem, Yu et al. [24] proposed a method that assigns the non-grouped points to suitable clusters according to evidence theory and the information of K-nearest neighbors, improving the accuracy of clustering. Liu et al. [25] proposed a fast density peak clustering algorithm based on shared nearest neighbors (SNN-DPC), which improves the clustering process and reduces, to a certain extent, the impact of the density peaks and the allocation process on the clustering results. However, the location and number of cluster centers still need to be selected manually from the decision graph.

These methods have solved the problems of earlier methods to a certain extent, but they do not consider the importance of individual points, which may result in inaccurate density calculation. This paper attempts to address both the inaccurate definition of local density and the errors caused by the one-step clustering process, and proposes a new density-based clustering method. Based on K-nearest neighbor density estimation [26, 27] and shared nearest neighbors [25, 28], we redefine the K-nearest neighbor density estimation used to calculate the local density, assigning a different importance to each neighbor of the given point. A new clustering process is also proposed: the number of shared nearest neighbors between the given point and the higher-density points is calculated first, so that the cluster to which the given point belongs can be identified, and the remaining points are allocated according to their distance to the nearest higher-density point. To some extent, this avoids the continuity error caused by directly assigning points to the cluster of the nearest higher-density neighbor. After the local density is calculated, all points are sorted in descending order of density, and the cluster centers are then selected among the points whose density is higher than that of the given point. Through this process, the method can automatically discover both the cluster centers and the number of clusters.

The rest of this paper is organized as follows. Section 2 introduces the relevant definitions and the proposed clustering method. Section 3 discusses the experimental results on synthetic and real-world datasets and compares the proposed method with other classical clustering methods according to several evaluation metrics. Section 4 summarizes the paper and discusses future work. Table 1 lists the symbols and notations used in this paper.

2 Method

A new clustering method is proposed that combines a new density estimation method with a new allocation strategy in the clustering process. The new density estimation method is based on K-nearest neighbor density estimation, the nonparametric density estimation method proposed by Fix and Hodges [26]. K-nearest neighbor density estimation [26, 27] is a well-known and simple density estimation method based on the following idea: the density function at a continuity point can be estimated from the number of neighbors observed in a small region near the point. In the dataset \(X\left[N\right]={\left\{{x}_{i}\right\}}_{i=1}^{N}\), the estimate of the density function is based on the distance from \({x}_{i}\) to its K-th nearest neighbor. For points in regions of different density, the neighborhood size determined by the K-nearest neighbors is adaptive, which preserves the resolution of high-density regions and the continuity of low-density regions.

For each \({x}_{i}\in {R}^{d}\), the estimated density of \({x}_{i}\) is:

\[\hat{f}\left({x}_{i}\right)=\frac{K}{N\cdot {V}_{d}\cdot {r}_{k}{\left(i\right)}^{d}} \tag{1}\]

where \({V}_{d}\) is the volume of the unit sphere in \({R}^{d}\), K is the number of neighbors, N is the number of points in the dataset, and \({r}_{k}\left(i\right)\) represents the distance from \({x}_{i}\) to its K-th nearest neighbor in the dataset \(X\left[N\right]\).
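As an illustration of the classical estimator described above, the following minimal Python sketch computes \(K/(N\cdot {V}_{d}\cdot {r}_{k}{(i)}^{d})\) for every point by brute force. The function names `unit_ball_volume` and `knn_density` and the toy data are hypothetical and chosen only for this example; the sketch assumes Euclidean distance and that a point is not counted as its own neighbor.

```python
import math


def unit_ball_volume(d):
    """Volume of the unit ball in R^d: pi^(d/2) / Gamma(d/2 + 1)."""
    return math.pi ** (d / 2) / math.gamma(d / 2 + 1)


def knn_density(points, k):
    """Classical k-NN density estimate K / (N * V_d * r_k(i)^d) for each point."""
    n = len(points)
    d = len(points[0])
    v_d = unit_ball_volume(d)
    densities = []
    for i, p in enumerate(points):
        # Distances from p to every other point, in ascending order.
        dists = sorted(math.dist(p, q) for j, q in enumerate(points) if j != i)
        r_k = dists[k - 1]  # distance to the k-th nearest neighbor
        # Note: duplicate points can give r_k == 0; this sketch does not handle that.
        densities.append(k / (n * v_d * r_k ** d))
    return densities


# Four points in a tight square plus one far-away point (illustrative data).
points = [(0.0, 0.0), (0.1, 0.0), (0.0, 0.1), (0.1, 0.1), (5.0, 5.0)]
dens = knn_density(points, k=2)
```

In this toy example the isolated point at (5, 5) receives a much lower estimate than the points in the square, because its K-th neighbor distance is much larger.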

2.1 The New Density Estimation Method

Based on K-nearest neighbor density estimation, a new density estimation method is proposed. According to the number of neighbors shared between the given point and the other points, the volume of a region containing the K shared nearest neighbors of the given point is used to estimate the local density. Generally, the parameter K is set as \(K={k}_{1}\times \sqrt{N}\), where \({k}_{1}\) is a coefficient. The local density estimate is redefined as:

\[{\rho }_{i}=\frac{{K}_{i}}{N\cdot {V}_{i}} \tag{2}\]

where N is the number of points in the dataset. For point \({x}_{i}\), \({K}_{i}\) is the number of points weighted according to shared nearest neighbors, and \({V}_{i}\) is the volume of the high-dimensional sphere with radius \({R}_{i}\). All the spheres considered in the experiments are closed Euclidean spheres.

The K-nearest neighbors [27, 29] of a point are the K points closest to it in distance. For points \({x}_{i}\) and \({x}_{j}\) in the dataset, the K-nearest neighbor sets of \({x}_{i}\) and \({x}_{j}\) are denoted \(KNN\left(i\right)\) and \(KNN\left(j\right)\). Based on the K-nearest neighbors, the shared nearest neighbors [25, 28] of \({x}_{i}\) and \({x}_{j}\) are the points common to both K-nearest neighbor sets, expressed as:

\[SNN\left(i,j\right)=KNN\left(i\right)\cap KNN\left(j\right) \tag{3}\]

That is to say, the matrix \(SNN\) represents the numbers of shared nearest neighbors between points.
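The shared-nearest-neighbor counts can be sketched in a few lines of Python. This is a brute-force illustration only: the names `knn_sets` and `snn_counts` are hypothetical, and the sketch assumes that a point is excluded from its own K-nearest neighbor set, which the text does not specify.

```python
import math


def knn_sets(points, k):
    """Return, for every point, the set of indices of its k nearest neighbors."""
    neighbors = []
    for i, p in enumerate(points):
        # ASSUMPTION: a point is not its own neighbor.
        order = sorted((j for j in range(len(points)) if j != i),
                       key=lambda j: math.dist(p, points[j]))
        neighbors.append(set(order[:k]))
    return neighbors


def snn_counts(points, k):
    """Matrix whose (i, j) entry is |KNN(i) & KNN(j)|, the shared-neighbor count."""
    knn = knn_sets(points, k)
    n = len(points)
    return [[len(knn[i] & knn[j]) for j in range(n)] for i in range(n)]


# Two well-separated groups on a line (illustrative data).
points = [(0.0,), (1.0,), (2.0,), (10.0,), (11.0,), (12.0,)]
snn = snn_counts(points, k=2)
```

With these toy points, pairs inside the same group share at least one neighbor, while pairs from different groups share none, which is the property the allocation step relies on.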

For a given point \({x}_{i}\), the other points are sorted in descending order of the number of neighbors they share with \({x}_{i}\). Let \({x}_{j}\) be the K-th point in this order, that is, the K-th shared nearest neighbor of \({x}_{i}\). The neighborhood radius \({R}_{i}\) of point \({x}_{i}\) is then defined as:

\[{R}_{i}=D\left(i,j\right) \tag{4}\]

where D is the distance matrix of the dataset, and the distance is the Euclidean distance.

Given the radius \({R}_{i}\) of point \({x}_{i}\), the volume of its neighborhood can be calculated by:

where d is the dimension of the feature vector in the dataset.

In general, the neighbors of a point \({x}_{i}\) differ in importance, so their contributions to the density estimate of \({x}_{i}\) should differ as well. In our definition, this contribution is related to the number of shared nearest neighbors between \({x}_{i}\) and each of its neighbors. According to the number of neighbors shared by points \({x}_{i}\) and \({x}_{j}\), a weight coefficient is defined that assigns different weights to the K-nearest neighbors of any point:

where \(|SNN\left(i,j\right)|\) is the number of shared neighbors between point \({x}_{i}\) and point \({x}_{j}\).

\({K}_{i}\) is redefined by adding the different weights to K-nearest neighbors of point \({x}_{i}\), as shown in Eq. 7:

If a neighbor \({x}_{j}\) of \({x}_{i}\) shares more nearest neighbors with \({x}_{i}\), its weight is larger and it contributes more to the local density of \({x}_{i}\). Using Eq. 6 and Eq. 7, Eq. 1 can be expressed in the form of Eq. 8.

In summary, when the local density of \({x}_{i}\) is calculated, different weights are assigned to the K points falling in its neighborhood according to the number of shared neighbors. The more nearest neighbors a point shares with \({x}_{i}\), the greater its contribution to the local density estimate of \({x}_{i}\).
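The following Python sketch illustrates the weighted density idea summarized above. It is not the authors' implementation and rests on several assumptions made only for illustration: the weight of neighbor \({x}_{j}\) is taken as \(|SNN(i,j)|/K\), which may differ from the paper's Eq. 6; the neighborhood volume is taken as \({V}_{d}\cdot {R}_{i}^{d}\); ties in the shared-neighbor ranking are broken by distance; and a point is not its own neighbor. The function name `weighted_density` is hypothetical.

```python
import math


def unit_ball_volume(d):
    """Volume of the unit ball in R^d."""
    return math.pi ** (d / 2) / math.gamma(d / 2 + 1)


def weighted_density(points, k):
    """Sketch of a shared-neighbor-weighted k-NN density, K_i / (N * V_i)."""
    n, d = len(points), len(points[0])
    dist = [[math.dist(p, q) for q in points] for p in points]
    # k nearest neighbors of every point (a point is not its own neighbor).
    knn = [sorted((j for j in range(n) if j != i), key=lambda j: dist[i][j])[:k]
           for i in range(n)]
    knn_set = [set(nb) for nb in knn]
    densities = []
    for i in range(n):
        shared = [len(knn_set[i] & knn_set[j]) for j in range(n)]
        # ASSUMPTION: weight of neighbor j is |SNN(i, j)| / k.
        k_i = sum(shared[j] / k for j in knn[i])
        # Rank the other points by shared-neighbor count (descending),
        # breaking ties by distance (ASSUMPTION), and take the k-th one.
        ranked = sorted((j for j in range(n) if j != i),
                        key=lambda j: (-shared[j], dist[i][j]))
        r_i = dist[i][ranked[k - 1]]
        # ASSUMPTION: V_i = V_d * R_i^d; r_i == 0 (duplicates) is not handled.
        v_i = unit_ball_volume(d) * r_i ** d
        densities.append(k_i / (n * v_i))
    return densities


# Four corners and the center of a unit square, plus one outlier (illustrative data).
points = [(0.0, 0.0), (0.0, 1.0), (1.0, 0.0), (1.0, 1.0), (0.5, 0.5), (10.0, 10.0)]
dens = weighted_density(points, k=3)
```

In this toy example the center of the square obtains a far higher density than the outlier, whose K-th shared nearest neighbor lies far away.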

2.2 A New Allocation Strategy in the Clustering Process

The allocation process of some clustering methods has poor fault tolerance. When one point is assigned incorrectly, more subsequent points will be affected, which will have a severe negative impact on the clustering results [23, 24]. Therefore, a new clustering process is proposed to make the allocation more reasonable and avoid the continuity error caused by direct allocation to a certain extent.

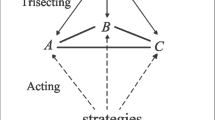

In the proposed clustering method, all points are sorted in descending order of local density. The sorted indices are stored in the array \(sortedIdx\left[1\dots N\right]\). The points in the sorted queue are then visited one by one, from the highest local density to the lowest. The first point in the queue has the highest local density and automatically becomes the center of the first cluster. For each subsequent point \(sortedIdx\left[i\right]\) in the queue, two special points are identified among the visited points: parent1 is the visited point nearest to \(sortedIdx\left[i\right]\), and parent2 is the point that shares the largest number of nearest neighbors with \(sortedIdx\left[i\right]\). The number of shared nearest neighbors between \(sortedIdx\left[i\right]\) and parent2 is compared with K/2. If it is at least K/2, \(sortedIdx\left[i\right]\) is assigned to the cluster to which parent2 belongs. Otherwise, the distance between \(sortedIdx\left[i\right]\) and parent1 is compared with the given distance bandwidth parameter B. If the distance is greater than B, \(sortedIdx\left[i\right]\) becomes the center of a new cluster; if not, it is assigned to the cluster to which parent1 belongs. This process continues until all points have been visited and assigned to clusters. The details of the proposed method are shown in Algorithm 1.
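The allocation process described above can be sketched in Python as follows. This is an illustrative reading of the text, not a reproduction of Algorithm 1: the function name `allocate` is hypothetical, parent2 is assumed to be searched among the already visited (higher-density) points, and the threshold is taken as K/2. The densities in the toy example are hand-picked values, not the output of the density estimator.

```python
def allocate(density, dist, snn, k, bandwidth):
    """Assign cluster labels by visiting points from highest to lowest density."""
    order = sorted(range(len(density)), key=lambda i: density[i], reverse=True)
    labels = [-1] * len(density)
    labels[order[0]] = 0              # the densest point is the first cluster center
    n_clusters = 1
    visited = [order[0]]
    for idx in order[1:]:
        # parent1: nearest already visited (higher-density) point.
        parent1 = min(visited, key=lambda j: dist[idx][j])
        # parent2: visited point sharing the most nearest neighbors with idx
        # (ASSUMPTION: the search is restricted to visited points).
        parent2 = max(visited, key=lambda j: snn[idx][j])
        if snn[idx][parent2] >= k / 2:
            labels[idx] = labels[parent2]
        elif dist[idx][parent1] > bandwidth:
            labels[idx] = n_clusters  # idx becomes the center of a new cluster
            n_clusters += 1
        else:
            labels[idx] = labels[parent1]
        visited.append(idx)
    return labels, n_clusters


# Toy 1-D example: two groups of three points (illustrative values only).
xs = [0.0, 1.0, 2.0, 10.0, 11.0, 12.0]
k = 2
m = len(xs)
dist = [[abs(a - b) for b in xs] for a in xs]
knn = [set(sorted((j for j in range(m) if j != i), key=lambda j: dist[i][j])[:k])
       for i in range(m)]
snn = [[len(knn[i] & knn[j]) for j in range(m)] for i in range(m)]
density = [0.5, 1.0, 0.6, 0.4, 0.9, 0.3]   # hand-picked densities
labels, n_clusters = allocate(density, dist, snn, k, bandwidth=3.0)
```

For this toy input the sketch returns the labels `[0, 0, 0, 1, 1, 1]` and two clusters: the second-densest point lies farther than the bandwidth from the first center and shares no neighbors with it, so it starts a new cluster, and every later point joins a cluster through its shared neighbors.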

3 Experiments

In this section, we use classical synthetic datasets and real-world datasets to test the performance of the proposed method, with K-means, DBSCAN, CSPV, DPC, and SNN-DPC as the baselines. The performance of the proposed method is compared with these five classical clustering methods according to several evaluation metrics.

3.1 Datasets and Processing

To verify the performance of the proposed method, we select real-world and synthetic datasets with different sizes, dimensions, and numbers of clusters. The synthetic datasets include Flame, R15, D31, S2, and A3. The real-world datasets include Iris, Wine, Seeds, Breast, Wireless, Banknote, and Thyroid. The characteristics of the datasets used in the experiments are presented in Table 2. The evaluation metrics used in the experiments are as follows: Normalized Mutual Information (NMI) [30], adjusted Rand index (ARI) [30], and Fowlkes-Mallows index (FMI) [31]. Each metric has an upper bound of 1, and larger values indicate better clustering results.
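As a concrete illustration of one of these metrics, the Fowlkes-Mallows index can be computed by counting pairs of points: \(FMI = TP/\sqrt{(TP+FP)(TP+FN)}\), where TP is the number of pairs placed together in both labelings, FP the number placed together only in the predicted labeling, and FN the number placed together only in the true labeling. The sketch below is a straightforward quadratic-time version; the function name `fowlkes_mallows` and the label vectors are illustrative.

```python
import math
from itertools import combinations


def fowlkes_mallows(true_labels, pred_labels):
    """Pair-counting Fowlkes-Mallows index between two labelings."""
    tp = fp = fn = 0
    for i, j in combinations(range(len(true_labels)), 2):
        same_true = true_labels[i] == true_labels[j]
        same_pred = pred_labels[i] == pred_labels[j]
        if same_true and same_pred:
            tp += 1      # pair grouped together in both labelings
        elif same_pred:
            fp += 1      # grouped together only in the prediction
        elif same_true:
            fn += 1      # grouped together only in the ground truth
    if tp == 0:
        return 0.0
    return tp / math.sqrt((tp + fp) * (tp + fn))


truth = [0, 0, 0, 1, 1, 1]
perfect = fowlkes_mallows(truth, [1, 1, 1, 0, 0, 0])   # same partition, renamed labels
partial = fowlkes_mallows(truth, [0, 0, 1, 1, 1, 1])   # one point misassigned
```

The index depends only on which points are grouped together, so renaming the cluster labels leaves it at 1, while moving a single point to the wrong cluster lowers it to \(4/\sqrt{42}\approx 0.617\) in this example.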

3.2 Parameters Selection

We tune the parameters of each method so that the methods are compared at their best performance: for each method, the parameters corresponding to its optimal results are chosen. The real number of clusters is given to K-means, DPC, and SNN-DPC.

The proposed method needs two key parameters: the number of nearest neighbors K and the distance bandwidth B. Following [22], the parameter B is derived from the distance matrix D:

The parameter K is selected by the formula \(K={k}_{1}\times \sqrt{N}\), which ties K to the dataset size N through the coefficient \({k}_{1}\). The appropriate value is related to the size of the dataset and of its clusters. In the proposed method, \({k}_{1}\) is limited to (0, 9] to adapt to different datasets. Figure 1 shows the FMI indices of some representative datasets for different values of \({k}_{1}\). It can be seen that for datasets S2 and R15, the FMI index is not sensitive to \({k}_{1}\) when \({k}_{1}\) lies within (0, 1.5), and for the Wine dataset, the FMI index is not sensitive to \({k}_{1}\) over the whole range.

3.3 Experimental Results

We conduct comparison experiments on 12 datasets and evaluate the clustering results with different evaluation metrics. In the following experiments, we first verify the effect of the new density estimation method proposed in this paper. Then, to test the effectiveness of the automatically discovered number of clusters, the proposed method is compared with the other methods that discover this number themselves. Finally, the complete proposed method is compared with the other five commonly used methods.

The New Density Estimation Method.

The new density estimation method described in Sect. 2.1 is based on the original K-nearest neighbor density estimation [26]. To check whether the new method improves the accuracy of the local density calculation, a comparison experiment is conducted between the original method and the new method. First, the original and the new density estimation methods are each used to estimate the local density of the points. Second, after the local density is calculated and sorted in descending order, the same clustering process, the one proposed in [22], is used to assign the points. Finally, the clustering results on the datasets are evaluated by different metrics, as shown in Fig. 2 and Fig. 3.

Compared with the original method, the new method is superior on most real-world datasets but slightly worse on Seeds. On the synthetic datasets R15, D31, S2, and A3, the new method produces good clustering results that differ little from those of the original method. In summary, the new method shows an advantage over the original density estimation method.

The Effectiveness of the Automatically Discovered Number of Clusters.

Comparison experiments are conducted among DBSCAN, CSPV, and the proposed method to verify the validity of the automatically discovered number of clusters. The proposed method is not compared with K-means, DPC, and SNN-DPC here because these methods are given the real number of clusters. The experimental results are shown in Table 3. The accuracies of the number of clusters discovered by DBSCAN, CSPV, and the proposed method are 42%, 42%, and 83%, respectively. On the 5 synthetic datasets, the proposed method correctly discovers the number of clusters, and it outperforms DBSCAN and CSPV on the real-world datasets Iris, Seeds, Breast, and Wireless. In summary, the proposed method is better than DBSCAN and CSPV at automatically discovering the number of clusters.

Experiments on the Different Datasets.

Experiments are conducted on the different datasets, and the results are presented in Table 4. According to Table 4, the proposed method achieves better clustering results than the other clustering methods on the real-world datasets Iris, Seeds, Breast, Wireless, and Banknote. On Wine, the result of the proposed method is the best. On the Thyroid dataset, the proposed method performs better than DPC and SNN-DPC.

The clustering results of the proposed method on the 5 synthetic datasets are shown in Fig. 4. On Flame, S2, and A3, the proposed method has the best clustering result; on Flame in particular, it reproduces the original data labels exactly. On D31, the proposed method is slightly worse than the best method. On R15, the proposed method generates the same results as K-means, DPC, and SNN-DPC, but it discovers the number of clusters automatically. Overall, on the synthetic datasets the results of the proposed method are similar to, but slightly better than, those of SNN-DPC. The proposed method also outperforms the other five methods on most real-world datasets.

In summary, the proposed method outperforms the other methods in clustering quality in most cases. These results show that the redefinition of local density and the new clustering process are effective.

4 Conclusion

In this paper, a new clustering method is proposed based on K-nearest neighbor density estimation and shared nearest neighbors. When the local density is calculated, both the number of points in the neighborhood and their different contributions are considered, which improves the accuracy of the local density calculation to a certain extent. The experiments show that the proposed method adapts to most of the tested datasets and that the improved local density estimation improves clustering performance. The proposed method has a parameter K, which is tied to the dataset size N by the formula \(K={k}_{1}\times \sqrt{N}\), where \({k}_{1}\) is a coefficient. Although \({k}_{1}\) is limited to a reasonable range, it has a considerable influence on the clustering results for some datasets. As a possible direction for future work, we will explore ways to reduce the influence of the parameter K on the clustering results.

References

[1] Omran, M., Engelbrecht, A.P., Salman, A.: An overview of clustering methods. Intell. Data Anal. 11(6), 583–605 (2007)

[2] Han, J., Pei, J., Kamber, M.: Data Mining: Concepts and Techniques. Elsevier, Amsterdam (2011)

[3] Jain, A.K., Murty, M.N., Flynn, P.J.: Data clustering: a review. ACM Comput. Surv. (CSUR) 31(3), 264–323 (1999)

[4] Zhang, C., Wang, P.: A new method of color image segmentation based on intensity and hue clustering. In: Proceedings 15th International Conference on Pattern Recognition, ICPR-2000, vol. 3, pp. 613–616. IEEE (2000)

[5] Reddy, S., Parker, A., Hyman, J., Burke, J., Estrin, D., Hansen, M.: Image browsing, processing, and clustering for participatory sensing: lessons from a dietsense prototype. In: Proceedings of the 4th Workshop on Embedded Networked Sensors, pp. 13–17 (2007)

[6] Khan, Z., Ni, J., Fan, X., Shi, P.: An improved k-means clustering algorithm based on an adaptive initial parameter estimation procedure for image segmentation. Int. J. Innov. Comput. Inf. Control 13(5), 1509–1525 (2017)

[7] Portnoy, L.: Intrusion detection with unlabeled data using clustering. Ph.D. thesis, Columbia University (2000)

[8] Guan, Y., Ghorbani, A.A., Belacel, N.: Y-means: a clustering method for intrusion detection. In: CCECE 2003-Canadian Conference on Electrical and Computer Engineering. Toward a Caring and Humane Technology (Cat. No. 03CH37436), vol. 2, pp. 1083–1086. IEEE (2003)

[9] Frank, E., Hall, M., Trigg, L., Holmes, G., Witten, I.H.: Data mining in bioinformatics using Weka. Bioinformatics 20(15), 2479–2481 (2004)

[10] Rui, X., Wunsch, D.I.: Survey of clustering algorithms. IEEE Trans. Neural Netw. 16(3), 645–678 (2005)

[11] Macqueen, J.: Some methods for classification and analysis of multivariate observations. In: Proceedings of Berkeley Symposium on Mathematical Statistics Probability (1965)

[12] Kaufman, L., Rousseeuw, P.J.: Finding Groups in Data: An Introduction to Cluster Analysis. Wiley, Hoboken (2005)

[13] Jain, A.K.: Data clustering: 50 years beyond k-means. Pattern Recogn. Lett. 31(8), 651–666 (2010)

[14] Ester, M., Kriegel, H.P., Sander, J., Xu, X.: Density-based spatial clustering of applications with noise. In: International Conference on Knowledge Discovery and Data Mining, vol. 240, p. 6 (1996)

[15] Ankerst, M., Breunig, M.M., Kriegel, H.P., Sander, J.: OPTICS: ordering points to identify the clustering structure. In: SIGMOD 1999, Proceedings ACM SIGMOD International Conference on Management of Data, Philadelphia, Pennsylvania, USA, 1–3 June 1999 (1999)

[16] Rodriguez, A., Laio, A.: Clustering by fast search and find of density peaks. Science 344(6191), 1492–1496 (2014)

[17] Li, H., Liu, X., Li, T., Gan, R.: A novel density-based clustering algorithm using nearest neighbor graph. Pattern Recogn. 102, 107206 (2020)

[18] Pei, P., Zhang, D., Guo, F.: A density-based clustering algorithm using adaptive parameter k-reverse nearest neighbor. In: 2019 IEEE International Conference on Power, Intelligent Computing and Systems (ICPICS), pp. 455–458. IEEE (2019)

[19] Bryant, A., Cios, K.: RNN-DBSCAN: a density-based clustering algorithm using reverse nearest neighbor density estimates. IEEE Trans. Knowl. Data Eng. 30(6), 1109–1121 (2017)

[20] Hou, J., Zhang, A., Qi, N.: Density peak clustering based on relative density relationship. Pattern Recogn. 108(8), 107554 (2020)

[21] Wang, Y., Yang, Y.: Relative density-based clustering algorithm for identifying diverse density clusters effectively. Neural Comput. Appl. 33(16), 10141–10157 (2021). https://doi.org/10.1007/s00521-021-05777-2

[22] Lu, Y., Wan, Y.: Clustering by sorting potential values (CSPV): a novel potential-based clustering method. Pattern Recogn. 45(9), 3512–3522 (2012)

[23] Jiang, J., Chen, Y., Meng, X., Wang, L., Li, K.: A novel density peaks clustering algorithm based on k nearest neighbors for improving assignment process. Phys. A 523, 702–713 (2019)

[24] Yu, H., Chen, L., Yao, J.: A three-way density peak clustering method based on evidence theory. Knowl.-Based Syst. 211, 106532 (2021)

[25] Liu, R., Wang, H., Yu, X.: Shared-nearest-neighbor-based clustering by fast search and find of density peaks. Inf. Sci. 450, 200–226 (2018)

[26] Fukunaga, K., Hostetler, L.: Optimization of k nearest neighbor density estimates. IEEE Trans. Inf. Theory 19(3), 320–326 (1973)

[27] Dasgupta, S., Kpotufe, S.: Optimal rates for k-NN density and mode estimation. In: Advances in Neural Information Processing Systems, vol. 27 (2014)

[28] Ertöz, L., Steinbach, M., Kumar, V.: Finding clusters of different sizes, shapes, and densities in noisy, high dimensional data. In: Proceedings of the 2003 SIAM International Conference on Data Mining, pp. 47–58. SIAM (2003)

[29] Qaddoura, R., Faris, H., Aljarah, I.: An efficient clustering algorithm based on the k-nearest neighbors with an indexing ratio. Int. J. Mach. Learn. Cybern. 11(3), 675–714 (2020)

[30] Vinh, N.X., Epps, J., Bailey, J.: Information theoretic measures for clusterings comparison: variants, properties, normalization and correction for chance. J. Mach. Learn. Res. 11, 2837–2854 (2010)

[31] Fowlkes, E.B., Mallows, C.L.: A method for comparing two hierarchical clusterings. J. Am. Stat. Assoc. 78(383), 553–569 (1983)

Acknowledgment

This work was partially supported by the Gansu Provincial Science and Technology Major Special Innovation Consortium Project (Project No. 1). The innovation consortium is the Gansu Province Green and Smart Highway Transportation Innovation Consortium, and the project is Gansu Province Green and Smart Highway Key Technology Research and Demonstration.


© 2022 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper

Guan, Y., Li, Y., Li, B., Lu, Y. (2022). A Clustering Method Based on Improved Density Estimation and Shared Nearest Neighbors. In: Huang, DS., Jo, KH., Jing, J., Premaratne, P., Bevilacqua, V., Hussain, A. (eds) Intelligent Computing Methodologies. ICIC 2022. Lecture Notes in Computer Science(), vol 13395. Springer, Cham. https://doi.org/10.1007/978-3-031-13832-4_2


DOI: https://doi.org/10.1007/978-3-031-13832-4_2


Publisher Name: Springer, Cham

Print ISBN: 978-3-031-13831-7

Online ISBN: 978-3-031-13832-4

eBook Packages: Computer Science, Computer Science (R0)