Abstract

In the context of the era of big data, hybrid data are multimodality, including numerical, images, audio, etc., and even attribute values may have unknown values. Multikernel fuzzy rough sets can effectively solve large-scale multimodality attributes. At the same time, the decision attribute values may have hierarchical structure relationships, and the multikernel fuzzy rough sets based on hierarchical classification can solve the hierarchical relationships among decision attribute values. In real life, data often change dynamically. The article discusses the updating method when one object changes in the multimodality incomplete decision system based on hierarchical classification, and according to the variation of the tree-based hierarchical class structure, the upper and lower approximations are updated. Finally the deduction is carried out through relevant examples.

Access provided by Autonomous University of Puebla. Download conference paper PDF

Similar content being viewed by others

Keywords

- Multikernel fuzzy rough sets

- Hierarchical classification

- Multimodality incomplete decision system

- Variation of object set

1 Introduction

Fuzzy rough sets skillfully combined the rough sets that deal with classification uncertainty and the fuzzy sets that deal with boundary uncertainty to handle various classes of data types [1]. Subsequently, many scholars expanded and developed fuzzy rough sets [2,3,4,5,6,7]. To deal with multimodality attributes, Hu proposed multikernel fuzzy rough sets [8], different kernel functions used to process multimodality attributes.

Actually, there are semantic hierarchies among most data types [9]. Chen and others constructed a decision tree among classes with a tree-like structure [10]. Inspired by fuzzy rough sets theory, Wang proposed deep fuzzy trees [11]. Zhao embed the hierarchical structure into fuzzy rough sets [12]. Qiu proposed a fuzzy rough sets method using the Hausdorff distance of the sample set for hierarchical feature selection [13].

The expansion and updating of data information will also cause data loss. Therefore, in the multimodality incomplete information system, the incremental updating algorithm of the approximations is particularly important, Zeng proposed an incremental updating algorithm for the variation of object set [14] and the attribute value based on the hybrid distance [6]. Dong L proposed an incremental algorithm for attribute reduction when samples and attributes change simultaneously [15]. Huang proposed a multi-source hybrid rough set (MCRS) [16], under variation of the object, attributes set and attribute values, a matrix-based incremental mechanism studied. However, multikernel fuzzy rough sets incremental algorithm based on hierarchical classification is not considered now.

This paper is organized as follows: Sect. 2 introduces the basic knowledge. In Sect. 3, the hierarchical structure among decision attribute values is considered into the multikernel fuzzy rough sets, the tree-based hierarchical class structure is imported, and the upper and lower approximations of all nodes is proposed. In Sect. 4, an incremental updating algorithm of upper and lower approximations for the immigration and emmigration of single object. Finally a corresponding example is given for deduction. The paper ends with conclusions and further research topics in Sect. 5.

2 An Introduction of Fuzzy Rough Sets

In this section, some related content of fuzzy rough sets will be briefly introduced.

Definition 1

[17]. Given an fuzzy approximate space \(\left\{ {U,R} \right\}\), \(\forall x,y,z \in U\). If R satisfies:

Reflexivity: \(R\left( {x,x} \right) = 1\);

Symmetry: \(R\left( {x,y} \right) = R\left( {y,x} \right)\);

Min-max transitivity: \(min \left( {R\left( {x,y} \right),R\left( {y,z} \right)} \right) \le R\left( {x,z} \right)\).

Then R is said to be a fuzzy equivalence relation on U.

Definition 2

[4]. Given a fuzzy approximate space \(\left\{ {U,R} \right\}\), R is a fuzzy equivalence relation on the U, and the fuzzy lower and upper approximations are respectively defined as follows:

T and S are the triangular mode. N is a monotonically decreasing mapping function.

In a multimodality information system, the attributes of samples are multimodality, and multikernel learning is an effective method, often using different kernel functions to extract information from different attributes [8]. At the same time, there may also be unknown value in the attribute values. In this paper, the case where the attribute values exist unknown value is considered into the multimodality information system.

Definition 3

[6]. Given a multimodality incomplete information system \(\left\{ {U,MC} \right\}\), MC is a multimodality conditional attribute, \(\forall x,y \in U\), \(\forall M \in MC\), and it exists unknown value. \(M\left( x \right)\), \(M\left( y \right)\) are the M attribute values of x and y, respectively. Unknown values are marked as “?”. The similarity relationships extracted from this data using a matching kernel.

It is easy to prove that the kernel function satisfies Definition 1. Therefore, the similarity relation calculated is fuzzy equivalence relation.

Definition 4

[8]. Given a \(\left\{ {U,MC} \right\}\), MC divides the multimodality information system into p subsets with different attributes, referred to as \(MC/U = \left\{ {M_{1} ,M_{2} ,...,M_{P} } \right\}\), \(M_{i} \in MC\), \(K_{i}\) is a fuzzy similarity relation computed with single attribute. \(\forall x,y \in U\), the fuzzy similarity relation based on combination kernels is defined as follows:

Example 1.

Given a \(\left\{ {U,MC,D} \right\}\), there are seven objects, \(MC = \left\{ {K_{1} ,K_{2} ,K_{3} ,K_{4} } \right\}\), \(D = \left\{ {d_{1} ,d_{2} ,d_{3} ,d_{4} ,d_{5} } \right\}\), The details are shown in Table 1.

In Table 1, there are three types of conditional attributes, numerical type, categorical type, and unknown values. It can be regarded as a multimodality incomplete decision system, and different kernel functions is used to extract the fuzzy similarity relationships of different attributes.

\(x_{1}\) and \(x_{2}\) as an example, calculating the fuzzy similarity relationships based on combination kernels:

In the same way, the fuzzy similarity relationships based on combination kernels among other objects can be obtained, and the fuzzy similarity relationships matrix \(K_{{T_{\cos } }} \left( {x,y} \right)\) can be obtained as follows:

3 Multikernel Fuzzy Rough Sets Based on Hierarchical Classifification

Given a multimodality incomplete decision system, in addition to multimodality attributes of objects, there may be hierarchical relationships among decision attribute values. The hierarchical classification is mostly based on a tree structure. A multikernel fuzzy rough sets based on hierarchical classification takes the hierarchical relationships of decision attribute values into account in the fuzzy rough sets.

Definition 5

[4]. Given a \(\left\{ {U,MC} \right\}\). \(K_{{T_{\cos } }}\) is a fuzzy equivalence relation based on combination kernels, X is a fuzzy subset of U, and the approximations are respectively defined as as follows:

Definition 6

[12]. \(\left\{ {U,MC,D_{tree} } \right\}\) is a multimodality decision system based on hierarchical classification, \(D_{Tree}\) is decision attribute based on hierarchical classification, and divides U into q subsets, referred to as \(U/D_{Tree} = \left\{ {d_{1} ,......,d_{p} } \right\}\), \(sib\left( {d_{i} } \right)\) represents the sibling node of \(d_{i}\).\(\forall x \in U\), if \(x \in sib\left( {d_{i} } \right)\), then \(d_{i} \left( x \right) = 0\), else \(d_{i} \left( x \right) = 1\). The approximations of decision class \(d_{i}\) is defined as follows:

Proposition 1.

This paper extends the lower approximations algorithm of the decision class to any node. When the decision class is a non-leaf node, the upper approximations is obtained by finding the least upper bound of the upper approximations of its child nodes. Leaf(d) represents no child node, the child node of \(d_{i}\) is marked as \(d_{ich} = \left\{ {d_{i1} ,d_{i1} ,...,d_{ik} } \right\}\), where k is the number of child nodes, there are:

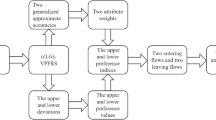

Given a \(\left\{ {U,MC,D_{tree} } \right\}\), the algorithm for the lower and upper approximations is designed in Algorithm 1.

Example 2.

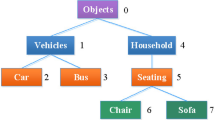

On the basis of Example 1, the decision attributes are divided into five subsets, namely \(d_{1}\), \(d_{2}\), \(d_{3}\), \(d_{4}\), \(d_{5}\). From Fig. 1, \(d_{1} = \left\{ {x_{4} ,x_{6} } \right\}\), and from Table 1, \(sib(d_{1} ) = d_{4} = \left\{ {x_{1} ,x_{8} } \right\}\), according to Proposition 1, the lower and upper approximations of the decision class are calculated as follows:

Because \(d_{1}\) is a non-leaf node, according to Proposition 1 there are:

So the lower and upper approximations of \(d_{1}\) are:

On the basis of Table 1, the tree-based hierarchical class structure is established in Fig. 1.

4 Incremental Updating for Lower and Upper Approximations Under the Variation of Single Object

A \(\left\{ {U,MC,D_{tree} } \right\}\) at time t is given. \(\underline{K}_{{T_{sibling} }}^{\left( t \right)} X\) represents lower approximations, and \(\overline{K}_{{T_{sibling} }}^{(t)} X\) represents upper approximations. Given \(\left\{ {\overline{U} ,\overline{MC} ,\overline{D}_{tree} } \right\}\) represents a multimodality decision system based on hierarchical classification at time t + 1, \(x^{ + } ,x^{ - }\) represents immigration and emmigration of one object, respectively. The fuzzy upper and lower approximations at time t + 1 are denoted by \(\overline{K}_{{T_{sibling} }}^{(t + 1)} X\) and \(\underline{K}_{{T_{sibling} }}^{{\left( {t + 1} \right)}} X\), respectively.

4.1 Immigration of Single Object

The \(x^{ + }\) immigrates into the \(\left\{ {\overline{U} ,\overline{MC} ,\overline{D}_{tree} } \right\}\) at time t + 1, in which \(\overline{U} = U \cup \left\{ {x^{ + } } \right\}\). If no new decision class is generated at time t + 1, tree-based hierarchical class structure does not need to be updated. Otherwise, the tree will be updated. Next, the incremental updating is discussed by whether to generate a new decision class.

Proposition 2.

\(\forall d_{i} \in \overline{U} /\overline{D}_{tree}\), \(x \in \overline{U}\), \(x^{ + }\) will generate a new decision class, and the new class is marked as \(\overline{d}^{ + }_{n + 1}\). The labeled class \(\overline{d}^{ + }_{n + 1}\) needs to be inserted into the tree-based hierarchical class structure. Then the approximations updates of the decision class is shown:

Proof. For the lower approximations of \(d_{i}\), the approximations at time t + 1 is determined by the objects belonging to the sibling nodes of \(d_{i}\). When \(x \ne x^{ + }\) and \(x^{ + } \notin \left\{ {sib\left( {d_{i} } \right)} \right\}\), the lower approximations are the same as time t; when \(x = x^{ + }\) or the sibling nodes of at time t + 1 are newly decision class, calculating its lower approximations directly according to Proposition 1; when \(x^{ + } \in \left\{ {sib\left( {d_{i} } \right)} \right\}\) and all sibling nodes of \(d_{i}\) are not newly classes, \(\forall x \ne x^{ + }\), there is:

For the upper approximations of \(d_{i}\), the upper approximations is determined by the object that belongs to \(d_{i}\). When \(d_{i}\) is a leaf node and \(x \ne x^{ + }\) at time t + 1, that is the newly decision subset not equal to \(d_{i}\), and its upper approximations is the same as time t; When \(d_{i}\) is a leaf and \(x = x^{ + }\) at time t + 1, direct computational approximations according to Proposition 1; In the tree-based hierarchical class structure, the decision class with the same parents node belongs to a major class, so \(d_{i}\) and dich belong to the same major class, When \(d_{i}\) is not a leaf node, we directly find the least upper bound for all child nodes of the decision class \(d_{i}\), and obtain updating of the upper approximations.

Proposition 3.

\(\forall d_{i} \in \overline{U} /\overline{D}_{tree}\), \(x \in \overline{U}\), \(x^{ + }\) will not generate a new decision class, and then the tree-based hierarchical class structure does not need to be updated. The approximations updating of the decision class is shown:

Proof. The proof of the lower approximations updating is similar to Proposition 2; for the upper approximations, at time t + 1, when \(d_{i}\) is a non-leaf node, directly find the least upper bound for the child nodes of \(d_{i}\), and the approximations updating is obtained. When \(d_{i}\) is a leaf node, the upper approximations at time t + 1 is determined by the object that belongs to \(d_{i}\); When \(x = x^{ + }\), the upper approximations is directly calculated according to Proposition 1; When \(x \ne x^{ + }\) and \(x^{ + } \notin \left\{ {d_{i} } \right\}\) the approximations is the same as time t; when \(x \ne x^{ + }\) and \(x^{ + } \in \left\{ {d_{i} } \right\} \, \), there is:

Given a \(\left\{ {\overline{U} ,\overline{MC} ,\overline{D}_{tree} } \right\}\), when immigration of one object, the algorithm for the lower and upper approximations is designed in Algorithm 2.

Example 3.

On the basis of Table 1, one object \(x_{9}^{ + }\) immigrates into system, and its information is shown in Table 2. A new decision class \(d_{6}\) is generated. first the fuzzy similarity relationships with other objects based on combination kernels are calculated according to Definition 6, as follows:

On the basis of Fig. 1, inserting the newly decision class \(d_{6}\) into the tree, the tree is update as follows:

As shown in Fig. 2, \(d_{6} \in leaf\), \(sib\left( {d_{1} } \right) = \left\{ {d_{4} ,d_{6} } \right\} = \left\{ {x_{1} ,x_{8} ,x_{9}^{ + } } \right\}\).

According to Proposition 2:

From Fig. 2, \(d_{1}\) can be obtained as a non-leaf node, according Proposition 2:

So the lower and upper approximations of \(d_{1}\) are:

From Example 3, obviously, when immigration of one object in the multimodality decision system based on hierarchical classification, it will affect the upper and lower approximations of other decision classes. Only a small amount of update operations need to be performed according to properties 2 and 3, which greatly reduces the amount of calculation and time cost.

4.2 Emmigration of Single Object

\(x^{ - }\) emmigrates from the \(\left\{ {\overline{U} ,\overline{MC} ,\overline{D}_{tree} } \right\}\) at time t + 1, in which \(\overline{U} = U - \left\{ {x^{ - } } \right\}\). If no decision class is removed at time t + 1, the tree-based hierarchical class structure does not need to be updated, otherwise, the tree is updated. Next, the incremental updating will be discussed by whether to remove a decision class.

Proposition 4.

\(\forall d_{i} \in \overline{U} /\overline{D}_{tree}\), \(x \in \overline{U}\), \(x^{ - }\) emmigrates form system, which leads to the decision class is removed, marked as \(\overline{d}_{l}\). The tree-based hierarchical class structure will be updated, and the \(\overline{d}_{l}\) will be removed from the tree. Then the approximations updating of the decision class is shown:

Proof. At time t+1, the removed decision class has no upper and lower approximations, but \(x^{ - }\) will have the impact on the upper and lower approximations of other decision classes. If \(x^{ - }\) is the closest object to the sibling node to which the object x belongs, the lower approximations need to be recalculated, otherwise, the lower approximations remains unchanged; if the sibling node of \(d_{i}\) does not exist after \(x^{ - }\) emmigrates, the lower approximations is 0. For a decision class, the upper approximations is only related to the objects belonging to the decision class. At time t+1, \(x^{ - } \notin d_{i}\), so the upper approximations remains the same.

Proposition 5.

\(\forall d_{i} \in \overline{U} /\overline{D}_{tree}\), \(x \in \overline{U}\), \(x^{ - }\) will not cause the decision class to be removed, and then the tree-based hierarchical class structure does not need to be updated. Then the approximations updating of the decision class is shown:

The proof process is similar.

Given a \(\left\{ {\overline{U} ,\overline{MC} ,\overline{D}_{tree} } \right\}\), when one object emmigrates from system, the algorithm for the lower and upper approximations is designed in Algorithm 3.

Example 4.

The object \(x_{3}\) emmigrates from the system on the basis of Table 1, which will cause the decision class \(d_{3}\) to be removed, and the tree will be updated as follows:

It can be seen from Fig. 3 that the child node of \(d_{3}\) has been removed, and \(d_{1}\) is a leaf node. According to Proposition 4, it can be obtained:

Because \(d_{1}\) become a leaf node, where are:

So the lower and upper approximations of \(d_{1}\) are:

From Example 4, it can be seen that when one object emmigrate form the multimodality decision system based on hierarchical class, the emmigration of one objects have an impact on the upper and lower approximations of other decision classes, and only a small amount of update operations need to be performed according to Proposition 4 and 5, which greatly reduces the calculation and time cost.

5 Conclusions and Further Research

In the multimodality decision system based on hierarchical classification, attributes of simples are multimodality, decision attribute values often have a hierarchical structure, and data changes frequently. The paper takes into account the fact that the attribute values are not known in the multimodality information system based on hierarchical classification, and proposes an incremental updating algorithm in multikernel fuzzy rough sets based on hierarchical classification. The specific process of this algorithm is demonstrated through relevant examples. This algorithm can effectively reduce the time cost caused by object set changes. In future work, the approximations updating algorithm of more objects changing in multikernel fuzzy rough sets based on hierarchical classification and the performance of the algorithm to test the algorithm using the UCI dataset will be the focus of the research.

References

Dubois, D., Prade, H.: Rough fuzzy sets and fuzzy rough sets. Int. J. Gen. Syst. 17, 191–209 (1990)

Morsi, N., Yakout, M.: Axiomatics for fuzzy rough sets. Fuzzy Sets Syst. 100, 327–342 (1998)

Mi, J., Zhang, W.: An axiomatic characterization of a fuzzy generalization of rough sets. Inf. Sci. 160, 235–249 (2004)

Yeung, D., Chen, D., Tsang, E.C.: On the generalization of fuzzy rough sets. IEEE Trans. Fuzzy Syst. 13, 343–361 (2005)

Hu, Q., Yu, D., Pedrycz, W., Chen, D.: Kernelized fuzzy rough sets and their applications. IEEE Trans. Knowl. Data Eng. 23, 1649–1667 (2011)

Zeng, A., Li, T., Luo, C., Hu, J.: Incremental updating fuzzy rough approximations for dynamic hybrid data under the variation of attribute values. In: 2015 International Conference on Machine Learning and Cybernetics (ICMLC), pp. 157–162 (2015)

Zeng, K., She, K.: Fuzzy rough set model based on multi-Kernelized granulation. J. Univ. Electron. Sci. Technol. China 5, 717–723 (2014)

Hu, Q., Zhang, L., Zhou, Y.: Large-scale multimodality attribute reduction with multi-kernel fuzzy rough sets. IEEE Trans. Fuzzy Syst. 26, 226–238 (2018)

Zhao, H., Cao, J., Chen, Q.: Methods for hierarchical multi-label text classification. J. Chin. Comput. Syst. 43, 673–683 (2022)

Ceci, M., Malerba, D.: Classifying web documents in a hierarchy of categories: a comprehensive study. J. Intell. Inf. Syst. 28, 37–78 (2007). https://doi.org/10.1007/s10844-006-0003-2

Wang, Y., Hu, Q., Zhu, P., Li, L., Lu, B.: Deep fuzzy tree for large-scale hierarchical visual classification. IEEE Trans. Fuzzy Syst. 28, 1395–1406 (2019)

Zhao, H., Wang, P., Hu, Q.: Fuzzy rough set based feature selection for large-scale hierarchical classification. IEEE Trans. Fuzzy Syst. 27, 1891–1903 (2019)

Qiu, Z., Zhao, H.: A fuzzy rough set approach to hierarchical feature selection based on Hausdorff distance. Appl. Intell., 1–14 (2022). https://doi.org/10.1007/s10489-021-03028-4

Zeng, A., Li, T., Luo, C.: Incremental approach for updating approximations of gaussian kernelized fuzzy rough sets under variation of object set. Comput. Sci. 6, 173–178 (2013)

Dong, L., Chen, D.: Incremental attribute reduction with rough set for dynamic datasets with simultaneously increasing samples and attributes. Int. J. Mach. Learn. Cybern. 11(6), 1339–1355 (2020). https://doi.org/10.1007/s13042-020-01065-y

Huang, Y., Li, T., Luo, C.: Dynamic maintenance of rough approximations in multi-source hybrid information systems. Inf. Sci. 530, 108–127 (2020)

Chen, S., Li, J., W, X.: Fuzzy Set Theory and Its Application, pp. 100–123. Science Press (2006)

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2022 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper

Fan, W., He, C., Zeng, A., Lin, K. (2022). An Incremental Approach Based on Hierarchical Classification in Multikernel Fuzzy Rough Sets Under the Variation of Object Set. In: Huang, DS., Jo, KH., Jing, J., Premaratne, P., Bevilacqua, V., Hussain, A. (eds) Intelligent Computing Methodologies. ICIC 2022. Lecture Notes in Computer Science(), vol 13395. Springer, Cham. https://doi.org/10.1007/978-3-031-13832-4_1

Download citation

DOI: https://doi.org/10.1007/978-3-031-13832-4_1

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-13831-7

Online ISBN: 978-3-031-13832-4

eBook Packages: Computer ScienceComputer Science (R0)