Abstract

We introduce a simple and effective regularization of the knowledge gradient (KG) and use it to present the first sublinear regret bound for KG-based algorithms. We construct the Online learning with Regularized Knowledge Gradients (ORKG) algorithm with an independent Gaussian belief model, and prove that ORKG achieves a sublinear regret upper bound with high probability on bounded independent Gaussian multi-armed bandit (MAB) problems. We analyze the theoretical properties of regularized KG and of ORKG, and validate the empirical characteristics of ORKG with MAB benchmark simulations. ORKG shows top-tier performance, comparable to selected MAB algorithms with provable regret bounds.

D. Lee—This work is supported by the National Research Foundation of Korea (NRF) grant funded by the Korea government (MSIT) (No. 2020R1G1A1102828).


1 Introduction

This paper considers the problem of making the best possible decisions under uncertainty, in which a decision-making agent repeatedly chooses from a set of decisions and then observes an outcome from which a bounded, quantifiable reward can be derived. We assume that the agent knows the set of possible decisions, which is finite and remains the same over the time horizon in which the agent chooses and learns. If such an agent is evaluated only on how well it identifies the choice with the best reward, disregarding the rewards incurred by its choices while learning, the agent is facing a ranking and selection (R&S) problem.

Knowledge gradient (KG) is an algorithm originally proposed to solve R&S problems under an independent Gaussian model assumption [4], and later extended to other assumptions such as correlated Gaussian models [5], Gaussian process models [15], binary cost functions [22], locally nonlinear parametric models [9], and repeated noisy measurements [8]. The empirical effectiveness of KG-based algorithms has been demonstrated in diverse fields where R&S problems arise, for example drug discovery [12], chemical engineering [3], fleet management [10, 21], COVID-19 responses [19], and clinical trials [20].

However, the R&S problem disregards the rewards incurred by the agent's choices while it is learning. As such, R&S is ill-suited to model online learning problems, in which 1) every single reward incurred by the agent counts, and 2) the remaining number of choices the agent must make may be unknown. Little work has been done to utilize KG in online learning; the most notable approach assumes that the agent knows the remaining number of choices [13, 14]. In this paper, we present a new approach that utilizes KG to solve online learning problems with an unknown time horizon.

The novel contributions of this manuscript are summarized as follows. We present the Online learning with Regularized Knowledge Gradients (ORKG) algorithm with independent Gaussian belief, a novel online learning algorithm that uses the knowledge gradient. We provide a theoretical analysis of ORKG, including a proof that, with high probability, ORKG's regret is upper bounded by \(O(\sqrt{KT \ln (KT)})\) in stochastic MAB problems with K bounded independent Gaussian arms, which is the first sublinear regret bound for knowledge gradient based algorithms. We also empirically validate the theoretical properties of ORKG and analyze the sensitivity of ORKG to its key hyperparameters. Lastly, we verify the empirical performance of ORKG in Gaussian stochastic MAB problems against other well-known MAB algorithms with provable regret bounds.

2 Problem Setting

We consider an “online” sequential decision problem in which a decision-making agent faces a partial-information stochastic MAB problem, in particular with bounded Gaussian stochastic arms and an unknown total number of decisions to make. At each time index \(t \in \left\{ 0, 1, \cdots , T-1 \right\} \), with unknown finite time horizon T, the agent must make a decision, denoted by x, among \(K < \infty \) mutually independent arms indexed by \(i \in \left\{ 1, 2, \cdots , K \right\} \), and then observes a bounded random reward (contribution) \(C_t\) drawn from the chosen arm's distribution, whose mean \(\mu ^{i}\) and standard deviation \(\sigma ^i\) are unknown to the agent. We write \(x_t\) for the decision made at time t and \(\mathcal {X}\) for the set of all possible decisions. Hence, \(\forall t: x_t \in \mathcal {X}\), and \(|\mathcal {X}| = K\).

The goal of the agent is twofold: 1) to learn the best arm \(i^*\), whose reward distribution has the largest mean (i.e. \(\mu ^{i^*} = \max _{i} \left\{ \mu ^{i} \right\} =: \mu ^*\)), using the observations from past decisions, and 2) to control the impact of the inevitable suboptimality caused by choosing arms other than the best arm, which is not known a priori. Note that the term “online” is not used as in online convex optimization; it instead refers to the second aspect of the agent's goal: the performance of the agent while it is learning (i.e. “online”) matters, as opposed to batch learning settings such as R&S problems, where only the final performance matters.

The belief state \(B_t\) at time t, for KG-based algorithms with independent Gaussian belief model, is defined as the sufficient information to model Gaussian rewards incurred by each action \(x \in \mathcal {X}\). Hence, we define \(B_t := \left\{ \left. \left( \bar{\mu }^x_t, \bar{\sigma }^x_t \right) \right| x \in \mathcal {X} \right\} \), as the set of mean parameter estimates \(\bar{\mu }_t^x\) and standard deviation parameter estimates \(\bar{\sigma }_t^x\) for all \(x \in \mathcal {X}\).
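The sketch below illustrates how a belief state of this form might be stored and updated. It assumes the standard conjugate normal update used for independent Gaussian beliefs in the KG literature, with zero-mean Gaussian measurement noise of standard deviation `sigma_eps` (playing the role of \(\sigma^\epsilon\)); the update step of Algorithm 1 is not reproduced here, so `GaussianBelief` and `update_belief` are hypothetical names for illustration only.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class GaussianBelief:
    """Belief (mu_bar, sigma_bar) about the mean reward of one action."""
    mu_bar: float
    sigma_bar: float


def update_belief(belief: GaussianBelief, reward: float, sigma_eps: float) -> GaussianBelief:
    """Conjugate normal update after one observation with Gaussian noise std sigma_eps (assumed)."""
    prec_prior = 1.0 / belief.sigma_bar ** 2  # precision of the current belief
    prec_obs = 1.0 / sigma_eps ** 2           # precision of one noisy observation
    prec_post = prec_prior + prec_obs
    mu_post = (prec_prior * belief.mu_bar + prec_obs * reward) / prec_post
    return GaussianBelief(mu_post, math.sqrt(1.0 / prec_post))


# Belief state B_t: one (mu_bar, sigma_bar) pair per action x in X = {1, ..., K}, here K = 3.
B = {x: GaussianBelief(mu_bar=0.0, sigma_bar=1.0) for x in range(1, 4)}
# Choosing x = 2 and observing C = 0.5 updates only that action's belief (independent arms).
B[2] = update_belief(B[2], reward=0.5, sigma_eps=1.0)
print(B[2])
```

Because the arms are modeled as independent, an observation of one action leaves every other action's entry in the belief state unchanged.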

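Under the independent Gaussian belief model, the KG of choosing x at time t can be computed efficiently [6] using the following closed-form formula:

\[ \nu ^{KG,x}_t = \tilde{\sigma }^x_t \left( \xi ^x_t \varPhi \left( \xi ^x_t \right) + \phi \left( \xi ^x_t \right) \right) \tag{1} \]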

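where \(\varPhi ( \cdot )\) and \(\phi ( \cdot )\) are the cumulative distribution function and the probability density function of the standard Gaussian distribution, respectively, and \(\xi _t^x\) is defined as:

\[ \xi ^x_t = - \left| \frac{\bar{\mu }^x_t - \max _{x' \ne x} \bar{\mu }^{x'}_t}{\tilde{\sigma }^x_t} \right| \tag{2} \]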

where \(\tilde{\sigma }_t^x := {\bar{\sigma }_t^x} / {\sqrt{1 + \left( {\sigma ^\epsilon } / {\bar{\sigma }_t^x}\right) ^2}}\), and \(\sigma ^\epsilon \) is the standard deviation of the zero-mean Gaussian measurement noise assumed to be present in every observed reward C(x), for all \(x \in \mathcal {X}\). Most KG-based algorithms treat \(\sigma ^\epsilon \) as a hyperparameter.
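A minimal Python sketch of the closed-form KG computation, assuming the standard independent-Gaussian forms from [6]: \(\nu ^{KG,x}_t = \tilde{\sigma }^x_t \left( \xi ^x_t \varPhi (\xi ^x_t) + \phi (\xi ^x_t) \right)\) with \(\xi ^x_t = -\left| (\bar{\mu }^x_t - \max _{x' \ne x} \bar{\mu }^{x'}_t) / \tilde{\sigma }^x_t \right|\). The helper names are hypothetical, the max over \(x' \ne x\) requires at least two actions, and \(\varPhi \) is computed with `math.erfc` to keep the lower tail accurate.

```python
import math


def std_normal_pdf(z: float) -> float:
    return math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)


def std_normal_cdf(z: float) -> float:
    # erfc keeps the lower tail accurate for very negative z
    return 0.5 * math.erfc(-z / math.sqrt(2.0))


def sigma_tilde(sigma_bar: float, sigma_eps: float) -> float:
    """sigma_tilde = sigma_bar / sqrt(1 + (sigma_eps / sigma_bar)^2)."""
    return sigma_bar / math.sqrt(1.0 + (sigma_eps / sigma_bar) ** 2)


def knowledge_gradients(mu_bar, sigma_bar, sigma_eps):
    """KG of every action under independent Gaussian beliefs (needs >= 2 actions)."""
    kg = []
    for x in range(len(mu_bar)):
        best_other = max(m for i, m in enumerate(mu_bar) if i != x)  # max over x' != x
        s_t = sigma_tilde(sigma_bar[x], sigma_eps)
        xi = -abs((mu_bar[x] - best_other) / s_t)
        kg.append(s_t * (xi * std_normal_cdf(xi) + std_normal_pdf(xi)))
    return kg


# Hypothetical belief over three actions with sigma_eps = 0.1 (the value used in Sect. 5.1).
print(knowledge_gradients([0.0, 0.5, 1.0], [1.0, 1.0, 1.0], sigma_eps=0.1))
```

With equal means, each KG reduces to \(\tilde{\sigma }^x_t \phi (0)\). One can also check that \((\tilde{\sigma }^x_t)^2 = (\bar{\sigma }^x_t)^2 - \left( 1/(\bar{\sigma }^x_t)^2 + 1/(\sigma ^\epsilon )^2 \right) ^{-1}\), i.e. the reduction in the variance of the belief about x from one more observation.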

Using KG as-is to solve online learning problems is expected to fail, because the R&S problem treats a fixed, known number of choices as the learning process and disregards the rewards those choices incur. From this perspective, the KG algorithm for online learning problems (OKG) was proposed [13]. The OKG algorithm chooses action \(x_t\) at time t as:

where T is the total number of choices to make in the online learning problem. Naturally, OKG requires knowing the true time horizon T; as the end of the horizon approaches, it exploits the learned information and chooses the action with the best expected mean reward.

3 Online Learning with Regularized KG

We present Online learning with Regularized KG (ORKG) with independent Gaussian belief, a novel online learning algorithm based on the knowledge gradient, in Algorithm 1. Compared to the OKG algorithm [13], ORKG introduces two key innovations: 1) standardizing and regularizing the knowledge gradient; 2) adaptively learning the exploration parameter \(\rho _t\). ORKG has two key hyperparameters, \(\kappa _R > 0\) and \(0< \delta < 1\), with default values \(\kappa _R=0.01\) and \(\delta =0.01\). These hyperparameters are explained in the theoretical analysis of ORKG (Sect. 4), and their default values are justified in the empirical sensitivity analysis of ORKG (Sect. 5.2).

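As in step 6 of Algorithm 1, the ORKG algorithm chooses the action at time t as:

\[ x_t = \mathop{\arg \max }_{x \in \mathcal {X}} \left\{ \bar{\mu }^x_t + \rho _t \, \nu ^{RKG,x}_t \right\} \tag{4} \]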

where \(\rho _t := \sqrt{2 \ln \left( \frac{2 \left| \mathcal {X} \right| }{ \delta \pi _t} \right) } \frac{1}{\max \left\{ \kappa _{R}, \min _{x \in \mathcal {X}} \kappa _t^{x} \right\} }\), in which \(\delta \in \left( 0, 1\right) \) and \(\pi _t\) is a sequence satisfying \(\sum _{t=0}^{\infty } \pi _t = 1\), for example \(\pi _t := \frac{6}{\pi ^2 \left( t + 1\right) ^2 }\). With this \(\rho _t\), ORKG balances exploitation, which maximizes \(\bar{\mu }^x_t\), the current estimate of the mean reward of action x, against exploration, which maximizes \(\nu ^{RKG,x}_t\), the regularized knowledge gradient of action x at time t. Notably, ORKG does not need to know the time horizon T, whereas OKG explicitly requires the true T, as shown in its decision rule (3). This property allows ORKG to be applied easily to online learning problems where the end of the horizon is unknown or changes over time.
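The exploration weight \(\rho _t\) and the ORKG decision rule (choose the action maximizing \(\bar{\mu }^x_t + \rho _t \nu ^{RKG,x}_t\)) can be sketched in Python as follows, assuming the example sequence \(\pi _t = 6 / (\pi ^2 (t+1)^2)\) given above. The regularized KG values are passed in as precomputed inputs, since their exact computation follows Definition 2; all function names are hypothetical.

```python
import math


def pi_t(t: int) -> float:
    """Example sequence pi_t = 6 / (pi^2 (t+1)^2); it sums to 1 over t = 0, 1, 2, ..."""
    return 6.0 / (math.pi ** 2 * (t + 1) ** 2)


def rho_t(t: int, num_actions: int, delta: float, kappa_R: float, kappa) -> float:
    """Exploration weight rho_t; `kappa` holds the standardized KG of every action at time t."""
    floor = max(kappa_R, min(kappa))  # denominator max{kappa_R, min_x kappa_t^x}
    return math.sqrt(2.0 * math.log(2.0 * num_actions / (delta * pi_t(t)))) / floor


def orkg_choice(mu_bar, nu_rkg, rho: float) -> int:
    """Index maximizing mu_bar[x] + rho * nu_rkg[x] (ties go to the lowest index)."""
    return max(range(len(mu_bar)), key=lambda x: mu_bar[x] + rho * nu_rkg[x])


# Hypothetical inputs: 3 actions at t = 10 with the default delta = kappa_R = 0.01.
rho = rho_t(10, 3, delta=0.01, kappa_R=0.01, kappa=[0.30, 0.08, 0.02])
print(rho, orkg_choice([1.0, 0.9, 0.2], [0.001, 0.004, 0.0001], rho))
```

Under these definitions, \(\rho _t\) grows only logarithmically in t (through \(\ln (t+1)^2\)) and decreases as \(\delta \) increases, because a larger \(\delta \) lowers the argument of the logarithm.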

With its carefully constructed decision rule, ORKG manages the exploration-exploitation dilemma in online learning problems with unknown horizon, and achieves a sublinear regret upper bound, as shown in Theorem 1.

Theorem 1

In stochastic MAB problems with bounded independent Gaussian arms, ORKG algorithm with independent Gaussian belief has regret upper bound:

with probability \(1 - \delta \), where \(0<\delta <1\) and \(L^{RKG}<\infty \) is a constant uniformly bounding the smoothness of the regularized KG surface.

Our proof strategy, inspired by the GP-UCB algorithm [17], is as follows: first, we bound the deviations of the Gaussian rewards and combine these bounds with union bounds to bound the squared one-step regret with high probability, given \(\delta \) and \(\kappa _R\); then we sum the one-step regrets to bound the regret R(T), which yields the \(\rho _t\) shown in Algorithm 1. The smoothness constant \(L^{RKG}\) is analyzed in greater detail in Sect. 4.2, and the complete proof of Theorem 1 is given in appendix A.7.

Therefore, the ORKG algorithm with independent Gaussian belief has a sublinear regret upper bound of \(O \left( \sqrt{ \left| \mathcal {X} \right| T \ln \left( \left| \mathcal {X} \right| T \right) } \right) \) with probability \(1 - \delta \) when its modeling assumptions match the problem specification.
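To see numerically why a bound of this order is sublinear, the sketch below evaluates only the growth term \(\sqrt{|\mathcal {X}| T \ln (|\mathcal {X}| T)}\), omitting the constants of Theorem 1 (including \(L^{RKG}\)); the function name is hypothetical. The average per-step bound \(\sqrt{|\mathcal {X}| \ln (|\mathcal {X}| T) / T}\) tends to 0 as T grows.

```python
import math


def regret_bound_order(num_actions: int, horizon: int) -> float:
    """Growth term sqrt(|X| T ln(|X| T)); constants of Theorem 1 are omitted."""
    n = num_actions * horizon
    return math.sqrt(n * math.log(n))


K = 10
for T in (10**2, 10**3, 10**4, 10**5):
    # Average per-step bound f(T) / T = sqrt(K ln(K T) / T) shrinks toward 0.
    print(T, regret_bound_order(K, T) / T)
```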

4 Theoretical Analysis

4.1 Regularization of Knowledge Gradient in ORKG

In this section, we define the regularization of KG used in ORKG algorithm, and analyze the theoretical property of the regularized KG on which the sublinear regret bound of ORKG depends.

A conceptual summary of the regularization of KG in ORKG is as follows: 1) “standardize” KG into a unitless value, 2) enforce a fixed uniform lower bound on it, and 3) restore its unit to match KG. Step 1 is achieved by computing the standardized KG, and steps 2 and 3 are carried out when computing the regularized KG from the standardized KG.

Definition 1

\(\kappa _t^x\), the standardized knowledge gradient of an action \(x \in \mathcal {X}\) at time t, is defined for all \(x \in \mathcal {X}\) as:

\[ \kappa ^x_t := \frac{\nu ^{KG,x}_t}{\tilde{\sigma }^x_t} \]

where knowledge gradient \(\nu _t^{KG,x}\) is computed from belief state \(B_t\).

\(\kappa _t^x\) is a “standardized” KG in the sense that it has the same unit as \(\xi _t^x\):

\[ \kappa ^x_t = \xi ^x_t \varPhi \left( \xi ^x_t \right) + \phi \left( \xi ^x_t \right) \]

where \(\xi _t^x\) is as defined in (2), \(\varPhi \) is the cumulative distribution function, and \(\phi \) is the probability density function of standard normal distribution.
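Since the standardized KG \(\kappa = \xi \varPhi (\xi ) + \phi (\xi )\) depends on the belief only through \(\xi \le 0\), its shape can be checked numerically. Its derivative with respect to \(\xi \) is \(\varPhi (\xi ) > 0\), so \(\kappa \) is largest at \(\xi = 0\), where it equals \(\phi (0) = 1/\sqrt{2\pi } \approx 0.399\), and it decays toward 0 as \(\xi \rightarrow -\infty \), i.e. as an action's estimated mean falls far behind the best alternative relative to \(\tilde{\sigma }^x_t\). The sketch below (function names hypothetical) tabulates a few values.

```python
import math


def std_normal_pdf(z: float) -> float:
    return math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)


def std_normal_cdf(z: float) -> float:
    # erfc avoids cancellation in the lower tail
    return 0.5 * math.erfc(-z / math.sqrt(2.0))


def standardized_kg(xi: float) -> float:
    """kappa = xi * Phi(xi) + phi(xi), the standardized KG as a function of xi <= 0."""
    return xi * std_normal_cdf(xi) + std_normal_pdf(xi)


for xi in (0.0, -1.0, -2.0, -4.0):
    print(f"xi = {xi:5.1f}  kappa = {standardized_kg(xi):.3e}")
```

Because \(\kappa \) can become arbitrarily small for actions that fall far behind, it has no positive lower bound of its own, which is consistent with the need for the uniform lower bound \(\kappa _R\) introduced next.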

We introduce the following regularization method, designed to provide a property needed for a sublinear upper bound on the regret of ORKG while remaining easy to interpret.

Definition 2

\(\nu ^{RKG,x}_t\), the regularized KG for making a decision x at time t given belief state \(B_t\), is defined as

where \(\kappa _{R} > 0\) is the regularizing parameter, a small, arbitrary constant that serves as a uniform lower bound on \(\kappa ^x_t\) for all x, t, and \(\kappa _t^x\) is the standardized KG computed at time t given belief state \(B_t\) according to Definition 1.

Note that this regularization is the origin of \(\kappa _{R}\), one of the two hyperparameters of ORKG: \(\kappa _{R}\) is the uniform lower bound on how small \(\kappa _t^x\) can get, for all x, t.

4.2 Smoothness of Regularized KG Surface

When ORKG faces a stochastic MAB with a finite number of bounded, independent Gaussian arms, \(\nu ^{KG,x}_t\) can be computed efficiently for all \(x \in \mathcal {X}\) given \(B_t\). To represent the “surface” of KG with respect to x at time t, we consider \(\nu ^{KG}_t = \left[ \nu ^{KG,1}_t, \nu ^{KG,2}_t, \cdots , \nu ^{KG,K}_t \right] \) as a piecewise linear function evaluated at \(x=1, 2, \cdots , K\). We define a smoothness constant for the surface of KG as follows.

Definition 3

\(L^{KG,x}_t\), the smoothness constant of KG for action x at time t, is defined as:

\(L^{KG,x}_t\) represents the worst-case relative difference between the KG of x at t and the smallest KG across all actions at t, up to permutation of \(\mathcal {X}\), measured in units of the smallest KG at t. It has a trivial lower bound of 1 but no finite upper bound as \(t \rightarrow \infty \), suggesting that the KG “surface” may develop a very sharp point.

On the other hand, the surface of regularized KG, whose smoothness constant is given in Definition 4, has a uniformly bounded smoothness constant, as shown in Lemma 1.

Definition 4

\(L^{RKG,x}_t\), the smoothness constant of regularized KG for action x at time t, is defined, analogous to that of KG (Definition 3), as:

Lemma 1

There exists a finite constant \(L^{RKG}\) such that

\[ L^{RKG,x}_t \le L^{RKG} \quad \text{for all } x \in \mathcal {X} \text{ and all } t. \]

The existence of such a constant \(L^{RKG}\) is needed to establish the sublinear regret upper bound of ORKG, as the constant appears in the regret bound in Theorem 1. We provide the proof of Lemma 1 in appendix A.3.
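The following sketch illustrates, in simplified form, why flooring the standardized KG at \(\kappa _R\) keeps ratios between actions finite. It does not reproduce Definitions 3 and 4 or the proof of Lemma 1; it assumes only that \(\kappa \in (0, \phi (0)]\) (since \(\kappa \) is increasing in \(\xi \le 0\)) and that the floor takes the form \(\max \{\kappa _R, \kappa \}\), the same form that appears in \(\rho _t\). Under these assumptions, the largest-to-smallest ratio of the floored values never exceeds \(\phi (0)/\kappa _R\) (for \(\kappa _R \le \phi (0)\)), while the unfloored ratio grows without bound as one action falls behind. The variable and function names are hypothetical.

```python
import math

PHI_0 = 1.0 / math.sqrt(2.0 * math.pi)  # phi(0): the largest value standardized KG can take


def standardized_kg(xi: float) -> float:
    """kappa = xi * Phi(xi) + phi(xi) for xi <= 0 (Phi via erfc for accurate tails)."""
    cdf = 0.5 * math.erfc(-xi / math.sqrt(2.0))
    pdf = math.exp(-0.5 * xi * xi) / math.sqrt(2.0 * math.pi)
    return xi * cdf + pdf


def max_min_ratio(values) -> float:
    """Largest value divided by smallest value."""
    return max(values) / min(values)


kappa_R = 0.01
xis = [0.0, -1.0, -3.0, -6.0]                    # hypothetical xi values of four actions
raw = [standardized_kg(xi) for xi in xis]
floored = [max(kappa_R, k) for k in raw]          # assumed form of the kappa_R floor

print(f"unfloored ratio: {max_min_ratio(raw):.3e}")  # huge: kappa(-6) is on the order of 1e-10
print(f"floored ratio:   {max_min_ratio(floored):.3f} (bound {PHI_0 / kappa_R:.3f})")
```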

5 Empirical Verification

In this section, we present a multifaceted empirical verification of the performance of ORKG in online learning. We use the Python package SMPyBandits [1] to implement all stochastic multi-armed bandit (MAB) benchmarks, run on an AMD Ryzen 3900x CPU with 64GB of RAM. Benchmarks are randomized and repeated 100 times, and the sample mean and standard deviation over all repeats are reported. For each benchmark scenario, the best sample mean and all runner-up results within 1 standard deviation of the best are boldfaced.

5.1 ORKG Compared to Other KG Algorithms

First, we demonstrate how the theoretical improvements of ORKG are realized by comparing the empirical performance of KG-based algorithms on bounded Gaussian stochastic MAB problems. We compare ORKG against KG with independent Gaussian belief (KG) [6] and KG for a general class of online learning problems (OKG) [13]. We also test the \(\epsilon \)-greedy algorithm with constant \(\epsilon (t)=0.01\), a widely known benchmark frequently seen in applications. The key differences between the algorithms are outlined in Table 1. For a fair comparison, we use \(\sigma ^\epsilon =0.1\) as the value of the hyperparameter common to all KG algorithms.

We test the algorithms on stochastic MAB benchmark problems with 5, 10, and 20 arms generating Gaussian rewards, whose mean parameters \(\mu _x\) are equally spaced in \([-5,5]\), in a low-variance scenario with \(\sigma ^2_x=0.1\) and a high-variance scenario with \(\sigma ^2_x=1\) for all actions x. For each algorithm, we sum the observed regrets over \(t=1, \cdots , 10000\), and report their mean and standard deviation over 100 independent repeats in Table 2. ORKG shows the expected behavior of controlling the cumulative regret in all tested settings, whereas the other KG algorithms, which lack sublinear regret bounds, mostly show large regret. OKG, even when given the additional information of the true time horizon \(T=10000\), achieves results comparable to ORKG only in the 5-arm setting, not in the harder settings with 10 and 20 arms. KG, which is intended to solve R&S problems, shows the worst performance in terms of regret, as expected. Note that \(\epsilon \)-greedy, a widely used algorithm in practice, shows an extremely large standard deviation, suggesting hit-or-miss performance in online learning.
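To make the benchmark protocol concrete, here is a small, self-contained simulation of the same kind of problem using only the Python standard library rather than SMPyBandits, with constant-\(\epsilon \) greedy as the example policy. Several details are assumptions of this sketch and not taken from the paper: the means include both endpoints -5 and 5, rewards are not clipped, untried arms are pulled first, and regret is accumulated as the pseudo-regret \(\mu ^* - \mu ^{x_t}\) at each step. The function names are hypothetical.

```python
import random


def make_benchmark(num_arms: int, variance: float):
    """Arm means equally spaced over [-5, 5] (endpoints included, an assumption) and a common std."""
    if num_arms < 2:
        raise ValueError("need at least two arms")
    step = 10.0 / (num_arms - 1)
    means = [-5.0 + i * step for i in range(num_arms)]
    return means, variance ** 0.5


def epsilon_greedy_regret(num_arms=10, variance=1.0, horizon=10_000, eps=0.01, seed=0):
    """Cumulative pseudo-regret of constant-epsilon greedy over `horizon` steps."""
    rng = random.Random(seed)
    means, sd = make_benchmark(num_arms, variance)
    mu_star = max(means)
    counts = [0] * num_arms      # number of pulls per arm
    totals = [0.0] * num_arms    # sum of observed rewards per arm
    regret = 0.0
    for _ in range(horizon):
        if rng.random() < eps:
            x = rng.randrange(num_arms)  # explore: uniformly random arm
        else:
            # exploit: highest sample mean; untried arms score +inf so each is tried once
            x = max(range(num_arms),
                    key=lambda i: totals[i] / counts[i] if counts[i] else float("inf"))
        reward = rng.gauss(means[x], sd)  # unclipped Gaussian reward
        counts[x] += 1
        totals[x] += reward
        regret += mu_star - means[x]      # per-step pseudo-regret
    return regret


print(epsilon_greedy_regret(num_arms=5, variance=0.1, seed=1))
```

Repeating the run over 100 seeds and reporting the mean and standard deviation (e.g. with `statistics.mean` and `statistics.stdev`) mirrors the reporting protocol described above; the numbers this sketch produces are not the paper's results.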

5.2 Sensitivity Analysis of ORKG

ORKG introduces new hyperparameters \(\delta \) and \(\kappa _R\) compared to other KG algorithms, as shown in Table 1. Since these hyperparameters play a critical role in the sublinear regret bound of ORKG, we analyze the empirical sensitivity of ORKG to \(\delta \) and \(\kappa _R\), one at a time, on Gaussian MAB benchmarks. In the main paper, we present results with 10 arms and high variance only; full results are found in the appendix (Figs. B.4 and B.5).

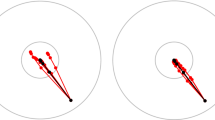

First, we vary \(\kappa _R \in \left\{ 0.0001, 0.001, 0.01, 0.1, 1 \right\} \) while fixing \(\delta =0.01\), and report the time evolution of cumulative regret against t, averaged over 100 repeats, as trajectories shown in Fig. 1.

ORKG controls regret robustly across a wide range of \(\kappa _R\) values and retains a robust advantage over KG and OKG. Considering the intuitive role of \(\kappa _R\) in ORKG, which enforces a lower bound on KG and regularizes the smoothness of the KG surface, this mild sensitivity to \(\kappa _R\) is theoretically expected, and can be interpreted as follows: changing \(\kappa _R\) changes the values in the ORKG decision rule (4) only transiently, when exploration happens, which appears as minor differences in the early-stage trajectories (\(T<10^3\)) of ORKG with different \(\kappa _R\) values in Fig. 1.

We recommend the default value \(\kappa _R=0.01\), based on the theoretical understanding that the value should be small enough to serve as a lower bound of KG, since KG intuitively represents the expected improvement from a single reward. Also, \(\kappa _R\) can be tuned with a priori information or at the problem formulation stage: if the gap between the largest and the smallest mean rewards is known, or can be enforced by clipping rewards, then \(\kappa _R\) can be set sufficiently smaller than that gap.

Next, we vary \(\delta \in \left\{ 0.0001, 0.001, 0.01, 0.1, 0.9 \right\} \) while fixing \(\kappa _R=0.01\), and report the time evolution of cumulative regret against t, averaged over 100 repeats, as the trajectories shown in Fig. 2.

\(\delta \) noticeably affects the behavior of ORKG in the mid-range \(10^2<T<10^4\), but in most cases the impact appears to be transient, as the regret is controlled for \(\delta \le 0.1\). Drastically different behavior of ORKG is observed for \(\delta =0.9\), which is expected from the role of \(\delta \) in ORKG: \(\delta \) is the probability of encountering a reward that deviates beyond the estimated mean plus the exploration bonus term scaled by \(\rho _t\) (as given in (4)). Intuitively, larger \(\delta \) makes ORKG more cautious before greedily exploiting, since \(\delta \) is the probability of the rare event of facing unexpected rewards after choosing an action according to the ORKG decision rule (4); this is shown empirically by ORKG with \(\delta =0.9\) in Fig. 2. Therefore, it is reasonable to set \(\delta \) to a relatively small value even though any \(\delta \in (0,1)\) is theoretically allowed, as \(\delta \) adjusts how often ORKG should expect rare events to happen. We recommend the default value \(\delta =0.01\), as \(1\%\) appears to be a good reference point for “rare” events; if more frequent surprises are expected, a larger \(\delta \) is recommended.

5.3 ORKG Performance Validation Against Other MAB Algorithms

We validate the empirical performance of ORKG against other MAB algorithms with provable regret bounds, on stochastic Gaussian MAB benchmark problems set up as described in Sect. 5.1. Both classic and cutting-edge MAB algorithms are compared against ORKG, with abbreviated names as follows: UCB [11], kl-UCB [7], EXP3++ [16], TS [18], and BG [2]. The detailed rationale for choosing these algorithms is given in appendix Sect. B.1. For each algorithm, we sum the observed regrets over \(t=1, \cdots , 10000\), and report their mean and standard deviation over 100 independent repeats in Table 3.

As shown by the boldfaced results across all scenarios, ORKG performs reliably well in all tested Gaussian MAB benchmark scenarios, with cumulative regret on par with the top-performing algorithm in each scenario, whereas the other algorithms show scenario preferences in which they perform well. UCB, a classic algorithm, and Boltzmann-Gumbel (BG), a cutting-edge algorithm, are the runners-up, closely followed by kl-UCB, an improved UCB with a tighter bound that shows a scenario preference different from UCB. We conjecture that the unexpectedly poor performance of EXP3++ may be an unwanted artifact of generalizing the EXP3 algorithm, originally designed for adversarial MAB problems, to also achieve sublinear regret in stochastic MAB problems. Thompson sampling (TS) also shows unexpectedly poor performance in the many-arm scenarios; we think that 10000 samples, although sufficient for the 5-arm case, are not sufficient for the 10- and 20-arm cases, as TS has more Bayesian estimates to learn as the number of arms grows. All tested algorithms have regret bounds for Gaussian MAB problems tighter than the bound of ORKG presented in Theorem 1, and this empirical validation suggests that tighter regret bounds for ORKG may exist.

6 Discussion

The simple regularization method for KG used in ORKG yields the first KG-based algorithm with a sublinear regret bound, yet this approach may be too simple to tighten the regret bounds of ORKG to match those of other stochastic MAB algorithms. Despite this theoretical gap in regret bounds, we observe strong empirical performance of ORKG in MAB benchmarks with correct model specification. Notably, the empirical validations are performed with relatively few samples from the MAB perspective, which suggests that ORKG can perform well in real-world applications where the number of samples is limited. ORKG also gives new insight into a long-standing question in the KG literature on how to trade off exploration and exploitation correctly in online learning, and, by providing the first regret bound for a KG-based algorithm in MAB problems, it enables an interdisciplinary discussion between the KG and MAB literatures.

7 Conclusion

We present a simple and effective method to regularize the knowledge gradient (KG) that enables a novel asymptotic regret analysis of KG-based algorithms with an independent Gaussian belief model. Using regularized knowledge gradients, we construct ORKG, a KG-based online learning algorithm, and present its sublinear regret bound in partial-information Gaussian MAB problems. We provide empirical validation of ORKG and verify that it performs comparably to selected MAB algorithms with tighter regret bounds in Gaussian MAB benchmarks. Our result opens up interesting directions for further research on KG from the perspective of the MAB literature.

References

1. Besson, L.: SMPyBandits: an open-source research framework for single and multi-players multi-arms bandits (MAB) algorithms in python. GitHub.com/SMPyBandits/SMPyBandits (2018)

2. Cesa-Bianchi, N., Gentile, C., Lugosi, G., Neu, G.: Boltzmann exploration done right. Adv. Neural Inf. Process. Syst. 30 (2017)

3. Chen, S., Reyes, K.R.G., Gupta, M.K., McAlpine, M.C., Powell, W.B.: Optimal learning in experimental design using the knowledge gradient policy with application to characterizing nanoemulsion stability. SIAM/ASA J. Uncertain. Quant. 3(1), 320–345 (2015)

4. Frazier, P., Powell, W.: The Knowledge Gradient Policy for Offline Learning with Independent Normal Rewards (2007)

5. Frazier, P., Powell, W., Dayanik, S.: The knowledge-gradient policy for correlated normal beliefs. INFORMS J. Comput. 21(4), 599–613 (2009)

6. Frazier, P.I., Powell, W.B., Dayanik, S.: A knowledge-gradient policy for sequential information collection. SIAM J. Control Opt. 47(5), 2410–2439 (2008)

7. Garivier, A., Cappé, O.: The KL-UCB algorithm for bounded stochastic bandits and beyond. In: Kakade, S.M., von Luxburg, U. (eds.) Proceedings of the 24th Annual Conference on Learning Theory. Proceedings of Machine Learning Research, vol. 19, pp. 359–376. PMLR, Budapest (2011)

8. Han, W., Powell, W.B.: Optimal online learning for nonlinear belief models using discrete priors. Oper. Res. 68(5), 1538–1556 (2020)

9. He, X., Reyes, K.G., Powell, W.B.: Optimal learning with local nonlinear parametric models over continuous designs. SIAM J. Sci. Comput. 42(4), A2134–A2157 (2020)

10. Huang, Y., Zhao, L., Powell, W.B., Tong, Y., Ryzhov, I.O.: Optimal learning for urban delivery fleet allocation. Transp. Sci. 53(3), 623–641 (2019)

11. Lai, T.L., Robbins, H.: Asymptotically efficient adaptive allocation rules. Adv. Appl. Math. 6(1), 4–22 (1985)

12. Negoescu, D.M., Frazier, P.I., Powell, W.B.: The Knowledge-Gradient Algorithm for Sequencing Experiments in Drug Discovery (2011)

13. Ryzhov, I.O., Powell, W.: The knowledge gradient algorithm for online subset selection. In: 2009 IEEE Symposium on Adaptive Dynamic Programming and Reinforcement Learning, March 2009, pp. 137–144 (2009)

14. Ryzhov, I.O., Powell, W.B., Frazier, P.I.: The knowledge gradient algorithm for a general class of online learning problems. Oper. Res. 60(1), 180–195 (2012)

15. Scott, W., Frazier, P., Powell, W.: The correlated knowledge gradient for simulation optimization of continuous parameters using Gaussian process regression. SIAM J. Opt. Publ. Soc. Indust. Appl. Math. 21(3), 996–1026 (2011)

16. Seldin, Y., Slivkins, A.: One practical algorithm for both stochastic and adversarial bandits. In: Xing, E.P., Jebara, T. (eds.) Proceedings of the 31st International Conference on Machine Learning. Proceedings of Machine Learning Research, vol. 32, pp. 1287–1295. PMLR, Beijing (2014)

17. Srinivas, N., Krause, A., Kakade, S.M., Seeger, M.: Gaussian Process Optimization in the Bandit Setting: No Regret and Experimental Design (2009)

18. Thompson, W.R.: On the likelihood that one unknown probability exceeds another in view of the evidence of two samples. Biometrika 25(3/4), 285–294 (1933)

19. Thul, L., Powell, W.: Stochastic Optimization for Vaccine and Testing Kit Allocation for the COVID-19 Pandemic (2021)

20. Tian, Z., Han, W., Powell, W.B.: Adaptive learning of drug quality and optimization of patient recruitment for clinical trials with dropouts. Manuf. Serv. Oper. Manag. (2021)

21. Wang, Y., Do Nascimento, J.M., Powell, W.: Reinforcement Learning for Dynamic Bidding in Truckload Markets: An Application to Large-Scale Fleet Management with Advance Commitments (2018)

22. Wang, Y., Wang, C., Powell, W.: The knowledge gradient for sequential decision making with stochastic binary feedbacks. In: Balcan, M.F., Weinberger, K.Q. (eds.) Proceedings of The 33rd ICML. Proceedings of Machine Learning Research, vol. 48, pp. 1138–1147. PMLR, New York (2016)


Electronic supplementary material


Copyright information

© 2022 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper

Lee, D., Powell, W.B. (2022). Online Learning with Regularized Knowledge Gradients. In: Gama, J., Li, T., Yu, Y., Chen, E., Zheng, Y., Teng, F. (eds) Advances in Knowledge Discovery and Data Mining. PAKDD 2022. Lecture Notes in Computer Science(), vol 13281. Springer, Cham. https://doi.org/10.1007/978-3-031-05936-0_26


DOI: https://doi.org/10.1007/978-3-031-05936-0_26


Publisher Name: Springer, Cham

Print ISBN: 978-3-031-05935-3

Online ISBN: 978-3-031-05936-0
