Abstract

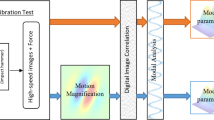

We perform Operational Modal Analysis (OMA) through Frequency Domain Decomposition (FDD) on a test structure using a high speed camera. Using optical flow algorithms, brightness changes due to displacements of a test structure under load are translated into displacement data. By using a mirror, a split image is generated that enables a stereoscopic view of the structure and thus 3D information can be extracted from the high speed video footage.

This setup is used to determine the modal parameters of the structure, in particular the mode shapes with high spatial resolution. The mirror, which brings a second point of view onto the same image sensor, enables a cost-efficient stereoscopic measurement compared to a two-camera setup. Moreover, it eliminates the need to synchronize two separate signals. This is also why OMA is used instead of Experimental Modal Analysis (EMA): as no force signal needs to be recorded, we can perform a full-field modal analysis with input from a single device.

The results are evaluated with respect to the accuracy and the setup complexity of the 3D measurements in comparison with a setup using triaxial accelerometers.


11.1 Introduction

Using digital high speed cameras (HSC) as displacement sensors is a technique that has gained popularity as image sensors have become more capable and less noisy over the past decades. In contrast to the widely used piezoelectric accelerometers, camera-based displacement identification offers the possibility of full-field measurements instead of having to choose a few measurement points. Multiple algorithms exist that calculate displacements of measurement points based on the difference between a current frame and a reference frame of a video [1]. Digital Image Correlation (DIC) [2], for example, takes a region of the reference frame, moves and warps it with different parameters, and optimizes these parameters to match the region in the current frame. Computationally less demanding algorithms that are applicable for very small displacements are based on the optical flow equation [3], such as the Lucas–Kanade algorithm [4], which is used in this work. Effectively, all these algorithms allow the engineer to use single pixels or small regions of pixels as individual displacement sensors. This allows for contactless measurements with an unparalleled spatial resolution. Important drawbacks of camera measurements are a relatively high level of noise and the limitation to the two dimensions perpendicular to the optical axis.

This study aims to circumvent the dimensional limitation by using a mirror that produces a second view of the observed structure in order to enable a three-dimensional reconstruction of the structure's vibrations. The idea of splitting the camera's view is not new and has been shown to enable three-dimensional measurements with only one camera. Different researchers have developed setups consisting of multiple mirrors [5] or a biprism [6] that split the sensor image into two sub-images and perform the 3D reconstruction by finding the internal and external parameters of the cameras and the setup used. In this study, we aim to simplify the procedure further by avoiding the camera calibration: a special setup is used that allows for a very simple 3D reconstruction. Moreover, the setup is not only used to record displacements and assess their accuracy; we also perform Operational Modal Analysis (OMA) with the data.

OMA is a way to determine the modal parameters of a structure without measuring the excitation forces. The method originated in the field of civil engineering, where large structures (buildings, bridges) cannot be moved to a lab and excited in a controllable manner. Instead, the forces acting on these structures due to wind or traffic are considered to be broadband and random. Under this assumption, eigenfrequencies, mode shapes and modal damping can still be extracted from a measurement of displacements, velocities or accelerations on the structure [7]. For a good estimate of a structure's modal parameters, it is advisable to measure for as long as possible. This goal of relatively long measurements conflicts with the limited memory of a camera, which produces very large amounts of data compared to traditional sensor setups with a very limited number of channels.

11.2 Experimental Setup

11.2.1 Equipment

The considered structure (see Fig. 11.1) is made of brass and consists of three parts that were soldered together. It was originally designed to vibrate primarily in the top part's out-of-plane direction, so that a measurement was possible even without stereo vision, because almost any observed displacement could be interpreted as some projection of the true vertical movement. The structure is nevertheless used in this study because its very first mode is a rotation about the vertical axis (i.e. displacements in the horizontal plane) and is therefore not accessible with the old strategy.

The camera is a Photron Fastcam Nova S6 with 8 GB of memory and 1024 × 1024 pixel resolution. It has a monochrome 12 bit sensor. The light source is a 360 W LED that provides 36,000 lm. A first-surface mirror is used to provide the second view. The mirror was at hand at our lab and has the advantage that it exhibits no ghosting effects from any secondary reflection as might happen for a standard mirror.

11.2.2 Setup

In order to achieve a stereoscopic view with only one camera, a mirror was used to produce a second view of the structure that can be interpreted as the image recorded by a virtual camera located directly above the structure. The camera is therefore placed in front of and slightly above the structure, aiming downwards at a small angle (Fig. 11.2a), such that the structure appears as a trapezoid in the original view (Fig. 11.2b). The mirror is then adjusted such that the structure is seen exactly from the top, appearing as a rectangle on the camera sensor. This setup, which takes some time to fine-tune, allows for a fairly easy 3D reconstruction of the structure's movement based on the raw displacements that are observed by the camera's image sensor.

Fig. 11.2 Schematics of the experimental setup describing the positions of the structure, mirror, and camera. The images are not true to scale. (a) Experimental setup and the chosen coordinate system. The viewing angle φ is assumed to be constant for the complete surface. (b) View of the camera. The mirror is adjusted such that the top view is perpendicular to the surface

11.2.3 Lucas–Kanade Displacement Identification

A chessboard pattern was applied to the structure's surface to provide high contrast at the pattern's edges, which enables the displacement measurements. The displacements of the corner points of the chessboard pattern are then identified using the Lucas–Kanade algorithm as implemented in the open-source Python package pyIDI [8]. The algorithm finds the translation of a region of interest (ROI) around the selected pixel based on the brightness gradients of the pixels in the ROI. This method is equivalent to the first iteration of a classic DIC method and is well suited for applications where only very small displacements are expected, as is the case for the observed structure.
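The gradient-based idea can be illustrated with a minimal NumPy sketch. It is not the pyIDI implementation; it only assumes the brightness-constancy (optical flow) relation I_x·u + I_y·v + I_t = 0 and solves it in a least-squares sense over one ROI. The function name `lucas_kanade_translation` and the synthetic pattern in the usage lines are hypothetical and chosen for illustration only.

```python
import numpy as np

def lucas_kanade_translation(reference, current):
    """Single-step least-squares estimate of a small ROI translation.

    reference, current: 2D arrays holding the same ROI in the reference
    frame and in the current frame. Returns (dx, dy) in pixels, with dy
    positive in the direction of increasing row index (downward).
    """
    reference = np.asarray(reference, dtype=float)
    current = np.asarray(current, dtype=float)

    # np.gradient returns derivatives along axis 0 (rows, y) and axis 1 (columns, x)
    grad_rows, grad_cols = np.gradient(reference)

    # Skip the border, where np.gradient falls back to one-sided differences
    inner = (slice(1, -1), slice(1, -1))
    A = np.column_stack([grad_cols[inner].ravel(), grad_rows[inner].ravel()])

    # Temporal brightness change; the optical flow equation gives A @ d = -I_t
    b = -(current - reference)[inner].ravel()

    d, *_ = np.linalg.lstsq(A, b, rcond=None)
    return d


# Usage with a smooth synthetic pattern shifted by a known sub-pixel amount
rows, cols = np.mgrid[0:40, 0:40].astype(float)
pattern = lambda x, y: np.sin(0.2 * x) * np.cos(0.15 * y) + 0.5 * np.cos(0.1 * x + 0.25 * y)
roi_reference = pattern(cols, rows)
roi_current = pattern(cols - 0.1, rows + 0.05)  # pattern moved by dx = 0.1, dy = -0.05
estimate = lucas_kanade_translation(roi_reference, roi_current)
```

Because the relation is a first-order approximation, a single step like this is only reasonable for displacements well below one pixel, which is the regime described above.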

11.2.4 3D Reconstruction Equations

Top View Through the Mirror

The top view in the mirror provides a displacement measurement that is very easy to interpret. As the virtual camera is filming perpendicularly to the surface, small out-of-plane movements are not perceptible, while a horizontal displacement along the x-axis of the structure results in a left-right movement on the image sensor. Accordingly, a displacement along the structure's y-axis is seen as an up-down movement on the sensor. The only point that requires care is the swapped sign of the y-displacements due to the mirror.

Original View

In the original view, only the x-direction of the true displacements can be assumed to be measured without distortion when identifying the image's movement on the sensor. In the sensor's vertical direction, the camera records a mixture of movements in the y- and z-directions. The concept used in this study is to take the y-measurement from the top view to isolate the z-deformation in the original view's displacement measurement.

Resulting Equations

With the two views of the structure described above, we can now formulate the equations needed to identify 3D displacements of the structure based on two 2D measurements:

In these equations, Δh and Δv describe the horizontal and vertical displacements (with respect to the image sensor) identified in the video, respectively. The subscripts indicate whether the displacement was observed through the mirror (top view) or directly. The signs in the equations arise from the fact that the displacements generated by the Python package pyIDI are positive for a displacement in the downward direction of the sensor. This is a convention in image processing, where pixels are numbered starting at the top left corner, so that coordinates ascend from top to bottom.
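As a hedged illustration of how two 2D measurements can be combined, the following sketch implements one possible form of such a reconstruction. All geometric conventions in it are assumptions made for this example and are not taken from the authors' equations: parallel (orthographic) projection, a single angle φ measured between the direct line of sight and the plate surface, the y-axis pointing away from the camera, the z-axis pointing up, and a mirror view in which a positive Δv corresponds to a positive y-displacement. The function names are hypothetical; only the scale of 5 pixel/mm is a value reported for this setup.

```python
import numpy as np

PX_PER_MM = 5.0  # image scale reported for this setup

def project_to_sensor(x, y, z, phi, px_per_mm=PX_PER_MM):
    """Assumed forward model: 3D displacement (mm) -> sensor displacements (px)."""
    dh_top = px_per_mm * x
    dv_top = px_per_mm * y          # assumed sign after the mirror flip
    dh_direct = px_per_mm * x
    # Image-up component of (y, z) is y*sin(phi) + z*cos(phi);
    # sensor displacements are counted positive downward.
    dv_direct = -px_per_mm * (y * np.sin(phi) + z * np.cos(phi))
    return dh_top, dv_top, dh_direct, dv_direct

def reconstruct_3d(dh_top, dv_top, dv_direct, phi, px_per_mm=PX_PER_MM):
    """Invert the assumed model: x and y from the top view, z from the direct view."""
    x = dh_top / px_per_mm
    y = dv_top / px_per_mm
    z = (-dv_direct / px_per_mm - y * np.sin(phi)) / np.cos(phi)
    return x, y, z


# Round trip with an arbitrary displacement and an assumed angle of 20 degrees
phi_assumed = np.deg2rad(20.0)
true_displacement = (0.02, -0.01, 0.05)  # mm
dh_top, dv_top, _, dv_direct = project_to_sensor(*true_displacement, phi_assumed)
recovered = reconstruct_3d(dh_top, dv_top, dv_direct, phi_assumed)
```

Under these assumptions, evaluating the reconstruction with an angle larger than the effective one inflates the z-estimate, because the division by cos φ then uses a value that is too small.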

Known Inaccuracies

The equations presented above contain some simplifications that lead to inaccuracies. We are aware of them, but decided not to address them at this stage.

Viewing angle: A single angle φ is used for all points on the surface. This is not exactly true, as can be seen in Fig. 11.2a: the angle depends on the position of the observed point along the y-axis.

Scaling: Because the front edge is nearer to the camera than the far edge, not only does the front edge look larger in the image, but the displacements observed for nearer points are also enlarged by a certain factor. We assume a constant pixel-to-mm conversion, which is tuned for the image in the mirror.

Both errors come from the fact that the camera is not infinitely far away from the structure, which would render the light rays perfectly parallel. Because they are not parallel, other minor errors may also play a small role; for example, z-displacements might be slightly observable in the off-center regions, even in the mirrored view. In practice, we placed the camera as far away as possible, using the full zoom range of the lens while still using the complete sensor and hence the maximum resolution.
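To give a feeling for why a larger camera distance reduces both effects, the following sketch uses a simple pinhole model, in which the image scale is inversely proportional to the distance from the camera. The function names and all distances in the usage lines are hypothetical values chosen for illustration; they are not measurements from this setup.

```python
import math

def scale_ratio_near_to_far(near_distance_mm, depth_mm):
    """Pinhole model: factor by which the near edge appears larger than the far edge."""
    return (near_distance_mm + depth_mm) / near_distance_mm

def viewing_angle_deg(camera_height_mm, horizontal_distance_mm):
    """Angle between the line of sight and a horizontal surface, in degrees."""
    return math.degrees(math.atan2(camera_height_mm, horizontal_distance_mm))


# Hypothetical geometry: plate depth 100 mm, camera 300 mm above the plate
ratio_close = scale_ratio_near_to_far(1000.0, 100.0)   # camera 1 m from the near edge
ratio_far = scale_ratio_near_to_far(3000.0, 100.0)     # camera 3 m from the near edge
angle_spread_close = viewing_angle_deg(300.0, 1000.0) - viewing_angle_deg(300.0, 1100.0)
angle_spread_far = viewing_angle_deg(300.0, 3000.0) - viewing_angle_deg(300.0, 3100.0)
```

In this toy geometry, both the scale mismatch between the near and far edge and the spread of the viewing angle across the plate shrink as the camera moves away, which is the motivation for the long working distance.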

11.3 Experimental Results

11.3.1 Comparison with Accelerometer Results

To gain some insight into how well the identification of displacements in the x-, y- and z-directions works based on the equations presented before, we attached one triaxial accelerometer to the bottom side of the top plate, in the left corner away from the camera, and compared its recorded signal to the result of the displacement identification and our 3D reconstruction. For this purpose, the structure was excited by a modal hammer with a vinyl tip at the front right corner (as seen from the camera), both in the z-direction and in the horizontal plane, in order to excite multiple modes. The hammer with the vinyl tip was used because the metal wrench (see Sect. 11.3.2) caused heavy overload on the accelerometer when used as an excitation tool with hard impacts, which are needed for a decent displacement signal. Unfortunately, with this tip, only the rather low-frequency range (below 1000 Hz), and thus merely the first three eigenmodes, was adequately excited.

The movement of the structure can be interpreted as the rigid body motion of the top plate for that lower frequency range. These first modes that dominate the structure’s behavior are in ascending order of frequency:

1. rotation around the z-axis
2. tilting around the y-axis
3. tilting around the x-axis

The acceleration signal was integrated twice using the trapezoidal rule, while the displacements identified from the video were converted from pixels (the output unit of pyIDI) to millimeters. To this end, the known dimensions of the structure were compared to the number of pixels the structure's edges occupy on the sensor. This yields a scale of almost exactly 5 pixel/mm.
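A minimal sketch of these two preprocessing steps is given below. It is not the authors' processing script: the function names are hypothetical, the acceleration is assumed to be given in mm/s², and the mean removal after each integration is a crude stand-in for the drift handling (for example high-pass filtering) that real accelerometer data requires.

```python
import numpy as np

def cumulative_trapezoid(values, dt):
    """Running integral of a uniformly sampled signal using the trapezoidal rule."""
    values = np.asarray(values, dtype=float)
    increments = 0.5 * (values[1:] + values[:-1]) * dt
    return np.concatenate(([0.0], np.cumsum(increments)))

def acceleration_to_displacement(acc, fs):
    """Integrate acceleration twice; remove the mean after each step to limit drift."""
    dt = 1.0 / fs
    velocity = cumulative_trapezoid(acc, dt)
    velocity -= velocity.mean()
    displacement = cumulative_trapezoid(velocity, dt)
    return displacement - displacement.mean()

def pixels_to_mm(displacement_px, px_per_mm=5.0):
    """Convert pixel displacements to millimeters with a constant scale."""
    return np.asarray(displacement_px, dtype=float) / px_per_mm


# Usage: a 400 Hz sine with 1 mm amplitude, sampled over a whole number of periods
fs = 20000.0
t = np.arange(2000) / fs
omega = 2.0 * np.pi * 400.0
disp_true = np.sin(omega * t)                 # mm
acc = -omega**2 * np.sin(omega * t)           # mm/s^2
disp_from_acc = acceleration_to_displacement(acc, fs)
```

The constant scale of 5 pixel/mm is the value quoted above; the test signal and its sampling rate are arbitrary.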

Figure 11.3 shows parts of the structure's response to a series of impacts. The first impact occurs at time t = 0 s, such that the plots show the decay after the first hit and the beginning of the second one. In the time domain, one can clearly see that the method delivers results that resemble the accelerometer measurements; however, there are obvious differences, especially in the x- and y-directions. Because the dominating frequencies are relatively close to each other, the curves show a pronounced beat, which makes it hard to judge whether there is an error in the overall amplitude. For this reason, we also look at the autopower spectral densities of the signals, which are depicted on the right. For the first eigenfrequency, where x and y are the directions in which the structure deflects, the amplitude fits the integrated acceleration measurement very well. This indicates that there is no systematic error in the scaling of the optical measurement for those directions. For the z-direction, the picture is slightly different. Here, the time histories of HSC and accelerometer look almost the same apart from a scaling error: the amplitudes of the optical measurement are slightly too large compared to the reference measurement from the accelerometer. This holds true for the whole frequency band under consideration (see Fig. 11.3f). While the errors in the x- and y-directions are harder to interpret, there is a simple possible explanation for the deviations in the third direction: the angle of view φ (see Fig. 11.2a) was estimated very roughly based on the distance between the camera and the middle of the structure. First of all, this estimate can be off by some degrees. Moreover, the point under consideration is not located in the middle of the structure, but at the far edge. This means that the effective angle is smaller than the one assumed in Eq. 11.4, and thus the displacements are overestimated.

Fig. 11.3 Comparison of displacement measurements in all three directions. The signals are all high-pass filtered to eliminate the low-frequency motion coming from the tripod. The left figures show the respective displacements over time and on the right, the PSD of the signals is shown in the frequency band where the first three eigenfrequencies are located. (a) Displacements in x-direction (time history). (b) Displacements in x-direction (PSD). (c) Displacements in y-direction (time history). (d) Displacements in y-direction (PSD). (e) Displacements in z-direction (time history). (f) Displacements in z-direction (PSD)

For the sake of simplicity, we still conduct this first investigation with all those simplifications and observe how strongly they affect the outcome of a modal analysis.

11.3.2 OMA

After a first look at the measured 3D signals, they are now used to find the eigenfrequencies and mode shapes of the example structure. In addition to the first three eigenfrequencies around 400 Hz, with the mode shapes described in the previous section, the next eigenfrequency does not occur until about 1660 Hz, where the top plate exhibits its first flexible mode with two corners along a diagonal bending upwards and the other two downwards. For any higher modes, the frame rate of 4000 fps (Nyquist frequency 2000 Hz) was too low, such that we only expect the four described modes. As a very first test showed, the excitation should be rather strong in order to produce displacements that clearly exceed the noise floor of the camera. For that purpose, a large steel wrench was used to impact the structure. During the measurement, which lasts only 1.3 s, only two or three impacts could be achieved. Such a short measurement is definitely not ideal in the context of OMA, but the only way to achieve longer measurements with a given camera is to lower the resolution, which in turn would effectively reduce the sensitivity. As we know that the rather poor signal-to-noise ratio is the biggest problem when measuring with a camera, we use the highest possible resolution at the expense of a short duration.
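The trade-off between resolution and recording time can be checked with a short back-of-the-envelope calculation. The sketch below assumes that frames are stored packed at 12 bit per pixel without any overhead, and it evaluates both common readings of "8 GB" (decimal and binary); how the camera actually organizes its memory is not stated here, so the numbers are estimates only. The function name is hypothetical.

```python
def recording_duration_s(memory_bytes, width_px, height_px, bits_per_px, fps):
    """Recording time that fits into memory for uncompressed, packed frames."""
    bytes_per_frame = width_px * height_px * bits_per_px / 8
    return memory_bytes / bytes_per_frame / fps


# Values from the equipment description: 1024 x 1024 px, 12 bit, 4000 fps
duration_decimal_gb = recording_duration_s(8e9, 1024, 1024, 12, 4000)
duration_binary_gb = recording_duration_s(8 * 2**30, 1024, 1024, 12, 4000)

# Highest frequency that can be represented at this frame rate
nyquist_hz = 4000 / 2

# Halving the resolution in both directions would quadruple the duration
duration_half_resolution = recording_duration_s(8e9, 512, 512, 12, 4000)
```

Under these assumptions, the full-resolution estimates come out at roughly 1.27 s and 1.37 s, which is consistent with the measurement duration of 1.3 s, and the fourth mode near 1660 Hz lies below the Nyquist frequency.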

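After the displacements resulting from the impacts were recorded, the Frequency Domain Decomposition (FDD) method [9] was applied. This frequency domain method first computes the matrix of cross-power spectra R of the signals and then finds the Singular Value Decomposition (SVD) for each frequency line:

$$\mathbf{R}(\omega_k) = \mathbf{U}_k \, \mathbf{\Sigma}_k \, \mathbf{V}_k^{\mathrm{H}}$$

Here, the diagonal matrix Σ holds the singular values at the frequency line ω_k, and U and V contain the left and right singular vectors.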

From the plot of the singular values over frequencies, the user can then pick apparent eigenfrequencies (peaks of the first singular value). At each chosen frequency line (the eigenfrequencies), the first column of the matrix U can be interpreted as the corresponding mode shape. The results from this procedure can be seen in Fig. 11.4.
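The procedure can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not the authors' implementation: the cross-power matrix is estimated by averaging Hann-windowed, half-overlapping segments, the spectra are left unscaled (which does not affect peak positions or mode shapes), and peak picking is reduced to taking the maximum of the first singular value within a user-chosen band. The function names, the segment length and the synthetic test signal are all hypothetical.

```python
import numpy as np

def cross_power_matrix(y, fs, nperseg):
    """Averaged cross-power matrix. y has shape (n_channels, n_samples).

    Returns the frequency vector and an array of shape (n_freq, n_ch, n_ch).
    """
    window = np.hanning(nperseg)
    step = nperseg // 2
    R, count = 0.0, 0
    for start in range(0, y.shape[1] - nperseg + 1, step):
        segment = y[:, start:start + nperseg]
        segment = segment - segment.mean(axis=1, keepdims=True)
        Y = np.fft.rfft(segment * window, axis=1)
        R = R + np.einsum("if,jf->fij", Y, Y.conj())  # outer product per frequency line
        count += 1
    return np.fft.rfftfreq(nperseg, d=1.0 / fs), R / count

def fdd(y, fs, nperseg=1024):
    """SVD of the cross-power matrix at every frequency line."""
    freqs, R = cross_power_matrix(y, fs, nperseg)
    U, S, _ = np.linalg.svd(R)  # np.linalg.svd operates on the stack of matrices
    return freqs, S, U

def pick_peak(freqs, S, f_min, f_max):
    """Index of the largest first singular value inside [f_min, f_max]."""
    band = np.flatnonzero((freqs >= f_min) & (freqs <= f_max))
    return band[np.argmax(S[band, 0])]

def mac(a, b):
    """Modal Assurance Criterion between two (possibly complex) shape vectors."""
    return np.abs(np.vdot(a, b)) ** 2 / (np.vdot(a, a).real * np.vdot(b, b).real)


# Usage with a synthetic three-channel signal containing two decaying modes
fs = 4000.0
t = np.arange(5200) / fs
shape_1 = np.array([1.0, 0.5, -0.5])
shape_2 = np.array([1.0, -1.0, 1.0])
rng = np.random.default_rng(0)
y = (np.outer(shape_1, np.exp(-5 * t) * np.sin(2 * np.pi * 377 * t))
     + np.outer(shape_2, np.exp(-5 * t) * np.sin(2 * np.pi * 1662 * t))
     + 1e-3 * rng.standard_normal((3, t.size)))
freqs, S, U = fdd(y, fs)
k1 = pick_peak(freqs, S, 300.0, 500.0)
k2 = pick_peak(freqs, S, 1500.0, 1900.0)
mode_1 = U[k1, :, 0]  # first singular vector at the picked frequency line
```

With only 1.3 s of data, the choice of segment length is a compromise: longer segments give finer frequency resolution but fewer averages, which is one reason why such a short record is not ideal for OMA.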

Fig. 11.4 First four identified mode shapes (blue dots) of the structure, each in a 3D view (left) and a suitable side view (right). The light gray dots represent the undeformed surface. All shapes were acquired using the Frequency Domain Decomposition method with data taken from a short video of 1.3 s at 4000 fps. (a) First mode shape, $f_1$ = 377 Hz. (b) First mode shape, view from top. (c) Second mode shape, $f_2$ = 424 Hz. (d) Second mode shape, view from side, along y-axis. (e) Third mode shape, $f_3$ = 441 Hz. (f) Third mode shape, view from side, along x-axis. (g) Fourth mode shape, $f_4$ = 1662 Hz. (h) Fourth mode shape, view from side, along x-axis

The four mode shapes presented look largely as expected, although there are some irregularities in the deformation pattern, especially in the front half (towards the negative y-axis), which might be the result of lighting conditions that were probably not ideal in that region. However, even the strong simplifications made for the 3D reconstruction do not change the fact that mode shapes can be extracted and are qualitatively correct. Looking at the mode shapes, it is very likely that better lighting would provide much cleaner mode shapes. This is supported by the fact that the half that is far from the camera delivers very smooth shapes with very little apparent noise.

11.4 Conclusion

We tested a one-camera setup for stereoscopic displacement measurements and conducted OMA with the results. While several points make such an approach problematic (a series of simplification errors not taken into account, a poor signal-to-noise ratio for camera measurements, a very short measurement duration), the presented method still provides mode shapes that look as expected and offer a high spatial resolution. The results also highlight that proper lighting is probably the most crucial part of conducting measurements with a high speed camera. For example, a second light source on the other side of the structure should be considered instead of using just one. Apart from that issue, which visibly deteriorated the results in some parts of the structure, the obtained mode shapes look very promising. Also, the presented, highly simplistic 3D reconstruction seems to be a reasonable choice, at least for such a simple structure. For future studies, one should still consider using a proper 3D reconstruction. That approach requires some calibration, but in turn is more flexible, as the method presented here would need to be adjusted whenever the mirror cannot be placed exactly as described.

References

1. Baqersad, J., Poozesh, P., Niezrecki, C., Avitabile, P.: Photogrammetry and optical methods in structural dynamics - a review. Mech. Syst. Signal Process. 86, 17–34 (2017). https://doi.org/10.1016/j.ymssp.2016.02.011

2. Chu, T.C., Ranson, W.F., Sutton, M.A.: Applications of digital-image-correlation techniques to experimental mechanics. Exper. Mech. 25(3), 232–244 (1985). https://doi.org/10.1007/bf02325092

3. Brandt, J.W.: Improved accuracy in gradient-based optical flow estimation. Int. J. Comput. Vision 25(1), 5–22 (1997). https://doi.org/10.1023/a:1007987001439

4. Lucas, B.D., Kanade, T.: An iterative image registration technique with an application to stereo vision. In: Proceedings of the International Joint Conference on Artificial Intelligence, pp. 674–679 (1981)

5. Yu, L., Pan, B.: Single-camera stereo-digital image correlation with a four-mirror adapter: optimized design and validation. Opt. Lasers Eng. 87, 120–128 (2016). https://doi.org/10.1016/j.optlaseng.2016.03.014

6. Genovese, K., Casaletto, L., Rayas, J., Flores, V., Martinez, A.: Stereo-digital image correlation (DIC) measurements with a single camera using a biprism. Opt. Lasers Eng. 51(3), 278–285 (2013). https://doi.org/10.1016/j.optlaseng.2012.10.001

7. Brincker, R., Ventura, C.E.: Introduction to Operational Modal Analysis. Wiley, Hoboken (2015). https://doi.org/10.1002/9781118535141

8. Zaletelj, K., Gorjup, D., Slavic, J.: ladisk/pyidi: Release of the version v0.23 (2020)

9. Brincker, R., Zhang, L., Andersen, P.: Modal identification from ambient responses using frequency domain decomposition. In: Proceedings of the 18th International Modal Analysis Conference (IMAC), San Antonio, Texas (2000)


© 2023 The Society for Experimental Mechanics, Inc.

About this paper

Cite this paper

Gille, M., Judd, M.R.W., Rixen, D.J. (2023). Stereoscopic High Speed Camera Based Operational Modal Analysis Using a One-Camera Setup. In: Di Maio, D., Baqersad, J. (eds) Rotating Machinery, Optical Methods & Scanning LDV Methods, Volume 6. Conference Proceedings of the Society for Experimental Mechanics Series. Springer, Cham. https://doi.org/10.1007/978-3-031-04098-6_11


DOI: https://doi.org/10.1007/978-3-031-04098-6_11


Publisher Name: Springer, Cham

Print ISBN: 978-3-031-04097-9

Online ISBN: 978-3-031-04098-6

eBook Packages: Engineering, Engineering (R0)