Abstract

Modeling of users and items is essential for accurate recommendations. Traditional methods focused only on users’ behavior data for recommendation. Several recent methods attempted to use multi-modal data (e.g. items’ attributes and visual features) to better model users and items. However, these methods fail to model users’ dynamic and personalized preferences on different modalities. In addition, besides useful information for recommendation, the multi-modal data also contains a great deal of irrelevant and redundant information that may mislead the learning of recommendation models.

To solve these problems, we propose a Memory-augment Multi-Modal Information Bottleneck method, named \(M^3\)-IB, for next item recommendation. First, we design a memory network framework to maintain modality-specific knowledge and capture users’ dynamic modality-specific preferences. Second, we propose to model and fuse users’ personalized preferences on different modalities with a multi-modal probabilistic graph. Then, to filter out irrelevant and redundant information in multi-modal data, we extend the information bottleneck theory from single-modal to multi-modal scenario and design a multi-modal information bottleneck (M2IB) model. Finally, we provide a variational approximation and a flexible implementation of the M2IB model for next item recommendation. Experiential results on five real-world data sets demonstrate the promise of the proposed method.

Access provided by Autonomous University of Puebla. Download conference paper PDF

Similar content being viewed by others

Keywords

- Next item recommendation

- Multi-modal data

- Memory networks

- Information bottleneck

- Variational approximation

1 Introduction

Recommender systems gain popularity for many online applications such as e-commerce and online video sharing, which can enhance both users’ satisfaction and platforms’ profits. In real-world scenarios, users always engage items, such as click or purchase, in a sequential form. Therefore, predicting and recommending the next item that the target user may engage, known as next item recommendation, is a key part of recommender systems.

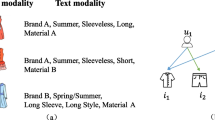

Multi-modal data, such as items’ visual and acoustic features, carries rich content information of items, which can help to better model users’ preferences and items’ characteristic for next item recommendation. Several recent methods try to utilize multi-modal data by the constant feature-level combination for next item recommendation [2, 9, 12, 15]. However, these methods ignore users’ dynamic and personalized preferences on different modalities, which may restrict the recommendation performance. Figure 1 (a) shows a scenario of online film website. Bob preferred comedy films previously (at time t) but now he prefers horror films (at time \(t+1\)), which indicates users’ dynamic preferences about the genre modality. Alice focused more on the poster than on the genre when selecting films, which indicates users’ personalized preferences on different modalities.

Although the multi-modal data carries useful information for next item recommendation, it also contains a great deal of irrelevant and redundant information, which may mislead the learning of recommendation models. As shown in Fig. 1 (b), we have three modalities of data (circles) for the recommendation task (gray rectangle). It shows that multi-modal data may be overlapped and redundant (e.g. the repeated parts in \(x_4\), \(x_{5}\), and \(x_{6}\)), and not all information of multi-modal data is relevant for recommendation (e.g. \(z_1\), \(z_2\) and \(z_3\)) [23]. However, most existing methods adopt the combination strategies, such as feature-level aggregation or concatenation, for multi-modal data utilization, which exacerbates the misleading of irrelevant and redundant information for recommendation.

To address these problems, we propose a Memory-augment Multi-Modal Information Bottleneck model (\(M^3\)-IB) for next item recommendation. We first design a memory network based framework that consists of several knowledge memory regions and a lot of user-state memory regions. Each knowledge region stores the extracted item relevancies and similarities from a data modality as the modality-specific knowledge, and each private user-state region maintains the state of a specific user according to his/her dynamic behaviors. To learn users’ dynamic preferences on different modalities, we extract user-state related knowledge in each modality-specific knowledge region for the preference modeling. To learn users’ personalized preferences on different modalities, we propose a multi-modal probabilistic graph to discriminate and fuse the multi-modal information for different users. Furthermore, to mine useful information (e.g. \(x_1,\cdots ,x_6\)) and filter out irrelevant information (e.g. \(z_1\), \(z_2\), and \(z_3\)) and redundant information (e.g. the repeated parts in \(x_4\), \(x_{5}\), \(x_{6}\)) in multi-modal data, we design a multi-modal information bottleneck (M2IB) model based on the multi-modal probabilistic graph. Finally, we provide a variational approximation and a flexible implementation of the M2IB model for next item recommendation. Extensive experiments on five real-world data sets show that the proposed model outperforms several state-of-the-art methods, and ablation experiments prove the effectiveness of the proposed model and verify our motivations.

2 Related Work

Multi-modal Recommendation. Several recent studies attempted to design personalized recommendations models based on static multi-modal data, which can be divided into collaborative filtering models and graph models. The collaborative filtering models tried to combine user-item interaction records and items’ multi-modal content information for recommendation, i.e. treating structured attributes and users engaged items as factors of model [7]. For example, Chen et al. [3] proposed an attentive collaborative filtering method for multimedia recommendation, which can automatically assign weights to the item-level and content-level feedback in a distant supervised manner. As graph models can capture the collaborative or similarity relationship among the entities, they are widely used in recommender systems [22, 27, 28]. For example, Wei et al. [28] constructed a user-video bipartite graph, and utilized graph neural networks to learn modality-specific representations of users and micro-videos through message-passing. Although some of these methods [3, 28] explored users’ personalized preferences on different data modalities to some extent, they can not capture users’ dynamic modality-specific preferences with their dynamic behaviors.

In real-world scenarios, users’ behavior data always appears as a sequence and every behavior is linked with a timestamp. As a result, sequential recommendation, which aims at recommending the next item to users, has been becoming a hot research topic in recent years [5, 12]. To fully exploit multi-modal information, most of existing methods adopted feature-level combination for next item recommendation. Some methods concatenated the features of different modalities in user’s behavior order, and mined their transition patterns between adjacent behaviors [5, 29]. For example, Zhang et al. [29] tried to model item- and attribute- level transition patterns from adjacent behaviors for next item recommendation. Some methods combined items’ multi-modal content to enrich their representations, thereafter, such representations were fed into a sequential model such as RNN for next item recommendation [2, 9, 12, 15]. For example, Huang et al. [9] combined unified multi-type actions and multi-modal content representation, and proposed a contextual self-attention network for sequential recommendation. However, these methods failed to explore users’ dynamic modality-specific and personalized preferences on different modalities. More importantly, these feature-level combination strategies may exacerbate the misleading for the recommendation models by irrelevant and redundant information.

Memory Networks Recommendation. Recently, memory networks gain their popularity in recommender systems, which can capture users’ historical behaviors or auxiliary information for recommendation [6, 30]. For example, Zhu et al. [30] utilized the memory networks to store the categories of items in the last basket, and inferred the categories that users may need for next-basket prediction. However, these methods rely on specific data, which may be not applicable for dynamic and heterogeneous multi-modal data. In addition, these methods can not filter out the irrelevant and redundant information for recommendation.

Information Bottleneck. The information bottleneck (IB) principle [25] provides an information-theoretic method for representation learning. Compared to the traditional dimension reduction methods that fail to capture varying information amounts of different inputs (e.g. as in the encoder neural networks), the IB principle permits encodings to keep the amount of effective information varying with inputs in a high-dimensional space [13]. As the IB principle is computationally challenging, Alemi et al. [1] presented a variational approximation to it with a single-modality input. However, the existing IB models are not designed for recommendation tasks, which fails to fuse multi-modal information and discriminate the significance of individual modalities for each user.

We notice that the proposed model is related to some existing models such as variational auto-encoding (VAE) [11] based recommendation. For example, Liang et al. [14] proposed to combine MF and VAE to enforce the consistency of items embedding and their content (e.g. poster and plot) for a recommendation task. But it fails to utilize sequential information and remove irrelevant and redundant information for recommendation. Ma et al. [17] explored the macro and micro disentanglement for recommendation via the \(\beta \)-VAE scheme. However, it is impossible to learn disentangled representation with unsupervised learning [16]. Instead of learning disentangled representation of users, we attempt to mine the most meaningful and relevant information in multi-modal data with supervised learning.

3 Problem Definition

Let \(\mathcal {U} = \{u_1,\cdots ,u_M\}\) and \(\mathcal {I} = \{i_1,\cdots ,i_N\}\) denote the sets of M users and N items, respectively. Besides user-item interaction records, we suppose to know the order or timestamps of interactions. Therefore, we denote the sequential behaviors of each user \(u\in U\) as \(S_u(t)=[s_{1},\cdots ,s_{t}]\), where \(s_{j}\in \mathcal {I}\) denotes the j-th item that user u engaged. In addition, we assume that multi-modal content of items is available, e.g. attributes, visual, acoustic, and textual features. For structured data \(\mathcal {A}=\{a_1,\cdots ,a_{|\mathcal {A}|}\}\), we suppose to know each item i’s \(a_k\)-typed (e.g. Actor) attribute values \(V_i(a_k)=\{v_1,\cdots ,v_{|V_i(a_k)|}\}\) (e.g. Leonardo, Downey). For unstructured data \(\mathcal {C}=\{visual,\) \( acoustic, textual\}\), we can obtain their semantic representations \(\{e_i^m \in \mathbb {R}^d|m\in \mathcal {C}\}\) of item i with feature extraction strategies, e.g. deep CNN visual features [18].

The goal of sequential recommendation is to learn a prediction function \(f(\cdot )\) based on all users’ sequential behaviors and multi-modal data to predict the next item that each user u may engage. In this paper, we take items’ multi-modal content information into consideration and define the prediction function as \(s(u,t) = \max _{i\in \mathcal {I}}f(u,i,S_u(t),\mathcal {A}\cup \mathcal {C} )\).

4 The Proposed Method

The overall architecture of the proposed method is shown in Fig. 2. First to extract from multi-modal data, we design a memory network framework to capture users’ dynamic modality-specific preferences according to their dynamic behaviors (Sect. 4.1). Then to fuse users’ multi-modal preferences, we propose the M2IB model with a multi-modal probabilistic graph to learn users’ personalized preferences on different modalities, and filter out irrelevant and redundant information (Sect. 4.2). Finally, we provide the detailed implementation of the variational M2IB model, which can encode, fuse, and decode users’ multi-model preferences for next item recommendation (Sect. 4.3).

4.1 Memory Network Framework

To make use of multi-modal data, we propose a memory network framework called MemNet as shown in the left part of Fig. 2 (a), which consists of several knowledge memory regions and user-state memory regions.

Knowledge Memory Regions. To exploit the heterogeneous multi-modal data for recommendation, we organize multi-modal data as unified item-to-item similarity matrices. These matrices can be seen as the global-sense knowledge shared by all users, which measures the modality-specific relevancies and similarities between items with different modalities.

Inspired by the collaborative filtering models [21], we assume that there exist some collaborative correlations that describe users’ behavior patterns. For example, some persons always buy pencils and erasers together, and some persons are used to buying the gum shell after buying the cigarette. To this end, we calculate the collaborative correlations between items based on user-item behavioral and sequential interactions respectively, which are denoted as \(M^c,M^s \in \mathbb {R}^{N\times N}\). Specifically, co-engaged collaborative correlation \(M^c_{ij}\) counts the number of users who engaged both item i and item j, while sequential collaborative correlation \(M^s_{ij}\) counts the number of users who engaged item i and item j chronologically.

Inspired by the content based models [4], we assume that items’ similarities measured by each content modality reflect users’ inherent preference from a specific aspect. For example, some persons prefer to watch similar movies. For structured data such as items’ \(a_k\)-typed attributes, we calculate the similarities \(M^{a_k}\) among items by counting the shared \(a_k\)-typed attributes by item i and item j, i.e. \(M^{a_k}_{ij} = |V_i(a_k) \cap V_j(a_k)|\). For unstructured data such as items’ visual content, we calculate their similarities \(M^{m}\) among items based on the extracted features \(e^m\), e.g. inner product similarity.

To alleviate the differences between the measurement strategies, we only keep the F most frequent patterns for each modality inspired by the frequent pattern model [23] and the reassignment strategy [27]. We store the knowledge in P knowledge regions of the MemNet framework, which is denoted as \(\mathcal {M} = [M^c,M^s,M^{a_1},\cdots ,M^{a_{|\mathcal {A}|}},M^{\text {visual}},M^{\text {acoustic}},M^{\text {textual}}]\).

Private User-Specific Memory Regions. To capture users’ dynamic preferences, we propose to maintain the dynamic behaviors of each user in user-state memory regions. Let \(\varLambda _u(t,K)\) denote the user-state memory for user u, where K is the memory capacity. After getting a new behavior of user u, i.e. user u interacting with item i at time t, we update the memory queue \(\varLambda _u(t,K)\) to maintain its dynamic nature. We first drop the front item from the queue if the memory queue is full, and then add the new item i into the rear of the memory queue.

To model user u’s dynamic modality-specific preference \(X^{u,t}_p\in \mathbb {R}^N\) for different modalities, we aggregate the user-state related knowledge of each modality for the user’s preference modeling at time t, i.e.

where \(\mathcal {M}^{p}_{i}\) denotes the i-th row (related to item i) of the p-th knowledge region matrix \(\mathcal {M}^p\).

4.2 Multi-modal Information Bottleneck Model

Multi-modal Probabilistic Graph with IB Principle. To learn the user’s personalized preferences on different modalities, we propose a multi-modal probabilistic graph (M2PG) to discriminate and fuse his/her modality-specific preferences as shown in Fig. 2 (b). Specifically, the user’s modality-specific preferences \([X_1,\cdots ,X_P]\) are taken as the inputs, which are mapped into a latent space as the multi-modal preference encodings \([Y_1,\cdots ,Y_P]\). Then, we aggregate these encodings into the hybrid encoding Z by attentional weights, which model users’ personalized preference on different modalities. Finally, we map Z into the scores of all items to predict for the user’s next behavior (target R).

To mine relevant information and filter out irrelevant and redundant information, we try to optimize two goals for next item recommendation. The first goal of M2IB model is to be expressive about the target R, which can be achieved by maximizing the mutual information between the hybrid encoding Z and the target R, i.e. \(\max I(Z,R)\). The second goal of M2IB model is to minimize the information of the M2IB model retained from the inputs, which can be achieved by minimizing the mutual information between the encoding \(Y_p\) and the input \(X_p\) for different data modalities, i.e. \(\min \sum _{p=1}^{P} I(Y_p,X_p) \). Combination of the above two goals means retaining as little as possible the most useful information for the target, i.e.

where \(\lambda \) denotes a trade-off coefficient of the two goals.

Variational Approximation. To optimize objective function Eq. (2) which includes two kinds of intractable mutual information, we introduce two theorems for the mutual information variational approximation, whose proofs are shown in the supplementary materialsFootnote 1. Assuming we have Q samples \(\{(r^q,x_{1}^q,..,x_P^q)|q=1,\cdots , Q\}\) from a data distribution D that subjects to the empirical data distribution as follows:

where r denotes the target and \(x_{1},..,x_P\) denote the inputs.

Theorem 1

With Q samples \(\{(r^q,x_{1}^q,..,x_P^q)|q=1,\cdots , Q\}\) and M2PG, the mutual information I(Z, R) has an approximation lower bound, i.e.

where \(q(r^{q}|z)\) denotes the variational inference of posterior \(p(r^{q}|z)\).

Theorem 2

With Q samples \(\{(r^q,x_{1}^q,..,x_P^q)|q=1,\cdots , Q\}\), the mutual information \(\sum \nolimits _{p=1}^{P} I(Y_p,X_p) \) has an approximation upper bound as follows:

where \(\epsilon \thicksim \mathcal {N}(0,I)\) and \(KL(\cdot )\) denotes the Kullback-Leibler divergence.

Encoder-Fusion-Decoder Framework. According to the Theorems 1 and 2, we can obtain the variational M2IB model in an encoder-fusion-decoder framework.

where \(L_1\) in Eq.(4) consists of the \(p(y_p|x^q_p)\) that denotes an encoder of input \(x_p\), \(p(z|y_1,..,y_P)\) that denotes the fusion layer for multi-modal information, and variational estimation \(q(r^q|z)\) that denotes a decoder for the output.

4.3 Implementation for Next-Item Recommendation

In this subsection, we detailed the implementation of variational M2IB model as shown in the right part of Fig. 2 (a). It consists of multiple encoders, a fusion layer, a decoder, a prediction loss, and a KL divergence loss.

Encoder Layer: To exploit users’ modality-specific preferences \([x_1,\cdots ,x_P]\), we encode them into a latent space by encoders \(\{p(y_p|x_p)|p=1,\cdots ,P\}\). For user u at time t, we encode his/her p-th modality preference \(X^{u,t}_p\in \mathbb {R}^{N}\) as a random variable \(p(y^{u,t}_p |X^{u,t}_p) \thicksim \mathcal {N}(\mu _p^{u,t}, diag(\sigma _p^{u,t}))\), where the mean \(\mu _p^{u,t}\) and the standard deviation \(diag(\sigma _p^{u,t})\) are parameterized by neural networks \(f_p^{\mu }\) and \(f_p^{\sigma }\) as follows:

where \(W_p^{*} \in \mathbb {R}^{d\times N}\) and \(b_p^{*} \in \mathbb {R}^{d}\) denote the fully connected layers and the biases, respectively. d denotes the dimension of the latent space and the activation function \(\kappa (x)=\exp (x/2)\) keeps the standard deviation to be positive.

Fusion Layer. To learn the user’s personalized preference on different modalities in the fusion layer \(p(z|y_1,\cdots ,y_P)\), we adopt an attentional mechanism to aggregate his/her preference encodings into the hybrid preference encoding, i.e.

where \(\text {l}\_\text {2}(\cdot )\) denotes the L2 normalization and \(h_p\in \mathbb {R}^d\) denotes the encoding of the p-th modality to model its importance for recommendation, which represents the key for the p-th modality in the attentional mechanism. And \(y_p^{u,t}\) represents both queries and values as in the self-attention mechanism [26].

Decoder Layer. To predict next behavior of user u at time t, we adopt the decoder q(r|z) to map his/her hybrid preference encoding \(z^{u,t}\) into the score or probability of all items that user u will engage:

where \(H\in \mathbb {R}^{d\times N}\) is the decoding matrix which is the parameters of the decoder.

Loss Functions. To measure the effectiveness of the recommendation results, we adopt a pair-wise loss [19] to formulate the lower bound of I(Z, R) in Eq. (4), i.e.

where \(\sigma (\cdot )\) denotes the sigmoid function. \(D = \{(u,i_1,i_2,t)|u\in \mathcal {U}\}\) denotes the train set and \((u,i_1,i_2,t)\) means user u has engaged item \(i_{1}\) but not engaged item \(i_{2}\) at time t. To limit the information retained from the inputs, we formulate the upper bound of \(L_2\) term in Eq. (5) by calculating the KL divergence between the encoding \(p(y^{u,t}_p |X^{u,t}_p) \thicksim \mathcal {N}(\mu _p^{u,t},diag(\sigma _p^{u,t}))\) and \(\epsilon \thicksim \mathcal {N}(0,I)\) for different modalities, i.e.

4.4 Model Learning and Complexity Analysis

Parameters Learning. The overall objective function combines both the prediction loss \(L_1\) and the KL divergence loss \(L_2\) as follows:

where \(\lambda \) denotes the trade-off coefficient. \(\theta \) denotes all parameters need to be learned in the proposed model and \(\mu _\theta \) denotes the regularization coefficient of L2-norm \(||\cdot ||^2\). As the objective function is differentiable, we optimize it by stochastic gradient descent and adaptively adjust the learning rate by AdamGrad, which can be automatically implemented by TensorFlowFootnote 2.

Complexity. For the model learning process, updating one sample for the proposed model takes \(O(P\cdot F\cdot d + p\cdot d)\) time. Specifically, P denotes the number of knowledge regions. \(O(F\cdot d + p\cdot d)\) denotes the computational complexity of the encoder with sparse inputs and fusion layers, where F denotes the number of frequent patterns. Thus, one iteration takes \(O(|D| \cdot P\cdot F\cdot d +|D| \cdot p\cdot d)\) time, where |D| is the number of all users’ interactions. The proposed model shares roughly equal time complexity to the self-attention method such as SASRec [10] and FDSA [29] \(O(|D|\cdot K^2\cdot d + |D|\cdot K\cdot d^2)\), where K denotes the window size of users’ behaviors and \(O(P\cdot F) \approx O(K^2)\) with appropriate hyper-parameters.

5 Experiment

In this section, we aim to evaluate the performance and effectiveness of the proposed method. Specifically, we conduct several experiments to study the following research questions:

RQ1: Whether the proposed method outperforms state-of-the-art methods for next item recommendation?

RQ2: Whether the proposed method benefits from the MemNet framework to capture users’ dynamic modality-specific preferences?

RQ3: Whether the proposed M2IB model with M2PG outperforms the traditional IB model with single-modal probabilistic graph to model users’ personalized preferences on different modalities?

RQ4: (a) Whether the proposed method benefits from removing irrelevant and redundant information compared with traditional dimension reduction? (b) To what extent the proposed method can filter out these irrelevant and redundant information?

5.1 Experimental Setup

Data Sets: We adopt five public data sets as the experimental data, including ML (Hetrec-MovieLensFootnote 3), DianpingFootnote 4, AmazonFootnote 5_Kindle (Amazon Kindle Store), Amazon_Game (Amazon Video Games), and TiktokFootnote 6. The ML data set contains users’ ratings on movies with timestamps and the attributes of movies (e.g. Actors, Directors, Genres, and Countries). The Dianping data set consists of the users’ ratings on restaurants in China with timestamps and attributes of restaurants (e.g. City, Business district, and Style). Amazon_Kindle and Amazon_Game data sets contain users’ ratings on books and video games with timestamps and metadata of items such as genre and visual features, where the visual features are extracted from each product image using a deep CNN [18]. Tiktok data set is collected from a micro-video sharing platform. It consists of users, micro-videos, their interactions with timestamps, and visual and acoustic features which are extracted and published without providing the raw data. Due to the possible redundancy of visual and acoustic features, we reduce them to d-dimensions with principal component analysis (PCA) as in [9]. For these data sets, we filter users and items which have less than [10, 25, 50, 50, 20] records for these data sets respectively according to their original scales, and consider ratings higher than 3.5 points as positive interactions as in [5]. The characteristics of these data sets are summarized in Table 1.

Evaluation Methodology and Metrics: We sort all interactions chronologically, then use the first 80% interactions as the train set and hold the last 20% for testing as in [24]. To stimulate the dynamic data sequence in a real-world scenario, we test the interactions from the hold-out data one by one correspondingly. Experimental results are recorded as the average of five runs with different random initializations of model parameters.

To evaluate the performances of the proposed method and the baseline methods, we adopt two widely used evaluation metrics for top-n recommendation as in [5], including hit ratio (hr) and normalized discounted cumulative gain (ndcg). Intuitively, hr@n considers all items ranked within the top-n list to be equally important, while ndcg@n uses a monotonically increasing discount to emphasize the importance of higher ranking positions versus lower ones.

Baselines: We take the following state-of-the-art methods as the baselines.

-BPR [19]. It proposes a pair-wise loss function to model the relative preferences of users.

-VBPR [8]. It integrates the visual features and ID embeddings of each item as its representation, and uses MF to reconstruct the historical interactions between users and items.

-FPMC [20]. It combines MF and MC to capture users’ dynamic preferences with the pair-wise loss function.

-Caser [24]. It adopts the convolutional filters to learn users’ union and skip patterns for sequential recommendation.

-SASRec [10]. It is a self-attention based sequential model, which utilizes item-level sequences for next item recommendation.

-ANAM [2]. It utilizes a hierarchical architecture to incorporate the attribute information by an attention mechanism for sequential recommendation.

-FDSA [29]. It models item and attribute transition patterns for next item recommendation based on the Transformer model [26].

-HA-RNN [15]. It combines the representation of items and their attributes, then fits them into an LSTM model for sequential recommendation.

-ACF [3]. It consists of the component-level and item-level attention modules that learn to informative components of multimedia items and score the item preferences. We utilize users’ recent engaged items as the neighborhood items in ACF to learn users’ dynamic preferences.

-SNR [5]: It is a sequential network based recommendation method for next item recommendation, which tries to explore and combine multi-factor and multi-faceted preference to predict users’ next behaviors.

Among these baseline methods, BPR and VBPR utilize static user-item interaction while others utilize users’ sequential behaviors for recommendation. Besides, ANAM explores items’ structured attributes information, while ACF, FDSA, SNR and HA-RNN explore both structured attributes and unstructured features for next item recommendation.

Implementation Details: We set the initial learn rating \(\gamma =1\times 10^{-3}\), regularization coefficient \(\mu _\theta = 1\times 10^{-5}\), and the dimension \(d=64\) to all methods for a fair comparison. We set the frequent pattern number F as 20, the user-state memory capacity K as 5, and the trade-off parameter \(\lambda \) as 1.0 for the moderate hyper-parameter selection of the propose model. For baseline models, we tune their parameters to the best. The source code is available in https://github.com/kk97111/M3IB.

5.2 Model Comparison

Table 2 shows the performances of different methods for next item recommendation. To make the table more notable, we bold the best results and underline the best baseline results in each case. Due to ANAM is designed for the structured attribute features, it can not work with only unstructured information such as visual and acoustic features. VBPR is designed for visual features and can not work with structured attributes. From the Table 2, we can observe that: 1) The proposed method \(M^3\)-IB outperforms all baselines significantly in all cases. The relative improvement over the best baselines is 10.75% and 8.39% on average according to hr@50 and ndcg@50, respectively. It confirms the effectiveness of our proposed model (RQ1). 2) The multi-modal methods, e.g. HA-RNN and SNR, achieve better performance among baseline models on data sets that contain structured attribute information, which confirms the necessity of extracting the rich information from multi-modal data. But some multi-modal methods (e.g. ANAM, ACF, VBPR) show low accuracy in some cases, which indicates they may suffer from the misleading of irrelevant and redundant information for next item recommendation. 3) The methods without consideration of user-item dynamic relations, e.g. BPR and VBPR, perform badly among the baselines, which indicates it is necessary to capture users’ dynamic preferences for next item recommendation.

5.3 Ablation Study

To evaluate the effectiveness of the module design of the proposed method and the motivations of this paper, we take some special cases of the proposed method as comparisons.

-\(M^3\)-IB-noMM: It is a variant of \(M^3\)-IB method without the MemNet framework. Following the idea in [15], we first enrich items’ representations with multi-modal content, and then takes the representations of users’ recent engaged (several) items as the multi-source input for the M2IB model.

-\(M^3\)-IB-noM2PG: It is a variant of \(M^3\)-IB method without the M2PG, which adopts the traditional IB model [1] that takes the concatenation of users’ multi-modal preferences, i.e. \(X_1 \oplus \cdots \oplus X_P\), as inputs.

-\(M^3\)-IB-noBN: It is a variant of \(M^3\)-IB without using the IB information constraints i.e. set \(\lambda =0\) in Eq. (2), which only uses dimension reduction for information processing.

The results of the ablation study are shown at the bottom part of Table 2. On all data sets, the proposed method \(M^3\)-IB with the MemNet framework significantly outperforms the variant \(M^3\)-IB-noMM that utilizes the feature-level combination strategy, which proves the necessity of capturing users’ dynamic modality-specific preferences (RQ2). Besides, the proposed method \(M^3\)-IB outperforms the variant \(M^3\)-IB-noM2PG for multi-modal information fusion. It indicates the effectiveness of M2IB model to model users’ personalized preferences on different modalities (RQ3). Finally, the proposed method \(M^3\)-IB outperforms the variant \(M^3\)-IB-noBN with traditional dimension reduction, which confirms the effectiveness of the proposed method \(M^3\)-IB to filter out the irrelevant and redundant information based on the IB principle (RQ4.(a)).

5.4 Hyper-Parameter Study

There are several key parameters for the proposed method \(M^3\)-IB, including the number of retained the most frequent patterns F for each modality, the capacity of each user memory region K, and the trade-off coefficient \(\lambda \). Exploring them can help us with better understanding to what extent the \(M^3\)-IB method can extract useful information and filter out irrelevant and redundant information (RQ4.(b)). Figure 3 shows the performances of the method \(M^3\)-IB and the variant \(M^3\)-IB-noBN with various F and K. The larger F implies the more knowledge is retained from the data modality. It will contain more useful information while introducing more irrelevant and redundant information for next item recommendation. The larger K implies the more users’ recent behaviors are memorized and utilized for next item recommendation. The method \(M^3\)-IB consistently outperforms the variant \(M^3\)-IB-noBN with sufficient information in all data sets, which confirms the necessity to remove irrelevant information. In addition, the method \(M^3\)-IB is more effective and stable than the variant \(M^3\)-IB-noBN when introducing massive noise, which shows IB model can capture useful information effectively and remove irrelevant and redundant information stably. Figure 3 also shows the performances of the method \(M^3\)-IB with various \(\lambda \). The larger \(\lambda \) implies the more strict rule to remove the irrelevant and redundant information. It shows that too large or too small \(\lambda \) limits the performance of the method \(M^3\)-IB. It will remove some useful information when \(\lambda \) is too large, and introduce too much irrelevant and redundant information when \(\lambda \) is too small.

6 Conclusion

In this paper, we proposed a memory-augment multi-modal information bottleneck method to make use of information from multi-modal data for next item recommendation. The proposed method can model users’ dynamic and personalized preferences on different modalities, and capture the most meaningful and relevant information with the IB principle. Extensive experiments show the proposed method outperforms state-of-the-art methods. Besides, the ablation experiments verify the validity of our research motivations: a) the proposed method benefits from exploring users’ dynamic and personalized preferences on different modalities compared to existing content combination based strategies; b) the proposed method benefits from the M2IB model compared to traditional IB method for multi-modal fusion and representation learning; c) the proposed method shows effectiveness and stability to capture useful information effectively and remove irrelevant and redundant information for next item recommendation. In the future, we will study how to extend the proposed method to make use of unaligned data such as cross-domain information.

References

Alemi, A.A., Fischer, I., Dillon, J.V., Murphy, K.: Deep variational information bottleneck. arXiv preprint arXiv:1612.00410 (2016)

Bai, T., Nie, J.Y., Zhao, W.X., Zhu, Y., Du, P., Wen, J.R.: An attribute-aware neural attentive model for next basket recommendation. In: Proceedings of SIGIR, pp. 1201–1204 (2018)

Chen, J., Zhang, H., He, X., Nie, L., Liu, W., Chua, T.S.: Attentive collaborative filtering: multimedia recommendation with item-and component-level attention. In: Proceedings of the 40th International ACM SIGIR Conference on Research and Development in Information Retrieval, pp. 335–344 (2017)

Di Noia, T., Mirizzi, R., Ostuni, V.C., Romito, D., Zanker, M.: Linked open data to support content-based recommender systems. In: Proceedings of the 8th International Conference on Semantic Systems, pp. 1–8 (2012)

Du, Y., Liu, H., Wu, Z.: Modeling multi-factor and multi-faceted preferences over sequential networks for next item recommendation. In: Oliver, N., Pérez-Cruz, F., Kramer, S., Read, J., Lozano, J.A. (eds.) ECML PKDD 2021. LNCS (LNAI), vol. 12976, pp. 516–531. Springer, Cham (2021). https://doi.org/10.1007/978-3-030-86520-7_32

Ebesu, T., Shen, B., Fang, Y.: Collaborative memory network for recommendation systems. In: The 41st International ACM SIGIR Conference On Research & Development in Information Retrieval, pp. 515–524 (2018)

Guo, H., Tang, R., Ye, Y., Li, Z., He, X.: DeepFM: a factorization-machine based neural network for CTR prediction. In: Proceedings of the 26th International Joint Conference on Artificial Intelligence, pp. 1725–1731 (2017)

He, R., McAuley, J.: VBPR: visual Bayesian personalized ranking from implicit feedback. In: Proceedings of the AAAI Conference on Artificial Intelligence, vol. 30 (2016)

Huang, X., Qian, S., Fang, Q., Sang, J., Xu, C.: CSAN: contextual self-attention network for user sequential recommendation. In: Proceedings of the 26th ACM international conference on Multimedia, pp. 447–455 (2018)

Kang, W.C., McAuley, J.: Self-attentive sequential recommendation. In: 2018 IEEE International Conference on Data Mining (ICDM), pp. 197–206. IEEE (2018)

Kingma, D.P., Welling, M.: Auto-encoding variational Bayes. arXiv preprint arXiv:1312.6114 (2013)

Li, X., et al.: Adversarial multimodal representation learning for click-through rate prediction. In: Proceedings of the Web Conference 2020, pp. 827–836 (2020)

Li, X.L., Eisner, J.: Specializing word embeddings (for parsing) by information bottleneck. In: Proceedings of the 2019 Conference on Empirical Methods in Natural Language Processing and the 9th International Joint Conference on Natural Language Processing (EMNLP-IJCNLP), pp. 2744–2754 (2019)

Liang, D., Krishnan, R.G., Hoffman, M.D., Jebara, T.: Variational autoencoders for collaborative filtering. In: Proceedings of the 2018 World Wide Web Conference, pp. 689–698 (2018)

Liu, K., Shi, X., Natarajan, P.: Sequential heterogeneous attribute embedding for item recommendation. In: Proceedings of ICDMW, pp. 773–780 (2017)

Locatello, F., et al.: Challenging common assumptions in the unsupervised learning of disentangled representations. In: International Conference on Machine Learning, pp. 4114–4124. PMLR (2019)

Ma, J., Zhou, C., Cui, P., Yang, H., Zhu, W.: Learning disentangled representations for recommendation. In: Advances in Neural Information Processing Systems, pp. 5711–5722 (2019)

McAuley, J., Targett, C., Shi, Q., Van Den Hengel, A.: Image-based recommendations on styles and substitutes. In: Proceedings of the 38th international ACM SIGIR Conference on Research and Development in Information Retrieval, pp. 43–52 (2015)

Rendle, S., Freudenthaler, C., Gantner, Z., Schmidt-Thieme, L.: BPR: Bayesian personalized ranking from implicit feedback. In: Proceedings of the Twenty-Fifth Conference on Uncertainty in Artificial Intelligence, pp. 452–461 (2009)

Rendle, S., Freudenthaler, C., Schmidt-Thieme, L.: Factorizing personalized Markov chains for next-basket recommendation. In: Proceedings of WWW, pp. 811–820 (2010)

Schafer, J.B., Frankowski, D., Herlocker, J., Sen, S.: Collaborative filtering recommender systems. In: Brusilovsky, P., Kobsa, A., Nejdl, W. (eds.) The Adaptive Web. LNCS, vol. 4321, pp. 291–324. Springer, Heidelberg (2007). https://doi.org/10.1007/978-3-540-72079-9_9

Sun, R., et al.: Multi-modal knowledge graphs for recommender systems. In: Proceedings of the 29th ACM International Conference on Information & Knowledge Management, pp. 1405–1414 (2020)

Swesi, I.M.A.O., Bakar, A.A., Kadir, A.S.A.: Mining positive and negative association rules from interesting frequent and infrequent itemsets. In: 2012 9th International Conference on Fuzzy Systems and Knowledge Discovery, pp. 650–655. IEEE (2012)

Tang, J., Wang, K.: Personalized top-n sequential recommendation via convolutional sequence embedding. In: Proceedings of WSDM, pp. 565–573 (2018)

Tishby, N., Pereira, F.C., Bialek, W.: The information bottleneck method, pp. 368–377 (1999)

Vaswani, A., et al.: Attention is all you need. In: Proceedings of NIPS, pp. 5998–6008 (2017)

Wang, Z., Liu, H., Du, Y., Wu, Z., Zhang, X.: Unified embedding model over heterogeneous information network for personalized recommendation. In: Proceedings of the 28th International Joint Conference on Artificial Intelligence, pp. 3813–3819. AAAI Press (2019)

Wei, Y., Wang, X., Nie, L., He, X., Hong, R., Chua, T.S.: MMGCN: multi-modal graph convolution network for personalized recommendation of micro-video. In: Proceedings of the 27th ACM International Conference on Multimedia, pp. 1437–1445 (2019)

Zhang, T., et al.: Feature-level deeper self-attention network for sequential recommendation. In: Proceedings of IJCAI, pp. 4320–4326 (2019)

Zhu, N., Cao, J., Liu, Y., Yang, Y., Ying, H., Xiong, H.: Sequential modeling of hierarchical user intention and preference for next-item recommendation. In: Proceedings of the 13th International Conference on Web Search and Data Mining, pp. 807–815 (2020)

Acknowledgement

This work was supported by Peking University Education Big Data Project (Grant No. 2020YBC10).

Author information

Authors and Affiliations

Corresponding authors

Editor information

Editors and Affiliations

1 Electronic supplementary material

Below is the link to the electronic supplementary material.

Rights and permissions

Copyright information

© 2022 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper

Du, Y., Liu, H., Wu, Z. (2022). \(M^3\)-IB: A Memory-Augment Multi-modal Information Bottleneck Model for Next-Item Recommendation. In: Bhattacharya, A., et al. Database Systems for Advanced Applications. DASFAA 2022. Lecture Notes in Computer Science, vol 13246. Springer, Cham. https://doi.org/10.1007/978-3-031-00126-0_2

Download citation

DOI: https://doi.org/10.1007/978-3-031-00126-0_2

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-00125-3

Online ISBN: 978-3-031-00126-0

eBook Packages: Computer ScienceComputer Science (R0)