Abstract

In this paper, we analyse the Nyström method based on a sextic spline quasi-interpolant for approximating the solution of a linear Fredholm integral equation of the second kind. For a sufficiently smooth kernel, the method is shown to converge with order 8, and Richardson extrapolation is used to further improve this order to 9. Numerical examples are given to confirm the theoretical estimates.


Keywords

- Spline quasi-interpolant

- Fredholm integral equation

- Nyström method

- Richardson extrapolation

1 Introduction

Consider the Fredholm integral equation defined on \(\mathbb {E}=\mathscr {C}[0,1]\) by

$$u(s)-\int_0^1\kappa(s,t)\,u(t)\,dt=f(s),\qquad s\in[0,1],\tag{5.1}$$

where κ is a smooth kernel, \(f\in \mathbb {E}\) is a real-valued continuous function and u denotes the unknown function. The Nyström method (see [5]) for solving (5.1) consists of replacing the integral in (5.1) by a numerical quadrature formula; it has been widely studied in the literature. A general framework for the method in the case of interpolatory projection is presented in [1, 2]. The Nyström method based on a quartic spline quasi-interpolant is proposed in [6], and a superconvergent version of the Nyström method based on spline quasi-interpolants of degree d ≥ 2 is analysed in [7]. In this paper, we construct a quadrature formula by integrating a sextic spline quasi-interpolant, and we use this formula for the numerical solution of the Fredholm integral equation (5.1). We show that the approximate solution converges to the exact solution with the same order as the quadrature rule. We also show that the approximate solution of (5.1) has an asymptotic error expansion, so that one step of Richardson extrapolation further improves the order of convergence.

The rest of the paper is organized as follows. In Sect. 5.2, we set the notation and construct the sextic spline quasi-interpolant \(\mathscr {Q}_n\). In Sect. 5.3, we introduce the quadrature rule based on \(\mathscr {Q}_n\) and establish an expression for its error. In Sect. 5.4, the Nyström method for the approximate solution of (5.1) is analysed and an asymptotic series expansion of the approximate solution is obtained. Numerical examples are given in Sect. 5.5, and Sect. 5.6 concludes the paper.

2 Sextic Spline Quasi-Interpolant

2.1 B-splines

Definition 5.1

Let \(d\in \mathbb {N}\) and let

be an extended partition of the interval I = [0, 1]. The normalized B-spline of degree d associated with the knots \(x_i,\ldots ,x_{i+d+1}\) is defined by

$$B_{i,d}(x)=(x_{i+d+1}-x_i)\,[x_i,\ldots ,x_{i+d+1}](\cdot-x)_+^d,$$

where \([x_i,\ldots ,x_{i+d+1}](\cdot-x)_+^d\) is the divided difference of \(t\longmapsto (t-x)_+^d\) with respect to the d + 2 points \(x_i,\ldots ,x_{i+d+1}\).

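By using the definition of the divided differences, we obtain for d = 0

$$B_{i,0}(x)=(x_{i+1}-x_i)\,\frac{(x_{i+1}-x)_+^0-(x_i-x)_+^0}{x_{i+1}-x_i}.$$

Thus, from the above formula, we get

$$B_{i,0}(x)=(x_{i+1}-x)_+^0-(x_i-x)_+^0,$$

with the convention \((t)_+^0=1\) for t > 0 and \((t)_+^0=0\) for t ≤ 0. This is the characteristic function of the interval \([x_i,x_{i+1}[\), i.e.

$$B_{i,0}(x)=\begin{cases}1, & x_i\le x<x_{i+1},\\ 0, & \text{otherwise}.\end{cases}$$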

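The B-splines of higher degree (d ≥ 1) can be evaluated by using the following recursion formula (see [3, Chap. 4]):

$$B_{i,d}(x)=\omega_{i,d}(x)\,B_{i,d-1}(x)+\bigl(1-\omega_{i+1,d}(x)\bigr)\,B_{i+1,d-1}(x),$$

with

$$\omega_{i,d}(x)=\frac{x-x_i}{x_{i+d}-x_i},$$

where a term is omitted when its denominator vanishes (coincident knots).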
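The recursion translates directly into code. The Python sketch below (the helper name `bspline_basis` is ours, not the paper's) evaluates all B-splines of degree d at one point, starting from the degree-0 characteristic functions and dropping any term whose denominator vanishes at coincident knots, i.e. the usual 0/0 := 0 convention. Because the degree-0 functions use half-open intervals, it should be called with x < 1; the value at the right endpoint is then a left limit.

```python
import numpy as np


def bspline_basis(knots, d, x):
    """Return the values B_{i,d}(x) of all B-splines of degree d on `knots`.

    Degree 0 uses the half-open intervals [x_i, x_{i+1}); each term of the
    recursion with a vanishing denominator (coincident knots) is dropped.
    """
    knots = np.asarray(knots, dtype=float)
    m = len(knots) - 1
    # Degree 0: characteristic functions of [x_i, x_{i+1}).
    B = np.array([1.0 if knots[i] <= x < knots[i + 1] else 0.0 for i in range(m)])
    for p in range(1, d + 1):
        nxt = np.zeros(m - p)
        for i in range(m - p):
            left = knots[i + p] - knots[i]           # denominator of omega_{i,p}
            right = knots[i + p + 1] - knots[i + 1]  # denominator of omega_{i+1,p}
            if left > 0:
                nxt[i] += (x - knots[i]) / left * B[i]
            if right > 0:
                nxt[i] += (knots[i + p + 1] - x) / right * B[i + 1]
        B = nxt
    return B


# Sextic B-splines on a uniform partition of [0, 1] with n = 5 and
# sevenfold knots at both endpoints: n + 6 = 11 basis functions.
n = 5
knots = [0.0] * 6 + [i / n for i in range(n + 1)] + [1.0] * 6
values = bspline_basis(knots, 6, 0.37)
print(len(values), values.sum())
```

For this clamped knot sequence the values are nonnegative and sum to one at every x in [0, 1), which is the partition-of-unity property behind \(\theta_k^{(0)}=1\) below.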

2.2 Construction of the Discrete Spline Quasi-Interpolant

Let \(\mathbb {X}_{n}=\{x_{k}=\frac {k}{n},\,0\leq k\leq n\} \) denote the uniform partition of the interval I into n equal subintervals \(I_k=[x_{k-1},x_k]\), 1 ≤ k ≤ n, with mesh length \(h=\frac {1}{n}\). Let \(S_{6}(I, \mathbb {X}_{n})\) be the space of \(\mathscr {C}^{5}\) sextic splines on this partition. Its canonical basis is formed by the n + 6 normalized B-splines \(\{B_k\equiv B_{k-7,6},\ k\in J_n\}\), where \(J_n=\{1,\ldots ,n+6\}\). The support of \(B_k\) is \([x_{k-7},x_k]\) if we add multiple knots at the endpoints:

$$x_{-6}=\cdots=x_{-1}=x_0=0,\qquad x_n=x_{n+1}=\cdots=x_{n+6}=1.$$

For 7 ≤ k ≤ n, we have \(B_k(x)=\bar {B}(\frac {x}{h}-k+7),\) where \(\bar {B}\) is the cardinal B-spline associated with the knots {0, 1, 2, 3, 4, 5, 6, 7} and defined by

$$\bar{B}(x)=\frac{1}{6!}\sum_{i=0}^{7}(-1)^i\binom{7}{i}(x-i)_+^6.$$
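As a quick illustration, the sketch below evaluates \(\bar B\) through its truncated-power representation and builds interior basis functions from it. Since \(B_k\) is supported on \([x_{k-7},x_k]\) while \(\bar B\) is supported on [0, 7], the shift that maps one onto the other is \(B_k(x)=\bar B(x/h-k+7)\). The function names are hypothetical; the guard outside [0, 7] simply returns zero instead of summing terms that cancel.

```python
import math


def cardinal_bspline(x):
    """Sextic cardinal B-spline with knots 0, 1, ..., 7 (truncated-power form)."""
    if x <= 0.0 or x >= 7.0:
        return 0.0  # outside the support; avoids cancellation in the sum
    s = sum((-1) ** i * math.comb(7, i) * (x - i) ** 6 for i in range(8) if x > i)
    return s / math.factorial(6)


def B(k, x, h):
    """Interior basis function B_k(x) = Bbar(x/h - k + 7), valid for 7 <= k <= n."""
    return cardinal_bspline(x / h - k + 7)


# With h = 0.1, B_8 is supported on [x_1, x_8] = [0.1, 0.8].
print(B(8, 0.05, 0.1), B(8, 0.45, 0.1), B(8, 0.85, 0.1))
```

Integer translates of \(\bar B\) sum to one, and \(\bar B\) is symmetric about 7/2, which gives two easy sanity checks.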

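We recall (see [10, Theorem 4.21 & Remark 4.1]) the representation of monomials using the symmetric functions of the interior knots \(N_k=\{x_{k-6},\ldots ,x_{k-1}\}\) in the support of \(B_k\), which are defined by \(\sigma_0(N_k)=1\) and, for 1 ≤ r ≤ 6,

$$\sigma_r(N_k)=\sum_{k-6\le \ell_1<\ell_2<\cdots<\ell_r\le k-1}x_{\ell_1}x_{\ell_2}\cdots x_{\ell_r}.$$

For 0 ≤ r ≤ 6, let \(m_r(x)=x^r\). Then, we have

$$m_r(x)=\sum_{k\in J_n}\theta_k^{(r)}B_k(x),$$

where

$$\theta_k^{(r)}=\binom{6}{r}^{-1}\sigma_r(N_k).$$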

Hence

For r = 0, we have \(\theta _{k}^{(0)}=1\) for all \(k\in J_n\), since \(\sum \limits _{k\in J_n}B_k(x)=1.\)

For r = 1, we have \(\binom {6}{r}^{-1}=\frac {1}{6}\) and \(\sigma _{1}(N_k)=\sum \limits _{1\leq \ell \leq 6}x_{k-\ell }=x_{k-1}+\ldots +x_{k-6}.\) Thus, we obtain the Greville abscissae

$$\theta_k=\theta_k^{(1)}=\frac{x_{k-1}+x_{k-2}+\cdots+x_{k-6}}{6},\qquad k\in J_n,$$

which are the coefficients of \(m_{1}(x)=\sum \limits _{k\in J_n}\theta _{k}B_k(x)\).
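The monomial identity can be checked numerically. The sketch below, assuming sevenfold knots at 0 and 1 and n = 8, computes \(\theta_k^{(r)}=\binom{6}{r}^{-1}\sigma_r(N_k)\) with a short recurrence for the elementary symmetric functions and compares \(\sum_k\theta_k^{(r)}B_k(x)\) with \(x^r\); the B-splines come from a compact Cox-de Boor routine. All helper names are ours.

```python
import math
import numpy as np


def bspline_basis(knots, d, x):
    """All B_{i,d}(x) by the Cox-de Boor recursion (0/0 := 0, x in [x_0, x_n))."""
    knots = np.asarray(knots, dtype=float)
    m = len(knots) - 1
    B = np.array([1.0 if knots[i] <= x < knots[i + 1] else 0.0 for i in range(m)])
    for p in range(1, d + 1):
        nxt = np.zeros(m - p)
        for i in range(m - p):
            if knots[i + p] > knots[i]:
                nxt[i] += (x - knots[i]) / (knots[i + p] - knots[i]) * B[i]
            if knots[i + p + 1] > knots[i + 1]:
                nxt[i] += (knots[i + p + 1] - x) / (knots[i + p + 1] - knots[i + 1]) * B[i + 1]
        B = nxt
    return B


def elementary_symmetric(values):
    """[sigma_0, ..., sigma_m] of the given values, with sigma_0 = 1."""
    e = [1.0] + [0.0] * len(values)
    for v in values:
        for r in range(len(values), 0, -1):
            e[r] += v * e[r - 1]
    return e


n = 8
h = 1.0 / n
# Python index p holds x_{p-6}: sevenfold knots at 0 and 1, uniform inside.
knots = [0.0] * 6 + [i * h for i in range(n + 1)] + [1.0] * 6


def theta(r, k):
    """theta_k^{(r)} = sigma_r(N_k) / C(6, r) with N_k = {x_{k-6}, ..., x_{k-1}}, k in 1..n+6."""
    interior = knots[k:k + 6]          # x_{k-6}, ..., x_{k-1}
    return elementary_symmetric(interior)[r] / math.comb(6, r)


def reproduce(r, x):
    """Evaluate sum_k theta_k^{(r)} B_k(x); it should equal x**r."""
    B = bspline_basis(knots, 6, x)     # B[k-1] holds B_k
    return sum(theta(r, k) * B[k - 1] for k in range(1, n + 7))


print([reproduce(r, 0.5) for r in range(7)])
```

For r = 1 the coefficients reduce to the averages of the six interior knots, i.e. the Greville abscissae.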

The sextic discrete spline quasi-interpolant (abbr. dQI) used here (see [8]) is the spline operator

$$\mathscr{Q}_nf=\sum_{k\in J_n}\mu_k(f)\,B_k,$$

whose coefficient functionals \(\mu_k\) are linear combinations of discrete values of f on a set of data points \(\mathbb {T}_n=\{t_j,\;j\in \Gamma _n\}\), where \(\Gamma_n=\{1,2,\ldots ,n+2\}\). The elements of \(\mathbb {T}_n\) are defined by

$$t_1=0,\qquad t_j=\Bigl(j-\frac{3}{2}\Bigr)h,\quad 2\le j\le n+1,\qquad t_{n+2}=1.$$

The dQI is constructed to be exact on \(\Pi_6\), the space of polynomials of degree at most 6; that is, \(\mathscr {Q}_nm_r = m_r\) for 0 ≤ r ≤ 6, and therefore

$$\mu_k(m_r)=\theta_k^{(r)},\qquad k\in J_n,\ 0\le r\le 6.$$

For 7 ≤ k ≤ n, the functionals \(\mu_k\) use values of f in a neighbourhood of the support of \(B_k\); thus it is natural to express \(\mu_k\) in the following way

where \(f_k=f(t_k)\). This leads us to solve the system of linear equations

For 1 ≤ k ≤ 6 and n + 1 ≤ k ≤ n + 6 we write respectively

which is equivalent to the systems of linear equations

Each of these systems has a Vandermonde matrix and, since the points \((t_j)_{j\in \Gamma _n}\) are distinct, each has a unique solution; hence the dQI exists and is unique. The coefficient functionals are respectively defined by the following formulas:

and for \(n+1\le k\le n+6\), \(\mu_k(f)\) is given by the second formula in (5.5) with \(\gamma_{i,k}=\beta_{i,k}\), 1 ≤ i ≤ 7. Since \(\mathscr {Q}_n\) reproduces \(\Pi_6\), it is easy to show that for \(f\in \mathscr {C}^7[0,1]\) we have

$$\|f-\mathscr{Q}_nf\|_\infty\le c_1\,h^7\,\|f^{(7)}\|_\infty,$$

where \(c_1\) is a constant independent of n.
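To make the Vandermonde systems concrete, here is a sketch for one interior functional \(\mu_k\). It rests on an assumption the text does not spell out: that \(\mu_k\) uses the seven data points \(t_{k-5},\ldots ,t_{k+1}\) centred on the support of \(B_k\), with \(t_j=(j-\frac32)h\). Working in the local variable (x − c)/h, where c is the centre of the support, keeps the matrix well conditioned; the right-hand side consists of the B-spline coefficients of the shifted and scaled monomials, which are again \(\binom{6}{r}^{-1}\sigma_r\) of the shifted and scaled knots.

```python
import math
import numpy as np


def elementary_symmetric(values):
    """[sigma_0, ..., sigma_m] of the given values, with sigma_0 = 1."""
    e = [1.0] + [0.0] * len(values)
    for v in values:
        for r in range(len(values), 0, -1):
            e[r] += v * e[r - 1]
    return e


def interior_coefficients():
    """Solve mu_k(m_r) = theta_k^{(r)}, 0 <= r <= 6, for one interior k (7 <= k <= n).

    ASSUMPTION: mu_k(f) = sum_i a_i f(t_{k-5+i}), i = 0..6, and t_j = (j - 3/2) h,
    so in local units (x - c)/h the data points are -3, ..., 3.
    """
    s = np.arange(-3.0, 4.0)                  # local data points t_{k-5..k+1}
    knots = np.arange(-2.5, 3.0)              # local interior knots x_{k-6..k-1}
    e = elementary_symmetric(knots)
    theta = np.array([e[r] / math.comb(6, r) for r in range(7)])
    V = np.vander(s, 7, increasing=True).T    # V[r, i] = s_i ** r
    return s, theta, np.linalg.solve(V, theta)


s, theta, a = interior_coefficients()
print(a)   # coefficients a_0, ..., a_6 of the assumed interior functional
```

Under this assumption the coefficients come out symmetric and sum to one, as exactness on constants requires.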

It is more convenient to write the quasi-interpolant \(\mathscr {Q}_n\) in the quasi-Lagrange form

$$\mathscr{Q}_nf=\sum_{j\in\Gamma_n}f(t_j)\,L_j,\tag{5.7}$$

where the quasi-Lagrange functions \(L_j\) are linear combinations of at most seven B-splines. For example, the only coefficient functionals that use the value \(f_1\) are \(\mu_1,\mu_2,\ldots ,\mu_6\); therefore we have

For 8 ≤ k ≤ n − 5, we have

3 Quadrature Formula Associated with \(\mathscr {Q}_n\)

By integrating \(\mathscr {Q}_nf\) in the quasi-Lagrange form (5.7), we obtain, as in [9], the following quadrature formula for \(I(f)=\int_0^1f(x)\,dx\):

$$I_n(f)=h\sum_{j\in\Gamma_n}\omega_j\,f(t_j),\tag{5.8}$$

with weights \(\omega _j=\frac {1}{h}\int _0^1L_j(x)\,dx.\) Using the fact that \(\int _0^1B_j(x)\,dx=\frac {x_j - x_{j-7}}{7},\) we get

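Since \(\mathscr {Q}_n\) is exact on \(\Pi_6\), so is \(I_n\). Moreover, the nodes \((t_j)_{j\in \Gamma _n}\) and the weights are symmetric with respect to the midpoint of I, so \(I_n\) also integrates \((x-\frac{1}{2})^7\) exactly: both the integral and the quadrature sum vanish by antisymmetry. Hence the quadrature rule (5.8) is exact on \(\Pi_7\), and the error \(E_n(f)=I(f)-I_n(f)\) is \(\mathscr {O}(h^8)\) when \(f \in \mathscr {C}^8[0,1]\). Now, according to the Peano kernel theorem (see [4, Chap. 3]), we have

$$E_n(f)=\int_0^1K(t)\,f^{(8)}(t)\,dt,$$

where K(t) is the Peano kernel defined by

$$K(t)=\frac{1}{7!}\,E_n\bigl((\cdot-t)_+^7\bigr).$$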
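The weights can be computed without evaluating a single B-spline, since \(\omega_j\) only involves the coefficients of the functionals \(\mu_k\) and the integrals \(\int_0^1B_k=(x_k-x_{k-7})/7\). The sketch below does this under hypothetical choices: nodes \(t_1=0\), \(t_j=(j-\frac32)h\), \(t_{n+2}=1\) (read off from the scaled nodes \(\tau_j\) used in the proof of Theorem 5.1) and a seven-point stencil for each \(\mu_k\). It is not the authors' implementation, and it is not claimed to reproduce the boundary fractions printed in that proof. What it does illustrate is the mechanism behind exactness on \(\Pi_7\): the rule is exact on \(\Pi_6\) by construction, and the symmetry of nodes and weights supplies the extra degree.

```python
import math
import numpy as np


def elementary_symmetric(values):
    """[sigma_0, ..., sigma_m] of the given values, with sigma_0 = 1."""
    e = [1.0] + [0.0] * len(values)
    for v in values:
        for r in range(len(values), 0, -1):
            e[r] += v * e[r - 1]
    return e


def dqi_weights(n):
    """Nodes t_j and weights omega_j (j = 1..n+2) of I_n(f) = h * sum_j omega_j f(t_j).

    HYPOTHETICAL choices: t_1 = 0, t_j = (j - 3/2) h, t_{n+2} = 1, and mu_k
    uses t_1..t_7 (k <= 6), t_{k-5}..t_{k+1} (7 <= k <= n) or t_{n-4}..t_{n+2}
    (k >= n + 1). Requires n >= 7.
    """
    h = 1.0 / n
    t = np.array([0.0] + [(j - 1.5) * h for j in range(2, n + 2)] + [1.0])

    def x(i):
        # Knot x_i for i = -6..n+6: sevenfold at 0 and 1, uniform in between.
        return min(max(i * h, 0.0), 1.0)

    omega = np.zeros(n + 2)
    for k in range(1, n + 7):                    # supp B_k = [x_{k-7}, x_k]
        first = 1 if k <= 6 else (k - 5 if k <= n else n - 4)
        idx = np.arange(first - 1, first + 6)    # 0-based indices of the 7 nodes
        c = t[idx].mean()
        s = (t[idx] - c) / h                     # local abscissae
        local_knots = [(x(l) - c) / h for l in range(k - 6, k)]   # N_k, local
        e = elementary_symmetric(local_knots)
        theta = np.array([e[r] / math.comb(6, r) for r in range(7)])
        coeff = np.linalg.solve(np.vander(s, 7, increasing=True).T, theta)
        # omega_j = (1/h) * sum_k coeff_{k,j} * (x_k - x_{k-7}) / 7
        omega[idx] += coeff * (x(k) - x(k - 7)) / (7.0 * h)
    return t, omega


def quadrature(f, n):
    """I_n(f) = h * sum_j omega_j f(t_j) for a vectorised f."""
    t, omega = dqi_weights(n)
    return np.dot(omega, f(t)) / n


print(quadrature(np.exp, 32) - (np.e - 1.0))
```

With these choices the interior weights \(\omega_j\), 8 ≤ j ≤ n − 5, equal one, because each interior row contributes one of seven coefficients that sum to one.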

Theorem 5.1

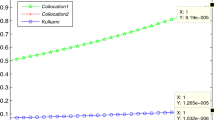

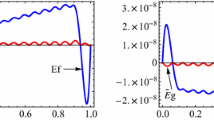

The Peano kernel K(t) is negative in the intervals \(J_1=[0,t_0]\) and \(J_3=[1-t_0,1]\) and positive in \(J_2=[t_0,1-t_0]\), with \(t_0=\tau_0h\) and \(\tau_0\approx 1.38135\) (Fig. 5.1).

Proof

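Using

$$K(t)=\frac{1}{7!}\Bigl(\frac{(1-t)^8}{8}-h\sum_{j\in\Gamma_n}\omega_j\,(t_j-t)_+^7\Bigr),$$

we see immediately that K(0) = K(1) = 0. Indeed, since \((t_j-1)_+^7=0\) for all \(j\in\Gamma_n\), we obtain K(1) = 0. On the other hand, K(0) = 0 because the polynomial \(p(x)=x^7\) belongs to \(\Pi_7\); hence it is integrated exactly by the quadrature rule \(I_n\), and therefore

$$h\sum_{j\in\Gamma_n}\omega_j\,t_j^7=\int_0^1x^7\,dx=\frac{1}{8}.$$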

It remains to study the sign of K(t), as was done for the quartic case (see [6]).

Now, setting t = τh, τ ∈ [0, n] and x = ξh, we obtain

where

We have also

which gives

Consequently, K(t) and p(τ) have the same sign. By using the symmetry of nodes and weights, it is easy to verify that p(τ) = p(n − τ). Then

Let us now study the sign of p(τ) on [0, n]. Let \(p_j\equiv p|_{[\tau _j,\tau _{j+1}]},\;j=1,\ldots ,n+1.\)

- In the interval \([\tau_1,\tau_2]=[0,\frac{1}{2}]\):
$$p_1(\tau)=\frac{\tau^7}{8}\left(\tau-\frac{808}{735}\right)\leq 0,$$
whose only root in this interval is τ = 0.

- In the interval \([\tau_2,\tau_3]=[\frac{1}{2},\frac{3}{2}]\):
$$p_2(\tau)=p_1(\tau)-\frac{267}{326}\left(\tau-\frac{1}{2}\right)^7,$$
which has the root \(\tau_0\approx\frac{1985}{1437}\approx 1.38135\) in \([\tau_2,\tau_3]\). One can check numerically that p(τ) ≤ 0 on \([\tau_2,\tau_0]\) and p(τ) ≥ 0 on \([\tau_0,\tau_3]\).

- In the interval \([\tau_3,\tau_4]=[\frac{3}{2},\frac{5}{2}]\):
$$p_3(\tau)=p_2(\tau)-\frac{996}{931}\left(\tau-\frac{3}{2}\right)^7\geq 0,$$
which has no root in \([\tau_3,\tau_4]\).

- In the interval \([\tau_4,\tau_5]=[\frac{5}{2},\frac{7}{2}]\):
$$p_4(\tau)=p_3(\tau)-\frac{339}{353}\left(\tau-\frac{5}{2}\right)^7\geq 0,$$
which has no root in \([\tau_4,\tau_5]\).

- In the interval \([\tau_5,\tau_6]=[\frac{7}{2},\frac{9}{2}]\):
$$p_5(\tau)=p_4(\tau)-\frac{402}{395}\left(\tau-\frac{7}{2}\right)^7\geq 0,$$
which has no root in \([\tau_5,\tau_6]\).

- In the interval \([\tau_6,\tau_7]=[\frac{9}{2},\frac{11}{2}]\):
$$p_6(\tau)=p_5(\tau)-\frac{1411}{1418}\left(\tau-\frac{9}{2}\right)^7\geq 0,$$
which has no root in \([\tau_6,\tau_7]\).

- In the interval \([\tau_7,\tau_8]=[\frac{11}{2},\frac{13}{2}]\):
$$p_7(\tau)=p_6(\tau)-\frac{1600}{1599}\left(\tau-\frac{11}{2}\right)^7\geq 0,$$
which has no root in \([\tau_7,\tau_8]\).

- In the interval \([\tau_8,\tau_9]=[\frac{13}{2},\frac{15}{2}]\):
$$p_8(\tau)=p_7(\tau)-\left(\tau-\frac{13}{2}\right)^7\geq 0.$$

- In the intervals \([\tau_i,\tau_{i+1}]\), \(8\leq i\leq n-6\), it can be shown by induction that \(p_i(\tau)=p_{i-1}(\tau-1)\).

- In the last seven intervals, since p(τ) = p(n − τ), we get
$$p_{n+2-j}(\tau)=p_j(n-\tau),\quad \tau\in[\tau_{n+2-j},\tau_{n+3-j}],\quad 1\leq j\leq 7,$$
so the behaviour of p there is the mirror image of its behaviour in the first seven intervals. This completes the proof.

□
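The sign pattern claimed in the proof can be spot-checked. Collecting the piecewise formulas, on [0, 15/2] they amount to \(p(\tau)=\tau^8/8-\sum_{j=1}^{8}c_j(\tau-\tau_j)_+^7\) with the printed coefficients \(c_1=101/735\) (from \(p_1\)), \(c_2=267/326\), …, \(c_8=1\), and \(\tau_1=0\), \(\tau_j=j-\frac32\). The Python sketch below locates the sign change by bisection (the helper names are ours). Because these coefficients are rounded rationals and the sum cancels heavily for larger τ, the check is deliberately limited to the first few intervals.

```python
# Coefficients c_1..c_8 as printed in the piecewise formulas p_1..p_8, and the
# scaled nodes tau_j = t_j / h = 0, 1/2, 3/2, ..., 13/2.
COEFFS = [101 / 735, 267 / 326, 996 / 931, 339 / 353,
          402 / 395, 1411 / 1418, 1600 / 1599, 1.0]
TAU = [0.0] + [j - 1.5 for j in range(2, 9)]


def p(tau):
    """tau^8/8 - sum_j c_j (tau - tau_j)_+^7, meaningful for 0 <= tau <= 15/2."""
    return tau ** 8 / 8 - sum(c * max(tau - tj, 0.0) ** 7 for c, tj in zip(COEFFS, TAU))


def bisection(g, a, b, tol=1e-12):
    """Root of g in [a, b], assuming g(a) and g(b) have opposite signs."""
    ga = g(a)
    while b - a > tol:
        m = 0.5 * (a + b)
        gm = g(m)
        if (gm > 0) == (ga > 0):
            a, ga = m, gm
        else:
            b = m
    return 0.5 * (a + b)


tau0 = bisection(p, 0.5, 1.5)
print(tau0)
```

The root found this way agrees with \(\tau_0\approx 1.38135\), and p is negative just before it and positive just after it.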

Using the above theorem, the following asymptotic error formula can be proved.

Proposition 5.1

For any function \(f\in \mathscr {C}^8 [0,1]\), there exists a point τ ∈ [0, 1] such that

where \(c_0=\frac {1107467}{3251404800}\simeq 3.41\times 10^{-4}.\)

Proof

The proof is similar to the proof of Theorem 2 in [6]. □

4 The Nyström Method

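By using the quadrature scheme (5.8) to approximate the integral in (5.1), we obtain the new equation

$$u_n(s)-h\sum_{j\in\Gamma_n}\omega_j\,\kappa(s,t_j)\,u_n(t_j)=f(s),\qquad s\in[0,1],\tag{5.10}$$

where the unknowns are \(\{u_n(t_j),\ j\in\Gamma_n\}\); they can be computed by solving the following linear system of size n + 2:

$$u_n(t_i)-h\sum_{j\in\Gamma_n}\omega_j\,\kappa(t_i,t_j)\,u_n(t_j)=f(t_i),\qquad i\in\Gamma_n.$$

From (5.10), the approximate solution \(u_n(s)\) is completely determined by its values at the nodes \((t_i)_{i\in \Gamma _n}\). Indeed,

$$u_n(s)=f(s)+h\sum_{j\in\Gamma_n}\omega_j\,\kappa(s,t_j)\,u_n(t_j),\qquad s\in[0,1].$$

Now let \(\mathscr {K}\) be the integral operator defined by

$$(\mathscr{K}u)(s)=\int_0^1\kappa(s,t)\,u(t)\,dt,\qquad s\in[0,1],$$

and let \(\mathscr {K}_n\) be the following Nyström approximation

$$(\mathscr{K}_nu)(s)=h\sum_{j\in\Gamma_n}\omega_j\,\kappa(s,t_j)\,u(t_j),\qquad s\in[0,1].$$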
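In matrix form, the linear system of size n + 2 reads (I − A)U = F with \(A_{ij}=h\,\omega_j\,\kappa(t_i,t_j)\), and \(u_n(s)\) is then recovered from the nodal values by the Nyström interpolation formula. The sketch below implements both steps for an arbitrary rule with nodes t and weights w (for (5.8) these would be \(t_j\) and \(h\omega_j\)). To keep it self-contained, a 10-point Gauss-Legendre rule from NumPy stands in for the sextic rule, and the test problem κ(s, t) = st, u(s) = s, f(s) = 2s/3 is hypothetical.

```python
import numpy as np


def nystrom(kernel, f, t, w):
    """Nyström method for u(s) - int_0^1 kappa(s, t) u(t) dt = f(s).

    t, w: nodes and weights of a quadrature rule on [0, 1].
    Returns the nodal values u_n(t_j) and the interpolant s -> u_n(s).
    """
    A = kernel(t[:, None], t[None, :]) * w[None, :]      # A[i, j] = w_j kappa(t_i, t_j)
    u_nodes = np.linalg.solve(np.eye(len(t)) - A, f(t))   # (I - A) U = F

    def u_n(s):
        s = np.atleast_1d(np.asarray(s, dtype=float))
        return f(s) + (kernel(s[:, None], t[None, :]) * w[None, :]) @ u_nodes

    return u_nodes, u_n


# Gauss-Legendre nodes/weights mapped from [-1, 1] to [0, 1] (stand-in rule).
x, wg = np.polynomial.legendre.leggauss(10)
t, w = (x + 1) / 2, wg / 2
u_nodes, u_n = nystrom(lambda s, tt: s * tt, lambda s: 2 * s / 3, t, w)
print(u_n([0.0, 0.5, 1.0]))
```

Swapping in the sextic rule only changes the arrays t and w; the solver itself is independent of the quadrature.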

The following theorem provides the basic tool for analysing the convergence of the Nyström method.

Theorem 5.2

Let κ(s, t) be a continuous kernel for s, t ∈ [0, 1]. Assume that the quadrature scheme (5.8) is convergent for all continuous functions on [0, 1]. Further, assume that the integral equation (5.1) is uniquely solvable for every \(f\in \mathscr {C}[0,1]\). Then, for all sufficiently large n, say n ≥ N, the operators \((I-\mathscr {K}_n)^{-1}\) exist and are uniformly bounded,

$$\|(I-\mathscr{K}_n)^{-1}\|\le c,\qquad n\ge N,$$

with a suitable constant c < ∞. For the equations \((I-\mathscr {K})u=f\) and \((I-\mathscr {K}_n)u_n=f,\)

$$u-u_n=(I-\mathscr{K}_n)^{-1}(\mathscr{K}-\mathscr{K}_n)u,\qquad \|u-u_n\|_\infty\le\|(I-\mathscr{K}_n)^{-1}\|\,\|(\mathscr{K}-\mathscr{K}_n)u\|_\infty,\qquad n\ge N.\tag{5.13}$$

Proof

See Atkinson [1, Theorem 4.1.2]. □

Theorem 5.3

Let u be the exact solution of (5.1). Assume that \(\kappa (s,\cdot)u(\cdot)\in \mathscr {C}^8[0,1]\) for all s ∈ [0, 1]. Then, for sufficiently large n,

$$\|u-u_n\|_\infty=\mathscr{O}(h^8).$$

Proof

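The estimate (5.13) gives \(\|u-u_n\|_\infty\le c\,\|(\mathscr {K}-\mathscr {K}_n)u\|_\infty\); conversely, \((\mathscr {K}-\mathscr {K}_n)u=(I-\mathscr {K}_n)(u-u_n)\) and \(\|I-\mathscr {K}_n\|\) is bounded uniformly in n. Hence \(\|u-u_n\|_\infty\) and \(\Vert (\mathscr {K}-\mathscr {K}_n)u\Vert _\infty \) converge to zero at the same rate. By (5.9), we have for s ∈ [0, 1] the asymptotic integration error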

Hence, from (5.13) and (5.15), the Nyström method converges with an order of \(\mathscr {O}(h^8)\), provided κ(s, t)u(t) is eight times continuously differentiable with respect to t, uniformly in s. □

An asymptotic series expansion for the Nyström solution \(u_n\) is obtained below.

Theorem 5.4

Under the assumptions of Theorem 5.3, we have

with

Proof

Since

we can write as in [1, Chap.4]

where

Using the asymptotic expansion (5.15), we get

and

where \(c(t)=c_0[(I-\mathscr {K})^{-1}\mathscr {W}u](t)\) and \(\tau_n\in[0,1]\). Letting \(S_n\) be the solution of the equation

we deduce that

where S satisfies

Taking into account that \(S-S_n\simeq e_n\), we finally obtain

This completes the proof. □

One step of Richardson extrapolation can be used to further improve the order of convergence of \(u_n\). Let \(u_{2n}\) be the approximate solution associated with a uniform partition of [0, 1] into 2n subintervals, with mesh length \(\frac {h}{2}.\) Define

$$u^R_{2n}=\frac{2^8\,u_{2n}-u_n}{2^8-1}.$$
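The combination \((2^8u_{2n}-u_n)/(2^8-1)\) is the standard one-step Richardson formula for an error whose leading term is proportional to \(h^8\): the \(h^8\) terms of \(u_n\) and \(u_{2n}\) cancel exactly. A minimal sketch, applied here to a synthetic sequence \(a(h)=1+3h^8+5h^9\) rather than to actual Nyström solutions, shows the \(h^8\) term disappearing and an \(\mathscr{O}(h^9)\) remainder left over. The helper names are ours.

```python
import numpy as np


def richardson(u_n, u_2n, p=8):
    """One Richardson step for approximations with error c h^p + O(h^(p+1)):
    returns (2^p u_{2n} - u_n) / (2^p - 1), elementwise for arrays."""
    return (2.0 ** p * np.asarray(u_2n) - np.asarray(u_n)) / (2.0 ** p - 1.0)


def a(h):
    """Hypothetical sequence with exact limit 1 and error 3 h^8 + 5 h^9."""
    return 1.0 + 3.0 * h ** 8 + 5.0 * h ** 9


h = 0.1
extrapolated = richardson(a(h), a(h / 2))
print(a(h) - 1.0, extrapolated - 1.0)
```

For Nyström solutions, \(u_n\) and \(u_{2n}\) must be evaluated at the same points s, for instance through the Nyström interpolation formula, before they are combined.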

Theorem 5.5

If \(\kappa (s,\cdot)u(\cdot)\in \mathscr {C}^8[0,1]\) for all s ∈ [0, 1], then we have

Proof

From Theorem 5.4 we obtain

5 Numerical Results

Example 1

Consider the following linear Fredholm integral equation of the second kind

where the exact solution is u(s) = s and f is chosen accordingly. The errors

were approximated respectively by

and

Using two successive values of n, the values of α and β are computed and are listed in Table 5.1.

Table 5.1 shows that the computed orders of convergence agree well with the expected values.
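The text does not spell out how α and β are computed; the usual estimate from the errors \(E_n\) and \(E_{2n}\) on two successive meshes is \(\log(E_n/E_{2n})/\log 2\). A small helper implementing that standard estimate, checked here on synthetic errors that behave exactly like \(Ch^8\), could look like this (the function name is ours):

```python
import math


def observed_orders(ns, errors):
    """Estimated orders log(E_{n_i} / E_{n_{i+1}}) / log(n_{i+1} / n_i)
    from errors measured on successive meshes (e.g. n and 2n)."""
    return [math.log(errors[i] / errors[i + 1]) / math.log(ns[i + 1] / ns[i])
            for i in range(len(ns) - 1)]


# Synthetic errors behaving exactly like C h^8 (hypothetical data).
ns = [8, 16, 32, 64]
errs = [2.0 * (1.0 / n) ** 8 for n in ns]
print(observed_orders(ns, errs))
```

Dividing by \(\log(n_{i+1}/n_i)\) instead of log 2 keeps the helper valid when the mesh is not exactly halved.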

Example 2

Consider the following Fredholm integral equation quoted from [6]

where f is chosen so that \(u(s) = \cos {}(s)\). The results are given in Table 5.2.

We denote by \(u^Q_n\) and \(u^{QB}_n\) the approximate solutions given, respectively, by the Nyström method based on the integration of a quartic spline quasi-interpolant and by the Nyström method associated with the extrapolated quadrature formula \(I^{QB}\) of [6]. The errors

which are listed in Table 5.3, are quoted from [6]. Note that the predicted values of γ and δ are 6 and 7, respectively. The numerical algorithm was run on a PC with an Intel Core i5 1.60 GHz CPU and 8 GB RAM, and the programs were implemented in Wolfram Mathematica.

Tables 5.2 and 5.3 show that the approximation \(u^R_{2n}\) with n = 32 is more accurate than the approximation \(u^{QB}_n\) with n = 128.

6 Conclusion

The results displayed in Table 5.1 show that very high accuracy is obtained even for a kernel that is only continuous with respect to the variable s. Moreover, the method performs significantly better than the quadrature rules of [6], because its order of convergence is higher. Note that the corresponding linear system has size n + 2; it can be shown that solving the present problem by a piecewise polynomial interpolation scheme with accuracy of comparable order would require a linear system of size at least 4n.

References

[1] Atkinson, K.E.: The Numerical Solution of Integral Equations of the Second Kind. Cambridge University Press, Cambridge (1997)

[2] Atkinson, K.E., Han, W.: Theoretical Numerical Analysis, 2nd edn. Springer, Berlin (2005)

[3] de Boor, C.: A Practical Guide to Splines. Springer, Berlin (1978)

[4] Engels, H.: Numerical Quadrature and Cubature. Academic, New York (1980)

[5] Nyström, E.: Über die praktische Auflösung von Integralgleichungen mit Anwendungen auf Randwertaufgaben. Acta Math. 54, 185–204 (1930)

[6] Sablonnière, P., Sbibih, D., Tahrichi, M.: Error estimate and extrapolation of a quadrature formula derived from a quartic spline quasi-interpolant. BIT Numer. Math. 50, 843–862 (2010)

[7] Sablonnière, P., Sbibih, D., Tahrichi, M.: High-order quadrature rules based on spline quasi-interpolants and application to integral equations. Appl. Numer. Math. 62, 507–520 (2012)

[8] Sablonnière, P.: Spline quasi-interpolants and applications to numerical analysis. Rend. Sem. Univ. Pol. Torino 63(2), 211–222 (2005)

[9] Sablonnière, P.: A quadrature formula associated with a univariate quadratic spline quasi-interpolant. BIT Numer. Math. 47, 825–837 (2007)

[10] Schumaker, L.L.: Spline Functions: Basic Theory. Wiley, New York (1973)


Copyright information

© 2022 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper

Allouch, C., Hamzaoui, I., Sbibih, D. (2022). Richardson Extrapolation of Nyström Method Associated with a Sextic Spline Quasi-Interpolant. In: Barrera, D., Remogna, S., Sbibih, D. (eds) Mathematical and Computational Methods for Modelling, Approximation and Simulation. SEMA SIMAI Springer Series, vol 29. Springer, Cham. https://doi.org/10.1007/978-3-030-94339-4_5


DOI: https://doi.org/10.1007/978-3-030-94339-4_5


Publisher Name: Springer, Cham

Print ISBN: 978-3-030-94338-7

Online ISBN: 978-3-030-94339-4

eBook Packages: Mathematics and Statistics, Mathematics and Statistics (R0)