Abstract

In this paper, we present recent solutions to the problem of approximating functions by polynomials for reducing in a substantial manner two well-known phenomena: Runge and Gibbs. The main idea is to avoid resampling the function or data and relies on the mapped polynomials or “fake” nodes approach. This technique turns out to be effective for stability by reducing the Runge effect and also in the presence of discontinuities by almost cancelling the Gibbs phenomenon. The technique is very general and can be applied to any approximant of the underlying function to be reconstructed: polynomials, rational functions or any other basis. A “natural” application is then quadrature, that we started recently to investigate and we propose here some results. In the case of jumps or discontinuities, where the Gibbs phenomenon appears, we propose a different approach inspired by approximating functions by kernels, in particular Radial Basis Functions (RBF). We use the so called Variably Scaled Discontinuous Kernels (VSDK) as an extension of the Variably Scaled Kernels (VSK) firstly introduced in Bozzini et al. (IMA J Numer Anal 35:199–219, 2015). VSDK show to be a very flexible tool suitable to substantially reducing the Gibbs phenomenon in reconstructing functions with jumps. As an interesting application we apply VSDK in Magnetic Particle Imaging which is a recent non-invasive tomographic technique that detects super-paramagnetic nanoparticle tracers and finds applications in diagnostic imaging and material science. In fact, the image generated by the MPI scanners are usually discontinuous and sampled at scattered data points, making the reconstruction problem affected by the Gibbs phenomenon. We show that VSDK are well suited in MPI image reconstruction also for identifying image discontinuities.

Access provided by Autonomous University of Puebla. Download conference paper PDF

Similar content being viewed by others

Keywords

1 Introduction

Univariate approximation of functions and data is a well studied topic since the antiquity (Babylon and Greece), with many different developments by the Arabs and Persians in pre and late medieval period. The scientific revolution in astronomy, mainly due to Copernicus, Kepler and Galileo was the starting point for the investigations done later on by Newton, who gave strong impetus to the further advancement of mathematics, including what is now called “classical” interpolation theory (interested people on these historical aspects may read the nice survey by E. Meijering [50]).

Interpolation is essentially a way of estimating a given function \(f: [a,b] \subset \mathbb {R} \rightarrow \mathbb {R}\) known only at a finite set X n = {x i, i = 0, …, n} of n + 1 (distinct) points, called interpolation points The corresponding set of values is denoted by Y n = {y i = f(x i), i = 0, …, n}. Then, we are looking for a polynomial \(p \in \mathbb {P}_n\), with \(\mathbb {P}_n\) the linear space of polynomials of degree less and equal than n. The space \(\mathbb {P}_n\) has finite dimension n + 1 and spanned by the monomial basis M = {1, x, x 2, …, x n}. Therefore, every \(p \in \mathbb {P}_n\) is written as

The coefficients a i are determined by solving the linear system p(x i) = y i, i = 0, …, n. Introducing the Vandermonde matrix \(V_{ij}=(x_i^{j})\), the coefficient vector a = (a 0, …, a n)t and the vector y = (y 0, …, y n)t, the linear system can compactly be written as

As well-known, the solution of the system is guaranteed if the points are distinct, making V invertible [21, §2]. Moreover, the interpolating polynomial (1.1) can be expressed at any point x ∈ [a, b] by the discrete inner product p = 〈a, x〉.

Instead of the monomial basis, we can use the cardinal basis of elementary Lagrange polynomials. That is L = {ℓ i, i = 0, …, n} where \(\displaystyle l_i(x)=\prod _{j=0, j\ne i} {x-x_j \over x_i-x_j}\) or, alternatively by the ratio

where, V i(x) is the Vandermonde matrix in which we have substituted the i-th column with the vector x = (1, x, x 2, …, x n)T. The formula (1.3) is essentially the Cramer’s rule applied to the system

showing immediately the main property of the elementary Lagrange polynomials: they form a set of cardinal polynomial functions, that is ℓ i(x j) = δ ij. Using the Lagrange basis the interpolant becomes p = 〈l, y〉. Therefore, by unicity of interpolation we get

Hence, in the Lagrange basis ℓ the vector of coefficients a is at once y, so that in (1.2) V is substituted by the identity matrix I of order n + 1.

There is another interesting formula that we can used to express the interpolant p. As pointed out in [20, §3 Prob. 14], the interpolant at every point x can be written in determinantal form as follows

This expresses the interpolant as the determinant of an (n + 2) × (n + 2) matrix, obtained by bordering the Vandermonde matrix with two vectors y, x T and the scalar 0. The constant C appearing is (1.4) is a normalizing factor for expressing the interpolant in Lagrange form, that is \(C=-1/\det (V)\).

This formula can be specialized for any set of linear independent functions, say {g 0, …, g n} (cf. [20, §3, Prob. 15]) and in particular for the Lagrange basis L obtaining

with I the identity matrix of order n + 1.

Historically, but also nowadays in different frameworks and applications, the simplest way to take distinct samples x i, is to consider equally spaced points (assuming for simplicity x 0 = a and x n = b). Two well-known phenomena are related to this choice of the interpolation points. The first one is the Runge phenomenon: when using polynomial interpolation with polynomials of high degree, the polynomial shows high oscillations at the edges of the interval. It was discovered by Carl Runge when exploring the behavior of errors when using polynomial interpolation to approximate also analytic functions (cf. [56]). It is related to the stability of the interpolation process via the Lebesgue constant

by means of the inequality

where p ∗ represents the polynomial of best uniform approximation, that is \(p^*:=\inf _{p \in \mathbb {P}_n} \|f-p\|{ }_\infty \), which uniquely exists. About the growth of the Lebesgue constant and its relevant properties we invite the readers to refer to the great survey by L. Brutman [19]. Here we simply recall the fundamental fact that Λn, which depends only on the choice of the node set X, grows exponentially (with n) when the interpolation points are equally spaced. Therefore it will be fundamental to look for a different choice than the equal distribution. As well-known, this is what do the Chebyshev points in [a, b] or the zeros of orthogonal polynomials with respect to the interval [a, b] and a given weight function (cf. e.g. [17, 19, 21]).

The second phenomenon and related to the Runge phenomenon is the Gibbs phenomenon, which tells us the peculiar manner in which the Fourier series of a piecewise continuously differentiable periodic function behaves at a jump discontinuity. This effect was originally discovered by Henry Wilbraham (1848) and successively rediscovered by J. Willard Gibbs (1899) (see [42]). The phenomenon observed that the n-th partial sum of the Fourier series has large oscillations near the jump, which might increase the maximum of the partial sum above that of the function itself. The overshoot does not die out as n increases, but approaches a finite limit. The Gibbs phenomenon is the cause of unpleasant artifacts in signal and image processing in presence of discontinuities.

The Gibbs phenomenon is also a well-known issue in higher dimensions and for other basis systems like wavelets or splines (cf. e.g. [44] for a general overview) and also in barycentric rational approximation [51]. Further, it appears also in the context of Radial Basis Function (RBF) interpolation [36] and in subdivision schemes (cf. [2, 3]). To soften the effects of this artifact, additional smoothing filters are usually applied to the interpolant. For radial basis function methods, one can for instance use linear RBFs in regions around discontinuities [25, 45]. Furthermore, post-processing techniques, such as Gegenbauer reconstruction procedure [40] or digital total variation [57], are available. This technique can be also applied in the non-polynomial setting by means of discontinuous kernels.

This survey paper consists of 6 main sections and various subsections as follows. In Sect. 1.2 we introduce our main idea for mitigating the Runge and Gibbs effects based on the mapped-basis approach or its equivalent form that we have termed “fake-nodes”. In the successive Sect. 1.3 we present the algorithms for choosing a suitable map in the two instances studied in the paper. In Sect. 1.4 we show that the technique can be applied also using rational approximation instead of polynomial, while in Sect. 1.5 we discuss the application to quadrature. We continue then with Sect. 1.6 in which, for treating the Gibbs phenomenon we use a non-polynomial approach based on discontinuous kernels, in particular the so-called VSDK. Finally in Sect. 1.7 we discuss the application of these VSDK to the Magnetic Particle Imaging, a new quantitative imaging method for medical applications. We conclude with Sect. 1.8 by summarizing the main ideas and highlighting some further developments.

2 Mitigating the Runge and Gibbs Phenomena

Let \([a,b] \subset \mathbb {R}\) be an interval, X the set of distinct nodes (also called data sites) and \(f: \Omega \longrightarrow \mathbb {R}\) a given function sampled at the nodes with F n = {f i = f(x i)}i=0,…,n.

We now introduce a method that changes the interpolation problem (1.2) without resampling the function f. The idea rely on the so-called mapped basis approach where the map is used to mitigate the oscillations in the Runge and Gibbs phenomena. The idea of mapped polynomials is not new. Indeed, such kinds of methods have been used in the context of spectral schemes for PDEs.

For examples of well-known maps refer to e.g. [1, 41, 49]. However, for our purposes, that are devoted especially to applications when resampling cannot be performed, we consider a generic map \(S:\,[a,b] \longrightarrow \mathbb {R}\). We investigate the conditions which this map S should satisfy in Sect. 1.2.1.

Let \(\hat {x} \in \hat {S}:=S([a,b])\) we can compute the polynomial \(P_{g}: \hat {S} \longrightarrow \mathbb {R}\) interpolating the function values F n at the “fake” nodes \(S_X=\{S(x_i)=\hat {x}_i\}_{i = 0, \ldots , n}\subset \hat {S}\) defined by

for some smooth function \(g: \hat {S}\longrightarrow \mathbb {R}\) such that

Hence, for x ∈ [a, b] we are interested in studying the function

The function \(R^S_{f}\) in (1.8) can be considered as an interpolating function at the original set of nodes X n and data values F n, which is a linear combination of the basis functions \(\mathcal {S}_{n} \mathrel{\mathop:}= \{S(x)^i, \; i=0,\dots ,n\}\). As far as we know, a similar approach has been mentioned in [5], without being later worked out.

The analysis of this interpolation process can be performed in the following equivalent ways.

-

The mapped bases approach on [a, b]: interpolate f on the set X n via \(R^S_{f}\) in the function space \(\mathcal {S}_{n}\).

-

The “fake” nodes approach on \(\hat {S}\): we interpolate g on the set S X via P g in the polynomial space Πn.

2.1 The Mapped Bases Approach

The first question to answer is: how arbitrary is the choice of the map S?

Definition 1.1

We term S admissible if the resulting interpolation process admits a unique solution, that is the Vandermonde-like matrix V S:= V (S 0, …, S n), is invertible.

Since the new point set in the mapped space is S X, then

A consequence of this observation is the following proposition.

Proposition 1.1

The map S is admissible if and only if for any 0 ≤ i, j ≤ n, i ≠ j we have S i ≠ S j. In other words, S is injective in [a, b].

In fact, det(V S)≠0 if and only if S j − S i ≠ 0.

Remark 1.1

We point out that we can easily write

where V is the classical Vandermonde matrix and

This fact presents some similarities with the so-called generalized Vandermonde determinants that can be factored as the classical Vandermonde determinant and a Schur function, as outlined for instance in the paper [22].

We can associate to the interpolant \(R^S_{f}\) also its Lagrange form

with, in analogy with (1.3),

where \(V^S_i(x)=V_i(S(x))\).

Consequently, we can consider the S-Lebesgue constant \(\Lambda ^S_n\) associated to the mapped Lagrange basis \(\mathcal {L}^S=\{\ell ^S_0,\dots ,\ell ^S_n\}\) and to the interpolation operator \(\mathcal {L}^S_n: \Upsilon \longrightarrow \mathcal {S}_n\) with \((\Upsilon ,\lVert \cdot \lVert _{\Omega }\)) be a normed function space, which contains only real-valued functions on Ω = [a, b]. Then, \(\Lambda ^S_n\) is the operator norm of \(\mathcal {L}^S_n\) with respect to \(\lVert \cdot \lVert _{\Omega }\), that is

and hence we can provide the sup-norm error estimator as follows:

where \(E^{S,\star }_n(f)\) is the best polynomial approximation error in the sup-norm [53, Theorem I.1, p. 1].

We have just seen that ℓ i and \(\ell ^S_i\) are defined as in (1.3) and (1.9), respectively. The next Proposition proposes a rough upper bound for the S-Lebesgue constant, useful for understanding the asymptotic behaviour of the error.

Proposition 1.2

For all x ∈ [a.b], x ≠ x j , we have

with \(\displaystyle \alpha _i\mathrel{\mathop:}= \prod _{\substack {0\le j\le n \\ j\neq i}}{\frac {S_i-S_j}{x_i-x_j}},\quad \beta _i(x)\mathrel{\mathop:}= \prod _{\substack {0\le j\le n \\ j\neq i}}{\frac {S(x)-S_j}{x-x_j}}. \)

Proof

By construction \(\ell ^S_i(x_j)=\ell _i(x_j)=\delta _{i,j}\). Then, let x ≠ x j, from (1.3) and (1.9) we have that

We can also write

By defining

we get formula (1.10) as claimed. □

As a consequence, we can bound \(\Lambda _n^S\) from above by Λn unless a constant C(S, n) depending on the map S and n.

Theorem 1.1

Letting

then

where C = (L∕D)n with Λ n the classical Lebesgue constant in (1.6).

Proof

Using Proposition 1.2

An upper bound for |β i| is

where

Thus,

A lower bound for |α i| is

where

We then have

Therefore, letting L: =maxi≠j L i, D: =mini D i and considering the sum of the Lagrange polynomials, we obtain

We conclude by setting C(S, n) = (L∕D)n. □

2.2 The “Fake” Nodes Approach

The construction of the interpolating function \(R_{f}^S\) is equivalent to building a polynomial interpolant at the “fake” nodes, as defined in (1.8). Therefore, in what follows we concisely analyze the parallelism with the polynomial interpolation problem in \(\hat {S}\).

If ℓ i is the i-th Lagrange polynomial related to the set S X, then for \(\hat {x} \in \hat {S}\), we have

and the Lebesgue constant is given by

For x ∈ Ω, we observe that

As a consequence, we obtain

and

which in particular implies that

Since we can suppose without loss of generality that g is a regular function, for an appropriate choice of the map S, and hence of the nodes S X, we might improve the results with respect to classical polynomial approximation in [a, b]. The main difficulties are in finding a good map. In the next section we thus propose two receipts for computing suitable maps that, as numerically shown later, enable us to naturally mitigate both Runge and Gibbs phenomena.

3 Choosing the Map S: Two Algorithms

In this Section, we describe how, given an ordered set of interpolation nodes X n = {x i ∈ [a, b] | x 0 = a, x n = b, x i < x i+1}, we can effectively construct suitable maps S. We present two different ways of constructing the map S and in doing so we deal with the Runge and Gibbs phenomenon, respectively.

Treating the Runge Phenomenon

In order to prevent the appearance of the Runge phenomenon, we construct a map S such that the resulting set of “fake” nodes S X guarantees a stable interpolation process. The natural way is mapping X n to the set of ordered Chebyshev-Lobatto (CL) nodes C n = {c 0, …, c n} on [a, b].

Indeed, as well-known the Lebesgue constant of the CL nodes grows logarithmically with respect to n [19]. The map S on [a, b] that does this transformation, for any x ∈ [a, b], is the piecewise interpolant as obtained by the following algorithm that we term S-Runge.

Algorithm 1

Input: X n.

-

1.

Define the set of CL nodes C n.

-

2.

For x ∈ [x i, x i+1], i = 0, …, n − 1, set \( \beta _{1,i}=\frac {c_{i+1}-c_i}{x_{i+1}-x_i}, \quad \beta _{2,i}=c_i \) so that S(x) = β 1,i(x − x i) + β 2,i.

Outputs: the vectors β 1, β 2 of the coefficients that define S.

Since the CL nodes are distinct, the map is admissible by construction. For instance, if X n = {x k = a + k ⋅ (b − a)∕n, k = 0, …, n} is the ordered set of n + 1 equispaced nodes in [a, b], it can be analytically mapped to C n by using the map

This algorithm, is robust and does not require any additional hypothesis on X n and it could work for scattered data or on random, nearly equispaced or well-spaced interpolation nodes (for the definition of well-spaced we refer to [15]). These algorithms are also quoted in Wikipedia at [61].

Treating the Gibbs Phenomenon

Let us suppose that the underlying function f presents jump discontinuities, whose positions and magnitudes are encoded in the set

We assume to know the discontinuities and the related jumps. Such assumption is not restrictive. Indeed for the one dimensional case, but also in higher dimensions, algorithms for detecting the discontinuity points are available, see for instance the references [4, 54].

Near a discontinuity, the interpolant is forced to strongly vary making the Gibbs phenomenon more evident. The usual approach is to put more points nearby the jumps or use a so-called filter in order to get more accurate solution than rough Fourier expansions (cf e.g. [25]) or acceleration techniques of the Fourier expansions based on the 𝜖-algorithm (see e.g. [6]).

Our strategy is very simple: we sufficiently increase the gap between the node right before and the one right after the discontinuity, so allowing the interpolant to become smoother. To accomplish this, we introduce a shifting parameter k > 0.

The next algorithm, that we call S-Gibbs implements this idea.

Algorithm 2

As experimentally observed the choice of k is not critical. It suffices that in the mapped space the so-constructed function g has no steep gradients and this can be obtained by taking k ≫ 1. Since the resulting “fake” nodes S X are distinct, the so constructed map is admissible.

3.1 Numerical Tests

We show via the algorithms described in the previous Sect. 1.3 that we are able to substantially reduce the oscillating effects due to Runge and Gibbs phenomena. Our “fake” nodes approach is compared with the resampling at Chebyshev nodes. We note, that in many applications we unfortunately dispose of the data at equispaced samples. It is the reason why our approach becomes relevant for the underlying idea: mapping without resampling.

We consider the interval [−5, 5] and both equispaced and randomly distributed interpolation nodes. Moreover, we evaluate the interpolants on a set of equispaced points \({\Xi }=\{ \bar {x}_i, i=0,\ldots ,330 \}\) and compute the Relative Maximum Absolute Error (RMAE)

The experiments have been carried out in Python 3.6 using Numpy 1.15; see [30].

3.1.1 Application to Runge Phenomenon

For this test we consider the function \(f_1(x) = \frac {1}{\mathtt {e}^{-3x}+1}\,\) sampled at equispaced interpolation nodes E n. We then compare f 1 evaluated at Ξ with respect to

-

i.

the interpolating polynomial at equispaced points E n, i.e. the original data set and function values;

-

ii.

the interpolating polynomial at CL nodes C n and resampled function values f 1(C n), i.e. we resample the function;

-

iii.

the approximant built upon a polynomial interpolant at the fake nodes S(E n) corresponding to the CL, C n obtained by the map (1.13), and function values f 1(E n).

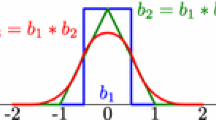

In Fig. 1.1, we show different reconstructions of f 1 for a fixed number of nodes. In Fig. 1.2 we show the RMAE for the function f 1 varying the number of nodes while in Fig. 1.3 we plot the Lebesgue functions related to the proposed methods. As pointed out in the theoretical analysis, the behavior of the “fake” nodes in terms of Lebesgue constant is analogous to that of the classical polynomial interpolation at CL points.

For more experiments and details the reader can refer to [27].

3.1.2 Applications to Gibbs Phenomenon

For the test, we consider the function with jump discontinuities at the origin

As done in the previous Sect. 1.3.1.1, we compare the interpolant in the three different situations indicated in the gray box.

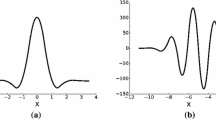

In Fig. 1.4 we display the results obtained using 20 interpolation points. We observe that the Gibbs phenomenon affects the reconstruction obtained via resampling on CL nodes, while it is well mitigated if using the fake nodes. In Fig. 1.5, we provide the RMAE behavior of these methods. The results are quite impressive, meaning that we are able to effectively reduce the Gibbs phenomenon by the S-Gibbs map of Algorithm 2.

Remarks In the presence of discontinuities, it is interesting noticing the behaviour of the elementary Lagrange functions under the mapping approach. In Fig. 1.6 we show this effect for the cubic case when there is no jump and jump, with and without scaling. What is interesting to see is that the cardinal functions become discontinuous in the presence of a discontinuities or jumps. This is the idea that we will develop in Sect. 1.6, about the use of discontinuous kernels when treating discontinuous functions.

4 Application to Rational Approximation

We can extend the mapped bases or “fake” nodes approach to the barycentric rational interpolation. In particular we concentrate to the family of Floater-Hormann (FH) rational approximants [35] combined with AAA-algorithm. We consider the family of FH interpolants because they have shown good approximation properties for smooth functions, in particular using equidistant nodes (cf. [14]). The idea comes from the fact that our previous construction is a general purpose machinery, a kind of “black-box”. Indeed, given a basis \(\mathcal {B}\) for the approximation space, we can apply the S-map approach getting a new basis, say \(\tilde {\mathcal {B}}=S \circ \mathcal {B}\). Considering that we are not resampling the values of the reconstructed function remains unchanged.

In [51], the AAA-algorithm has been discussed. This is a greedy algorithm to compute a barycentric rational approximant that is named by the authors Adaptive Antoulas–Anderson algorithm, reminding the names of the authors. This algorithm leads to impressively well-conditioned bases, which can be used in different fields, such as in computing conformal maps, or in rational minimax approximations (see e.g. [10]).

Unfortunately, both FH rational interpolants and those obtained by the AAA-algorithm, suffer of the Gibbs phenomenon when the underlying function presents jump discontinuities. For this reason we try to apply the S-Gibbs algorithm to mitigate or even better to reduce, this unpleasant effect.

We start by presenting a short overview of barycentric polynomial approximation and the FH family of rational approximants. Then we recall the main ideas about the AAA-algorithm and present the application of the S-Gibbs to both.

The numerical tests below will show an accurate interpolation of discontinuous functions.

4.1 Barycentric Polynomial Interpolation

As before let X n: = {x i: i = 0, …, n} the set of n + 1 distinct nodes in \(I=[a, \,b]\subset \mathbb {R}\), increasingly ordered and let \(f:I\longrightarrow \mathbb {R}\) be known at the sample points. Again we set F n: = {f i = f(x i): i = 0, …, n}.

It is well-known (see e.g. [7,8,9]) that it is possible to write the unique interpolating polynomial P n;f of degree at most n of f at X n for any x ∈ I in the second barycentric form

where \(\lambda _i=\displaystyle \prod _{j\neq i} \frac {1}{x_i-x_j}\) are called weights and this formula is one of the most stable way of evaluating P n;f (see [43]). If the weights λ i are changed to other nonzero weights, say w i, then the corresponding barycentric rational function

still satisfies the interpolation conditions R n;f(x i) = f i, i = 0, …, n.

4.2 Floater-Hormann Rational Interpolation

Let \(n \in \mathbb {N}\), d ∈{0, …, n}. Let p i, i = 0, …, n − d denote the unique polynomial interpolant of degree at most d interpolating the d + 1 points (x k, f k), k = i, …, i+d. The Floater-Hormann rational interpolant is

which interpolates f at the set of nodes X n. It has been proved in [35] that R n,d;f has no real poles and that it reduces to the unique interpolating polynomial of degree at most n when d = n.

One can derive the barycentric form of this family of interpolants as well. Indeed, with considering the sets J i = {k ∈{0, 1, …, n − d} : i − d ≤ k ≤ i}, one has

4.3 The AAA Algorithm

Let us consider a set of points X N with a large value of N (which represents the discretization of our domain) and a function \(f: I \longrightarrow \mathbb {R}\). The AAA-algorithm (cf. [51]) is a greedy technique that at the step m ≥ 0 considers the set X (m) = X N ∖{x 0, …, x m} and constructs the interpolant

by solving the discrete least squares problem for the weight vector w = (w 0, …, w m)

where \(\|{\cdot }_{X^{(m)}\|}\) is the discrete 2-norm over X (m). The next data site is x m+1 ∈ X (m) that makes the residual |f(x) − n(x)∕d(x)| maximum with respect to x ∈ X (m). This choice confirms the greedy nature of the process.

4.4 Mapped Bases in Barycentric Rational Interpolation

Using the ideas of mapped based approach in [27], we apply it to the Floater-Hormann interpolants and to its approximants produced via the AAA algorithm.

First, since the interpolant R n;f defined in (1.16) can be written using the cardinal basis, that is \(R_{n;f}(x)=\sum _{j=0}^n{f_jb_j(x)}\), where

is the j-th basis function, in the same spirit, we can write \(R^S_{n;f}\) in the mapped cardinal basis form \(R_{n;f}^S(x)=\sum _{i=0}^n{f_ib^S_i(x)}\), where \(b^S_j(x)= \frac { \frac {w_j}{S(x)-S(x_j)}}{\sum _{i=0}^n \frac {w_i}{S(x)-S(x_i)}}\) is the j-th mapped basis function.

Using the S mapping approach, a more stable interpolant may arise. Indeed, the following result provides an upper bound for the S-Lebesgue constant that shows this improved stability.

Theorem 1.2

Let \(\displaystyle \Lambda _n(X_n) = \max _{x\in I}{\sum _{j=0}^n{|b_j(x)|}}\) and \(\displaystyle \Lambda _n^S(X_n)\mathrel{\mathop:}= \max _{x\in I}{\sum _{j=0}^n{|b^S_j(x)|}}\) be the classical and the S-Lebesgue constants, respectively. We have

where \(C=\displaystyle \frac {\max _k M^k}{\min _k m_k}\) with

Proof

As done in the polynomial case, we should bound each basis function \(b_j^S\) in terms of b j for all x ∈ I. For details see [10]. □

Equivalently to the above mapped basis instance, we may construct the interpolant \(R_{n;f}^S\) via the “fake” nodes approach just following the same ideas developed in Sect. 1.4.

Let \(\tilde {R}_{n;g}\) be the barycentric rational interpolant as in (1.16) that interpolates, at the set of “fake “nodes S(X n), where the function \(g: S(I)\longrightarrow \mathbb {R}\) interpolates the values F n

Observe that \(R^S_{n;f}(x)=\tilde {R}_{n;g}(S(x))\) for every x ∈ I. Hence, we may also build \(R^S_{n;f}]\) upon a standard barycentric interpolation process, thereby providing a more intuitive interpretation of the method.

As we already observed, the choice of the mapping S is crucial for the accuracy interpolation process. Here, we confine our attention to the case in which f presents jump discontinuities and by using the S-Gibbs Algorithm (SGA), presented above, we construct an effective mapping S.

4.5 A Numerical Example

In I = [−5, 5] we consider the discontinuous functions

We test the “fake” nodes approach with the S-Gibbs Algorithm in the framework of FH interpolants and the AAA algorithm for approximation. We fix k = 10 in the S-Gibbs. As observed in [27], also in this setting the choice of the shifting parameter is non-critical as long as it is taken “sufficiently large”. We evaluate the constructed interpolants on a set of 5000 equispaced evaluation points \(\Xi =\{\bar {x}_i= -5+\frac {i}{1000}\::\:i=0,\dots ,5000\}\) and compute the Relative Maximum Absolute Error RMAE as defined in (1.14, both for R n;f and \(R_{n;f}^S\).

4.5.1 The FH Interpolants

Here, we take various sets of equispaced nodes \(\mathcal {X}_n=\{-5+\frac {5i}{n}\::\:i=0,\dots ,n\}\), varying the size of n. The results of the RMSE interpolation errors are displayed in Figs. 1.7 and 1.8, by doubling the number of the nodes for n = 40 up to 2560. We simply observe that the proposed reconstruction via the “fake” nodes approach outperforms the standard technique.

4.5.2 The AAA Algorithm

As the starting set for the AAA algorithm, we consider 10, 000 nodes randomly uniformly distributed in I, which we denote by \(\mathcal {X}_{rand}\).

Looking at Table 1.1, we observe that using the AAA algorithm with starting set S(X rand) (indicated in the Table as AAAS), that is, constructing the approximants via the fake nodes approach, does not suffer from the effects of the Gibbs phenomenon. For both approximants we fix the maximum degree to 20 and to 40 (by default 100 in the algorithm) getting rational approximants of type (20, 20) and (40, 40), respectively.

Remarks This extension of the “fake” nodes approach to barycentric rational approximation, in particular to the family of FH interpolants, and its approximations by the AAA algorithm for the treatment of the Gibbs phenomenon via the S-Gibbs algorithm, shows that the proposed reconstructions outperform their classical versions, by erasing distortions and oscillations.

5 Application to Quadrature

We now drive our attention towards the use of mapped bases as an alternative to standard quadrature rules as started to discuss in the ongoing paper [29].

Given \(f:I=[a,b] \rightarrow \mathbb {R}\), we are interested in approximating

via quadrature formulae of interpolation type. To this aim we take a set of distinct (quadrature) nodes X n = {x i, i = 0, …, n} by assuming x 0 = a, x n = b and n ≥ 1.

The classical quadrature of interpolation type substitutes f by its interpolant \(P_{n,f}(\cdot )=\sum _{i=0}^n a_i\, b_i(\cdot )\), with b i the i-th basis element of the polynomial space \(\mathbb {P}_n\) (note that the most simple basis one can consider is the monomials one, that is b i = x k) so that

The vector f contains the function values f k = f(x k) while the vector of the weights \(\boldsymbol {w}=(w_0,\ldots ,w_n)^{\intercal }\) is computed by solving the moments’ system

where V is the Vandermonde matrix so that V

i,j = b

i(x

j), the vector \(\boldsymbol {m}=(m_0,\ldots ,m_n)^{\intercal }\) consists of the moments  .

.

Remark The quadrature (1.17) is a scalar product that can be computed as the determinant of formula (1.5) substituting the elementary Lagrange polynomials with the weights and changing sing

5.1 Quadrature via “Fake” Nodes

In the mapped bases approach [27] instead of P n;f we consider the mapped interpolant \(P^S_{n;f}\). It comes then “natural” to construct the quadrature formulae in the mapped basis, that is

The weights \(\boldsymbol {w}^S=(w^S_0,\ldots ,w^S_n)^{\intercal }\) are computed by solving the mapped-based system

with m

S the vector of S-mapped moments. For instance, taking the monomial basis, the i-th S-moment is  .

.

Equivalently, for x ∈ I, the interpolant \(P^S_{n;f}\) can be seen as a standard polynomial interpolant P n;g on the mapped set of nodes \(S(I)=\hat {I}\). Moreover, if the map S is at least C 1 in I, letting t = S(x), for x ∈ I, we get

Thus,

It then follows

Proposition 1.3

\(w^S_i = \mathcal {I}(l_i\hat {S},\hat {I}).\)

Proof

Considering the Lagrange basis of the polynomial interpolant so that

and taken into account (1.20) we conclude □

The following then can be easily proven.

Proposition 1.4

Let S be such that g is at least \(C^{n+1}(\hat {I})\) . Then,

with \( \omega _{n+1}(t) = \prod _{i=0}^n (t-t_i).\)

Proof

Indeed

as claimed. □

Remark We observe that if S is such that g is a polynomial of degree n, then the quadrature has exactness n.

We now focus on the computation of the weights for the two maps introduced above for S-Gibbs and S-Runge, respectively.

First, we recall that considering \(h(x)=1/\sqrt {1-x^2}\), the Chebyshev weight function and C n the CL nodes on J = [−1, 1]

where \(w^c_i =\frac {\pi }{z_i n}\) with

We are ready to prove the following.

Theorem 1.3

Let X n be the set of n + 1 equispaced points of I = [a, b] and consider the S-Runge map

Then,

Proof

Let us now take on I = [a, b] the set of equispaced nodes X n and the S-map (1.23). Thus, S(X n) = C n and letting t = S(x) then l i(S(x)) = l i(t), i = 0, …, n, where l i(t) is the Lagrange basis at the CL nodes of J = [−1, 1]. Therefore, by using (1.20) and (1.21)

we have

Finally, by observing that the quadrature rule (1.22) is exact for polynomials of degree n, we get

This concludes the proof. □

Remarks

-

1.

This result turns out to be quite surprising but interesting: the weights of the “fake” nodes quadrature, coincide with those of the trapezoidal rule and up to the constant \({\pi \over b-a}\) with those for the weighted quadrature based on the CL nodes in [−1, 1].

-

2.

In the case of composite quadrature rules, the weights for the “fake” nodes approach can be computed by applying the S-map on each subinterval.

5.1.1 Examples

We apply the “new” quadrature approach to the following test functions: a discontinuous one and a Runge-type one

We compute their integrals over the interval I = [−2, 2]. In Fig. 1.9 the absolute error between the true value of the integral and its approximation is displayed. As approximants we compare the standard approach on a equispaced points, the classical quadrature on Chebyshev-Lobatto nodes and the integral computed with the “fake” nodes approach: we use the S-Gibbs and S-Runge for f 1 and f 2, respectively. We consider polynomials with degrees n = 2k with k = 1, …, 20. We observe that the “fake” nodes quadrature outperforms the computation of the integral on equispaced nodes while still competitive with the classical Gaussian quadrature based on CL nodes.

The experiments have been carried out in Python 3.7 using Numpy 1.15. The Python codes are available at https://github.com/pog87/FakeQuadrature.

6 Discontinuous Kernels

Interpolation by kernels, mainly by radial kernels known as Radial Basis Functions (RBF), are suitable tools for high-dimensional scattered data problems, solution of PDES, machine learning, image registration. For an overview of the topic we refer the reader to the monographs [34, 60] and references therein. Our interest is now confined to the approximation of data with discontinuities. Indeed, based on recent studies on Variably Scaled Kernels (VSKs) [18, 55] and their discontinuous extension [26], we use discontinuous kernel functions that reflect discontinuities in the data as a basis. These basis functions, referred to as Variably Scaled Discontinuous Kernels (VSDKs), enable us naturally to interpolate functions with given discontinuities.

6.1 A Brief Introduction to RBF Approximation

We start by introducing some basic notation and results about RBF interpolation.

Let \( \mathcal {X}_N = \{ \boldsymbol {x}_i, \; i = 1, \ldots , N\}\) be a set of distinct data points (data sites or nodes) arbitrarily distributed on a domain \( \Omega \subseteq \mathbb {R}^{d}\) and let \( \mathcal {F}_N = \{ f_i = f(\boldsymbol {x}_i) , \; i=1, \ldots , N \}\) be an associated set of data values (measurements or function values) obtained by sampling some (unknown) function \(f: \Omega \longrightarrow \mathbb {R}\) at the nodes x i. We can reconstruct f by interpolation, that is by finding a function \(\mathcal {P}: \Omega \longrightarrow \mathbb {R}\) satisfying the interpolation conditions

This problem (1.24) has unique solution provided \(\mathcal {P} \in \mbox{span} \{ \Phi _{\varepsilon }(\cdot ,\boldsymbol {x}_i), \boldsymbol {x}_i \in \mathcal {X}\}\), where \(\Phi _{\varepsilon } : \Omega \times \Omega \longrightarrow \mathbb {R}\) is a strictly positive definite and symmetric kernel. Notice that Φ depends on the so-called shape parameter ε > 0 which allows to change the shape of Φ, making it flatter (or wider) as ε → 0 + or spiky and so more localized as ε → +∞. This has important consequences in error analysis and stability of interpolation by RBF (cf. e.g. [58]).

The resulting kernel-based interpolant, denoted by \(\mathcal {P}_{\varepsilon ,\mathcal {X}_N},\) can be written as

The interpolation problem (1.24) in matrix form becomes \(A_{\varepsilon } \in \mathbb {R}^{N \times N}\) with (A ε)ik = Φε(x i, x k), i, k = 1, …, N. Then, letting \(\boldsymbol {f}= (f_1, \ldots , f_N)^{\intercal }\) the vector of data values, we can find the coefficients \(\boldsymbol {c}= (c_1, \ldots , c_N)^{\intercal }\) by solving the linear system A ε c = f. Since we consider strictly positive definite and symmetric kernels, the existence and uniqueness of the solution of the linear system is ensured. More precisely, the class of strictly positive definite and symmetric radial kernels Φε can be defined as follows.

Definition 1.2

Φε is called radial kernel if there exists a continuous function \(\varphi _{\varepsilon }: [0,+\infty )\longrightarrow \mathbb {R}\), depending on the shape parameter ε > 0, such that

for all x, y ∈ Ω.

Remark The notation (1.26) provides the “dimension-blindness” property of RBF. Hence, once we know the function φ and compose it with the Euclidean norm, we get a radial kernel.

In Table 1.2 we collect some of the most popular basis functions φ which are strictly positive definite. The Gaussian, Inverse Multiquadrics and Matérn (M0) are globally supported, while the Wendland (W2) and Buhmann (B2) are locally supported.

To Φε we can associate a real pre-Hilbert space \(H_{\Phi _{\varepsilon }}(\Omega )\)

with Φε as reproducing kernel. This space will be then equipped with the bilinear form \(\left (\cdot ,\cdot \right )_{H_{\Phi _{\varepsilon }}(\Omega )}\). Then we define the associate native space \(\mathcal {N}_{\Phi _{\varepsilon }} (\Omega )\) of Φε as the completion of \(H_{\Phi _{\varepsilon }}(\Omega )\) with respect to the norm \(||\cdot ||{ }_{H_{\Phi _{\varepsilon }}(\Omega )}\), that is \(||f||{ }_{H_{\Phi _{\varepsilon }}(\Omega )} = ||f||{ }_{\mathcal {N}_{\Phi _{\varepsilon }}(\Omega )}\) for all \(f \in H_{\Phi _{\varepsilon }}(\Omega )\). For more details, as already quotes, see the monographs [34, 60]).

The accuracy of the interpolation process can be expressed, for instance, in terms of the power function. The power function is a positive function given as the ratio of two determinants (cf. [23])

where \(A_{\varepsilon }(\mathcal {X}_N)\) is the interpolation matrix related to the set of nodes \(\mathcal {X}_N\) and the kernel Φε and \(A_{\varepsilon }(\mathcal {Y}_{N+1})\) the matrix associated to the set \(\mathcal {Y}_{N+1}\mathrel{\mathop:}= \{\boldsymbol {x}\}\cup \mathcal {X}_N,\; \boldsymbol {x} {\,\in \,}\Omega \).

The following pointwise error bound holds.

Theorem 1.4

Let Φ ε ∈ C( Ω × Ω) be a strictly positive definite kernel and \(\mathcal {X}_N \subseteq \Omega \) a set of N distinct points. For all \(f \in \mathcal {N}_{\Phi _{\varepsilon }}(\Omega )\) we have

Remarks We observe that this Theorem bounds the error in terms of the power function which depends on the kernel and the data points but is independent on the function values.

This theorem is a special instance of [60, Theorem 11.3, p. 182] where the fill-distance

is used instead of the power function.

6.2 From RBF to VSK Interpolation

The choice of the shape parameter ε is a crucial computational issue in RBF interpolation leading to instability effects without a clever choice of it. This is usually done by analyzing the behaviour of some kind of errors (like the Root Mean Square Error) versus the conditioning of the interpolation matrix (cf. e.g. [37, 52]) and so many techniques has been developed in order to overcome such problems. Many of these techniques allow to choose the best shape parameter based on a “trade-off” between conditioning and efficiency. There are approaches based on the choice of well-conditioned bases, like in the RBF-QR method for Gaussians [38] or in the more general setting discussed in [24].

In the seminal paper[18] the authors introduced the so called Variably Scaled Kernels (or VSKs) where the classical tuning strategy of finding the optimal shape parameter, is substituted by the choice of a scale function which plays the role of a density function. More precisely [18, Def. 2.1]

Definition 1.3

Letting \(\mathcal {I}\subseteq (0,+\infty )\) and Φε a positive definite radial kernel on \(\Omega \times \mathcal {I}\) depending on the shape parameter ε > 0. Given a scale function \(\psi :\Omega \longrightarrow \mathcal {I}\), a Variably Scaled Kernel Φψ on Ω is

for x, y ∈ Ω.

Defining then the map Ψ(x) = (x, ψ(x)) on Ω, the interpolant on the set of nodes \(\Psi (\mathcal {X}_N)\mathrel{\mathop:}= \{(\boldsymbol {x}_k,\psi (\boldsymbol {x}_k)),\;\boldsymbol {x}_k\in \mathcal {X}_N\}\) with fixed shape parameter ε = 1 (or any other constant value c) takes the form

with \(\boldsymbol {x}\in \Omega ,\;\boldsymbol {x}_k\in \mathcal {X}_N\).

By analogy with the interpolant in (1.25), the vector of coefficients \(\boldsymbol {c}= (c_1, \ldots , c_N)^{\intercal }\) is determined by solving the linear system A ψ c = f, where f is the vector of data values and (A ψ)ik = Φψ(x i, x k) is strictly positive definite because of the strictly positiveness of Φψ.

Once we have the interpolant \(\mathcal {P}_{1,\Psi (\mathcal {X}_N)}\) on \(\Omega \times \mathcal {I}\), we can project back on Ω the points \((\boldsymbol {x},\psi (\boldsymbol {x}))\in \Omega \times \mathcal {I}\). In this way, we obtain the so-called VSK interpolant \(\mathcal {V}_{\psi }\) on Ω. Indeed, by using (1.28), we get

The error and stability analysis of this varying scale process on Ω coincides with the analysis of a fixed scale kernel on \(\Omega \times \mathcal {I}\) (for details and analysis of these continuous scale functions see [18]).

6.3 Variably Scaled Discontinuous Kernels (VSDK)

To understand the construction of a VSDK let start from the one dimensional case. Let \(\Omega =(a,b)\subset \mathbb {R}\) be an open interval, ξ ∈ Ω and the discontinuous function \(f:\Omega \longrightarrow \mathbb {R}\)

where f 1, f 2 are real valued smooth functions for which exist finite the limits \(\displaystyle {\lim _{x\to a^+}{f_1(x)}}\), \(\displaystyle {\lim _{x\to b^-}{f_2(x)}}\) and f 2(ξ) ≠ limx→ξ f 1(x) .

If we approximate f on some set of nodes, say \(\mathcal {X} \subset \Omega \), the presence of a jump will result in unpleasant oscillations due to the Gibbs phenomenon. The idea is then to approximate f at \(\mathcal {X}\) by interpolants of the form (1.30) with the main issue of considering discontinuous scale functions in the interpolation process. The strategy is that of approximating a function having jumps by using a scale function that has jumps discontinuities at the same positions of the considered function.

To this aim, take \(\alpha ,\beta \in \mathbb {R},\;\alpha \neq \beta \), \(\mathcal {S}= \left \{ \alpha ,\beta \right \}\) and the scale function \(\psi :\Omega \longrightarrow \mathcal {S}\)

which is piecewise constant, having a discontinuity exactly at ξ as the function f.

Then we consider Φε a positive definite radial kernel on \(\Omega \times \mathcal {S}\), possibly depending on a shape parameter ε > 0 or alternatively a VSK Φψ on Ω as in (1.28). For the sake of simplicity we start by taking the function φ 1 related to the kernel Φ1 that is

so that

noticing that φ 1(∥(x, α) − (y, β)∥2) = φ 1(∥(x, β) − (y, α)∥2).

Then, consider the set \(\mathcal {X}_N=\{ x_k,\;k=1,\dots ,N\}\) of points of Ω and the interpolant \(\mathcal {V}_{\psi }:\Omega \longrightarrow \mathbb {R}\) which is a linear combination of discontinuous functions Φψ(⋅, x k) having a jump at ξ.

-

if a < x k < ξ

$$\displaystyle \begin{aligned} \Phi_{\psi}(x, x_k)= \left \{ \begin{array}{ll} \varphi_1(|x-x_k|), & \quad x<\xi,\\ \varphi_1(\|(x,\alpha)-(x_k,\beta)\|{}_2), & \quad x\ge \xi,\\ \end{array} \right. \end{aligned}$$ -

if ξ ≤ x k < b

$$\displaystyle \begin{aligned} \Phi_{\psi}(x, x_k)= \left \{ \begin{array}{ll} \varphi_1(|x-x_k|), & \quad x\ge \xi,\\ \varphi_1(\|(x,\alpha)-(x_k,\beta)\|{}_2), & \quad x<\xi.\\ \end{array} \right. \end{aligned}$$

By this construction we can give the following definition that extends the idea when we have more than one jump.

Definition 1.4

Let \(\Omega =(a,b)\subset \mathbb {R}\) be an open interval, \(\mathcal {S}=\{\alpha ,\beta \}\) with \(\alpha ,\beta \in \mathbb {R}_{>0},\;\alpha \neq \beta \) and let \(\mathcal {D}=\{\xi _j,\;j=1,\dots ,\ell \}\subset \Omega \) be a set of distinct points with ξ j < ξ j+1 for every j. Let \(\psi :\Omega \longrightarrow \mathcal {S}\) the scale function defined as

and

The kernel Φψ is then called a Variably Scaled Discontinuous Kernel on Ω.

For the analysis of the VSDKs introduced in Definition 1.4 we cannot rely on some important and well-known results of RBF interpolation due to the discontinuous nature of these kernels. Therefore we may proceed as follows.

Let Ω and \(\mathcal {D}\) be as in Definition 1.4 and \(n\in \mathbb {N}\). We define \(\psi _n:\Omega \longrightarrow \mathcal {I} \subseteq (0,+ \infty )\) as

where γ 1, γ 2 are continuous, strictly monotonic functions so that

From Definition 1.4, it is straightforward to verify that ∀x ∈ Ω the following pointwise convergence result holds

We point out that for every fixed \(n\in \mathbb {N}\) the kernel \(\Phi _{\psi _n}\) is a continuous VSK, hence it satisfies the error bound of Theorem 1.4. For VSDKs instead we have the following results whose proofs can be found in the paper [26].

Theorem 1.5

For every x, y ∈ Ω, we have

where Φ ψ is the kernel considered in Definition 1.4.

Corollary 1.1

Let \(H_{\Phi _{\psi _n}}(\Omega )=\mathit{\mbox{span}} \{ \Phi _{\psi _n}(\cdot ,x),\;x\in \Omega \}\) be equipped with the bilinear form \(\left (\cdot ,\cdot \right )_{H_{\Phi _{\psi _n}}(\Omega )}\) and let \(\mathcal {N}_{\Phi _{\psi _n}} (\Omega )\) be the related native space. Then, taking the limit of the basis functions, we obtain the space \(H_{\Phi _{\psi }}(\Omega )=\mathit{\mbox{span}} \{ \Phi _{\psi }(\cdot ,x),\;x\in \Omega \}\) equipped with the bilinear form \(\left (\cdot ,\cdot \right )_{H_{\Phi _{\psi }}(\Omega )}\) and the related native space \(\mathcal {N}_{\Phi _{\psi }}(\Omega )\).

We get an immediate consequence for the interpolant \(\mathcal {V}_{\psi }\) too.

Corollary 1.2

Let Ω, \(\mathcal {S}\) and \(\mathcal {D}\) be as in Definition 1.4 . Let \(f:\Omega \longrightarrow \mathbb {R}\) be a discontinuous function whose step discontinuities are located at the points belonging to \(\mathcal {D}\) . Moreover, let ψ n and ψ be as in Theorem 1.5 . Then, considering the interpolation problem with nodes \(\mathcal {X}_N=\{ x_k,\;k=1,\dots ,N\}\) on Ω, we have

for every x ∈ Ω.

To provide error bounds in terms of the power function, we should first define the equivalent power function for a VSDK Φψ on a set of nodes \(\mathcal {X}_N\). From (1.27), we immediately have

From Theorem 1.5 and Corollary 1.1, we may define the power function for a discontinuous kernel as

Finally, we can state the error bound for interpolation via VSDKs.

Theorem 1.6

Let Φ ψ be a VSDK on \(\Omega =(a,b)\subset \mathbb {R}\) , and \(\mathcal {X}_N \subseteq \Omega \) consisting of N distinct points. For all \(f \in \mathcal {N}_{\Phi _{\psi }}(\Omega )\) we have

Proof

For all \(n\in \mathbb {N}\) and x ∈ Ω, since the VSK \(\Phi _{\psi _n}\) is continuous, from Theorem 1.4, we get

Then, taking the limit n →∞ and recalling the results of this subsection, the thesis follows. □

6.4 VSDKs: Multidimensional Case

VSDKs rely upon the classical RBF bases, therefore as noticed are “dimension-blind” which make them a suitable and flexible tool to approximate data in any dimension. However, in higher dimensions, the geometry is more complex, so we must pay attention in defining the scale function ψ on a bounded domain \(\Omega \subset \mathbb {R}^d\).

We consider the following setting.

-

(i)

The bounded set \(\Omega \subset \mathbb {R}^d\) is the union of n pairwise disjoint sets Ωi and \(\mathcal {P}_\Omega =\{\Omega _1,\ldots ,\Omega _n\}\) forms a partition of Ω.

-

(ii)

The subsets Ωi satisfy an interior cone condition and have a Lipschitz boundary.

-

(iii)

Let \(\alpha _1, \ldots , \alpha _n \in \mathbb {R}\) and Σ = {α 1, …, α n}. The function ψ: Ω → Σ is constant on the subsets Ωi, i.e., ψ(x) = α i for all x ∈ Ωi. In particular, ψ is piecewise constant on Ω and discontinuities appear only at the boundaries of the subsets Ωi. We further assume that α i ≠ α j if Ωi and Ωj are neighboring sets.

A suitable scale function ψ for interpolating f via VSDKs on \(\Omega \subset \mathbb {R}^d\) can be defined as follows.

Let \(\Omega \subset \mathbb {R}^d\) satisfies the assumptions (i)–(iii) above. We define the scale function \(\psi :\Omega \longrightarrow \mathcal {S}\) as

Definition 1.5

Given the scale function (1.32) the kernel Φψ defined by (1.28) is a Variably Scaled Discontinuous Kernel on Ω.

Remarks In Definition 1.5 we choose a scale function which emulates the properties of the one-dimensional function of Definition 1.4. The difference is that the multidimensional ψ could be discontinuous not just at the same points as f, but also on other nodes. That is all the jumps of f lye along (d − 1)-dimensional manifolds γ 1, …, γ p which verify

More precisely, if we are able to choose \(\mathcal {P}_\Omega \) so that

then f and ψ have the same discontinuities. Otherwise, if

then ψ is discontinuous along \(\bigcup _{i=1}^{n} {\partial \Omega _{i}}\setminus \big (\partial \Omega \cup \bigcup _{i=1}^{p}{\gamma _i}\big )\), while f is not.

The theoretical analysis in the multidimensional case is done along the same path of the one-dimensional setting. The idea is to consider continuous scale functions \(\psi _n:\Omega \longrightarrow \mathcal {I} \subseteq (0,+ \infty )\) such that ∀x ∈ Ω the limits hold

and

We omit this extension and all the corresponding considerations which are similar to those discussed above for the one-dimensional setting, while we state directly the error bound.

Theorem 1.7

Let Φ ψ be a VSDK as in Definition 1.5 . Suppose that \(\mathcal {X}_N = \{\boldsymbol {x}_i, i=1, \ldots , N \} \subseteq \Omega \) have distinct points. For all \(f \in \mathcal {N}_{\Phi _{\psi }}(\Omega )\) we have

Proof

Just refer to Theorem 1.6 and considerations made above. □

For the characterization of the native spaces for the VSDKs (if the discontinuities are known) and Sobolev-type error estimates, based on the fill-distance, of the respective interpolation scheme the reader must refer to the very recent paper [28].

7 Application to MPI

As we already observed, interpolation is an essential tool in medical imaging. It is required for example in geometric alignment or registration of images, to improve the quality of images on display devices, or to reconstruct the image from a compressed amount of data. In Computerized Tomography (CT) and Magnetic Resonance Imaging (MRI), which are examples of medical inverse problems, interpolation is used in the reconstruction step in order to fit the discrete Radon data into the back projection step. Similarly in SPECT for regridding the projection data in order to improve the reconstruction quality while reducing the acquisition computational cost [59]. In Magnetic Particle Imaging (MPI), the number of calibration measurements can be reduced by using interpolation methods, as well (see the important paper [46]).

In the early 2000s, B. Gleich and J. Weizenecker [39], invented at Philips Research in Hamburg a new quantitative imaging method called Magnetic Particle Imaging (MPI). In this imaging technology, a tracer consisting of superparamagnetic iron oxide nanoparticles is injected and then detected through the superimposition of different magnetic fields. In common MPI scanners, the acquisition of the signal is performed following a generated field free point (FFP) along a chosen sampling trajectory. The determination of the particle distribution given the measured voltages in the receive coils is an ill-posed inverse problem that can be solved only with proper regularization techniques [47].

Commonly used trajectories in MPI are Lissajous curves [48], which are parametric space-filling curves of the square Q 2 = [−1, 1]2. More precisely, by using the same notations in [31,32,33], let \(\boldsymbol {n}=(n_1,n_2)\in \mathbb {N}^2\) be a vector of relatively prime integers and 𝜖 ∈{1, 2}, the two-dimensional Lissajous curve \(\gamma _{\epsilon }^{\boldsymbol {n}}:[0,2\pi ]\to Q_2\) is defined as

The curve \(\gamma _{\epsilon }^{\boldsymbol {n}}\) is called degenerate if 𝜖 = 1, and non-degenerate if 𝜖 = 2. The Padua points of degree n are a degenerate Lissajous curve which have generating curve \(\gamma _{Pad}(t)=(-\cos {}((n+1)t),-\cos {}(nt)),\quad t\in [0,\pi ]\) (see also [11, 16]).

The set of Lissajous node points associated to the curve \(\gamma _{\epsilon }^{\boldsymbol {n}}\) is given by

We define also the index set \(\Gamma ^{2\boldsymbol {n}}\mathrel{\mathop:}= \bigg \{(i,j)\in \mathbb {N}_0^2:(i/2 n_1)+(j/2 n_2)<1\bigg \}\cup \{(0,2 n_2)\}\) which give the cardinality of the set, that is

To reduce the amount of calibration measurements, it is shown in [46] that the reconstruction can be restricted to particular sampling points along the Lissajous curves, i.e., the Lissajous nodes \(\boldsymbol {\mathrm {LS}}_{2}^{(\boldsymbol {n})}\) introduced in (1.33). Furthermore, by using a polynomial interpolation method on the Lissajous nodes the entire density of the magnetic particles can then be restored (cf. [33]). As noticed, these sampling nodes and the corresponding polynomial interpolation are an extension of the theory of the Padua points (see [11, 16] and also https://en.wikipedia.org/wiki/Padua_points).

If the original particle density has sharp edges, the polynomial reconstruction scheme on the Lissajous nodes is affected by the Gibbs phenomenon. As shown in [25], post-processing filters can be used to reduce oscillations for polynomial reconstruction in MPI. In the following, we demonstrate that the usage of the VSDK interpolation method in combination with the presented edge estimator effectively avoids ringing artifacts in MPI and provides reconstructions with sharpened edges.

7.1 An Example

As a test data set, we consider MPI measurements conducted in [46] on a phantom consisting of three tubes filled with Resovist, a contrast agent consisting of superparamagnetic iron oxide. By the proceeding described in [46] we then obtain a reconstruction of the particle density on the Lissajous nodes \(\boldsymbol {\mathrm {LS}}_{2}^{(33,32)}\) (due to the scanner available, as described in [46] ). This reduced reconstruction on the Lissajous nodes is illustrated in Fig. 1.11 (left). A computed polynomial interpolant of this data is shown in Fig. 1.11 (middle, left). In this polynomial interpolant some ringing artifacts are visible The scaling function ψ for the VSDK scheme is then obtained by using the classification Algorithm [28] with a Gaussian function for the kernel machine. The resulting scaling function is visualized in Fig. 1.11 (middle, right). Using the C 0-Matérn (M0) kernel (see Table 1.2) for the VSDK interpolation, the final interpolant for the given MPI data is shown in in Fig. 1.11 (right).

Comparison of different interpolation methods in MPI. The reconstructed data on the Lissajous nodes \( \boldsymbol {\mathrm {LS}}_{2}^{(33,32)}\) (left) is first interpolated using the polynomial scheme derived in [33] (middle left). Using a mask constructed upon a threshold strategy described in [28] (middle right) the second interpolation is performed by the VSDK scheme (right)

8 Conclusion and Further Works

We have investigated the application of the polynomial mapped bases approach without resampling for reducing the Runge and Gibbs phenomena. The approach shows to be a kind of black-box that can be applied in many other frameworks. We indeed have applied it to barycentric rational approximation and quadrature. We have also studied the use of VSDK, a new family of variable scaled kernels, particularly effective in the presence of discontinuity in our data. A particular applications of VSDK is the image reconstruction from data coming from MPI scanners acquisitions.

Concerning the work in progress and the future works

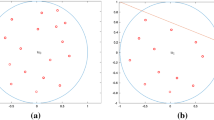

-

In the 2d case, we have results on discontinuous functions on the square, using polynomial approximation at the Padua points or tensor product meshes. In Fig. 1.12 we show the results of the interpolation of a discontinuous function along a disk of the square [−1, 1]2, where the reconstruction has been done by interpolation on the Padua points of degree 60 on the left. On the right we show the same reconstruction where the points that do not fall inside the disk are mapped with a circular mapping. The mapping strategy indeed reduce the Gibbs oscillations, but outside the disk we cannot interpolate, we instead approximate by least-squares, because of the “fake Padua” points that are not anymore unisolvent.

-

Again in 2d but also in 3d we can extract the so called Approximate Fekete Points of Discrete Leja sequences (cf. [12]) on various domains (disk, sphere, polygons, spherical caps, lunes and other domains). These points are numerically computed by numerical linear algebra methods and extracted from the so called weakly admissible meshes (WAM). For details about WAMs, refer to fundamental paper [13]

Finally we are working in improving the error analysis and finding more precise bounds for the Lebesgue constant(s). Among the applications of this approach we are interested to image registration in nuclear medicine and the reconstruction of periodic signals.

References

Adcock, B., Platte, R.B.: A mapped polynomial method for high-accuracy approximations on arbitrary grids. SIAM J. Numer. Anal. 54, 2256–2281 (2016)

Amat, S., Dadourian, K., Liandrat, J.: On a nonlinear subdivision scheme avoiding Gibbs oscillations and converging towards C s functions with s > 1. Math. Comput. 80, 959–971 (2011)

Amat, S., Ruiz, J., Trillo, J.C., Yáñez, D.F.: Analysis of the Gibbs phenomenon in stationary subdivision schemes. Appl. Math. Lett. 76, 157–163 (2018)

Archibald, R., Gelb, A., Yoon, J.: Fitting for edge detection in irregularly sampled signals and images. SIAM J. Numer. Anal. 43, 259–279 (2005)

Bayliss, A., Turkel, E.: Mappings and accuracy for Chebyshev pseudo-spectral approximations. J. Comput. Phys. 101, 349–359 (1992)

Beckermann, B., Matos, A.C., Wielonsky, F.: Reduction of the Gibbs phenomenon for smooth functions with jumps by the 𝜖-algorithm. J. Comput. Appl. Math. 219(2), 329–349 (2008)

Berrut, J.P., Elefante, G.: A periodic map for linear barycentric rational trigonometric interpolation. Appl. Math. Comput. 371 (2020). https://doi.org/10.1016/j.amc.2019.124924

Berrut, J.P., Mittelmann, H.D.: Lebesgue constant minimizing linear rational interpolation of continuous functions over the interval. Comput. Math. Appl. 33, 77–86 (1997)

Berrut, J.P., Trefethen, L.N.: Barycentric Lagrange interpolation. SIAM Rev. 46, 501–517 (2004)

Berrut, J.P., De Marchi, S., Elefante, G., Marchetti, F.: Treating the Gibbs phenomenon in barycentric rational interpolation via the S-Gibbs algorithm. Appl. Math. Lett. 103, 106196 (2020)

Bos, L., Caliari, M., De Marchi, S., Vianello, M., Xu, Y.: Bivariate Lagrange interpolation at the Padua points: the generating curve approach. J. Approx. Theory 143, 15–25 (2006)

Bos, L., De Marchi, S., Sommariva, A., Vianello, M.: Computing multivariate Fekete and Leja points by numerical linear algebra. SIAM J. Numer. Anal. 48, 1984–1999 (2010)

Bos, L., Calvi, J.-P., Levenberg, N., Sommariva, A., Vianello, M.: Geometric weakly admissible meshes, discrete least squares approximation and approximate Fekete points. Math. Comput. 80, 1601–1621 (2011)

Bos, L., De Marchi, D., Hormann, K.: On the Lebesgue constant of Berrut’s rational interpolant at equidistant nodes. J. Comput. Appl. Math. 236, 504–510 (2011)

Bos, L., De Marchi, S., Hormann, K., Sidon, J.: Bounding the Lebesgue constant of Berrut’s rational interpolant at general nodes. J. Approx. Theory 169, 7–22 (2013)

Bos, L., De Marchi, S., Vianello, M.: Polynomial approximation on Lissajous curves in the d −cube. Appl. Numer. Math. 116, 47–56 (2017)

Boyd, J.P., Xu, F.: Divergence (Runge Phenomenon) for least-squares polynomial approximation on an equispaced grid and Mock-Chebyshev subset interpolation. Appl. Math. Comput. 210, 158–168 (2009)

Bozzini, M., Lenarduzzi, L., Rossini, M., Schaback, R.: Interpolation with variably scaled kernels. IMA J. Numer. Anal. 35, 199–219 (2015)

Brutman, L.: Lebesgue functions for polynomial interpolation - a survey. Ann. Numer. Math. 4, 111–127 (1997)

Cheney, E.W.: Introduction to Approximation Theory, 2nd edn. AMS Chelsea Publishing, reprinted (1998)

Davis, P.J.: Interpolation and Approximation. Blaisdell, New York (1963)

De Marchi, S.: Polynomials arising in factoring generalized Vandermonde determinants: an algorithm for computing their coefficients. Math. Comput. Modelling 34, 271–281 (2001)

De Marchi, S.: On optimal center locations for radial basis interpolation: computational aspects. Rend. Sem. Mat. Torino 61(3), 343–358 (2003)

De Marchi, S., Santin, G.: A new stable basis for radial basis function interpolation. J. Comput. Appl. Math. 253, 1–13 (2013)

De Marchi, S., Erb, W., Marchetti, F.: Spectral filtering for the reduction of the Gibbs phenomenon for polynomial approximation methods on Lissajous curves with applications in MPI. Dolomites Res. Notes Approx. 10, 128–137 (2017)

De Marchi, S., Marchetti, M., Perracchione, E.: Jumping with variably scaled discontinuous kernels (VSDK). BIT Numer. Math. (2019). https://doi.org/10.1007/s10543-019-00786-z

De Marchi, S., Marchetti, M., Perracchione, E., Poggiali, D.: Polynomial interpolation via mapped bases without resampling. J. Comput. Appl. Math. 364, 112347 (2020)

De Marchi, S., Erb, W., Marchetti, F., Perracchione, E., Rossini, M.: Shape-driven interpolation with discontinuous kernels: error analysis, edge extraction and application in MPI. SIAM J. Sci. Comput. 42(2), B472–B491 (2020)

De Marchi, S., Elefante, G., Perracchione, E., Poggiali, D.: Quadrature at “fake” nodes. Dolomites Res. Notes Approx. 14, 39–45 (2021)

De Marchi, S., Marchetti, F., Perracchione, E., Poggiali, D.: Python code for Fake Nodes interpolation approach. https://github.com/pog87/FakeNodes

Erb, W.: Bivariate Lagrange interpolation at the node points of Lissajous curves - the degenerate case. Appl. Math. Comput. 289, 409–425 (2016)

Erb, W., Kaethner, C., Dencker, P., Ahlborg, M.: A survey on bivariate Lagrange interpolation on Lissajous nodes. Dolomites Res. Notes Approx. 8(Special issue), 23–36 (2015)

Erb, W., Kaethner, C., Ahlborg, M., Buzug, T.M.: Bivariate Lagrange interpolation at the node points of non-degenerate Lissajous nodes. Numer. Math. 133(1), 685–705 (2016)

Fasshauer, G.E., McCourt, M.J.: Kernel-Based Approximation Methods Using Matlab. World Scientific, Singapore (2015)

Floater, M.S., Hormann, K.: Barycentric rational interpolation with no poles and high rates of approximation. Numer. Math. 107(2), 315–331 (2007)

Fornberg, B., Flyer, N.: The Gibbs phenomenon for radial basis functions. In: The Gibbs Phenomenon in Various Representations and Applications. Sampling Publishing, Potsdam, NY (2008)

Fornberg, B., Wright, G.: Stable computation of multiquadrics interpolants for all values of the shape parameter. Comput. Math. Appl. 47, 497–523 (2004)

Fornberg, B., Larsson, E., Flyer, N.: Stable computations with gaussian radial basis functions. SIAM J. Sci. Comput. 33(2), 869–892 (2011)

Gleich, B., Weizenecker, J.: Tomographic imaging using the nonlinear response of magnetic particles. Nature 435, 1214–1217 (2005)

Gottlieb, D., Shu, C.W.: On the Gibbs phenomenon and its resolution. SIAM Rev. 39, 644–668 (1997)

Hale, N., Trefethen, L.N.: New quadrature formulas from conformal maps. SIAM J. Numer. Anal. 46, 930–948 (2008)

Hewitt, E., Hewitt, R.E.: The Gibbs-Wilbraham phenomenon: an episode in Fourier analysis. Arch. Hist. Exact Sci. 21(2), 129–160 (1979)

Higham, N.J.: The numerical stability of barycentric Lagrange interpolation. IMA J. Numer. Anal. 24, 547–556 (2000)

Jerri, A.: The Gibbs Phenomenon in Fourier Analysis, Splines and Wavelet Approximations. Kluwer Academic Publishers, Dordrecht (1998)

Jung, J.-H.: A note on the Gibbs phenomenon with multiquadric radial basis functions. Appl. Numer. Math. 57, 213–219 (2007)

Kaethner, C., Erb, W., Ahlborg, M., Szwargulski, P., Knopp, T., Buzug, T.M.: Non-equispaced system matrix acquisition for magnetic particle imaging based on Lissajous node points. IEEE Trans. Med. Imag. 35(11), 2476–2485 (2016)

Knopp, T., Buzug, T.M.: Magnetic Particle Imaging. Springer, Berlin (2012)

Knopp, T., Biederer, S., Sattel, T., Weizenecker, J., Gleich, B., Borgert, J., Buzug, T.M.: Trajectory analysis for magnetic particle imaging. Phys. Med. Biol. 54, 385–397 (2009)

Kosloff, D., Tal-Ezer, H.: A modified Chebyshev pseudospectral method with an \(\mathcal {O}(N^{-1})\) time step restriction. J. Comput. Phys. 104, 457–469 (1993)

Meijering, E.: A chronology of interpolation: from ancient astronomy to modern signal and image processing. Proc. IEEE 90(3), 319–342 (2002)

Nakatsukasa, Y., Sète, O., Trefethen, L.N.: The AAA algorithm for rational approximation. SIAM J. Sci. Comput. 40(3), A1494–A1522 (2018)

Rippa, S.: An algorithm for selecting a good value for the parameter c in radial basis function interpolation. Adv. Comput. Math. 11, 193–210 (1999)

Rivlin, T.J.: An Introduction to the Approximation of Functions. Dover, New York (1969)

Romani, L., Rossini, M., Schenone, D.: Edge detection methods based on RBF interpolation. J. Comput. Appl. Math. 349, 532–547 (2019)

Rossini, M.: Interpolating functions with gradient discontinuities via variably scaled kernels. Dolomites Res. Notes Approx. 11, 3–14 (2018)

Runge, C.: Über empirische Funktionen und die Interpolation zwischen äquidistanten Ordinaten. Z. Math. Phys. 46, 224–243 (1901)

Sarra, S.A.: Digital total variation filtering as postprocessing for radial basis function approximation methods. Comput. Math. Appl. 52, 1119–1130 (2006)

Schaback, R.: Error estimates and condition numbers for radial basis function interpolation. Adv. Comput. Math. 3, 251–264 (1995)

Takaki, A., Soma, T., Kojima, A., Asao, K., Kamada, S., Matsumoto, M., Murase, K.: Improvement of image quality using interpolated projection data estimation method in SPECT. Ann. Nucl. Med. 23(7), 617–626 (2009)

Wendland, H.: Scattered Data Approximation. Cambridge Monographs on Applied and Computational Mathematics. Cambridge University Press, Cambridge (2005)

https://en.wikipedia.org/wiki/Runge%27s_phenomenon#S-Runge_algorithm_without_resampling

Acknowledgements

I have to thanks especially who have collaborated with me in this project: Giacomo Elefante of the University of Fribourg and Wolfgang Erb, Francesco Marchetti, Emma Perracchione, Davide Poggiali of the University of Padova. We had many fruitful discussions that made the survey a nice overview of what can be done with “fake” nodes or discontinuous kernels for contrasting the Runge and Gibbs phenomena.

This research has been accomplished within Rete ITaliana di Approssimazione (RITA), partially funded by GNCS-INδAM, the NATIRESCO project BIRD181249 and through the European Union’s Horizon 2020 research and innovation programme ERA-PLANET, Grant Agreement No. 689443, via the GEOEssential project.

Author information

Authors and Affiliations

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2022 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper

Marchi, S.D. (2022). Mapped Polynomials and Discontinuous Kernels for Runge and Gibbs Phenomena. In: Barrera, D., Remogna, S., Sbibih, D. (eds) Mathematical and Computational Methods for Modelling, Approximation and Simulation. SEMA SIMAI Springer Series, vol 29. Springer, Cham. https://doi.org/10.1007/978-3-030-94339-4_1

Download citation

DOI: https://doi.org/10.1007/978-3-030-94339-4_1

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-94338-7

Online ISBN: 978-3-030-94339-4

eBook Packages: Mathematics and StatisticsMathematics and Statistics (R0)