Abstract

A basic capability of an autonomous robot is to navigate in an unstructured environment. Over several decades, human-machine interaction has developed significantly and produced notable achievements in fields such as perception, reasoning, manipulation, learning and navigation. Among these topics, navigation has become one of the most attractive for investigators. In particular, novel approaches that incorporate the constraints of human comfort and social rules have recently been proposed. This paper surveys past and present research on human-aware navigation frameworks and synthesizes potential solutions to the existing challenges in related fields.

1 Introduction

In the early stage of robotics, two key streams attracted many researchers all over the world: manipulation and navigation. Briefly, manipulation [1] focuses mainly on robot arm control. Robotic manipulators usually work on assembly lines in a wide range of industries, where they need to pick various objects in complex environments. Although the workspace for manipulation is restricted, the hardware architecture of the robot arm is complicated [2]. Hence, sophisticated planning schemes are often required for manipulation tasks. Navigation [3], in contrast, plays the main role in autonomous robot control. The robot observes the surrounding space through feedback signals from cameras, laser scanners and other sensors, and then builds a model of the environment [4]. With the acquired data, it plans a trajectory from the starting point to the target point. With the support of artificial intelligence, robots could become increasingly useful.

Many years ago, the applications of navigation and manipulation were primarily industrial. Recently, many robots have been working in place of the human labor force [5]. Several developers have addressed the main research issues for industrial robots and implemented practical systems [6, 7]. These issues, however, have not been completely solved, and it remains important to study them in depth and improve the developed algorithms. At the same time, robotics needs new research directions.

Industrial robotics developed key components, such as sensors and motors, for building more human-like robots. From 1990 to 2000, Japanese companies developed various animal-like and human-like robots, as shown in Fig. 1. Sony developed AIBO [8], a dog-like robot, and QRIO [9], a small human-like robot. Mitsubishi Heavy Industries, Ltd. developed Wakamaru [10]. Honda developed a child-like robot called ASIMO [11]. Unfortunately, Sony and Mitsubishi Heavy Industries, Ltd. have stopped these projects, but Honda is still continuing.

The most considerable difference between industrial robots and robots that work in daily environments is interactive activity. In everyday environments, robots encounter humans and have to interact with them before performing a task; indeed, the interaction is often the main task of the robot. The robots developed by these companies offered various functions with an animal-like or human-like appearance in order to interact with people. Nevertheless, most current service robots are wheeled robots or vehicle-type platforms, which can move flexibly to any location. The rest of this article is organized as follows. Section 2 briefly summarizes the historical development of the human-aware navigation framework and introduces service robots in both academic research and commercial products. Section 3 synthesizes several past and present challenges of human-aware navigation, together with effective solutions and existing problems. Section 4 discusses potential approaches to these challenges. Finally, conclusions are drawn in Sect. 5.

2 Historical Background

The term social robot or sociable robot was coined by Aude Billard and Kerstin Dautenhahn [12, 13]. The field of social robotics concentrates on the design and development of robots that interact socially with humans; sociality between robots (e.g., in multirobot systems) is not part of the field. A weak and a strong approach can be distinguished in social robotics. While the strong approach aims at robots that are capable of displaying social and emotional behavior, the weak approach studies only the imitation of social and emotional behavior. Social robots need to show human-like social characteristics, for instance the expression of emotions and the ability to conduct high-level dialogue, to learn, to develop personality, to use natural cues, and to develop social competencies [14,15,16]. In recent years, the term human-robot interaction (HRI) has become more prominent than social robotics.

Nowadays, a social robot is an intelligent platform that integrates socially intelligent, human-aware behaviors in order to communicate with people and other robots. In an office or factory, such robots have the potential to handle tasks by themselves, for example reception and basic guidance. In home-based applications, social robots can serve as a private companion and adapt their behavior to personal characteristics. Some examples, shown in Fig. 2, are listed below:

- hitchBOT [16] - a social robot that attempted to hitchhike across the United States in 2015
- Kismet [17] - a robot head that understands and exhibits emotion when interacting with humans
- Tico [18] - a robot developed to improve children's motivation in the classroom
- Bandit [19] - a social robot designed to teach social behavior to autistic children
- Jibo [20] - a consumer-oriented social robot that understands speech and facial expressions, learns its relationships with family members, and adapts its behavior to them

Additionally, a social robot might be operated remotely, for example as a telepresence agent at a business meeting [21] or at home [22], or as a nurse in a healthcare center [23]. Others are autonomous systems equipped with on-board artificial intelligence so that they can respond independently to human reactions and to objects appearing in the workplace [24]. Such a robot is sometimes regarded as an autonomous smart robot. The awareness of an intelligent robot depends on a cognitive computing model that imitates human actions and thoughts. Cognitive computing [25] comprises various machine learning models that employ data mining, pattern recognition and natural language processing (NLP) to simulate how the human brain works.

Although social robots employ leading modern technology, they are not persons, and they lack sympathy, feeling and reasoning. They carry out the missions they were programmed for, but they might behave unexpectedly in circumstances for which they were not trained. Moreover, every robot is susceptible to malfunction and failure, and may incur expensive repair and maintenance. Furthermore, excessive dependence on social robots could lead people to neglect the emotional closeness of human-to-human interaction, which is a basic human need.

3 Challenges and Opportunities

In general, the topics of interest in human-aware robot navigation involve reducing annoyance and stress so that interaction is more comfortable, integrating cultural knowledge into the robot’s awareness, and making the robot’s behavior more natural. All of them share the goal of gaining acceptance from the community or society. For a robot to be considered human-like, human comfort should be taken into account. When a robot moves toward a target position, it can cause discomfort for human observers. To reduce discomfort, the robot must avoid coming too close to a human and moving too fast or too slowly relative to the human. Most research focuses on avoiding negative emotional responses such as fear or anger. The reported strategies range from maintaining an appropriate distance, through proper approach strategies and precise control schemes that prevent noise, to planning that prevents interference (Figs. 3 and 4).

Fig. 3. The virtual zones of social interaction for various situations in proxemics [26].

Fig. 4. Desired trajectory for the passage maneuver [27].
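As a rough illustration of how a distance constraint from proxemics might enter a navigation check, the following minimal sketch classifies the robot's distance to a person into Hall's four zones [26]. The zone radii are commonly cited approximate values, and the function names and the rule that a passing robot should stay outside the personal zone are assumptions made for this example, not part of any surveyed system.

```python
import math

# Approximate outer radii (metres) of the proxemic zones described by Hall.
# These round values are commonly cited; they are assumptions for this sketch.
ZONE_LIMITS = [
    ("intimate", 0.45),
    ("personal", 1.2),
    ("social", 3.6),
]


def proxemic_zone(robot_xy, human_xy):
    """Return the name of the proxemic zone the robot occupies around a human."""
    distance = math.dist(robot_xy, human_xy)
    for name, limit in ZONE_LIMITS:
        if distance < limit:
            return name
    return "public"


def is_too_close(robot_xy, human_xy):
    """Hypothetical comfort rule: a passing robot stays outside the personal zone."""
    return proxemic_zone(robot_xy, human_xy) in ("intimate", "personal")


if __name__ == "__main__":
    print(proxemic_zone((0.0, 0.0), (1.0, 0.0)))
    print(is_too_close((0.0, 0.0), (2.0, 0.0)))
```

A planner could call such a check on each candidate pose and reject or penalize poses that fall inside the personal zone; a graded cost is an equally plausible design.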

4 Toward Modern Human-Aware Navigation

4.1 Observing Human’s Reactions

The purpose is to realize real services by the robot through interaction. Research has mainly focused on developing the general functions and behaviors of the robot [32, 33], including moving to a destination and greeting, as shown in Fig. 5. A service robot should exhibit behaviors that are situated in its environment and that follow the context. In a complicated environment, it is quite difficult to design robot behaviors a priori. Thus, a tele-operated robot was introduced: an operator remotely controlled the robot, and the robot recorded the sensory and visual data from the tags and cameras installed in the environment. Essentially, this prototype acquires autonomous functions based on the recorded sensory data.

Fig. 5. System overview of mobile guide robot [32].

However, the merit of the tele-operated system was not limited to developing autonomous robot behaviors in a complicated environment. The sensory data from both the robot and the environment revealed much about how people react to robot behavior, providing rich information about human interactive behaviors. Psychological studies done in laboratories, by contrast, are limited: they cannot provide enough variety of human interactive behaviors, since the laboratory environment lacks rich context and represents an assumed situation rather than a real one. Field tests [34] with a tele-operated robot, however, can induce real and rich interactive behavior among people, as shown in Fig. 6. Thus, tele-operated systems and sensor networks in field tests make it possible to study the psychological and cognitive aspects of people’s behaviors in depth.

Fig. 6. Scenario in the practical implementation: (a) direct interview and (b) remote interview [34].

4.2 User’s Attitude and Expectations

In studies of human-robot interaction, many articles have focused on users’ expectations. Most people have expectations about robots’ jobs and missions, and about the way robots are developed. Users clearly desire a human-like presence in applications that require social skills [35]. Accordingly, humans prefer to delegate such missions to human-like robots rather than machine-like robots [36]. As illustrated in Fig. 7, an operator wishes a robot to co-work on service-oriented duties or jobs that need deep cognitive thinking [37] and on challenging tasks [38]. The impact of culture has also been noted: in some situations, a person’s acceptance of a robot’s attitude depends on cultural behavior [39]. Likewise, cultural differences call for different ways for a robot to communicate with people [40].

Fig. 7. Experimental validation of a gaze cueing protocol by integrating in action sequence [36].

These studies reported how users interacted with social robots. Some users showed very positive attitudes, trying to interact with robots repeatedly; others showed an intermediate attitude, hesitantly crowding around the robot and watching its behavior. The first topic mainly concerns robots that are known to be tele-controlled. Although remote operation is needed at the utilization stage, operators do not clearly know its impact. Meanwhile, humans prefer to communicate directly with a social robot and are less interested in a tele-operated robot. The second approach addresses the user’s attitude as the key factor. While many articles concentrate on robot design and evaluate users’ acceptance, few studies indicate the possible reasons why humans show negative attitudes toward social robots. The contents of these studies correspond to the problems mentioned above. As a result, novel psychological indicators are needed to estimate users’ attitudes and to discover why some humans hold negative attitudes toward robots.

4.3 Framework of Natural and Comfortable Behaviors in Social Interaction

In practice, a robot that orients its body and displays both pose and gesture conveys meaning through its movement [33]. When a user speaks to a robot, a robot that responds non-verbally to the speech gives the speaker a strong sense of being listened to, of being understood, and of sharing a story [41]. In a collaborative attention task, it was shown that users observe a robot’s nonverbal cues to predict its internal state [42] (Fig. 8).

Fig. 8. A characterization for manipulating the socially interactive robot for various tasks [33].

Likewise, the interactive performance of nonverbal behaviors has been validated as follows. A user’s gaze has been shown to provide feedback effectively [43], preserve engagement [44, 45], and regulate the flow of conversation [46, 47]. Other gestures, such as pointing, are also useful for indicating the target objects of a conversation [48, 49]. Most of this research concentrates on evaluating the natural behavior of a human-like robot. In the first approach, work related to deictic interaction was introduced. When talking about objects, people naturally engage in deictic interaction, accompanied by pointing gestures. Deictic interaction occurs frequently and signals new information that lies outside the speakers’ mutual knowledge [50]. When robots operate in a public place, deictic interaction, as in Fig. 9, becomes one of the relevant features for interacting in a natural manner. Through these visual expressions, a human can rapidly understand what the robot refers to. In [51], the authors successfully reproduced the use of conventional reference terms such as kore and sore (Japanese for "this" and "that"), and imitated human behaviors in deictic interactions.

Fig. 9. Classifications of mixed-reality deictic gestures [50].

In the second approach, the zones around a human are described by proxemics. During conversations, people adjust their distance to others based on their relationship and the situation. When discussing objects, they form an O-shaped space by standing around the target object [52]. In this formation, each member can see both the central object and the other members of the conversation, as in Fig. 10. Such findings from human communication have been replicated in human–robot interaction. Because robots are mobile platforms, it is essential to manage their position during interaction. An earlier study [53] discussed control based on a standing position, enabling a robot to stand in a queue. More recently, the developers in [54] found that people tend to be concerned about the appropriate distance between themselves and a robot when interacting.

Fig. 10. Group of humans: (a) three standing persons, (b) two humans interacting with an object, (c) walking human and (d) group of two walking people [52].
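The idea of joining an O-shaped formation can be sketched geometrically. The example below is a hypothetical heuristic, not the method of [52] or [53]: it places the robot on a circle around the target object, in the middle of the widest angular gap left by the people already present, and turns it to face the object. The function name, the circular shape and the `radius` parameter are all assumptions for illustration.

```python
import math


def standing_pose(object_xy, people_xy, radius):
    """Return (x, y, heading) for the robot on a circle around the object.

    Hypothetical heuristic: stand in the middle of the widest angular gap
    between the people, facing the object.
    """
    ox, oy = object_xy
    # Bearing of each person as seen from the object, sorted counter-clockwise.
    angles = sorted(math.atan2(py - oy, px - ox) for px, py in people_xy)
    theta, best_gap = 0.0, -1.0
    for i, start in enumerate(angles):
        # The gap after the last person wraps around to the first one.
        if i + 1 < len(angles):
            end = angles[i + 1]
        else:
            end = angles[0] + 2 * math.pi
        gap = end - start
        if gap > best_gap:
            best_gap, theta = gap, start + gap / 2
    x = ox + radius * math.cos(theta)
    y = oy + radius * math.sin(theta)
    heading = math.atan2(oy - y, ox - x)  # orientation that faces the object
    return x, y, heading


if __name__ == "__main__":
    # Two people stand east and north of the object; the robot joins opposite them.
    print(standing_pose((0.0, 0.0), [(1.0, 0.0), (0.0, 1.0)], 1.0))
```

In a real system the radius would likely be chosen from the proxemic distances discussed above, and the candidate pose would still need to be checked against obstacles.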

4.4 Data Fusion Approach

The sensing system is a fundamental component of most robotic systems. At present, people live in a world of ubiquitous sensors and access the Internet via smartphones and computers to obtain information from distant locations. Similarly, as robots become networked, a large part of sensing can be done with ubiquitous sensors in a network robot system. A large number of sensing devices are available for a network robot operating in an unstructured environment, such as floor sensors, active or passive tags, laser-based scanners and digital cameras.

Fig. 11. Setting of floor sensor: (a) sensing unit and (b) testbed for footstep or fall down recognition [55].

The floor sensor [55] is a kind of pressure sensor that covers a wide area. This equipment can sense the presence of both humans and robots in an indoor environment, as shown in Fig. 11. Active and passive tags are employed to detect humans around the robot [56, 57]. These tags are attached to humans, and the tag reader is located on board the robot, as shown in Fig. 12. Although an active tag has a wider detection range than a passive tag, it needs a battery. The problem in this case is that the battery life, which depends on how the robot is used, is shorter than the desired working time, so the battery needs to be charged regularly. A passive tag, by contrast, has no battery, but its effective detection range is only 10 mm. The most inconvenient aspect is that a human must place the tag on the reader correctly. Consequently, the selection of the proper type of tag should be guided by the purpose and situation of the research.

Fig. 12. Experimental demonstration of following a tagged human [56].

Two of the most powerful sensors are lidar and camera. In [58], the benefits of digital cameras are that they are small, compact and easy to use, especially in an indoor environment, since they are often mounted on a mobile platform. However, they fall short of the required specifications in an outdoor environment because their dynamic adaptation to brightness is limited. When very bright and very dark zones co-exist in the same scene, as in Fig. 13, a camera cannot identify targets such as humans and robots. In contrast, lidar is relatively insensitive to fluctuations in brightness. Recently, there has been a trend to combine both sensors in order to achieve the best performance. If several lidars are used, stable tracking performance is ensured. When multiple cameras then detect faces or facial emotions at the positions detected by the lidars, the tracking results are further improved.

Fig. 13. Data fusion between 2D lidar and digital camera for human detection [58].
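One simple way to combine the two sensors is to let the lidar propose positions and let the camera confirm them. The sketch below is a minimal example under stated assumptions and is not the pipeline of [58]: lidar tracks are given as (x, y) positions in the robot frame, camera face detections are given as bearing angles in the same frame, and a detection confirms a track when their bearings agree within a gate. The function names, the 10 degree gate and the greedy matching are all hypothetical choices.

```python
import math


def angle_diff(a, b):
    """Smallest signed difference between two angles, in radians."""
    return (a - b + math.pi) % (2 * math.pi) - math.pi


def confirm_tracks(lidar_tracks, camera_bearings, gate_rad=math.radians(10)):
    """Mark each lidar track as confirmed if a camera detection lies on its bearing.

    lidar_tracks: dict mapping track id -> (x, y) in the robot frame.
    camera_bearings: list of face-detection bearings (radians, robot frame).
    Greedy matching: each camera detection confirms at most one track.
    """
    confirmed = {}
    used = set()
    for track_id, (x, y) in lidar_tracks.items():
        bearing = math.atan2(y, x)
        best = None  # (angular error, camera detection index)
        for i, cam in enumerate(camera_bearings):
            if i in used:
                continue
            err = abs(angle_diff(cam, bearing))
            if err <= gate_rad and (best is None or err < best[0]):
                best = (err, i)
        if best is not None:
            used.add(best[1])
        confirmed[track_id] = best is not None
    return confirmed


if __name__ == "__main__":
    tracks = {1: (2.0, 0.0), 2: (0.0, 2.0)}
    print(confirm_tracks(tracks, [math.radians(3)]))
```

A greedy match is the simplest option; when several people stand at similar bearings, a global assignment method would be a more robust choice.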

In recent years, these sensors have been integrated to form different kinds of sensor networks. In the field of navigation control, the role of the sensor network is commonly classified into three classes: improving on-board sensor performance, monitoring the environment for safety events, and developing augmented autonomy for the robot. In the first class, the sensing network must distribute sensors to cover both robots and humans densely and precisely. On-board sensors alone are not powerful or intelligent enough, because a robot cannot carry a sufficient number of sensing devices or provide sufficient computational capability. Accordingly, active and passive tags have been explored as an alternative. In the second class, a modern network including lidar and cameras is employed. This sensor network can reliably identify and follow both robots and humans simultaneously, so dangerous situations and emergencies can be detected. Last but not least, the third class aims to make the robot autonomous. The precise sensory data attained by sensing networks motivate investigators to create highly autonomous robots for real-world environments. In addition, advanced algorithms need to be integrated at the hardware level.

5 Conclusion

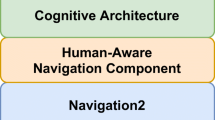

The scope of this review is to present the state-of-the-art methodologies related to human-aware navigation in dynamic environments. The conceptual model and the historical development were outlined in order to provide a systematic overview of the field. The respective methodologies were then grouped into traditional research and modern topics so that the features of human-aware navigation methods could be summarized and analyzed. Each category of specifications and a diversity of methods were synthesized for a variety of problems. The progress made so far permits robot navigation systems to become more autonomous and more socially intelligent.

The publications surveyed in this paper deal with individual domains and challenges. Open problems remain that require further research and investigation; for example, a single service robot must perform adaptively when facing crowds that include both humans and mobile vehicles. The autonomy of swarm systems should also be studied and mapped into human-aware navigation planning.

References

1. Billard, A., Kragic, D.: Trends and challenges in robot manipulation. Science 364(6446) (2019)
2. Johannsmeier, L., Gerchow, M., Haddadin, S.: A framework for robot manipulation: Skill formalism, meta learning and adaptive control. In: 2019 International Conference on Robotics and Automation (ICRA), pp. 5844–5850. IEEE (2019)
3. Pandey, A., Pandey, S., Parhi, D.R.: Mobile robot navigation and obstacle avoidance techniques: a review. Int. Rob. Auto. J. 2(3), 00022 (2017)
4. Ravankar, A., Ravankar, A.A., Kobayashi, Y., Hoshino, Y., Peng, C.C.: Path smoothing techniques in robot navigation: state-of-the-art, current and future challenges. Sensors 18(9), 3170 (2018)
5. Kim, W., et al.: Adaptable workstations for human-robot collaboration: a reconfigurable framework for improving worker ergonomics and productivity. IEEE Robot. Autom. Mag. 26(3), 14–26 (2019)
6. Koch, P.J., et al.: A skill-based robot co-worker for industrial maintenance tasks. Procedia Manuf. 11, 83–90 (2017)
7. Liu, H., Wang, L.: An AR-based worker support system for human-robot collaboration. Procedia Manuf. 11, 22–30 (2017)
8. Knox, E., Watanabe, K.: AIBO robot mortuary rites in the Japanese cultural context. In: 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), pp. 2020–2025. IEEE (2018)
9. Nagasaka, K.: Sony QRIO. In: Humanoid Robotics: A Reference, pp. 187–200 (2019)
10. Porkodi, S., Kesavaraja, D.: Healthcare robots enabled with IoT and artificial intelligence for elderly patients. In: AI and IoT‐Based Intelligent Automation in Robotics, pp. 87–108 (2021)
11. Shigemi, S., Goswami, A., Vadakkepat, P.: ASIMO and humanoid robot research at Honda. In: Humanoid Robotics: A Reference, pp. 55, 90 (2018)
12. Billard, A., Dautenhahn, K.: Grounding communication in autonomous robots: an experimental study. Robot. Auton. Syst. 24(1–2), 71–79 (1998)
13. Breazeal, C.L.: Designing Sociable Robots. MIT Press, Cambridge (2002)
14. Breazeal, C.: Emotion and sociable humanoid robots. Int. J. Hum. Comput. Stud. 59(1–2), 119–155 (2003)
15. Rahwan, I., et al.: Machine behaviour. Nature 568(7753), 477–486 (2019)
16. Smith, D.H., Zeller, F.: The death and lives of hitchBOT: the design and implementation of a hitchhiking robot. Leonardo 50(1), 77–78 (2017)
17. Breazeal, C.: Emotive qualities in robot speech. In: Proceedings 2001 IEEE/RSJ International Conference on Intelligent Robots and Systems. Expanding the Societal Role of Robotics in the Next Millennium (Cat. No. 01CH37180), vol. 3, pp. 1388–1394. IEEE (2001)
18. Castillejo, E., et al.: Distributed semantic middleware for social robotic services. In: Proceedings of the III Workshop de Robótica: Robótica Experimental, Seville, Spain, vol. 2829 (2011)
19. Luo, X., Zhang, Y., Zavlanos, M.M.: Socially-aware robot planning via bandit human feedback. In: 2020 ACM/IEEE 11th International Conference on Cyber-Physical Systems (ICCPS), pp. 216–225. IEEE (2020)
20. Chen, A.: JiboChat: interactive chatting through a personal robot. Doctoral dissertation, Massachusetts Institute of Technology (2020)
21. Due, B.L.: RoboDoc: semiotic resources for achieving face-to-screenface formation with a telepresence robot. Semiotica 2021(238), 253–278 (2021)
22. Yang, L., Neustaedter, C.: Our house: living long distance with a telepresence robot. In: Proceedings of the ACM on Human-Computer Interaction, vol. 2, no. CSCW, pp. 1–18 (2018)
23. Koceski, S., Koceska, N.: Evaluation of an assistive telepresence robot for elderly healthcare. J. Med. Syst. 40(5), 121 (2016)
24. Alers, S., Bloembergen, D., Claes, D., Fossel, J., Hennes, D., Tuyls, K.: Telepresence robots as a research platform for AI. In: 2013 AAAI Spring Symposium Series (2013)
25. Zhang, Y., Tian, G., Chen, H.: Exploring the cognitive process for service task in smart home: a robot service mechanism. Futur. Gener. Comput. Syst. 102, 588–602 (2020)
26. Hall, E.: The Hidden Dimension. Anchor Books (1966)
27. Pacchierotti, E., Christensen, H.I., Jensfelt, P.: Human-robot embodied interaction in hallway settings: a pilot user study. In: ROMAN 2005. IEEE International Workshop on Robot and Human Interactive Communication, pp. 164–171. IEEE (2005)
28. Smith, T., Chen, Y., Hewitt, N., Hu, B., Gu, Y.: Socially aware robot obstacle avoidance considering human intention and preferences. Int. J. Soc. Robot. 1–18 (2021). https://doi.org/10.1007/s12369-021-00795-5
29. Zardykhan, D., Svarny, P., Hoffmann, M., Shahriari, E., Haddadin, S.: Collision preventing phase-progress control for velocity adaptation in human-robot collaboration. In: 2019 IEEE-RAS 19th International Conference on Humanoid Robots (Humanoids), pp. 266–273. IEEE (2019)
30. Che, Y., Okamura, A.M., Sadigh, D.: Efficient and trustworthy social navigation via explicit and implicit robot–human communication. IEEE Trans. Rob. 36(3), 692–707 (2020)
31. Ngo, H.Q.T., Tran, A.S.: Using fuzzy logic scheme for automated guided vehicle to track following path under various load. In: 2018 4th International Conference on Green Technology and Sustainable Development (GTSD), pp. 312–316. IEEE (2018)
32. Kuno, Y., Sadazuka, K., Kawashima, M., Yamazaki, K., Yamazaki, A., Kuzuoka, H.: Museum guide robot based on sociological interaction analysis. In: Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, pp. 1191–1194 (2007)
33. Deng, E., Mutlu, B., Mataric, M.: Embodiment in socially interactive robots. arXiv preprint arXiv:1912.00312 (2019)
34. Shimaya, J., Yoshikawa, Y., Kumazaki, H., Matsumoto, Y., Miyao, M., Ishiguro, H.: Communication support via a tele-operated robot for easier talking: case/laboratory study of individuals with/without autism spectrum disorder. Int. J. Soc. Robot. 11(1), 171–184 (2019). https://doi.org/10.1007/s12369-018-0497-0
35. Edwards, A., Edwards, C., Westerman, D., Spence, P.R.: Initial expectations, interactions, and beyond with social robots. Comput. Hum. Behav. 90, 308–314 (2019)
36. Wykowska, A.: Social robots to test flexibility of human social cognition. Int. J. Soc. Robot. 12(6), 1203–1211 (2020). https://doi.org/10.1007/s12369-020-00674-5
37. Wang, L., Törngren, M., Onori, M.: Current status and advancement of cyber-physical systems in manufacturing. J. Manuf. Syst. 37, 517–527 (2015)
38. Hayashi, K., Shiomi, M., Kanda, T., Hagita, N.: Are robots appropriate for troublesome and communicative tasks in a city environment? IEEE Trans. Auton. Ment. Dev. 4(2), 150–160 (2011)
39. Kumm, A.J., Viljoen, M., de Vries, P.J.: The digital divide in technologies for autism: feasibility considerations for low-and middle-income countries. J. Autism Dev. Disorders, 1–14 (2021). https://doi.org/10.1007/s10803-021-05084-8
40. Bröhl, C., Nelles, J., Brandl, C., Mertens, A., Nitsch, V.: Human–robot collaboration acceptance model: development and comparison for Germany, Japan, China and the USA. Int. J. Soc. Robot. 11(5), 709–726 (2019). https://doi.org/10.1007/s12369-019-00593-0
41. Van Pinxteren, M.M.E., Pluymaekers, M., Lemmink, J.: Human-like communication in conversational agents: a literature review and research agenda. J. Serv. Manag. 31(2), 203–225 (2020). https://doi.org/10.1108/JOSM-06-2019-0175
42. Yang, Y., Williams, A.B.: Improving human-robot collaboration efficiency and robustness through non-verbal emotional communication. In: Companion of the 2021 ACM/IEEE International Conference on Human-Robot Interaction, pp. 354–356 (2021)
43. Andrist, S., Ruis, A.R., Shaffer, D.W.: A network analytic approach to gaze coordination during a collaborative task. Comput. Hum. Behav. 89, 339–348 (2018)
44. Oertel, C., et al.: Engagement in human-agent interaction: an overview. Front. Robot. AI 7, 92 (2020)
45. Ben-Youssef, A., Clavel, C., Essid, S., Bilac, M., Chamoux, M., Lim, A.: UE-HRI: a new dataset for the study of user engagement in spontaneous human-robot interactions. In: Proceedings of the 19th ACM International Conference on Multimodal Interaction, pp. 464–472 (2017)
46. Chai, J.Y., et al.: Collaborative effort towards common ground in situated human-robot dialogue. In: 2014 9th ACM/IEEE International Conference on Human-Robot Interaction (HRI), pp. 33–40. IEEE (2014)
47. Iio, T., Satake, S., Kanda, T., Hayashi, K., Ferreri, F., Hagita, N.: Human-like guide robot that proactively explains exhibits. Int. J. Soc. Robot. 12(2), 549–566 (2020)
48. Neto, P., Simão, M., Mendes, N., Safeea, M.: Gesture-based human-robot interaction for human assistance in manufacturing. Int. J. Adv. Manuf. Technol. 101(1–4), 119–135 (2018). https://doi.org/10.1007/s00170-018-2788-x
49. Du, G., Chen, M., Liu, C., Zhang, B., Zhang, P.: Online robot teaching with natural human–robot interaction. IEEE Trans. Ind. Electron. 65(12), 9571–9581 (2018)
50. Williams, T., Tran, N., Rands, J., Dantam, N.: Augmented, mixed, and virtual reality enabling of robot deixis. In: Chen, J.Y.C., Fragomeni, G. (eds.) Virtual, Augmented and Mixed Reality: Interaction, Navigation, Visualization, Embodiment, and Simulation, pp. 257–275. Springer, Cham (2018). https://doi.org/10.1007/978-3-319-91581-4_19
51. Kanda, T., Shiomi, M., Miyashita, Z., Ishiguro, H., Hagita, N.: A communication robot in a shopping mall. IEEE Trans. Rob. 26(5), 897–913 (2010)
52. Truong, X.T., Ngo, T.D.: Toward socially aware robot navigation in dynamic and crowded environments: a proactive social motion model. IEEE Trans. Autom. Sci. Eng. 14(4), 1743–1760 (2017)
53. Savino, M.M., Mazza, A.: Kanban-driven parts feeding within a semi-automated O-shaped assembly line: a case study in the automotive industry. Assem. Autom. (2015)
54. Hong, S.W., Schaumann, D., Kalay, Y.E.: Human behavior simulation in architectural design projects: an observational study in an academic course. Comput. Environ. Urban Syst. 60, 1–11 (2016)
55. Clemente, J., Song, W., Valero, M., Li, F., Liy, X.: Indoor person identification and fall detection through non-intrusive floor seismic sensing. In: 2019 IEEE International Conference on Smart Computing (SMARTCOMP), pp. 417–424. IEEE (2019)
56. Wu, C., Tao, B., Wu, H., Gong, Z., Yin, Z.: A UHF RFID-based dynamic object following method for a mobile robot using phase difference information. IEEE Trans. Instrum. Meas. 70, 1–11 (2021)
57. DeGol, J., Bretl, T., Hoiem, D.: Chromatag: a colored marker and fast detection algorithm. In: Proceedings of the IEEE International Conference on Computer Vision, pp. 1472–1481 (2017)
58. Ngo, H.Q.T., Le, V.N., Thien, V.D.N., Nguyen, T.P., Nguyen, H.: Develop the socially human-aware navigation system using dynamic window approach and optimize cost function for autonomous medical robot. Adv. Mech. Eng. 12(12), 1687814020979430 (2020)

Acknowledgements

We acknowledge the support of time and facilities from Ho Chi Minh City University of Technology (HCMUT), VNU-HCM for this study.

© 2021 ICST Institute for Computer Sciences, Social Informatics and Telecommunications Engineering

Ngo, H.Q.T. (2021). Recent Researches on Human-Aware Navigation for Autonomous System in the Dynamic Environment: An International Survey. In: Cong Vinh, P., Rakib, A. (eds) Context-Aware Systems and Applications. ICCASA 2021. Lecture Notes of the Institute for Computer Sciences, Social Informatics and Telecommunications Engineering, vol 409. Springer, Cham. https://doi.org/10.1007/978-3-030-93179-7_21

DOI: https://doi.org/10.1007/978-3-030-93179-7_21

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-93178-0

Online ISBN: 978-3-030-93179-7

eBook Packages: Computer Science (R0)