Abstract

With the explosive growth of videos on the internet, video-text retrieval is receiving increasing attention. Most existing approaches map videos and texts into a shared latent vector space and then measure their similarities. However, for video encoding, most methods ignore the interactions among frames in a video. In addition, many works obtain features of various aspects but lack a proper module to fuse them. They use simple concatenation, a gate unit, or average pooling, which may not fully exploit the interactions among different features. To solve these problems, we propose the Multi-Interaction Model (MIM). Concretely, we propose a multi-scale interaction module to exploit interactions among frames. In addition, a fusion module combines representations from different branches by encoding them into various subspaces and capturing interactions among them. Furthermore, to learn more discriminative representations, we propose an improved loss function together with a new mining strategy that selectively retains informative pairs. Extensive experiments conducted on the MSR-VTT, TGIF, and VATEX datasets demonstrate the effectiveness of the proposed video-text retrieval model.

This work was supported in part by the National Innovation 2030 Major S&T Project of China under Grant 2020AAA0104203, and in part by the Natural Science Foundation of China under Grant 62006007.

1 Introduction

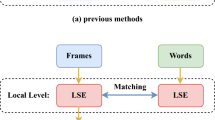

Since natural language texts contain richer content than keywords, video retrieval with natural language queries has received more attention. Usually, both texts and videos are projected into a latent space via different methods, which still have some limitations. First, most methods do not sufficiently exploit inter-frame interactions. HGR [1] uses a weighted sum to obtain video embeddings and does not explore further inter-frame interactions. Second, many works obtain features of various aspects but fuse them with simple methods. CE [5] fuses the results of multiple experts by average pooling and a gate unit. Third, most loss functions for video retrieval are not flexible enough. The hinge-based triplet ranking loss [7,8,9] treats all samples equally, ignoring the effect of different samples on optimization. Moreover, most loss functions either focus on the hardest negative pair or average all negative pairs [10, 12]. The former may leave the model sensitive to outliers, while the latter introduces considerable redundancy.

To address the above limitations, we propose the Multi-Interaction Model (MIM). First, we propose a multi-scale inter-frame interaction module (MSIFI) to encode videos. It is implemented as a convolutional module that regards each frame feature as a channel and performs 1-D convolution along the feature axis. Through MSIFI, each element of the output embedding depends on all elements of the input. Second, a fusion method is designed to merge features from the MSIFI, bi-GRU, and global branches. It maps the outputs of MSIFI and bi-GRU into different subspaces, where features from all subspaces interact with each other. The result is then combined with the global features via an adaptive gate unit. Third, we propose an improved loss function. It assigns a weight to each pair with non-linear functions whose values change with the similarity score. Pairs whose similarity scores are far from the optimum get larger weights and converge faster. Moreover, an adaptive mining strategy is designed to retain informative samples with different weights. The main contributions of this work are as follows:

- To fully exploit interactions among frames at multiple scales, we propose a novel MSIFI module. It utilizes a convolution operation to learn more accurate and significant information from multi-scale interactions.
- We design a novel fusion module to merge different features. Through sufficient interactions among features from multiple latent subspaces, we integrate features of various aspects and get an accurate video representation.
- Considering the influence of different samples on optimization, we propose an improved loss with a new mining strategy.
- Extensive experiments on several datasets validate the effectiveness of MIM.

2 Related Work

Frame Aggregations for Video-Text Retrieval. HGR [1] decomposes videos to match with texts at different levels, and JSFusion [4] encodes all frames of videos with texts and directly predicts the video-text similarities. Neither explores further inter-frame interactions.

Fusion Methods for Video-Text Retrieval. Dual Encoding [9] concatenates the results of multiple encoders. Howto100m [6] aggregates different features by max pooling and concatenation. CE [5] aggregates various information with a gate unit and average pooling.

Loss Functions for Video-Text Retrieval. Most methods [7,8,9] adopt the hinge-based triplet ranking loss or the bi-directional max-margin ranking loss [5, 6]. However, they treat all samples equally. Circle loss [12] assigns weights to different pairs with a linear function, and Polynomial Loss [10] either considers only the hardest negative sample or averages all negative samples; neither is flexible enough.

Fig. 1. The architecture of MIM. The video encoder has three branches. The text encoder contains a multi-dimensional attention module. The MSIFI module captures multi-scale interactions among frames. The fusion module merges the features of the three branches. N is the number of video frames, and the dimension of the features is kept unchanged by proper padding. Details are in Sect. 3. \(\textit{WS}\) denotes weighted sum and \(\odot \) is the Hadamard product.

3 Methodology

Given a video v and a text t, our model encodes them into fixed d-dimensional vectors in a common space. We use the features extracted by pre-trained CNNs [19,20,21] and BERT [11]. As illustrated in Fig. 1, the video encoder has three branches, whose outputs are denoted as \(\phi _1(v)\), \(\phi _2(v)\), \(\phi _3(v)\). They are then integrated into \(\zeta (v)\) by the fusion module. The text encoder processes text features with a multi-dimensional attention mechanism to obtain \(\psi (t)\).

3.1 Multi-scale Inter-frame Interactions (MSIFI) Branch

As shown in the upper-left part of Fig. 1, a video is projected into a matrix \(I\in \mathbb {R}^{N\times d}\) by pre-trained CNNs. I is the input of MSIFI and N is the number of frames. Specifically, each element of a frame feature corresponds to a channel of the last layer in the pre-trained CNNs. We treat each frame as one channel of MSIFI and perform the convolution along the feature axis. This effectively combines different channels of the pre-trained CNNs as our convolutional kernels slide. As the number of layers increases, the receptive field of each layer is enlarged, and it completely covers I in the last layer. In this way, we achieve multi-scale inter-frame interactions and merge significant information from all frames. The results are aggregated into \(\phi _1(v)\in \mathbb {R}^{d}\) by max pooling, retaining the most informative features. Each element of \(\phi _1(v)\) is derived from the interactions among all frames.
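The frames-as-channels idea can be illustrated with a minimal pure-Python sketch. It is not the authors' implementation: the number of hidden channels, the ReLU activations, the random weights, and max pooling over the channel axis are all assumptions made here for illustration, and the names `conv1d_same` and `msifi_sketch` are hypothetical. Only the kernel sizes follow the values reported in Sect. 4.1.

```python
import random


def conv1d_same(x, weight, bias):
    """1-D convolution along the feature axis with zero padding ("same" length).

    x:      list of C_in rows (one row per channel, i.e. per frame), each of length d
    weight: weight[o][c][k] for output channel o, input channel c, tap k (odd kernel size)
    bias:   one value per output channel
    """
    c_in, d = len(x), len(x[0])
    ksize = len(weight[0][0])
    pad = ksize // 2
    out = []
    for o in range(len(weight)):
        row = []
        for pos in range(d):
            acc = bias[o]
            for c in range(c_in):          # every output value mixes ALL frames
                for k in range(ksize):
                    j = pos + k - pad
                    if 0 <= j < d:         # positions outside the axis count as zeros
                        acc += weight[o][c][k] * x[c][j]
            row.append(acc)
        out.append(row)
    return out


def relu(x):
    return [[max(v, 0.0) for v in row] for row in x]


def msifi_sketch(frames, kernel_sizes=(3, 5, 5, 7, 9), hidden_channels=4, seed=0):
    """Stack of 1-D convolutions over an N x d frame matrix, then max pooling.

    ASSUMPTIONS (not stated in the source): hidden_channels, ReLU, random
    initial weights, and max pooling taken over the channel axis.
    """
    rng = random.Random(seed)
    x = frames
    for ksize in kernel_sizes:
        c_in = len(x)
        weight = [[[rng.uniform(-0.1, 0.1) for _ in range(ksize)]
                   for _ in range(c_in)]
                  for _ in range(hidden_channels)]
        x = relu(conv1d_same(x, weight, [0.0] * hidden_channels))
    d = len(x[0])
    return [max(row[q] for row in x) for q in range(d)]  # d-dimensional embedding
```

Because the frames are the input channels, inter-frame mixing already happens in the first layer; the deeper layers with larger kernels widen the span along the feature axis.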

3.2 Temporal Branch and Global Branch

Since temporal information plays an important role in video encoding, we employ the bi-GRU network to capture temporal information. The input is \(I\in \mathbb {R}^{N\times d}\) and the output is aggregated into \(\phi _2(v)\in \mathbb {R}^{d}\) by max pooling.

To obtain a more comprehensive video embedding, we also extract the global features. As the frames in a video differ in significance, we assign weights to them accordingly. Each frame \(v_i\in \mathbb {R}^{d}\) is mapped into a score \(\tau _i\in \mathbb {R}\) by an FC layer. The global embedding of the video is the weighted sum of all frames:

\[\phi _3(v)=\sum _{i=1}^{N}\gamma _{i}v_i,\]

where \(\gamma _{i}\in \mathbb {R}\) is the weight of the i-th frame, derived from the scores \(\tau _i\), and \(\phi _3(v)\) represents relatively primitive video information.
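A minimal sketch of the global branch follows. The source states only that each frame is scored by an FC layer and that the embedding is a weighted sum of the frames; the softmax used here to turn the scores into weights is an assumption, and `global_embedding` is a hypothetical name.

```python
import math


def global_embedding(frames, fc_weight, fc_bias=0.0):
    """Weighted sum of frame features.

    frames:    N frame vectors of length d
    fc_weight: d weights of a single-output fully connected layer
    """
    # tau_i: one scalar score per frame
    taus = [sum(w * x for w, x in zip(fc_weight, frame)) + fc_bias
            for frame in frames]
    # ASSUMPTION: scores are normalised into weights gamma_i with a softmax
    m = max(taus)
    exps = [math.exp(t - m) for t in taus]
    total = sum(exps)
    gammas = [e / total for e in exps]
    d = len(frames[0])
    phi3 = [sum(g * frame[q] for g, frame in zip(gammas, frames))
            for q in range(d)]
    return phi3, gammas
```

With all-zero FC weights every frame receives the same weight, so the result reduces to plain average pooling.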

Fig. 2. Visualization of the attention of different videos to the K subspaces. Each row denotes the attention of a subspace, and every K rows correspond to a video. We set K = 3. Semantically similar videos have similar attention. The contents of the first two and the last two videos are different, so they have different attention. This indicates that different subspaces represent different aspects of video features.

3.3 Fusion Module

To fuse information from the three branches, we conduct another kind of interaction between \(\phi _1(v)\) and \(\phi _2(v)\) and then merge the result with \(\phi _3(v)\). As illustrated in the lower-right part of Fig. 1, we first map \(\phi _1(v)\) and \(\phi _2(v)\) into K subspaces respectively. They are denoted as \(\{\boldsymbol{h}^{(k)}\}\) and \(\{\boldsymbol{e}^{(k)}\}\), where k represents the k-th subspace. Different subspaces represent different aspects of video features. Figure 2 shows the representations of several videos in K subspaces. Semantically similar videos pay similar attention to certain subspaces, and unrelated videos have different dependencies on each subspace. After that, the representations from all subspaces are aggregated by weighted sum to obtain \(\mathbf {z_1}\in \mathbb {R}^{d}\) and \(\mathbf {z_2}\in \mathbb {R}^{d}\). They are fused into \(\xi (v)\) by Hadamard product. The q-th element of \(\xi (v)\) is as follows, where \(\alpha ^{(i)}\in \mathbb {R}\) and \(\beta ^{(j)}\in \mathbb {R}\) are trainable parameters:

\[\xi (v)_q=\Big (\sum _{i=1}^{K}\alpha ^{(i)}h^{(i)}_q\Big )\Big (\sum _{j=1}^{K}\beta ^{(j)}e^{(j)}_q\Big )=\sum _{i=1}^{K}\sum _{j=1}^{K}\alpha ^{(i)}\beta ^{(j)}h^{(i)}_q e^{(j)}_q.\]

It can be seen that the representation from each subspace interacts with the representations from all subspaces of the other branch. As \(\phi _3(v)\) contains global information, an adaptive fusion gate is used to mix \(\xi (v)\) and \(\phi _3(v)\) into \(\zeta (v)\in \mathbb {R}^{d}\):

where \(\mathbf {\lambda }\in \mathbb {R}^{d}\) denotes the gating weight, \(\mathbf {FC_1}\) represents a fully connected layer and \(\sigma \) is the sigmoid function.
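The fusion steps of this section can be sketched in plain Python as below. The subspace projections, the weighted sums, and the Hadamard product follow the description in the text. The exact form of the gate is an assumption: the sketch uses the common convex combination, with the gating weight computed by a fully connected layer over the concatenation of \(\xi (v)\) and \(\phi _3(v)\), which the source does not confirm. All function names are hypothetical.

```python
import math


def linear(x, weight, bias):
    """Fully connected layer: weight is a list of rows, one per output element."""
    return [sum(w * v for w, v in zip(row, x)) + b for row, b in zip(weight, bias)]


def weighted_sum(vectors, coeffs):
    d = len(vectors[0])
    return [sum(c * vec[q] for c, vec in zip(coeffs, vectors)) for q in range(d)]


def fuse(phi1, phi2, phi3, proj_h, proj_e, alpha, beta, gate_w, gate_b):
    """proj_h / proj_e: K (weight, bias) pairs, one projection per subspace."""
    h = [linear(phi1, w, b) for w, b in proj_h]   # MSIFI branch in K subspaces
    e = [linear(phi2, w, b) for w, b in proj_e]   # bi-GRU branch in K subspaces
    z1 = weighted_sum(h, alpha)
    z2 = weighted_sum(e, beta)
    xi = [a * b for a, b in zip(z1, z2)]          # Hadamard product
    # ASSUMED gate form: lambda = sigmoid(FC_1([xi; phi3])),
    # zeta = lambda * xi + (1 - lambda) * phi3 (element-wise)
    lam = [1.0 / (1.0 + math.exp(-g)) for g in linear(xi + phi3, gate_w, gate_b)]
    zeta = [l * x + (1.0 - l) * p for l, x, p in zip(lam, xi, phi3)]
    return zeta, xi, h, e
```

Expanding the product of the two weighted sums gives a sum over all K × K subspace pairs, which is why every subspace of one branch interacts with every subspace of the other.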

3.4 Text Encoder with Multi-dimensional Attention

Inspired by MAGP [14], we believe that different dimensions attend to different properties and we adopt the text encoder of MAGP. The difference is that we add up the output of every 2 adjacent layers of BERT, and concatenate the results of 6 groups. Then the multi-dimensional attention module obtains attention weights for every word and aggregates them into a vector \(\psi (t)\in \mathbb {R}^{d}\).
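The layer grouping can be sketched as follows. Six groups of two adjacent layers imply a 12-layer BERT with non-overlapping pairs; that reading, and the choice to concatenate along the feature dimension of each word, are assumptions made here. The function name `group_bert_layers` is hypothetical, and the attention module itself is not shown.

```python
def group_bert_layers(layer_outputs, group_size=2):
    """Sum adjacent layers in groups, then concatenate the group sums per word.

    layer_outputs[l][w] is the feature vector of word w at layer l.
    Returns one vector per word of length (num_layers / group_size) * dim.
    """
    if len(layer_outputs) % group_size != 0:
        raise ValueError("number of layers must be divisible by group_size")
    num_words = len(layer_outputs[0])
    word_features = [[] for _ in range(num_words)]
    for start in range(0, len(layer_outputs), group_size):
        group = layer_outputs[start:start + group_size]
        for w in range(num_words):
            # element-wise sum over the layers in this group
            summed = [sum(vals) for vals in zip(*(layer[w] for layer in group))]
            word_features[w].extend(summed)   # ASSUMPTION: concatenate on the feature axis
    return word_features
```

With 12 layers of dimension 768 this would yield a 6 × 768 = 4608-dimensional feature per word before the attention module.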

3.5 Video-Text Matching

The cosine similarity of \(\zeta (v)\) and \(\psi (t)\) is their similarity score: \( s_{i,j}=\frac{\zeta (v)_i^{T}\psi (t)_j}{||\zeta (v)_i||||\psi (t)_j||}.\) Here \(s_{i,i}\) is the score of a positive pair and \(s_{i,j}\) with \(i \ne j\) is the score of a negative pair. An adaptive mining strategy is used to retain informative pairs: we select and assign weights to informative pairs while discarding the others. All negative samples are sorted by similarity score, so that harder samples rank higher. We then keep the top \(\frac{U}{r}\) samples, assign weights to them, and aggregate them into the negative-pair representative \(s_{i,neg}\) for the i-th query, where r is a hyper-parameter and U is the batch size.
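The scoring and selection steps can be sketched as below. The cosine similarity and the top-\(\frac{U}{r}\) selection follow the text; rounding \(\frac{U}{r}\) down and keeping at least one negative are assumptions, and the weighting and aggregation into \(s_{i,neg}\) are omitted because their form is not given here. The function names are hypothetical.

```python
import math


def cosine(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def similarity_matrix(video_embs, text_embs):
    """sim[i][j] is the cosine similarity between video i and text j."""
    return [[cosine(v, t) for t in text_embs] for v in video_embs]


def mine_negatives(sim, i, r):
    """Return the hardest U / r negative scores for query i, hardest first."""
    batch_size = len(sim)                      # U in the text
    keep = max(1, batch_size // r)             # ASSUMPTION: floor, at least one
    negatives = sorted((sim[i][j] for j in range(batch_size) if j != i),
                       reverse=True)           # higher similarity = harder negative
    return negatives[:keep]
```

For example, with the reported batch size of 64 and r = 20, this rounding keeps the 3 hardest negatives per query.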

Our loss function is as follows, where \(\mu _{n}\) and \(\mu _{p}\) are the weight functions of negative and positive pairs, \(\mathrm{\Delta }\) is the margin, \(\left[ \cdot \right] _{+}=\max (\cdot ,0)\), and a, \(b_0\) and \(b_1\) are hyper-parameters.

The curves of the weight functions and their derivatives are shown in Fig. 3. Our loss function has the following property. When the similarity score of a pair is far from its optimum, the pair is more informative, and both the value and the derivative of its weight function are larger. The pair therefore gets a bigger weight in the loss function and is updated at a faster pace, and vice versa.

4 Experiments

4.1 Experimental Settings

Datasets and Metrics. We conduct experiments on MSR-VTT [15], VATEX [16], and TGIF [17]. We use the official partition of MSR-VTT. For VATEX and TGIF, we follow the experimental setup of HGR [1]. The performance is evaluated with common retrieval metrics, namely R@K (Recall at rank K), MedR (Median Rank), MnR (Mean Rank), and rsum (the sum of all recall scores).
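These metrics can be computed from a query-by-candidate similarity matrix as in the following sketch. It assumes that the ground-truth candidate of query i sits in column i and that K takes the commonly used values 1, 5, and 10; neither detail is stated in this section. The function names are hypothetical.

```python
from statistics import median


def ranks_from_similarity(sim):
    """1-based rank of the ground-truth candidate (column i) for each query i."""
    ranks = []
    for i, row in enumerate(sim):
        better = sum(1 for j, s in enumerate(row) if j != i and s > row[i])
        ranks.append(1 + better)
    return ranks


def retrieval_metrics(ranks, ks=(1, 5, 10)):
    """R@K in percent, median rank, and mean rank."""
    n = len(ranks)
    metrics = {f"R@{k}": 100.0 * sum(r <= k for r in ranks) / n for k in ks}
    metrics["MedR"] = median(ranks)
    metrics["MnR"] = sum(ranks) / n
    return metrics


def rsum(*metric_dicts):
    """Sum of all recall scores, e.g. over both retrieval directions."""
    return sum(value for m in metric_dicts
               for name, value in m.items() if name.startswith("R@"))
```

Higher R@K and rsum are better, while lower MedR and MnR are better. Passing the metric dictionaries of both text-to-video and video-to-text retrieval to `rsum` gives a single summary number.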

Implementation Details. For MSR-VTT, the visual features are extracted by ResNet-152 and ResNeXt-101 pre-trained on ImageNet [9]. For TGIF and VATEX, we use the pre-trained ResNet-152 visual feature and the officially provided I3D [19] visual feature respectively. The MSIFI module has 5 convolutional layers with kernel sizes 3, 5, 5, 7, and 9. The number of subspaces K is 3. The dimension d is 4096. For the loss function, we choose hyper-parameters by grid search. We set \(r \!=\!20\), \(a\!=\!0.37\), \(\mathrm{\Delta }\!=0.8\), \(b_0\!=37\), and \(b_1\!=0.5\). The model is trained for 20 epochs using the Adam optimizer [18] with a batch size of 64 and a learning rate of 1e−4.

4.2 Comparisons with State-of-the-Arts (SOTAs)

As shown in Table 1, MIM achieves the highest rsum on all datasets, demonstrating its advantages. Specifically, MIM outperforms MAGP. Since the two share the same text encoder, this indicates that our video encoder is more effective. As the features of VATEX are not frame-level features, it is hard to implement inter-frame interactions as sufficiently as on MSR-VTT or TGIF, and our performance on VATEX degrades slightly. Nevertheless, our rsum is still the highest, which supports the benefit of our fusion module and loss function.

4.3 Ablation Studies

We conduct ablation studies on MSR-VTT, and the results are displayed in Table 2.

Effectiveness of MSIFI. We remove MSIFI and compare it with a Transformer [3]. To maintain a similar number of parameters, we use a 1-layer Transformer with 4096 hidden dimensions and 8 attention heads. The results show that MSIFI is effective.

Effectiveness of Fusion Module. We replace the fusion module with a gate unit and with concatenation, respectively. The rsum decreases by 9.4 and 23.5, which indicates that our fusion strategy integrates different features more effectively.

Effectiveness of Loss Function. We compare our loss function with other loss functions and replace the mining strategy with hard mining and average operation. Results confirm the superiority of our loss function and mining strategy.

5 Conclusions

This paper introduces a multi-interaction model for video-text retrieval, with an MSIFI branch to capture multi-scale interactions among video frames and a fusion method to exploit complementary information across different video features. Moreover, a loss function and a mining strategy are proposed. Extensive experiments show the effectiveness of this approach.

References

1. Chen, S., Zhao, Y., Jin, Q., et al.: Fine-grained video-text retrieval with hierarchical graph reasoning. In: CVPR, pp. 10638–10647 (2020)

2. Song, Y., Soleymani, M.: Polysemous visual-semantic embedding for cross-modal retrieval. arXiv preprint arXiv:1906.04402 (2019)

3. Vaswani, A., et al.: Attention is all you need. In: NIPS, pp. 5998–6008 (2017)

4. Yu, Y., Kim, J., Kim, G.: A joint sequence fusion model for video question answering and retrieval. In: ECCV, pp. 471–487 (2018)

5. Liu, Y., et al.: Use what you have: video retrieval using representations from collaborative experts. In: BMVC (2019)

6. Miech, A., Zhukov, D., et al.: HowTo100M: learning a text-video embedding by watching hundred million narrated video clips. In: ICCV, pp. 2630–2640 (2019)

7. Lokoč, J., et al.: A W2VV++ case study with automated and interactive text-to-video retrieval. In: MM, pp. 2553–2561 (2020)

8. Faghri, F., et al.: VSE++: improving visual-semantic embeddings with hard negatives. In: BMVC (2018)

9. Dong, J., et al.: Dual encoding for video retrieval by text. In: TPAMI (2021)

10. Wei, J., et al.: Universal weighting metric learning for cross-modal matching. In: CVPR, pp. 13005–13014 (2020)

11. Devlin, J., et al.: BERT: pre-training of deep bidirectional transformers for language understanding. In: NAACL-HLT, pp. 4171–4186 (2019)

12. Sun, Y., et al.: Circle loss: a unified perspective of pair similarity optimization. In: CVPR, pp. 6398–6407 (2020)

13. Feng, F., et al.: Cross-modal retrieval with correspondence autoencoder. In: MM, pp. 7–16 (2014)

14. Wu, D., et al.: Multi-dimensional attentive hierarchical graph pooling network for video-text retrieval. In: ICME (2021)

15. Xu, J., et al.: MSR-VTT: a large video description dataset for bridging video and language. In: CVPR, pp. 5288–5296 (2016)

16. Wang, X., et al.: VATEX: a large-scale, high-quality multilingual dataset for video-and-language research. In: CVPR, pp. 4581–4591 (2019)

17. Li, Y., et al.: TGIF: a new dataset and benchmark on animated GIF description. In: CVPR, pp. 4641–4650 (2016)

18. Kingma, D.P., Ba, J.: Adam: a method for stochastic optimization. In: ICLR (2015)

19. Carreira, J., Zisserman, A.: Quo vadis, action recognition? A new model and the Kinetics dataset. In: CVPR, pp. 6299–6308 (2017)

20. Xie, S., et al.: Aggregated residual transformations for deep neural networks. In: CVPR, pp. 1492–1500 (2017)

21. He, K., et al.: Deep residual learning for image recognition. In: CVPR, pp. 770–778 (2016)

© 2021 Springer Nature Switzerland AG

About this paper

Cite this paper

Li, J., Wu, D., Zhu, Y., Bai, Z. (2021). A Multi-interaction Model with Cross-Branch Feature Fusion for Video-Text Retrieval. In: Mantoro, T., Lee, M., Ayu, M.A., Wong, K.W., Hidayanto, A.N. (eds) Neural Information Processing. ICONIP 2021. Communications in Computer and Information Science, vol 1517. Springer, Cham. https://doi.org/10.1007/978-3-030-92310-5_55

DOI: https://doi.org/10.1007/978-3-030-92310-5_55

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-92309-9

Online ISBN: 978-3-030-92310-5

eBook Packages: Computer Science, Computer Science (R0)