Abstract

Randomized experiments is a key part of product development in the tech industry. It is often necessary to run programs of exclusive experiments, i.e., groups of experiments that cannot be run on the same units during the same time. These programs imply restrictions on the random sampling, as units that are currently in an experiment cannot be sampled into a new one. Moreover, to technically enable this type of coordination with large populations, the units in the population are often grouped into ‘buckets’ and sampling is then performed on the bucket level. This paper investigates statistical implications of both the restricted sampling and the bucket-level sampling. The contribution of this paper is threefold: First, bucket sampling is connected to the existing literature on randomized experiments in complex sampling designs which enables establishing properties of the difference-in-means estimator of the average treatment effect. These properties are needed for inference to the population under random sampling of buckets. Second, the bias introduced by restricting the sampling as imposed by programs of exclusive experiments, is derived. Finally, practical recommendations on how to empirically evaluate and handle this bias is discussed together with simulations that support the theoretical findings .

M. Schultzberg—The authors thanks Andreas Born, Claire Detilleux, Brian St Thomas, Michael Stein, and the colleagues in the experimentation platform team for helpful feedback and suggestions for this paper.

Access this chapter

Tax calculation will be finalised at checkout

Purchases are for personal use only

Similar content being viewed by others

Notes

- 1.

P-hacking still exists though, since it is easier to put a feature into production if there is a ‘significant’ experiment results to back it up.

- 2.

Assuming even splits into treatment and control which is often selected for efficiency reasons.

- 3.

Assuming a program with many experiments over time.

- 4.

The peaks are artefacts form that the \(\delta \)’s are multiplicatives of the lengths of the experiments.

References

Amrhein, V., Greenland, S., McShane, B.: Scientists rise up against statistical significance. Nature 567(7748), 305–307 (2019)

Dai, B., Ding, S., Wahba, G.: Multivariate Bernoulli distribution. Bernoulli 19(4), 1465–1483 (2013)

Dawid, A.P.: Conditional independence in statistical theory. J. R. Stat. Soc. Ser. B (Methodol.) 41(1), 1–31 (1979)

Fisher, R.A.: The Design of Experiments. Oliver and Boyd, Edinburgh (1935)

Hern, A.: Why Google has 200m reasons to put engineers over designers, February 2014

Horvitz, D.G., Thompson, D.J.: A generalization of sampling without replacement from a finite universe. J. Am. Stat. Assoc. 47(260), 663–685 (1952)

Ioannidis, J.P.A.: Why most published research findings are false. PLoS Med. 2(8), e124–e124 (2005)

Johansson, P., Schultzberg, M.: Rerandomization strategies for balancing covariates using pre-experimental longitudinal data. J. Comput. Graph. Stat. 29(4), 798–813 (2020)

Kish, L., Frankel, M.R.: Inference from complex samples. J. R. Stat. Soc. Ser. B (Methodol.) 36(1), 1–22 (1974)

Knuth, D.E.: The Art of Computer Programming: Volume 3: Sorting and Searching. Pearson Education, London (1998)

Kohavi, R., Thomke, S.: The surprising power of online experiments. Harvard Bus. Rev. 95, 74–82 (2017)

Pradhan, B.K.: On efficiency of cluster sampling on sampling on two occasions. Statistica 64(1), 183–191 (2007)

Lohr, S.L.: Sampling: Design and Analysis. Chapman & Hall/CRC Texts in Statistical Science. CRC Press (2019)

Neyman, J.: On the two different aspects of the representative method: the method of stratified sampling and the method of purposive selection. J. R. Stat. Soc. 97(4), 558 (1934)

Neyman, J.: On the application of probability theory to agricultural experiments. Essay on principles. Section 9. Stat. Sci. (1990), 5(4), 465–472 (1923)

Nordin, M., Schultzberg, M.: Properties of restricted randomization with implications for experimental design. arXiv preprint arXiv:2006.14888 (2020)

Rubin, D.B.: Inference using potential outcomes : design, modeling, decisions. J. Am. Stat. Assoc. 100(469), 322–331 (2005)

Student (William Sealy Gosset): The probable error of a mean. Biometrika, 6(1), 1–25 (1908)

Sukhatme, P.V., Sukhatme, S.: Sampling Theory of Surveys with Applications. Iowa State University Press, Iowa City (1984)

Tang, D., Agarwal, A., O’Brien, D., Meyer, M.: Overlapping experiment infrastructure: more, better, faster experimentation. In: Proceedings of the 16th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, pp. 17–26 (2010)

van den Brakel, J., Renssen, R.: Design and analysis of experiments embedded in sample surveys. J. Off. Stat. 14(3), 277–295 (1998)

van den Brakel, J., Renssen, R.: Analysis of experiments embedded in complex sampling designs. Surv. Methodol. 31(1), 23–40 (2005)

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Appendices

Appendix

All theorems are repeated here for convenience.

1.1 6.1 Complete Random Sampling of Buckets

Theorem 4

Under random sampling of equally sized buckets, with random treatment assignment into two equally sized groups, the sample difference-in-means estimator is an unbiased estimator of the ATE. i.e.

where expectation is taken over the design space of random samples and treatment allocations.

Proof

Under random sampling of equally sized buckets, it follows that \(\pi _i=\frac{N_S}{N}\,\,\forall \,\, i=1,...,N\), which implies that the Horvitz-Thompson estimator simplifies as

which is simply the sample means of the groups. Unbiasedness follows directly from the results in [6], but we give an alternative proof here to build intuition. We drop the superscript t on the ATE to easy notation. Denote the difference-in-means estimator of the average treatment effect

Enumerate all possible sample under random sampling of buckets with \(\mathbf {S}_s\) where \(s=1,...,\text {card}(\mathcal {S}_B)\). Moreover, enumerate all possible treatment assignments over random treatment assignments by \(W^j\) where \(j=1,...,\text {card}(\mathcal {W})\). This implies that we can write one single estimate as

The expected value of \(\widehat{ATE}_B\), where the subscript B indicates random sampling of buckets from \(\mathcal {B}\), is given by

where the last step follows from equally sized buckets. Due to the symmetry of the random sampling of buckets and the equal bucket size, each bucket (and thereby unit) will be in equally many samples. Moreover, in each sample, each unit is in the treatment and control groups equally many times, respectively, due to the mirror property of randomization distributions [8, 16]. This implies that we are simply adding and subtracting the value of each unit several times. It follows that

The number of time that each unit will be in the control and the treatment group across all possible samples and treatment assignments is given by

which implies

We note that

which gives the final expression

Which is in line with [6] as expected. \(\blacksquare \)

1.2 Restricted Random Sampling of Buckets

Lemma 2

For a \(\delta _{\,\,\perp \!\!\! \perp }\) fulfilling Condition 1, the the difference in means estimator \(\widehat{ATE^{\tilde{\mathcal {B}}_t}_{t-\delta _{\,\,\perp \!\!\! \perp }}}\) is an unbiased estimator of \(ATE_{t-\delta _{\,\,\perp \!\!\! \perp }}\), i.e.,

Proof

Since a program of exclusive experiment is expected to change user behaviour and therefore reactions to future changes, it generally holds that

However, it is also the case that the only dependency between the potential outcomes and the set of available buckets is captured by the history of the available buckets. The samples are random from the set of available buckets – if different subsets have different experiences that affects their behaviour such that the ATE changes, the dependency between the sample and these changes are completely described by the history of \(\tilde{\mathcal {B}}_t\). It follows from Condition 1 that

In other words, in relation to the potential outcomes at time \(t-\delta _{\,\,\perp \!\!\! \perp }\), the subset \(\tilde{\mathcal {B}}_t\) is a random subset from \(\mathcal {B}\). One way to understand this is that from a randomization perspective it is equivalent to 1. randomly selecting a subset at time t, randomly sample from that subset, and finally randomly assign the treatment, and, 2. semi-randomly selecting subsets in steps until the subset is independent from the starting set (at step \(\delta _{\,\,\perp \!\!\! \perp }\)), and then sample and assign treatment randomly. The semi-random subsetting in \(\delta _{\,\,\perp \!\!\! \perp }\) steps is essentially an ineffective method for randomly drawing a subset. The important practical difference between 1 and 2 is that in a program of exclusive experiments, the potential outcomes are expected to change as a function of the steps of subsetting in 2. For this reason, performing 1 at time t yields a subset that is independent of the outcome at time t and all other time periods, whereas 2 yields a subset that is independent in relation to the potential outcomes only at time \(t-\delta _{\,\,\perp \!\!\! \perp }\) and before that.

Since \(\tilde{\mathcal {B}}_t\) is a random subset of buckets in relation to the potential outcomes at time \(t-\delta _{\,\,\perp \!\!\! \perp }\) under Condition 1, it only remains to prove that the difference-in-means estimator is an unbiased estimator of \(ATE_{t-\delta _{\,\,\perp \!\!\! \perp }}\) under random ‘sampling’ of a subset, random sampling within the subset and random treatment assignment within the sample to prove Lemma 1. Enumerate the possible subsets,\(\tilde{\mathcal {B}}_t^b\), of a given size as \(b=1,...,\left( {\begin{array}{c}B\\ \text {card}(\tilde{\mathcal {B}}_t)\end{array}}\right) \), where each subset is equally probable in relation to the potential outcomes at time \(t-\delta _{\,\,\perp \!\!\! \perp }\). In each subset, enumerate all possible samples \(\mathbf {S}_s\) as \(s=1,...,\left( {\begin{array}{c}\text {card}(\tilde{\mathcal {B}}_t)\\ \frac{N_S}{N_B}\end{array}}\right) \). This implies that using results from the proof of Theorem 1

where, similar to the proof of Theorem 1, each unit is included in equally many subsets and samples, and within each sample each unit is included in the treatment group and the control group equally many times. It follows that

where

Note that

Putting this together it follows that

Theorem 5

For a \(\delta _{\,\,\perp \!\!\! \perp }\) fulfilling condition 1, the bias in the difference in means estimator caused by sampling from the restricted set of the population of buckets given by \(\tilde{\mathcal {B}}\) is given by

Proof

Note that from Definition 1 it follows that

By Lemma 1 the difference-in-means estimator under random sampling of bucket from \(\tilde{\mathcal {B}}_t\) is an unbiased estimator of \(ATE_{t-\delta _{\,\,\perp \!\!\! \perp }}\) which implies that \(ATE_{t-\delta _{\,\,\perp \!\!\! \perp }}^{ \tilde{\mathcal {B}}_t} = ATE_{t-\delta _{\,\,\perp \!\!\! \perp }} \) which directly gives

Simulations

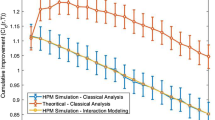

Here the simulation from Sect. 5.2 is repeated but with 100 buckets instead of 10000. All simulations can be replicated using the Julia code in the supplementary files. The parameters are repeated in Table 3 for convenience. Fig. 8 and 9 display the simulation results. The patterns are the same as in Sect. 5.2, but the biases are generally larger for the same settings when the number of buckets is smaller. This is expected, as the heterogeneity is ‘fixed’ between these settings, in the sense that the ATE’s for the first time points are drawn from the same normal distribution. This implies that there are going to be more and more buckets (when the number of buckets increase) with similar values, in turn implying that the heterogeneity between samples will decrease.

The ATE1 bias, bucket availability correlation, and bucket sampling correlation plotted as a function of \(\delta \), for settings 1–2. The parameters for each setting are given in Table 2.

The ATE1 bias, bucket availability correlation, and bucket sampling correlation plotted as a function of \(\delta \), for settings 3–6. The parameters for each setting are given in Table 2.

Rights and permissions

Copyright information

© 2022 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper

Schultzberg, M., Kjellin, O., Rydberg, J. (2022). Statistical Properties of Exclusive and Non-exclusive Online Randomized Experiments Using Bucket Reuse. In: Arai, K. (eds) Proceedings of the Future Technologies Conference (FTC) 2021, Volume 1. FTC 2021. Lecture Notes in Networks and Systems, vol 358. Springer, Cham. https://doi.org/10.1007/978-3-030-89906-6_50

Download citation

DOI: https://doi.org/10.1007/978-3-030-89906-6_50

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-89905-9

Online ISBN: 978-3-030-89906-6

eBook Packages: Intelligent Technologies and RoboticsIntelligent Technologies and Robotics (R0)