Abstract

Link prediction in Knowledge Graph, also called knowledge completion, is a significant problem in graph mining and has many applications for large companies. The more accurate the link prediction results will bring satisfaction, reduce and avoid risks, and commercial benefits. Almost all state-of-the-art models focus on the deep learning approach, especially using convolutional neural networks (CNN). By analysing the strengths and weaknesses of the CNN based models, we proposed a better model to improve the performance of the link prediction task. Specifically, we apply a CNN with specific filters generated through the Hypernetwork architecture. Moreover, we increase the depth of the model more than baseline models to help learn more helpful information. Experimental results show that the proposed model gets better results when compared to CNN-base models.

Access provided by Autonomous University of Puebla. Download conference paper PDF

Similar content being viewed by others

Keywords

1 Introduction

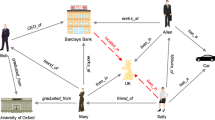

A knowledge graph is a form of knowledge representation that is of great interest to research communities, business and government because of its applicability. In social networks, we can consider vertices as users and edges describing relationships between them. We analyze this graph to make friend suggestions. In the context of the Covid epidemic, place and people are vertices, and edges can be the time and method of movement. We analyze the graph to trace who are at risk of infection. In criminal investigations, it is possible to determine or predict the action association of them. In a nutshell, knowledge representation as graphs along with the methods of analysis will yield valuable applications.

Link prediction is a task that based on observed information of the graph to infer the connections between vertices. In a static graph, the link prediction finds out the missing relations and generally referred to as the graph completion. Meanwhile, in a dynamic graph, link prediction identifies relationships that will appear the next time. In this paper, we only focus on predicting the missing links on a static graph. Approaches to solving this problem are divided into two main groups: supervised learning methods and unsupervised learning methods. Mining rules or calculating the similarity between vertices are algorithms that belong to the unsupervised learning group. Their advantages lie in simplicity and low training time. However, they are not universal on many types of graphs or many kinds of relationships, or governed by subjectivity when making measures. A supervised learning branch usually begins by embedding the graph in latent space with low-dimensional vectors, in which associated vertices tend to lie close together. The strength of the methods is that the feature is automatically learned. Consequently, the results are usually better. The trade-off, nevertheless, is the complexity of the learning model.

Today, deep learning is applied to many different problems such as image processing, natural language processing. Link prediction is also not out of this scope. Although more complex, it easily gets higher results compared to other approaches. With its own weights, the deep learning model can easily remember the information it learned and reflect the hidden distribution of data to predict more relationships that are not trained yet. Thanks to that advantage, we decided to research and improve the link prediction problem based on this field.

In this paper, we:

-

Propose HyperConvKB model based on the Convolutional Neural Network to learn entity and relation embeddings for knowledge graph completion. Our model uses a specific relation-dependent filter to generate the feature map.

-

Evaluate our model’s effectiveness on two benchmark datasets, WN18RR and FB15k-237, in standard metrics such as MR, MRR, Hit@k and compare it to state-of-the-art models. The experiments show that our approach achieves promising results in dataset WN18RR.

2 Related Work

Firstly, we introduce notations used in this paper. Let \(\mathcal {E}\) be a set of entities, \(\mathcal {R}\) be a set of relations. Knowledge graph \(\mathcal {G} \subseteq \mathcal {E} \times \mathcal {R} \times \mathcal {E}\). A triple \((s, r, t) \in \mathcal {G}\) where \(s\), \(r\), \(t\) denotes to source entity, relation, target entity, respectively. The scoring function \(\phi _r(s, t)\) is a measure of the correctness of a triple in the embedding space.

The models to solve the link prediction can be divided into three branches: geometric model, matrix factorization and deep learning model [11]. The geometric-based approaches interpret relation as geometric transformations. Some well-known models in this area are TransE [2] with translation transformation, RotatE [14] with rotation transformation. TransE mines the transitional characteristic, which is the useful intuition on the knowledge graph. This model represents entities and relations into a low-dimensional vector space and assumes that the source entity in this embedding space will be translated by relation vector \(r\). The result of the translation is a new point expected roughly equal to embedded target entity \(t\). However, TransE does not carry one-to-many, many-to-one, many-to-many relations. To solve these problems, there are many models developed such as TransH [17], TransR [7], StransE [9]. These models still use the translation assumption but in a different way. For instance, TransH considers a relation as a hyperplane. After that, a transition operator on its source and target are projected on this hyperplane and expect a transitional vector to connect two projected vectors. Another idea of the geometric model is embedding graphs into more complex spaces such as QuatE [12]. Specifically, QuatE uses quaternion embedding to represent the knowledge graph.

Meanwhile, matrix factorization optimizes the scoring function using bilinear or non-bilinear product between the vectors of source and target entity and relation. DistMult [18], Complex [16] are the models in this branch using a bilinear product. The scoring of these model has the form:

where \(\times \) denotes to matrix product.

However, these models usually suffer from overfitting. Regularization is one of the solutions to solve this problem. Rescal-DURA [19] is one of the models developed from Rescal by using a new regularizer called DUality-induced RegulArizer (DURA) to improve the performance.

The architecture of HypER and ConvKB to generate the feature maps. The HypER model uses specific filters generated by Hypernetwork to apply the CNN with these filters. The ConvKB model concatenates the embedding of source, relation and target as a matrix \(A \in \mathcal {R}^{d \times 3}\) and apply CNN on this matrix. If the convolutional layer only uses one filter [1, 1, −1], ConvKB will become TransE. Then, ConvKB can keep the idea of TransE and go further.

Both geometric and matrix factorization models are often designed with low parameters or insufficient depth to learn more information in the graph. Recently, many researchers have tried to implement the deep neural network for the link prediction problem. A deep neural network has many layers, and every layer can be designed to learn different information. Therefore, it can easily capture the hidden link that does not appear in the graph. Many models in this category, such as ConvKB [10], ConvE [4], HypER [1], utilized Convolutional Neural Network (CNN), one of the networks successfully applied in computer vision. D.Q. Nguyen proposes ConvKB model, which is only interactive in the same dimension of the embedding vector. In ConvKB (illustrated in Fig. 1), source, relation and target \((s, r, t)\) are concatenated without using reshape operator. Accordingly, ConvKB limits the reconstruction 2D structure of the embedding vector. With the input matrix, ConvKB uses \(1 \times 3\) filters for the convolutional layer to create a feature map. In this way, it can keep the transitional characteristic of embedding space. HypER model (shown in Fig. 1) used a specific architecture called Hypernetwork [6] which includes two networks. A network is the main network that behaves like any other typical network. Its task is to learn to map the source to the target. Another network smaller than the primary network, also called Hypernetwork, takes every information about the structure of weights and generates the weights for the primary network. Generally, these models are not deep enough. They only do one layer convolution and one fully connected to calculate the ranking score. In a complex graph, these approaches may not be good because they can not capture enough information.

Based on the synthesis of the strengths and the weaknesses of the previous models in the convolution field, we propose some improvements. Specifically, we update the ConvE by avoiding using the reshape operator because this operator destroys the 2D structure of the embedding vector. Furthermore, we found that the transitional characteristic from ConvKB is good intuition when doing the link prediction task. Hence, we include this characteristic in our model. Primarily, our model uses the Hypernetwork architecture to generate specific filters instead of using global filters. The reason is that the specific filters can capture specific relationships better than global filters.

3 Proposed HyperConvKB Model

This section will introduce our model architecture in detail. Generally, the model is divided into two phases. The first phase is the feature extraction, and the ranking score calculation for triples is the second phase. To do that, we assume two principles: (i) the relationship of a pair of vertices in triple that represents the characteristic of that triple and (ii) the pair of similar vertices can share the information for each other. We also offer the loss function to optimize the model parameters.

Feature Extraction

In deep learning models, the feature extraction process is fundamental, which impacts on the model performance. We realize that the transitional characteristic is still one of the essential ideas to serve the problem. However, as we know, these ideas are not strong enough to solve the relationships in the knowledge graph because they do not handle the one-to-many, many-to-one and many-to-many relationships well. Therefore, we try to extract the characteristic that the transitional characteristic has not solved. We suppose that the information of the relations represents the characteristic of a pair of vertices. That means there is a function to transform \(Feat(s, r, t) = F_r(s, t)\), where Feat(s, r, t) is a feature of triple \((s, r, t)\) and \(F_r\) is a function to extract the characteristic of a pair of vertices based on their relations. We use the convolutional neural network to extract the feature of every dimension of the data point. Hence, it helps to avoid the interaction of different dimensions and keep the transitional characteristic. For the convolutional layer, \(F_r\) is the filter’s weight, and input is the concatenated vector of source and target entity embeddings. Relation r can be concatenated to capture the transitional characteristic better.

Our model architecture. In this example, the embedding size \(d\) is 4, the number of relations is 4, and the number of filters \(k\) is 3. The relation after using a fully connected layer will reshape as a set of filters and apply the convolutional operator over the row of the input matrix. In this example, we do not concatenate the embedding of relation.

We represent the model’s input as a matrix \(X \in \mathbb {R}^{d \times n_{col}}\) by concatenating row by row the embeddings of triple, where \(d\) is the dimensionality of the embedding vector, \(n_row\) is the number of rows of the input matrix. A set of filters with a fixed size, \(1 \times n_{col}\), will apply the convolution operator over the row of the input matrix to generate the feature vector. A non-linear transformation, Hypernetwork, generates the weights of the convolution layer. The Hypernetwork uses the information from relations of triples as input and applies a fully connected layer with the activation function to create a vector with \(k \times n_{col}\) dimensions, where \(k\) is the number of filters in the convolutional layer. This vector will reshape as a \(k \times 1 \times n_{col}\) matrix which is a set of \(k\) matrices with a size of \(1 \times n_{col}\). In which every matrix represents a filter of the convolutional layer.

Ranking Score Calculation

After the first step, we have a feature vector that represents the information of triples. This vector is used to calculate the ranking score for the confidence of this triple. The smaller the triple’s ranking score, the better the confidence. In the knowledge graph, the positive triples are the facts, while negative triples do not exist. Therefore, the positive triples should have small scores and vice versa for the negative triples.

We use the second principle to calculate the ranking score. The main idea is based on the similarity of pairs to infer the missing relationship. We assume that if two pairs of vertices have a similarity, they will share the relationship for each. For example, let a pair of vertices (A, B) have relations (1, 2, 3) and (C, D) have relations (1, 2) as shown in Fig. 3. By somehow, if (A, B) is similar to (C, D), (C, D) will be suggested a relation (3) in future. To learn this rule, the feature vector from the first step is reduced in dimension to the number of relations. Therefore, we represent the feature vector at every dimensional as one information of relation. This process allows two different pairs of vertices to have the same feature vector. We use a fully connected layer with the number of units equal to the number of relations in the graph to extract this information. If this representation vector is a one-hot vector, each dimension of the vector represents the relation that the triple represents. Hence, if there are two different triplets with the same feature vector and one of them already exists in the graph, we will most likely accept the other triples as a missing link in the graph.

The feature vector of this step is used to calculate the ranking score of triples. Although there are many pairs with the same similarity, we only choose the pair with the best ranking score. Formally, the score function follows as Eq. 2.

where \(\omega _r = reshape(tanh(Wr + b))\) is a Hypernetwork to generate weights for the convolutional layer; f and g is non-linear activation. We choose both f and g as rectified linear unit (ReLU) in our model.

To learn the parameters, we design the loss function to satisfy the problem requirements. Suppose that the input consists of two sets: one includes triples that have a link (positive triples) and the other consists of triples that do not have the link (negative triples). Our model will learn to calculate the ranking score so that the positive triples always get a better ranking score than negative triples. In other words, the positive triples will get a ranking score smaller than negative triples. We choose 0 as the boundary of positive triple and negative triple. If positive triples have label 1 and negative triples have label −1, \(score \times label \le 0\). It means that the model calculates a true ranking score. Otherwise, we have an amount of penalty view as the loss of wrong calculation. We propose use the loss function follow as Eq. 3 and Eq. 4

or

where \(x = score \times label\) and \(b \ge 1\). Both loss functions satisfy the condition. Figure 2 shows the end-to-end architecture of our model.

4 Experiments

To evaluate effectiveness, we compare our model with state-of-the-art models on two standard datasets, WN18RR and FB15k-237. We also evaluate the model based on common metrics such as MR, MRR, H@K.

4.1 Datasets

Normally, there are five common datasets used in the link prediction evaluation. They are WN18 [2], WN18RR[4], FB15k [2], FB15k-237 [15] and YAGO [13]. However, we only pay attention to two datasets: WN18RR and FB15k-237. WN18RR and FB15k-237 are correspondingly subsets of WN18 and FB15k. Both WN18 and FB15k have reversible relations that are easy to predict the missing link. We can easily infer the missing link by reverse the source and target when knowing the train triples. It makes the model overestimate and can not evaluate the model performance precisely. Therefore, WN18RR and FB15k-237 was created to solve this problem by removing the inverse relations. WN18RR contains 40943 entities and 11 relations, while FB15k-237 contains 14541 entities and 237 relations. Table 1 summarises the characteristics of these datasets.

4.2 Metrics

Link prediction is a task that finds the missing relation. Given a source entity and a relation, (s, r), we infer t. It is called head prediction. Similarly, a relation and a target entity, (r, t), infer s is called tail prediction. For predicting the relations, the source entity s is replaced by other entities in the graph if it is a head prediction or the target entity t if a tail prediction. After that, the model calculates the ranking score for triples. The smaller the rating score, the better the result. We use “filtered” setting protocol [2] to eliminate its misleading effect. This option does not take any triple that appears in the training set for the validation or test phase. The reason is that, in the training phase, the model has learned these triples. If they happen again in the test set, they donate other triples. It means that they often have a good score. The rank of triple in tail prediction was calculated by the Eq. 5 and rank for head prediction can be computed analogously:

where \(\mathcal {E}\) is the set of entities and \(\mathcal {G}\) is training graph.

We evaluated the model in metrics including mean rank (MR), mean reciprocal (MRR) and Hit at K (H@K). The formula of these metrics gives in Eq. 6, Eq. 7 and Eq. 8. Let Q is a set of rank of correct triples which we want to predict.

Mean rank computes the average rank of a correct triple: the smaller MR, the better result.

It is the average of the inverse of the obtained ranks of the correct triple: the higher MRR, the better result.

It is the ratio of predictions for which the rank is equal or smaller than \(k\).

When \(score(s, r, t) = score(s, r, e)\), some policy will apply to calculate the rank of the correct triple. In this paper, we use the min policy and ordinal policy to evaluate the model. The min policy accepts the different triples to have the same rank if it has the same ranking score. Meanwhile, the ordinal policy ranks triples by their ordinal and does not accept other triples with the same rank.

4.3 Experiment Setup

We implement our model based on source code ConvKB in PyTorch version [10] which implemented on OpenKE framework [21]. The computer includes CPU intel core i7 9700K and GPU RTX 2080Ti running Ubuntu 16.04 LTS. We reuse some results of the authors [3, 10], such as hyperparameter, embedding vectors pre-trained from TransE [3, 10]. In the dataset WN18RR, the embedding dimension of entity and relation is 50, 100, and in FB15K-237, the embedding dimension is 100. We set the dropout ratio as 0 and 0.5. Convolution layer with a filter size of \(1 \times 2\) and \(1 \times 3\), respectively, if the relation was concatenated. The number of filters in the convolutional layer is default 64 with convolution \(stride = 1\). The number of negative sampling is chosen from {1, 2, 5, 10}.

As we mentioned earlier, our model takes input consisting of 2 sets: positive triples and negative triples. However, the training datasets only have positive triples. Hence, we had to generate negative triples manually. We use the Bernoulli trick introduced by [7, 17]. Besides, the Adagrad [5] optimizer is applied with a learning rate equal to 0.01 and Adam [20] with learning rate equal to 0.0001. Regularization is not applied. We train the model up to 300 epochs. After that, we use the best results of H@10 on the validation set to the testing model. Table 2 summaries the hyperparameters used in our model.

Evaluation protocol: Our implementation is based on OpenKE framework [21] and uses two policies (min, ordinal) when evaluating the model.

4.4 Result and Analysis

Table 3 showed the performance of models in the link prediction task. The results use the min policy show that in the WN18RR dataset, our model gets a better result than others at metrics MRR, H@1, H@3, H@10. Specifically, we increase 12% MRR when compared to RotH. Our model also increases H@1 above 10% when compared to Rescal-DURA which is the best model at H@1 in our comparison table. However, we found that the results on FB15k-237 are not good. The FB15k-237 is large and contains data from multiple fields, while our model is not deep enough to capture the relations. As shown in the comparison table, the KBGAT model is still good when doing the link prediction task in FB15k-237. Using the Graph Attention network to capture the graph structure, KBGAT gives better prediction for the multiple domain dataset such as FB15k-237. When using the ordinal policy, although performance has changed and is no longer the best, our model still better than some model also use the Convolution neural network such as ConvE, ConvKB.

Moreover, we also evaluate our model in two other aspects, head prediction and tail prediction. The detailed results are shown in Table 4. In FB15k-237 dataset, there is a quite difference between head and tail prediction. The tail prediction gets better results than the head prediction. That is the reason that reducing the overall performance of our model in this dataset. In contrast, our model achieves a stable result on WN18RR in both head and tail prediction. Therefore, depending on the type of dataset, we should choose a suitable prediction protocol to achieve the best performance.

We also evaluate the influence of hyperparameters to the model’s performance. Because our model has developed from ConvKB, so we suppose that the hyperparameters of our model will be the same as ConvKB. The two main parameters of the model are the exponential factor of the loss function and the number of negative sampling. Result show in Fig. 4. We found that the performance in the FB15k-237 is not stable, it just gets better results when choosing right parameters and it often gets bad results on almost all cases of hyperparameters. While the dataset WN18RR is completely opposite, it easily gets a good result. As we show in Fig. 4, for WN18RR the best hyperparameter is b = 2 (3), number of negative sampling is 5 and b = 1, number of negative sampling is 5 for FB15k-237.

The effectiveness of hyperparameters in our model. The figure on the left is for the WN18RR dataset, and the figure on the right is for the FB15k-237 dataset. In the WN18RR dataset, we easily find hyperparameters to get a better result. With different hyperparameters, the model nearly achieves the same result and gets the best result when b = 2 and negative sampling = 5. Otherwise, in the FB15k-237 dataset, we must choose suitable hyperparameters. If not, the result is awful.

Furthermore, we evaluate the influence of hyperparameters to the model’s performance. Because our model has developed from ConvKB, we apply the hyperparameters similar to ConvKB. The two other parameters are the exponential factor of the loss function and the number of negative sampling. The results are shown in Fig. 4. We found that the performance in the FB15k-237 dataset is not stable. It gets better results when choosing the correct parameters. However, in general, it often gets terrible results in every case of hyperparameters. Oppositely, for the WN18RR dataset, it gets a good result overall.

5 Conclusion

This paper proposed a model for link prediction task using Hypernetwork to generate parameters and applying two helpful principles to design model architecture. The experiments show that our proposed model gets better results in the WN18RR dataset. Specifically, we achieve approximately 10% higher than the state-of-the-art models on the MRR, H@K metrics, except MR. For the FB15k-237 dataset, the performance of the model is not expected. Because of inconsistency, the FB15k-237 dataset causes the learning model could not capture many cases well. It is proved through evaluating the head prediction and tail prediction and the effects of the metadata on the model. In the future, we intend to increase the number of parameters and the depth of the model to help it learn much hidden information.

References

Balažević, I., Allen, C., Hospedales, T.M.: Hypernetwork knowledge graph embeddings. In: Tetko, I., Kurková, V., Karpov, P., Theis, F. (eds.) International Conference on Artificial Neural Networks. LNCS, vol. 11731, pp. 553–565, Springer, Cham (2019). https://doi.org/10.1007/978-3-030-30493-5_52

Bordes, A., Usunier, N., Garcia-Duran, A., Weston, J., Yakhnenko, O.: Translating embeddings for modeling multi-relational data. In: Neural Information Processing Systems (NIPS), pp. 1–9 (2013)

Nguyen, D.Q., Vu, T., Nguyen, T.D., Nguyen, D.Q., Phung, D.: A capsule network based embedding model for knowledge graph completion and search personalization. In: NAACL-HLT, pp. 2180–2189 (2019b)

Dettmers, T., Minervini, P., Stenetorp, P., Riedel, S.: Convolutional 2D knowledge graph embeddings. In: Proceedings of the AAAI Conference on Artificial Intelligence, vol. 32, no. 1 (2018)

Duchi, J., Hazan, E., Singer, Y.: Adaptive subgradient methods for online learning and stochastic optimization. J. Mach. Learn. Res. 12(7) (2011)

Ha, D., Dai, A., Le, Q.V.: Hypernetworks. In: International Conference on Learning Representations (2016)

Lin, Y., Liu, Z., Sun, M., Liu, Y., Zhu, X.: Learning entity and relation embeddings for knowledge graph completion. In: Proceedings of the AAAI Conference on Artificial Intelligence, vol. 29, no. 1 (2015)

Nathani, D., Chauhan, J., Sharma, C., Kaul, M.: Learning attention based embeddings for relation prediction in knowledge graphs. In: ACL, pp. 4710–4723 (2019)

Nguyen, D.Q., Sirts, K., Qu, L., Johnson, M.: STransE: a novel embedding model of entities and relationships in knowledge bases. In: Proceedings of the 16th Annual Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies (NAACL-HLT), pp. 327–333 (2016)

Nguyen, D.Q., Nguyen, T.D., Nguyen, D.Q., Phung, D.: A novel embedding model for knowledge base completion based on convolutional neural network. In: Proceedings of the 16th Annual Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies (NAACL-HLT), pp. 327–333 (2017)

Rossi, A., Barbosa, D., Firmani, D., Matinata, A., Merialdo, P.: Knowledge graph embedding for link prediction: a comparative analysis. ACM Trans. Knowl. Discov. Data (TKDD) 15(2), 1–49 (2021)

Zhang, S., Tay, Y., Yao, L., Liu, Q.: Quaternion knowledge graph embeddings. Adv. Neural Inf. Process. Syst. 32, 2735–2745 (2019)

Suchanek, F.M., Kasneci, G., Weikum, G.: Yago: a core of semantic knowledge. In: Proceedings of the 16th International Conference on World Wide Web, pp. 697–706 (2007)

Sun, Z., Deng, Z.H., Nie, J.Y. and Tang, J.: Rotate: knowledge graph embedding by relational rotation in complex space. In: Seventh International Conference on Learning Representations, pp. 1–18 (2019)

Toutanova, K., Chen, D.: Observed versus latent features for knowledge base and text inference. In Proceedings of the 3rd Workshop on Continuous Vector Space Models and Their Compositionality, pp. 57–66 (2015)

Trouillon, T., Welbl, J., Riedel, S., Gaussier, É., Bouchard, G.: Complex embeddings for simple link prediction. In: International Conference on Machine Learning, pp. 2071–2080. PMLR (2016)

Wang, Z., Zhang, J., Feng, J., Chen, Z.: Knowledge graph embedding by translating on hyperplanes. In: Proceedings of the AAAI Conference on Artificial Intelligence, vol. 28, no. 1 (2014)

Yang, B., Yih, W.T., He, X., Gao, J., Deng, L.: Embedding entities and relations for learning and inference in knowledge bases. arXiv preprint arXiv:1412.6575 (2014)

Zhang, Z., Cai, J., Wang, J.: Duality-induced regularizer for tensor factorization based knowledge graph completion. In: Advances in Neural Information Processing Systems, vol. 33 (2020)

Kingma, D., Ba, J.: Adam: a method for stochastic optimization. arXiv preprint arXiv:1412.6980 (2014)

Han, X., et al.: OpenKE: an open toolkit for knowledge embedding. In: Proceedings of EMNLP (2018)

Rossi, A., Firmani, D., Matinata, A., Merialdo, P., Barbosa, D.: Knowledge graph embedding for link prediction: a comparative analysis. 1(1), 43, Article no. 1 (2016)

Acknowledgements

This research is funded by the Faculty of Information Technology, University of Science, VNU-HCM, Vietnam, Grant number CNTT 2021-03 and Advanced Program in Computer Science.

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2021 Springer Nature Switzerland AG

About this paper

Cite this paper

Le, T., Nguyen, D., Le, B. (2021). Learning Embedding for Knowledge Graph Completion with Hypernetwork. In: Nguyen, N.T., Iliadis, L., Maglogiannis, I., Trawiński, B. (eds) Computational Collective Intelligence. ICCCI 2021. Lecture Notes in Computer Science(), vol 12876. Springer, Cham. https://doi.org/10.1007/978-3-030-88081-1_2

Download citation

DOI: https://doi.org/10.1007/978-3-030-88081-1_2

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-88080-4

Online ISBN: 978-3-030-88081-1

eBook Packages: Computer ScienceComputer Science (R0)