Abstract

A three-layered neural-network (NN), which consists of an input layer, a wide hidden layer and an output layer, has three types of parameters. Two of them are pre-neuronal, namely, thresholds and weights to be applied to input data. The rest is post-neuronal weights to be applied after activation. The current paper consists of the following two parts. First, we consider three types of stochastic processes. They are constructed by summing up each of parameters over all neurons at each epoch, respectively. The neuron number will be regarded as another time different to epochs. In the wide neural-network with a neural-tangent-kernel- (NTK-) parametrization, it is well known that these parameters are hardly varied from their initial values during learning. We show that, however, the stochastic process associated with the post-neuronal parameters is actually varied during the learning while the stochastic processes associated with the pre-neuronal parameters are not. By our result, we can distinguish the type of parameters by focusing on those stochastic processes. Second, we show that the variance (sort of “energy”) of the parameters in the infinitely wide neural-network is conserved during the learning, and thus it gives a conserved quantity in learning.

Access provided by Autonomous University of Puebla. Download conference paper PDF

Similar content being viewed by others

Keywords

1 Introduction

In recent years, great developments have been made in understanding the mechanisms of training of a neural networks when the width of the network is large. The first step was given in Neal [7], where it was shown that for any NTK-parametrized NN, the output before training converges to a Gaussian process on the space of inputs as the width increases. This means that even in the case of a neural network with nonlinear transformations, Bayesian regression with this Gaussian process as its prior distribution is tractable when we take the limit of width to infinity (Williams [12] and Goldberg et al. [2]). This idea has been extended to deep neural-networks by Lee et al. [5].

The Bayesian regression and training by gradient method have been linked by Jacot et al. ([4]). They found that gradient method in a NTK-parametrized NN with the large width is equivalent to kernel learning with the neural tangent kernel (NTK), and found a connection between the kernel and the maximum-a-posteriori estimator in Bayesian inference. They and Lee et al. ([6]) also showed that as the NTK-parametrized NN becomes wider, the model becomes linearized along the gradient descent or flow as the training, and the parameters become harder to be changed. This “lazy” regime appears, as shown in Chizat et al. [1], not only in over-parametrized neural-networks, but also in more abstract settings depending on the choice of scaling and initialization.

Due to the universal nature discovered in [4] and [6], we have not been able to distinguish whether they are pre- or post-neuronal if we focus on the behavior of the parameters. In this paper, we show that, during the learning, the behaviors of the cumulative sums of parameters over all neurons are different from each other according to their types of parameters. This implies that it is possible to distinguish whether the parameters are pre- or post-neuronal. When the width of the network tends to infinity, we also show that the “energy” of the cumulative sum is conserved (Theorem 2).

2 Related Works

Integral Representation of Mean-Field Parametrized NN. A mean-field parametrized NN forms like a Riemann sum, and thus has an integral representation when the width tends to infinity. In Sonoda-Murata [8] and Murata [11], the relationship between the distribution of parameters and the output is described via ridgelet transformation and their reconstruction theorem. On the other hand, in the case of our NTK-parametrized NN, the output before training is given by a stochastic integral when the network is infinitely wide. It would be of independent interest to investigate the reconstruction theorem in this situation.

Dynamics of Infinitely Wide Mean-Field Parametrized NN. For training of mean-field parametrized NN, another method for training is the stochastic gradient descent. It is described as a stochastic differential equation in the parameter space, in particular, it gives a gradient Langevin dynamics. When the width of the network is infinite, the parameter space is infinite-dimensional. Then the corresponding dynamics is described by an infinite-dimensional Langevin dynamics in a reproducing kernel Hilbert space, which appears as a collection of features. This infinite-dimensional model contains all models of finite width, and thus allows us to analyze them universally among all models with finite width. The convergence of this learning and the generalization error are discussed in Suzuki [9] and Suzuki-Akiyama [10].

3 Our Contribution

We consider the following NTK-parametrized NN of the width m:

Here, the input \( x \in \mathbb {R}^{} \) is one-dimensional and the activation function \(\sigma : \mathbb {R} \rightarrow \mathbb {R}\) is assumed to be non-negative and Lipschitz continuous. We denote the coordinates of the parameter \( \theta = ( \boldsymbol{a}_{0}, \boldsymbol{a}, \boldsymbol{b} ) \) as follows.

-

Pre-neuronal thresholds: \(\boldsymbol{a}_{0} = ( a_{0,1}, a_{0,2}, \ldots , a_{0,m} ) \in \mathbb {R}^{m}\),

-

Pre-neuronal weights: \(\boldsymbol{a} = ( a_{1}, a_{2}, \ldots , a_{m} ) \in \mathbb {R}^{m} \),

-

Post-neuronal weights: \(\boldsymbol{b} = ( b_{1}, b_{2}, \ldots , b_{m} ) \in \mathbb {R}^{m}\).

Given a training data \( \{ ( x_{i}, y_{i} ) \}_{i=1}^{n} \), we put \( \hat{y}_{i} ( \theta ) := f( x_{i}; \theta ) \) and define a loss function by

The solution to the associated gradient flow equation \( \frac{\mathrm {d}}{\mathrm {d}t} \theta (t) = - \frac{1}{2} ( \nabla _{\theta } L ) ( \theta (t) ) \) is denoted by \( \theta (t) = ( \boldsymbol{a}_{0}(t), \boldsymbol{a}(t), \boldsymbol{b}(t) ) = ( \{ a_{0,j}(t) \}_{j=1}^{m}, \{ a_{j}(t) \}_{j=1}^{m}, \{ b_{j}(t) \}_{j=1}^{m} ) \), where we set its initialization by \( \theta (0) = ( \boldsymbol{a}_{0} (0), \boldsymbol{a} (0), \boldsymbol{b} (0) ) \sim \mathrm {N} ( \boldsymbol{0}, I_{3m} ). \) Here, \(I_{3m}\) is the identity matrix of order 3m.

It is known that when the width m of the network is sufficiently large and training is performed, the optimal parameters are obtained as values close to the initial ones (Jacot et al. [4]). In this paper, we further investigate behaviors of the parameters. Specifically, we consider cumulative sums of the parameters over all neurons at each epoch, which are normalized by a scale depending on the width m. We focus on what arises when we take the normalized cumulative sums along the gradient flow, even the values of parameters are hardly varied. It is enough to consider only two cumulative sums \(\sum _{j=1}^{m} a_{j}(0)\) and \(\sum _{j=1}^{m} b_{j}(0)\) associated with pre- and post-neuronal weights respectively since thresholds have the same role as pre-neuronal weights by considering \(\{ (x_{i}, 1) \}_{i=1}^{n}\) as a two-dimensional input.

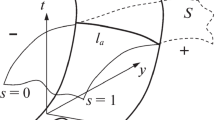

To compare their behaviors among different widths during the training, we have to consider which scale is appropriate to normalize the cumulative sums of the parameters. The initialization gives us a hint. At the initialization, variances of the cumulative sums are given by \( \sum _{j=1}^{m} \mathrm {Var} ( a_{j} (0) ) = \sum _{j=1}^{m} \mathrm {Var} ( b_{j} (0) ) = m \). Thus it would be natural to normalize \(\sum _{j=1}^{m} a_{j}(0)\) and \(\sum _{j=1}^{m} b_{j}(0)\) by scaling of \(\sqrt{m}\). Moreover, we embed them into the space of continuous functions on the interval [0, 1] as follows. On the m-equidistant partition \(\{ s_{k} := \frac{k}{m} \}_{k=0}^{m}\) of the interval, we set \( A_{s_{k}}^{(m)} (t) := \frac{1}{\sqrt{m}} \sum _{j=1}^{k} a_{j} (t) \) and \( B_{s_{k}}^{(m)} (t) := \frac{1}{\sqrt{m}} \sum _{j=1}^{k} b_{j} (t) \) and then we extend them onto subintervals \([ s_{k-1}, s_{k} ]\) by linear interpolations:

For each width m and time t of the gradient flow, these embedded functions \( A^{(m)} (t) = \{ A_{s}^{(m)} (t) \}_{0 \le s \le 1} \) and \( B^{(m)} (t) = \{ B_{s}^{(m)} (t) \}_{0 \le s \le 1} \) are random continuous-functions on [0, 1], namely, stochastic processes.

With this embedding, it will be necessary that they do not diverge when \(m \rightarrow \infty \) in order to compare them appropriately among various widths. At the initialization, by the so-called Donsker’s invariance principle, which is well known in probability theory, the stochastic processes \( \{ ( A^{(m)}(0), B^{(m)}(0) ) \}_{m=1}^{\infty } \) converge to a two-dimensional Brownian motion. In general, for any time t of the gradient flow, the following is valid.

Theorem 1

The family \(\{ ( A^{(m)}(t), B^{(m)}(t) )\}_{m=1}^{\infty }\) is tight.

This implies that a certain subsequence \(\{ ( A^{(m_{k})}(t), B^{(m_{k})}(t) ) \}_{k=1}^{\infty }\) converges almost surely (by replacing the probability space appropriately if necessary). In what follows, we denote the subsequence again by \(\{ (A^{(m)}(t), B^{(m)}(t) ) \}\) for simplicity of notations. The limit (A(t), B(t) ) of this subsequence gives a dynamics on the infinite-dimensional Banach space \(C([0,1] \rightarrow \mathbb {R}^{2})\) and then it would be another interest to describe the dynamics. In terms of \(B(t) = \{ B_{s}(t) \}_{0 \le s \le 1}\), we have

in probability as \(m \rightarrow \infty \), and this limit is called a stochastic integral. In the above, \(\{ a_{s} \}_{0 \le s \le 1}\) and \(\{ a_{0,s} \}_{0 \le s \le 1}\) are mutually independent Gaussian processes on [0, 1] with a zero mean and the covariance function given by \( \mathbf {E} [ a_{s} a_{u} ] = \mathbf {E} [ a_{0,s} a_{0,u} ] = \mathbf {1}_{\{ 0 \}} (u-s) \). Here, \(\mathbf {1}_{\{ 0 \}} \) is the indicator function of the singleton \(\{0\}\). These are also independent of B(0). Although it can be smoothly expected that the dynamics of \(\{ ( A(t), B(t) ) \}_{t \ge 0}\) is described by the neural tangent kernel, since \(C([0,1] \rightarrow \mathbb {R}^{2})\) is a non-Hilbert Banach space, it is difficult to employ the concepts of their gradient and kernel that depend on the inner product structure.

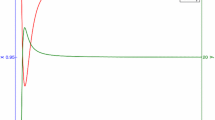

Now, among NTK-parametrized NNs of various widths, we can compare the dynamics for the cumulative sum at an “appropriate scale”. Figure 1 shows outputs of neural networks widths of \(m = 100, 1000, 10000\) after training. The training data are indicated by points, and we have used gradient descent. The following Figs. 2, 3, 4 and 5 show the changes of the parameters and their cumulative sums during the training. Each line in Figs. 2 and 4 represents how the corresponding parameter is varied during the training.

From the figures, as width increases, the variation of cumulative sum becomes smaller for parameters a, while we can see it is actually varied for parameters b.

In fact, when \(t=0\) and \(m \rightarrow \infty \), by the law of large numbers, we have

On the other hand, since the activation function \(\sigma \) is non-negative and non-zero,

As above, we observed numerically that the cumulative sum of the parameters b is varied along the gradient flow. It can be shown, however, that the following “energy” is conserved along the gradient flow.

Theorem 2

We have \(\displaystyle \lim _{m \rightarrow \infty } \frac{1}{m} \sum _{j=1}^m \big ( b_j (t)- \mathbf {E} [b_j(t)] \big )^2 = 1 \) for all \(t \ge 0\).

Here, \(\mathbf {E}\) denotes the expectation operator. The same for \(a_{0,j}(t)\) and \(a_{j}(t)\).

Figures 6 and 7 below confirm Theorem 2 in the learning shown in Fig. 1. The expectations have been simulated with using Monte Carlo methods.

4 Conclusion

In this paper, we showed that in a three-layer wide neural-network, the cumulative sum of pre-neuronal parameters is hardly varied along the gradient flow, while it is varied for post-neuronal parameters. This allowed us to find a critical difference among the behaviors of the pre- and post-neuronal parameters, this is a first trial to distinguish them, which has not been so far. Furthermore, we showed that the energy is conserved along the gradient flow.

References

Chizat, L., Oyallon, E., Bach, F.: On lazy training in differentiable programming. In: Wallach, H., Larochelle, H., Beygelzimer, A., d’Alché-Buc, F., Fox, E., Garnett, R. (eds.) Advances in Neural Information Processing Systems, vol. 32, (NeurIPS 2019). Curran Associates, Inc (2018)

Goldberg, P., Williams, C., Bishop, C.: Regression with input-dependent noise: a Gaussian process treatment. In: Advances in Neural Information Processing Systems, vol. 10, NIPS 1997. MIT Press (1998)

Ikeda, N., Watanabe, S.: Stochastic Differential Equations and Diffusion Processes, Second edn. North-Holland Mathematical Library, 24. North-Holland Publishing Co., Amsterdam; Kodansha Ltd, Tokyo, p. xvi+555 (1989). ISBN: 0-444-87378-3

Jacot, A., Gabriel. F., Hongler. C.: Neural tangent kernel: convergence and generalization in neural networks. In: Bengio, S., Wallach, H., Larochelle, H., Grauman, K., Cesa-Bianchi, N., Garnett, R. (eds.) Advances in Neural Information Processing Systems, vol. 31, pp. 8571–8580. Curran Associates, Inc (2018)

Lee, J., Bahri, Y., Novak, R., Schoenholz, S., Pennington, J., Sohl-Dickstein, J.: Deep neural networks as Gaussian processes. In: International Conference on Learning Representations, (ICLR 2018) (2018 )

Lee, J., et al.: Wide neural networks of any depth evolve as linear models under gradient descent. In: Wallach, H., Larochelle, H., Beygelzimer, A., d’Alché-Buc, F., Fox, E., Garnett, R. (eds.) Advances in Neural Information Processing Systems, vol. 32, (NeurIPS 2019), Curran Associates, Inc (2019)

Neal, R.M.: Priors for infinite networks. In: Bayesian Learning for Neural Networks, pp. 29–53. Springer, New York (1996). https://doi.org/10.1007/978-1-4612-0745-0_2

Sonoda, S., Murata, N.: Neural network with unbounded activation functions is universal approximator. Appl. Comput. Harmonic Anal. 43(2), 233–268 (2017)

Suzuki, T.: Generalization bound of globally optimal non-convex neural network training: transportation map estimation by infinite dimensional Langevin dynamics. In: Larochelle, H., Ranzato, M., Hadsell, R., Balcan, M.F., Lin, H. (eds.), Advances in Neural Information Processing Systems, vol. 33, (NeurIPS 2020), pp. 19224–19237. Curran Associates, Inc (2020)

Suzuki, T., Akiyama, S.: Benefit of deep learning with non-convex noisy gradient descent: provable excess risk bound and superiority to kernel methods. To appear in International Conference on Learning Representations, 2021 (ICLR 2021) (2021)

Murata, N.: An integral representation of functions using three-layered networks and their approximation bounds. Neural Netw. 9(6), 947–956 (1996)

Williams, C.: Computing with infinite networks. In: Mozer, M.C., Jordan, M., Petsche, T. (eds.) Advances in Neural Information Processing Systems, vol. 9, (NIPS 1996), MIT Press (1997)

Acknowledgments

The authors would like to express their appreciation to Professor Masaru Tanaka and Professor Jun Fujiki who provided valuable comments and advices.

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

A Proof of Theorem 1 and Theorem 2

A Proof of Theorem 1 and Theorem 2

Recall that the activation function \(\sigma \) has been assumed to be non-negative and Lipschitz continuous. Then \(\sigma \) is differentiable almost everywhere and the Lipschitz constant can be expressed as \( \Vert \sigma ^{\prime } \Vert _{\infty } := \mathrm {ess}\sup \vert \sigma ^{\prime } \vert \), where \(\sigma ^{\prime }\) is the almost-everywhere-defined derivative of \(\sigma \). We shall put \( \vert \mathcal {X} \vert := \max _{i = 1,2,\ldots , n} \vert x_{i} \vert \), where \(\{ x_i \}_{i=1}^{m}\) is the input data. Note that the loss function \( L ( \theta ) = \frac{1}{n} \sum _{i=1}^{n} \big ( \hat{y}_{i} ( \theta ) - y_{i} \big )^{2} \) depends on the width m as does so for the outputs \( \hat{y}_{i} ( \theta ) = \frac{1}{\sqrt{m}} \sum _{j=1}^{m} \sigma ( a_{j} x_{i} + a_{0,j} ) b_{j} \).

1.1 A.1 Equipments About Gradient Flow \(\frac{\mathrm {d}}{\mathrm {d} t} \theta (t) = - \frac{1}{2} ( \nabla _{\theta } L ) ( \theta (t) )\)

Lemma 1

Along the gradient flow, we have \( L( \theta (t) ) \le L( \theta (0) ) \) for \(t\ge 0\).

In the coordinate \( \theta (t) = ( \boldsymbol{a}_{0}(t), \boldsymbol{a}(t), \boldsymbol{b}(t) ) = ( \{ a_{0,j}(t) \}_{j=1}^{m}, \{ a_{j}(t) \}_{j=1}^{m},\)\( \{ b_{j}(t) \}_{j=1}^{m} ) \), the gradient flow \( \frac{\mathrm {d}}{\mathrm {d} t} \theta (t) = - \frac{1}{2} ( \nabla _{\theta } L) ( \theta (t) ) \) can be written as follows: for \(j = 1,2,\ldots , m\) and \(t \in \mathbb {R}\),

Proposition 1

For \(m = 1,2,3,\ldots \), \(j = 1,2,\ldots ,m\) and \(t \ge 0\), we have

where \( F_{j} (t) := \vert a_{0,j} (t) \vert + \vert a_{j} (t) \vert + \vert b_{j} (t) \vert \).

Proof

We begin with estimating \(a_{j}(t)\). Let \(\dot{a}_j(s) := \frac{\mathrm {d}}{\mathrm {d}s} a_j (s)\). By fundamental theorem of calculus, the triangle inequality and (1), we have

Since it holds that

by virtue of Jensen’s inequality and Lemma 1, we obtain

Similarly, we have

For \(b_{j}(t)\), by estimating in a manner similar to \(\vert a_{j}(t) \vert \), we get

By using a estimate: \( \sigma \big ( a_j x_i + a_{0,j} \big ) \le \sigma (0) + \Vert \sigma ^{\prime } \Vert _{\infty } ( \vert \mathcal {X} \vert \vert a_j \vert + \vert a_{0,j} \vert ) \) and (2),

By putting estimates (3), (4) and (5) together, we have

Now, by applying Grönwall’s inequality, we reach the conclusion.

Proposition 2

For every \(j = 1,2,\ldots , m\), we have

-

(i)

\(\displaystyle \int _{0}^{t} F_{j} (u) \mathrm {d}u \le G_{j}(t) \),

-

(ii)

\(\displaystyle \int _{0}^{t} \max \big \{ \vert \dot{a}_{0,j} (u) \vert , \vert \dot{a}_{j} (u) \vert , \vert \dot{b}_{j} (u) \vert \big \} \mathrm {d}u \le \sqrt{ \frac{ L ( \theta (0) ) }{ m } } \left\{ \Vert \sigma ^{\prime } \Vert _{\infty } ( \vert \mathcal {X} \vert + 1 ) G_{j}(t) + \sigma (0) t \right\} \),

where \( F_{j} (u) := \vert a_{0,j} (u) \vert + \vert a_{j} (u) \vert + \vert b_{j} (u) \vert \) and

Note that each \( G_{j} (t) \) depends on the width m of the network.

Proof

(i) Put \( c_{1} = \frac{ \Vert \sigma ^{\prime } \Vert _{\infty } ( \vert \mathcal {X} \vert + 1 ) }{\sqrt{m}} \sqrt{ L ( \theta (0) ) } \) and \( c_{2} = \frac{ \sigma (0) }{\sqrt{m}} \sqrt{ L ( \theta (0) ) } \). Then by Proposition 1, we have

Since it holds that \( \frac{ \mathrm {e}^{x} - 1 }{ x } \le \mathrm {e}^{2x} \) for \(x > 0\), we obtain

(ii) We show only for \( \int _{0}^{t} \vert \dot{b}_{j} (u) \vert \mathrm {d}u. \) The same is for the other parameters. By (1) and (2), we get \( \int _{0}^{t} \vert \dot{b}_{j} (u) \vert \mathrm {d}u \le \sqrt{ \frac{ L ( \theta (0) ) }{ m } } \int _{0}^{t} \sigma \big ( a_{j} (u) x_{i} + a_{0,j} (u) \big ) \mathrm {d}u \). Then by using that \( \sigma \big ( a_{j} (u) x_{i} + a_{0,j} (u) \big ) \le \Vert \sigma ^{\prime } \Vert _{\infty } ( \vert \mathcal {X} \vert + 1 ) F_{j} (u) + \sigma (0) \) and by (i), we have the conclusion.

Proposition 3

For all \(p > 0\), we have the following: \( \limsup _{m \rightarrow \infty } \mathbf {E} [ \big ( \sqrt{ L ( \theta (0) ) } \big )^{p} ] < \infty \), \( \limsup _{m \rightarrow \infty } \mathbf {E} [ G_{j} (t)^{p} ] < \infty \) and \( \limsup _{m \rightarrow \infty } \mathbf {E} [ \big ( \sqrt{ L ( \theta (0) ) } \, G_{j} (t) \big )^{p} ] < \infty \).

Proof

The last estimate follows from the first two estimates and Cauchy-Schwarz’ inequality. Since the first estimate is obvious, we show only the second. For this, it is sufficient to show that

In the following, we write \(a_{0,j} (0) = a_{0,j}\), \(a_{j} (0) = a_{j}\) and \(b_{j} (0) = b_{j}.\) First, we note that \( \sqrt{L ( \theta (0) )} \le \frac{1}{\sqrt{n}} \sum _{i=1}^{n} \vert \hat{y}_{i} ( \theta (0) ) - y_{i} \vert \le \frac{1}{\sqrt{n}} \sum _{i=1}^{n} \vert \hat{y}_{i} ( \theta (0) ) \vert + \frac{1}{\sqrt{n}} \vert y_{i} \vert \). Then by using Hölder’s inequality, we get

Since we have \( ( \hat{y}_{i} ( \theta (0) ) \mid \boldsymbol{a}_{0}, \boldsymbol{a} ) \sim \mathrm {N} \big ( 0, \frac{1}{m} \sum _{j=1}^{m} \sigma ( a_{j} x_{i} + a_{0,j} )^{2} \big ) \),

Furthermore, by Jensen’s inequality and independence,

We can show that \( \sigma ( a_{1} x_{i} + a_{0,1} )^{2} \le 16 \{ \Vert \sigma ^{\prime } \Vert _{\infty } ( \vert \mathcal {X} \vert + 1 ) \}^{2} \{ ( a_{0,1} )^{2} + ( a_{1} )^{2} \} + ( \sigma (0) )^{2} \). Hence

The right-hand-side is finite if \( \frac{ 8 p^{2} n \Vert \sigma ^{\prime } \Vert _{\infty }^{2} ( \vert \mathcal {X} \vert + 1 )^{2} }{ m } - \frac{1}{2} < 0 \), that is, \( m > 16 p^{2} n \Vert \sigma ^{\prime } \Vert _{\infty }^{2} ( \vert \mathcal {X} \vert + 1 )^{2} \), and then it is decreasing with respect to m. By putting all together, (7) is proved.

1.2 A.2 Proof of Theorem 1

It is enough to prove that both of \(\{ A^{(m)}(t) \}_{m=1}^{\infty }\) and \(\{ B^{(m)}(t) \}_{m=1}^{\infty }\) are tight. For this, from [3, Chapter I, Section 4, Theorem 4.3], it is sufficient to show that (i) \( \sup _{m} \mathbf {E} \big [ \vert A_{0}^{(m)}(t) \vert + \vert B_{0}^{(m)} (t) \vert \big ] < \infty \) and (ii) there exist \(\gamma , \alpha > 0\) such that

(i) is clear since \( A_{0}^{(m)} (t) = B_{0}^{(m)} (t) = 0 \). Thus we show only (ii). We will only show the one for \(A^{(m)}(t)\). Since \( A^{(m)} (t) \) is a piecewise linear interpolation of values on \(\{ s_{k} = \frac{k}{m} \}_{k=0}^{m}\), it suffices to show that for some \(\gamma , \alpha > 0\), it holds that

Let \(k,j \in \{ 1,2,\ldots , m \}\) be arbitrary. Without loss of generality, we assume that \(j < k\). Then we have

We shall make estimates for two terms on the right-hand-side.

Lemma 2

With \(G_{l}(t)\) defined in (6), we have

Proof

Since \(\mathbf {E} [ a_{l}(0) ] = 0\), we have \( a_l (t) - \mathbf {E} [ a_l (t) ] = \int _0^t \dot{a}_l (u) \mathrm {d}u - \int _0^t \mathbf {E} [ \dot{a}_l (u) ] \mathrm {d}u + a_l (0) \). By summing up this over \(l=j+1 , j+2, \ldots , k\) and by using (1) and (2),

Finally, by applying Proposition 2, we get the conclusion.

Lemma 3

We have \(\displaystyle \Big \vert \sum _{l=j+1}^k \mathbf {E} [ a_l (t) ] \Big \vert \le \frac{ \Vert \sigma ^{\prime } \Vert _{\infty } \vert \mathcal {X} \vert }{ \sqrt{m} } \mathbf {E} \big [ \sqrt{ L ( \theta (0) ) } \, \sum _{l=j+1}^k G_{l} (t) \big ] . \)

Proof

By (1), \( \mathbf {E} [ a_l (t) ] = \int _0^t \mathbf {E} [ -\frac{1}{n} \sum _{i=1}^n \big ( \hat{y}_{i} ( \theta (u) ) - y_i \big ) \sigma ^{\prime } \big ( a_l (u) x_i + a_{0,l} (u) \big ) x_i \frac{ b_l (u) }{ \sqrt{m} } ] \mathrm {d}u \). By taking the sum over \(l = j+1, j+2, \ldots , k\), we have

Then by using Proposition 2, we reach the conclusion.

Turning back to Eq. (9), we apply Lemma 2 and Lemma 3 to get

where \( H_{l} (t) = \sqrt{ L ( \theta (0) ) } \, G_{l} (t) \). By an easy estimate: \( ( x+y )^{4} \le 2^{4} ( x^{4} + y^{4} ) \),

Therefore \( \mathbf {E} [ \big ( A_{s_{k}}^{(m)} (t) - A_{s_{j}}^{(m)}(t) \big )^{4} ] = \frac{2^{4}}{m^{2}} I + 2^{4} \Vert \sigma ^{\prime } \Vert _{\infty }^{4} \vert \mathcal {X} \vert ^{4} ( s_{k} - s_{j} )^{4} I\!I \). Here,

First, we shall focus on \(I\!I\). By Jensen’s inequality,

On the other hand, for I, since \( a_{1}(0), a_{2}(0), \ldots , a_{m}(0) \) are independent and identically distributed, and each of them is distributed in \(\mathrm {N} ( 0, 1 )\), we have \( I = 3 (k-j)^{2} \). Hence

Finally, by noting Proposition 3, we see that (8) holds for \(\gamma = 4\) and \(\alpha = 1\).

1.3 A.3 Proof of Theorem 2

By the law of large numbers, we see that \( \frac{1}{m} \sum _{j=1}^m \big ( b_j (0) \big )^{2} \rightarrow \mathbf {E} [ \big ( b_j (0) \big )^{2} ] = 1 \) as \(m \rightarrow \infty \). Then it suffices to show that

Since \( b_j (t) - \mathbf {E} [ b_j (t) ] = b_j (0) + \int _0^t \big ( \dot{b}_j (u) - \mathbf {E} [ \dot{b}_j (u) ] \big ) \mathrm {d}u \), we have \( ( b_j (t) - \mathbf {E} [ b_j (t) ] )^{2} - ( b_j (0) )^{2} = \big ( \int _0^t \big ( \dot{b}_j (u) - \mathbf {E} [ \dot{b}_j (u) ] \big ) \mathrm {d}u \big )^2 + 2 b_j (0) \int _0^t ( \dot{b}_j (u) - \mathbf {E} [ \dot{b}_j (u) ] ) \mathrm {d}u \). Thus we have

By taking the expectation, we get

For the term \(\int _{0}^{t} \vert \dot{b}_{j} (u) \vert \mathrm {d}u\) appeared above, we know by Proposition 2 that

where note that \(M_j (t)\) depends on the width m. Thus, \( \int _{0}^{t} \big ( \vert \dot{b}_{j} (u) \vert + \mathbf {E} \big [ \vert \dot{b}_{j} (u) \vert \big ] \big ) \mathrm {d}u \le \frac{ M_{j} (t) + \mathbf {E} [ M_{j} (t) ] }{ \sqrt{m} } \). By Proposition 3, we have \(\displaystyle \limsup _{m \rightarrow \infty } \mathbf {E} \big [ \big ( M_{1}(t) + \mathbf {E} [ M_{1}(t) ] \big )^2 \big ] < \infty \) and \(\displaystyle \limsup _{m \rightarrow \infty } \mathbf {E} \big [ \vert b_{1} (0) \vert \big ( M_{1}(t) + \mathbf {E} [ M_{1}(t) ] \big ) \big ] < \infty \). Hence as \(m \rightarrow \infty \),

Rights and permissions

Copyright information

© 2021 Springer Nature Switzerland AG

About this paper

Cite this paper

Eguchi, S., Amaba, T. (2021). Energy Conservation in Infinitely Wide Neural-Networks. In: Farkaš, I., Masulli, P., Otte, S., Wermter, S. (eds) Artificial Neural Networks and Machine Learning – ICANN 2021. ICANN 2021. Lecture Notes in Computer Science(), vol 12894. Springer, Cham. https://doi.org/10.1007/978-3-030-86380-7_15

Download citation

DOI: https://doi.org/10.1007/978-3-030-86380-7_15

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-86379-1

Online ISBN: 978-3-030-86380-7

eBook Packages: Computer ScienceComputer Science (R0)