Abstract

Industry 4.0 technologies are developing rapidly, automating and restructuring processes to make them data-driven. Despite its name, however, Industry 4.0 is not exclusive to industry, since its technologies can be extended to the health sector. In the next decade, the Digital Twin (a digital replica of a physical asset) is expected to spread into the mainstream market owing to its potential applications in healthcare, such as 24/7 monitoring of the evolution of cancer and its treatment, a cloud-based model of the heart, and even medical treatment that adapts to each person. Before this becomes a reality, however, researchers must overcome several obstacles for the proposed Digital Twins to reach their full potential.

1 The Digital Twin and its Common Misconceptions

As a result of the advent of Industry 4.0, technologies such as the Internet of Things (IoT), cloud computing, big data, and automation that requires no human intervention (enabled by machine learning algorithms and artificial intelligence) are developing quickly and gaining popularity in specialized fields and mainstream media alike. Their potential applications are not limited to industry: they can be extended to, and successfully implemented in, healthcare systems around the world, changing how we understand and work with them [7].

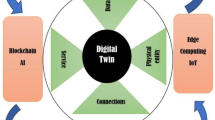

The state of the art of this revolution is the Digital Twin (DT), a concept that uses and encompasses all of the technologies mentioned above to build a living model: a digital instance that represents and mirrors its physical asset or system in real time. However, the term is so broad that it often leads to misconceptions about what a DT really is. These are addressed below; the main distinction between a DT and the related concepts is how data flow from the physical to the digital object and vice versa [5].

1.1 Digital Twin

In summary, a DT can be described as a virtual replica of a physical object with fully automated data acquisition [7]. In other words, a change made in the digital asset is reflected immediately in its physical counterpart, and vice versa. What distinguishes the DT is that no manual data input is needed [5].
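To make this bidirectional, fully automated flow concrete, the following minimal Python sketch may help. The classes `Asset` and `DigitalTwin` and the keys `heart_rate` and `pacing_mode` are hypothetical; in a real DT the dictionary writes would be replaced by sensor acquisition (physical to digital) and actuation (digital to physical).

```python
from dataclasses import dataclass, field
from typing import Any, Dict


@dataclass
class Asset:
    """A hypothetical asset holding named state values."""
    state: Dict[str, Any] = field(default_factory=dict)


class DigitalTwin:
    """Minimal sketch: an update on either side is propagated automatically
    to the other side, with no manual data input."""

    def __init__(self, physical: Asset, digital: Asset) -> None:
        self.physical = physical
        self.digital = digital

    def update_physical(self, key: str, value: Any) -> None:
        # A sensor reading changes the physical asset...
        self.physical.state[key] = value
        # ...and is mirrored automatically in the digital replica.
        self.digital.state[key] = value

    def update_digital(self, key: str, value: Any) -> None:
        # A change made in the digital replica (e.g. a new setting)...
        self.digital.state[key] = value
        # ...is reflected automatically in the physical asset (actuation).
        self.physical.state[key] = value


twin = DigitalTwin(Asset(), Asset())
twin.update_physical("heart_rate", 72)
twin.update_digital("pacing_mode", "auto")
print(twin.physical.state == twin.digital.state)  # True
```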

1.2 Digital Shadow

As its name suggests, this instance is only a digital representation of a physical object: data flow automatically in one direction only, from the physical to the digital object [6]. It is mostly used to gather and integrate information from different sources to facilitate real-time analysis [12].

1.3 Digital Model

This is where most misconceptions are centred. A Digital Model is a virtual replica of the physical object that requires all data to be input manually [7]. Digital Models are used mostly in industry to understand how a change to the digital object would affect its physical counterpart if implemented [6].

1.4 Digital Terms Summary

To synthesize this information and clarify any remaining doubts, Table 1 summarizes the flow of data between the physical and digital objects for each term, as well as their most common applications.
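The distinctions of Sections 1.1 to 1.3 can also be condensed into a small Python sketch that names a replica by the direction in which data flow automatically. The names `Flow` and `classify` are illustrative only, and the function deliberately rejects the one combination (manual physical-to-digital, automated digital-to-physical) that the three definitions do not cover.

```python
from enum import Enum


class Flow(Enum):
    AUTOMATED = "automated"
    MANUAL = "manual"


def classify(physical_to_digital: Flow, digital_to_physical: Flow) -> str:
    """Name a digital replica by how data flow between it and its physical object."""
    if physical_to_digital is Flow.AUTOMATED and digital_to_physical is Flow.AUTOMATED:
        return "Digital Twin"    # Section 1.1: automated in both directions
    if physical_to_digital is Flow.AUTOMATED and digital_to_physical is Flow.MANUAL:
        return "Digital Shadow"  # Section 1.2: automated one-way, physical to digital
    if physical_to_digital is Flow.MANUAL and digital_to_physical is Flow.MANUAL:
        return "Digital Model"   # Section 1.3: manual data input
    raise ValueError("combination not covered by the definitions in Sections 1.1-1.3")


print(classify(Flow.AUTOMATED, Flow.MANUAL))  # Digital Shadow
```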

2 Challenges to Overcome

Before DTs become a reality and part of our daily lives, several obstacles must be overcome. The first, and most challenging, is to design them to be identical to, and indistinguishable from, their physical counterparts: a real-time exchange of information that is easy for the user to operate, yet complex enough to model and respond to sudden changes in real time [11].

2.1 IT Infrastructure

For this proposal to succeed, the means to ensure optimal system performance are mandatory. Artificial intelligence and machine learning are expected to be the key players [11], and both require high-performance hardware and software to analyze data constantly, which translates into high running costs and a consequent environmental impact [5].

From the IoT point of view, the main issue is the flow of data, a problem amplified by the rise of big data: a gargantuan volume of data is constantly in transit. Most of the time, however, these data are in a “raw” state and must be prepared, sorted, and organized before they are useful for any research [5]. The healthcare sector also exhibits high natural variability, explained by innate differences between human bodies, such as age, height, or response to medical treatment; the sensors implemented in the DT need to account for these parameters to prevent false positives [9].
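The two preparation steps mentioned above (cleaning raw data and accounting for natural variability) can be sketched in Python as follows. This is a minimal illustration under stated assumptions: the plausibility range, the fixed population cut-off `POPULATION_LOW`, and the rule of flagging values more than `k` standard deviations from a patient's own baseline are hypothetical choices, not methods taken from [5] or [9].

```python
from statistics import mean, stdev
from typing import List, Optional, Tuple

# (timestamp in seconds, value or None when the sensor returned nothing)
Reading = Tuple[float, Optional[float]]


def prepare(raw: List[Reading], low: float, high: float) -> List[Tuple[float, float]]:
    """Drop missing or implausible values, then sort the rest by timestamp."""
    clean = [(t, v) for t, v in raw if v is not None and low <= v <= high]
    return sorted(clean)


def is_anomalous(value: float, baseline: List[float], k: float = 3.0) -> bool:
    """Flag a value only if it lies more than k sample standard deviations
    from this patient's own baseline mean."""
    mu, sigma = mean(baseline), stdev(baseline)
    return abs(value - mu) > k * sigma


# Hypothetical resting heart rates (bpm) of a patient whose normal rate is low.
raw = [(2.0, 48.0), (1.0, None), (0.0, 47.0), (3.0, 250.0)]
readings = prepare(raw, low=20.0, high=220.0)  # [(0.0, 47.0), (2.0, 48.0)]
baseline = [47.0, 48.0, 49.0, 48.0, 47.0, 49.0]
POPULATION_LOW = 50.0  # hypothetical fixed cut-off shared by all patients
print(readings[0][1] < POPULATION_LOW)         # True: fixed cut-off raises an alarm
print(is_anomalous(readings[0][1], baseline))  # False: normal for this patient
```

The design choice illustrated is that the alarm rule is relative to each person, so a value that is unusual for the population but normal for this patient does not become a false positive.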

Consequently, before personalized, data-driven healthcare becomes a reality, the IT infrastructure needs to be upgraded. The new architecture must comply with standards that ensure the well-being of the patient and the continuous operation of the DT, and its design must be a hybrid platform: centralized enough for straightforward management and effortless data sharing, yet distributed enough to ensure real-time deployment [7].
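A minimal Python sketch of this hybrid idea follows, assuming a hypothetical split in which an `EdgeNode` near the patient takes time-critical alert decisions locally, while batches of readings are uploaded to a `CentralStore` for management and sharing. The class names, the alert threshold, and the batch size are illustrative assumptions, not the architecture of [7].

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class CentralStore:
    """Centralized tier: a single place to manage and share patient records."""
    records: Dict[str, List[float]] = field(default_factory=dict)

    def ingest(self, patient_id: str, values: List[float]) -> None:
        self.records.setdefault(patient_id, []).extend(values)


@dataclass
class EdgeNode:
    """Distributed tier: runs next to the sensors, so alerts never wait
    for a round trip to the central store."""
    patient_id: str
    alert_above: float
    central: CentralStore
    batch_size: int = 3
    buffer: List[float] = field(default_factory=list)
    alerts: List[float] = field(default_factory=list)

    def on_reading(self, value: float) -> None:
        if value > self.alert_above:             # real-time decision taken locally
            self.alerts.append(value)
        self.buffer.append(value)
        if len(self.buffer) >= self.batch_size:  # periodic upload for sharing
            self.central.ingest(self.patient_id, self.buffer)
            self.buffer = []


central = CentralStore()
node = EdgeNode("patient-1", alert_above=38.0, central=central)  # body temperature, °C
for temp in [36.8, 37.1, 38.5, 37.0]:
    node.on_reading(temp)
print(node.alerts)      # [38.5]
print(central.records)  # {'patient-1': [36.8, 37.1, 38.5]}
```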

2.2 Data Privacy and Security

In recent years, the threat of cyberattacks has been increasing, with consequences that range from compromised personal passwords to the paralysis of entire communities' finances. In the case of the DT, the data used are both sensitive and confidential and must be accessed only by the patient and by qualified personnel, such as physicians or nurses. In the case of the IoT, interconnected devices are an easy target for distributed denial-of-service (DDoS) attacks, which can take a healthcare system offline, temporarily freeze its infrastructure, and even threaten patients' lives [8]. As ludicrous as it might sound, hijacking an organ could become a reality in the coming decades if cybersecurity is not guaranteed.
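The requirement that only the patient and qualified personnel access DT data can be illustrated with a simple role-based access check in Python. The roles, the notion of a `care_team`, and the rule that clinicians may read only the records of patients they treat are hypothetical assumptions for this sketch; a real system would also need authentication, auditing, and encryption.

```python
from enum import Enum, auto
from typing import Set


class Role(Enum):
    PATIENT = auto()
    PHYSICIAN = auto()
    NURSE = auto()
    THIRD_PARTY = auto()


CLINICAL_ROLES = {Role.PHYSICIAN, Role.NURSE}


def can_read(role: Role, requester_id: str, patient_id: str, care_team: Set[str]) -> bool:
    """Allow reads only by the patient or by clinicians on that patient's care team."""
    if role is Role.PATIENT:
        return requester_id == patient_id  # patients see only their own twin
    if role in CLINICAL_ROLES:
        return requester_id in care_team   # qualified personnel treating the patient
    return False                           # everyone else is denied by default


team = {"dr-lopez", "nurse-kim"}
print(can_read(Role.PHYSICIAN, "dr-lopez", "patient-1", team))     # True
print(can_read(Role.THIRD_PARTY, "insurer-9", "patient-1", team))  # False
```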

An important factor to consolidate in both the DT and Industry 4.0 is regulation: governments need to define the scope of artificial intelligence and ensure that data are protected at all times [5]. Because big data generates massive amounts of information, cyberattacks are likely and can compromise sensitive data [7]; cybersecurity analyses and protocols are therefore mandatory, and security frameworks should be adopted as a design paradigm known as “Security by Design” [8].
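In the spirit of Security by Design, integrity checks can be built into device communication from the start. As one possible example (an assumption, not a protocol prescribed by [8]), the Python sketch below uses the standard `hmac` module to attach a SHA-256 message authentication code to each device message, so that a tampered reading is rejected. It assumes a secret key shared per device; HMAC provides integrity and authenticity only, so confidentiality would still require encryption, for instance via TLS.

```python
import hashlib
import hmac
import json


def sign(payload: dict, key: bytes) -> dict:
    """Attach an HMAC-SHA256 tag computed over a canonical JSON encoding."""
    body = json.dumps(payload, sort_keys=True).encode()
    tag = hmac.new(key, body, hashlib.sha256).hexdigest()
    return {"payload": payload, "tag": tag}


def verify(message: dict, key: bytes) -> bool:
    """Recompute the tag and compare it in constant time."""
    body = json.dumps(message["payload"], sort_keys=True).encode()
    expected = hmac.new(key, body, hashlib.sha256).hexdigest()
    return hmac.compare_digest(expected, message["tag"])


key = b"hypothetical-per-device-secret"
msg = sign({"device": "pump-7", "dose_ml": 2.5}, key)
print(verify(msg, key))            # True
msg["payload"]["dose_ml"] = 25.0   # simulated tampering in transit
print(verify(msg, key))            # False
```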

2.3 Data Quality

As expected for a complex system that integrates and coordinates multiple engineering fields, the DT needs noise-free, high-quality data to perform as intended [5].

Data are crucial to the DT; they come from multiple sources and must be integrated into a whole. Some are obtained from the physical asset, some from the digital models that support the DT's performance, and a small yet crucial part is provided by experts in health and engineering. Ultimately, all of them must be merged (by applying different AI methods) into “fusion data”, the core of the DT's performance [10].
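The text does not specify which AI methods produce the “fusion data”; as a plainly classical stand-in, the Python sketch below merges estimates of the same quantity from several sources by inverse-variance weighting, so that more reliable sources weigh more. It assumes the sources are independent and unbiased, with known variances; the example values are hypothetical.

```python
from typing import List, Tuple


def fuse(estimates: List[Tuple[float, float]]) -> Tuple[float, float]:
    """Fuse (value, variance) pairs by inverse-variance weighting.
    Returns the fused value and its variance."""
    weights = [1.0 / variance for _, variance in estimates]
    total = sum(weights)
    value = sum(w * v for w, (v, _) in zip(weights, estimates)) / total
    return value, 1.0 / total


# Hypothetical body-temperature estimates (°C, variance in °C²)
sources = [
    (37.2, 0.04),  # wearable sensor on the physical asset
    (37.6, 0.16),  # output of a supporting digital model
    (37.0, 0.25),  # expert estimate
]
value, variance = fuse(sources)
print(round(value, 2), round(variance, 3))
```

The fused variance is smaller than that of any single source, which is the intuition behind combining physical, model-based, and expert data.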

2.4 Ethics

A downside of introducing a data-driven healthcare system is that the boundaries of medicine must be redefined. From the outset, the DT raises the possibility of a new kind of patient: one who has a digital enhancement of themselves. Moreover, personalized medicine costs far more than off-the-shelf treatments, so only people with sufficient resources could access it. Together, these situations could segment the population by the medical treatment they receive unless the technologies used lower costs [3].

Another issue that must be addressed before the DT goes mainstream is the ownership of the data used. The most important questions are: “Who will be the rightful owner, the patient or a health institution?” and “Who will play the bigger role, the government or the private sector, and for what purpose will they use personal data? Will they all have a share?”

Although these questions may at first sound like science fiction, they need to be answered as soon as possible: the DT is around the corner, and its development must not be left unguided [3].

2.5 Expectations

Although the DT is expected to change how problems are solved in the healthcare sector, caution is needed: its implementation may take time, because all of the supporting technologies, and the DT itself, are still in a development phase in which the real applications remain uncertain [13].

Another important consideration is that current trends may change over the next decade, meaning that DT applications in healthcare may differ from those proposed and described in this paper [5] (Table 2).

2.6 Challenges Summary

3 Future Applications

Although the applications in both products and services seem endless, this section focuses solely on what can be achieved with this brand-new technology in the next decade. The DT has already been implemented successfully in the healthcare sector: its first use was in medical devices, for preventive maintenance and the optimization of their capabilities [1]. However, its potential can be exploited further to disrupt how we understand and perceive human healthcare and lifestyle, and to create a fully functional digital replica of ourselves, thus establishing a new paradigm in health [5].

3.1 Non-invasive Treatment

The most realistic application for non-invasive treatment in the next decade is not a DT of the whole human body, but physiological models of organs or systems. For example, in 2015 Dassault Systèmes released its “Living Heart” project, the first digital representation of the organ that works like the real one: electrical impulses are translated into mechanical contractions and expansions that control the blood flow [1].

In the 5G/6G communications era, this kind of application is expected to improve until it can replicate a disease and its effects in a DT. Research is therefore under way to develop a DT of lung cancer covering all of its stages and the damage the lungs undergo. This approach is expected to benefit from the enormous amount of data circulating between the digital and the physical organ, which is then made understandable for physicians so that they can choose the best treatment for each patient, a process also known as personalized medicine [14].

3.2 Personalized Medicine

As a result of the fast development of DTs in the healthcare sector, the old “one size fits all” paradigm, i.e. assuming that everybody's physiology is exactly the same, is expected to disappear. Instead, personalized medicine is set to become the next standard, since it can monitor the patient around the clock and gather data on how a treatment performs: whether the dose needs to be modified, which side effects are expected in the patient, and even whether the treatment will be effective [4].
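In its simplest form, this kind of treatment monitoring could compare a patient's recent responses with a target band. The Python sketch below is purely illustrative: the biomarker, the target band, the three-measurement window, and the assumption that the response rises with the dose are hypothetical, and the function only flags cases for a physician's review rather than changing any dose.

```python
from typing import List


def review_dose(responses: List[float], target_low: float, target_high: float,
                window: int = 3) -> str:
    """Flag a treatment for review when the last `window` responses all fall
    on the same side of the target band (assumes response rises with dose)."""
    recent = responses[-window:]
    if len(recent) < window:
        return "insufficient data"
    if all(r < target_low for r in recent):
        return "below target: review for a possible dose increase"
    if all(r > target_high for r in recent):
        return "above target: review for a possible dose reduction"
    return "no consistent deviation: no change suggested"


# Hypothetical biomarker readings against a hypothetical target band of 2.0-3.0
print(review_dose([2.5, 3.4, 3.5, 3.6], target_low=2.0, target_high=3.0))
```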

As stated earlier, the data collected by the DT from multiple sources need to be integrated for it to perform as desired. This, in turn, is the biggest obstacle for personalized medicine, since it deals with multiple levels of complexity within a single individual, ranging from the molecular to the environmental. Another benefit of this new approach is that some diseases can be diagnosed at early stages, before damage and symptoms appear, giving physicians enough time to reverse the disease where possible [2].
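Early diagnosis of this kind often relies on detecting a trend before a clinical threshold is crossed. As a minimal sketch under hypothetical assumptions (a single biomarker, a linear trend fitted by ordinary least squares, an illustrative threshold and horizon), the Python code below warns when the fitted trend is projected to cross the threshold within the horizon even though the latest measurement is still below it.

```python
from typing import List


def projected(times: List[float], values: List[float], t: float) -> float:
    """Value at time t of the least-squares line through (times, values).
    Needs at least two distinct times."""
    n = len(times)
    mean_t = sum(times) / n
    mean_v = sum(values) / n
    num = sum((ti - mean_t) * (vi - mean_v) for ti, vi in zip(times, values))
    den = sum((ti - mean_t) ** 2 for ti in times)
    slope = num / den
    return mean_v + slope * (t - mean_t)


def early_warning(times: List[float], values: List[float],
                  threshold: float, horizon: float) -> bool:
    """True if the latest value is below the threshold but the fitted trend
    reaches it within `horizon` time units after the last measurement."""
    return values[-1] < threshold and projected(times, values, times[-1] + horizon) >= threshold


months = [0.0, 1.0, 2.0, 3.0]
rising = [1.0, 1.5, 2.0, 2.5]  # hypothetical biomarker, still below 4.0
stable = [2.0, 2.1, 1.9, 2.0]
print(early_warning(months, rising, threshold=4.0, horizon=6.0))  # True
print(early_warning(months, stable, threshold=4.0, horizon=6.0))  # False
```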

3.3 Telemedicine

Building on the IoT and cloud computing that underlie the DT, another application is telemedicine. Using data that are constantly flowing and being analyzed, the DT can enable real-time monitoring and prediction of the patient's health status, so that face-to-face interactions between patient and physician can be reduced.
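Real-time monitoring and prediction could be as simple as smoothing each incoming reading and forecasting the next one. The Python sketch below uses simple exponential smoothing, a classical technique chosen here as an assumption (the text does not name a prediction method); the smoothing factor `alpha` and the example readings are hypothetical.

```python
from typing import Iterable, Iterator, Optional, Tuple


def smooth_and_forecast(stream: Iterable[float],
                        alpha: float = 0.3) -> Iterator[Tuple[float, float]]:
    """For each reading x, update level = alpha * x + (1 - alpha) * level and
    yield (x, level); with simple exponential smoothing the one-step-ahead
    forecast equals the current level."""
    level: Optional[float] = None
    for x in stream:
        level = x if level is None else alpha * x + (1 - alpha) * level
        yield x, level


# Hypothetical systolic blood pressure readings (mmHg) arriving one by one
for reading, forecast in smooth_and_forecast([120.0, 124.0, 131.0, 138.0], alpha=0.5):
    print(f"reading {reading:.0f} -> next forecast {forecast:.1f}")
```

Because it is a generator, the function processes readings as they arrive, which suits a continuous data stream.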

As a result, healthcare platforms are almost certain to emerge in the next decade: digital environments offering services such as medication reminders, crisis warnings, and disease prevention or early detection. The data used are confidential and must be protected by security frameworks and protocols at all times; as discussed previously, data leakage must be prevented [7].
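Two of the platform services mentioned above, medication reminders and crisis warnings, can be sketched in a few lines of Python. The fixed dosing interval, the dates, the critical value, and the message format are hypothetical; the crisis check is meant to consume a forecast produced by the platform's monitoring layer.

```python
from datetime import datetime, timedelta
from typing import List, Optional


def reminder_times(first_dose: datetime, every_hours: int, until: datetime) -> List[datetime]:
    """All reminder times from the first dose up to `until`, at a fixed interval."""
    times = []
    t = first_dose
    while t <= until:
        times.append(t)
        t += timedelta(hours=every_hours)
    return times


def crisis_warning(patient_id: str, forecast: float, critical: float) -> Optional[str]:
    """Return a warning message if the forecast reaches the critical value."""
    if forecast >= critical:
        return f"Crisis warning for {patient_id}: forecast {forecast} >= {critical}"
    return None


for t in reminder_times(datetime(2024, 1, 1, 8), every_hours=8, until=datetime(2024, 1, 2, 8)):
    print("Take medication at", t.strftime("%Y-%m-%d %H:%M"))
print(crisis_warning("patient-1", forecast=182.0, critical=180.0))
```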

4 Conclusions

As history shows, when a revolutionary technology first appears, most people fear the unknown until they see its potential and benefits in their lives. During the next decade, healthcare is likely to undergo profound changes in how we understand it, owing to the advent of the Digital Twin, a concept currently undergoing continuous research and development that brings together health specialists and engineers as part of the Fourth Industrial Revolution.

Although serious obstacles must be overcome before this becomes a publicly available technology, the benefits it offers for healthcare greatly exceed the costs. In the next decade, biomedical engineering is expected to make major advances and become a key player in the redefinition of medicine as personalized and entirely data-driven.

References

1. Barricelli, B.R., Casiraghi, E., Fogli, D.: A survey on digital twin: definitions, characteristics, applications, and design implications. IEEE Access 7, 167653–167671 (2019)

2. Björnsson, B., et al.: Digital twins to personalize medicine. Genome Med. 12(1), 1–4 (2020)

3. Bruynseels, K., Santoni de Sio, F., van den Hoven, J.: Digital twins in health care: ethical implications of an emerging engineering paradigm. Front. Genet. 9, 31 (2018)

4. Feng, Y., Chen, X., Zhao, J.: Create the individualized digital twin for noninvasive precise pulmonary healthcare. Significances Bioeng. Biosci. 1(2), 2–5 (2018)

5. Fuller, A., Fan, Z., Day, C., Barlow, C.: Digital twin: enabling technologies, challenges and open research. IEEE Access 8, 108952–108971 (2020)

6. Gochhait, S., Bende, A.: Leveraging digital twin technology in the healthcare industry-a machine learning based approach. Eur. J. Mol. Clin. Med. 7(6), 2547–2557 (2020)

7. Liu, Y., et al.: A novel cloud-based framework for the elderly healthcare services using digital twin. IEEE Access 7, 49088–49101 (2019)

8. Lou, X., Guo, Y., Gao, Y., Waedt, K., Parekh, M.: An idea of using digital twin to perform the functional safety and cybersecurity analysis. In: INFORMATIK 2019: 50 Jahre Gesellschaft für Informatik-Informatik für Gesellschaft (Workshop-Beiträge). Gesellschaft für Informatik eV (2019)

9. Patrone, C., Lattuada, M., Galli, G., Revetria, R.: The role of Internet of Things and digital twin in healthcare digitalization process. In: Ao, S.-I., Kim, H.K., Amouzegar, M.A. (eds.) WCECS 2018, pp. 30–37. Springer, Singapore (2020). https://doi.org/10.1007/978-981-15-6848-0_3

10. Qi, Q., et al.: Enabling technologies and tools for digital twin. J. Manuf. Syst. 58, 3–21 (2019)

11. Rasheed, A., San, O., Kvamsdal, T.: Digital twin: values, challenges and enablers from a modeling perspective. IEEE Access 8, 21980–22012 (2020)

12. Riesener, M., Schuh, G., Dölle, C., Tönnes, C.: The digital shadow as enabler for data analytics in product life cycle management. Procedia CIRP 80, 729–734 (2019)

13. Singh, M., Fuenmayor, E., Hinchy, E.P., Qiao, Y., Murray, N., Devine, D.: Digital twin: origin to future. Appl. Syst. Innov. 4(2), 36 (2021)

14. Zhang, J., Li, L., Lin, G., Fang, D., Tai, Y., Huang, J.: Cyber resilience in healthcare digital twin on lung cancer. IEEE Access 8, 201900–201913 (2020)

Copyright information

© 2021 IFIP International Federation for Information Processing

About this paper

Cite this paper

Rojas-Arce, J.L., Ortega-Maldonado, E.C. (2021). The Advent of the Digital Twin: A Prospective in Healthcare in the Next Decade. In: Dolgui, A., Bernard, A., Lemoine, D., von Cieminski, G., Romero, D. (eds) Advances in Production Management Systems. Artificial Intelligence for Sustainable and Resilient Production Systems. APMS 2021. IFIP Advances in Information and Communication Technology, vol 633. Springer, Cham. https://doi.org/10.1007/978-3-030-85910-7_26

DOI: https://doi.org/10.1007/978-3-030-85910-7_26

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-85909-1

Online ISBN: 978-3-030-85910-7

eBook Packages: Computer Science, Computer Science (R0)