Abstract

Despite the recent success of deep learning methods in automated medical image analysis tasks, their acceptance in the medical community is still questionable due to the lack of explainability in their decision-making process. The highly opaque feature learning process of deep models makes it difficult to rationalize their behavior and exploit the potential bottlenecks. Hence it is crucial to verify whether these deep features correlate with the clinical features, and whether their decision-making process can be backed by conventional medical knowledge. In this work, we attempt to bridge this gap by closely examining how the raw pixel-based neural architectures associate with the clinical feature based learning algorithms at both the decision level as well as feature level. We have adopted skin lesion classification as the test case and present the insight obtained in this pilot study. Three broad kinds of raw pixel-based learning algorithms based on convolution, spatial self-attention and attention as activation were analyzed and compared with the ABCD skin lesion clinical features based learning algorithms, with qualitative and quantitative interpretations.

T. Chakraborti is funded by EPSRC SeeBiByte and UKRI DART programmes.

T. Chowdhury and A. R. S. Bajwa—First authors with equal contributions.

Access provided by Autonomous University of Puebla. Download conference paper PDF

Similar content being viewed by others

Keywords

- Explainable artificial intelligence

- Melanoma classification

- Digital dermatoscopy

- Attention mechanisms

- Deep machine learning

1 Introduction

Among the several variants of skin lesion diseases, melanoma is the condition that puts patients’ lives at risk because of its highest mortality rate, extensive class variations and complex early stage diagnosis and treatment protocol. Early detection of this cancer is linked to improved overall survival and patient health. Manual identification and distinction of melanoma and its variants can be a challenging task and demands proper skill, expertise and experience of trained professionals. Dermatologists consider a standard set of features (popularly known as the ABCDE features) that takes into consideration the size, border irregularity, colour variation for distinguishing malignant and benign tumours. With proper segmentation boundaries these features can be extracted from images and used as inputs to machine learning algorithms for classification purposes. Also, with the recent advancements of deep learning, Convolutional neural networks (CNNs) [1] are able to differentiate the discriminative features using raw image pixels only [26]. But the decision making process of these complex networks can be opaque. Several approaches have been proposed to identify the image regions that a CNN focuses on during its decision-making process [28,29,30]. Van Molle et al. [3] tried to visualize the CNN learned features at the last layers and identified where these networks look for discriminative features. Young et al. [4] did a similar work towards the interpretability of these networks using GradCAM [5] and kernel SHAP [6] to show how unpredictable these models can be regarding feature selection even when displaying similar performance measures. Both these works demonstrated how pixel-based models can be misguided towards image saliency and focus on undesirable regions like skin hairs, scale marks etc. Also, attention guided CNNs were used [7, 8] to solve the issue of feature localization. Though these works provide a comprehensive insight to where these CNNs look for unique elements in an image they are not sufficient to unveil what exactly these models look for and more importantly, if there is any kind of correlation with their extracted sets of features and those sought by dermatologists (the why question). As the consequences of a false negative can be quite severe for such diagnostic problems, it is of utmost importance to determine if the rules learned by these deep neural networks for decision making in such potential life-threatening scenarios can be backed by medical science. In this paper we have tried to address this issue by experimenting with both handcrafted ABCD features and raw pixel based features learned by a deep learning models, along with exploring if there is any correlation present between them.

2 Dataset and Methodology

2.1 Dataset: Description and Pre-processing

HAM10000 dataset [9] a benchmark data set for skin lesion classification, is used in this study. The dataset contains a total of 10015 dermoscopic images of dimensions \(3 \times 450 \times 600\) distributed over 7 classes namely: melanoma (Mel, 1113 samples), melanocytic nevi (NV, 6705 samples), basal cell carcinoma (BCC, 514 samples), actinic keratosis and intraepithelial carcinoma (AKIEC, 327 samples), benign keratosis (BKL, 1099 samples), dermatofibroma (DF, 115 samples) and vascular lesions (VASC, 142 samples).

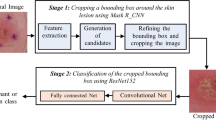

Pre-processing steps are carried out to remove the artifacts. First, the images are center cropped to extract the main lesion region and separate out the natural skin area, scale marks and shadows present due to the imaging apparatus [27]. Further, to remove the body hair and remaining scale marks, a local adaptive thresholding method is used where the threshold value of a pixel is determined by the range of intensities in its local neighbourhood. Finally, the images were enhanced using CLAHE [10] technique, and scaled using maximum pixel value. The entire dataset is divided in a 80 : 10 : 10 ratio as the training, validation and test set, respectively.

2.2 Deep Architectures

Baseline CNN: First, we designed a simple convolutional neural network with 5 convolutional blocks that servers as the baseline for other deep learning models used in this paper. Each convolutional block further consists of a convolutional layer followed by ReLU activation, max pooling (except for the first block) and batch normalization layers. Dropout layers with a dropout probability of 0.2 were used after the convolutional layers of the last two blocks to reduce overfitting. The convolutional blocks are then followed by global average pooling (GAP) [17] suitable for fine-grain classification problems and a softmax based classification layer. We used convolutional kernels with spatial extent 7, 5, 3, 3, 3 for consecutive convolutional blocks with 16, 32, 64, 128 and 256 feature maps, respectively.

CNN with Global Attention: Considering the importance for the network to focus on clinically relevant features, we further test the network by adding global attention modules, proposed by Jetley et al. [18] on top of the last two convolutional blocks of our baseline CNN model. The resulting network is presented in Fig. 1. which is end-to-end trainable. This method exploits the universality between local and global feature descriptors to highlight important features of an input.

First a compatibility score \((c_{i}^{s})\) is calculated using the local feature vector \(l_{i}^{s}\) and the global feature vector g as:

Where, \(l_{i}^{s}\) represents the \(i_{th}\) feature map of \(s_{th}\) convolutional layer. Here \(i \in \{1,2,ldots n\}\) and \(s \in \{1,2,\ldots S\}\) (n = number of feature maps and S = total number of layers in the network). u is the weight vector learning the universal feature sets for the relevant task. \(1 \times 1\) convolutions are used to change the dimensionality of \(l^{s}\) to make it compatible for addition with g. Next, the attention weights a are calculated from the compatibility scores c by simply applying a softmax function function as:

These two operations sum up as the attention estimator. The final output of the attention mechanism for each block s is then calculated as:

Two such \(g_{a}^{s}\)s are concatenated as shown and a dense layer is added on top of it, to make the final prediction.

Spatial Self-attention Model: Inspired by the enormous success achieved by the transformer networks [19] in the field of natural language processing (NLP), Ramachandran et al. [20] proposed a classification framework in spatial domain entirely based on self-attention. The paper showed state-of-the-art performance on multiple popular image datasets questioning the need for convolution in vision tasks. Like convolution, the fundamental goal of self-attention also is to capture the spatial dependencies of a pixel with its neighbourhood. It does so by calculating a similarity score between a query pixel and a set of key pixels in the neighbourhood with some spatial context.

Here, we have modified the self-attention from the original work. In case of self-attention, the local neighbourhood \(\mathcal {N}_{k}\) is denoted as the memory block. In contrast to the global attention modules, here the attention is calculated over a local region, which makes it flexible for using at any network depth without causing excessive computational burden. Two different matrices, query (\(q_{ij}\)) and keys (\(k_{k}\)), are calculated from \(x_{ij}\) and \(x_{ab} \in \mathcal {N}_{k}\), respectively by means of linear transformations as shown in Fig. 2. Here, \(q_{ij} = Qx_{ij}\) and \(k_{k} = Kx_{ab}\) where \(Q,K\in \mathbb {R}^{d_{out} \times d_{in}}\) are the query and key matrices respectively and formulated as the model parameters. Intuitively, the query represents the information (pixel) to be matched with a look up table containing the addresses and numerical values of a set of information represented by the keys.

In the original work [20], proposing the self-attention layer in spatial domain, a separate value matrix V is taken to calculate the values v which is a linear projection of the original information. Technically, in our case, keys are essentially the same thing, with the keys containing extra positional information that has been added explicitly. So, we’ve discarded v entirely and used k only for calculating the attention weights as well as representing the original information; that reduces the total number of model parameters. In practice, the input is divided into several parts along the depth (feature maps) and multiple convolution kernels. Multiple such query-key matrix pairs known as heads are used to learn distinct features from an input. Unlike [20], the single headed normalized attention scores in the neighbourhood \(\mathcal {N}_{k}\) are calculated as the scaled dot product of queries and keys. Further, while calculating the attention scores, positional information is injected into the keys in the form of relative positional embedding as mentioned in [20].

Where \( r_{a-i,b-j}\) is obtained by concatenating row and column offset embeddings \(r_{a-i}\) and\(r_{b-j}\) respectively, with \(a-i\) and \(b-j\) being row and column offsets of each element \(ab \in \mathcal {N}_{k}\) from input \(x_{ij}\). The attention weighted output \(y_{ij}^{att}\) corresponding to pixel \(x_{ij}\) is calculated as:

Here, the same query and key matrices are used to calculate the attention outputs for each (i, j) of the input x.

Then we designed our model on the same structural backbone as our baseline CNN by replacing all the convolution layer with our self-attention layers.

Attention as Activation Model: Activation functions and attention mechanisms are typically treated as having different purposes and have evolved differently. However upon comparison, it can be seen that both the attention mechanism and the concept of activation functions give rise to non-linear adaptive gating functions [24]. To exploit both the locality of activation functions and the contextual aggregation of attention mechanisms, we use a local channel attention module, which aggregates point-wise cross-channel feature contextual information followed by sign-wise attention mechanism [24].

Our activation function resorts to point-wise convolutions [17] to realize local attention, which is a perfect fit since they map cross-channel correlations in a point-wise manner. The architecture of the local channel attention based attention activation unit is illustrated in Fig. 3. The goal is to enable the network to selectively and element-wisely activate and refine the features according to the point-wise cross-channel correlations. To reduce parameters, the attention weight \(L(X) \in R^{C \times H \times W}\) is computed via a bottleneck structure.

Input(X) is first passed through a 2-D convolutional layer into a point-wise convolution of kernel size \( \frac{C}{r} \times C \times 1 \times 1 \) followed by batch normalization. The parameter r is the channel reduction ratio. This output is passed through a rectified linear unit (ReLU) activation function. The output of the ReLU is input to another point-wise convolution of kernel size \(C \times \frac{C}{r} \times 1 \times 1\) followed by batch normalization (BN in Fig. 3). Finally, to obtain the attention weight L(X), the output is passed into a sigmoid function. It is to be noted that L(X) has the same shape as the input feature maps and can thus be used to activate and highlight the subtle details in a local manner, spatially and across channels. The activated feature map \(X'\) is obtained via an element-wise multiplication with L(X):

In element-wise sign-attention[23], positive and negative elements receive different amounts of attention. We can represent the output from the activation function (\(\mathcal {L}\)) with parameters \(\alpha \) and \(X'\).

Where \(\alpha \) is a learnable parameter and \(C(\cdot )\) clamps the input variable between [0.01, 0.99]. \(X'\) is the above calculated activated feature map. R(X) is the output from standard rectified linear unit.

This combination amplifies positive elements and suppresses negative ones. Thus, the activation function learns an element-wise residue for the activated elements with respect to ReLU which is an identity transformation, which helps mitigate gradient vanishing. We design the model based on our baseline CNN with only three blocks but with the above attentional activation function in place of ReLU.

2.3 ABCD Clinical Features and Classification

Dermatologists consider certain clinical features during the classification of malignant or benign skin lesions. A popular example is the ABCDE feature set [2]. In this approach, Asymmetry, Border irregularity, Color variation, Diameter and Evolving or changing of a lesion region are taken into consideration for determining its malignancy (Ref. Fig. 4.). Asymmetry – Melanoma is often asymmetrical, which means the shape isn’t uniform. Non-cancerous moles are typically uniform and symmetrical in shape. Border irregularity – Melanoma often has borders that aren’t well defined or are irregular in shape, whereas non-cancerous moles usually have smooth, well-defined borders. Color variation – Melanoma lesions are often more than one color or shade. Moles that are benign are typically one color. Diameter – Melanoma growths are normally larger than 6mm in diameter, which is about the diameter of a standard pencil. Since we do not have time series data, we extracted the first 4 (ABCD) features for each image in our dataset. Before feature extraction, an unsupervised segmentation framework is designed based on OTSU’s [11] thresholding, morphological operations, and contour detection to separate out the main lesion region from the skin. From these segmented regions, the above-mentioned set of features were extracted using several transformations and elementary mathematical functions [12, 13]. Random Forest (RF) [14] and Support Vector Machines (SVM) [15] are used for the final classification with grid search [16] to find the optimal set of hyperparameters.

3 Experiments and Results

In this section, we present the experimental results, both quantitative and qualitative. First, in Table 1, we present the numerical results of the methods described in the preceding section. As evaluation metrics, we have used accuracy, AUC-ROC, precision, recall, and F1 score. Equalization sampling of minority classes was performed to tackle the problem of imbalanced dataset. All the deep learning models were trained to minimize the categorical crossentropy loss and the parameters were updated using ADAM optimizer [22].

First, we trained several traditional machine learning algorithms such as random forest and SVM, based on the ABCD features extracted as mentioned in Sect. 2.3. Grid search is used to choose the optimal set of hyperparameters and as shown in Table 1 a random forest model with 200 trees showed the best classification performance and its results are used for further comparison with the pixel-based models.

Next, multiple raw pixel-based deep learning models, as mentioned in Sect. 2.2, were trained and evaluated for the purpose of comparing and analyzing their performance with the ABCD feature based classification method, as well as to search for any feature correlation.

3.1 Quantitative Results

Table 1 shows that even with suboptimal segmentation maps ABCD features have a high discriminating power of malignancy detection and classification. Further, use of finer lesion segmentation maps obtained by a manual or supervised approach can boost the classification performance of learning algorithms utilizing these sets of features. The overall performances of the deep models are also presented.

CNNs with global attention modules showed better results compared to the baseline CNN architecture that can be explained by the improved localization and feature selection capabilities of attention modules, whereas the self-attention based model performs similar to baseline CNN. Attention as activation based model outperforms CNNs of the same size. Self attention based models face the problem that using self-attention in the initial layers of a convolutional network yields worse results compared to using the convolution stem. This problem is overcome by Attention as activation based model and is the most cost effective solution as our activation units are responsible only for activating and refining the features extracted by convolution.

Table 2 shows that the performance of our proposed variation of the spatial self-attention model is not affected when we consider keys (k) and values (v) as identical metrics. This design of the spatial self-attention layer offers similar performance at lesser parameter settings and lower computational cost.

In Fig. 5, we present the confusion matrix of stand-alone self-attention and attention as activation models on the test dataset. Both models perform well on tumor types melanoma (mel), melanocytic nevi (nv), basal cell carcinoma (bcc), actinic keratosis (akiec), intraepithelial carcinoma and benign keratosis (bkl). However, occasionally the models confuse melanocytic nevi (nv) as benign keratosis (bkl) and vascular lesions (vasc) as melanocytic nevi lesions (nv).

3.2 Alignment Between ABCD Features and Deep Learned Features

To justify the decision level correlation between deep learned features and the ABCD features, the predictions on the test dataset were analyzed using four major criteria as presented in Table 3. We find relatively higher values in the first and last columns, where both the two broad classes of algorithms either succeed or fail, clearly indicating a correlation between their sought out features. Though this is not sufficient to establish direct feature correspondence, the results point towards some clinical relevance of deep models at a decision level.

We calculate the ABCD features from the attention maps of our self attention model and the ground truth segmentation maps. We use Random Forest and Support Vector Machine models on this data. The results are presented in Table 4. These results point towards the high correspondence in the ABCD features obtained by ground truth segmentation maps (clinical features) and the attention maps of self attention based model (deep learned features). We also calculate the dice score [25] to compare the similarity between the ground truth segmentation maps and the deep learning model attention maps. The average dice score calculated over all the images as presented in Table 5. These positive results help us to closely examine how the raw pixel-based neural architectures associate with the clinical feature based learning algorithms at the feature level and indicate the similarity between model predicted and ground truth lesion regions. In a few failure cases, the dice score calculated was low. We present two such examples in Fig. 6.

Comparison of segmentation maps used for ABCD feature extraction and important regions according to deep learning models for a random set of test images. A red box around a segmentation/attention map represents incorrect prediction whereas a green box denotes correct prediction. (Color figure online)

3.3 Qualitative Results

Next, we have visually explored whether there is any direct alignment between the deep learned features and ABCD features by analyzing their global feature descriptors and segmentation maps, respectively, for a random set of test images. CAM [21] is used for visualizing the global feature descriptors for the deep classification models. From the visual results presented in Fig. 7, it is clear that the ability to precisely localize the lesion region is the most crucial quality that a model should possess. For most of the cases, whenever the attention heat maps have a satisfactory overlapping with the correct segmentation map (rows 3, 5, 6, 7) the results are correct, and whenever they differ significantly (row 2) the results are incorrect. The third column of the figure shows the activation maps of the baseline CNN to be very sparse that indicates poor localization capability, leading to many incorrect predictions. The localization capability of the attention-based models (columns 4,5 and 6) are much better than the baseline CNN that accounts for better classification results. These attention-based models have helped to pinpoint the lesion areas in the image and better addressed the fine-grain nature of the problem. Visually the localization power of the spatial self-attention and attention as activation models are quite accurate, however, in many cases, they tend to focus on the boundary regions of the image or have poor overlapping with the lesion area, which leads to incorrect predictions and suboptimal results. A good dice score suggests a descent alignment of model activations with some of the clinical features such as Asymmetry and Border irregularity, reflecting with their accuracy.

4 Conclusion

In this work, we have investigated whether the features extracted by deep models such as convolutional networks, self-attention models and attention as activation models correlate with clinically relevant features. We have taken automated skin cancer detection as the test case and the quantitative, as well as qualitative results, point towards an underlying correlation between them at feature and decision level. A visual analysis has been performed to check whether the activation maps of deep models do possess any similarity with the segmentation maps used for clinical feature (ABCD features for skin lesion) extraction. Where the clinical features are unique and concrete representations of a lesion region, the deep learned features are more abstract and compound. However, with the help of a comparative analysis of different methods we are able to bridge the gap of trustability, when it comes to justifying their output.

References

LeCun, Y., Bengio, Y.: Convolutional networks for images, speech, and time series. In: The Handbook of Brain Theory and Neural Networks (1995)

Jensen, D., Elewski, B.E.: The ABCDEF rule: combining the ABCDE rule and the ugly duckling sign in an effort to improve patient self-screening examinations. J. Clin. Aesthetic Dermatol. 8(2), 15 (2015)

Van Molle, P., De Strooper, M., Verbelen, T., Vankeirsbilck, B., Simoens, P., Dhoedt, B.: Visualizing convolutional neural networks to improve decision support for skin lesion classification. In: Stoyanov, D., et al. (eds.) MLCN/DLF/IMIMIC -2018. LNCS, vol. 11038, pp. 115–123. Springer, Cham (2018). https://doi.org/10.1007/978-3-030-02628-8_13

Young, K., Booth, G., Simpson, B., Dutton, R., Shrapnel, S.: Deep neural network or dermatologist? In: Suzuki, K., et al. (eds.) ML-CDS/IMIMIC -2019. LNCS, vol. 11797, pp. 48–55. Springer, Cham (2019). https://doi.org/10.1007/978-3-030-33850-3_6

Selvaraju, R.R., Cogswell, M., Das, A., Vedantam, R., Parikh, D., Batra, D.: Grad-cam: visual explanations from deep networks via gradient-based localization. In: Proceedings of the IEEE International Conference on Computer Vision (ICCV), pp. 618–626 (2017)

Lundberg, S.M., Lee, S.-I.: A unified approach to interpreting model predictions. In: Advances in Neural Information Processing Systems, pp. 4765–4774 (2017)

Aggarwal, A., Das, N., Sreedevi, I.: Attention-guided deep convolutional neural networks for skin cancer classification. In: IEEE International Conference on Image Processing Theory, Tools and Applications, pp. 1–6 (2019)

Zhang, J., Xie, Y., Xia, Y., Shen, C.: Attention residual learning for skin lesion classification. IEEE Trans. Med. Imaging 38(9), 2092–2103 (2019)

Tschandl, P., Rosendahl, C., Kittler, H.: The HAM10000 dataset, a large collection of multi-source dermatoscopic images of common pigmented skin lesions. Sci. Data 5(1), 1–9 (2018)

Pizer, S.M., et al.: Adaptive histogram equalization and its variations. Comput. Vis. Graphics Image Process. 39(3), 355–368 (1987)

Otsu, N.: A threshold selection method from gray-level histograms. IEEE Trans. Syst. Man Cybern. 9(1), 62–66 (1979)

Zaqout, I.: Diagnosis of skin lesions based on dermoscopic images using image processing techniques. Pattern Recognition-Selected Methods and Applications Intech Open (2019)

Amaliah, B., Fatichah, C., Widyanto, M.R.: ABCD feature extraction of image dermatoscopic based on morphology analysis for melanoma skin cancer diagnosis. Jurnal Ilmu Komputer dan Informasi 3(2), 82–90 (2010)

Breiman, L.: Random forests. Mach. Learn. 45(1), 5–32 (2001)

Cortes, C., Vapnik, V.: Support-vector networks. Mach. Learn. 20(3), 273–297 (1995)

Bergstra, J., Bardenet, R., Bengio, Y., Kégl, B.: Algorithms for hyper-parameter optimization. Advances in Neural Information Processing Systems (2011)

Lin, M., Chen, Q., Yan, S.: Network in network. arXiv:1312.4400 (2013)

Jetley, S., Lord, N.A., Lee, N., Torr, P.H.S.: Learn to pay attention. arXiv:1804.02391 (2018)

Vaswani, A., et al.: Attention is all you need. In: Advances in Neural Information Processing Systems (2017)

Ramachandran, P., Parmar, N., Vaswani, A., Bello, I., Levskaya, A., Shlens, J.: Stand-alone self-attention in vision models. arXiv:1906.05909 (2019)

Zhou, B., Khosla, A., Lapedriza, A., Oliva, A., Torralba, A.: Learning deep features for discriminative localization. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (2016)

Kingma, D., Ba, J.: Adam: a method for stochastic optimization. In: International Conference on Learning Representations (2014)

Chen, D., Li, J., Xu, K.: AReLU: attention-based rectified linear unit. arXiv:2006.13858 (2020)

Dai, Y., Oehmcke, S., Gieseke, F., Wu, Y., Barnard, K.: Attention as activation. arXiv:2007.07729 (2020)

Eelbode, T., et al.: Optimization for medical image segmentation: theory and practice when evaluating with Dice score or Jaccard index. IEEE Trans. Med. Imaging 39(11), 3679–3690 (2020)

Nida, N., Irtaza, A., Javed, A., Yousaf, M.H., Mahmood, M.T.: Melanoma lesion detection and segmentation using deep region based convolutional neural network and fuzzy C-means clustering. Int. J. Med. Inform. 124, 37–48 (2019)

Bisla, D., Choromanska, A., Berman, R.S., Stein, J.A., Polsky, D.: Towards automated melanoma detection with deep learning: data purification and augmentation. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (2019)

Adekanmi, A.A., Viriri, S.: Deep learning-based system for automatic melanoma detection. IEEE Access 8, 7160–7172 (2019)

Adekanmi, A.A., Viriri, S.: Deep learning techniques for skin lesion analysis and melanoma cancer detection: a survey of state-of-the-art. Artif. Intell. Rev. 54(2), 811–841 (2021)

Codella, N., et al.: Skin lesion analysis toward melanoma detection 2018: a challenge hosted by the international skin imaging collaboration (ISIC). arXiv:1902.03368 (2019)

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2021 Springer Nature Switzerland AG

About this paper

Cite this paper

Chowdhury, T., Bajwa, A.R.S., Chakraborti, T., Rittscher, J., Pal, U. (2021). Exploring the Correlation Between Deep Learned and Clinical Features in Melanoma Detection. In: Papież, B.W., Yaqub, M., Jiao, J., Namburete, A.I.L., Noble, J.A. (eds) Medical Image Understanding and Analysis. MIUA 2021. Lecture Notes in Computer Science(), vol 12722. Springer, Cham. https://doi.org/10.1007/978-3-030-80432-9_1

Download citation

DOI: https://doi.org/10.1007/978-3-030-80432-9_1

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-80431-2

Online ISBN: 978-3-030-80432-9

eBook Packages: Computer ScienceComputer Science (R0)