Abstract

Multiparty multiobjective optimization problems (MPMOPs) are a type of multiobjective optimization problem (MOP) in which multiple decision makers are involved, different decision makers have different objectives to optimize, and at least one decision maker has more than one objective. Although evolutionary multiobjective optimization has been studied for many years in the evolutionary computation field, evolutionary multiparty multiobjective optimization has received little attention. To address MPMOPs, an algorithm based on a multiobjective evolutionary algorithm is proposed in this paper, in which the non-dominated levels from multiple parties are regarded as multiple objectives for sorting the candidates in the population. Experiments are conducted on benchmark problems that have common Pareto optimal solutions, and the experimental results demonstrate that the proposed algorithm has competitive performance.

This study is supported by the National Key Research and Development Program of China (No. 2020YFB2104003) and the National Natural Science Foundation of China (No. 61573327).

1 Introduction

Many real-world optimization problems have more than one objective, and these objectives conflict with each other. Such problems are called multiobjective optimization problems (MOPs) [1, 4, 11] when they have two or three objectives and many-objective optimization problems (MaOPs) [6] when they have more than three objectives.

Multiobjective evolutionary algorithms (MOEAs), such as NSGA-II [2], MOEA/D [16], SPEA2 [20] and Two_Arch2 [14], have been studied for many years. MOEAs can find a set of optimal solutions in a single run, which has attracted many researchers to design efficient evolutionary algorithms for solving MOPs [12, 13, 19].

However, there are cases in which multiple decision makers (DMs) are involved and each DM pays attention to only some of the objectives of an MOP. If an MOEA searches for optimal solutions only on the objectives of one DM, the objectives of the other DMs may deteriorate. If existing MOEAs are applied directly to the MOP that includes all objectives from all parties, they may produce too many solutions that are Pareto optimal for the combined MOP but not Pareto optimal for each DM's own objectives.

Multiparty multiobjective optimization problems (MPMOPs) are used to express the above situation. An MPMOP has multiple parties, and at least one party has more than one objective. From the viewpoint of each DM, an MPMOP can be seen as an MOP over that DM's own objectives. Although an MPMOP can thus be regarded as multiple MOPs, these MOPs differ from one another in most circumstances, so MPMOPs cannot be solved directly by the original MOEAs. This is because each DM does not consider all objectives of the MPMOP, only the few objectives that he or she cares about.

A common method for solving MPMOPs is to obtain the final optimal solutions through a complex negotiation, run by a third party, among the Pareto optimal solutions of each party [7, 8, 15]. Recently, in [9], Liu et al. proposed the first method without negotiation, called OptMPNDS, to address a special class of MPMOPs with common Pareto optimal solutions. An MPMOP has common Pareto optimal solutions if there exists at least one solution that is Pareto optimal for all parties. In other words, the intersection of the Pareto optimal solutions of the MOPs seen by the individual DMs is not empty. OptMPNDS defines the dominance relation between solutions based on their Pareto optimal levels for the multiple parties, where the levels are obtained by the non-dominated sorting in NSGA-II [2]. In each generation, after the individuals are sorted by the objectives of each party, the common solutions that have the same level for all parties are assigned to the same rank. Then, the rank of each remaining individual is set to the maximum level it obtains over all parties. However, this rule cannot properly handle individuals that have the same maximum level but differ in their other levels.

In this paper, we propose an improved evolutionary algorithm, called OptMPNDS2, to solve MPMOPs. Like OptMPNDS, OptMPNDS2 is based on NSGA-II, but it overcomes the shortcoming mentioned above. Experiments are conducted on the benchmark in [9]. The experimental results show that OptMPNDS2 performs better on some MPMOPs.

The rest of this paper is organized as follows. Section 2 gives the related work. Section 3 explains the proposed algorithm, and Sect. 4 describes the performance metrics and shows the experimental results. Finally, we give a brief conclusion in Sect. 5.

2 Related Work

2.1 Multiparty Multiobjective Optimization Problems (MPMOPs)

An MPMOP is a particular class of multiobjective optimization problem (MOP). MOPs are optimization problems that have at least two objectives. Because MPMOPs are based on MOPs, the related concepts of MOPs are described first. Here, for convenience, an MOP is defined as a minimization problem [5]:

\[
\begin{aligned}
\text{Minimize}\quad & F(x) = (f_1(x), f_2(x), \cdots , f_m(x)) \\
\text{subject to}\quad & h_i(x) = 0, \quad i = 1, \cdots , n_p \\
& g_j(x) \le 0, \quad j = 1, \cdots , n_q \\
& x \in [x_{min}, x_{max}]^d
\end{aligned} \tag{1}
\]

where \(f_i\) denotes the i-th objective function and F, composed of the m objectives, denotes the vector of objective functions to be minimized. \(h_i(x)=0\) represents the i-th equality constraint, of which there are \(n_p\) in total; \(g_j(x)\le 0\) represents the j-th inequality constraint, of which there are \(n_q\) in total. x, a d-dimensional vector, stands for the decision variables, which are bounded below by \(x_{min}\) and above by \(x_{max}\) in each dimension.

Dominance is a relation between decision vectors [19]. Given two decision vectors x and y, if \(f_i(x) \le f_i(y)\) holds for every objective \(f_i\) and there exists at least one objective \(f_i\) satisfying \(f_i(x) < f_i(y)\), then x is said to Pareto dominate y, denoted as \(x \prec y\) [10]. The Pareto optimal set (PS) is the set of Pareto optimal solutions: a decision vector x belongs to PS if and only if no solution dominates x. The Pareto optimal front (PF) is the set of objective vectors of the decision vectors in the Pareto optimal set, formally defined as \(\text {PF} = \{f = (f_1(x),f_2(x),\cdots ,f_m(x))|x \in \text {PS}\}\).
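The dominance test and the resulting non-dominated set can be illustrated with a minimal Python sketch. It assumes minimization and objective vectors given as plain tuples; the function names `dominates` and `pareto_front` are illustrative and do not come from the paper.

```python
from typing import List, Sequence


def dominates(fx: Sequence[float], fy: Sequence[float]) -> bool:
    """Return True if objective vector fx Pareto dominates fy (minimization)."""
    if len(fx) != len(fy):
        raise ValueError("objective vectors must have the same length")
    no_worse = all(a <= b for a, b in zip(fx, fy))
    strictly_better = any(a < b for a, b in zip(fx, fy))
    return no_worse and strictly_better


def pareto_front(points: List[Sequence[float]]) -> List[Sequence[float]]:
    """Return the points that are not dominated by any other point in the list."""
    return [p for p in points if not any(dominates(q, p) for q in points)]


if __name__ == "__main__":
    pts = [(1, 3), (2, 2), (3, 3)]
    print(pareto_front(pts))  # (3, 3) is dominated by (2, 2) and is dropped
```

This brute-force filter needs a quadratic number of comparisons, which is enough for illustration; NSGA-II uses a faster bookkeeping scheme to obtain all levels at once.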

There are multiple DMs in an MPMOP, where each DM focuses on different objectives and at least one DM has at least two objectives. Different from Formula (1), where \(f_i(x)\) denotes one objective of the solution x, in an MPMOP \(f_i(x)\) is a vector function that represents all objectives of one party. Specifically,

\[
f_i(x) = (f_{i1}(x), f_{i2}(x), \cdots , f_{ij_i}(x)),
\]

where \(j_i\) denotes the number of objectives of the i-th party. Then m becomes the number of parties.

As shown in [9], comparing two individuals of an MPMOP on all objectives together does not work well during evolution, because each DM focuses only on his or her own objectives. Therefore, in [9], each individual is assigned the maximum of the Pareto optimal levels it obtains over all parties, and individuals are compared by these maximum levels.

2.2 NSGA-II

Non-dominated sorting genetic algorithm II (NSGA-II) [2] is a popular evolutionary algorithm for solving MOPs. NSGA-II has two core strategies: the fast non-dominated sorting and the calculation of the crowding distance. The fast non-dominated sorting uses the Pareto dominance relation to quickly obtain the Pareto levels of all individuals in the evolutionary population. The crowding distance describes the distribution of the individuals: a large crowding distance means that the individual lies in a region with few individuals, while a small one means that the individual lies in a dense region.
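The crowding distance can be sketched as follows. This is a minimal version of the commonly used NSGA-II formulation, written under the assumption that the input holds the objective vectors of a single Pareto level; the function name `crowding_distance` is illustrative.

```python
import math
from typing import List, Sequence


def crowding_distance(front: List[Sequence[float]]) -> List[float]:
    """Crowding distance of each objective vector within one Pareto level."""
    n = len(front)
    if n == 0:
        return []
    dist = [0.0] * n
    for k in range(len(front[0])):
        # Indices of the individuals ordered by the k-th objective.
        order = sorted(range(n), key=lambda i: front[i][k])
        lo, hi = front[order[0]][k], front[order[-1]][k]
        # Boundary individuals are always kept, so they get infinite distance.
        dist[order[0]] = dist[order[-1]] = math.inf
        if hi == lo:
            continue  # all values equal: this objective adds nothing
        for pos in range(1, n - 1):
            gap = front[order[pos + 1]][k] - front[order[pos - 1]][k]
            dist[order[pos]] += gap / (hi - lo)
    return dist


if __name__ == "__main__":
    print(crowding_distance([(0, 4), (1, 3), (2, 1), (4, 0)]))
```

In the example, the two interior points receive finite values and the point with the wider gap to its neighbours receives the larger one, matching the interpretation that a large distance indicates a sparse region.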

In each generation of NSGA-II, the process is shown as follows. First, the crossover and mutation operators are adopted to generate offspring. Next, the parent and offspring are put together to sort the Pareto levels. Then, the crowding distances of the individuals in the same level are obtained. Finally, based on the Pareto levels and crowding distances, the population of next generation is selected from the parent population and the offspring population.
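The final selection step of a generation can be sketched in a few lines. The sketch assumes that the Pareto level and the crowding distance of every individual in the merged parent and offspring population have already been computed, with crowding distances computed per level; the function name `select_next_population` is illustrative.

```python
from typing import List


def select_next_population(ranks: List[int], crowding: List[float], n: int) -> List[int]:
    """Return the indices of the n survivors.

    Individuals are ordered by Pareto level (lower is better); within a level,
    a larger crowding distance is preferred.
    """
    if len(ranks) != len(crowding):
        raise ValueError("ranks and crowding must have the same length")
    order = sorted(range(len(ranks)), key=lambda i: (ranks[i], -crowding[i]))
    return order[:n]


if __name__ == "__main__":
    ranks = [1, 2, 1, 2, 3]
    crowding = [float("inf"), 0.5, 1.0, float("inf"), float("inf")]
    print(select_next_population(ranks, crowding, 3))
```

Sorting by the pair (level, negative crowding distance) and truncating keeps whole levels while they fit and breaks the last level by crowding distance, which is the selection rule described above.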

3 The Proposed Algorithm

In this section, an algorithm based on NSGA-II is proposed to solve MPMOPs. Different from traditional MOPs, in MPMOPs we should maximize the profit of each party. That is, we should try to approach the Pareto front of each party. To achieve this goal, we optimize the objectives of all parties simultaneously, but group them by party.

The core issue is how to evaluate a given individual. For a DM, the Pareto optimal level number \(L_i\) of party i can be regarded as an objective value of each individual. Therefore, for m parties in total, we have the following multiobjective problem:

\[
\text{Minimize}\quad L(x) = (L_1(x), L_2(x), \cdots , L_m(x)) \tag{2}
\]

where m represents the number of parties and \(L_i\) of party i is obtained by the non-dominated sorting function of NSGA-II. It should be noted that, when \(L_i\) of party i is calculated, only the objectives of party i are considered. Based on Formula (2), we can sort the individuals in the evolutionary population according to the standard Pareto dominance, and the corresponding algorithm can then be designed.

The pseudocode of OptMPNDS2 is given in Algorithm 1, and its steps are as follows.

(1) The population \(P_0\) is initialized with population size N.

(2) The generation counter t and the offspring population \(Q_t\) are set to 0 and \(\emptyset \), respectively.

(3) In the loop from steps 3 to 15, the population \(P_t\) and its offspring \(Q_t\) are merged into \(R_t\), and the function MPNDS2 is applied to sort these individuals into different ranks \(\mathcal {F}\). The individuals within the same rank are then sorted by crowding distance. Next, the parameters t and \(P_t\) are updated for the next generation, and N individuals are picked from the best to the worst and stored in the next-generation population \(P_t\). Finally, the offspring \(Q_t\) is generated from \(P_t\) by the crossover and mutation operators, and the loop is repeated until the termination condition is satisfied.

(4) The final solutions MPS are returned.

OptMPNDS2 is the same as NSGA-II except for the function MPNDS2(.), whose pseudocode is given in Algorithm 2.

As depicted in Algorithm 2, the key point of OptMPNDS2 is to redefine the dominance relation among individuals in the objective space \(L = (L_1, L_2, \dots , L_m)\). From the perspective of party i, the individuals are sorted by \(F_i\) (i.e., the objective function of party i). For the individuals in \(R_t\), the Pareto level for party i is calculated as the new “objective” of that party and stored in \(\mathcal {L}(:,i)\). After the individuals have been sorted for all m parties, the non-dominated sorting is performed again with \(\mathcal {L}\) as the objectives. Here, the non-dominated sorting function NonDominatedSorting adopted in our algorithm is the same as that in NSGA-II [18].
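The two-stage sorting described above can be sketched in Python. This is a minimal illustration, not the authors' implementation: it replaces the fast non-dominated sorting with a simple level-peeling loop, and the names `non_dominated_levels` and `mpnds2` as well as the input layout (one list of objective vectors per party) are assumptions made for the example.

```python
from typing import List, Sequence


def dominates(a: Sequence[float], b: Sequence[float]) -> bool:
    """True if a Pareto dominates b (minimization)."""
    return all(x <= y for x, y in zip(a, b)) and any(x < y for x, y in zip(a, b))


def non_dominated_levels(objs: List[Sequence[float]]) -> List[int]:
    """Assign each vector its 1-based non-dominated level by peeling off fronts."""
    levels = [0] * len(objs)
    remaining = set(range(len(objs)))
    level = 0
    while remaining:
        level += 1
        front = [i for i in remaining
                 if not any(dominates(objs[j], objs[i]) for j in remaining if j != i)]
        for i in front:
            levels[i] = level
        remaining -= set(front)
    return levels


def mpnds2(party_objs: List[List[Sequence[float]]]) -> List[int]:
    """Rank individuals by sorting per party first, then sorting the level vectors.

    party_objs[i][k] is the objective vector of individual k as seen by party i.
    """
    # Stage 1: one level per party, using only that party's objectives.
    per_party = [non_dominated_levels(objs) for objs in party_objs]
    # Stage 2: treat (L_1, ..., L_m) of each individual as its new objectives.
    level_vectors = list(zip(*per_party))
    return non_dominated_levels(level_vectors)


if __name__ == "__main__":
    party1 = [(1, 1), (2, 2), (3, 3), (4, 4)]
    party2 = [(3, 3), (3, 3), (1, 1), (2, 2)]
    print(mpnds2([party1, party2]))
```

In the example, the four individuals have level vectors (1, 3), (2, 3), (3, 1) and (4, 2), so the first and third are mutually non-dominated and form the first rank, while the other two form the second rank.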

Although both OptMPNDS2 and OptMPNDS first sort the individuals for each party and then sort them according to the per-party levels, the detailed behaviors of the two algorithms are different. OptMPNDS uses the maximum level over all parties to sort the individuals, while OptMPNDS2 performs the non-dominated sorting again on the non-dominated level numbers of the individuals.

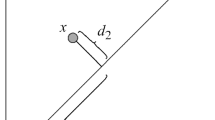

Here, we use an example to explain the difference between OptMPNDS2 and OptMPNDS. Suppose there are two individuals x and y in a bi-party multiobjective optimization, and their non-dominated level numbers are (1, 3) and (2, 3), respectively.

(1) In OptMPNDS, x and y have the same rank because the maximum non-dominated level of each is 3.

(2) In OptMPNDS2, x dominates y. For the first party, their non-dominated levels are 1 and 2, respectively; for the second party, their non-dominated levels are the same. Therefore, according to Formula (2), x dominates y.
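The example can be checked with a few lines of Python. The sketch models only the two comparison rules as they are described in the text (maximum level for OptMPNDS, Pareto dominance on the level vectors for OptMPNDS2); the variable names are illustrative.

```python
def dominates(a, b):
    """True if level vector a Pareto dominates level vector b (smaller is better)."""
    return all(p <= q for p, q in zip(a, b)) and any(p < q for p, q in zip(a, b))


x_levels = (1, 3)  # non-dominated levels of x for party 1 and party 2
y_levels = (2, 3)  # non-dominated levels of y for party 1 and party 2

# Maximum-level rule: both individuals collapse to the same value.
same_rank_under_max_rule = max(x_levels) == max(y_levels)

# Dominance on the level vectors: x is no worse for both parties and
# strictly better for party 1.
x_dominates_y = dominates(x_levels, y_levels)

if __name__ == "__main__":
    print(same_rank_under_max_rule, x_dominates_y)
```

The maximum-level rule discards the difference in the first party's level, whereas the dominance comparison keeps it.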

4 Experiments

4.1 Parameter Settings

In [9], 11 MPMOP test problems with the common Pareto optimal solutions are given, and two algorithms, i.e., OptMPNDS and OptAll, are used to solve MPMOPs. OptAll just views the problems as MOPs and performs the NSGA-II to obtain solutions.

To compare with these two algorithms, OptMPNDS2 uses the same parameters as [9]. All three algorithms use the simulated binary crossover (SBX) and polynomial mutation [3], whose distribution indexes are set to 20. The rates of crossover and mutation are set to 1.0 and 1/d, respectively, where d represents the dimension of the individuals. For each test problem, all algorithms are run 30 times to obtain the average results. In each run, the population size is set to 100 and the maximum number of fitness evaluations is set to \(1000 \cdot d \cdot m\), where d and m represent the dimension of the MPMOP and the number of parties, respectively.

Inverted generational distance (IGD) [17] is an indicator of solution quality that measures both the convergence and the uniformity of the solutions. To adapt this metric to MPMOPs, the work [9] slightly modifies the distance between an individual v and a PF (denoted by P) as follows:

\[
d(v, P) = \min_{s \in P} \sum_{i=1}^{m} \sqrt{\sum_{j=1}^{j_i} (v_{ij} - s_{ij})^2} \tag{3}
\]

where \(v_{ij} \) and \(s_{ij}\) denote the j-th objective values of the i-th party for v and s, respectively. There are m parties and the i-th party has \(j_i\) objectives.

Based on Formula (3), IGD is calculated as follows:

\[
\text{IGD}(P^*, P) = \frac{\sum_{v \in P^*} d(v, P)}{|P^*|} \tag{4}
\]

where \(P^*\) represents the true PF and P represents the PF obtained by the algorithm.

For IGD, the smaller value means the better performance of the algorithm, since it measures the distance between the true PF and PF obtained by the algorithm.
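A multiparty IGD of this kind can be sketched as follows. The sketch rests on an assumption that should be checked against [9]: the distance between two solutions is taken as the sum, over the parties, of the Euclidean distances between their objective vectors for each party. The function names and the data layout (each solution is a list of per-party objective tuples) are illustrative.

```python
import math
from typing import List, Sequence

Solution = Sequence[Sequence[float]]  # one objective vector per party


def party_distance(v: Solution, s: Solution) -> float:
    """Assumed distance: sum over parties of the per-party Euclidean distance."""
    return sum(math.dist(vi, si) for vi, si in zip(v, s))


def distance_to_front(v: Solution, front: List[Solution]) -> float:
    """Distance from v to the closest member of the front."""
    return min(party_distance(v, s) for s in front)


def igd(true_front: List[Solution], obtained_front: List[Solution]) -> float:
    """Mean distance from each point of the true PF to the obtained PF."""
    if not true_front or not obtained_front:
        raise ValueError("both fronts must be non-empty")
    total = sum(distance_to_front(v, obtained_front) for v in true_front)
    return total / len(true_front)


if __name__ == "__main__":
    true_pf = [((0.0, 0.0), (0.0,)), ((3.0, 4.0), (1.0,))]
    found = [((0.0, 0.0), (0.0,))]
    print(igd(true_pf, found))
```

In the example, the first reference point is matched exactly and the second lies at distance 5 for the first party plus 1 for the second, so the mean is 3.0; identical fronts give 0.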

Considering the final population returned by an algorithm could contain some dominated solutions, the solution number (SN) [9], which represents the number of non-dominated solutions in the final population, is also used to evaluate the performance of the algorithms. The algorithm with a larger SN has a better performance.

4.2 Results

Tables 1, 2 and 3 show the IGD of the three algorithms, i.e., OptMPNDS2, OptMPNDS, OptAll. The problems from MPMOP1 to MPMOP11 are used in experiments, and the dimensions are set to 10, 30 and 50. There are the mean and standard deviation values for each problem in each row. And the best results of the same problem are labeled in the bold font. The sign “—” means that the algorithm does not obtain any solution in at least one run. And nbr denotes the number of problems for which the algorithm obtains the best results.

As the tables show, OptMPNDS2 performs better than OptAll and is comparable with OptMPNDS. In Table 1, OptMPNDS2 obtains the best IGD result on 4 problems. In Table 2, OptMPNDS2 performs the best on 8 of the 11 problems. In Table 3, OptMPNDS2 performs better than OptMPNDS, winning on 7 of the 11 problems.

In terms of SN, Tables 4, 5 and 6 show that OptMPNDS2 performs slightly better than OptMPNDS, and both are better than OptAll. OptMPNDS2 obtains the best SN result on 9 problems for each of the three dimensions, while OptMPNDS obtains the best SN result on 7, 7 and 6 problems, respectively. For OptAll, the nbr value for the SN metric is always 0.

In summary, OptMPNDS2 obtains more solutions, and the solutions are closer to the true PF for MPMOPs with higher dimensions.

5 Conclusion

In this paper, we propose an evolutionary algorithm called OptMPNDS2 to solve MPMOPs. A new dominance relation of individuals in MPMOPs is defined to handle the non-dominated sorting, where the Pareto optimal level number of each party is regarded as an objective value of the individual. In the experiments, OptMPNDS2 is compared with OptMPNDS as well as OptAll. Experimental results show that the overall performance of the proposed OptMPNDS2 is better. In the future, we will establish a benchmark of the MPMOPs which have no common Pareto optimal solutions, and the benchmark will be used to evaluate the proposed algorithm.

References

1. Coello, C.C.: Evolutionary multi-objective optimization: a historical view of the field. IEEE Comput. Intell. Mag. 1(1), 28–36 (2006)

2. Deb, K., Pratap, A., Agarwal, S., Meyarivan, T.: A fast and elitist multiobjective genetic algorithm: NSGA-II. IEEE Trans. Evol. Comput. 6(2), 182–197 (2002)

3. Deb, K., Sindhya, K., Okabe, T.: Self-adaptive simulated binary crossover for real-parameter optimization. In: Proceedings of the 9th Annual Conference on Genetic and Evolutionary Computation, pp. 1187–1194 (2007)

4. Fonseca, C.M., Fleming, P.J.: An overview of evolutionary algorithms in multiobjective optimization. Evol. Comput. 3(1), 1–16 (1995)

5. Geng, H., Zhang, M., Huang, L., Wang, X.: Infeasible elitists and stochastic ranking selection in constrained evolutionary multi-objective optimization. In: Wang, T.-D., et al. (eds.) SEAL 2006. LNCS, vol. 4247, pp. 336–344. Springer, Heidelberg (2006). https://doi.org/10.1007/11903697_43

6. Ishibuchi, H., Tsukamoto, N., Nojima, Y.: Evolutionary many-objective optimization: a short review. In: 2008 IEEE Congress on Evolutionary Computation (IEEE World Congress on Computational Intelligence), pp. 2419–2426. IEEE (2008)

7. Lau, R.Y.: Towards genetically optimised multi-agent multi-issue negotiations. In: Proceedings of the 38th Annual Hawaii International Conference on System Sciences, pp. 35c–35c. IEEE (2005)

8. Lau, R.Y., Tang, M., Wong, O., Milliner, S.W., Chen, Y.P.P.: An evolutionary learning approach for adaptive negotiation agents. Int. J. Intell. Syst. 21(1), 41–72 (2006)

9. Liu, W., Luo, W., Lin, X., Li, M., Yang, S.: Evolutionary approach to multiparty multiobjective optimization problems with common Pareto optimal solutions. In: Proceedings of 2020 IEEE Congress on Evolutionary Computation (CEC), pp. 1–9. IEEE (2020)

10. Miettinen, K.: Nonlinear Multiobjective Optimization, vol. 12. Springer Science & Business Media, Berlin (2012)

11. Tamaki, H., Kita, H., Kobayashi, S.: Multi-objective optimization by genetic algorithms: a review. In: Proceedings of IEEE International Conference on Evolutionary Computation, pp. 517–522. IEEE (1996)

12. Tian, Y., Cheng, R., Zhang, X., Jin, Y.: PlatEMO: a MATLAB platform for evolutionary multi-objective optimization [educational forum]. IEEE Comput. Intell. Mag. 12(4), 73–87 (2017)

13. Trivedi, A., Srinivasan, D., Sanyal, K., Ghosh, A.: A survey of multiobjective evolutionary algorithms based on decomposition. IEEE Trans. Evol. Comput. 21(3), 440–462 (2016)

14. Wang, H., Jiao, L., Yao, X.: Two_Arch2: an improved two-archive algorithm for many-objective optimization. IEEE Trans. Evol. Comput. 19(4), 524–541 (2014)

15. Zhang, C., Wang, G., Peng, Y., Tang, G., Liang, G.: A negotiation-based multi-objective, multi-party decision-making model for inter-basin water transfer scheme optimization. Water Resour. Manag. 26(14), 4029–4038 (2012)

16. Zhang, Q., Li, H.: MOEA/D: a multiobjective evolutionary algorithm based on decomposition. IEEE Trans. Evol. Comput. 11(6), 712–731 (2007)

17. Zhang, Q., Zhou, A., Zhao, S., Suganthan, P.N., Liu, W., Tiwari, S.: Multiobjective optimization test instances for the CEC 2009 special session and competition (2008)

18. Zhang, X., Tian, Y., Cheng, R., Jin, Y.: An efficient approach to nondominated sorting for evolutionary multiobjective optimization. IEEE Trans. Evol. Comput. 19(2), 201–213 (2014)

19. Zhou, A., Qu, B.Y., Li, H., Zhao, S.Z., Suganthan, P.N., Zhang, Q.: Multiobjective evolutionary algorithms: a survey of the state of the art. Swarm Evol. Comput. 1(1), 32–49 (2011)

20. Zitzler, E., Laumanns, M., Thiele, L.: SPEA2: Improving the strength Pareto evolutionary algorithm. TIK-report 103 (2001)

Copyright information

© 2021 Springer Nature Switzerland AG

About this paper

Cite this paper

She, Z., Luo, W., Chang, Y., Lin, X., Tan, Y. (2021). A New Evolutionary Approach to Multiparty Multiobjective Optimization. In: Tan, Y., Shi, Y. (eds) Advances in Swarm Intelligence. ICSI 2021. Lecture Notes in Computer Science(), vol 12690. Springer, Cham. https://doi.org/10.1007/978-3-030-78811-7_6

DOI: https://doi.org/10.1007/978-3-030-78811-7_6

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-78810-0

Online ISBN: 978-3-030-78811-7

eBook Packages: Computer Science (R0)