Abstract

Because social networks play an extensive role in social media, people can easily share news, and news spreads faster than ever before. These platforms have also been exploited to share rumors and fake information, which threatens society. One way to reduce the impact of fake information is to make people aware of the correct information, based on hard proof. In this work, we first propose a propagation model called the Competitive Independent Cascade Model with users’ Bias (CICMB), which considers the presence of strong user bias towards different opinions, beliefs, or political parties. We further propose a method, called \(k-TruthScore\), to identify an optimal set of truth campaigners from a given set of prospective truth campaigners to minimize the influence of rumor spreaders on the network. We compare \(k-TruthScore\) with state-of-the-art methods and measure their performance as the percentage of saved nodes (nodes that would have believed the fake news in the absence of the truth campaigners). We present results on three real-world networks, and they show that \(k-TruthScore\) outperforms the baseline methods.


1 Introduction

Since 1997, Online Social Networks (OSNs) have made it progressively easier for users to share information with each other, and information now reaches millions of people within seconds. Over these years, people have shared true as well as fake news and misinformation on OSNs, since no references or proofs are required when posting. In 2017, the World Economic Forum named fake news and misinformation as one of the top three threats to democracy worldwide [9]. A Google Trends analysis shows that web searches for the term “Fake News” began to gain relevance around the 2016 U.S. presidential election [1]; Fig. 1 shows the plot we generated from Google Trends data.

There are several reasons why people share fake news. The more threatening ones include changing the outcome of an event such as an election, damaging the reputation of a person or company, creating panic or chaos among people, and gaining profit by improving the public image of a product or company. Less malicious reasons include the attention users gain from sharing catchy news, or the wish to start a new conversation without any malicious intention [5].

A study of Twitter data shows that false news spreads faster, farther, and deeper than true news [17, 25], and that these effects are more pronounced for political news than for news about finance, disasters, terrorism, or science [25]. A large volume of fake information is shared by a small number of accounts, and Andrews et al. [3] show that it can be countered by propagating correct information during a crisis; the accounts propagating true information are referred to as “official” accounts.

In OSNs, users show strong bias, or polarity, towards news topics, such as a bias towards a political party [21, 26]. Lee et al. [13] observe that users who actively take part in political discussions on OSNs tend to develop more extreme political attitudes over time than people who do not use OSNs. Users also tend to share news that confirms their beliefs. In this work, we propose a propagation model for the spread of misinformation and its counter correct information in the presence of strong user bias, referred to as the Competitive Independent Cascade Model with users’ Bias (CICMB). In this model, a user’s bias towards a belief or opinion keeps getting stronger as they are exposed to more news confirming that opinion, while their bias towards the counter-opinion keeps getting weaker.

Mitigating fake news in the presence of strong user bias is very challenging. Researchers have proposed various techniques to minimize the impact of fake news on a given social network, which can be categorized as (i) influence blocking (IB) techniques [2, 18] and (ii) truth-campaigning (TC) techniques [4, 22]. IB techniques aim to identify a set of nodes that can be blocked or immunized to minimize the spread of fake information in the network. TC techniques, in contrast, aim to identify an optimal set of users who start spreading the correct information in the network, so that people become aware of the true news and share it further. Psychological studies have shown that when people receive both true and fake news, they tend to believe the true news, and this also reduces further sharing of fake information [16, 23].

Most existing methods identify truth campaigners in the network who can minimize the impact of fake information; however, they do not consider that a chosen node might not be willing to start a truth campaign if asked [4, 15, 22]. In this work, we take a more realistic approach: we are given a set of nodes that are willing to start a truth campaign, referred to as prospective truth campaigners. We propose a method to identify the k most influential truth campaigners from this set to minimize the damage of fake news. We compare the proposed method, \(k-TruthScore\), with state-of-the-art methods, and the results show that \(k-TruthScore\) is effective in minimizing the impact of fake news in the presence of strong user bias.

The paper is structured as follows. In Sect. 2 we discuss the related literature. In Sect. 3, we discuss the proposed spreading model. Section 4 includes our methodology to choose truth-campaigners. Section 5 shows the comparison of methods on real-world networks. We conclude the paper with future directions in Sect. 6.

2 Related Work

Controlling the further spread of fake news requires public attention. In a post released in April 2017 [14], Facebook outlined two main approaches for countering the spread of fake news: (i) a crowdsourcing approach that leverages the community and third-party fact-checking organizations, and (ii) a machine learning approach to detect fraud and spam accounts. A study by Halimeh et al. [10] supports the view that Facebook’s fake news countermeasures will have a positive impact on information quality. Besides Facebook, there are several dedicated fact-checking websites, including snopes.com, politifact.com, and factcheck.org.

Researchers have proposed various influence blocking and truth-campaigning techniques to mitigate fake news in different contexts. In influence blocking, identifying a set of k nodes that minimizes the spread of fake news is NP-hard [2], so a brute-force search is infeasible on large networks. Greedy or heuristic solutions are therefore preferred in real-life applications. Amoruso et al. [2] proposed a two-step heuristic method that first identifies the most probable sources of the infection and then places a few monitors in the network to block the spread of misinformation.

Pham et al. [18] studied the Targeted Misinformation Blocking (TMB) problem, where the goal is to find the smallest set of nodes whose removal reduces the misinformation influence by at least a given threshold \(\gamma \). The authors showed that TMB is \(\#P\)-hard under the linear threshold spreading model and proposed a greedy algorithm whose solution set is within a ratio of \(1+\ln (\gamma / \epsilon )\) of the optimal set and whose expected influence reduction is greater than \((\gamma - \epsilon )\), given that the influence reduction function is submodular and monotone. Yang et al. [27] studied two versions of the influence minimization problem, called Loss Minimization with Disruption (LMD) and Diffusion Minimization with Guaranteed Target (DMGT), using Integer Linear Programming (ILP). The authors proposed heuristic solutions for the LMD problem, in which the k nodes having the minimum degree or PageRank are chosen. They further proposed a greedy solution for the DMGT problem that, at each iteration, chooses the node providing the maximal marginal gain.

In contrast to IB, truth-campaigning techniques combat fake news by making users aware of the true information. Budak et al. [4] showed that selecting a minimal group of users to disseminate “good” information in the network, so as to minimize the influence of the “bad” information, is NP-hard. They provided approximation guarantees for greedy solutions to different variants of this problem by proving the corresponding objective functions submodular. Nguyen et al. [15] studied a problem called \(\beta ^I_T\), where the target is to select the smallest set S of influential nodes that start spreading the good information, so that the expected decontamination ratio in the whole network is \(\beta \) after t time steps, given that the misinformation was started from a given set of nodes I. They proposed a greedy solution called Greedy Viral Stopper (GVS) that iteratively selects the node which, if it starts spreading the true information, maximizes the total number of decontaminated nodes.

Farajtabar et al. [8] proposed a point-process-based mitigation technique using a reinforcement learning framework. The proposed method was implemented in real time on Twitter to mitigate a synthetically started fake news campaign. Song et al. [22] proposed a method to identify truth campaigners in temporal influence propagation, where the rumor has no impact after its deadline; the method is explained in Sect. 5.2. In [20], the authors considered users’ bias, though the bias remains constant over time. In our work, we consider a more realistic spreading model in which users’ biases get stronger or weaker based on the content they are exposed to and share further. We then propose the \(k-TruthScore\) method to choose the top-k truth campaigners for minimizing the negative impact of fake news in the network.

3 The Proposed Propagation Model: CICMB

The Independent Cascade Model (ICM) [11] is widely used to model information propagation in social networks. In the ICM, each directed edge has an influence probability with which the source node influences the target node. The propagation starts from a source node or a group of source nodes. At each iteration, every newly influenced node tries to influence each of its neighbors with the given influence probability; it gets only this one attempt and does not try to influence its neighbors again in later iterations. The propagation stops once an iteration produces no newly influenced node. The total number of influenced nodes measures the influencing, or spreading, power of the seed nodes.
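The cascade process described above can be sketched in a few lines of Python. This is a minimal illustration of the standard ICM, not code from this work; the function name `independent_cascade` and the edge-dictionary input format are our own assumptions.

```python
import random


def independent_cascade(edges, seeds, rng=None):
    """One run of the Independent Cascade Model.

    edges: dict (u, v) -> influence probability P(u, v) of the directed edge u -> v.
    seeds: initially influenced (source) nodes. Returns the set of influenced nodes.
    """
    rng = rng if rng is not None else random.Random()
    out = {}
    for (u, v), p in edges.items():
        out.setdefault(u, []).append((v, p))
    influenced = set(seeds)
    frontier = list(influenced)              # nodes influenced in the previous iteration
    while frontier:                          # stop when an iteration influences no one new
        newly = []
        for u in frontier:
            for v, p in out.get(u, []):      # one attempt per (newly influenced u, neighbor v)
                if v not in influenced and rng.random() < p:
                    influenced.add(v)
                    newly.append(v)
        frontier = newly
    return influenced
```

Averaging `len(independent_cascade(edges, seeds))` over many runs gives a Monte Carlo estimate of the expected spread of the seed set.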

Kim and Bock [12] observed that people’s beliefs shape their positive or negative emotions about a topic, which in turn affect their attitude and behavior towards spreading misinformation. We believe that, in real life, people are biased towards different opinions, and once they believe one piece of information, they are less willing to switch their opinion.

Competitive Independent Cascade Model with users’ Bias (CICMB). In our work, we propose the Competitive Independent Cascade Model with users’ Bias (CICMB), which incorporates the previous observation into the ICM for the case where misinformation and its competing true information propagate in the network. In this model, each user u has a bias towards the misinformation at timestamp i, denoted \(B_m(u)[i]\), and a bias towards its counter true information, \(B_t(u)[i]\). The influence probability of an edge (u, v) is denoted P(u, v). Before the propagation starts, every node is in the neutral state N. If a user comes to believe the misinformation or the true information, it changes its state to M or T, respectively.

Once the propagation starts, all misinformation starters change their state to M and all truth campaigners to T; being stubborn users, they never change their state during the entire propagation. At each iteration, truth and misinformation campaigners influence their neighbors as follows. A misinformation spreader u changes the state of its neighbor v at timestamp i with probability \(Prob= P(u,v) \cdot B_m(v)[i]\). If node v changes its state from N to M, its bias values are updated as \((B_m(v)[i+1], B_t(v)[i+1])=f(B_m(v)[i], B_t(v)[i])\). Similarly, a truth campaigner u influences its neighbor v with probability \(P(u,v) \cdot B_t(v)[i]\), and the bias values are updated as \((B_m(v)[i+1], B_t(v)[i+1])=f(B_m(v)[i], B_t(v)[i])\), where the update function f depends on the state that v has just adopted.

In our implementation, when a node u comes to believe one piece of information, its bias towards accepting the other is halved, using the following functions:

(i). When u changes its state to M, \(B_m(u)[i+1]=B_m(u)[i]\) & \(B_t(u)[i+1]={B_t(u)[i]}/2\).

(ii). When u changes its state to T, \(B_m(u)[i+1]={B_m(u)[i]}/2\) & \(B_t(u)[i+1]=B_t(u)[i]\).

Figure 2 illustrates the model for this linear function. As a second case, we also present results for a stronger bias function, a quadratic one, under which the bias is reduced faster.
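A minimal Monte Carlo sketch of one CICMB run follows. It assumes several details that the description leaves open: nodes that adopted a state in the previous iteration are the ones that spread it (as in the ICM), a non-stubborn node in state N or in the opposing state can be converted, each node changes state at most once per iteration, and spreaders are processed in random order. The names `cicmb` and `shrink` are hypothetical; `shrink` defaults to the halving rule above, and passing `shrink=lambda b: b * b` gives a quadratic variant.

```python
import random


def cicmb(edges, b_m, b_t, rumor_starters, truth_campaigners, alpha,
          shrink=lambda b: b / 2, rng=None):
    """One simulated CICMB run up to deadline alpha.

    edges: dict (u, v) -> P(u, v); b_m, b_t: dicts node -> initial bias.
    Returns dict node -> "M" or "T"; nodes missing from it are still "N".
    """
    rng = rng if rng is not None else random.Random()
    b_m, b_t = dict(b_m), dict(b_t)             # copies: biases evolve during the run
    out = {}
    for (u, v), p in edges.items():
        out.setdefault(u, []).append((v, p))
    stubborn = set(rumor_starters) | set(truth_campaigners)
    state = {u: "M" for u in rumor_starters}
    state.update({u: "T" for u in truth_campaigners})
    frontier = list(state)                      # nodes that adopted a state last iteration
    for _ in range(alpha):
        rng.shuffle(frontier)                   # assumption: random processing order
        changed = {}
        for u in frontier:
            s = state[u]
            for v, p in out.get(u, []):
                if v in stubborn or v in changed or state.get(v, "N") == s:
                    continue                    # stubborn, already changed, or already in s
                bias = b_m[v] if s == "M" else b_t[v]
                if rng.random() < p * bias:     # Prob = P(u, v) * B_s(v)[i]
                    changed[v] = s
        for v, s in changed.items():
            state[v] = s
            if s == "M":
                b_t[v] = shrink(b_t[v])         # believing M weakens the bias towards T
            else:
                b_m[v] = shrink(b_m[v])         # believing T weakens the bias towards M
        frontier = list(changed)
        if not frontier:
            break
    return state
```

Repeating the run many times and counting the nodes in each state at the deadline gives the empirical quantities used in the evaluation.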

4 Methodology

We first introduce the problem formulation and then follow with our proposed solution.

4.1 Problem Formulation

In OSNs, when true information or misinformation is propagated, users change their state among the elements of the set \(\{N, M, T\}\). The state of a user u at timestamp i is denoted \(\varvec{\pi }_u[i]\), with the following possible assignments: (i) \(\varvec{\pi }_u[i]=T\) if the user believes the true information, (ii) \(\varvec{\pi }_u[i]=M\) if the user believes the misinformation, and (iii) \(\varvec{\pi }_u[i]=N\) if the user is in the neutral state.

Given a set of rumor starters R who spread misinformation, a deadline \(\alpha \) for the misinformation spread, and a set of prospective truth campaigners P, we aim to identify a set D of k truth campaigners chosen from P (\(D \subset P\) and \(|D|=k\)) to start a truth campaign such that the impact of misinformation is minimized.

A node u is considered saved at the deadline \(\alpha \) if \(\varvec{\pi }_{u}[\alpha ]= M\) when only the misinformation is propagated in the network, but \(\varvec{\pi }_{u}[\alpha ] = T\) when both the misinformation and its counter true information are propagated; that is, u believes the true information at the deadline and would have believed the misinformation in the absence of the truth campaign.

Problem Definition: Let \(G=(V, E)\) be a graph, \(\alpha \) a rumor deadline, R a set of rumor starters, and P a set of prospective truth campaigners. Let S be the set of nodes whose state is M at time \(\alpha \) when only the nodes in R propagate misinformation using CICMB, and let I be the set of nodes whose state is T at time \(\alpha \) when the sets R and D propagate misinformation and true information, respectively, using CICMB. Our aim is to find a set \(D \subset P\) of given size k such that the number of saved nodes is maximized:

$$\begin{aligned} \max _{D \subset P,\; |D|=k} |S \cap I| \end{aligned}$$
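The definition of a saved node can be checked directly from two final state maps, one from a run without truth campaigners and one from a run with them. The sketch below assumes states are stored as a Python dict from node to "N", "M" or "T"; the function name `saved_nodes` is hypothetical.

```python
def saved_nodes(states_without_tc, states_with_tc):
    """Return the saved nodes at the deadline.

    S: nodes in state M when only the rumor starters spread misinformation.
    I: nodes in state T when the truth campaigners also spread true information.
    Both arguments map node -> "N", "M" or "T"; missing nodes count as "N".
    """
    S = {u for u, s in states_without_tc.items() if s == "M"}
    I = {u for u, s in states_with_tc.items() if s == "T"}
    return S & I      # believed M without the campaign and T with it
```

For example, `saved_nodes({"a": "M", "b": "M"}, {"a": "M", "b": "T", "c": "T"})` returns `{"b"}`: node c believes the truth but was never misinformed, so it does not count as saved.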

4.2 The Proposed Solution

In this section, we introduce our proposed algorithm, \(k-TruthScore\), give the intuition behind it, and summarize it at the end of the section. Given a set of misinformation starters R and prospective truth campaigners P, our goal is to estimate, through a score called TruthScore that we introduce below, which truth campaigner will save the maximum number of nodes by the deadline \(\alpha \). We then choose the top-k nodes with the highest TruthScore as truth campaigners (D) to minimize the impact of misinformation.

To compute TruthScore, we assign to each node u two arrays, mval and tval, each of length (\(\alpha \) + 1), where \(mval_u[i]\) and \(tval_u[i]\) denote the estimated probability that node u changes its state to M and T, respectively, at time i. To estimate these probabilities, we first create the Directed Acyclic Graph (DAG) \(G'(V, E')\) of the given network G by removing its cycles. If cycles remained, the nodes on a cycle would keep updating each other's probabilities in an infinite loop.
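The text does not specify how \(G'\) is built. One standard way, shown here as an assumption rather than the authors' procedure, is to run a depth-first search and drop every back edge, i.e., every edge pointing to a node that is still on the DFS stack; the remaining tree, forward and cross edges cannot form a cycle. The function name `to_dag` is hypothetical.

```python
def to_dag(nodes, edges):
    """Return the edges of G that survive after removing all DFS back edges.

    nodes: iterable of node ids; edges: iterable of directed (u, v) pairs.
    """
    WHITE, GRAY, BLACK = 0, 1, 2              # unvisited / on the DFS stack / finished
    done = object()                           # sentinel for an exhausted neighbor iterator
    out, color = {}, {u: WHITE for u in nodes}
    for u, v in edges:
        out.setdefault(u, []).append(v)
        color.setdefault(u, WHITE)
        color.setdefault(v, WHITE)
    kept = []
    for root in list(color):
        if color[root] != WHITE:
            continue
        color[root] = GRAY
        stack = [(root, iter(out.get(root, [])))]
        while stack:                          # iterative DFS avoids Python's recursion limit
            u, neighbors = stack[-1]
            v = next(neighbors, done)
            if v is done:
                color[u] = BLACK
                stack.pop()
            elif color[v] == GRAY:
                continue                      # back edge (u, v) closes a cycle: drop it
            else:
                kept.append((u, v))           # tree, forward or cross edge: keep it
                if color[v] == WHITE:
                    color[v] = GRAY
                    stack.append((v, iter(out.get(v, []))))
    return kept
```

Which edges are dropped depends on the DFS visiting order, so different orders can yield different DAGs of the same network.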

We now compute the probability of an arbitrary node u changing its state to M at some iteration i, namely \(mval_u[i]\). For this to happen, we compute two probabilities:

1. the probability that node u is not in state M at time \(i-1\), computed as \((1-\sum _{j=1}^{i-1}mval_u[j])\); and

2. the probability that node u receives the misinformation at the \(i^{th}\) step, which considers all parents v of node u that have updated their mval at timestamp \(i-1\), \( V_1=\{v | (v,u) \in E' \; \& \; mval_v[i-1] > 0 \}\).

We then compute \(mval_u[i]\) by multiplying these two probabilities for each parent \(v \in V_1\) and accumulating the result over all such parents, as shown in Eq. 1:

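$$\begin{aligned} mval_u[i] \leftarrow mval_u[i]+(1-mval_u[i])\cdot \Big(1-\sum _{j=1}^{i-1}mval_u[j]\Big) \cdot P(v,u)\cdot B_m(u) \cdot mval_v[i-1] \quad \text {for each } v \in V_1 \end{aligned}$$(1)

where \(mval_u[i]\) is initialized to 0 before the first parent is processed.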

We use this formula to compute \(mval_u[i]\) for all nodes from \(i=1\) to \(\alpha \). All nodes whose mval has been updated are added to set A.
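The recurrence of Eq. 1 can be sketched as follows; running the same recurrence with \(B_t\) in place of \(B_m\) and a single seed w gives the tval arrays described next. The dictionary formats, the function name `arrival_probabilities`, and keeping the seeds' values fixed are our assumptions. The optional `theta` implements the pruning threshold \(\theta \) described later in this section. We also clamp \(1-\sum _j val_u[j]\) at 0 as a safeguard that is not part of Eq. 1: with several parents, the accumulated values of a node can sum to more than 1. Because each update multiplies \(1-val_u[i]\) by a factor \((1-c_v)\), the order in which parents are processed does not matter.

```python
def arrival_probabilities(dag_edges, seeds, bias, alpha, theta=0.0):
    """Estimate val[u][i], the probability that u changes state at step i (Eq. 1).

    dag_edges: dict (v, u) -> P(v, u) on the acyclic graph G'.
    seeds: nodes with val[s][0] = 1 (e.g. the rumor starters R).
    bias: dict u -> B(u) of the receiving node (B_m for mval, B_t for tval).
    Returns (val, updated): val maps node -> list of length alpha + 1, and
    updated is the set of non-seed nodes whose value was updated (set A).
    """
    children = {}
    for (v, u), p in dag_edges.items():
        children.setdefault(v, []).append((u, p))
    seed_set = set(seeds)
    val = {s: [1.0] + [0.0] * alpha for s in seed_set}
    updated = set()
    active = set(seed_set)                   # parents whose value at step i-1 exceeds theta
    for i in range(1, alpha + 1):
        next_active = set()
        for v in active:
            for u, p in children.get(v, []):
                if u in seed_set:
                    continue                 # seeds are stubborn: their values stay fixed
                row = val.setdefault(u, [0.0] * (alpha + 1))
                # 1 - sum_{j=1}^{i-1} val_u[j], clamped at 0 (our safeguard, not in Eq. 1)
                not_yet = max(0.0, 1.0 - sum(row[1:i]))
                row[i] += (1.0 - row[i]) * not_yet * p * bias[u] * val[v][i - 1]
                if row[i] > 0.0:
                    updated.add(u)
                if row[i] > theta:
                    next_active.add(u)
        active = next_active
    return val, updated
```

On the chain s → a → b with both edge probabilities 0.5 and all biases 1, this gives \(mval_a[1]=0.5\) and \(mval_b[2]=0.25\).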

Next, we compute the TruthScore of each prospective truth campaigner w. We estimate the probability that a node u will believe the true information at the \(i^{th}\) timestamp when the true information is propagated from node w. To do so, we set \(tval_w[0]=1\) and compute \(tval_u[i]\) for each node \(u \in (V-R)\) from \(i=1\) to \(\alpha \).

The probability that node u changes its state to T at timestamp i is the probability that u has not changed its state to T at any previous timestamp, multiplied by the probability of receiving the true information at the \(i^{th}\) timestamp. It is computed using the same approach as in Eq. 1.

The estimated probability that node u changes its state to T at timestamp i is computed as follows. Consider all parents v of node u that have updated \(tval_v[i-1]\) at timestamp \(i-1\), \( V_2=\{v | (v,u) \in E' \; \& \; tval_v[i-1] > 0 \}\).

The tval array is computed from \(i=1\) to \(\alpha \). All nodes whose tval has been updated are added to set B. The TruthScore of truth campaigner w is computed as:

To speed up the computation, a node v updates the mval of its child node u at timestamp i only if \(mval_v[i-1] > \theta \), where \(\theta \) is a small threshold value. The same threshold is used when computing the tval arrays of the nodes.

We now summarize the method described above, which we call \(k-TruthScore\):

1. Create \(G'(V, E')\), the DAG of the given network G.

2. For all nodes u in the set R of rumor starters, set \(mval_u[0]\) = 1. Compute mval for the nodes reachable from R by the given deadline \(\alpha \) using Eq. 1, and add these nodes to set A.

3. For each prospective truth campaigner w in P, set \(tval_w[0]=1\), compute tval for the nodes reachable from w by the deadline \(\alpha \), add these nodes to set B, and compute the TruthScore of w.

4. Choose the top-k truth campaigners with the highest TruthScore.
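Steps 3 and 4 reduce to scoring every prospective truth campaigner and keeping the k best. Because the TruthScore formula itself is not reproduced in this section, the sketch below takes it as a caller-supplied function; `select_top_k` and `truth_score` are hypothetical names, and breaking ties by node id is our own choice to make the result deterministic.

```python
import heapq


def select_top_k(prospects, truth_score, k):
    """Steps 3-4: score every prospective truth campaigner, then keep the k best.

    truth_score: hypothetical callable w -> TruthScore(w), assumed to be computed
    from the mval/tval arrays as described in this section.
    """
    scores = {w: truth_score(w) for w in prospects}             # step 3
    # step 4: highest score first; ties broken by the larger node id (our choice)
    return heapq.nlargest(k, scores, key=lambda w: (scores[w], w))
```

For instance, with scores `{"x": 0.2, "y": 0.9, "z": 0.5}` and k = 2, the chosen set D is `["y", "z"]`.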

5 Performance Study

We carry out experiments to evaluate how well \(k-TruthScore\) identifies the top-k truth campaigners.

5.1 Datasets

We perform the experiments on three real-world directed social networks, Digg, Facebook, and Twitter, as presented in Table 1. For each of them, the diameter is computed by taking the undirected version of the network.

We assign the influence probability of each edge (v, u) uniformly at random (u.a.r.) from the interval (0, 1]. Each node in the network has two bias values, one for the misinformation and one for the true information. For misinformation starters, the bias for misinformation is assigned a random real value from [0.7, 1], as the nodes spreading misinformation are highly biased towards it, and their bias for true information is set to \(B_t[0] = 1-B_m[0]\).

Similarly, the nodes chosen as prospective truth campaigners have a high bias towards true information, drawn u.a.r. from the interval [0.7, 1], and their bias for misinformation is set to \(B_m[0] = 1-B_t[0]\). For the remaining nodes, the biases for the misinformation and for its counter true information are drawn u.a.r. from the interval (0, 1]. The size of the prospective truth-campaigner set is fixed at \(|P|=50\), and P is chosen u.a.r. from \((V-R)\). We fix \(\theta =0.000001\) for all experiments.
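The parameter assignment described above can be sketched as follows. The function name `assign_parameters`, the seeded random generator, and the use of `1 - random()` to sample from (0, 1] are our assumptions; node ids are assumed to be sortable so that the choice of P is reproducible for a given seed.

```python
import random


def assign_parameters(nodes, edges, rumor_starters, num_prospects=50, seed=None):
    """Draw P(u, v), the initial biases B_m[0] and B_t[0], and the prospect set P.

    Returns (prob, b_m, b_t, prospects). Node ids are assumed sortable.
    """
    rng = random.Random(seed)
    nodes = list(nodes)
    rumor = set(rumor_starters)
    prob = {e: 1.0 - rng.random() for e in edges}       # u.a.r. from (0, 1]
    candidates = sorted(set(nodes) - rumor)               # P is drawn from V - R
    prospects = set(rng.sample(candidates, num_prospects))
    b_m, b_t = {}, {}
    for u in nodes:
        if u in rumor:                                    # rumor starters: strongly biased to M
            b_m[u] = rng.uniform(0.7, 1.0)
            b_t[u] = 1.0 - b_m[u]
        elif u in prospects:                              # prospects: strongly biased to T
            b_t[u] = rng.uniform(0.7, 1.0)
            b_m[u] = 1.0 - b_t[u]
        else:                                             # all other nodes: both u.a.r. from (0, 1]
            b_m[u] = 1.0 - rng.random()
            b_t[u] = 1.0 - rng.random()
    return prob, b_m, b_t, prospects
```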

5.2 Baseline Methods

We compare our method with the following two state-of-the-art methods.

1. Temporal Influence Blocking (TIB) [22]. The TIB method runs in two phases. In the first phase, it identifies the set of nodes that the misinformation spreaders can reach by the given deadline, and then identifies the potential nodes that can influence these nodes. In the second phase, it generates Weighted Reverse Reachable (WRR) trees to compute the influential power of the identified potential mitigators by estimating the number of nodes reachable from each of them. In our experiments, we select as truth campaigners the top-k prospective truth campaigners with the highest influential power.

2. Targeted Misinformation Blocking (TMB) [19]. TMB measures the influential power of a given node as the number of nodes saved if that node is immunized in the network. That is, the influence reduction of a node v is computed as \(h(v) = N(G) - N(G \setminus v)\), where N(G) and \(N(G \setminus v)\) denote the number of nodes influenced by the misinformation starters in G and in \((G \setminus v)\), respectively. We then select the top-k nodes with the highest influence reduction as truth campaigners.
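A minimal sketch of the TMB score \(h(v)\) follows. It estimates \(N(\cdot )\) by Monte Carlo live-edge sampling of the plain ICM: an edge (u, v) is kept with probability P(u, v), and a node counts as influenced if a kept path of at most \(\alpha \) hops reaches it from a seed. The diffusion model, the sample count, and all names are our assumptions, not the TMB authors' implementation. Reusing each sample for G and for every \(G \setminus v\) is meant to reduce the variance of the estimated differences.

```python
import random
from collections import deque


def reached(out, seeds, alpha, live, removed=None):
    """Count nodes reachable from seeds within alpha hops over live edges, avoiding `removed`."""
    seen = set(seeds) - {removed}
    queue = deque((s, 0) for s in seen)
    while queue:
        u, depth = queue.popleft()
        if depth >= alpha:
            continue                          # deadline reached: no further hops from u
        for v in out.get(u, []):
            if v != removed and v not in seen and (u, v) in live:
                seen.add(v)
                queue.append((v, depth + 1))
    return len(seen)


def influence_reduction(edges, seeds, candidates, alpha, samples=200, seed=0):
    """Monte Carlo estimate of h(v) = N(G) - N(G without v) for each candidate v.

    edges: dict (u, v) -> P(u, v); each sample keeps edge (u, v) with probability P(u, v).
    """
    rng = random.Random(seed)
    out = {}
    for u, v in edges:
        out.setdefault(u, []).append(v)
    h = {v: 0.0 for v in candidates}
    for _ in range(samples):
        live = {e for e, p in edges.items() if rng.random() < p}
        base = reached(out, seeds, alpha, live)          # N(G) in this sample
        for v in candidates:
            h[v] += base - reached(out, seeds, alpha, live, removed=v)
    return {v: total / samples for v, total in h.items()}
```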

After selecting top-k truth campaigners using TIB and TMB methods, the CICMB model is used to propagate misinformation and counter true information.

If set R starts propagating misinformation, then S is the set of nodes whose state is M at \(t = \alpha \). If set R propagates misinformation, and set D propagates true information, then let I be the set of nodes whose state is T at \(t = \alpha \). The performance of various methods is evaluated by computing the percentage of nodes saved, i.e., \(\frac{|S|-|I|}{|S|}\cdot 100\).

We compute the results for five different sets of misinformation starters and truth campaigners. For each set, the experiment is repeated 100 times, and we report the average percentage of saved nodes.

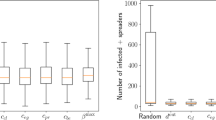

First, we study the performance of \(k-TruthScore\) as a function of the number of chosen truth campaigners k, varying k from 2 to 10. Unless specified otherwise, we set the misinformation deadline to the network diameter. Figure 3 shows that \(k-TruthScore\) outperforms the state-of-the-art methods in finding the top-k truth campaigners. TIB and TMB are designed to choose truth campaigners from the whole network; we restrict them to choose from the given set of prospective truth campaigners, and under this restriction \(k-TruthScore\) significantly outperforms both.

Next, we study the impact of the number of rumor starters. We fix \(k = 5\) and vary \(|R|\) from 5 to 25. Figure 4 shows that the percentage of saved nodes decreases as the number of rumor starters increases, while \(k-TruthScore\) still outperforms TIB and TMB.

We also study the impact of the deadline on the percentage of saved nodes when \(|R|=10\) and \(k=5\). The results are shown for the Digg network with \(\alpha \) varying from 4 to 20. The percentage of saved nodes peaks around \(\alpha = diameter(G)\), and \(k-TruthScore\) outperforms the baselines at every deadline considered (Fig. 5).

The results discussed so far use the linear bias function presented in Sect. 3, which halves the bias. We now study the effectiveness of \(k-TruthScore\) for a quadratic bias function, using the following updates:

When u changes its state to M, \(B_m(u)[i+1]=B_m(u)[i]\) & \(B_t(u)[i+1]=({B_t(u)}[i])^2\).

When u changes its state to T, \(B_m(u)[i+1]=({B_m(u)}[i])^2\) & \(B_t(u)[i+1]=B_t(u)[i]\).
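The two bias update rules can be written side by side as below; the function names are hypothetical. A single squaring step shrinks a bias b below its halved value only when b < 0.5; under repeated updates, however, \(b^{2^n}\) eventually falls below \(b/2^n\) for any b < 1.

```python
def linear_update(b_m, b_t, new_state):
    """Linear rule: the bias towards the information not adopted is halved."""
    return (b_m, b_t / 2) if new_state == "M" else (b_m / 2, b_t)


def quadratic_update(b_m, b_t, new_state):
    """Quadratic rule: the bias towards the information not adopted is squared."""
    return (b_m, b_t ** 2) if new_state == "M" else (b_m ** 2, b_t)
```

For a node with \(B_m=0.9\) and \(B_t=0.4\) that adopts M, the linear rule gives \(B_t=0.2\) and the quadratic rule gives \(B_t=0.16\).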

The results show that \(k-TruthScore\) also saves the most nodes under the quadratic bias function. However, the percentage of saved nodes is smaller than that observed in Fig. 6. This is because the square function reduces the biases faster, making users more reluctant to change their state once they have believed one piece of information.

6 Conclusion

The current research addresses the problem of minimizing the impact of misinformation by propagating its counter true information in OSNs. In particular, given a set of rumor starters, we identify the top-k truth campaigners from a given set of prospective truth campaigners. We first propose a propagation model, the Competitive Independent Cascade Model with users’ Bias, that considers the presence of strong user bias towards different opinions, beliefs, or political parties. In our experiments, we used two different functions to capture the bias dynamics towards true information and misinformation: one linear and one quadratic.

Next, we introduce an algorithm, \(k-TruthScore\), to identify the top-k truth campaigners, and compare it against two state-of-the-art algorithms, namely Temporal Influence Blocking and Targeted Misinformation Blocking. To compare the algorithms, we compute the percentage of saved nodes in each network G by the deadline \(\alpha \). A node is considered saved if, at the deadline \(\alpha \), it believes the true information and would have believed the misinformation in the absence of truth campaigners.

We compare the three algorithms under each of the two bias functions on three networks: Digg, Facebook, and Twitter. We also compare them while varying the number of originating rumor spreaders and the deadline at which we compute the TruthScore. Our results show that \(k-TruthScore\) outperforms the state-of-the-art methods in every case.

In the future, we would like to analyze the CICMB model in depth for other bias functions, such as constant increase/decrease (where the bias values are increased or decreased by a constant value), other linear functions (for example, where one bias value of a user increases while the other decreases), and different quadratic functions. The proposed \(k-TruthScore\) method performs best under both functions considered here; however, one could also design a method specific to a given bias function.

References

1. Google Trends: fake news. Accessed 11 September 2020

2. Amoruso, M., Anello, D., Auletta, V., Ferraioli, D.: Contrasting the spread of misinformation in online social networks. In: Proceedings of the 16th Conference on Autonomous Agents and MultiAgent Systems, pp. 1323–1331. International Foundation for Autonomous Agents and Multiagent Systems (2017)

3. Andrews, C., Fichet, E., Ding, Y., Spiro, E.S., Starbird, K.: Keeping up with the tweet-dashians: the impact of ‘official’ accounts on online rumoring. In: Proceedings of the 19th ACM Conference on Computer-Supported Cooperative Work and Social Computing, pp. 452–465. ACM (2016)

4. Budak, C., Agrawal, D., El Abbadi, A.: Limiting the spread of misinformation in social networks. In: Proceedings of the 20th International Conference on World Wide Web, pp. 665–674. ACM (2011)

5. Chen, X., Sin, S.C.J., Theng, Y.L., Lee, C.S.: Why do social media users share misinformation? In: Proceedings of the 15th ACM/IEEE-CS Joint Conference on Digital Libraries, pp. 111–114. ACM (2015)

6. De Choudhury, M., Lin, Y.R., Sundaram, H., Candan, K.S., Xie, L., Kelliher, A.: How does the data sampling strategy impact the discovery of information diffusion in social media? In: Fourth International AAAI Conference on Weblogs and Social Media (2010)

7. De Choudhury, M., Sundaram, H., John, A., Seligmann, D.D.: Social synchrony: predicting mimicry of user actions in online social media. In: 2009 International Conference on Computational Science and Engineering, vol. 4, pp. 151–158. IEEE (2009)

8. Farajtabar, M., et al.: Fake news mitigation via point process based intervention. https://arxiv.org/abs/1703.07823 (2017)

9. World Economic Forum: The Global Risks Report 2017

10. Halimeh, A.A., Pourghomi, P., Safieddine, F.: The impact of Facebook’s news fact-checking on information quality (IQ) shared on social media (2017)

11. Kempe, D., Kleinberg, J., Tardos, É.: Influential nodes in a diffusion model for social networks. In: Caires, L., Italiano, G.F., Monteiro, L., Palamidessi, C., Yung, M. (eds.) ICALP 2005. LNCS, vol. 3580, pp. 1127–1138. Springer, Heidelberg (2005). https://doi.org/10.1007/11523468_91

12. Kim, J.H., Bock, G.W.: A study on the factors affecting the behavior of spreading online rumors: focusing on the rumor recipient’s emotions. In: PACIS, p. 98 (2011)

13. Lee, C., Shin, J., Hong, A.: Does social media use really make people politically polarized? Direct and indirect effects of social media use on political polarization in South Korea. Telemat. Inform. 35(1), 245–254 (2018)

14. Mosseri, A.: Working to stop misinformation and false news (2017). https://newsroom.fb.com/news/2017/04/working-to-stop-misinformation-and-false-news/

15. Nguyen, N.P., Yan, G., Thai, M.T., Eidenbenz, S.: Containment of misinformation spread in online social networks. In: Proceedings of the 4th Annual ACM Web Science Conference, pp. 213–222. ACM (2012)

16. Ozturk, P., Li, H., Sakamoto, Y.: Combating rumor spread on social media: the effectiveness of refutation and warning. In: 2015 48th Hawaii International Conference on System Sciences (HICSS), pp. 2406–2414. IEEE (2015)

17. Park, J., Cha, M., Kim, H., Jeong, J.: Managing bad news in social media: a case study on Domino’s Pizza crisis. In: ICWSM, vol. 12, pp. 282–289 (2012)

18. Pham, C.V., Phu, Q.V., Hoang, H.X.: Targeted misinformation blocking on online social networks. In: Nguyen, N.T., Hoang, D.H., Hong, T.-P., Pham, H., Trawiński, B. (eds.) ACIIDS 2018. LNCS (LNAI), vol. 10751, pp. 107–116. Springer, Cham (2018). https://doi.org/10.1007/978-3-319-75417-8_10

19. Pham, C.V., Phu, Q.V., Hoang, H.X., Pei, J., Thai, M.T.: Minimum budget for misinformation blocking in online social networks. J. Comb. Optim. 38(4), 1101–1127 (2019). https://doi.org/10.1007/s10878-019-00439-5

20. Saxena, A., Hsu, W., Lee, M.L., Leong Chieu, H., Ng, L., Teow, L.N.: Mitigating misinformation in online social network with top-k debunkers and evolving user opinions. In: Companion Proceedings of the Web Conference, pp. 363–370 (2020)

21. Soares, F.B., Recuero, R., Zago, G.: Influencers in polarized political networks on Twitter. In: Proceedings of the 9th International Conference on Social Media and Society, pp. 168–177 (2018)

22. Song, C., Hsu, W., Lee, M.: Temporal influence blocking: minimizing the effect of misinformation in social networks. In: 33rd IEEE International Conference on Data Engineering, ICDE 2017, San Diego, CA, USA, 19–22 April 2017, pp. 847–858 (2017). https://doi.org/10.1109/ICDE.2017.134

23. Tanaka, Y., Sakamoto, Y., Matsuka, T.: Toward a social-technological system that inactivates false rumors through the critical thinking of crowds. In: 2013 46th Hawaii International Conference on System Sciences (HICSS), pp. 649–658. IEEE (2013)

24. Viswanath, B., Mislove, A., Cha, M., Gummadi, K.P.: On the evolution of user interaction in Facebook. In: Proceedings of the 2nd ACM Workshop on Online Social Networks, pp. 37–42. ACM (2009)

25. Vosoughi, S., Roy, D., Aral, S.: The spread of true and false news online. Science 359(6380), 1146–1151 (2018)

26. Wang, Yu., Feng, Y., Hong, Z., Berger, R., Luo, J.: How polarized have we become? A multimodal classification of Trump followers and Clinton followers. In: Ciampaglia, G.L., Mashhadi, A., Yasseri, T. (eds.) SocInfo 2017. LNCS, vol. 10539, pp. 440–456. Springer, Cham (2017). https://doi.org/10.1007/978-3-319-67217-5_27

27. Yang, L., Li, Z., Giua, A.: Influence minimization in linear threshold networks. Automatica 100, 10–16 (2019)


© 2020 Springer Nature Switzerland AG

About this paper

Cite this paper

Saxena, A., Saxena, H., Gera, R. (2020). k-TruthScore: Fake News Mitigation in the Presence of Strong User Bias. In: Chellappan, S., Choo, KK.R., Phan, N. (eds) Computational Data and Social Networks. CSoNet 2020. Lecture Notes in Computer Science(), vol 12575. Springer, Cham. https://doi.org/10.1007/978-3-030-66046-8_10


DOI: https://doi.org/10.1007/978-3-030-66046-8_10


Publisher Name: Springer, Cham

Print ISBN: 978-3-030-66045-1

Online ISBN: 978-3-030-66046-8
