Abstract

Automatically labeling intracranial arteries (ICA) with their anatomical names is beneficial for feature extraction and detailed analysis of intracranial vascular structures. There are significant variations in the ICA due to natural and pathological causes, making it challenging for automated labeling. However, the existing public dataset for evaluation of anatomical labeling is limited. We construct a comprehensive dataset with 729 Magnetic Resonance Angiography scans and propose a Graph Neural Network (GNN) method to label arteries by classifying types of nodes and edges in an attributed relational graph. In addition, a hierarchical refinement framework is developed for further improving the GNN outputs to incorporate structural and relational knowledge about the ICA. Our method achieved a node labeling accuracy of 97.5%, and 63.8% of scans were correctly labeled for all Circle of Willis nodes, on a testing set of 105 scans with both healthy and diseased subjects. This is a significant improvement over available state-of-the-art methods. Automatic artery labeling is promising to minimize manual effort in characterizing the complicated ICA networks and provides valuable information for the identification of geometric risk factors of vascular disease. Our code and dataset are available at https://github.com/clatfd/GNN-ART-LABEL.

Access provided by Autonomous University of Puebla. Download conference paper PDF

Similar content being viewed by others

Keywords

1 Introduction

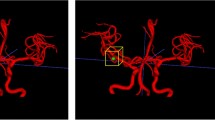

Intracranial arteries (ICA) have complex structures and are critical for maintaining adequate blood supply to the brain. There are substantial variations in these arteries among individuals that are associated with vascular disease and cognitive functions [1,2,3]. Comprehensive characterization of ICA including labeling each artery segment with its anatomical name (Fig. 1 (b)) is desirable for both clinical evaluation and research. The center of the ICA is the Circle of Willis (CoW, normally incorporating nine artery segments forming a ring shape), which connects the left and right hemispheres, as well as anterior and posterior circulations. It has been reported that only 52% of the population has a complete CoW [4]. Many natural variations of CoW exist, including those missing one or multiple arterial segments [5]. In addition, disease related changes within the complex network of ICA are also challenging for automated labeling. For example, stenosis may cause decreased cerebral flow, reflected as reduced blood signal in arterial images; collateral flow forms near the severe stenosis, leading to abnormal structures in the ICA. These situations make automated artery labeling challenging. A simplified graph illustration of ICA is shown in Fig. 1 (c).

(a) Time of flight (ToF) Magnetic Resonance Angiography (MRA) of cerebral arteries. (b) ICA labeled in different colors. (c) Illustration of CoW (yellow), left (blue) and right (red) anterior circulation, posterior circulation (red) and optional artery branches (black) with their anatomical names. When there are ICA variations, not only the optional artery branches, but also A1, M1, P1 segments may be missing. See supplementary material for abbreviations. (Colour figure online)

There have been continuous efforts in automating ICA labeling, using either private datasets with a limited number of scans or the publicly available UNC dataset with 50 cerebral Magnetic Resonance Angiography (MRA) images [6]. Takemura et al. [7] built a template of the CoW on five subjects, then arteries were labeled by template alignment and matching on fifteen scans. A more complete artery atlas was built from a population-based cohort of 167 subjects by Dunås et al. [8, 9] using a similar matching approach, and arteries were labeled in 10 clinical cases. Bilgel et al. [10] considered connection probability within the cerebral network using belief propagation for labeling 30 subjects but the method was limited to anterior circulations. Using the UNC dataset, in the serial work from Bogunović et al. [11,12,13], eight typical ICA graph templates were used to represent ICA with variations, and bifurcations of interest (BoI) were defined and classified so that vessels were labeled indirectly. However, more variations exist beyond the eight typical types. Using the same dataset, by combining artery segmentation along with the labeling, Robben et al. [14] simultaneously optimized the artery centerlines and their labels from an over complete graph. However, their computation involved thousands of variables and constraints, and takes as long as 510 s per case. In summary, while previous works have shown success in labeling relatively small datasets with limited variations in mostly healthy populations, prior knowledge about the global artery structures and relations has not been fully explored. Furthermore, labeling efficiency has not been considered for a large number of scans, which will be needed for clinical applications.

The Graph neural network (GNN) is an emerging network structure recently attracting significant interest [15, 16], including applications on vasculature [17, 18]. By passing information between nodes and edges within the graph, useful properties for the graph can be predicted. Considering the graph topology in anatomical structures of ICA, in this work, we propose a GNN model with hierarchical refinement (HR), aiming to overcome the challenges in arterial labeling by training with large and diversely labeled datasets (more than 500 scans in the training set from multiple sources) and applying refinements after network predictions to combine prior knowledge on ICA. In addition to its superior performance compared with methods described in the literature, our work shows robustness and generalizability on various challenging anatomical variations.

2 Methods

2.1 Intracranial Artery Labeling

The definition of arteries and their abbreviations follows [19] (also in supplementary material). All visible ICA in MRA are traced and labeled using a validated semi-automated artery analysis tool [19, 20] by experienced reviewers with the same labeling criteria, then examined by a peer-reviewer to control quality. Arteries not connected in the main artery tree are excluded from labeling, such as the arteries outside the skull.

2.2 Graph Neural Network (GNN) for Node and Edge Probabilities

The ICA network is represented as the centerlines of arteries, each with consecutively connected 3D points with radius. Centerlines in one MRA scan are constructed as an attributed relational graph \( G = \left( {V,E} \right) \). \( V = \left\{ {\varvec{v}_{i} } \right\} \) represents all unique points in the centerlines with node features of \( \varvec{v}_{i} \), and \( E = \left\{ {\varvec{e}_{k} ,r_{k} ,s_{k} } \right\} \) represents all point connections where edge \( k \) connects between the node index \( r_{k} ,s_{k} \) with edge features of \( \varvec{e}_{k} \). \( e\left( i \right)_{1, \ldots ,D\left( i \right)} \) are all the edges connected with node \( i \) (\( r_{k} = i \) or \( s_{k} = i \)). \( D\left( i \right) \) is the degree (number of neighbor nodes) of node \( i \).

Features for node \( \varvec{v}_{i} \) include \( \varvec{p}_{i} \) for \( x,y,z \) coordinates, \( r_{i} \) for radius and \( \varvec{b}_{i} \) for the directional embedding of the node. Due to the uncertain number of edges connected to the node, direction features cannot be directly used as an input in GNN. Here we use the multi-label binary encoding to represent direction features. First, 26 major directions in the 3D space are defined as \( n_{u} = \left( {x_{u} ,y_{u} ,z_{u} } \right)_{u = 1, \ldots ,26} \), with 45 degrees apart in each axis, excluding duplicates.

Then each edge direction \( \left( {x_{v} ,y_{v} ,z_{v} } \right) \) originating from the node is matched with the major directions with \( dir_{v} = argmax_{u} \left( {x_{u} x_{v} + y_{u} y_{v} + z_{u} z_{v} } \right) \). \( \varvec{b}_{i} \) is the 26-dimensional feature with encoded direction for all \( dir_{v} \). (an example in supplementary figure).

Features for edges \( \varvec{e}_{k} \) include edge direction \( \varvec{n}_{k} = \left( {\varvec{p}_{{s_{k} }} - \varvec{p}_{{r_{k} }} } \right) \), which is then normalized (and inverted) so that \( \left| {\left| {\varvec{n}_{k} } \right|} \right| = 1 \) and \( z_{k} > 0 \); distance between nodes at two ends \( d_{k} = \left| {\left| {\varvec{p}_{{s_{k} }} - \varvec{p}_{{r_{k} }} } \right|} \right| \); and mean radius at two nodes \( \overline{r}_{k} = \left( {r_{{s_{k} }} + r_{{r_{k} }} } \right)/2 \).

With similar purpose of BoI [11,12,13], we remove all nodes with a degree of 2 to reduce the graph size, as nodes requiring labeling are usually at bifurcations or ending points. If the remaining nodes are correctly predicted as one of the 21 possible bifurcation/ending types, then the ICA (edges) can be labeled based on their connections.

We implemented the GNN based on the message passing GNN framework proposed in [16, 21] to predict the types for each node and edge. The GNN takes a graph with node and edge features as input and returns a graph as output with additional features for node and edge types. The input features of edges and nodes in the graph are encoded to an embedding in the encoder layer. Then the core layer passes messages for 10 rounds by concatenating the encoder’s output with the previous output of the core layer. The embedding is restored to edge and node features in the decoder layer with additional label features. Computation in each graph block is shown in the Eq. 2. The edge attributes are updated through the per-edge “update” function \( \emptyset^{e} \), and features for edges connected to the same node are “aggregated” through function \( \rho^{e \to v} \) to update node features through the per-node “update” function \( \emptyset^{v} \). The network structure is shown in Fig. 2.

Probability \( P_{nt} \left( i \right) \) for node \( i \) being bifurcation/ending type \( nt \in \left\{ {0:Non\_Type,1:ICA\_Root\_L, \ldots ,20:ICA\_PComm} \right\} \) is calculated using a softmax function of GNN output \( O_{nt} \left( i \right) \). The predicted node type \( T_{n} \left( i \right) \) is then identified by selecting the node type with the maximum probability.

Similar for edges, \( et \in \left\{ {0:Non\_Type,1:ICA\_L, \ldots ,22:OA\_R} \right\} \), the edge probability and predicted edge type are

Ground truth types for nodes and edges are \( G\left( i \right), G\left( k \right) \).

The GNN was trained using combined weighted cross entropy losses in both nodes and edges, with weights inverse proportional to frequencies of the node and edge types. Batch size of 32 graphs was used in training the GNN. Adam optimizer [22] was used for controlling the learning rate. Positions of nodes from different datasets were normalized based on the imaging resolution, and a random translation of positions (within 10%) was used as the data augmentation method.

2.3 Hierarchical Refinement (HR)

Predictions from the GNN might not be perfect, as end-to-end training cannot easily learn global ICA structures and relations. Human reviewers are likely to subdivide ICA into three sub-trees (i.e., left/right anterior, posterior cerebral trees), find key nodes (such as the bifurcation for ICA/MCA/ACA) in sub-trees, then add additional branches which are less important and more prone to variations (such as PComm, AComm). Enlighted by the sequential behavior during manual labeling, a hierarchical refinement (HR) framework based on GNN outputs is proposed to further improve the labeling. Starting from the most confident nodes, the three-level refinement is shown in Fig. 3.

Workflow of HR framework. In the first level (blue box), confident nodes (circle and square dots) are identified from the GNN outputs. In the second level (orange boxes), confident nodes as well as their inter-connected edges in the left (blue lines)/right (red lines) anterior, posterior (green) sub-trees are identified. In the third level (grey boxes), optional nodes and edges (black lines) are added to each of the three sub-trees to form a complete artery tree. (Colour figure online)

Level One Labeling. We consider nodes as confident if the predicted node type fits the predicted edge types in edges they are connected with.

\( F \) is a lookup table for all valid pairs of edge types and node types. For example, \( F\left( {P1\_L,P1\_R,BA} \right) = PCA/BA \), \( F\left( {ICA\_L} \right) = ICA\_ROOT\_L \)

Level Two Labeling. From confident nodes, three sub-trees are built, and major-branch nodes are predicted in each sub-tree individually. Major node \( i \) is defined as ICA/MCA/ACA (for anterior trees) and PCA/BA (for posterior trees), and branch nodes \( \varvec{j} \) are defined as ICA_Root, M1/2, A1/2 (for anterior trees) and BA/VA, P1/2 (for posterior trees). If major nodes are not confident nodes in each sub-tree, they are predicted with type \( argmax_{i} (P_{{nt_{i} }} \left( i \right)|D\left( i \right) \ne 1) \) with additional constraints if branch nodes \( \varvec{j} \) are confident (\( i \notin \varvec{j} \) and \( i \) must be in the path between any pair of \( \varvec{j} \)). Then from the major node, all unconfident branch nodes are predicted using the target function of

On rare occasions, when the major nodes have a probability lower than a certain threshold \( Thres \) (when there are anatomical variations where major nodes do not exist), branch nodes are predicted without edge probability.

If certain distance between the optimal \( i \) and \( \varvec{j} \) is beyond the mean plus 1.5 standard deviation of \( G\left( k \right)|r_{k} = \varvec{j},s_{k} = i \) from the training set, labeling on \( \varvec{j} \) will be skipped and a node with a degree of 2 will be labeled so that its distance to node \( i \) is closest to the mean distance of \( G\left( k \right) \). This happens when there are missing Acomm or Pcomm.

Level Three Labeling. Optional branches are added to three sub-trees. M2+, A2+, and P2+ edges are assigned for all distal neighbors of M1/2, A1/2, P1/2 nodes. Based on node probabilities, OAs are identified on the path between ICA_Root and ICA/MCA/ACA nodes, Acomm is assigned if there is a connection between A1/2_L and A1/2_R, Pcomm is assigned if there is connection between P1/2 and ICA/MCA/ACA, VA_Root is predicted from neighbors of BA/VA.

2.4 Experiments

Datasets. Five datasets from our previous research [23] were used to train and evaluate our method, then the generalizability was assessed on the public UNC dataset with/without further training. Details for the datasets are in supplementary material.

Our five datasets were collected with different resolutions from different scanner manufacturers. Subjects enrolled in the datasets include both healthy (no recent or chronic vascular disease) and with various vascular related diseases, such as recent stroke events and hypertension. All the datasets were randomly divided into a training set (508 scans), a validation set (116 scans) and a testing set (105 scans). If the subject had multiple scans, these scans were divided into the same set. All scans from the UNC dataset (https://public.kitware.com/Wiki/TubeTK/Data, healthy volunteers) with publicly available artery traces (N = 41) were used. Generally, our dataset has more ICA variations and more challenging anatomies than the UNC dataset.

Evaluation Metrics. As our purpose is to label the ICA, the accuracy of predicted node labels is the primary metric for evaluation (Node_Acc). In addition, we also used number of wrongly predicted nodes per scan (Node_Wrong), edge accuracy (Edge_Acc) and the percent of scans with CoW nodes (ICA/MCA/ACA, PCA/BA, A1/2, P1/2/PComm, PComm/ICA), all nodes and all edges correctly predicted (CoW_Node_Solve, Node_Solve, Edge_Solve). For detailed analysis of detection performance on each bifurcation type, the detection accuracy, precision and recall for 7 major bifurcation types (ICA-OA, ICA-M1, ICA-PComm, ACA1-AComA, M1-M2, VBA-PCA1, PCA1-PComA) were calculated. The processing time was also recorded. Due to the lack of criteria for labeling nodes with degrees of 2, nodes such as A1/2 without AComm were excluded from the evaluation.

Comparison Methods. With the same artery traces of our dataset, three artery labeling methods [7, 9, 19] were used to compare the performance. Due to the unavailability of two methods using the UNC dataset, we only cite evaluation results from their publications. Direction features and HR were sequentially added to our baseline model to evaluate the contribution of different features and the effectiveness of the HR framework.

As the ablation study, GNN without HR predicts node and edge types directly from the GNN outputs \( T_{n} \left( i \right) \) and \( T_{e} \left( k \right) \). We further tested the removal of direction features.

3 Results

In the testing set of our dataset, 1035 confident nodes (9.86/scan) were identified, and 5 of them (0.5%, none are major or branch nodes) were predicted wrongly, showing labeling of confident nodes is reliable, so that labeling in the following up levels in HR was meaningful. \( Thres \) was chosen as 1e-10 from the validation set. Examples of correctly labeling challenging cases are shown in Fig. 4. Our method was robust, even with artificial noise branches added in the M1 branch shown in Fig. 4 (d).

Examples of challenging anatomical variations where our method predicted all arteries correctly. (a) A subject with Parkinson’s disease. Occlusions cause both right and left internal carotid arteries to be partially invisible. In addition, Pcomms are missing. (b) A hypertensive subject with rare A1_L artery missing, which is not among the 8 anatomical types and thus not solvable in [13]. (c) Some lenticulostriate arteries are visible in our dataset with higher resolution, an additional challenge for labeling, our method predicted it correctly as a non-type. (d) With more artificial lenticulostriate arteries added in the M1_L segment, our method is still robust to these additional noise branches.

The comparison with other artery labeling algorithms and the ablation study is shown in Table 1. Our method demonstrates a better node accuracy of 97.5% with 3.0 wrong nodes/scan. Our method is the only one with cases where all nodes and edges were predicted correctly with the minimum processing time (less than 0.1 s). With direction features and the HR added to the baseline model, the performance is further improved. ICA-OA is the most accurately detected bifurcation type with detection accuracy of 96.2% while the challenging M1/2 has an accuracy of 68.1%. Mean detection accuracy is 83.1%, precision is 91.3%, recall is 83.8%.

Our method showed good generalizability on the UNC dataset (Table 2). Even without additional training on the UNC dataset, the node accuracy was 99.03% (2.0 wrong nodes/scan) with 56% of cases solved. Mean detection accuracy for all node types was 92%. As a reference with methods using leave-one-out cross validation trained and evaluated using the same dataset, 58% of cases were solved [13]. The mean detection accuracies were 94% and 95% in [13, 14], respectively. If trained in combination with the UNC dataset using three fold cross validation, our method outperforms [13, 14].

4 Discussion and Conclusion

We have developed a GNN approach to label ICA with HR on our comprehensive ICA dataset (729 scans). Four contributions and novelties in our work are worth highlighting. 1) The dataset includes more diverse and challenging ICA variations compared with the existing UNC dataset, which is better suited to evaluate labeling performance. 2) The GNN and HR framework is an ideal method to learn from the graph representation of ICA and incorporate prior knowledge about ICA structure. 3) With accurate predictions of 20 node and 22 edge types covering all major artery branches visible in MRA, this method can automatically provide comprehensive features for detailed analysis of cerebral flow and structures in less than 0.1 s. 4) It should also be noted that our GNN and HR framework is not only applicable to ICA, but also to any graph structures where sequential labeling helps. For example, major lower extremity arteries and branches can be labeled ahead of labeling the collateral arteries.

Accurate ICA labeling using our method relies on reliable artery tracing, which is one of our limitations, although this is a lesser concern compared with non-graph based methods, where some artery tracing mistakes (such as centerlines off-center or zigzags in the path) can be avoided through a simplified representation of the ICA through graph constructions.

References

Kayembe, K.N., Sasahara, M., Hazama, F.: Cerebral aneurysms and variations in the circle of Willis. Stroke 15, 846–850 (1984)

Alpers, B.J., Berry, R.G., Paddison, R.M.: Anatomical studies of the circle of willis in normal brain. Arch. Neurol. Psychiatry. 81, 409–418 (1959)

Chen, L., et al.: Quantitative intracranial vasculature assessment to detect dementia using the intra-Cranial Artery Feature Extraction (iCafe) technique. In: Proc. Annu. Meet. Int. Soc. Magn. Reson. Med. Palais des congrès Montréal, Montréal, QC, Canada May, pp. 11–16 (2019)

Alpers, B.J., Berry, R.G.: Circle of willis in cerebral vascular disorders. Anat. Struct. Arch. Neurol. 8, 398–402 (1963)

Ustabaşıoğlu, F.E.: Magnetic resonance angiographic evaluation of anatomic variations of the circle of willis. Med. J. Haydarpaşa Numune Training Res. Hosp. 59, 291–295 (2018)

Bullitt, E., et al.: Vessel tortuosity and brain tumor malignancy: a blinded study. Acad. Radiol. 12, 1232–1240 (2005)

Takemura, A., Suzuki, M., Harauchi, H., Okumura, Y.: Automatic anatomical labeling method of cerebral arteries in MR-angiography data set. Japanese J. Med. Phys. 26, 187–198 (2006)

Dunås, T., Wåhlin, A., Ambarki, K., Zarrinkoob, L., Malm, J., Eklund, A.: A stereotactic probabilistic atlas for the major cerebral arteries. Neuroinformatics 15(1), 101–110 (2016). https://doi.org/10.1007/s12021-016-9320-y

Dunås, T., et al.: Automatic labeling of cerebral arteries in magnetic resonance angiography. Magn. Reson. Mater. Phys., Biol. Med. 29(1), 39–47 (2015). https://doi.org/10.1007/s10334-015-0512-5

Bilgel, M., Roy, S., Carass, A., Nyquist, P.A., Prince, J.L.: Automated anatomical labeling of the cerebral arteries using belief propagation. Med. Imaging 2013 Image Process. 8669, 866918 (2013)

Bogunović, H., Pozo, J.M., Cárdenes, R., Frangi, A.F.: Automatic identification of internal carotid artery from 3DRA images. In: 2010 Annual International Conference of the IEEE Engineering in Medicine and Biology, pp. 5343–5346. IEEE (2010)

Bogunović, H., Pozo, J.M., Cárdenes, R., Frangi, A.F.: Anatomical labeling of the anterior circulation of the circle of willis using maximum a posteriori classification. In: Fichtinger, G., Martel, A., Peters, T. (eds.) MICCAI 2011. LNCS, vol. 6893, pp. 330–337. Springer, Heidelberg (2011). https://doi.org/10.1007/978-3-642-23626-6_41

Bogunović, H., Pozo, J.M., Cardenes, R., Roman, L.S., Frangi, A.F.: Anatomical labeling of the circle of willis using maximum a posteriori probability estimation. IEEE Trans. Med. Imaging. 32, 1587–1599 (2013)

Robben, D., et al.: Simultaneous segmentation and anatomical labeling of the cerebral vasculature. Med. Image Anal. 32, 201–215 (2016)

Zhou, J., et al.: Graph neural networks: a review of methods and applications, pp. 1–22 (2018)

Battaglia, P.W., et al.: Relational inductive biases, deep learning, and graph networks. arXiv: 1806.01261, pp. 1–40 (2018)

Zhai, Z., et al.: Linking convolutional neural networks with graph convolutional networks: application in pulmonary artery-vein separation. In: Zhang, D., Zhou, L., Jie, B., Liu, M. (eds.) GLMI 2019. LNCS, vol. 11849, pp. 36–43. Springer, Cham (2019). https://doi.org/10.1007/978-3-030-35817-4_5

Wolterink, J.M., Leiner, T., Išgum, I.: Graph convolutional networks for coronary artery segmentation in cardiac CT angiography. In: Zhang, D., Zhou, L., Jie, B., Liu, M. (eds.) GLMI 2019. LNCS, vol. 11849, pp. 62–69. Springer, Cham (2019). https://doi.org/10.1007/978-3-030-35817-4_8

Chen, L., et al.: Development of a quantitative intracranial vascular features extraction tool on 3D MRA using semiautomated open-curve active contour vessel tracing. Magn. Reson. Med. 79, 3229–3238 (2018)

Chen, L., et al.: Quantification of morphometry and intensity features of intracranial arteries from 3D TOF MRA using the intracranial artery feature extraction (iCafe): a reproducibility study. Magn. Reson. Imaging 57, 293–302 (2018)

Gilmer, J., Schoenholz, S.S., Riley, P.F., Vinyals, O., Dahl, G.E.: Neural message passing for quantum chemistry. arXiv:1704.01212v2, (2017)

Pinto, A., Alves, V., Silva, C.A.: Brain tumor segmentation using convolutional neural networks in MRI images. IEEE Trans. Med. Imaging 35, 1240–1251 (2016)

Chen, L., et al.: Quantitative assessment of the intracranial vasculature in an older adult population using iCafe (intraCranial Artery Feature Extraction). Neurobiol. Aging 79, 59–65 (2019)

Acknowledgements

This work was supported by National Institute of Health under grant R01-NS092207. We are grateful for the collaborators who provided the datasets for this study, including the CROP and BRAVE investigators, and researchers from the University of Arizona, USA, Beijing Anzhen hospital, China, and Tsinghua University, China and the public data from The University of North Carolina at Chapel Hill (distributed by the MIDAS Data Server at Kitware Inc.). We acknowledge NIVIDIA for providing the GPU used for training the neural network model.

Our code and dataset are available at https://github.com/clatfd/GNN-ART-LABEL.

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

1 Electronic supplementary material

Below is the link to the electronic supplementary material.

Rights and permissions

Copyright information

© 2020 Springer Nature Switzerland AG

About this paper

Cite this paper

Chen, L., Hatsukami, T., Hwang, JN., Yuan, C. (2020). Automated Intracranial Artery Labeling Using a Graph Neural Network and Hierarchical Refinement. In: Martel, A.L., et al. Medical Image Computing and Computer Assisted Intervention – MICCAI 2020. MICCAI 2020. Lecture Notes in Computer Science(), vol 12266. Springer, Cham. https://doi.org/10.1007/978-3-030-59725-2_8

Download citation

DOI: https://doi.org/10.1007/978-3-030-59725-2_8

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-59724-5

Online ISBN: 978-3-030-59725-2

eBook Packages: Computer ScienceComputer Science (R0)